
[Machine Learning Notes] Multiple Linear Regression

Author: 知新_RL | 2024-07-17 21:28:03


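Introduction

Multiple linear regression fits a dependent variable y using several independent variables x, giving the model y = Xβ + μ, where β is the coefficient vector and μ is the error term. The fitted model can then be used to predict y for new values of the independent variables.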

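Assumptions

- The dependent variable y is linear in the parameters β;

- The samples are drawn at random;

- The independent variables x are not (perfectly) multicollinear;

- The error terms are independent and identically distributed, following a Gaussian distribution with mean 0 and constant variance;

- The error terms are independent of the independent variables;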
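The no-multicollinearity assumption can be checked numerically. One common diagnostic, not part of the original notes, is the variance inflation factor VIF_j = 1 / (1 - R_j²), where R_j² is the R² from regressing column j on all other columns; values far above roughly 10 are usually read as strong collinearity. A minimal NumPy sketch, where the helper name `vif` and the synthetic data are illustrative assumptions:

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """Variance inflation factor for each column of X (X has no intercept column)."""
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y_j = X[:, j]
        # Regress column j on an intercept plus all the other columns
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, y_j, rcond=None)
        resid = y_j - others @ coef
        r2 = 1.0 - resid @ resid / np.sum((y_j - y_j.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out


rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = rng.normal(size=200)
x3 = x1 + 0.05 * rng.normal(size=200)  # nearly a copy of x1 -> strong collinearity
X = np.column_stack([x1, x2, x3])
print(np.round(vif(X), 1))  # x1 and x3 get very large VIFs; x2 stays close to 1
```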

Parameter estimation

Loss function: the sum of squared errors (SSE), (Xβ - y)'(Xβ - y);

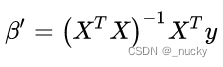
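Expanding the loss and differentiating with respect to β, using standard matrix-calculus identities, gives the normal equations:

```latex
\begin{aligned}
\mathrm{SSE}(\beta) &= (X\beta - y)^\top (X\beta - y)
  = \beta^\top X^\top X \beta - 2\, y^\top X \beta + y^\top y \\
\frac{\partial\, \mathrm{SSE}}{\partial \beta} &= 2 X^\top X \beta - 2 X^\top y = 0 \\
&\Longrightarrow\quad X^\top X \beta = X^\top y
\end{aligned}
```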

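When X'X is full rank, the least-squares solution follows from setting the partial derivative of the SSE with respect to β to zero, which gives β = (X'X)⁻¹X'y: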

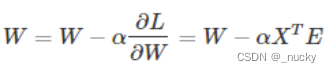
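A minimal NumPy sketch of the closed-form solution on synthetic data. The data, the true coefficients, and the leading column of ones (so that β[0] plays the role of the intercept) are illustrative assumptions:

```python
import numpy as np

rng = np.random.default_rng(42)
n, p = 100, 3
# Design matrix with a leading column of ones so beta[0] acts as the intercept
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
beta_true = np.array([1.0, 2.0, -3.0, 0.5])
y = X @ beta_true + rng.normal(scale=0.1, size=n)  # y = X beta + mu

# Normal equations X'X beta = X'y; solve() avoids forming the inverse explicitly
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

mu_hat = y - X @ beta_hat  # residuals
sse = mu_hat @ mu_hat      # (X beta - y)'(X beta - y)
print(np.round(beta_hat, 2))  # close to [1, 2, -3, 0.5]
```

Solving the linear system rather than computing (X'X)⁻¹ is the usual choice because it is cheaper and numerically more stable; `np.linalg.lstsq(X, y)` gives the same answer here.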

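When X'X is not full rank (for example, when the independent variables are perfectly collinear), the inverse does not exist. An approximate solution for β can then be found by gradient descent, or ridge regression can be used instead, whose estimate β = (X'X + λI)⁻¹X'y exists for any λ > 0.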
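Gradient descent on the squared-error loss still works when X'X is singular. A minimal sketch, assuming a fixed learning rate and iteration count (both illustrative, not tuned) and a design matrix with an exactly duplicated column; the helper name `ols_gradient_descent` is hypothetical:

```python
import numpy as np


def ols_gradient_descent(X, y, lr=0.1, n_iter=5000):
    """Minimize the mean squared error ||X beta - y||^2 / n by batch gradient descent."""
    n, p = X.shape
    beta = np.zeros(p)
    for _ in range(n_iter):
        grad = 2.0 / n * X.T @ (X @ beta - y)  # gradient of SSE / n
        beta -= lr * grad
    return beta


rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
# The last column is an exact copy of x1, so X'X is singular and (X'X)^-1 does not exist
X = np.column_stack([np.ones(n), x1, rng.normal(size=n), x1])
y = 1.0 + 2.0 * X[:, 1] - 1.0 * X[:, 2] + rng.normal(scale=0.1, size=n)

beta_gd = ols_gradient_descent(X, y)
print(np.round(beta_gd, 3))  # beta[1] and beta[3] share the effect of x1 (about 1.0 each)
```

Because the iteration starts at β = 0 and every gradient lies in the row space of X, the iterates never pick up a component in the null space, so the run converges to the minimum-norm least-squares solution, which splits the effect of x1 evenly between the duplicated columns.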

The residual then follows as μ = y - Xβ.
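Ridge regression makes the system solvable by adding λI to X'X. A minimal NumPy sketch of the closed form (X'X + λI)⁻¹X'y; to keep it short it assumes X and y are centered and fits no intercept, and the helper name `ridge_closed_form` and the data are illustrative:

```python
import numpy as np


def ridge_closed_form(X, y, lam):
    """Ridge estimate (X'X + lam * I)^-1 X'y; X and y are assumed centered (no intercept)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


rng = np.random.default_rng(1)
n = 100
x1 = rng.normal(size=n)
X = np.column_stack([x1, rng.normal(size=n), x1])  # columns 0 and 2 identical -> X'X singular
y = 2.0 * x1 - 1.0 * X[:, 1] + rng.normal(scale=0.1, size=n)
X = X - X.mean(axis=0)
y = y - y.mean()

for lam in (0.1, 10.0, 1000.0):
    beta = ridge_closed_form(X, y, lam)
    print(lam, np.round(beta, 3), round(float(np.linalg.norm(beta)), 3))
# Larger lam shrinks the coefficient vector toward zero
```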

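Extensions

- Ridge regression: adds a squared L2-norm penalty on the coefficients β to the loss function (the parameters can still be solved in closed form, as with least squares);

- Lasso: adds an L1-norm penalty on β to the loss function (the parameters are solved by coordinate descent);

- Pros and cons: the penalty term reduces overfitting and avoids the model distortion caused by multicollinearity among the independent variables; however, it can cause underfitting, and the coefficient estimates are biased, which reduces interpretability;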
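A minimal NumPy sketch of coordinate descent for the Lasso. It uses the objective (1/(2n))·||y - Xβ||² + α·||β||₁, the scaling documented for scikit-learn's `Lasso`; each coordinate update is a soft-thresholding step, which is what produces exact zeros. Centering X and y instead of fitting an intercept, the fixed number of sweeps, the data, and the helper names are simplifying assumptions:

```python
import numpy as np


def soft_threshold(z, t):
    """S(z, t) = sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * max(abs(z) - t, 0.0)


def lasso_coordinate_descent(X, y, alpha, n_sweeps=200):
    """Minimize (1/(2n)) * ||y - X beta||^2 + alpha * ||beta||_1 (X, y centered, no intercept)."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n  # x_j'x_j / n
    r = y - X @ beta                   # current residual (a new array, y is untouched)
    for _ in range(n_sweeps):
        for j in range(p):
            r += X[:, j] * beta[j]     # residual with feature j removed
            rho = X[:, j] @ r / n
            beta[j] = soft_threshold(rho, alpha) / col_sq[j]
            r -= X[:, j] * beta[j]     # add the updated feature j back
    return beta


rng = np.random.default_rng(0)
n, p = 200, 6
X = rng.normal(size=(n, p))
beta_true = np.array([3.0, -2.0, 0.0, 0.0, 1.5, 0.0])
y = X @ beta_true + rng.normal(scale=0.5, size=n)
X = X - X.mean(axis=0)
y = y - y.mean()

beta_lasso = lasso_coordinate_descent(X, y, alpha=0.3)
print(np.round(beta_lasso, 2))  # features with true coefficient 0 are driven to exactly 0
```

Unlike the ridge penalty, which only shrinks coefficients, the L1 penalty sets coefficients whose correlation with the residual stays below α to exactly zero, so the Lasso also performs feature selection.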

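sklearn in practice

Key parameters

```python
from sklearn.linear_model import Lasso, Ridge

# Constructor parameters of Ridge and Lasso. Older releases also accepted
# normalize=False; it was deprecated in scikit-learn 1.0 and removed in 1.2,
# so it is omitted here (scale features with StandardScaler instead).
ridge = Ridge(
    alpha=1.0,           # coefficient of the penalty term in the loss (default 1.0); larger values penalize the coefficients more
    fit_intercept=True,  # whether to fit an intercept for the model (default True)
    copy_X=True,
    max_iter=None,
    tol=0.001,
    solver="auto",
    random_state=None,
)

lasso = Lasso(
    alpha=1.0,           # same meaning as in Ridge
    fit_intercept=True,  # same meaning as in Ridge
    precompute=False,
    copy_X=True,
    max_iter=1000,
    tol=1e-4,
    warm_start=False,
    positive=False,
    random_state=None,
    selection="cyclic",
)
```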
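To see what `alpha` does in practice, the following sketch fits both models over a range of penalty strengths on synthetic data from `make_regression` (the data and the alpha grid are illustrative assumptions). Larger `alpha` shrinks the Ridge coefficient vector and drives more Lasso coefficients to exactly zero:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# Synthetic data: 10 features, only 3 of which actually influence y
X, y = make_regression(n_samples=200, n_features=10, n_informative=3,
                       noise=5.0, random_state=0)

alphas = [0.1, 1.0, 10.0, 100.0]
ridge_norms, lasso_nonzero = [], []
for alpha in alphas:
    ridge_norms.append(np.linalg.norm(Ridge(alpha=alpha).fit(X, y).coef_))
    lasso_nonzero.append(np.count_nonzero(Lasso(alpha=alpha).fit(X, y).coef_))
    print(f"alpha={alpha:>6}: ||ridge coef||={ridge_norms[-1]:8.2f}  "
          f"lasso nonzero={lasso_nonzero[-1]}")
```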


- 26

Regression task

```python
from sklearn.datasets import fetch_california_housing
from sklearn.linear_model import LassoCV, LinearRegression, RidgeCV
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# House-price data. load_boston was removed in scikit-learn 1.2, so the
# California housing dataset is used instead (downloaded on first call).
data = fetch_california_housing()
X_train, X_test, y_train, y_test = train_test_split(
    data["data"], data["target"], test_size=0.3, random_state=42
)

# normalize=True was also removed in 1.2; standardize the features in a pipeline
# instead (unit variance, which is not identical to the old normalize behaviour).
models = {
    "Linear": make_pipeline(StandardScaler(), LinearRegression()),
    "Ridge": make_pipeline(StandardScaler(), RidgeCV()),  # alpha chosen by cross-validation
    "Lasso": make_pipeline(StandardScaler(), LassoCV()),  # alpha chosen by cross-validation
}

for name, model in models.items():
    model.fit(X_train, y_train)
    # score() returns the coefficient of determination R^2, not a loss
    print(f"{name} R^2 train: {model.score(X_train, y_train):.4f}")
    print(f"{name} R^2 test:  {model.score(X_test, y_test):.4f}")
```
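After fitting, `RidgeCV` and `LassoCV` expose the penalty selected by cross-validation as the `alpha_` attribute, and the Lasso's sparsity can be read from `coef_`. A sketch on synthetic data from `make_regression` (an assumption, chosen so the example needs no download); the alpha grid passed to `RidgeCV` is illustrative:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LassoCV, RidgeCV
from sklearn.model_selection import train_test_split

# 20 features, only 5 informative
X, y = make_regression(n_samples=300, n_features=20, n_informative=5,
                       noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

ridge = RidgeCV(alphas=np.logspace(-3, 3, 13)).fit(X_train, y_train)
lasso = LassoCV(cv=5, random_state=0).fit(X_train, y_train)

print("Ridge chosen alpha:", ridge.alpha_)
print("Lasso chosen alpha:", lasso.alpha_)
print("Lasso nonzero coefficients:", np.count_nonzero(lasso.coef_), "of", X.shape[1])
print("Ridge R^2 test:", round(ridge.score(X_test, y_test), 4))
print("Lasso R^2 test:", round(lasso.score(X_test, y_test), 4))
```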


