KubeEdge Installation and Configuration

kubeedge从入门到入坑

- 一、docker安装

- 二、kubenetes安装

- 10、问题解决们

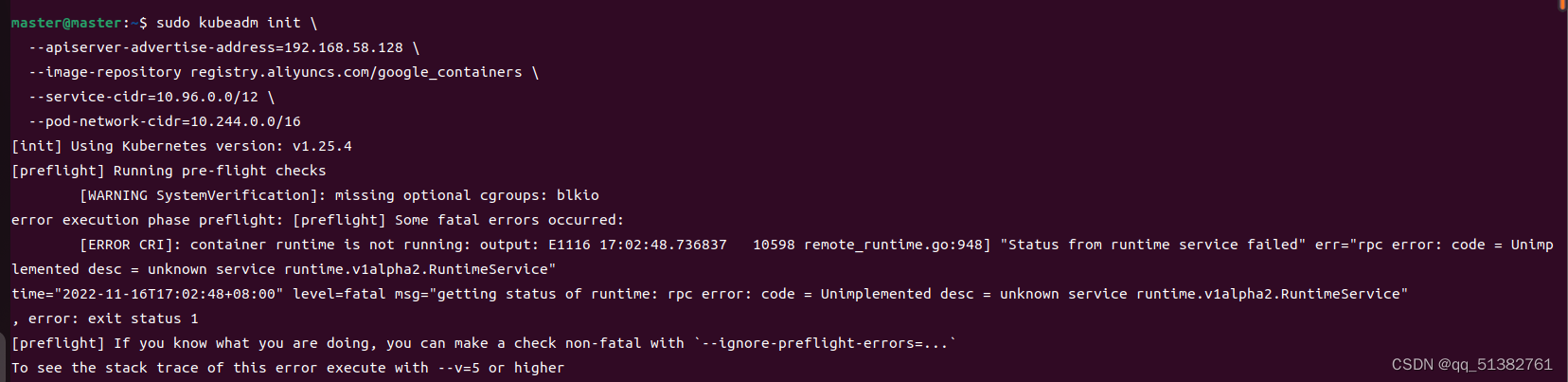

- 主节点初始化不过

- 端口占用

- 卸载 kubenet

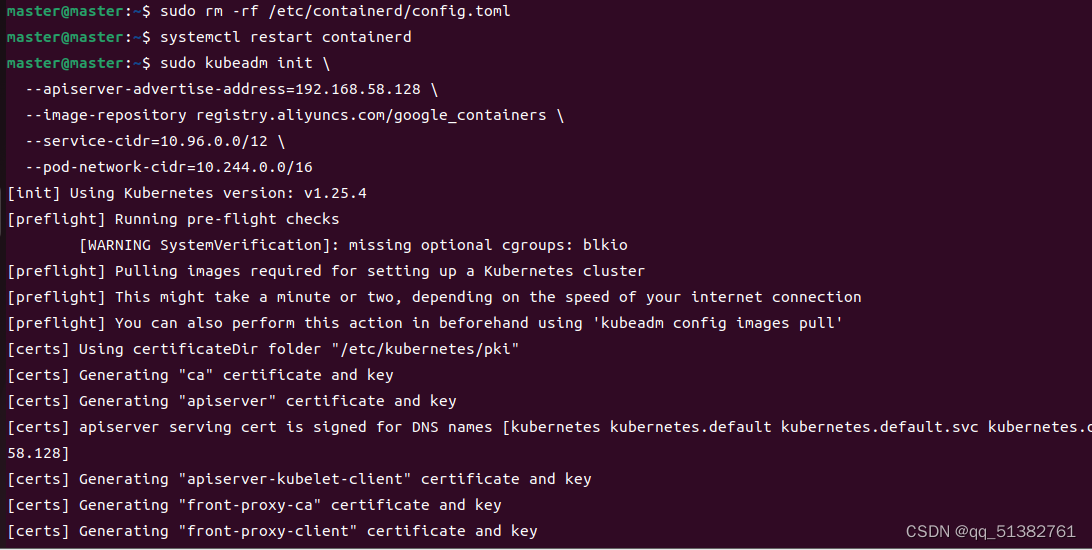

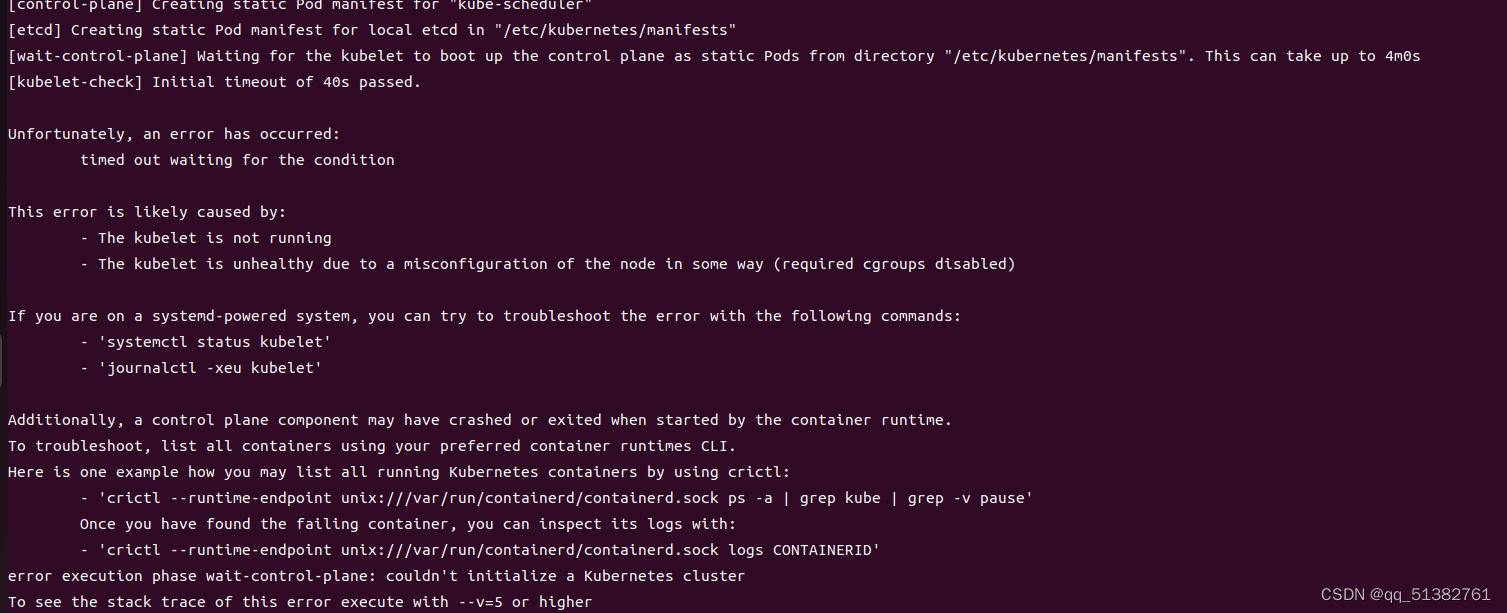

- 初始化不过

- node节点加入集群一直卡

- node节点kubelet不启动

- sudo apt-get upgrade显示E: 无法获得锁 /var/lib/dpkg/lock-frontend。锁正由进程 3963(unattended-upgr)持有

- node节点加入集群,安装 kubeadm, kubelet 和 kubectl

- 安装网络组件

- 网络组件有两个显示pendng、Init:0/4

- keadm init有另一个程序正在运行another operation (install/upgrade/rollback) is in progress

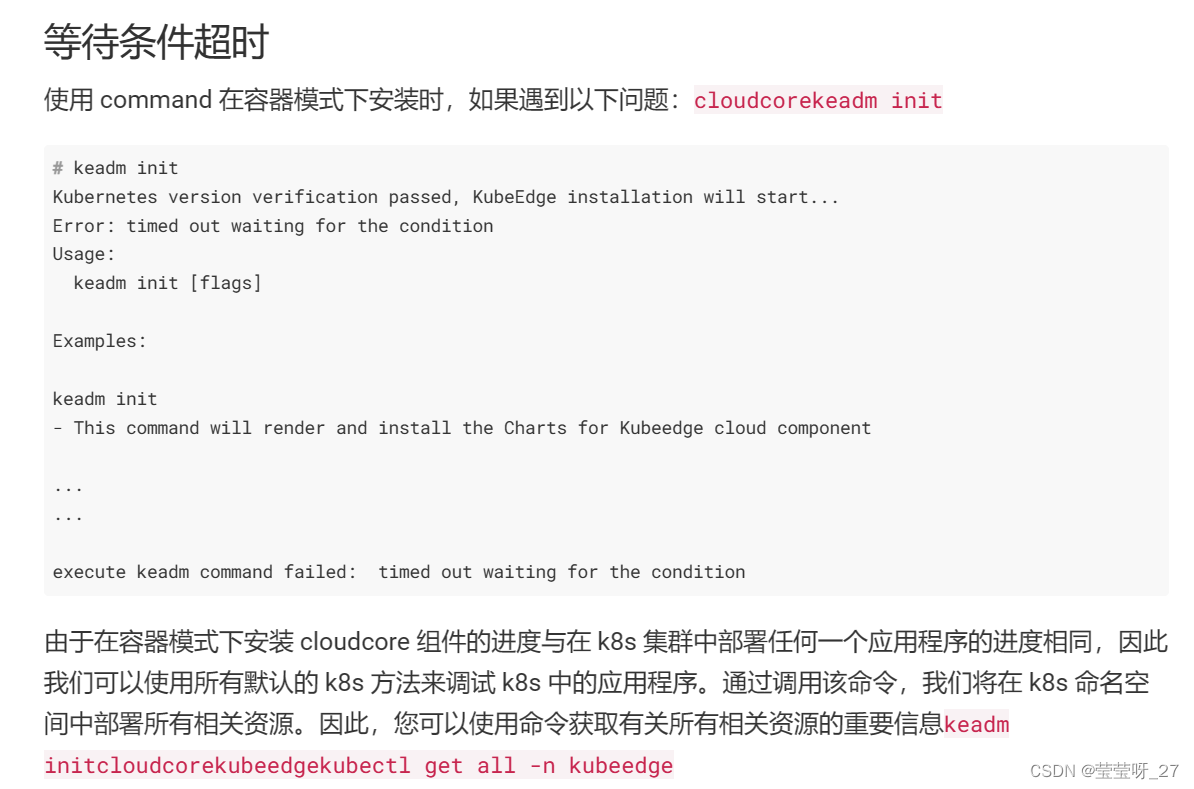

- keadm init Error: timed out waiting for the condition

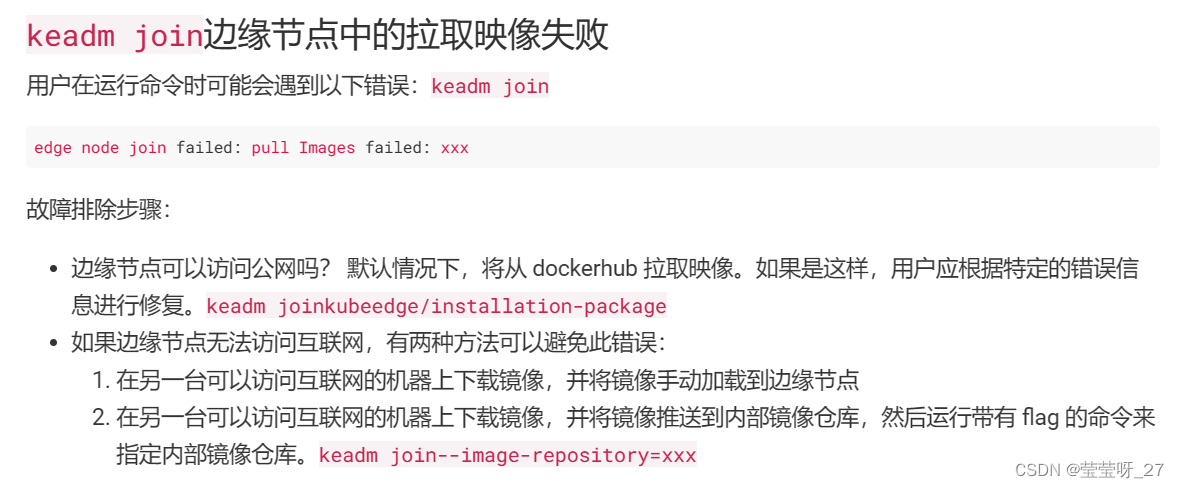

- keadm join边缘节点加入集群:镜像错误

- keadm join边缘节点加入集群:文件复制错误

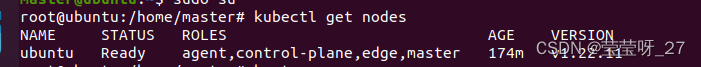

- keadm join边缘节点加入集群:主节点获取不到edge

- node节点加入集群错误1

- node节点加入集群错误2

- master和node名字一样

- 其他操作

(全是踩过的坑,能踩的差不多踩了个遍,呜呜呜┭┮﹏┭┮)

1. Docker installation

Control-plane node (master)

Switch to a mirror in China

cd /etc/apt/

sudo cp sources.list sources.list.bak

sudo vi sources.list # this article uses the USTC mirror

sudo apt-get update # refresh the package lists after changing the sources

sudo apt-get upgrade

This step uses the vim editor; the essentials are:

i --> enter insert mode

esc --> leave insert mode

:q! --> quit without saving

:wq --> save and quit

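The USTC mirror entries are listed below (source packages are not needed for now, so the deb-src lines are commented out). Depending on your network, the Aliyun or Tsinghua mirrors can be used instead.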

deb https://mirrors.ustc.edu.cn/ubuntu/ focal main restricted universe multiverse

#deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

#deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

#deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-security main restricted universe multiverse

#deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-security main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse

#deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
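The list above follows a regular pattern, so it can be generated instead of typed into vi. The following is only a sketch: the function name gen_ustc_sources is made up for illustration, and it assumes the USTC mirror layout shown above.

```shell
#!/bin/sh
# Hypothetical helper: print USTC mirror entries for one Ubuntu codename.
# It mirrors the list above: binary entries active, deb-src entries commented out.
gen_ustc_sources() {
  codename="$1"
  for suite in "" -updates -backports -security -proposed; do
    echo "deb https://mirrors.ustc.edu.cn/ubuntu/ ${codename}${suite} main restricted universe multiverse"
    echo "#deb-src https://mirrors.ustc.edu.cn/ubuntu/ ${codename}${suite} main restricted universe multiverse"
  done
}

# Example: preview the entries for the running release before writing them anywhere.
# gen_ustc_sources "$(lsb_release -cs)"
```

Printing to stdout first makes it easy to review the result before redirecting it into sources.list with root privileges.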

Install the basic tools needed later

sudo apt-get install -y \

net-tools \

vim \

ca-certificates \

curl \

gnupg \

lsb-release \

apt-transport-https

Install Docker

Add Docker's official GPG key:

sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

Set up the repository:

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

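sudo apt-get update # do not forget to refresh the package lists after adding the repository

sudo apt-cache madison docker-ce # optional: list the versions available for installation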

Install Docker:

sudo apt-get install docker-ce=20.10.21 docker-ce-cli=20.10.21 containerd.io docker-compose-plugin

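(If you are not pinning a version, drop the version numbers. When pinning, apt expects the full version string exactly as printed by apt-cache madison.)

Configure a registry mirror:

Access to servers outside China is slow, so the following configuration can help: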

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

(Note: on the node, the cgroup driver is cgroupfs rather than systemd. Keep remarks like this out of daemon.json itself: JSON has no comment syntax, and Docker reports an error if the file contains one.)

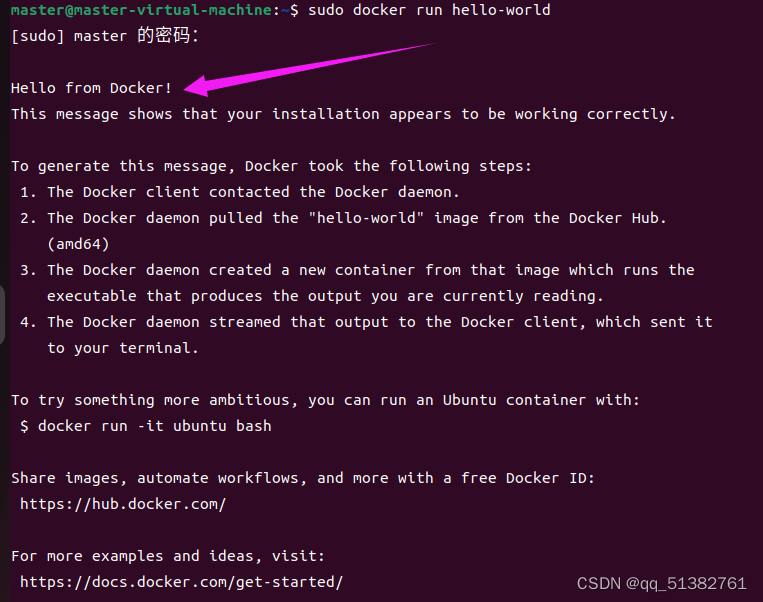
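A stray annotation in daemon.json is easy to miss before restarting Docker. The sketch below flags any line that still contains a # outside a quoted string; find_hash_comments is a hypothetical name, and the check is a rough heuristic rather than a full JSON validator.

```shell
#!/bin/sh
# Hypothetical helper: print (with line numbers) the lines of a JSON file that
# contain '#' outside double-quoted strings. Exit status is 0 when any are found.
find_hash_comments() {
  # First drop every "..." string (allowing escaped characters), then look for '#'.
  sed -E 's/"([^"\\]|\\.)*"//g' "$1" | grep -n '#'
}

# Example:
# find_hash_comments /etc/docker/daemon.json && echo "remove the comments above first"
```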

Apply the configuration and restart Docker:

sudo systemctl daemon-reload

sudo systemctl restart docker

sudo docker run hello-world # verify the installation (it should print "Hello from Docker!...")

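2. Kubernetes installation

Preparation

Disable the firewall and swap:

(A freshly installed system has no firewall enabled (the reference documentation explains how to enable one); this article only covers turning the firewall off.)

(I am not sure what this note is supposed to mean...)

sudo swapoff -a # turn swap off for the current boot

sudo vim /etc/fstab # comment out the swap line to disable swap permanently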
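Editing /etc/fstab by hand works, but the same change can be scripted. This is a minimal sketch under the assumption that swap entries are ordinary fstab lines whose third field is swap; comment_out_swap is a made-up name, and the path is a parameter so the function can be tried on a copy first.

```shell
#!/bin/sh
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  fstab="$1"
  tmp="$(mktemp)" || return 1
  # Field 3 of an fstab line is the filesystem type; lines that are already comments are kept as-is.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Example (on a copy first):
# cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test && cat /tmp/fstab.test
```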

Network settings:

Create two k8s.conf files so that iptables can see bridged traffic:

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system # apply the settings

Download the public signing key and add the Aliyun mirror:

sudo curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

sudo apt-get update

Install Kubernetes:

A version below 1.23 is recommended here; otherwise the initialization later on is hard to get through.

sudo apt-get install -y kubelet=1.20.2-00 kubeadm=1.20.2-00 kubectl=1.20.2-00

sudo systemctl enable --now kubelet # enable the service and start it now

Initialize the control-plane node (master):

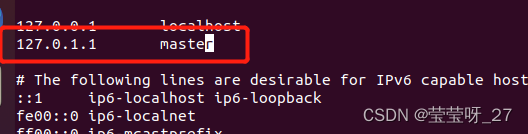

Add the address mappings to /etc/hosts:

sudo vim /etc/hosts

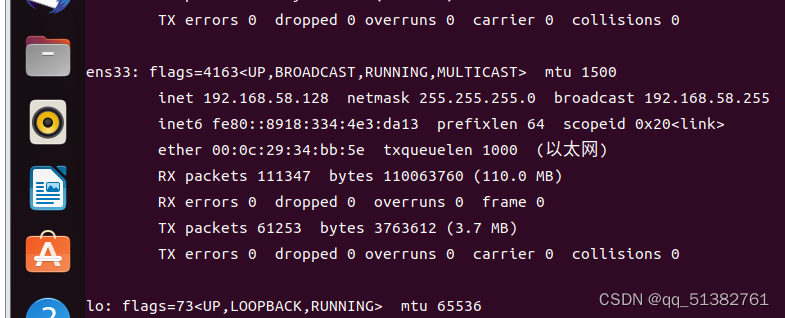

The addresses of the master and the node are as follows (look them up with ifconfig):

192.168.58.... master # the address listed under ens33 in the ifconfig output

192.168.58.... node
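Adding these mappings can also be scripted so that repeated runs do not create duplicate entries. A minimal sketch follows; add_host_entry is a hypothetical helper, and the file path is a parameter so it can be tested on a scratch file.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts-style file unless NAME is already mapped.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # Look for NAME as a whole field (after the address column) on any non-comment line.
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    echo "$name already present in $hosts_file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Example (as root, with the master address used later in this article):
# add_host_entry /etc/hosts 192.168.58.128 master
```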

Initialize:

sudo kubeadm init \

--apiserver-advertise-address=192.168.58.128 \

--control-plane-endpoint=master \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.20.2 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

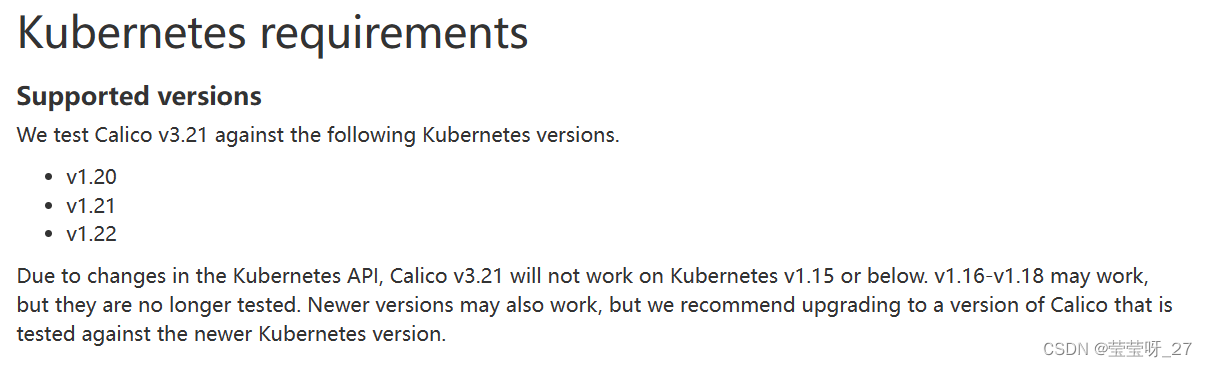

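Install the network plugin

Calico is the network plugin used for this k8s cluster. Compared with Flannel, Calico adds access control (network policy) and is somewhat more complex.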

curl https://docs.projectcalico.org/manifests/calico.yaml -O

To find the Calico version your cluster needs, check https://docs.tigera.io/archive/v3.22/getting-started/kubernetes/requirements

Then download the manifest for that version instead, for example https://docs.tigera.io/archive/v3.22/manifests/calico.yaml

sudo kubectl apply -f calico.yaml

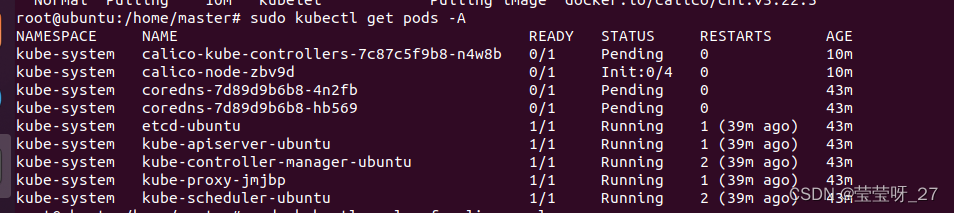

sudo kubectl get pods -A # check that the master has finished initializing and is ready

Check the state of the master node:

root@ubuntu:/home/master# sudo kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-7c87c5f9b8-92df9 1/1 Running 0 3m

kube-system calico-node-bbwbq 1/1 Running 0 3m

kube-system coredns-7f6cbbb7b8-8sb2l 1/1 Running 0 12h

kube-system coredns-7f6cbbb7b8-gxtll 1/1 Running 0 12h

kube-system etcd-ubuntu 1/1 Running 1 (7m5s ago) 12h

kube-system kube-apiserver-ubuntu 1/1 Running 2 (6m56s ago) 12h

kube-system kube-controller-manager-ubuntu 1/1 Running 2 (7m6s ago) 12h

kube-system kube-proxy-4m8gc 1/1 Running 1 (7m7s ago) 12h

kube-system kube-scheduler-ubuntu 1/1 Running 2 (7m6s ago) 12h

The calico-... pods now appear in the list.
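With many pods, scanning the STATUS column by eye gets tedious. The following sketch filters the listing down to pods that are not yet Running; not_running is a made-up name, and it assumes the default column layout of kubectl get pods -A shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# "namespace/name status" for every pod whose STATUS is neither Running nor Completed.
not_running() {
  # Column 4 is STATUS in the default -A layout; NR > 1 skips the header row.
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Example:
# kubectl get pods -A | not_running
```

An empty result means every pod in the listing has reached Running or Completed.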

Uninstall the network plugin

kubectl delete -f calico.yaml

Create a pod in the Kubernetes cluster

root@master-virtual-machine:/home/master# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

root@master-virtual-machine:/home/master# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

root@master-virtual-machine:/home/master# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-wts25 0/1 Pending 0 36s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d1h

service/nginx NodePort 10.111.58.187 <none> 80:31019/TCP 17s

KubeEdge installation

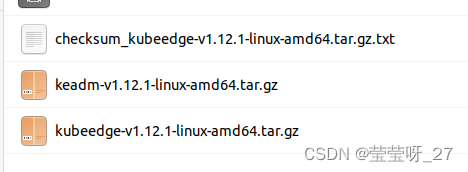

Download a KubeEdge release from the page below; for the version you pick, download these three files:

https://github.com/kubeedge/kubeedge/releases/

Run the following commands on both the cloud side and the edge side; keadm has to be installed on each:

tar -xvf keadm-v1.12.1-linux-amd64.tar.gz

cp keadm-v1.12.1-linux-amd64/keadm/keadm /usr/bin/

sudo mkdir /etc/kubeedge/

# The edge side may not need the next two steps (unconfirmed)

sudo cp kubeedge-v1.12.1-linux-amd64.tar.gz /etc/kubeedge/

sudo cp checksum_kubeedge-v1.12.1-linux-amd64.tar.gz.txt /etc/kubeedge/
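Since a checksum file is downloaded alongside the tarball, it is worth comparing the two before going further. The sketch below makes one loud assumption that is not confirmed by this article: that the checksum file holds a hex SHA-512 digest as its first field. Check that against your release. verify_sha512 is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: compare a file's SHA-512 digest with the first field of a checksum file.
# Assumption: the checksum file stores a hex SHA-512 digest; verify this for your release.
verify_sha512() {
  file="$1"; sumfile="$2"
  expected="$(awk 'NR == 1 { print $1 }' "$sumfile")"
  actual="$(sha512sum "$file" | awk '{ print $1 }')"
  if [ -n "$expected" ] && [ "$expected" = "$actual" ]; then
    echo "checksum OK"
  else
    echo "checksum MISMATCH" >&2
    return 1
  fi
}

# Example:
# verify_sha512 kubeedge-v1.12.1-linux-amd64.tar.gz checksum_kubeedge-v1.12.1-linux-amd64.tar.gz.txt
```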

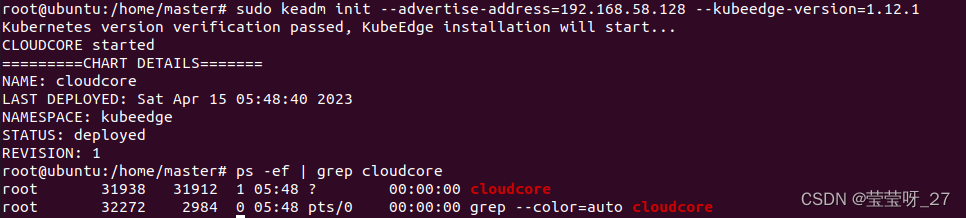

Run on the cloud side:

sudo keadm init --advertise-address=192.168.58.128 --kubeedge-version=1.12.1

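To check whether keadm init has completed, run the following; two lines should appear (the cloudcore process and the grep command itself):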

root@ubuntu:/# ps -ef | grep cloudcore

root 190216 190196 0 07:11 ? 00:00:01 cloudcore

root 198535 3782 0 07:20 pts/0 00:00:00 grep --color=auto cloudcore
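Because grep always matches its own command line, the second line in that output says nothing about cloudcore. A small sketch that counts only real cloudcore processes follows; count_procs is a made-up helper, and it assumes the standard ps -ef layout where the command is the 8th column.

```shell
#!/bin/sh
# Hypothetical helper: read `ps -ef` output on stdin and count processes whose
# command (8th column) has the given base name. The grep process is not counted.
count_procs() {
  awk -v name="$1" '{ n = split($8, parts, "/"); if (parts[n] == name) c++ } END { print c + 0 }'
}

# Example:
# ps -ef | count_procs cloudcore
```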

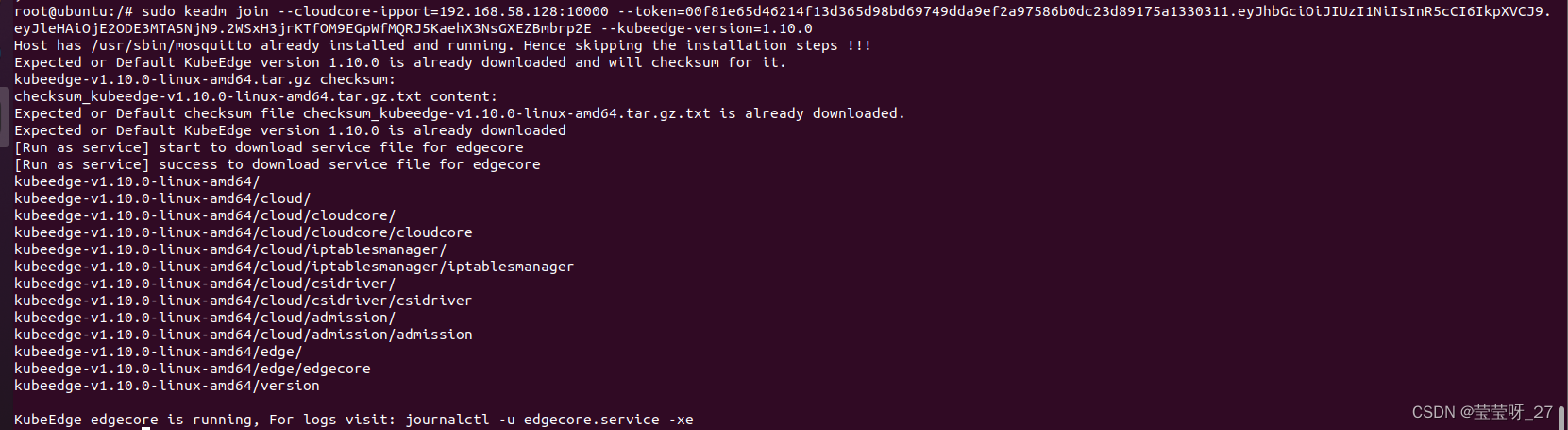

Run on the edge side:

sudo keadm join --cloudcore-ipport=192.168.58.128:10000 --token=f8548c503b29218a9f72c935c0643ec0ce8d066e349b71c7270248ccf549ec16.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2ODEzMDg3MjB9.wO19krdazmd0EOkEm2s1V7LkAQqFQokykHonOXe0djE --kubeedge-version=1.12.1 --edgenode-name=node

Get the token on the cloud side:

keadm gettoken
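Judging from the token used in this article, the part after the first dot has the three dot-separated segments of a JWT, and its payload carries an exp (expiry) claim. That structure is an inference from the sample token, not something stated here, so treat the sketch below as an assumption-laden check; token_expiry is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the "exp" claim (Unix time) found in a keadm token.
# Assumption: the token looks like <hash>.<jwt-header>.<jwt-payload>.<jwt-signature>.
token_expiry() {
  # The JWT payload is the third dot-separated field; convert base64url to plain base64.
  payload="$(printf '%s' "$1" | cut -d. -f3 | tr '_-' '/+')"
  # base64 needs the length padded to a multiple of 4.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do payload="${payload}="; done
  printf '%s' "$payload" | base64 -d | sed -E 's/.*"exp":([0-9]+).*/\1/'
}

# Example (GNU date turns the value into a readable timestamp):
# date -d "@$(token_expiry "$(keadm gettoken)")"
```

If the printed time is already in the past, fetching a fresh token before running keadm join is a reasonable first step.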

Output (a better network connection, plus a proxy, is advisable):

root@ubuntu:/# sudo keadm join --cloudcore-ipport=192.168.58.128:10000 --token=f8548c503b29218a9f72c935c0643ec0ce8d066e349b71c7270248ccf549ec16.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2ODEzMDg3MjB9.wO19krdazmd0EOkEm2s1V7LkAQqFQokykHonOXe0djE

I0413 07:07:13.705589 5717 command.go:845] 1. Check KubeEdge edgecore process status

I0413 07:07:13.720827 5717 command.go:845] 2. Check if the management directory is clean

I0413 07:07:15.582436 5717 join.go:100] 3. Create the necessary directories

I0413 07:07:15.589387 5717 join.go:176] 4. Pull Images

Pulling kubeedge/installation-package:v1.12.1 ...

Successfully pulled kubeedge/installation-package:v1.12.1

Pulling eclipse-mosquitto:1.6.15 ...

Pulling kubeedge/pause:3.1 ...

I0413 07:09:20.248314 5717 join.go:176] 5. Copy resources from the image to the management directory

I0413 07:09:22.285317 5717 join.go:176] 6. Start the default mqtt service

I0413 07:09:23.671202 5717 join.go:100] 7. Generate systemd service file

I0413 07:09:23.671439 5717 join.go:100] 8. Generate EdgeCore default configuration

I0413 07:09:23.671866 5717 join.go:230] The configuration does not exist or the parsing fails, and the default configuration is generated

W0413 07:09:23.672736 5717 validation.go:71] NodeIP is empty , use default ip which can connect to cloud.

I0413 07:09:23.676088 5717 join.go:100] 9. Run EdgeCore daemon

I0413 07:09:24.359615 5717 join.go:317]

I0413 07:09:24.359634 5717 join.go:318] KubeEdge edgecore is running, For logs visit: journalctl -u edgecore.service -xe

When the join finishes, check the edgecore status on the edge side:

root@ubuntu:/# sudo systemctl status edgecore

● edgecore.service

Loaded: loaded (/etc/systemd/system/edgecore.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2023-04-13 07:11:11 PDT; 1s ago

Main PID: 7435 (edgecore)

Tasks: 12 (limit: 4572)

Memory: 25.3M

CGroup: /system.slice/edgecore.service

└─7435 /usr/local/bin/edgecore

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.909902 7435 cpu_manager.go:210] "Reconciling" reconcilePeriod="10s"

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.909931 7435 state_mem.go:36] "Initialized new in-memory state store"

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.910444 7435 state_mem.go:88] "Updated default CPUSet" cpuSet=""

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.910499 7435 state_mem.go:96] "Updated CPUSet assignments" assignments=map[]

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.910515 7435 policy_none.go:49] "None policy: Start"

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.912246 7435 memory_manager.go:168] "Starting memorymanager" policy="None"

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.912428 7435 state_mem.go:35] "Initializing new in-memory state store"

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.912752 7435 state_mem.go:75] "Updated machine memory state"

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.913984 7435 manager.go:609] "Failed to read data from checkpoint" checkpoint="kubelet_internal_checkpoint" err="checkpoint >

Apr 13 07:11:11 ubuntu edgecore[7435]: I0413 07:11:11.914368 7435 plugin_manager.go:114] "Starting Kubelet Plugin Manager"

lines 1-19/19 (END)

10. Troubleshooting

Control-plane (master) initialization fails

I was stuck here for a whole week (sob).

master@master:~$ sudo kubeadm init \

--apiserver-advertise-address=192.168.58.128 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.25.4

[preflight] Running pre-flight checks

[WARNING SystemVerification]: missing optional cgroups: blkio

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: E1116 17:02:48.736837 10598 remote_runtime.go:948] "Status from runtime service failed" err="rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService"

time="2022-11-16T17:02:48+08:00" level=fatal msg="getting status of runtime: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService"

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

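Run the following commands to fix it (a version issue: the config.toml installed with the containerd.io package disables the CRI plugin that kubeadm needs):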

rm -rf /etc/containerd/config.toml

systemctl restart containerd

After that, run kubeadm init again; it now proceeds normally.

Port already in use

[init] Using Kubernetes version: v1.25.4

[preflight] Running pre-flight checks

[WARNING SystemVerification]: missing optional cgroups: blkio

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-10250]: Port 10250 is in use

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher
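Port 10250 is the kubelet's default port, so the usual holder is a kubelet left over from an earlier attempt. Before resetting, it can help to confirm what is listening. The sketch below filters ss output; listeners_on is a made-up name, and it assumes the ss -ltnp column layout from iproute2.

```shell
#!/bin/sh
# Hypothetical helper: read `ss -ltnp` output on stdin and print the sockets
# that are listening on the given port.
listeners_on() {
  # Column 4 is "Local Address:Port"; the port is whatever follows the last colon.
  awk -v port="$1" '$1 == "LISTEN" { n = split($4, a, ":"); if (a[n] == port) print }'
}

# Example:
# sudo ss -ltnp | listeners_on 10250
```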

Just run a reset (add sudo if it does not work).

Once the reset has finished, run kubeadm init again.

kubeadm reset

W1116 17:26:48.455084 11929 preflight.go:55] [reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: t

error execution phase preflight: won't proceed; the user didn't answer (Y|y) in order to continue

To see the stack trace of this error execute with --v=5 or higher

Uninstalling Kubernetes

sudo apt-get remove -y kubelet kubeadm kubectl

Initialization fails

/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

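I had no idea how to fix this. Ridiculous.

Maybe it has to be run as the root user via sudo?

2023-04-04: it turned out to be a version issue, which is exactly why the newest releases are not recommended.

Versions 1.24, 1.25 and later all run into this problem.

After downgrading to a 1.22-or-earlier release, initialization went straight through.

(1.20.2 did not pass either; only 1.22.11 did.)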

sudo apt-get install -y kubelet=1.22.11-00 kubeadm=1.22.11-00 kubectl=1.22.11-00
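The background to this version boundary is that Kubernetes removed its built-in dockershim in 1.24, so from that release on the kubelet needs a CRI runtime such as containerd rather than talking to Docker directly. A small sketch for checking a version string against that boundary follows; has_dockershim is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a Kubernetes version still ships dockershim (1.x below 1.24).
# Accepts forms such as v1.22.11, 1.22.11 or the apt style 1.22.11-00.
has_dockershim() {
  ver="${1#v}"          # drop a leading "v"
  major="${ver%%.*}"    # text before the first dot
  rest="${ver#*.}"
  minor="${rest%%.*}"   # text between the first and second dot
  [ "$major" -eq 1 ] && [ "$minor" -lt 24 ]
}

# Example:
# has_dockershim 1.22.11-00 && echo "Docker works as the runtime without extra setup"
```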

root@master-virtual-machine:/# sudo kubeadm init \

--apiserver-advertise-address=192.168.58.128 \

--control-plane-endpoint=master \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

I1122 20:20:53.108441 9316 version.go:255] remote version is much newer: v1.25.4; falling back to: stable-1.22

[init] Using Kubernetes version: v1.22.16

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master master-virtual-machine] and IPs [10.96.0.1 192.168.58.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master-virtual-machine] and IPs [192.168.58.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master-virtual-machine] and IPs [192.168.58.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.507226 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master-virtual-machine as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master-virtual-machine as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: vboxf5.4lxl1wjk2waqd0ad

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join master:6443 --token vboxf5.4lxl1wjk2waqd0ad \

--discovery-token-ca-cert-hash sha256:9cbb5fb089cd59805d7919f8c7399fc235cbaff6c2c8f9b376413b15a4e0bb37 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master:6443 --token vboxf5.4lxl1wjk2waqd0ad \

--discovery-token-ca-cert-hash sha256:9cbb5fb089cd59805d7919f8c7399fc235cbaff6c2c8f9b376413b15a4e0bb37

Node hangs when joining the cluster

Run on the master:

kubeadm token create --print-join-command

Output:

kubeadm join master:6443 --token ajbsip.biy32ut5t29pezvx --discovery-token-ca-cert-hash sha256:445b80b9e6f44dd9aa1c78599e6e9a789543ed9fec58db03236d870e4f40c016

Run the printed command on the node:

root@node-virtual-machine:/etc# kubeadm join master:6443 --token ajbsip.biy32ut5t29pezvx --discovery-token-ca-cert-hash sha256:445b80b9e6f44dd9aa1c78599e6e9a789543ed9fec58db03236d870e4f40c016

[preflight] Running pre-flight checks

[WARNING SystemVerification]: missing optional cgroups: blkio

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: E1129 20:04:40.892054 54777 remote_runtime.go:948] "Status from runtime service failed" err="rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService"

time="2022-11-29T20:04:40+08:00" level=fatal msg="getting status of runtime: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService"

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

(So frustrating!)

root@master01:~# kubeadm init phase upload-certs --upload-certs

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

8e4961700ae059535f4bc60bc64ae9f6e9badb113f4eb2f8d8253a3a0a92b5f3

# Run on the master, this prints a command that a worker node can execute as-is to join the cluster, provided the node has been initialized

kubeadm token create --print-join-command

kubeadm join 192.168.195.128:6443 --token 2cl24c.zuuuv813otnocq38 --discovery-token-ca-cert-hash sha256:22c12a103ae84f0f7eeace12ed8d98db392cb42782bfe50a205f73f4013b471a

# So the command for joining as another master (control-plane) node is:

kubeadm join 192.168.195.128:6443 --token 2cl24c.zuuuv813otnocq38 \

--discovery-token-ca-cert-hash sha256:22c12a103ae84f0f7eeace12ed8d98db392cb42782bfe50a205f73f4013b471a \

--control-plane --certificate-key 8e4961700ae059535f4bc60bc64ae9f6e9badb113f4eb2f8d8253a3a0a92b5f3

Reset and start over:

kubeadm reset

kubelet does not start on the node

root@edge1-virtual-machine:/# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Tue 2022-11-29 23:13:59 CST; 5s ago

Docs: https://kubernetes.io/docs/home/

Process: 12755 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status>

Main PID: 12755 (code=exited, status=1/FAILURE)

CPU: 168ms

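Log (the kubelet exits because /var/lib/kubelet/config.yaml does not exist yet; kubeadm join creates that file):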

11月 29 23:24:11 edge1-virtual-machine kubelet[13251]: E1129 23:24:11.709838 13251 run.go:74] "command failed" err="failed to load kubelet config file, error: failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to read kubelet config file \"/var/lib/kubelet/config.yaml\", error: open /var/lib/kubelet/config.yaml: no such file or directory, path: /var/lib/kubelet/config.yaml"

11月 29 23:24:11 edge1-virtual-machine systemd[1]: kubelet.service: Main process exited, code=exited, status=1/FAILURE

░░ Subject: Unit process exited

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ An ExecStart= process belonging to unit kubelet.service has exited.

░░

░░ The process' exit code is 'exited' and its exit status is 1.

11月 29 23:24:11 edge1-virtual-machine systemd[1]: kubelet.service: Failed with result 'exit-code'.

░░ Subject: Unit failed

░░ Defined-By: systemd

░░ Support: http://www.ubuntu.com/support

░░

░░ The unit kubelet.service has entered the 'failed' state with result 'exit-code'.

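sudo apt-get upgrade reports E: Could not get lock /var/lib/dpkg/lock-frontend. It is held by process 3963 (unattended-upgr)

E: Could not get lock /var/lib/dpkg/lock-frontend. It is held by process 3963 (unattended-upgr)

N: Be aware that removing the lock file is not a solution and may break your system.

E: Unable to acquire the dpkg frontend lock (/var/lib/dpkg/lock-frontend), is another process using it?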

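Force-remove the lock (as the message above warns, this may break the system; waiting for process 3963 to finish is the safer route):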

rm -r /var/lib/dpkg/lock-frontend
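A gentler alternative is to wait for the process named in the error to exit and only then retry. The sketch below polls /proc, so it is Linux-only; wait_for_pid_exit is a made-up helper, and the 600-second timeout in the example is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: wait until a process has exited, or give up after a timeout.
# $1: PID to watch, $2: timeout in seconds. Returns 0 once the PID is gone, 1 on timeout.
wait_for_pid_exit() {
  pid="$1"; remaining="$2"
  while [ -e "/proc/$pid" ]; do
    if [ "$remaining" -le 0 ]; then
      return 1
    fi
    sleep 1
    remaining=$((remaining - 1))
  done
  return 0
}

# Example, with the PID taken from the error message above:
# wait_for_pid_exit 3963 600 && sudo apt-get upgrade
```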

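Joining the node to the cluster: installing kubeadm, kubelet and kubectl

My first thought was that the node does not need kubeadm, kubelet and kubectl at all: joining the cluster is just kubeadm join…, so why bother.

The catch is that without kubeadm installed, the kubeadm join command cannot be run.

More on this later.

Installing the network plugin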

root@ubuntu:/home/master# curl https://docs.projectcalico.org/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 73 100 73 0 0 133 0 --:--:-- --:--:-- --:--:-- 133

root@ubuntu:/home/master# sudo kubectl apply -f calico.yaml

error: error validating "calico.yaml": error validating data: invalid object to validate; if you choose to ignore these errors, turn validation off with --validate=false

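Once again, a version problem.

The k8s version does not support this Calico version; compatibility can be checked on the official site. (The download above is also only 73 bytes, so it cannot be the full manifest.)

https://projectcalico.docs.tigera.io/archive/v3.20/getting-started/kubernetes/requirements

Look up the matching version there.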

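After that it worked. If it still fails, try a different network, a reboot, and so on.

The campus network I used before did not work well, which may have been the cause.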
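A quick sanity check on the downloaded file catches a bad or truncated download before kubectl sees it. This is a rough heuristic rather than a real validation; looks_like_manifest is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if a file looks like a Kubernetes manifest,
# i.e. it contains at least one top-level "kind:" line. An error page or redirect stub will not.
looks_like_manifest() {
  grep -q '^kind:' "$1"
}

# Example (-f makes curl fail on HTTP errors, -L follows redirects):
# curl -fL -o calico.yaml https://docs.tigera.io/archive/v3.22/manifests/calico.yaml &&
#   looks_like_manifest calico.yaml && kubectl apply -f calico.yaml
```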

root@ubuntu:/home/master# curl https://docs.tigera.io/archive/v3.22/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 218k 100 218k 0 0 163k 0 0:00:01 0:00:01 --:--:-- 163k

root@ubuntu:/home/master# sudo kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

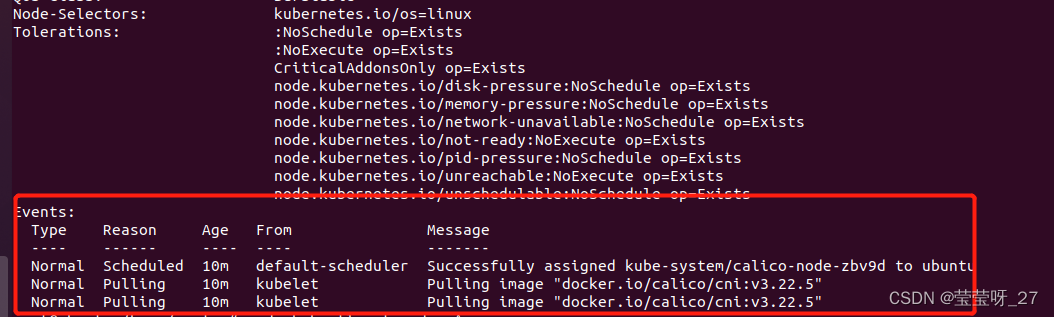

Two network plugin pods show Pending and Init:0/4

Inspect the pod:

kubectl describe pods calico-node-zbv9d -n kube-system

Switch to a different network.

keadm init reports another operation (install/upgrade/rollback) is in progress

root@ubuntu:/# sudo keadm init --advertise-address=192.168.58.128 --kubeedge-version=1.12.1

Kubernetes version verification passed, KubeEdge installation will start...

Error: another operation (install/upgrade/rollback) is in progress

Usage:

keadm init [flags]

Examples:

keadm init

- This command will render and install the Charts for Kubeedge cloud component

keadm init --advertise-address=127.0.0.1 --profile version=v1.9.0 --kube-config=/root/.kube/config

- kube-config is the absolute path of kubeconfig which used to secure connectivity between cloudcore and kube-apiserver

- a list of helm style set flags like "--set key=value" can be implemented, ref: https://github.com/kubeedge/kubeedge/tree/master/manifests/charts/cloudcore/README.md

Flags:

--advertise-address string Use this key to set IPs in cloudcore's certificate SubAltNames field. eg: 10.10.102.78,10.10.102.79

-d, --dry-run Print the generated k8s resources on the stdout, not actual excute. Always use in debug mode

--external-helm-root string Add external helm root path to keadm.

-f, --files string Allow appending file directories of k8s resources to keadm, separated by commas

--force Forced installing the cloud components without waiting.

-h, --help help for init

--kube-config string Use this key to set kube-config path, eg: $HOME/.kube/config (default "/root/.kube/config")

--kubeedge-version string Use this key to set the default image tag

--manifests string Allow appending file directories of k8s resources to keadm, separated by commas

--profile string Set profile on the command line (iptablesMgrMode=external or version=v1.9.1)

--set stringArray Set values on the command line (can specify multiple or separate values with commas: key1=val1,key2=val2)

execute keadm command failed: another operation (install/upgrade/rollback) is in progress

root@ubuntu:/# kubectl get pod -n kubeedge

NAME READY STATUS RESTARTS AGE

cloudcore-5876c76687-wnnll 0/1 Pending 0 4d1h

cloudcore-7c6869c5bf-nb47d 0/1 Pending 0 3d23h

root@ubuntu:/# kubectl get all -n kubeedge

NAME READY STATUS RESTARTS AGE

pod/cloudcore-5876c76687-wnnll 0/1 Pending 0 4d2h

pod/cloudcore-7c6869c5bf-nb47d 0/1 Pending 0 4d

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cloudcore ClusterIP 10.101.109.217 <none> 10000/TCP,10001/TCP,10002/TCP,10003/TCP,10004/TCP 4d2h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cloudcore 0/1 1 0 4d2h

NAME DESIRED CURRENT READY AGE

replicaset.apps/cloudcore-5876c76687 1 1 0 4d2h

replicaset.apps/cloudcore-7c6869c5bf 1 1 0 4d
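Both cloudcore pods in the listing above are stuck in Pending, which likely means an earlier install never finished. As a rough sketch (the name `count_pending` is my own and not part of keadm or kubectl), the STATUS column can be counted before deciding whether a reset is needed:

```shell
# count_pending: read `kubectl get pod` (or `kubectl get all`) output on stdin
# and print how many rows have STATUS == Pending.
# Hypothetical helper, not a keadm or kubectl feature.
count_pending() {
    # Column 3 is STATUS for pod rows; header and service rows never equal "Pending".
    awk '$3 == "Pending" { n++ } END { print n + 0 }'
}

# Usage on a real cluster (commented out so the sketch runs without kubectl):
#   kubectl get pod -n kubeedge | count_pending
```

A non-zero count here is a hint that the previous `keadm init` is still half-installed.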

Reset with keadm reset:

root@ubuntu:/# keadm reset

[reset] WARNING: Changes made to this host by 'keadm init' or 'keadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

Then initialize again:

root@ubuntu:/# sudo keadm init --advertise-address=192.168.58.128 --kubeedge-version=1.12.1

Kubernetes version verification passed, KubeEdge installation will start...

Error: timed out waiting for the condition

Usage:

keadm init [flags]

Examples:

keadm init

- This command will render and install the Charts for Kubeedge cloud component

keadm init --advertise-address=127.0.0.1 --profile version=v1.9.0 --kube-config=/root/.kube/config

- kube-config is the absolute path of kubeconfig which used to secure connectivity between cloudcore and kube-apiserver

- a list of helm style set flags like "--set key=value" can be implemented, ref: https://github.com/kubeedge/kubeedge/tree/master/manifests/charts/cloudcore/README.md

Flags:

--advertise-address string Use this key to set IPs in cloudcore's certificate SubAltNames field. eg: 10.10.102.78,10.10.102.79

-d, --dry-run Print the generated k8s resources on the stdout, not actual excute. Always use in debug mode

--external-helm-root string Add external helm root path to keadm.

-f, --files string Allow appending file directories of k8s resources to keadm, separated by commas

--force Forced installing the cloud components without waiting.

-h, --help help for init

--kube-config string Use this key to set kube-config path, eg: $HOME/.kube/config (default "/root/.kube/config")

--kubeedge-version string Use this key to set the default image tag

--manifests string Allow appending file directories of k8s resources to keadm, separated by commas

--profile string Set profile on the command line (iptablesMgrMode=external or version=v1.9.1)

--set stringArray Set values on the command line (can specify multiple or separate values with commas: key1=val1,key2=val2)

execute keadm command failed: timed out waiting for the condition

keadm init Error: timed out waiting for the condition

root@ubuntu:/# sudo keadm init --advertise-address=192.168.58.128 --kubeedge-version=1.12.1

Kubernetes version verification passed, KubeEdge installation will start...

Error: timed out waiting for the condition

Usage:

keadm init [flags]

Examples:

keadm init

- This command will render and install the Charts for Kubeedge cloud component

keadm init --advertise-address=127.0.0.1 --profile version=v1.9.0 --kube-config=/root/.kube/config

- kube-config is the absolute path of kubeconfig which used to secure connectivity between cloudcore and kube-apiserver

- a list of helm style set flags like "--set key=value" can be implemented, ref: https://github.com/kubeedge/kubeedge/tree/master/manifests/charts/cloudcore/README.md

Flags:

--advertise-address string Use this key to set IPs in cloudcore's certificate SubAltNames field. eg: 10.10.102.78,10.10.102.79

-d, --dry-run Print the generated k8s resources on the stdout, not actual excute. Always use in debug mode

--external-helm-root string Add external helm root path to keadm.

-f, --files string Allow appending file directories of k8s resources to keadm, separated by commas

--force Forced installing the cloud components without waiting.

-h, --help help for init

--kube-config string Use this key to set kube-config path, eg: $HOME/.kube/config (default "/root/.kube/config")

--kubeedge-version string Use this key to set the default image tag

--manifests string Allow appending file directories of k8s resources to keadm, separated by commas

--profile string Set profile on the command line (iptablesMgrMode=external or version=v1.9.1)

--set stringArray Set values on the command line (can specify multiple or separate values with commas: key1=val1,key2=val2)

execute keadm command failed: timed out waiting for the condition

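The KubeEdge website lists a series of common installation problems; its explanation of the "timed out waiting for the condition" case is shown in the figure below.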

root@ubuntu:/# kubectl get all -nkubeedge

NAME READY STATUS RESTARTS AGE

pod/cloudcore-5876c76687-gf7n7 0/1 Pending 0 21h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cloudcore ClusterIP 10.98.127.81 <none> 10000/TCP,10001/TCP,10002/TCP,10003/TCP,10004/TCP 21h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cloudcore 0/1 1 0 21h

NAME DESIRED CURRENT READY AGE

replicaset.apps/cloudcore-5876c76687 1 1 0 21h

View the pod's description:

root@ubuntu:/# kubectl describe pod cloudcore-5876c76687-gf7n7 -nkubeedge

Name: cloudcore-5876c76687-gf7n7

Namespace: kubeedge

Priority: 0

Node: <none>

Labels: k8s-app=kubeedge

kubeedge=cloudcore

pod-template-hash=5876c76687

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/cloudcore-5876c76687

Containers:

cloudcore:

Image: kubeedge/cloudcore:v1.12.1

Ports: 10000/TCP, 10001/TCP, 10002/TCP, 10003/TCP, 10004/TCP

Host Ports: 10000/TCP, 10001/TCP, 10002/TCP, 10003/TCP, 10004/TCP

Limits:

cpu: 200m

memory: 1Gi

Requests:

cpu: 100m

memory: 512Mi

Environment: <none>

Mounts:

/etc/kubeedge from certs (rw)

/etc/kubeedge/config from conf (rw)

/etc/localtime from host-time (ro)

/var/lib/kubeedge from sock (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-pmcqv (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

conf:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: cloudcore

Optional: false

certs:

Type: Secret (a volume populated by a Secret)

SecretName: cloudcore

Optional: false

sock:

Type: HostPath (bare host directory volume)

Path: /var/lib/kubeedge

HostPathType: DirectoryOrCreate

host-time:

Type: HostPath (bare host directory volume)

Path: /etc/localtime

HostPathType:

kube-api-access-pmcqv:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 46s (x60 over 21h) default-scheduler 0/1 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.

Look at the Warning shown under Events at the very bottom.

Taints and tolerations...

View the taint information:

root@ubuntu:/# kubectl get no -o yaml | grep taint -A 5

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

status:

addresses:

- address: 192.168.58.128
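The grep above also drags in unrelated `status` lines. A small sketch that keeps only the taint keys follows; `taint_keys` is a hypothetical helper name, and it assumes the YAML layout shown above, where every taint carries a `key:` field:

```shell
# taint_keys: read `kubectl get no -o yaml` on stdin and print each taint key.
# Hypothetical helper; it assumes a bare "key:" token only shows up inside the
# taints list within the grep context, as in the output shown above.
taint_keys() {
    grep -A 5 'taints:' |
        awk '{ for (i = 1; i < NF; i++) if ($i == "key:") print $(i + 1) }'
}

# Usage on a real cluster (commented out so the sketch runs without kubectl):
#   kubectl get no -o yaml | taint_keys
```

kubectl can also print this directly with `kubectl get nodes -o custom-columns=NAME:.metadata.name,TAINTS:.spec.taints[*].key`.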

Solution

Remove the taint from the master node:

root@ubuntu:/# kubectl taint nodes --all node-role.kubernetes.io/master-

node/ubuntu untainted

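Command to re-apply the taint on the master node later (k8s is the node name here; substitute your own, which is ubuntu in the output above):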

kubectl taint nodes k8s node-role.kubernetes.io/master=true:NoSchedule

v1.10.0 does not have this problem...
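The two taint commands above are easy to mistype, so here is a minimal sketch that only prints them for review. `taint_cmd` is a hypothetical helper; the default key is the one from the Events message, and the taint is restored without a value because the original taint had none. Newer Kubernetes releases use a `control-plane` key instead, so check the FailedScheduling message for the exact key on your cluster.

```shell
# taint_cmd ACTION NODE [KEY]: print the kubectl command that removes or
# re-adds a NoSchedule taint. Hypothetical helper; it only prints the command
# so it can be reviewed before being run by hand.
taint_cmd() {
    action=$1
    node=$2
    key=${3:-node-role.kubernetes.io/master}   # key taken from the Events message
    case "$action" in
        remove)  echo "kubectl taint nodes $node $key:NoSchedule-" ;;
        restore) echo "kubectl taint nodes $node $key:NoSchedule" ;;
        *)       echo "usage: taint_cmd remove|restore NODE [KEY]" >&2; return 1 ;;
    esac
}

taint_cmd remove ubuntu
taint_cmd restore ubuntu
```

Removing the taint lets ordinary pods such as cloudcore schedule onto the only node; on a multi-node cluster it may be preferable to leave the taint in place and run cloudcore on a worker.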

keadm join, edge node joining the cluster: image error

sudo keadm join --cloudcore-ipport=192.168.58.128:10000 --token=f8548c503b29218a9f72c935c0643ec0ce8d066e349b71c7270248ccf549ec16.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2ODEzMDg3MjB9.wO19krdazmd0EOkEm2s1V7LkAQqFQokykHonOXe0djE

I0412 07:08:16.274669 2221 command.go:845] 1. Check KubeEdge edgecore process status

I0412 07:08:16.304693 2221 command.go:845] 2. Check if the management directory is clean

I0412 07:08:21.870202 2221 join.go:100] 3. Create the necessary directories

I0412 07:08:21.872891 2221 join.go:176] 4. Pull Images

Pulling kubeedge/pause:3.1 ...

Successfully pulled kubeedge/pause:3.1

Pulling kubeedge/installation-package:v1.12.1 ...

Successfully pulled kubeedge/installation-package:v1.12.1

Pulling eclipse-mosquitto:1.6.15 ...

Error: edge node join failed: pull Images failed: Error response from daemon: Get "https://registry-1.docker.io/v2/": dial tcp: lookup registry-1.docker.io: Temporary failure in name resolution

execute keadm command failed: edge node join failed: pull Images failed: Error response from daemon: Get "https://registry-1.docker.io/v2/": dial tcp: lookup registry-1.docker.io: Temporary failure in name resolution
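"Temporary failure in name resolution" points at DNS on the edge node rather than at keadm itself. A quick sketch for checking that before retrying the join; `can_resolve` is a hypothetical helper, and it assumes `getent` is available, which queries the system resolver and may not match the Docker daemon's view exactly:

```shell
# can_resolve HOST: succeed if the system resolver can look up HOST.
# Hypothetical helper built on getent, which follows /etc/nsswitch.conf.
can_resolve() {
    getent hosts "$1" >/dev/null 2>&1
}

# Usage on the edge node (commented out so the sketch does not need network access):
#   if can_resolve registry-1.docker.io; then
#       echo "DNS ok, retry keadm join"
#   else
#       echo "DNS failure: check /etc/resolv.conf or the VM network" >&2
#   fi
```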

Explanation from the official website:

keadm join, edge node joining the cluster: file copy error

root@ubuntu:/home/node1# sudo keadm join --cloudcore-ipport=192.168.58.128:10000 --token=f8548c503b29218a9f72c935c0643ec0ce8d066e349b71c7270248ccf549ec16.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2ODEzMDg3MjB9.wO19krdazmd0EOkEm2s1V7LkAQqFQokykHonOXe0djE

I0412 08:30:35.987033 2981 command.go:845] 1. Check KubeEdge edgecore process status

I0412 08:30:36.004307 2981 command.go:845] 2. Check if the management directory is clean

I0412 08:30:37.856531 2981 join.go:100] 3. Create the necessary directories

I0412 08:30:37.858055 2981 join.go:176] 4. Pull Images

Pulling eclipse-mosquitto:1.6.15 ...

Successfully pulled eclipse-mosquitto:1.6.15

Pulling kubeedge/installation-package:v1.12.1 ...

Successfully pulled kubeedge/installation-package:v1.12.1

Pulling kubeedge/pause:3.1 ...

I0413 06:30:52.276493 2981 join.go:176] 5. Copy resources from the image to the management directory

Error: edge node join failed: copy resources failed: Error response from daemon: No such image: kubeedge/installation-package:v1.12.1

execute keadm command failed: edge node join failed: copy resources failed: Error response from daemon: No such image: kubeedge/installation-package:v1.12.1

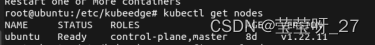

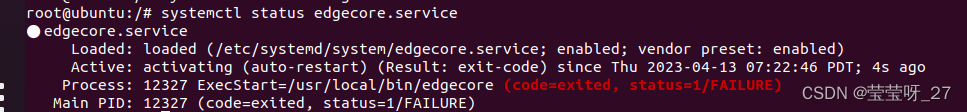

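keadm join, edge node joining the cluster: the master node cannot see the edge node

After the edge side joins successfully for the first time, it reports a successful start, but after a while edgecore dies on its own.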

root@ubuntu:/# systemctl status edgecore.service

● edgecore.service

Loaded: loaded (/etc/systemd/system/edgecore.service; enabled; vendor preset: enabled)

Active: activating (auto-restart) (Result: exit-code) since Thu 2023-04-13 07:22:46 PDT; 4s ago

Process: 12327 ExecStart=/usr/local/bin/edgecore (code=exited, status=1/FAILURE)

Main PID: 12327 (code=exited, status=1/FAILURE)

Check the logs on the edge side:

root@ubuntu:/# journalctl -u edgecore -n 50

systemctl daemon-reload

systemctl restart docker

systemctl restart edgecore.service

systemctl status edgecore.service
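Because edgecore can look healthy right after a restart and then fail a few seconds later, a single `systemctl status` may be misleading. The sketch below polls instead; `retry` is a hypothetical helper, and the attempt count and delay in the usage comment are arbitrary choices:

```shell
# retry ATTEMPTS DELAY CMD...: run CMD until it succeeds, at most ATTEMPTS
# times, sleeping DELAY seconds between tries. Hypothetical helper.
retry() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        i=$((i + 1))
        # Skip the sleep after the final failed attempt.
        [ "$i" -le "$attempts" ] && sleep "$delay"
    done
    return 1
}

# Usage on the edge node (commented out so the sketch runs without systemd):
#   retry 10 3 systemctl is-active --quiet edgecore.service \
#       || journalctl -u edgecore -n 50 --no-pager
```

If the service never becomes active, the journal output printed at the end is the place to look for the actual cause.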

Node joining the cluster: error 1

Useless output, it tells you nothing:

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[kubelet-check] Initial timeout of 40s passed.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp 127.0.0.1:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp 127.0.0.1:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp 127.0.0.1:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp 127.0.0.1:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp 127.0.0.1:10248: connect: connection refused.

error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

To see the stack trace of this error execute with --v=5 or higher

Node joining the cluster: error 2

root@edge1-virtual-machine:/home/edge1# kubeadm join 192.168.58.128:6443 --token ch11xz.czk96sc8eavmh0zz --discovery-token-ca-cert-hash sha256:5312b7877ad6e846dcb0335af5c2482faacb6cb54d15b85f98e7a4ce7bb84244

[preflight] Running pre-flight checks

[WARNING SystemVerification]: missing optional cgroups: blkio

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get component configs: could not download the kubelet configuration from ConfigMap "kubelet-config": configmaps "kubelet-config" is forbidden: User "system:bootstrap:ch11xz" cannot get resource "configmaps" in API group "" in the namespace "kube-system"

To see the stack trace of this error execute with --v=5 or higher

The kubeadm versions on the master and the node differ. Check on both machines with:

kubeadm version
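To compare the two machines without eyeballing the full version output, the values can be reduced to major.minor. This is only a sketch: `same_minor` is a hypothetical helper, and the version numbers in the example are made-up placeholders rather than the ones from this setup.

```shell
# same_minor A B: succeed if two versions such as v1.22.3 and v1.22.9 share
# the same major.minor. Hypothetical helper for comparing master and node.
same_minor() {
    a=$(printf '%s\n' "$1" | sed 's/^v//' | cut -d. -f1,2)
    b=$(printf '%s\n' "$2" | sed 's/^v//' | cut -d. -f1,2)
    [ "$a" = "$b" ]
}

# Run `kubeadm version -o short` on each machine and paste both values here.
# The versions below are placeholders.
if same_minor v1.22.3 v1.22.9; then
    echo "minor versions match"
else
    echo "minor versions differ: install the same kubeadm on both machines"
fi
```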

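Stuck here for another half a month; I wanted to smash my computer. Ugh.

Master and node have the same name

Change the hostname:

1. Open the file in either of two ways and change its content to the name you want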

sudo vi /etc/hostname

sudo gedit /etc/hostname

2. Change the hostname in /etc/hosts too; open the file in either of two ways

sudo vi /etc/hosts

sudo gedit /etc/hosts

3. reboot
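Before editing the files in the steps above, it may help to check that the new name is one Kubernetes will accept and that it differs from the master's. The sketch below is a deliberately conservative check (a single lowercase RFC 1123 label); `valid_node_name` is a hypothetical helper, and `edge1` in the usage comment is only an example name.

```shell
# valid_node_name NAME: succeed if NAME is a lowercase RFC 1123 label:
# letters, digits and '-', starting and ending with a letter or digit,
# at most 63 characters. Stricter than what Kubernetes actually requires.
valid_node_name() {
    [ "${#1}" -le 63 ] || return 1
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]([a-z0-9-]*[a-z0-9])?$'
}

# Usage (commented out because it changes the machine's hostname):
#   new=edge1
#   valid_node_name "$new" && [ "$new" != "ubuntu" ] \
#       && sudo hostnamectl set-hostname "$new"
```

On systemd-based Ubuntu, `hostnamectl set-hostname` updates /etc/hostname for you; /etc/hosts still has to be edited by hand.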

func (ehd *EdgeHealthDaemon) SyncNodeList() {
	// Only sync nodes when self-located found
	var host *v1.Node
	if host = ehd.metadata.GetNodeByName(ehd.cfg.Node.HostName); host == nil {
		klog.Errorf("Self-hostname %s not found", ehd.cfg.Node.HostName)
		return
	}

	// Filter cloud nodes and retain edge ones
	masterRequirement, err := labels.NewRequirement(common.MasterLabel, selection.DoesNotExist, []string{})
	if err != nil {
		klog.Errorf("New masterRequirement failed %+v", err)
		return
	}
	masterSelector := labels.NewSelector()
	masterSelector = masterSelector.Add(*masterRequirement)

	if mrc, err := ehd.cmLister.ConfigMaps(metav1.NamespaceSystem).Get(common.TaintZoneConfigMap); err != nil {
		if apierrors.IsNotFound(err) { // multi-region configmap not found
			if NodeList, err := ehd.nodeLister.List(masterSelector); err != nil {
				klog.Errorf("Multi-region configmap not found and get nodes err %+v", err)
				return
			} else {
				ehd.metadata.SetByNodeList(NodeList)
			}
		} else {
			klog.Errorf("Get multi-region configmap err %+v", err)
			return
		}
	} else { // multi-region configmap found
		mrcv := mrc.Data[common.TaintZoneConfigMapKey]
		klog.V(4).Infof("Multi-region value is %s", mrcv)
		if mrcv == "false" { // close multi-region check
			if NodeList, err := ehd.nodeLister.List(masterSelector); err != nil {
				klog.Errorf("Multi-region configmap exist but disabled and get nodes err %+v", err)
				return
			} else {
				ehd.metadata.SetByNodeList(NodeList)
			}
		} else { // open multi-region check
			if hostZone, existed := host.Labels[common.TopologyZone]; existed {
				klog.V(4).Infof("Host %s has HostZone %s", host.Name, hostZone)
				zoneRequirement, err := labels.NewRequirement(common.TopologyZone, selection.Equals, []string{hostZone})
				if err != nil {
					klog.Errorf("New masterZoneRequirement failed: %+v", err)
					return
				}
				masterZoneSelector := labels.NewSelector()
				masterZoneSelector = masterZoneSelector.Add(*masterRequirement, *zoneRequirement)
				if nodeList, err := ehd.nodeLister.List(masterZoneSelector); err != nil {
					klog.Errorf("TopologyZone label for hostname %s but get nodes err: %+v", host.Name, err)
					return
				} else {
					ehd.metadata.SetByNodeList(nodeList)
				}
			} else { // Only check itself if there is no TopologyZone label
				klog.V(4).Infof("Only check itself since there is no TopologyZone label for hostname %s", host.Name)
				ehd.metadata.SetByNodeList([]*v1.Node{host})
			}
		}
	}

	// Init check plugin score
	ipList := make(map[string]struct{})
	for _, node := range ehd.metadata.Copy() {
		for _, addr := range node.Status.Addresses {
			if addr.Type == v1.NodeInternalIP {
				ipList[addr.Address] = struct{}{}
				ehd.metadata.InitCheckPluginScore(addr.Address)
			}
		}
	}

	// Delete redundant check plugin score
	for _, checkedIp := range ehd.metadata.CopyCheckedIp() {
		if _, existed := ipList[checkedIp]; !existed {
			ehd.metadata.DeleteCheckPluginScore(checkedIp)
		}
	}

	// Delete redundant check info
	for checkerIp := range ehd.metadata.CopyAll() {
		if _, existed := ipList[checkerIp]; !existed {
			ehd.metadata.DeleteByIp(ehd.cfg.Node.LocalIp, checkerIp)
		}
	}

	klog.V(4).Infof("SyncNodeList check info %+v successfully", ehd.metadata)
}

...

func (cm *CheckMetadata) DeleteByIp(localIp, ip string) {
	cm.Lock()
	defer cm.Unlock()
	delete(cm.CheckInfo[localIp], ip)
	delete(cm.CheckInfo, ip)
}

Other operations

Restart docker and kubelet:

systemctl restart docker

systemctl restart kubelet

Check kubelet's status:

systemctl status kubelet

View the logs:

journalctl -xefu kubelet

https://github.com/kubeedge/kubeedge/releases/