
Slurm Cluster Deployment

Author: 神奇cpp | 2024-08-22 16:16:47


I. Environment Preparation

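Everything in this guide was installed and deployed on ESXi virtual machines running CentOS 7, a widely used and stable distribution. Porting the steps to other Linux distributions should not be difficult. Creating the ESXi virtual machines themselves is not covered here.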

1.1 Server configuration

| IP | Hostname | Spec (CPU/RAM/disk) | Role |
|---|---|---|---|
| 192.168.0.23 | master | 4C/8G/80G | Management and storage node |
| 192.168.0.24 | node01 | 4C/8G/80G | Compute node |
| 192.168.0.25 | node02 | 4C/8G/80G | Compute node |

II. Preliminary Work

2.1 Tune the system and kernel with a script (run on every machine; adjust the settings to your environment)

```shell
#!/bin/bash
# One-click server tuning tool

function define_check_network() {
    echo "Hostname: $(hostname -f)"
    ping www.baidu.com -c 6
}

function define_yum() {
    # Disable SELinux (takes effect after a reboot)
    sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config
    # Install common tools
    yum clean all
    yum -y install bash vim wget curl sysstat gcc gcc-c++ make lsof sudo unzip openssh-clients net-tools systemd rpm yum rsyslog logrotate crontabs python-libs centos-release p7zip file
    # yum -y update && yum -y upgrade
}

function define_tuning_services() {
    # Stop unneeded services (note: section 2.8 re-enables chronyd)
    systemctl stop postfix firewalld chronyd cups
    # Keep them from starting at boot
    systemctl disable postfix firewalld chronyd cups
    echo "Non-essential system services have been disabled"
}

function define_tuning_kernel() {
    # Kernel parameter tuning
    echo "Tuning kernel parameters"
    cp /etc/sysctl.conf /etc/sysctl.conf.bak
    cat /dev/null > /etc/sysctl.conf
    cat >> /etc/sysctl.conf << EOF
## Default kernel parameters
kernel.sysrq = 0
kernel.core_uses_pid = 1
kernel.msgmnb = 65536
kernel.msgmax = 65536
kernel.shmmax = 68719476736
kernel.shmall = 4294967296
kernel.sem=500 64000 64 256
## Open-file limit (20*1024*1024)
fs.file-max= 20971520
## Web server parameters
net.ipv4.tcp_tw_reuse=1
net.ipv4.tcp_tw_recycle=1
net.ipv4.tcp_fin_timeout=30
net.ipv4.tcp_keepalive_time=1200
net.ipv4.ip_local_port_range = 1024 65535
net.ipv4.tcp_rmem=4096 87380 8388608
net.ipv4.tcp_wmem=4096 87380 8388608
net.ipv4.tcp_max_syn_backlog=8192
net.ipv4.tcp_max_tw_buckets = 5000
## Additional TCP parameters
net.ipv4.ip_forward = 1
net.ipv4.conf.default.rp_filter = 1
net.ipv4.conf.default.accept_source_route = 0
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_sack = 1
net.ipv4.tcp_window_scaling = 1
net.core.wmem_default = 8388608
net.core.rmem_default = 8388608
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.core.netdev_max_backlog = 262144
net.core.somaxconn = 65535
net.ipv4.tcp_max_orphans = 3276800
net.ipv4.tcp_timestamps = 0
net.ipv4.tcp_synack_retries = 1
net.ipv4.tcp_syn_retries = 1
net.ipv4.tcp_mem = 94500000 915000000 927000000
## Disable IPv6
net.ipv6.conf.all.disable_ipv6 =1
net.ipv6.conf.default.disable_ipv6 =1
## Swap usage
vm.swappiness=0
EOF
    echo "System parameters set"
}

function define_tuning_system() {
    # Guard against a second run: the noatime edit below marks a previous run
    if [ "$(grep -c noatime /etc/fstab)" = 0 ]; then
        echo "First run of the script"
    else
        echo "The script has already been run; check for errors manually"
        exit 1
    fi
    # Disk I/O: mount xfs filesystems with noatime
    sed -i '/xfs/s/defaults/defaults,noatime/' /etc/fstab
    ## nproc setting for CentOS 6 only
    #sed -i 's/1024/65535/' /etc/security/limits.d/90-nproc.conf
    ## nproc setting for CentOS 7 only
    sed -i 's/4096/524288/' /etc/security/limits.d/20-nproc.conf
    # Maximum number of open files
    echo "* soft nofile 1024000" >> /etc/security/limits.conf
    echo "* hard nofile 1024000" >> /etc/security/limits.conf
    # Maximum number of processes
    echo "* soft nproc 1024000" >> /etc/security/limits.conf
    echo "* hard nproc 1024000" >> /etc/security/limits.conf
    echo "session required /lib64/security/pam_limits.so" >> /etc/pam.d/login
    # Global environment settings
    echo 'export TMOUT=600' >> /etc/profile
    echo 'export TIME_STYLE="+%Y/%m/%d %H:%M:%S"' >> /etc/profile
    echo 'export HISTTIMEFORMAT="%F %T `whoami` "' >> /etc/profile
    echo 'unset MAILCHECK' >> /etc/profile
    sed -i '/HISTSIZE/s/1000/12000/' /etc/profile
    source /etc/profile
    # Suppress noisy systemd session messages in syslog
    echo 'if $programname == "systemd" and ($msg contains "Starting Session" or $msg contains "Started Session" or $msg contains "Created slice" or $msg contains "Starting user-" or $msg contains "Starting User Slice of" or $msg contains "Removed session" or $msg contains "Removed slice User Slice of" or $msg contains "Stopping User Slice of") then stop' > /etc/rsyslog.d/ignore-systemd-session-slice.conf
    systemctl restart rsyslog
    # Permission hardening
    # echo 'umask 0022' >> /etc/profile
    # Disable reboot on Ctrl+Alt+Del
    systemctl mask ctrl-alt-del.target
    # Boot into multi-user (text) mode
    systemctl set-default multi-user.target
    # Disable transparent huge pages at boot
    chmod +x /etc/rc.d/rc.local
    echo "echo never > /sys/kernel/mm/transparent_hugepage/enabled" >> /etc/rc.d/rc.local
    echo "echo never > /sys/kernel/mm/transparent_hugepage/defrag" >> /etc/rc.d/rc.local
    ## Enable log compression
    sed -i 's/^#compress/compress/' /etc/logrotate.conf
    ## Remove weak SSH ciphers
    echo "Ciphers aes128-ctr,aes192-ctr,aes256-ctr" >> /etc/ssh/sshd_config
    ## Limit journal size
    echo "SystemMaxUse=2048M" >> /etc/systemd/journald.conf
    echo "ForwardToSyslog=no" >> /etc/systemd/journald.conf
    echo "MaxFileSec=14day" >> /etc/systemd/journald.conf
    systemctl restart systemd-journald.service
    # Shell aliases
    cat >> /etc/bashrc << EOF
## Shell aliases
alias vi='vim'
alias ls='ls -trlh --color=auto'
alias grep='grep --color=auto'
EOF
    source /etc/bashrc
    echo 'Shell aliases configured'
}

function define_ntpdate1() {
    # Local time synchronization via ntpdate
    # (overlaps with chronyd from section 2.8; one of the two is enough)
    yum -y install ntpdate
    echo "/usr/sbin/ntpdate -us ntp1.aliyun.com;hwclock -w;" >> /etc/rc.d/rc.local
    ## Set the time zone
    timedatectl set-timezone Asia/Shanghai
    ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && hwclock -w
    ## Synchronize once now
    /usr/sbin/ntpdate -us ntp1.aliyun.com; hwclock -w
    cat >> /var/spool/cron/root << EOF
## Time synchronization every 20 minutes
0-59/20 * * * * /usr/sbin/ntpdate -us ntp1.aliyun.com;hwclock -w;
EOF
}

function define_update() {
    ## Nightly yum update of key packages
    cat >> /var/spool/cron/root << EOF
#yum update software
45 00 * * * /usr/bin/yum -y install bash sudo ntpdate openssh openssl vim systemd rpm yum rsyslog logrotate crontabs curl > /dev/null 2>&1
EOF
}

function define_swap() {
    cat >> /var/spool/cron/root << EOF
## swap disable/enable (flush swap hourly)
15 * * * * /usr/sbin/swapoff -a && /usr/sbin/swapon -a
EOF
}

function define_localhost() {
    define_yum
    define_tuning_services
    define_tuning_kernel
    define_tuning_system
    define_ntpdate1
    define_update
    define_swap
}

function define_exit() {
    # Blank out /tmp/one_key.sh (presumably where this script was saved), then quit
    echo '' > /tmp/one_key.sh
    exit
}

while :
do
    echo ""
    echo "One-click server tuning script"
    echo ""
    echo ""
    echo " 0) Check server network    1) Local environment tuning"
    echo " 2) Exit"
    echo
    read -p "Choose an option: " opmode
    echo
    case ${opmode} in
        0) define_check_network;;
        1) define_localhost;;
        2) define_exit;;
        *) echo "Invalid input";;
    esac
done
```
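Most settings in the script are written with `>>`, so a second run would append duplicate lines; the `noatime` check is its only guard. If you adapt the script, a helper like the one below makes each append idempotent. This is a minimal sketch that assumes bash and GNU grep; `append_once` is a hypothetical name and not part of the original script.

```shell
#!/usr/bin/env bash
# append_once LINE FILE
# Hypothetical helper: append LINE to FILE only when FILE does not already
# contain exactly that line, so re-running a tuning script is harmless.
append_once() {
    local line="$1" file="$2"
    # -q quiet, -x whole-line match, -F fixed string (no regex).
    # If FILE is missing, grep fails and the line is appended (creating FILE).
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

For example, `append_once '* soft nofile 1024000' /etc/security/limits.conf` could replace the matching `echo ... >>` line and is safe to run repeatedly.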


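2.2 Set the hostname (on each machine, following the IP plan above)

```shell
# on 192.168.0.23
hostnamectl set-hostname master
# on 192.168.0.24
hostnamectl set-hostname node01
# on 192.168.0.25
hostnamectl set-hostname node02
```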


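2.3 Edit /etc/hosts (on the management node)

On the master node, open the file with `vim /etc/hosts`, add the following lines, and save with `:wq`:

```
192.168.0.23 master
192.168.0.24 node01
192.168.0.25 node02
```

Copy the hosts file to the other two nodes. You will be prompted for each node's root password:

```shell
scp -r /etc/hosts root@node01:/etc/
scp -r /etc/hosts root@node02:/etc/
```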
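The same copy-to-every-node pattern comes up again later for the MUNGE key, slurm.conf and cgroup.conf. A small loop keeps it in one place. This sketch assumes root SSH access to each node. `distribute_file` and the `DRY_RUN` variable are hypothetical names. The function copies the file to the same absolute path on each target.

```shell
#!/usr/bin/env bash
# distribute_file FILE NODE...
# Hypothetical helper: copy FILE to the same path on each NODE as root.
# With DRY_RUN=1 the scp commands are only printed, not executed.
distribute_file() {
    local file="$1"; shift
    local node rc=0
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -p $file root@$node:$file"
        elif ! scp -p "$file" "root@$node:$file"; then
            echo "copy to $node failed" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `distribute_file /etc/hosts node01 node02`. Prefix the call with `DRY_RUN=1` to preview the commands first.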


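2.4 Configure passwordless SSH (on the management node)

```shell
# Generate a key pair, pressing Enter at every prompt
ssh-keygen -t rsa
# Install the public key on the master itself
ssh-copy-id -i master
# Install it on the two compute nodes
ssh-copy-id -i node01
ssh-copy-id -i node02
```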


2.5 Verify (on the management node)

Each command should log you in without asking for a password:

```shell
ssh node01
ssh node02
```
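An interactive `ssh node01` does not clearly show whether key-based login works, because ssh can fall back to a password prompt. The sketch below makes the check non-interactive. It assumes OpenSSH, where `BatchMode=yes` makes `ssh` fail instead of prompting. `check_passwordless` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# check_passwordless NODE...
# Hypothetical helper: report whether key-based SSH to each NODE works.
# BatchMode=yes makes ssh fail instead of asking for a password.
check_passwordless() {
    local node rc=0
    for node in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "$node OK"
        else
            echo "$node FAILED"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `check_passwordless master node01 node02`. A non-zero exit status means at least one node still needs `ssh-copy-id`.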


2.6 Install the required libraries (on every machine, because the OS is a minimal install)

```shell
yum install -y rpm-build bzip2-devel openssl openssl-devel zlib-devel perl-DBI perl-ExtUtils-MakeMaker pam-devel readline-devel mariadb-devel python3 gtk2 gtk2-devel gcc make perl-ExtUtils* perl-Switch lua-devel hwloc-devel
```


2.7 Disable the firewall (on every machine)

```shell
# Check the firewall status
systemctl status firewalld
# Stop the firewall
systemctl stop firewalld
# Keep it from starting at boot
systemctl disable firewalld
```


2.8 Set up time synchronization (on every machine; if your company intranet has a time server, synchronize with that instead)

Install chrony and open its configuration file:

```shell
yum install chrony -y
vim /etc/chrony.conf
```

Delete the existing `server` lines that point at the CentOS pool and add:

```
server time1.aliyun.com iburst
server time2.aliyun.com iburst
server time3.aliyun.com iburst
server time4.aliyun.com iburst
server time5.aliyun.com iburst
server time6.aliyun.com iburst
server time7.aliyun.com iburst
```

Start chronyd, enable it at boot, and check its status:

```shell
systemctl start chronyd
systemctl enable chronyd
systemctl status chronyd
```

Check the synchronization sources:

```
chronyc sources
210 Number of sources = 1
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* 203.107.6.88                  2   6   377    12   -760us[-1221us] +/-   22ms
```
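To check synchronization from a script rather than by eye, look for the `^*` marker. `chronyc sources` uses it to flag the currently selected server. This is a minimal sketch that assumes awk is available; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# chrony_has_selected_source
# Hypothetical helper: read `chronyc sources` output on stdin and succeed if
# some line starts with "^*" (mode ^ = server, state * = selected source).
chrony_has_selected_source() {
    awk 'substr($0, 1, 2) == "^*" { found = 1 } END { exit !found }'
}
```

Usage: `chronyc sources | chrony_has_selected_source && echo synchronized`.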


III. Deploying the NFS Shared Directory

3.1 Install the NFS packages (on the management node)

```shell
yum install nfs-utils rpcbind -y
```


3.2 Check the installation (on the management node)

```shell
rpm -qa nfs-utils rpcbind
```


3.3 Create the shared directory (on the management node)

```shell
mkdir -p /public
chmod 755 /public
```


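3.3 Edit the export configuration (on the management node)

Open the file with `vim /etc/exports` and add:

```
/public *(rw,sync,insecure,no_subtree_check,no_root_squash)
```

`*` exports the directory to any client, and `no_root_squash` lets root on a client write as root. On a shared network, consider replacing `*` with the cluster subnet, `192.168.0.0/24`.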


3.4 Start the services (on the management node)

```shell
systemctl enable nfs
systemctl enable rpcbind
systemctl start nfs
systemctl start rpcbind
systemctl status nfs
```

Check that the export is active:

```
[root@master ~]# showmount -e localhost
Export list for localhost:
/public *
```


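3.5 Compute node setup (on the compute nodes)

```shell
# Install the packages
yum install nfs-utils rpcbind -y
# Enable nfs at boot
systemctl enable nfs
# Enable the rpc service at boot
systemctl enable rpcbind
# Start the nfs service
systemctl start nfs
# Start the rpc service
systemctl start rpcbind
# Create the mount point
mkdir /public
# Mount automatically at boot: edit /etc/fstab
vim /etc/fstab
```

Add this line to `/etc/fstab`:

```
192.168.0.23:/public /public nfs rw,sync 0 0
```

Then mount everything listed in fstab:

```shell
mount -a
```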


3.6 Check that the mount succeeded (on the compute nodes)

node01:

```
[root@node01 ~]# df -Th
Filesystem              Type      Size  Used Avail Use% Mounted on
/dev/mapper/centos-root xfs        79G  1.7G   78G   3% /
devtmpfs                devtmpfs  3.9G     0  3.9G   0% /dev
tmpfs                   tmpfs     3.9G     0  3.9G   0% /dev/shm
tmpfs                   tmpfs     3.9G  8.9M  3.9G   1% /run
tmpfs                   tmpfs     3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/sda1               xfs      1014M  133M  882M  14% /boot
tmpfs                   tmpfs     799M     0  799M   0% /run/user/0
192.168.0.23:/public    nfs4       79G  1.7G   78G   3% /public
```

node02:

```
[root@node02 ~]# df -Th
Filesystem              Type      Size  Used Avail Use% Mounted on
/dev/mapper/centos-root xfs        79G  1.7G   78G   3% /
devtmpfs                devtmpfs  3.9G     0  3.9G   0% /dev
tmpfs                   tmpfs     3.9G     0  3.9G   0% /dev/shm
tmpfs                   tmpfs     3.9G  8.9M  3.9G   1% /run
tmpfs                   tmpfs     3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/sda1               xfs      1014M  133M  882M  14% /boot
tmpfs                   tmpfs     799M     0  799M   0% /run/user/0
192.168.0.23:/public    nfs4       79G  1.7G   78G   3% /public
```
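The same check can be scripted. This sketch assumes `df` prints one filesystem per line; `df -PTh` uses the POSIX output format, which does not wrap long device names. `is_nfs_mounted` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# is_nfs_mounted MOUNTPOINT
# Hypothetical helper: read `df -PTh` output on stdin and succeed if
# MOUNTPOINT is listed with a filesystem type starting with "nfs".
is_nfs_mounted() {
    # $2 is the Type column, $NF the mount point (assumes no spaces in it)
    awk -v mp="$1" '$NF == mp && $2 ~ /^nfs/ { found = 1 } END { exit !found }'
}
```

Usage on a compute node: `df -PTh | is_nfs_mounted /public || echo "/public is not NFS-mounted"`.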


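IV. Deploying NIS for User Management

The Network Information Service (NIS) is a protocol for centrally managing user and group information, hostnames, mail aliases, and similar data across a network. With NIS, an administrator maintains user accounts and configuration files in one place instead of on every system. This simplifies user management across many machines and makes the network easier to maintain. NIS is commonly used in large networks that need centralized management of users and resources.

In a Slurm cluster, NIS keeps user accounts and permissions centralized, so every node sees the same user identities (the same UIDs and GIDs). This simplifies user management and reduces maintenance cost.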

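4.1 Install NIS and set the domain name (on the management node)

```shell
# Install the NIS packages
yum install ypserv ypbind yp-tools rpcbind -y
# Set the NIS domain name for the current session
nisdomainname steven.com
# Set it persistently
echo NISDOMAIN=steven.com >> /etc/sysconfig/network
# Re-apply the domain name at every boot by adding nisdomainname to rc.local
echo /usr/bin/nisdomainname steven.com >> /etc/rc.d/rc.local
```

Then open `/etc/ypserv.conf` with `vim` and add the network that may query the server:

```
192.168.0.0/24 : * : * : none
```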


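4.2 Start the services (on the management node)

```shell
systemctl start ypserv
systemctl start yppasswdd
systemctl start rpcbind
# Enable the services at boot with systemctl enable
systemctl enable ypserv
systemctl enable yppasswdd
systemctl enable rpcbind
# Initialize the NIS maps on the master; when prompted for the list of
# NIS servers, confirm master and press Ctrl+D
/usr/lib64/yp/ypinit -m
```

After every change to users, rebuild the NIS database and restart the services:

```shell
make -C /var/yp
systemctl restart rpcbind
systemctl restart yppasswdd
systemctl restart ypserv
```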
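The NIS maps only change when `make -C /var/yp` runs, so it is easy to add a user and forget this step. The sketch below is a hypothetical wrapper for the master, `nis_add_user`. It assumes new accounts are created with a plain `useradd -m` and that you set the password interactively.

```shell
#!/usr/bin/env bash
# nis_add_user NAME
# Hypothetical wrapper for the NIS master: create the account, set its
# password, then rebuild the NIS maps so ypbind clients can see it.
nis_add_user() {
    local name="$1"
    if id "$name" >/dev/null 2>&1; then
        echo "user $name already exists" >&2
        return 1
    fi
    useradd -m "$name" || return 1
    passwd "$name" || return 1
    # Rebuild the maps from /etc/passwd, /etc/group, etc.
    make -C /var/yp
}
```

Usage on the master: `nis_add_user alice`. Afterwards, `ypcat passwd | grep alice` on a compute node should show the new entry.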


4.3 Compute node setup (on the compute nodes)

```shell
yum install ypbind yp-tools rpcbind -y
echo NISDOMAIN=steven.com >> /etc/sysconfig/network
echo /usr/bin/nisdomainname steven.com >> /etc/rc.d/rc.local
echo domain steven.com server master >> /etc/yp.conf
echo ypserver master >> /etc/yp.conf
```


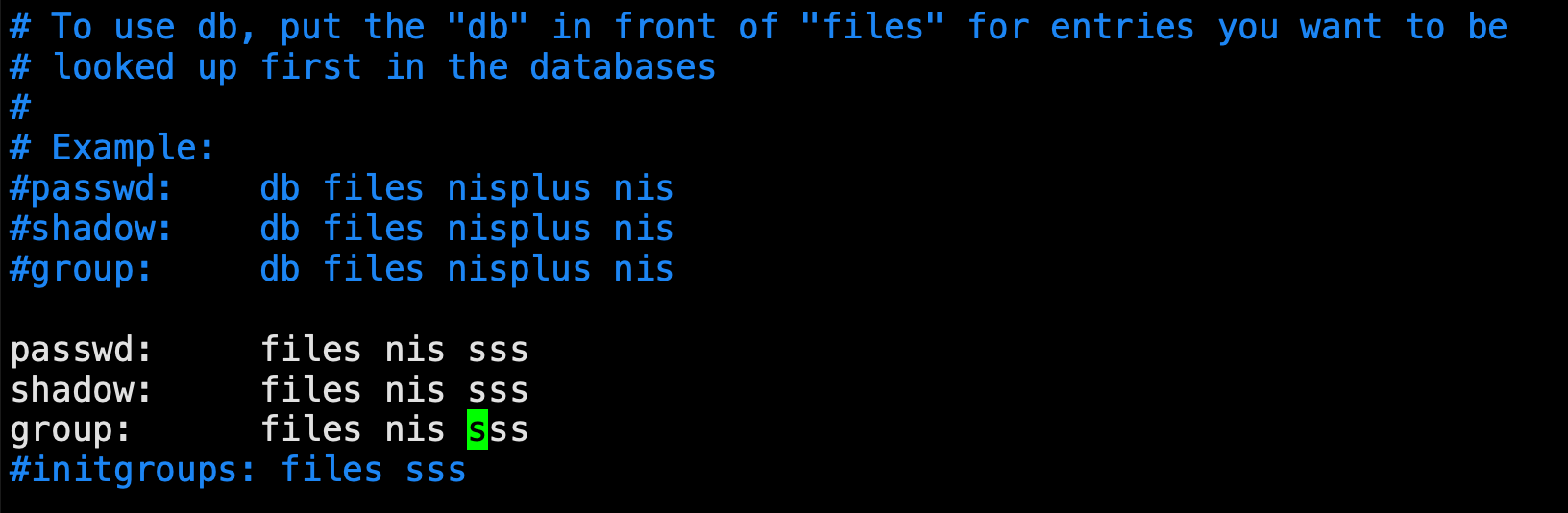

4.4 Edit /etc/nsswitch.conf as shown in the figure below (on the compute nodes)

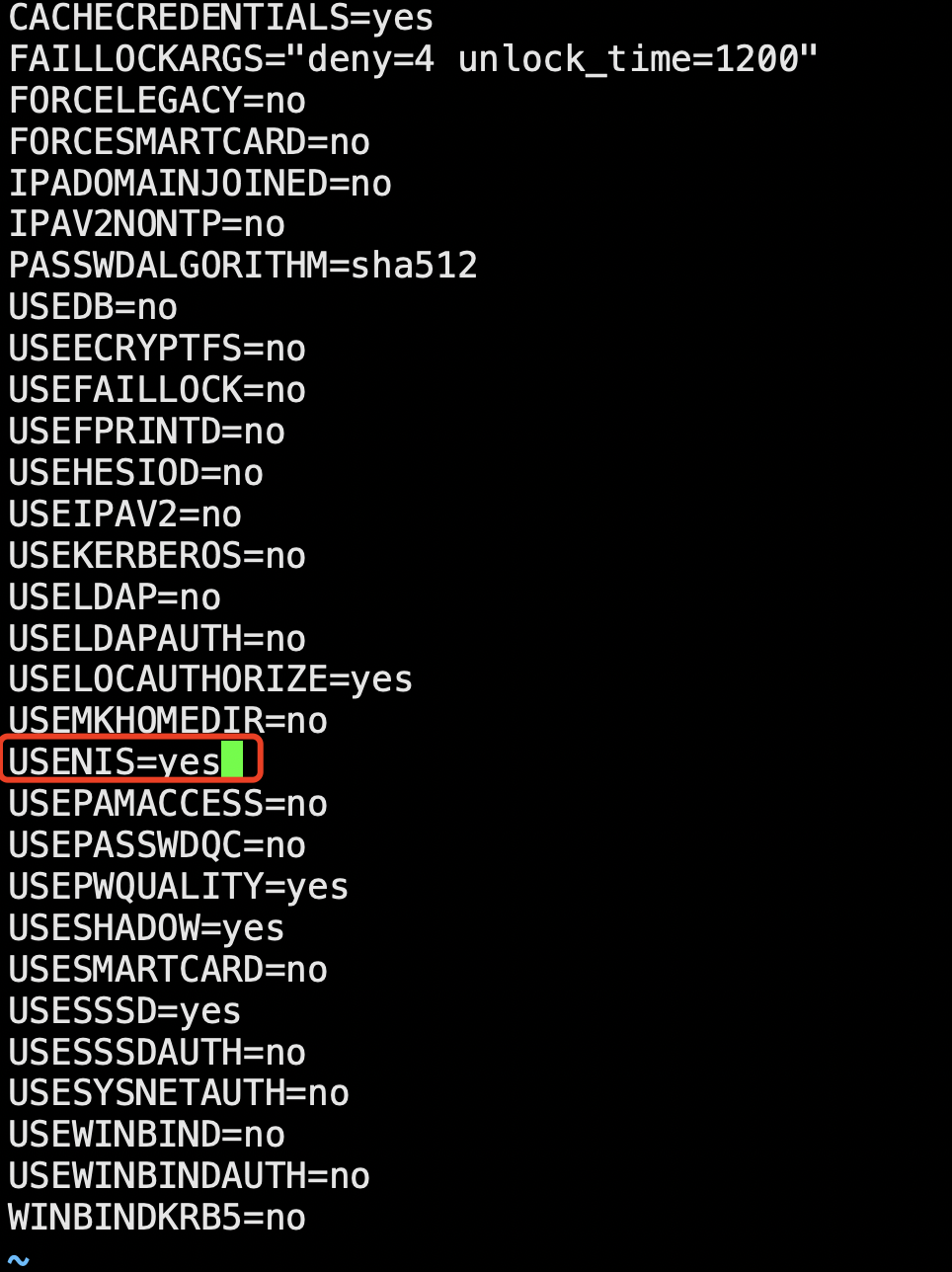

4.5 Edit /etc/sysconfig/authconfig and set USENIS to yes, as shown in the figure below (on the compute nodes)

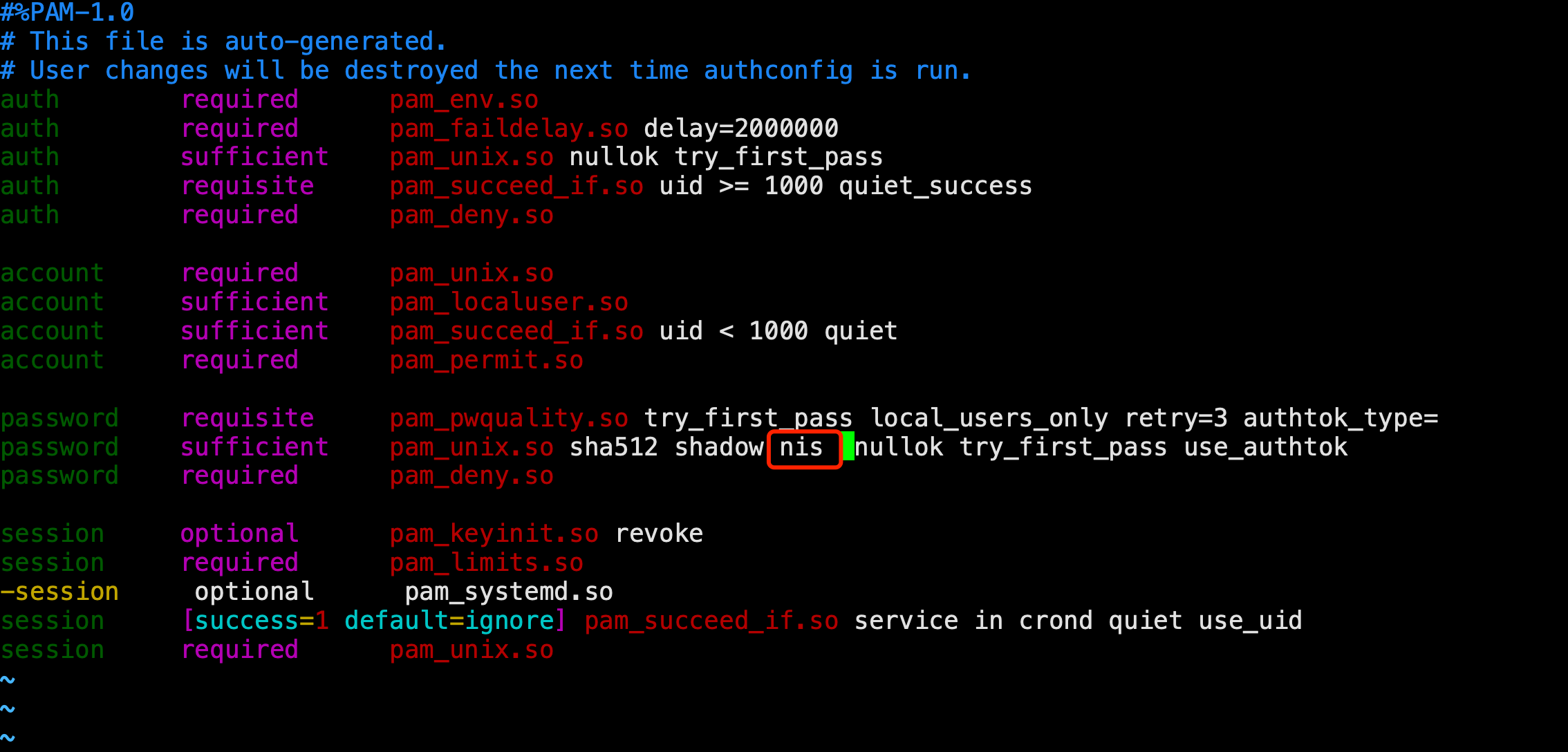

4.6 Edit /etc/pam.d/system-auth to add nis, as shown in the figure (on the compute nodes)

4.6 Start the service (on the compute nodes)

```shell
systemctl start ypbind
systemctl enable ypbind
```


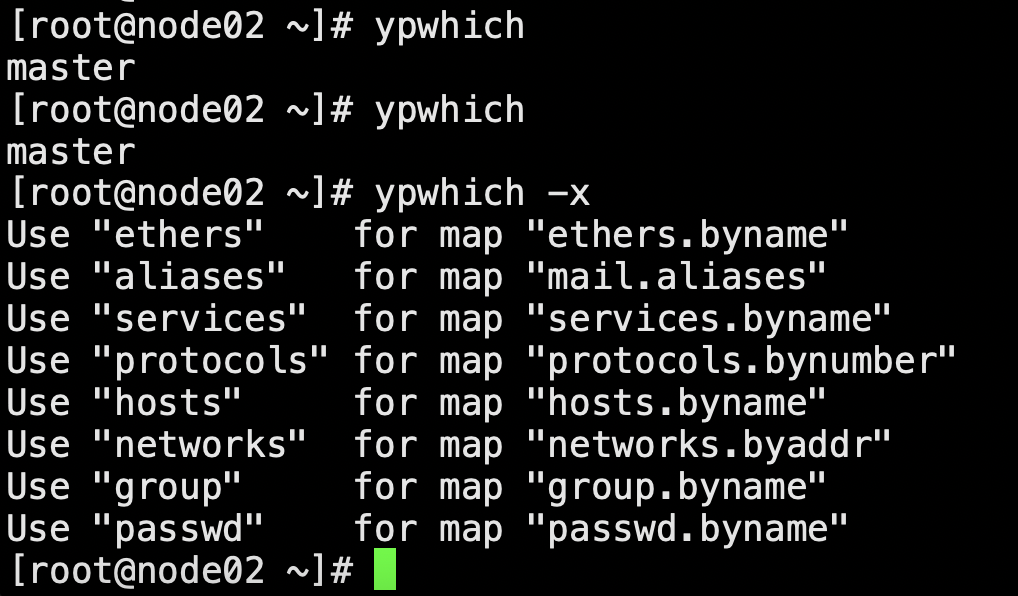

4.7 Test

```shell
ypwhich
ypwhich -x
yptest
```


It is fine if test 3 in `yptest` fails.

NIS is now basically configured.

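V. Deploying MUNGE Authentication

MUNGE (MUNGE Uid 'N' Gid Emporium) is an authentication service designed to verify user identity in distributed systems. It creates and validates credentials that carry the UID and GID of the requesting process. The credentials are cryptographically protected with a secret key shared by all hosts, so only authorized users and processes can access system resources. The mechanism is lightweight, efficient, secure, and easy to configure, and is widely used in high-performance computing and cluster environments.

In a Slurm cluster, MUNGE provides the essential authentication layer. Slurm uses MUNGE to verify the identity of users who submit jobs, so only legitimate users can submit and manage jobs. This prevents unauthorized access and protects compute resources and data. Because MUNGE is efficient, authentication does not become a performance bottleneck, and Slurm can run efficiently on large clusters.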

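5.1 Give the munge account the same UID and GID on every node (all nodes)

Create the account before installing MUNGE in step 5.6 so the IDs match everywhere:

```shell
groupadd -g 1108 munge
useradd -m -c "Munge Uid 'N' Gid Emporium" -d /var/lib/munge -u 1108 -g munge -s /sbin/nologin munge
```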


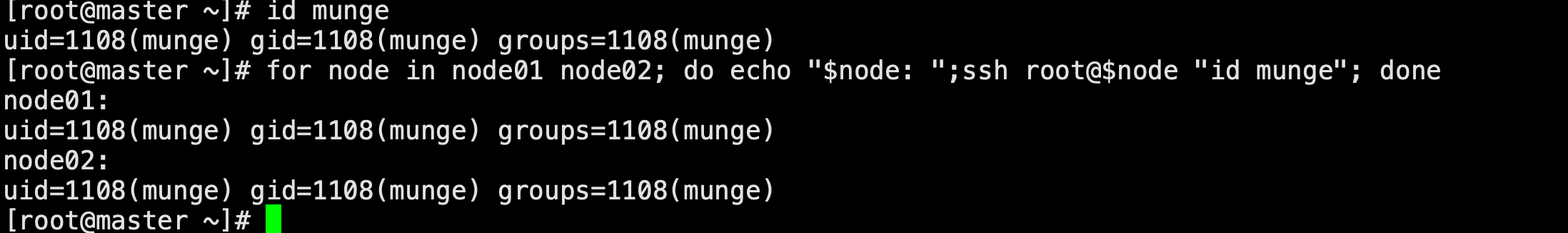

5.2 Check the munge IDs (from the management node)

```shell
# On the master
id munge
# Check the compute nodes from the master
for node in node01 node02; do echo "$node: "; ssh root@$node "id munge"; done
```
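Reading `id` output by eye works for two nodes; a scripted comparison scales better. This sketch assumes root SSH access from the master, as set up in 2.4. `same_ids_everywhere` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# same_ids_everywhere USER NODE...
# Hypothetical helper: compare USER's UID:GID on this host with each NODE
# over SSH; print mismatching nodes and return non-zero if any differ.
same_ids_everywhere() {
    local user="$1"; shift
    local uid gid want got node rc=0
    uid=$(id -u "$user") || return 1
    gid=$(id -g "$user") || return 1
    want="$uid:$gid"
    for node in "$@"; do
        got="$(ssh "root@$node" "id -u $user"):$(ssh "root@$node" "id -g $user")"
        if [ "$got" != "$want" ]; then
            echo "$node: $got (expected $want)"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `same_ids_everywhere munge node01 node02 && echo "munge IDs match"`. The same call with `slurm` checks the account created in 6.2.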


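5.3 Confirm that the munge UID and GID are identical on all nodes

5.4 Set up an entropy source (on the management node)

On the management node, install rng-tools and feed the entropy pool:

```shell
yum install -y rng-tools
rngd -r /dev/urandom
```

Then edit the service unit (`vim /usr/lib/systemd/system/rngd.service`) so that it uses the same source. Section names in systemd units are case-sensitive:

```
[Service]
ExecStart=/sbin/rngd -f -r /dev/urandom
```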


5.5 Start the service (on the management node)

```shell
systemctl daemon-reload
systemctl start rngd
systemctl enable rngd
```


5.6 Install MUNGE (all nodes)

```shell
yum install epel-release -y
yum install munge munge-libs munge-devel -y
```


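5.7 Create and distribute the key (on the management node)

```shell
# Create the MUNGE key
/usr/sbin/create-munge-key -r
# Alternatively, generate the key with dd; this overwrites the key created
# above, so only one of the two commands is needed
dd if=/dev/urandom bs=1 count=1024 > /etc/munge/munge.key
# Copy the key to the compute nodes, preserving mode and timestamps
scp -p /etc/munge/munge.key root@node01:/etc/munge/
scp -p /etc/munge/munge.key root@node02:/etc/munge/
```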
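Every node must hold a byte-identical `munge.key`. If the keys differ, credentials created on one node cannot be decoded on another. This sketch compares SHA-256 checksums over SSH. It assumes GNU coreutils `sha256sum` on all nodes and root access to the key. `key_matches_on` and the `KEY_FILE` override are hypothetical names.

```shell
#!/usr/bin/env bash
# key_matches_on NODE...
# Hypothetical helper: compare the SHA-256 of the MUNGE key on this host
# with the copy on each NODE (KEY_FILE overrides the default path).
key_matches_on() {
    local key="${KEY_FILE:-/etc/munge/munge.key}"
    local want got node rc=0
    [ -r "$key" ] || { echo "cannot read $key" >&2; return 1; }
    want=$(sha256sum < "$key" | awk '{print $1}')
    for node in "$@"; do
        got=$(ssh "root@$node" "sha256sum < $key" | awk '{print $1}')
        if [ "$got" = "$want" ]; then
            echo "$node: key matches"
        else
            echo "$node: key DIFFERS"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `key_matches_on node01 node02`. Run it after the `scp` step and again whenever the key is regenerated.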


5.8 Set ownership and permissions, then start MUNGE (all nodes)

```shell
chown munge: /etc/munge/munge.key
chmod 400 /etc/munge/munge.key
systemctl start munge
systemctl enable munge
```


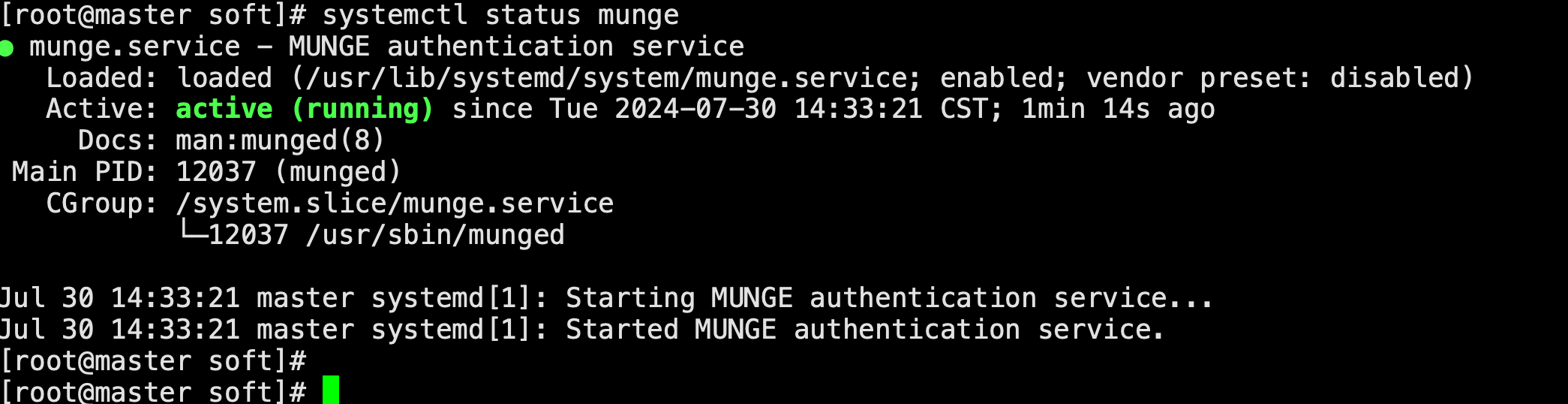

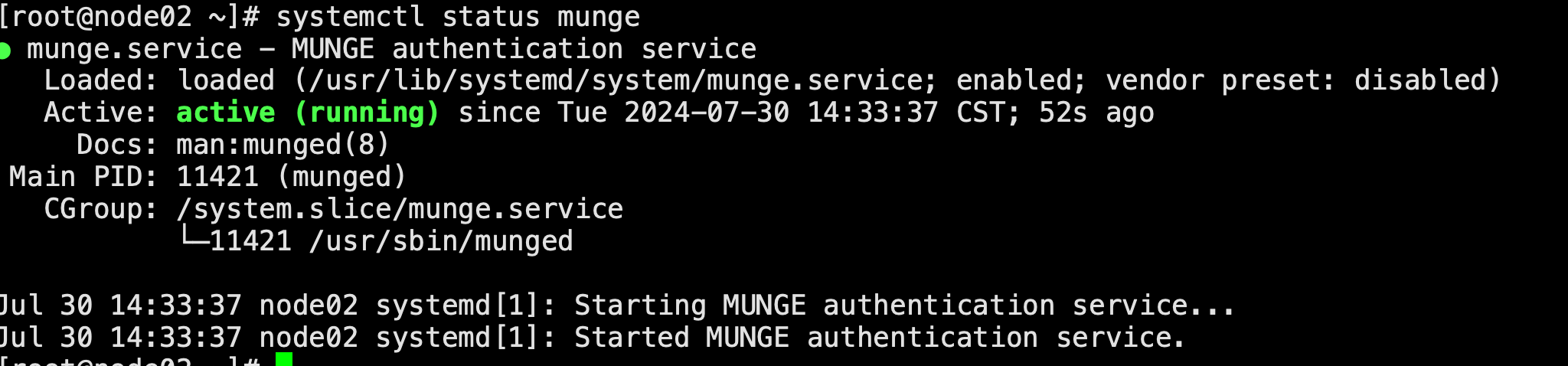

5.9 Check the status; it should look like the figures below (all nodes)

```shell
systemctl status munge
```


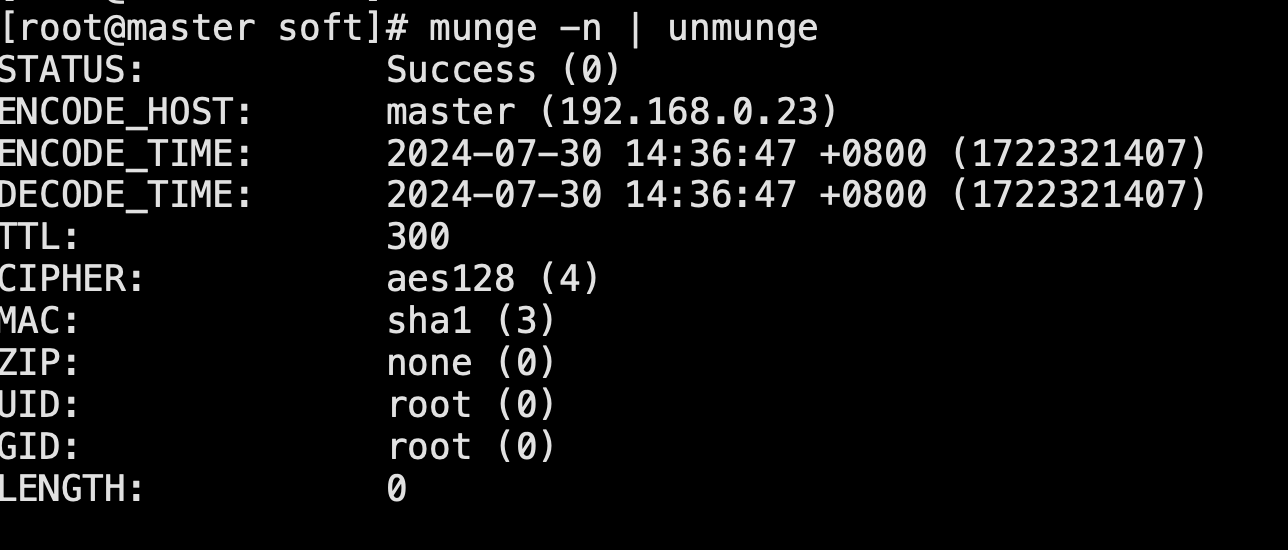

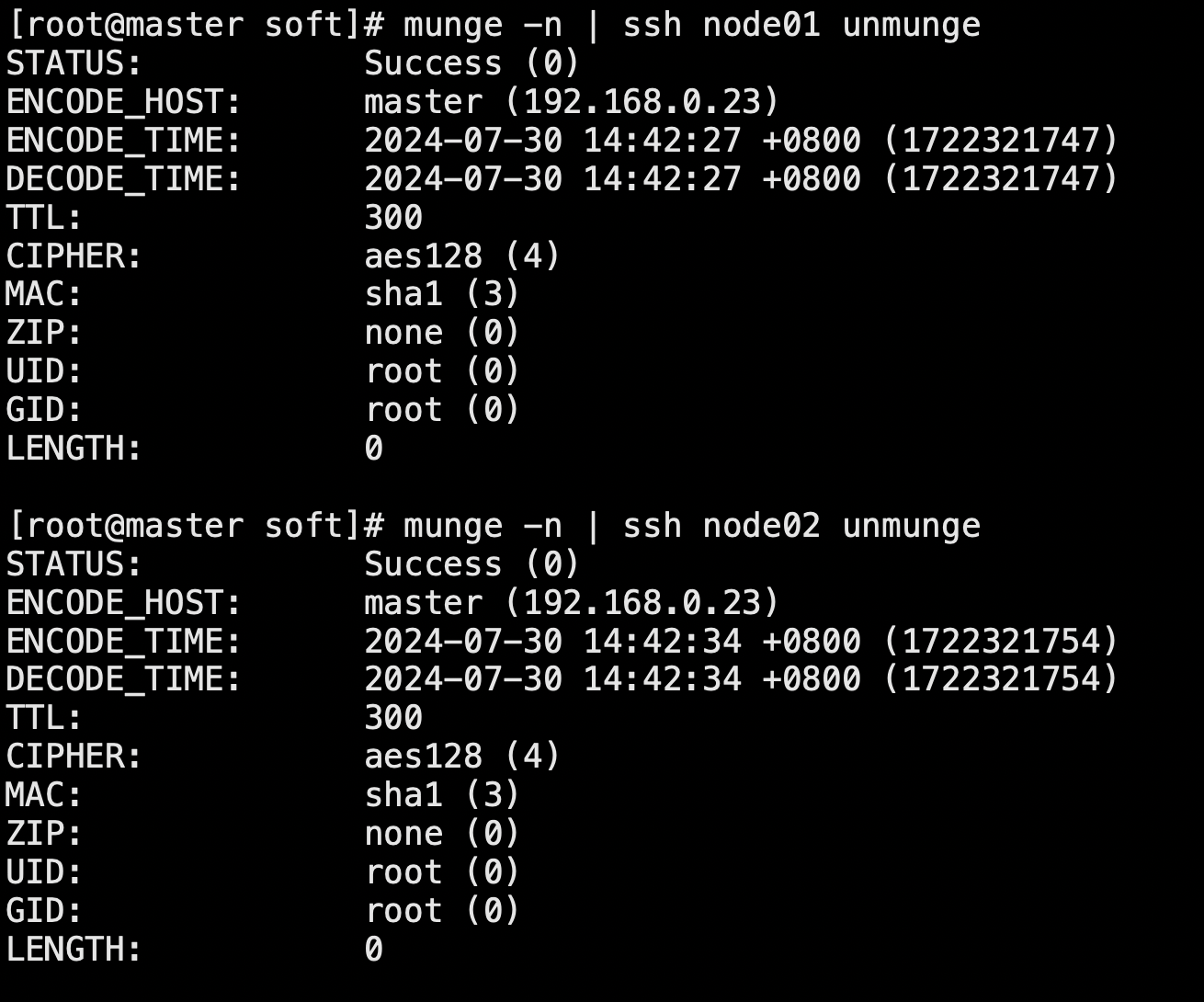

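5.10 Test: inspect credentials locally (on the management node)

```shell
# Create a credential and print it
munge -n
# Create a credential and decode it locally
munge -n | unmunge
# Verify against a compute node by decoding remotely
munge -n | ssh node01 unmunge
```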


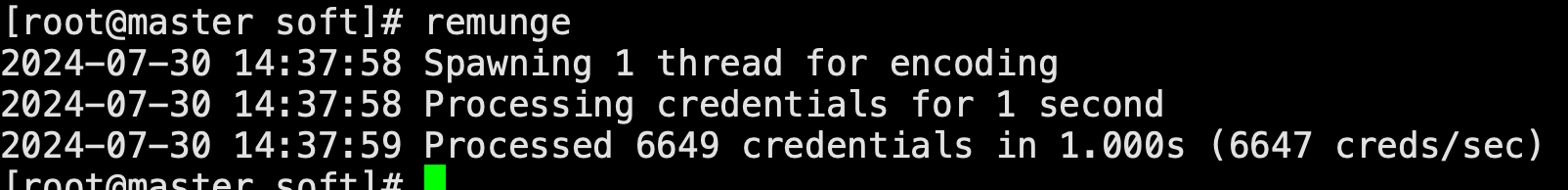
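When you check several nodes, the relevant part of the `unmunge` output is its `STATUS:` line. This is a minimal sketch of a filter for that line; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# unmunge_succeeded
# Hypothetical helper: read `unmunge` output on stdin and succeed only if
# the STATUS: line reports "Success".
unmunge_succeeded() {
    awk '/^STATUS:/ { ok = ($2 == "Success") } END { exit !ok }'
}
```

Usage from the master: `for n in node01 node02; do munge -n | ssh "$n" unmunge | unmunge_succeeded && echo "$n OK" || echo "$n FAILED"; done`.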

5.11 Credential benchmark

```shell
remunge
```


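MUNGE is now basically configured.

VI. Installing MariaDB and Installing and Configuring Slurm

6.1 Deploy the database (MariaDB) on the master node (management node)

```shell
# Install MariaDB with yum
yum -y install mariadb-server
# Start MariaDB and enable it at boot
systemctl start mariadb
systemctl enable mariadb
# The first login needs no password; just press Enter
mysql
```

At the MariaDB prompt, set the root password, create the database Slurm needs, and quit:

```sql
set password=password('Wang@023878');
create database slurm_acct_db;
quit
```

Log in again with the new password:

```shell
mysql -uroot -p'Wang@023878'
```

Create the `slurm` database user as `'slurm'@'localhost'`, grant it access to the accounting database, flush privileges, and quit. A bare `create user slurm;` would instead create a passwordless `'slurm'@'%'` account that accepts connections from any host.

```sql
create user 'slurm'@'localhost' identified by '123456';
grant all on slurm_acct_db.* TO 'slurm'@'localhost' with grant option;
flush privileges;
quit
```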


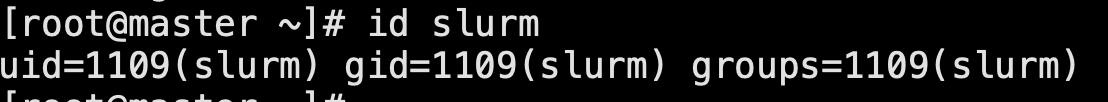
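The interactive session can also be scripted. This sketch prints equivalent SQL. It assumes MariaDB 5.5 (the CentOS 7 default), where `GRANT ... IDENTIFIED BY` creates the account if it does not already exist. `slurm_db_sql` is a hypothetical helper, and the password must not contain a single quote.

```shell
#!/usr/bin/env bash
# slurm_db_sql DB USER PASSWORD
# Hypothetical helper: print SQL that creates the accounting database and a
# localhost-only account for slurmdbd. Rejects passwords containing "'".
slurm_db_sql() {
    local db="$1" user="$2" pass="$3"
    case "$pass" in
        *"'"*) echo "password must not contain a single quote" >&2; return 1 ;;
    esac
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $db;
GRANT ALL ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';
FLUSH PRIVILEGES;
EOF
}
```

Usage: `slurm_db_sql slurm_acct_db slurm 123456 | mysql -uroot -p`. The statements are safe to re-run.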

6.2 Create the slurm user (all nodes)

```shell
groupadd -g 1109 slurm
useradd -m -c "Slurm manager" -d /var/lib/slurm -u 1109 -g slurm -s /bin/bash slurm
```


6.3 Verify

```shell
id slurm
```


6.4 Slurm installation and configuration: install the build dependencies (management node)

```shell
yum install gcc gcc-c++ readline-devel perl-ExtUtils-MakeMaker pam-devel rpm-build mysql-devel python3 -y
```


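6.5 Build the RPM packages (on the management node)

```shell
# Download the Slurm source
wget https://download.schedmd.com/slurm/slurm-22.05.3.tar.bz2
# Install the RPM build tools
yum install rpm-build -y
# Build the Slurm RPMs from the tarball
rpmbuild -ta --nodeps slurm-22.05.3.tar.bz2
```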


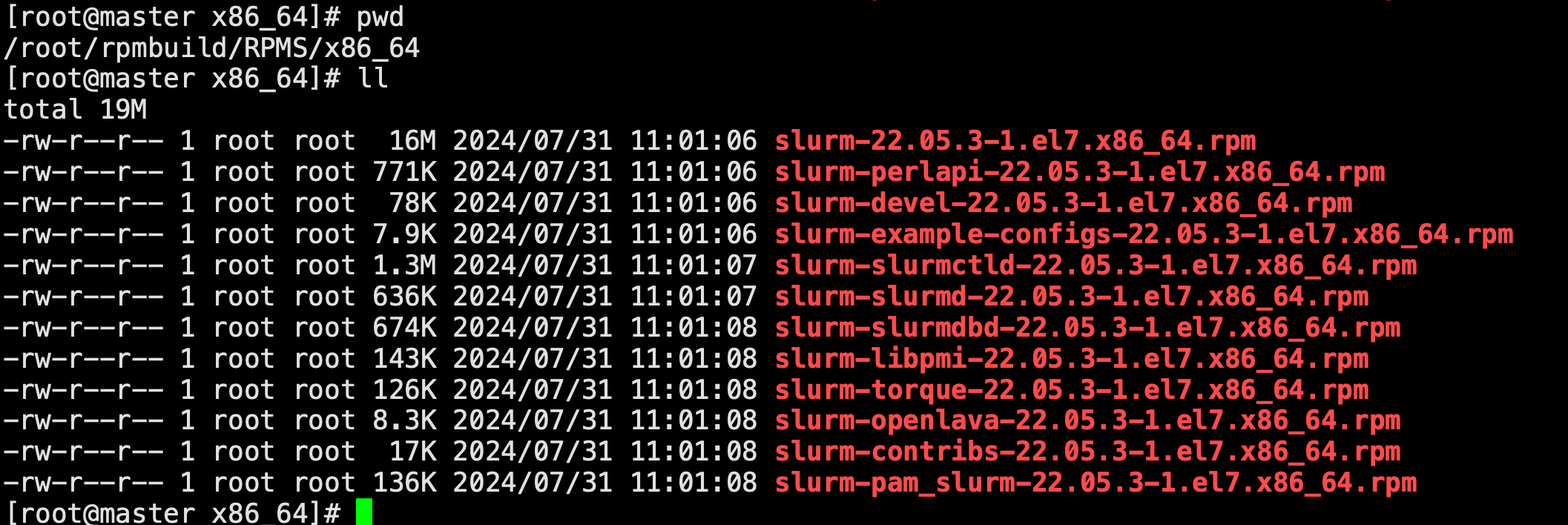

6.6 When the build finishes, the RPMs are in /root/rpmbuild/RPMS/x86_64 (management node)

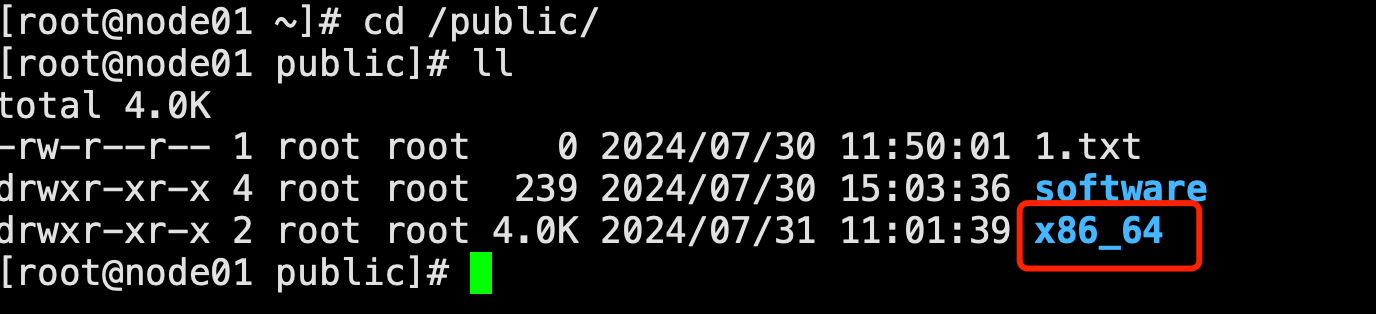

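6.7 Copy the Slurm RPMs to the NFS-shared /public directory, so you don't have to scp them to each node (management node)

```shell
# Enter the RPM output directory
cd /root/rpmbuild/RPMS
# Copy the packages to shared storage
cp -R x86_64/ /public/
```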


6.8 Install Slurm

```shell
# On the management node
cd /root/rpmbuild/RPMS/x86_64
yum localinstall slurm-*
# On the compute nodes
cd /public/x86_64
yum localinstall slurm-*
```


6.9 Edit slurm.conf and slurmdbd.conf, then copy slurm.conf to the compute nodes

Create the configuration files from the shipped examples. Give slurmdbd.conf the ownership and mode that slurmdbd requires; without them the service will not start.

```shell
cp /etc/slurm/cgroup.conf.example /etc/slurm/cgroup.conf
cp /etc/slurm/slurm.conf.example /etc/slurm/slurm.conf
cp /etc/slurm/slurmdbd.conf.example /etc/slurm/slurmdbd.conf
chown -R slurm:slurm /etc/slurm/slurmdbd.conf
chmod 600 /etc/slurm/slurmdbd.conf
```

Contents of `/etc/slurm/slurm.conf` (`vim /etc/slurm/slurm.conf`):

```
#
# slurm.conf file. Please run configurator.html
# (in doc/html) to build a configuration file customized
# for your environment.
#
#
# slurm.conf file generated by configurator.html.
# Put this file on all nodes of your cluster.
# See the slurm.conf man page for more information.
#
################################################
#                   CONTROL                    #
################################################
ClusterName=steven                 # cluster name
SlurmctldHost=master               # host running slurmctld
SlurmctldPort=6817                 # slurmctld port
SlurmdPort=6818                    # slurmd port
SlurmUser=slurm                    # main Slurm user
#SlurmdUser=root                   # user slurmd runs as
################################################
#            LOGGING & OTHER PATHS             #
################################################
SlurmctldDebug=info
SlurmctldLogFile=/var/log/slurm/slurmctld.log
SlurmdDebug=info
SlurmdLogFile=/var/log/slurm/slurmd.log
SlurmctldPidFile=/var/run/slurmctld.pid
SlurmdPidFile=/var/run/slurmd.pid
SlurmdSpoolDir=/var/spool/slurmd
StateSaveLocation=/var/spool/slurmctld
################################################
#                  ACCOUNTING                  #
################################################
AccountingStorageEnforce=associations,limits,qos    # what accounting enforces
AccountingStorageHost=master                        # host running slurmdbd
AccountingStoragePass=/var/run/munge/munge.socket.2 # MUNGE socket; same as AuthInfo in slurmdbd.conf
AccountingStoragePort=6819                          # port slurmdbd listens on (default 6819)
AccountingStorageType=accounting_storage/slurmdbd   # store accounting data via slurmdbd
################################################
#                     JOBS                     #
################################################
JobCompHost=localhost              # database host for job-completion records (this node)
JobCompLoc=slurm_acct_db           # database name
JobCompPass=123456                 # password of the slurm database user
JobCompPort=3306                   # database port
JobCompType=jobcomp/mysql          # store job-completion records in MySQL
JobCompUser=slurm                  # database user for job-completion records
JobContainerType=job_container/none
JobAcctGatherFrequency=30
JobAcctGatherType=jobacct_gather/linux
################################################
#           SCHEDULING & ALLOCATION            #
################################################
SchedulerType=sched/backfill
SelectType=select/cons_tres
SelectTypeParameters=CR_Core
################################################
#                    TIMERS                    #
################################################
InactiveLimit=0
KillWait=30
MinJobAge=300
SlurmctldTimeout=120
SlurmdTimeout=300
Waittime=0
################################################
#                    OTHER                     #
################################################
MpiDefault=none
ProctrackType=proctrack/cgroup
ReturnToService=1
SwitchType=switch/none
TaskPlugin=task/affinity
################################################
#                    NODES                     #
################################################
# CPUs/RealMemory below are lower than the 4C/8G hardware;
# `slurmd -C` on a node prints the values it detects
NodeName=master NodeAddr=192.168.0.23 CPUs=1 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=200 Procs=1 State=UNKNOWN
NodeName=node[01-02] NodeAddr=192.168.0.2[4-5] CPUs=1 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=200 Procs=1 State=UNKNOWN
################################################
#                  PARTITIONS                  #
################################################
PartitionName=compute Nodes=node[01-02] Default=YES MaxTime=INFINITE State=UP
```

Contents of `/etc/slurm/slurmdbd.conf` (`vim /etc/slurm/slurmdbd.conf`):

```
#
# slurmdbd.conf file.
#
# See the slurmdbd.conf man page for more information.
#
# Authentication info
AuthType=auth/munge                     # authenticate with MUNGE
AuthInfo=/var/run/munge/munge.socket.2  # additional auth info for talking to slurmctld
#
# slurmDBD info
DbdAddr=master                          # address used to reach slurmdbd
DbdHost=master                          # hostname of the machine running slurmdbd
SlurmUser=slurm                         # user slurmdbd runs as
DebugLevel=verbose
LogFile=/var/log/slurm/slurmdbd.log     # absolute path of the slurmdbd log file
PidFile=/var/run/slurmdbd.pid           # absolute path of the slurmdbd PID file
#
# Database info
StorageType=accounting_storage/mysql    # storage backend
StoragePass=123456                      # database password
StorageUser=slurm                       # database user
StorageLoc=slurm_acct_db                # database name
```


If slurmdbd.conf does not have this ownership, slurmdbd fails at startup with the following error (shown in red in the terminal):

```
[root@master slurm]# systemctl status slurmdbd
● slurmdbd.service - Slurm DBD accounting daemon
   Loaded: loaded (/usr/lib/systemd/system/slurmdbd.service; disabled; vendor preset: disabled)
   Active: failed (Result: exit-code) since Wed 2024-07-31 11:10:02 CST; 2min 49s ago
  Process: 4790 ExecStart=/usr/sbin/slurmdbd -D -s $SLURMDBD_OPTIONS (code=exited, status=1/FAILURE)
 Main PID: 4790 (code=exited, status=1/FAILURE)

Jul 31 11:10:02 master systemd[1]: Started Slurm DBD accounting daemon.
Jul 31 11:10:02 master slurmdbd[4790]: slurmdbd: fatal: slurmdbd.conf not owned by SlurmUser root!=slurm
Jul 31 11:10:02 master systemd[1]: slurmdbd.service: main process exited, code=exited, status=1/FAILURE
Jul 31 11:10:02 master systemd[1]: Unit slurmdbd.service entered failed state.
Jul 31 11:10:02 master systemd[1]: slurmdbd.service failed.
```


6.10 Copy cgroup.conf and slurm.conf to node01 and node02 (management node)

```shell
for node in node01 node02; do echo "Copying to $node"; scp -r /etc/slurm/cgroup.conf root@$node:/etc/slurm/; done
for node in node01 node02; do echo "Copying to $node"; scp -r /etc/slurm/slurm.conf root@$node:/etc/slurm/; done
```


6.11 Create the Slurm directories and set their ownership (on every node)

```shell
mkdir /var/spool/slurmd
chown slurm: /var/spool/slurmd
mkdir /var/log/slurm
chown slurm: /var/log/slurm
mkdir /var/spool/slurmctld
chown slurm: /var/spool/slurmctld
```


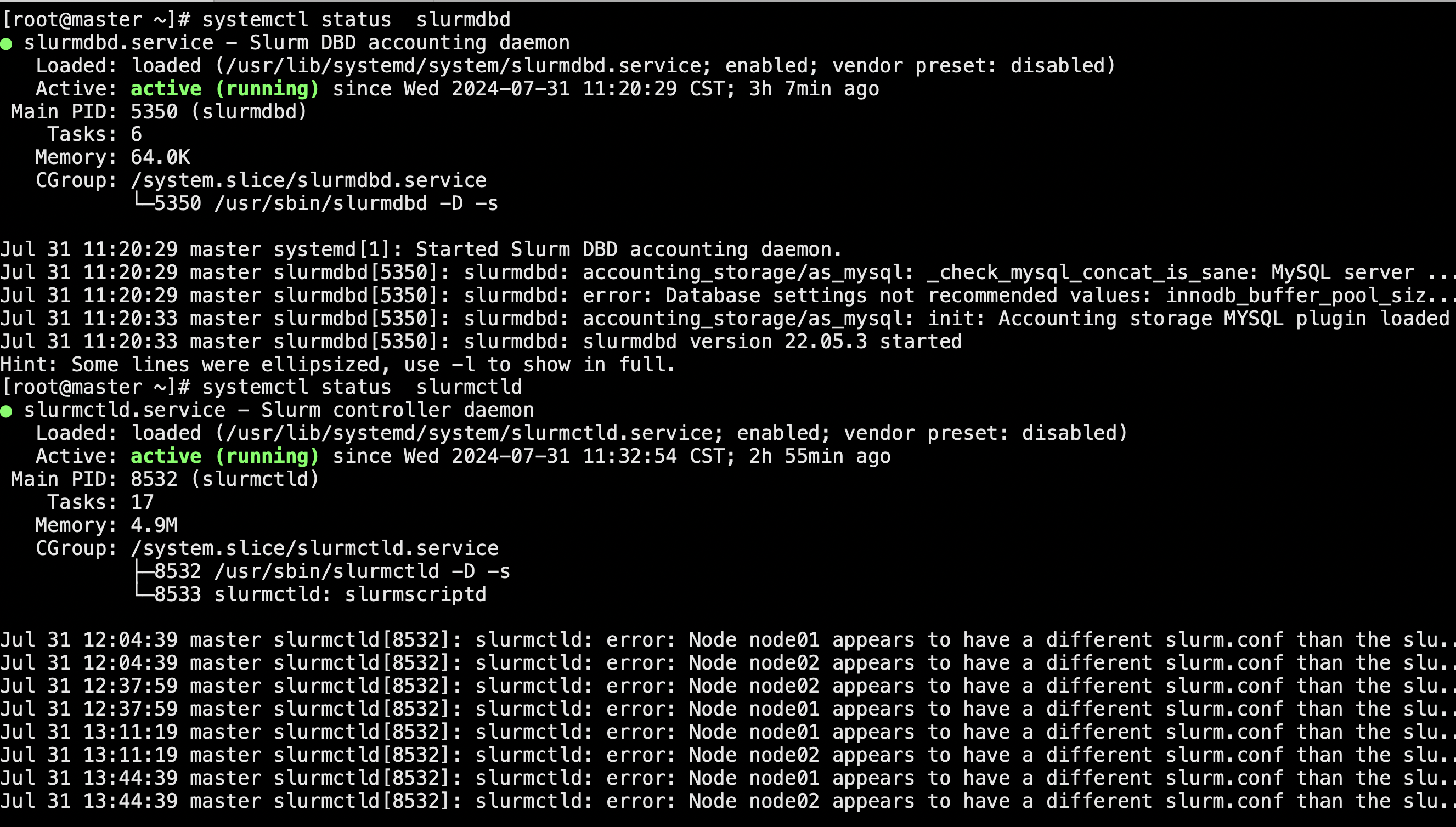
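Plain `mkdir` fails when the directory already exists, so re-running this step stops with an error. `install -d` creates each directory (with parents) and sets owner, group and mode in one idempotent step. This sketch assumes GNU coreutils; `make_owned_dirs` is a hypothetical name.

```shell
#!/usr/bin/env bash
# make_owned_dirs OWNER DIR...
# Hypothetical helper: create each DIR (and missing parents) owned by OWNER
# and OWNER's primary group, mode 755; safe to run more than once.
make_owned_dirs() {
    local owner="$1"; shift
    local group
    group=$(id -gn "$owner") || return 1
    install -d -o "$owner" -g "$group" -m 755 "$@"
}
```

Usage (as root on each node): `make_owned_dirs slurm /var/spool/slurmd /var/log/slurm /var/spool/slurmctld`.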

6.12 Start the services

6.12.1 Start the services on the management node (management node)

```shell
systemctl start slurmdbd
systemctl enable slurmdbd
systemctl start slurmctld
systemctl enable slurmctld
```


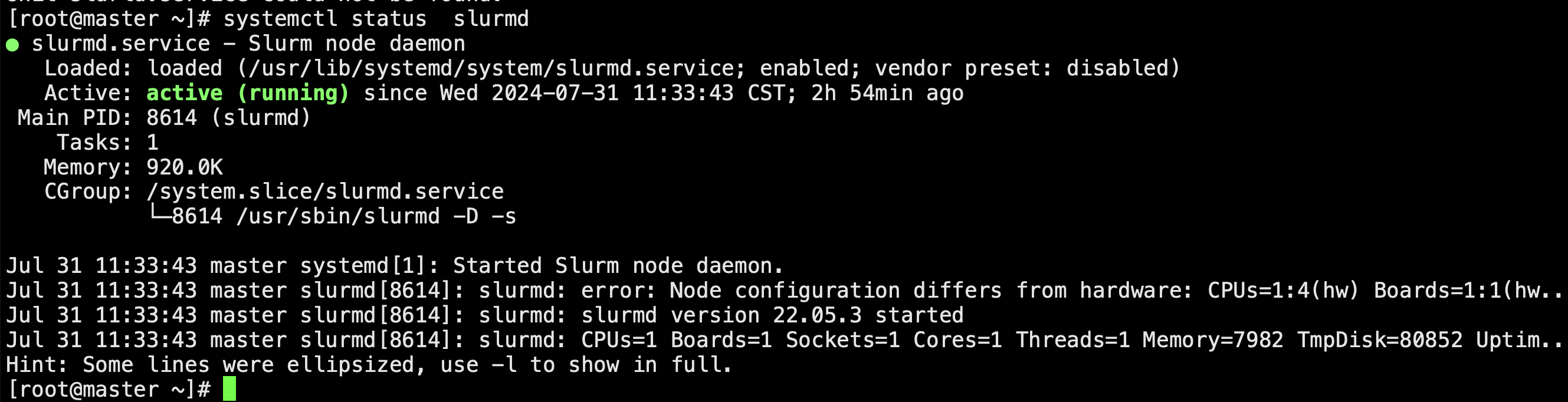

6.12.2 Start slurmd on all nodes

```shell
systemctl start slurmd
systemctl enable slurmd
```


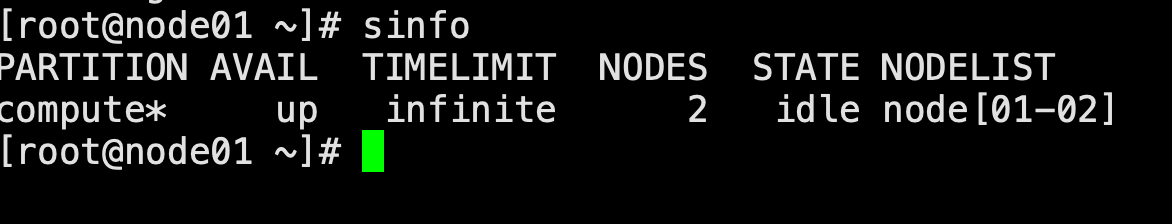

6.13 Verification: if the output matches the figure, Slurm has been deployed successfully
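Node states can also be checked from the command line. `sinfo -N -h -o '%N %T'` prints one node and its state per line, with no header. This sketch flags any node whose state is not `idle`, `mixed` or `allocated`. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# nodes_not_ready
# Hypothetical helper: read `sinfo -N -h -o '%N %T'` output on stdin, print
# every node whose state is not idle/mixed/allocated, and return non-zero
# if there are any (e.g. "down*", "drained", "idle*").
nodes_not_ready() {
    awk '$2 !~ /^(idle|mixed|allocated)$/ { print; bad = 1 } END { exit bad }'
}
```

Usage on the master: `sinfo -N -h -o '%N %T' | nodes_not_ready && echo "all nodes ready"`. For a node that is listed, `scontrol show node <name>` shows the reason field.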

6.14 Check that all services started correctly
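A quick per-node check, assuming systemd. In this guide the relevant units are `munge`, `mariadb`, `slurmdbd`, `slurmctld`, `slurmd`, `nfs` and `ypserv` on the master, and `munge`, `slurmd` and `ypbind` on the compute nodes. `check_services` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# check_services UNIT...
# Hypothetical helper: print each systemd UNIT's state and return non-zero
# if any unit is not active.
check_services() {
    local unit state rc=0
    for unit in "$@"; do
        # is-active prints the state and exits non-zero unless it is "active"
        state=$(systemctl is-active "$unit") || true
        printf '%-10s %s\n' "$unit" "$state"
        [ "$state" = active ] || rc=1
    done
    return "$rc"
}
```

Usage on a compute node: `check_services munge slurmd ypbind`.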

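Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and the site assumes no legal responsibility for it. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/神奇cpp/article/detail/1017101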
