Ubuntu22安装K8S实战

赞

踩

一、前言

现在k8s基本上是每一个后端开发人员必学的技术了,为了能够花费最小的成本学习k8s技术,我用自己的电脑跑了三个虚拟机节点,并希望在这三个节点上安装k8s 1.26版本,部署成一个master两个node的架构来满足最基本的学习;下面我们就来逐步讲解整个安装过程。

二、虚拟机镜像安装

我在机器上使用的虚拟机软件是VMware Fusion13.0.0,安装的操作系统是ubuntu 22.04,对应的镜像下载链接如下:ubuntu-22.04.2-live-server-amd64.iso。友情提示:可以拷贝下载链接通过迅雷下载,比浏览器快;

- 网络设置

在虚拟机软件安装成功后,我们会单独创建一个网络供里面的实例使用,主要目的就是保证三个节点能够在同一个子网内相互通信,避免网络问题影响后续操作;

在虚拟机软件安装成功后,我们会单独创建一个网络供里面的实例使用,主要目的就是保证三个节点能够在同一个子网内相互通信,避免网络问题影响后续操作;

最终我们三个节点的ip分别是:

192.168.56.135 k8s.master1

192.168.56.134 k8s.node1

192.168.56.133 k8s.node2

podSubnet: 10.244.0.0/24

serviceSubnet: 10.96.0.0/12

-

处理器、内存、磁盘配置

在节点安装过程中,需要修改一下处理器、内存以及磁盘的配置:

三、修改系统设置

在Ubuntu 22.04安装完毕后,我们需要做以下检查和操作:

- 检查网络

在每个节点安装成功后,需要通过ping命令检查以下几项:

1.是否能够

ping通baidu.com;2.是否能够

ping通宿主机;3.是否能够

ping通子网内其他节点;

- 检查时区

cat /etc/timezone

- 1

时区不正确的可以通过下面的命令来修正:

timedatectl list-timezones ## 找到时区名字

##修改

sudo timedatectl set-timezone Asia/Shanghai

##验证

timedatectl

- 1

- 2

- 3

- 4

- 5

- 6

- 配置ubuntu系统国内源

因为我们需要在ubuntu 22.04系统上安装k8s,为了避免遭遇科学上网的问题,我们需要配置一下国内的源;

- 备份默认源:

sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak

sudo rm -rf /etc/apt/sources.list

- 1

- 2

- 配置国内源:

sudo vi /etc/apt/sources.list

- 1

内容如下:

deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

-

更新

修改完毕后,需要执行以下命令生效

sudo apt-get update sudo apt-get upgrade- 1

- 2

- 禁用selinux

默认ubuntu下没有这个模块,centos下需要禁用selinux;

- 禁用swap

- 临时禁用命令:

sudo swapoff -a

- 1

- 永久禁用:

sudo vi /etc/fstab

- 1

将最后一行注释后重启系统即生效:

#/swap.img none swap sw 0 0

- 1

- 修改内核参数:

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

- 1

- 2

- 3

- 4

- 5

运行以下命令使得上述配置生效:

sudo sysctl --system

- 1

设置ssh访问

#安装openssh

sudo apt install openssh-server

vim /etc/ssh/sshd_config

将permitRootLogin prohibit-password 改为

PermitRootLogin yes

重启ssh

sudo systemctl restart ssh

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

设置主机名

vim /etc/hostname

vim /etc/hosts

- 1

- 2

- 3

重启

设置主机跨密码访问

使用A机器登录B机器。

ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 es@local3

- 1

四、安装Docker Engine

官方文档在这里ubuntu安装Docker Engine;

- 卸载旧版本

sudo apt-get remove docker docker-engine docker.io containerd runc

- 1

- 更新

apt

sudo apt-get update

sudo apt-get install \

ca-certificates \

curl \

gnupg \

lsb-release

- 1

- 2

- 3

- 4

- 5

- 6

- 添加官方

GPG key

sudo mkdir -m 0755 -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

- 1

- 2

此处会报警告,但是不碍事;

- 配置

repository

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- 1

- 2

- 3

- 更新

apt索引

sudo apt-get update

- 1

- 安装

Docker Engine

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

- 1

- 配置阿里云镜像源

需要登陆阿里云:cr.console.aliyun.com/cn-hangzhou…

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["阿里云镜像源链接"]

}

EOF

- 1

- 2

- 3

- 4

- 5

- 6

不想登陆的小伙伴可以用中国科学技术大学镜像源:docker.mirrors.ustc.edu.cn

- 设置开机自启动docker

sudo systemctl enable docker.service

- 1

- 重启docker engine

sudo systemctl restart docker.service

- 1

五、修改containerd配置

修改这个配置是关键,否则会因为科学上网的问题导致k8s安装出错;比如后续kubeadm init失败,kubeadm join后节点状态一直处于NotReady状态等问题;

- 备份默认配置

sudo mv /etc/containerd/config.toml /etc/containerd/config.toml.bak

- 1

- 修改配置

sudo vi /etc/containerd/config.toml

- 1

配置内容如下:

disabled_plugins = [] imports = [] oom_score = 0 plugin_dir = "" required_plugins = [] root = "/var/lib/containerd" state = "/run/containerd" temp = "" version = 2 [cgroup] path = "" [debug] address = "" format = "" gid = 0 level = "" uid = 0 [grpc] address = "/run/containerd/containerd.sock" gid = 0 max_recv_message_size = 16777216 max_send_message_size = 16777216 tcp_address = "" tcp_tls_ca = "" tcp_tls_cert = "" tcp_tls_key = "" uid = 0 [metrics] address = "" grpc_histogram = false [plugins] [plugins."io.containerd.gc.v1.scheduler"] deletion_threshold = 0 mutation_threshold = 100 pause_threshold = 0.02 schedule_delay = "0s" startup_delay = "100ms" [plugins."io.containerd.grpc.v1.cri"] device_ownership_from_security_context = false disable_apparmor = false disable_cgroup = false disable_hugetlb_controller = true disable_proc_mount = false disable_tcp_service = true enable_selinux = false enable_tls_streaming = false enable_unprivileged_icmp = false enable_unprivileged_ports = false ignore_image_defined_volumes = false max_concurrent_downloads = 3 max_container_log_line_size = 16384 netns_mounts_under_state_dir = false restrict_oom_score_adj = false sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9" selinux_category_range = 1024 stats_collect_period = 10 stream_idle_timeout = "4h0m0s" stream_server_address = "127.0.0.1" stream_server_port = "0" systemd_cgroup = false tolerate_missing_hugetlb_controller = true unset_seccomp_profile = "" [plugins."io.containerd.grpc.v1.cri".cni] bin_dir = "/opt/cni/bin" conf_dir = "/etc/cni/net.d" conf_template = "" ip_pref = "" max_conf_num = 1 [plugins."io.containerd.grpc.v1.cri".containerd] default_runtime_name = "runc" disable_snapshot_annotations = true discard_unpacked_layers = false ignore_rdt_not_enabled_errors = false no_pivot = false snapshotter = "overlayfs" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "io.containerd.runc.v2" [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options] BinaryName = "" CriuImagePath = "" CriuPath = "" CriuWorkPath = "" IoGid = 0 IoUid = 0 NoNewKeyring = false NoPivotRoot = false Root = "" ShimCgroup = "" SystemdCgroup = true [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options] [plugins."io.containerd.grpc.v1.cri".image_decryption] key_model = "node" [plugins."io.containerd.grpc.v1.cri".registry] config_path = "" [plugins."io.containerd.grpc.v1.cri".registry.auths] [plugins."io.containerd.grpc.v1.cri".registry.configs] [plugins."io.containerd.grpc.v1.cri".registry.headers] [plugins."io.containerd.grpc.v1.cri".registry.mirrors] [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming] tls_cert_file = "" tls_key_file = "" [plugins."io.containerd.internal.v1.opt"] path = "/opt/containerd" [plugins."io.containerd.internal.v1.restart"] interval = "10s" [plugins."io.containerd.internal.v1.tracing"] sampling_ratio = 1.0 service_name = "containerd" [plugins."io.containerd.metadata.v1.bolt"] content_sharing_policy = "shared" [plugins."io.containerd.monitor.v1.cgroups"] no_prometheus = false [plugins."io.containerd.runtime.v1.linux"] no_shim = false runtime = "runc" runtime_root = "" shim = "containerd-shim" shim_debug = false [plugins."io.containerd.runtime.v2.task"] platforms = ["linux/amd64"] sched_core = false [plugins."io.containerd.service.v1.diff-service"] default = ["walking"] [plugins."io.containerd.service.v1.tasks-service"] rdt_config_file = "" [plugins."io.containerd.snapshotter.v1.aufs"] root_path = "" [plugins."io.containerd.snapshotter.v1.btrfs"] root_path = "" [plugins."io.containerd.snapshotter.v1.devmapper"] async_remove = false base_image_size = "" discard_blocks = false fs_options = "" fs_type = "" pool_name = "" root_path = "" [plugins."io.containerd.snapshotter.v1.native"] root_path = "" [plugins."io.containerd.snapshotter.v1.overlayfs"] root_path = "" upperdir_label = false [plugins."io.containerd.snapshotter.v1.zfs"] root_path = "" [plugins."io.containerd.tracing.processor.v1.otlp"] endpoint = "" insecure = false protocol = "" [proxy_plugins] [stream_processors] [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"] accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar" [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"] accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar+gzip" [timeouts] "io.containerd.timeout.bolt.open" = "0s" "io.containerd.timeout.shim.cleanup" = "5s" "io.containerd.timeout.shim.load" = "5s" "io.containerd.timeout.shim.shutdown" = "3s" "io.containerd.timeout.task.state" = "2s" [ttrpc] address = "" gid = 0 uid = 0

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

- 228

- 229

- 230

- 231

- 232

- 233

- 234

- 235

- 236

- 237

- 238

- 239

- 240

- 241

- 242

- 243

- 244

- 245

- 246

- 247

- 248

- 249

- 250

- 重启containerd服务

sudo systemctl enable containerd

sudo systemctl daemon-reload && systemctl restart containerd

- 1

- 2

六、安装k8s组件

- 添加k8s的阿里云yum源

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

sudo apt-add-repository "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main"

sudo apt-get update

##用这个

vi /etc/apt/sources.list

##添加

deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main

##更新

apt-get update

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 安装k8s组件

sudo apt update

sudo apt install -y kubelet=1.26.1-00 kubeadm=1.26.1-00 kubectl=1.26.1-00

sudo apt-mark hold kubelet kubeadm kubectl

- 1

- 2

- 3

可以通过apt-cache madison kubelet命令查看kubelet组件的版本;其他组件查看也是一样的命令,把对应位置的组件名称替换即可;

七、下载k8s镜像

- 配置k8s镜像列表

sudo kubeadm config images list --kubernetes-version=v1.26.1

- 1

- 下载镜像

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.26.1

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.26.1

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.26.1

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.26.1

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

sudo docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3

- 1

- 2

- 3

- 4

- 5

- 6

- 7

八、初始化master

- 生成kubeadm默认配置文件

sudo kubeadm config print init-defaults > kubeadm.yaml

- 1

- 修改默认配置

sudo vi kubeadm.yaml

- 1

总共做了四处修改:

1.修改localAPIEndpoint.advertiseAddress为master的ip;

2.修改nodeRegistration.name为当前节点名称;

3.修改imageRepository为国内源:registry.cn-hangzhou.aliyuncs.com/google_containers

4.添加networking.podSubnet,该网络ip范围不能与networking.serviceSubnet冲突,也不能与节点网络192.168.56.0/24相冲突;所以我就设置成192.168.66.0/24;

修改后的内容如下:

apiVersion: kubeadm.k8s.io/v1beta3 bootstrapTokens: - groups: - system:bootstrappers:kubeadm:default-node-token token: abcdef.0123456789abcdef ttl: 24h0m0s usages: - signing - authentication kind: InitConfiguration localAPIEndpoint: advertiseAddress: 192.168.56.136 bindPort: 6443 nodeRegistration: criSocket: unix:///var/run/containerd/containerd.sock imagePullPolicy: IfNotPresent name: k8s-master1 taints: null --- apiServer: timeoutForControlPlane: 4m0s apiVersion: kubeadm.k8s.io/v1beta3 certificatesDir: /etc/kubernetes/pki clusterName: kubernetes controllerManager: {} dns: {} etcd: local: dataDir: /var/lib/etcd imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers kind: ClusterConfiguration kubernetesVersion: 1.26.0 networking: dnsDomain: cluster.local serviceSubnet: 10.96.0.0/12 podSubnet: 192.168.66.0/24 scheduler: {}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 执行初始化操作

sudo kubeadm init --config kubeadm.yaml

- 1

如果在执行init操作中有任何错误,可以使用journalctl -u kubelet查看到的错误日志;失败后我们可以通过下面的命令重置,否则再次init会存在端口冲突的问题:

sudo kubeadm reset

- 1

初始化成功后,按照提示执行下面命令:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

- 1

- 2

- 3

再切换到root用户执行下面命令:

export KUBECONFIG=/etc/kubernetes/admin.conf

- 1

先不要着急kubeadm join其他节点进来,可以切换root用户执行以下命令就能看到节点列表:

kubectl get nodes

- 1

但是此时的节点状态还是NotReady状态,我们接下来需要安装网络插件;

9.给master安装calico网络插件

- 下载calico.yaml配置

curl https://docs.tigera.io/archive/v3.25/manifests/calico.yaml -O

- 1

如果下载不了的小伙伴可以自己通过上面的链接去浏览器下载,这个是calico 3.25版本的配置;

下载后需要修改其中的一项配置:

-

找到以下配置项:

- name: CLUSTER_TYPE value: "k8s,bgp"- 1

- 2

-

在该配置项下添加以下配置:

- name: IP_AUTODETECTION_METHOD value: "interface=ens.*"- 1

- 2

-

安装插件

sudo kubectl apply -f calico.yaml- 1

卸载该插件可以使用下面命令:

sudo kubectl delete -f calico.yaml- 1

calico网络插件安装成功后,master节点的状态将逐渐转变成Ready状态;如果状态一直是NotReady,建议重启一下master节点;

九、接入两个工作节点

我们在master节点init成功后,会提示可以通过kubeadm join命令把工作节点加入进来。我们在master节点安装好calico网络插件后,就可以分别在两个工作节点中执行kubeadm join命令了:

sudo kubeadm join --token ......

- 1

如果我们忘记了master中生成的命令,我们依然可以通过以下命令让master节点重新生成一下kubeadm join命令:

sudo kubeadm token create --print-join-command

- 1

我们在工作节点执行完kubeadm join命令后,需要回到master节点执行以下命令检查工作节点是否逐渐转变为Ready状态:

sudo kubectl get nodes

- 1

如果工作节点长时间处于NotReady状态,我们需要查看pods状态:

sudo kubectl get pods -n kube-system

- 1

查看目标pod的日志可以使用下面命令:

kubectl describe pod -n kube-system [pod-name]

- 1

当所有工作节点都转变成Ready状态后,我们就可以安装Dashboard了;

安装Dashboard

- 准备配置文件

可以科学上网的小伙伴可以按照github上的文档来:github.com/kubernetes/…,我选择的是2.7.0版本;(不能再机器外访问)

不能科学上网的小伙伴就按照下面步骤来,在master节点操作:(未成功)

sudo vi recommended.yaml

- 1

删除现有的dashboard服务,dashboard 服务的 namespace 是 kubernetes-dashboard,但是该服务的类型是ClusterIP,不便于我们通过浏览器访问,因此需要改成NodePort型的

# 查看 现有的服务

[root@master1 ~]# kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d13h

default nginx NodePort 10.102.220.172 <none> 80:31863/TCP 8h

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 6d13h

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.100.246.255 <none> 8000/TCP 61s

kubernetes-dashboard kubernetes-dashboard ClusterIP 10.109.210.35 <none> 443/TCP 61s

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

[root@k8s-master01 dashboard]# kubectl delete service kubernetes-dashboard --namespace=kubernetes-dashboard

service "kubernetes-dashboard" deleted

- 1

- 2

## 下载推荐的部署文件

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml -O k8s-dashboard.yaml

- 1

- 2

- 3

# 修改后的yaml文件,默认创建名为 "kubernetes-dashboard“ 的service 是ClusterIP 类型,我们要通过外网访问的话需要修改下,这里我们修改为 NodePort。 #编辑 recommended.yaml 在大约 40行的位置添加一行 type: NodePort # Copyright 2017 The Kubernetes Authors. # # Licensed under the Apache License, Version 2.0 (the "License"); # you may not use this file except in compliance with the License. # You may obtain a copy of the License at # # http://www.apache.org/licenses/LICENSE-2.0 # # Unless required by applicable law or agreed to in writing, software # distributed under the License is distributed on an "AS IS" BASIS, # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. # See the License for the specific language governing permissions and # limitations under the License. apiVersion: v1 kind: Namespace metadata: name: kubernetes-dashboard apiVersion: v1 kind: ServiceAccount metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard kind: Service apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: type: NodePort ##新增 ports: - port: 443 targetPort: 8443 nodePort: 30003 selector: k8s-app: kubernetes-dashboard apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-certs namespace: kubernetes-dashboard type: Opaque apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-csrf namespace: kubernetes-dashboard type: Opaque data: csrf: "" apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-key-holder namespace: kubernetes-dashboard type: Opaque kind: ConfigMap apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-settings namespace: kubernetes-dashboard kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard rules: # Allow Dashboard to get, update and delete Dashboard exclusive secrets. - apiGroups: [""] resources: ["secrets"] resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"] verbs: ["get", "update", "delete"] # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map. - apiGroups: [""] resources: ["configmaps"] resourceNames: ["kubernetes-dashboard-settings"] verbs: ["get", "update"] # Allow Dashboard to get metrics. - apiGroups: [""] resources: ["services"] resourceNames: ["heapster", "dashboard-metrics-scraper"] verbs: ["proxy"] - apiGroups: [""] resources: ["services/proxy"] resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"] verbs: ["get"] kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard rules: # Allow Metrics Scraper to get metrics from the Metrics server - apiGroups: ["metrics.k8s.io"] resources: ["pods", "nodes"] verbs: ["get", "list", "watch"] apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: kubernetes-dashboard subjects: - kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: kubernetes-dashboard subjects: - kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard kind: Deployment apiVersion: apps/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: k8s-app: kubernetes-dashboard template: metadata: labels: k8s-app: kubernetes-dashboard spec: securityContext: seccompProfile: type: RuntimeDefault containers: - name: kubernetes-dashboard image: kubernetesui/dashboard:v2.7.0 imagePullPolicy: Always ports: - containerPort: 8443 protocol: TCP args: - --auto-generate-certificates - --namespace=kubernetes-dashboard # Uncomment the following line to manually specify Kubernetes API server Host # If not specified, Dashboard will attempt to auto discover the API server and connect # to it. Uncomment only if the default does not work. # - --apiserver-host=http://my-address:port volumeMounts: - name: kubernetes-dashboard-certs mountPath: /certs # Create on-disk volume to store exec logs - mountPath: /tmp name: tmp-volume livenessProbe: httpGet: scheme: HTTPS path: / port: 8443 initialDelaySeconds: 30 timeoutSeconds: 30 securityContext: allowPrivilegeEscalation: false readOnlyRootFilesystem: true runAsUser: 1001 runAsGroup: 2001 volumes: - name: kubernetes-dashboard-certs secret: secretName: kubernetes-dashboard-certs - name: tmp-volume emptyDir: {} serviceAccountName: kubernetes-dashboard nodeSelector: "kubernetes.io/os": linux "kubernetes.io/hostname": k8s-02 # Comment the following tolerations if Dashboard must not be deployed on master tolerations: - key: node-role.kubernetes.io/master effect: NoSchedule kind: Service apiVersion: v1 metadata: labels: k8s-app: dashboard-metrics-scraper name: dashboard-metrics-scraper namespace: kubernetes-dashboard spec: ports: - port: 8000 targetPort: 8000 selector: k8s-app: dashboard-metrics-scraper kind: Deployment apiVersion: apps/v1 metadata: labels: k8s-app: dashboard-metrics-scraper name: dashboard-metrics-scraper namespace: kubernetes-dashboard spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: k8s-app: dashboard-metrics-scraper template: metadata: labels: k8s-app: dashboard-metrics-scraper spec: securityContext: seccompProfile: type: RuntimeDefault containers: - name: dashboard-metrics-scraper image: kubernetesui/metrics-scraper:v1.0.8 ports: - containerPort: 8000 protocol: TCP livenessProbe: httpGet: scheme: HTTP path: / port: 8000 initialDelaySeconds: 30 timeoutSeconds: 30 volumeMounts: - mountPath: /tmp name: tmp-volume securityContext: allowPrivilegeEscalation: false readOnlyRootFilesystem: true runAsUser: 1001 runAsGroup: 2001 serviceAccountName: kubernetes-dashboard nodeSelector: "kubernetes.io/os": linux "kubernetes.io/hostname": k8s-02 # Comment the following tolerations if Dashboard must not be deployed on master tolerations: - key: node-role.kubernetes.io/master effect: NoSchedule volumes: - name: tmp-volume emptyDir: {}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

- 228

- 229

- 230

- 231

- 232

- 233

- 234

- 235

- 236

- 237

- 238

- 239

- 240

- 241

- 242

- 243

- 244

- 245

- 246

- 247

- 248

- 249

- 250

- 251

- 252

- 253

- 254

- 255

- 256

- 257

- 258

- 259

- 260

- 261

- 262

- 263

- 264

- 265

- 266

- 267

- 268

- 269

- 270

- 271

- 272

- 273

- 274

- 275

- 276

- 277

- 278

- 279

- 280

- 281

- 282

- 283

- 284

- 285

- 286

- 287

- 288

- 289

- 290

- 291

- 292

- 293

- 294

- 295

- 296

- 297

- 298

- 299

- 300

- 301

- 302

执行部署

kubectl apply -f k8s-dashboard.yaml

- 1

查看状态、创建账号

# 查看状态

kubectl get pod,svc -n kubernetes-dashboard

# 创建账号、绑定权限、生成token

kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

kubectl create clusterrolebinding dashboard-admin-rb --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

kubectl -n kubernetes-dashboard create token dashboard-admin --duration 3153600000s

# 复制token并登录dashboard ui

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 安装

通过执行以下命令安装Dashboard:

sudo kubectl apply -f recommended.yaml

- 1

此时就静静等待,直到kubectl get pods -A命令下显示都是Running状态:

kubernetes-dashboard dashboard-metrics-scraper-7bc864c59-tdxdd 1/1 Running 0 5m32s

kubernetes-dashboard kubernetes-dashboard-6ff574dd47-p55zl 1/1 Running 0 5m32s

- 查看端口

sudo kubectl get svc -n kubernetes-dashboard

- 1

- 浏览器访问Dashboard

根据上面的命令我们知道端口后,那么就可以在宿主机的浏览器上访问Dashboard了;如果chorme浏览器显示该网站不可信,请点击继续前往;  选择使用

选择使用Token登录

- 生成Token

在master节点中执行下面命令创建admin-user:

sudo vi dash.yaml

- 1

配置文件内容如下:

apiVersion: v1 kind: ServiceAccount metadata: name: admin-user namespace: kubernetes-dashboard --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: admin-user roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: cluster-admin subjects: - kind: ServiceAccount name: admin-user namespace: kubernetes-dashboard

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

接下来我们通过下面命令创建admin-user:

sudo kubectl apply -f dash.yaml

- 1

日志如下:

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

这就表示admin-user账号创建成功,接下来我们生成该用户的Token:

kubectl -n kubernetes-dashboard create token admin-user

- 1

我们把生成的Token拷贝好贴进浏览器对应的输入框中并点击登录按钮:

至此,我们就在ubuntu 22.04上完成了k8s的安装。

测试K8s集群

这里部署了一个nginx的app来进行测试,

kubectl create deployment nginx-app --image=nginx --replicas=2

- 1

查看nginx的状态:

kubectl get deployment nginx-app

- 1

将deployment暴露出去,采用NodePort的方式(这种方式会在每个节点上开放同一个端口,外部可以通过节点ip+port的方式进行访问)

kubectl expose deployment nginx-app --type=NodePort --port=80

- 1

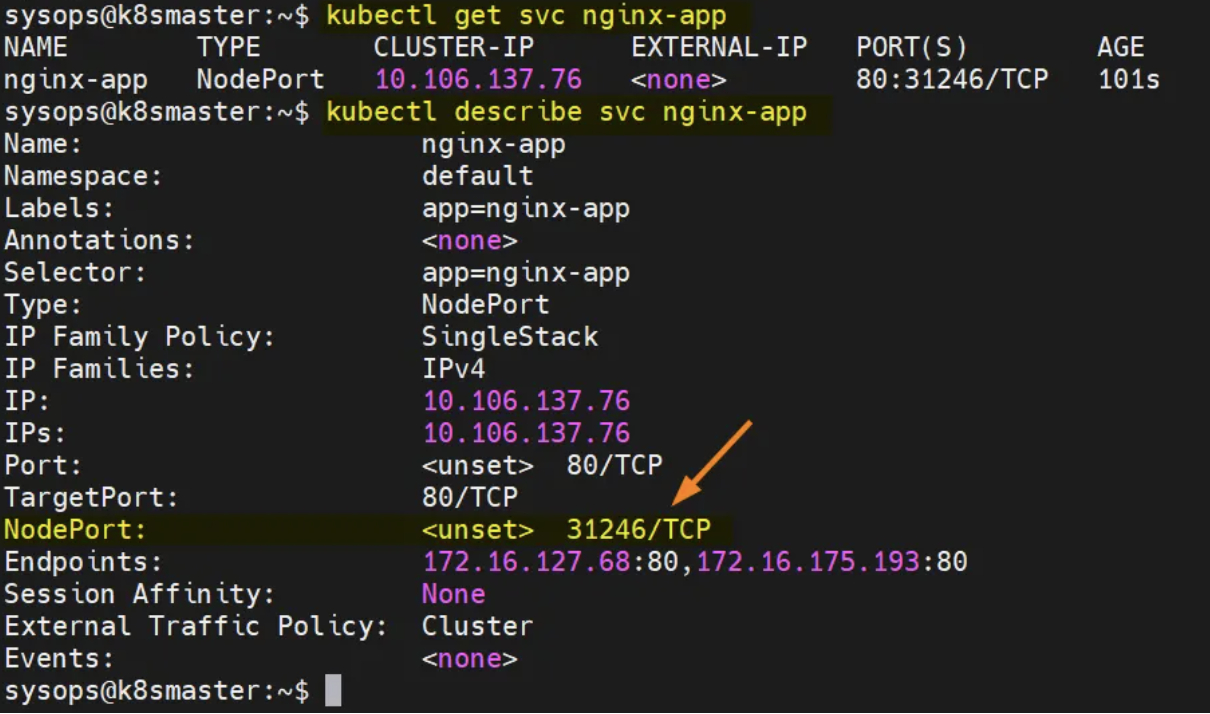

可以检查service的状态,

kubectl get svc nginx-app

kubectl describe svc nginx-app

- 1

- 2

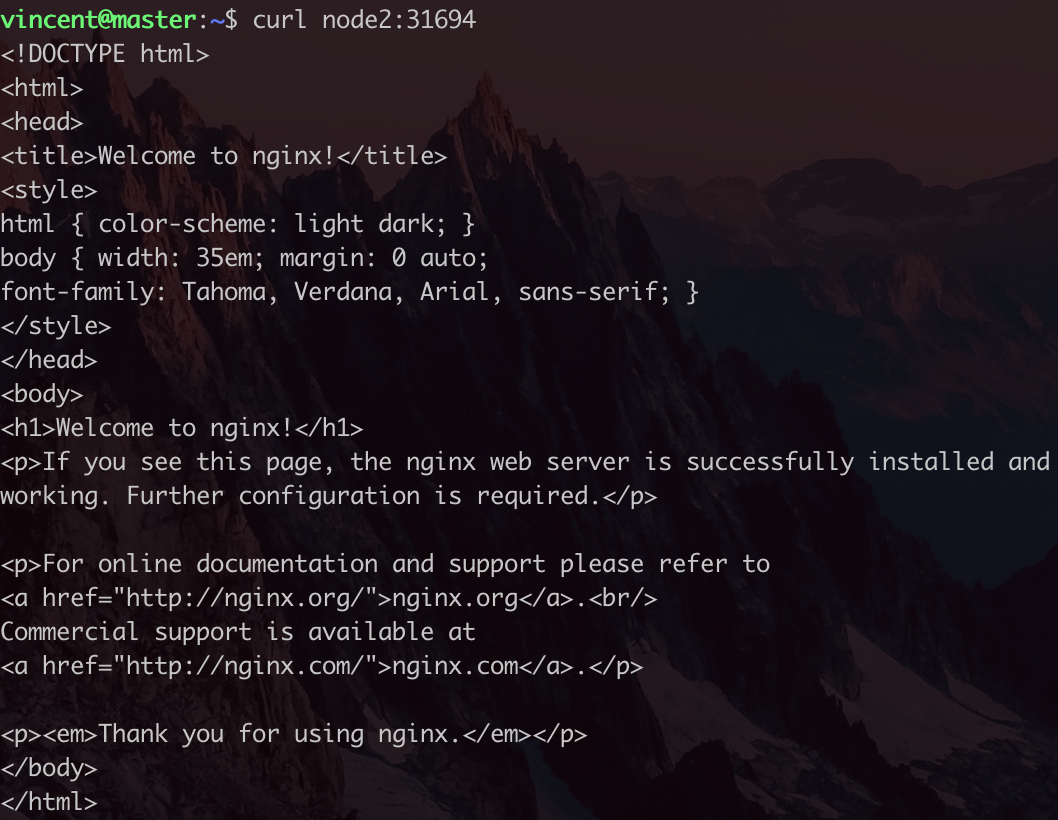

下面是测试结果:

说明Nginx运行正常,整个k8s节点部署成功。