Sentiment Analysis with LSTM

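Reproducing LSTM Sentiment Analysis: a PyTorch Implementation

For the TextCNN reproduction, see this article:

TextCNN的复现–pytorch实现 (Reproducing TextCNN: a PyTorch implementation) - Zhihu (zhihu.com)

What follows is mainly a walkthrough of the code; the complete code is linked at the end of the article.

The dataset is a movie review dataset with roughly 5,000 positive reviews and likewise about 5,000 negative reviews.

The basic PyTorch training workflow:

load the training set → build the model → train the model → evaluate the model

First, the dataset has to be loaded. To do this, subclass the Dataset class, as in the following code: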

    import re
    from collections import Counter, OrderedDict

    import gensim
    import numpy as np
    import torch
    from torch.utils.data import Dataset
    from torchtext.vocab import vocab


    class Data_loader(Dataset):
        def __init__(self, file_pos, file_neg, model_path, word2_vec=False):
            self.file_pos = file_pos
            self.file_neg = file_neg
            if word2_vec:
                self.x_train, self.y_train = self.get_word2vec(model_path)
            else:
                self.x_train, self.y_train, self.dictionary = self.pre_process()

        def __getitem__(self, idx):
            data = torch.tensor(self.x_train[idx])
            label = torch.tensor(self.y_train[idx])
            return data, label

        def __len__(self):
            return len(self.x_train)

        def clean_sentences(self, string):
            # Replace every character outside the whitelist with a space
            string = re.sub(r"[^A-Za-z0-9(),!?\'\`]", " ", string)
            # Split contractions off so that they become separate tokens
            string = re.sub(r"\'s", " 's", string)
            string = re.sub(r"\'ve", " 've", string)
            string = re.sub(r"n\'t", " n't", string)
            string = re.sub(r"\'re", " 're", string)
            string = re.sub(r"\'d", " 'd", string)
            string = re.sub(r"\'ll", " 'll", string)
            # Surround punctuation with spaces
            string = re.sub(r",", " , ", string)
            string = re.sub(r"!", " ! ", string)
            string = re.sub(r"\(", " ( ", string)
            string = re.sub(r"\)", " ) ", string)
            string = re.sub(r"\?", " ? ", string)
            # Collapse runs of whitespace
            string = re.sub(r"\s{2,}", " ", string)
            return string.strip().lower()

        def load_data_and_labels(self):
            with open(self.file_pos, "r", encoding="utf-8") as f:
                positive_examples = [s.strip() for s in f]  # strip leading/trailing \t and \n from each review
            with open(self.file_neg, "r", encoding="utf-8") as f:
                negative_examples = [s.strip() for s in f]
            x_text = positive_examples + negative_examples
            x_text = [self.clean_sentences(s) for s in x_text]
            positive_labels = [1 for _ in positive_examples]  # positive samples are labeled 1
            negative_labels = [0 for _ in negative_examples]  # negative samples are labeled 0
            y = np.concatenate((positive_labels, negative_labels), axis=0)
            return x_text, y  # x_text: list of cleaned sentences, y: 1-D array of labels

        def pre_process(self):
            """
            Load the data, map every sentence to a padded sequence of vocabulary
            indices, and shuffle the samples. The 80/20 train/test split is done
            later, in the training script.

            :return: shuffled data, shuffled labels, and the generated vocabulary
            """
            x_data, y_label = self.load_data_and_labels()
            word_split = [x.split() for x in x_data]
            max_document_length = max(len(words) for words in word_split)
            voc = [word for words in word_split for word in words]  # all tokens, used to build the vocabulary
            ordered_dict = OrderedDict(sorted(Counter(voc).items(), key=lambda x: x[1], reverse=True))
            # Map each document to a sequence of vocabulary indices
            dictionary = vocab(ordered_dict)
            x_data = []
            for words in word_split:
                x = list(dictionary.lookup_indices(words))
                # Pad to the longest sentence. Note that 0 is also the index of the
                # most frequent token, because no dedicated padding token is reserved.
                x.extend([0] * (max_document_length - len(x)))
                x_data.append(x)
            x_data = np.array(x_data)
            np.random.seed(10)
            # Shuffle the sample indices and reorder data and labels consistently
            shuffle_indices = np.random.permutation(np.arange(len(y_label)))
            x_shuffled = x_data[shuffle_indices]
            y_shuffled = y_label[shuffle_indices]
            return x_shuffled, y_shuffled, dictionary

        def get_word2vec(self, model_path):
            model = gensim.models.Word2Vec.load(model_path)
            x_data, y_label = self.load_data_and_labels()
            word_split = [x.split() for x in x_data]
            sentence_vectors = []
            for sentence in word_split:
                sentence_vector = []
                for word in sentence:
                    try:
                        v = model.wv.get_index(word)
                    except KeyError:
                        # Out-of-vocabulary word: fall back to a random index.
                        # 71289 is hard-coded here, presumably the size of the word2vec vocabulary.
                        v = np.random.randint(0, 71289)
                    sentence_vector.append(int(v))
                sentence_vectors.append(sentence_vector)  # positions of the sentence's words in the vocabulary
            max_document_length = max(len(x) for x in sentence_vectors)
            for vector in sentence_vectors:
                vector.extend([71289] * (max_document_length - len(vector)))  # pad with index 71289
            vector_data = np.asarray(sentence_vectors)
            np.random.seed(10)
            # Shuffle the sample indices and reorder data and labels consistently
            shuffle_indices = np.random.permutation(np.arange(len(y_label)))
            x_shuffled = vector_data[shuffle_indices]
            y_shuffled = y_label[shuffle_indices]
            return x_shuffled, y_shuffled

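In the code above, `__init__`, `__getitem__`, and `__len__` are the methods a Dataset subclass must implement. `clean_sentences` cleans the raw text, and `load_data_and_labels` loads the data and returns the cleaned sentences together with their labels. `pre_process` encodes the data: the raw data is English text, so it has to be tokenized and encoded. The returned data is numeric, with one row per review.

For example:

I like this movie

After encoding, this becomes 0 1 2 3, where 0 corresponds to I, 1 to like, and so on.

Each word is then represented by a 256-dimensional embedding vector (embedding_dim=256 in the model).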
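The encoding step can be illustrated without any external library. The following is a minimal sketch, not the article's implementation: `build_vocab`, `encode`, and `pad_idx` are hypothetical names, a plain dict stands in for the torchtext vocabulary, and padding uses a dedicated index past the vocabulary rather than 0.

```python
from collections import Counter


def build_vocab(sentences):
    """Map each token to an index, most frequent token first."""
    counts = Counter(tok for s in sentences for tok in s.split())
    # sorted() is stable, so tokens with equal counts keep first-seen order
    ordered = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    return {tok: idx for idx, (tok, _) in enumerate(ordered)}


def encode(sentences, word_to_idx, pad_idx):
    """Encode sentences as equal-length index lists, padding on the right."""
    max_len = max(len(s.split()) for s in sentences)
    rows = []
    for s in sentences:
        ids = [word_to_idx[tok] for tok in s.split()]
        rows.append(ids + [pad_idx] * (max_len - len(ids)))
    return rows


sentences = ["i like this movie", "i like it"]
word_to_idx = build_vocab(sentences)
pad_idx = len(word_to_idx)  # assumption: reserve one extra index for padding
encoded = encode(sentences, word_to_idx, pad_idx)
print(word_to_idx)
print(encoded)
```

With a dedicated padding index, the embedding layer would need one more row than the vocabulary has tokens.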

Next comes the construction of the LSTM model:

    import torch
    from torch import nn


    class LSTM_RNN(nn.Module):
        def __init__(self, num_embeddings=-1, drop_rate=0.8, embedding_dim=256, hidden_size=64, output_size=1, num_layers=2):
            super().__init__()
            self.num_layers = num_layers
            self.hidden_size = hidden_size
            self.num_embeddings = num_embeddings  # vocabulary size (number of distinct token indices)
            if num_embeddings > 0:
                self.embedding = nn.Embedding(num_embeddings, embedding_dim)
            self.lstm = nn.LSTM(embedding_dim, hidden_size, num_layers, bidirectional=True, batch_first=True)
            # A bidirectional LSTM concatenates both directions, so its output has 2 * hidden_size features
            self.compute = nn.Linear(hidden_size * 2, output_size)
            self.drop = nn.Dropout(drop_rate)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x, hidden):
            """
            x: the input batch of token indices, size (batch_size, seq_len)
            hidden: the initial hidden state and cell state, a tuple (h, c);
                    h and c both have size (num_layers * 2, batch_size, hidden_size),
                    where the factor 2 comes from the two directions
            """
            if self.num_embeddings > 0:
                x = self.embedding(x)  # (batch_size, seq_len, embedding_dim)
            x, hidden = self.lstm(x, hidden)  # x: (batch_size, seq_len, 2 * hidden_size)
            x = x[:, -1, :]  # keep the output at the last time step
            x = self.drop(x)
            x = self.compute(x)  # (batch_size, output_size)
            out = self.sigmoid(x).squeeze(-1)  # probability of the positive class, (batch_size,)
            return out, hidden

        def init_hidden(self, batch_size):
            """
            Initialize the hidden state: the first call to the LSTM has no
            previous state, so one has to be created. Here it is all zeros.
            It is a tuple because the LSTM takes two states, the hidden state
            and the cell state.
            """
            shape = (self.num_layers * 2, batch_size, self.hidden_size)  # * 2 for the two directions
            device = next(self.parameters()).device
            return torch.zeros(shape, device=device), torch.zeros(shape, device=device)

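When building a model, you need to subclass nn.Module and implement the `__init__` and `forward` methods. You can think of `__init__` as defining the layers and `forward` as connecting them.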
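When connecting layers in `forward`, the tensor shape each layer produces is what determines how the next layer must be sized. The sketch below is an illustration, not the article's model: it reuses the model's default sizes (embedding_dim=256, hidden_size=64, num_layers=2), while the batch size, sequence length, and the `head` layer are made up for the example. It shows that a bidirectional `nn.LSTM` emits `2 * hidden_size` features per time step and expects states with `num_layers * 2` as the first dimension.

```python
import torch
from torch import nn

batch_size, seq_len, embedding_dim = 3, 7, 256  # batch_size and seq_len are arbitrary
hidden_size, num_layers = 64, 2

lstm = nn.LSTM(embedding_dim, hidden_size, num_layers,
               bidirectional=True, batch_first=True)

x = torch.randn(batch_size, seq_len, embedding_dim)  # stand-in for embedded tokens
h0 = torch.zeros(num_layers * 2, batch_size, hidden_size)
c0 = torch.zeros(num_layers * 2, batch_size, hidden_size)

output, (h_n, c_n) = lstm(x, (h0, c0))
print(tuple(output.shape))  # (3, 7, 128): forward and backward features concatenated
print(tuple(h_n.shape))     # (4, 3, 64): one state per layer and direction

# A linear head on the last time step therefore needs 2 * hidden_size inputs
head = nn.Linear(hidden_size * 2, 1)
prob = torch.sigmoid(head(output[:, -1, :])).squeeze(-1)
print(tuple(prob.shape))    # (3,): one probability per sample
```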

Next, the model is trained. The code is as follows:

    import torch
    from torch import nn
    from torch.utils.data import DataLoader

    from LSTM import LSTM_RNN
    from dataLoader import Data_loader

    batch_size = 830
    file_pos = 'E:\\PostGraduate\\Paper_review\\pytorch_TextCnn/data/rt-polarity.pos'
    file_neg = 'E:\\PostGraduate\\Paper_review\\pytorch_TextCnn/data/rt-polarity.neg'
    word2vec_path = 'E:\\PostGraduate\\Paper_review\\pytorch_TextCnn/word2vec1.model'

    train_data = Data_loader(file_pos, file_neg, word2vec_path)
    train_size = int(len(train_data) * 0.8)  # 80% of the samples for training
    test_size = len(train_data) - train_size  # the remaining 20% for testing
    train_dataset, test_dataset = torch.utils.data.random_split(train_data, [train_size, test_size])
    train_iter = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
    test_iter = DataLoader(test_dataset, batch_size=batch_size, shuffle=True)

    device = 'cpu'
    # 18764 is the hard-coded vocabulary size; it must cover every token index in the data
    model = LSTM_RNN(num_embeddings=18764).to(device)

    # Start training
    epoch = 100  # number of training epochs
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
    # optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.04)
    loss_func = nn.BCELoss()  # the model outputs probabilities (sigmoid), which is what BCELoss expects
    train_losses = []
    train_counter = []
    test_losses = []
    log_interval = 5
    test_counter = [i * len(train_iter.dataset) for i in range(epoch + 1)]


    def train_loop(n_epochs, optimizer, model, train_loader, device, test_iter):
        for epoch in range(1, n_epochs + 1):
            print("Starting training epoch {}".format(epoch))
            model.train()
            correct = 0
            for i, (text_data, label) in enumerate(train_loader):
                optimizer.zero_grad()
                text_data = text_data.to(device)
                label = label.to(device)
                h = model.init_hidden(len(text_data))  # initial hidden state for this batch
                output, h = model(text_data, h)
                loss = loss_func(output, label.float())
                loss.backward()
                optimizer.step()
                pred = (output >= 0.5).long()  # threshold the probabilities at 0.5
                correct += (pred == label).sum().item()
                if i % log_interval == 0:
                    print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                        epoch, i * len(text_data), len(train_loader.dataset),
                        100. * i / len(train_loader), loss.item()))
                    train_losses.append(loss.item())
                    train_counter.append(
                        (i * batch_size) + ((epoch - 1) * len(train_loader.dataset)))
                    # Save the model and optimizer states
                    torch.save(model.state_dict(), './model.pth')
                    torch.save(optimizer.state_dict(), './optimizer.pth')
            print("Accuracy: {}/{} ({:.0f}%)\n".format(correct, len(train_loader.dataset),
                                                      100. * correct / len(train_loader.dataset)))
            test_loop(model, device, test_iter)


    def test_loop(model, device, test_iter):
        model.eval()
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_iter:
                data = data.to(device)
                target = target.to(device)
                h = model.init_hidden(len(data))  # initial hidden state for this batch
                output, h = model(data, h)
                test_loss += loss_func(output, target.float()).item()
                pred = (output >= 0.5).long()  # threshold the probabilities at 0.5
                correct += (pred == target).sum().item()
        test_loss /= len(test_iter)  # average of the per-batch mean losses
        test_losses.append(test_loss)
        print('\nTest set: Avg. loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_iter.dataset),
            100. * correct / len(test_iter.dataset)))


    train_loop(epoch, optimizer, model, train_iter, device, test_iter)

    # Reload the saved weights and evaluate once more
    PATH = 'E:\\PostGraduate\\Paper_review\\pytorch_TextCnn\\LSTM/model.pth'
    model = LSTM_RNN(num_embeddings=18764).to(device)
    dictionary = torch.load(PATH)
    model.load_state_dict(dictionary)
    test_loop(model, device, test_iter)
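The model's forward pass ends in a sigmoid, so the values it returns are already probabilities. That matters when choosing a loss: PyTorch's `nn.BCELoss` expects probabilities, while `nn.BCEWithLogitsLoss` expects raw scores and applies the sigmoid itself. The plain-Python sketch below (the helper names are made up for illustration and are not PyTorch API) shows how the reported loss changes when a probability is mistakenly passed where a raw score is expected.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce_from_prob(p, y):
    """Binary cross-entropy for a probability p in (0, 1) and a label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def bce_from_logit(z, y):
    """Binary cross-entropy for a raw score z: the sigmoid is applied first."""
    return bce_from_prob(sigmoid(z), y)


logit, label = 2.0, 1
prob = sigmoid(logit)

correct_loss = bce_from_prob(prob, label)     # loss computed on the probability
same_loss = bce_from_logit(logit, label)      # same value: sigmoid applied exactly once
double_sigmoid = bce_from_logit(prob, label)  # probability mistaken for a raw score

print(round(correct_loss, 4))
print(round(double_sigmoid, 4))
```

Applying the sigmoid twice squeezes every prediction toward 0.5, so the reported loss no longer reflects how confident the model actually is.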

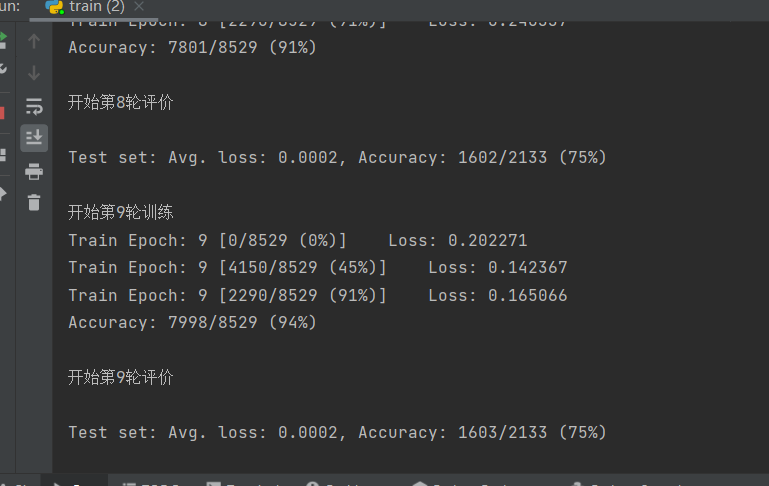

在上述中,首先会对数据集加载进来,然后分为80%的训练集和20%的测试集,定义使用的优化器为adam。同时在训练的过程中会对优化器、损失函数等信息进行保存。

训练结果如下所示:

完整代码链接,后续会使用其他数据来对模型进行测试。

[木南/TextCNN (gitee.com)](https://gitee.com/nanwang-crea/text-cnn)
