- 1Notepad++ 使用正则表达式删除空行空格方法_正则表达式如何去掉空行

- 230个Kafka常见错误小集合_kafka无法查看topic报错

- 3前端:移动端和PC端的区别_手机端请求和pc端请求头的区别

- 4【浏览器安全】漏洞的生命周期_漏洞生命周期

- 5【初阶数据结构】深入解析循环队列:探索底层逻辑

- 6使用Jmeter轻松实现AES加密测试_jmeter aes加密

- 7iOS不能跳转到支付宝的解决办法_uni ios跳转支付宝ios16不能跳转

- 8Vue3/Vite引入EasyPlayer.js播放H265视频错误的问题_vue easyplayerjs

- 9Hive 基于 Hadoop 进行数据清洗及数据统计_hdfs+hive做数据统计

- 10信创 | 已支持信创化的数据库都有哪些?_信创数据库

Java-Spark系列3-RDD介绍_spark javardd

赞

踩

一.RDD概念

RDD(resilient distributed dataset ,弹性分布式数据集),是 Spark 中最基础的抽象。它表示了一个可以并行操作的、不可变的、被分区了的元素集合。用户不需要关心底层复杂的抽象处理,直接使用方便的算子处理和计算就可以了。

1.1 RDD的特点

1) . 分布式

RDD是一个抽象的概念,RDD在spark driver中,通过RDD来引用数据,数据真正存储在节点机的partition上。

2). 只读

在Spark中RDD一旦生成了,就不能修改。

那么为什么要设置为只读,设置为只读的话,因为不存在修改,并发的吞吐量就上来了。

3). 血缘关系

我们需要对RDD进行一系列的操作,因为RDD是只读的,我们只能不断的生产新的RDD,这样,新的RDD与原来的RDD就会存在一些血缘关系。

Spark会记录这些血缘关系,在后期的容错上会有很大的益处。

4). 缓存

当一个 RDD 需要被重复使用时,或者当任务失败重新计算的时候,这时如果将 RDD 缓存起来,就可以避免重新计算,保证程序运行的性能。

RDD 的缓存有三种方式:cache、persist、checkPoint。

-

cache

cache 方法不是在被调用的时候立即进行缓存,而是当触发了 action 类型的算子之后,才会进行缓存。 -

cache 和 persist 的区别

其实 cache 底层实际调用的就是 persist 方法,只是缓存的级别默认是 MEMORY_ONLY,而 persist 方法可以指定其他的缓存级别。 -

cache 和 checkPoint 的区别

checkPoint 是将数据缓存到本地或者 HDFS 文件存储系统中,当某个节点的 executor 宕机了之后,缓存的数据不会丢失,而通过 cache 缓存的数据就会丢掉。

checkPoint 的时候会把 job 从开始重新再计算一遍,因此在 checkPoint 之前最好先进行一步 cache 操作,cache 不需要重新计算,这样可以节省计算的时间。

- persist 和 checkPoint 的区别

persist 也可以选择将数据缓存到磁盘当中,但是它交给 blockManager 管理的,一旦程序运行结束,blockManager 也会被停止,这时候缓存的数据就会被释放掉。而 checkPoint 持久化的数据并不会被释放,是一直存在的,可以被其它的程序所使用。

1.2 RDD的核心属性

RDD 调度和计算都依赖于这五属性

1). 分区列表

Spark RDD 是被分区的,每一个分区都会被一个计算任务 (Task) 处理,分区数决定了并行计算的数量,RDD 的并行度默认从父 RDD 传给子 RDD。默认情况下,一个 HDFS 上的数据分片就是一个 partiton,RDD 分片数决定了并行计算的力度,可以在创建 RDD 时指定 RDD 分片个数,如果不指定分区数量,当 RDD 从集合创建时,则默认分区数量为该程序所分配到的资源的 CPU 核数 (每个 Core 可以承载 2~4 个 partition),如果是从 HDFS 文件创建,默认为文件的 Block 数。

2). 依赖列表

由于 RDD 每次转换都会生成新的 RDD,所以 RDD 会形成类似流水线一样的前后依赖关系,当然宽依赖就不类似于流水线了,宽依赖后面的 RDD 具体的数据分片会依赖前面所有的 RDD 的所有数据分片,这个时候数据分片就不进行内存中的 Pipeline,一般都是跨机器的,因为有前后的依赖关系,所以当有分区的数据丢失时, Spark 会通过依赖关系进行重新计算,从而计算出丢失的数据,而不是对 RDD 所有的分区进行重新计算。RDD 之间的依赖有两种:窄依赖 ( Narrow Dependency) 和宽依赖 ( Wide Dependency)。RDD 是 Spark 的核心数据结构,通过 RDD 的依赖关系形成调度关系。通过对 RDD 的操作形成整个 Spark 程序。

3). Compute函数,用于计算RDD各分区的值

每个分区都会有计算函数, Spark 的 RDD 的计算函数是以分片为基本单位的,每个 RDD 都会实现 compute 函数,对具体的分片进行计算,RDD 中的分片是并行的,所以是分布式并行计算,有一点非常重要,就是由于 RDD 有前后依赖关系,遇到宽依赖关系,如 reduce By Key 等这些操作时划分成 Stage, Stage 内部的操作都是通过 Pipeline 进行的,在具体处理数据时它会通过 Blockmanager 来获取相关的数据,因为具体的 split 要从外界读数据,也要把具体的计算结果写入外界,所以用了一个管理器,具体的 split 都会映射成 BlockManager 的 Block,而体的 splt 会被函数处理,函数处理的具体形式是以任务的形式进行的。

4). 分区策略(可选)

每个 key-value 形式的 RDD 都有 Partitioner 属性,它决定了 RDD 如何分区。当然,Partiton 的个数还决定了每个 Stage 的 Task 个数。RDD 的分片函数可以分区 ( Partitioner),可传入相关的参数,如 Hash Partitioner 和 Range Partitioner,它本身针对 key- value 的形式,如果不是 key-ale 的形式它就不会有具体的 Partitioner, Partitioner 本身决定了下一步会产生多少并行的分片,同时它本身也决定了当前并行 ( Parallelize) Shuffle 输出的并行数据,从而使 Spak 具有能够控制数据在不同结点上分区的特性,用户可以自定义分区策略,如 Hash 分区等。 spark 提供了 partition By 运算符,能通过集群对 RDD 进行数据再分配来创建一个新的 RDD。

5). 优先位置列表(可选,HDFS实现数据本地化,避免数据移动)

优先位置列表会存储每个 Partition 的优先位置,对于一个 HDFS 文件来说,就是每个 Partition 块的位置。观察运行 Spark 集群的控制台就会发现, Spark 在具体计算、具体分片以前,它已经清楚地知道任务发生在哪个结点上,也就是说任务本身是计算层面的、代码层面的,代码发生运算之前它就已经知道它要运算的数据在什么地方,有具体结点的信息。这就符合大数据中数据不动代码动的原则。数据不动代码动的最高境界是数据就在当前结点的内存中。这时候有可能是 Memory 级别或 Tachyon 级别的, Spark 本身在进行任务调度时会尽可能地将任务分配到处理数据的数据块所在的具体位置。据 Spark 的 RDD。 Scala 源代码函数 getParferredlocations 可知,每次计算都符合完美的数据本地性。

二.RDD概述

2.1 准备工作

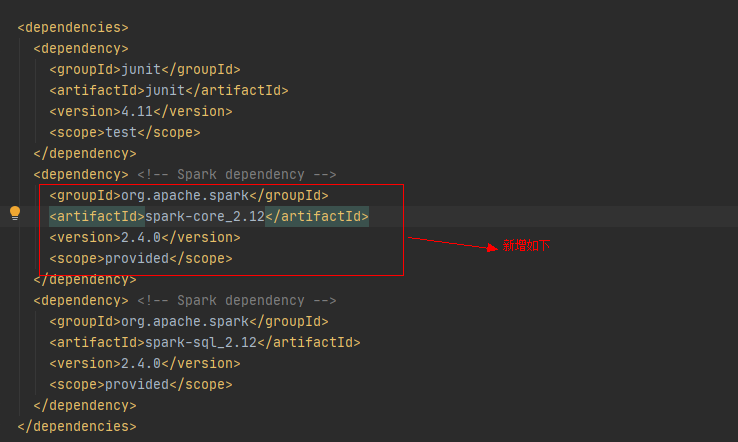

要用Java编写Spark应用程序,需要添加对Spark的依赖项。Spark可通过Maven中心获得:

groupId = org.apache.spark

artifactId = spark-core_2.12

version = 2.4.2

- 1

- 2

- 3

此外,如果您希望访问HDFS集群,则需要为您的HDFS版本添加对hadoop-client的依赖。

groupId = org.apache.hadoop

artifactId = hadoop-client

version = <your-hdfs-version>

- 1

- 2

- 3

最后,需要将一些Spark类导入到程序中。添加以下几行:

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.SparkConf;

- 1

- 2

- 3

2.2 初始化spark

Spark程序必须做的第一件事是创建一个JavaSparkContext对象,它告诉Spark如何访问集群。要创建SparkContext,首先需要构建包含应用程序信息的SparkConf对象。

SparkConf conf = new SparkConf().setAppName(appName).setMaster(master);

JavaSparkContext sc = new JavaSparkContext(conf);

- 1

- 2

2.3 RDD数据集

Spark围绕弹性分布式数据集(RDD)的概念展开,RDD是一个可以并行操作的容错元素集合。有两种创建rdd的方法:在驱动程序中并行化一个现有的集合,或者在外部存储系统中引用一个数据集,例如共享文件系统、HDFS、HBase或任何提供Hadoop InputFormat的数据源。

2.3.1 并行集合

通过在驱动程序中调用JavaSparkContext的parallelize方法可以创建并行集合。集合的元素被复制,形成一个可以并行操作的分布式数据集。例如,下面是如何创建一个包含数字1到5的并行集合:

List<Integer> data = Arrays.asList(1, 2, 3, 4, 5);

JavaRDD<Integer> distData = sc.parallelize(data);

- 1

- 2

一旦创建了分布式数据集(distData),就可以并行操作它了。例如,我们可以调用distData。Reduce ((a, b) -> a + b)将列表中的元素相加。稍后我们将描述对分布式数据集的操作。

并行集合的一个重要参数是将数据集分割成多个分区。Spark将为集群的每个分区运行一个任务。通常,集群中的每个CPU需要2-4个分区。通常,Spark会根据集群自动设置分区数量。但是,你也可以通过将它作为第二个参数传入parallelize来手动设置它(例如sc.parallelize(data, 10))。注意:代码中的某些地方使用术语片(分区的同义词)来保持向后兼容性。

2.3.2 外部数据集

Spark可以从Hadoop支持的任何存储源创建分布式数据集,包括您的本地文件系统、HDFS、Cassandra、HBase、Amazon S3等。Spark支持文本文件、SequenceFiles和任何其他Hadoop InputFormat。

文本文件rdd可以使用SparkContext的textFile方法创建。该方法接受文件的URI(机器上的本地路径,或者hdfs://, s3a://等URI),并将其作为行集合读取。下面是一个示例调用:

JavaRDD<String> distFile = sc.textFile("data.txt");

- 1

一旦创建,数据集操作就可以对distFile进行操作。例如,我们可以使用map和reduce操作将所有行的大小相加,如下所示:地图(s - > s.length())。使((a, b) -> a + b)

使用Spark读取文件的一些注意事项:

-

如果使用本地文件系统上的路径,则必须在工作节点上的相同路径上访问该文件。要么将文件复制到所有工作人员,要么使用网络挂载的共享文件系统。

-

所有Spark基于文件的输入法,包括textFile,都支持在目录、压缩文件和通配符上运行。例如,可以使用textFile("/my/directory")、textFile("/my/directory/.txt")和textFile("/my/directory/.gz")。

-

textFile方法还接受第二个可选参数,用于控制文件的分区数。默认情况下,Spark为文件的每个块创建一个分区(HDFS默认为128MB),但你也可以通过传递一个更大的值来请求更多的分区。注意,分区不能少于块。

除了文本文件,Spark的Java API还支持其他几种数据格式:

-

JavaSparkContext。wholeTextFiles允许您读取包含多个小文本文件的目录,并以(文件名,内容)对的形式返回每个小文本文件。这与textFile相反,textFile在每个文件中每行返回一条记录。

-

对于SequenceFiles,使用SparkContext的sequenceFile[K, V]方法,其中K和V是文件中的键和值类型。这些应该是Hadoop的Writable接口的子类,比如IntWritable和Text。

-

对于其他Hadoop InputFormats,您可以使用JavaSparkContext。hadoopRDD方法,它接受一个任意的JobConf和输入格式类、键类和值类。设置这些参数的方法与使用输入源设置Hadoop作业的方法相同。你也可以使用JavaSparkContext。newAPIHadoopRDD用于InputFormats,基于“新的”MapReduce API (org.apache.hadoop.mapreduce)。

-

JavaRDD。saveAsObjectFile JavaSparkContext。objectFile支持以由序列化的Java对象组成的简单格式保存RDD。虽然这种格式不如Avro这样的专用格式有效,但它提供了一种保存任何RDD的简单方法。

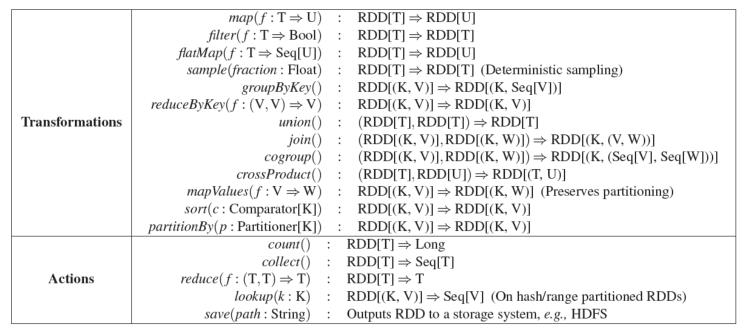

三.操作RDD

rdd支持两种类型的操作:转换(从现有数据集创建一个新的数据集)和操作(在数据集上运行计算后向驱动程序返回一个值)。例如,map是一种转换,它通过一个函数传递每个数据集元素并返回一个表示结果的新RDD。另一方面,reduce是一个操作,它使用一些函数聚合RDD的所有元素,并将最终结果返回给驱动程序(尽管也有一个并行的reduceByKey返回一个分布式数据集)。

Spark中的所有转换都是惰性的,因为它们不会立即计算结果。相反,他们只记住应用到一些基本数据集(例如,一个文件)的转换。只有当一个操作需要将结果返回给驱动程序时,才会计算转换。这种设计使Spark能够更高效地运行。例如,我们可以认识到通过map创建的数据集将用于reduce,并且只将reduce的结果返回给驱动程序,而不是更大的映射数据集。

默认情况下,每次在转换后的RDD上运行操作时都可能重新计算。但是,您也可以使用persist(或cache)方法将RDD持久化到内存中,在这种情况下,Spark将在集群中保留元素,以便在下次查询时更快地访问它。它还支持在磁盘上持久化rdd,或跨多个节点复制rdd。

3.1 基础知识

为了说明RDD的基础知识,考虑下面这个简单的程序:

JavaRDD<String> lines = sc.textFile("data.txt");

JavaRDD<Integer> lineLengths = lines.map(s -> s.length());

int totalLength = lineLengths.reduce((a, b) -> a + b);

- 1

- 2

- 3

第一行从外部文件定义了一个基本RDD。这个数据集不会被加载到内存中,也不会被执行:lines仅仅是一个指向文件的指针。第二行定义linelength作为映射转换的结果。同样,由于惰性,不能立即计算linelength。最后,我们运行reduce,这是一个动作。此时,Spark将计算分解为任务,在不同的机器上运行,每台机器同时运行自己的部分map和局部reduce,只将其答案返回给驱动程序。

如果我们以后还想使用lineLengths,我们可以添加:

lineLengths.persist(StorageLevel.MEMORY_ONLY());

- 1

在reduce之前,这将导致linelength在第一次计算之后被保存在内存中。

3.2 将函数传递给Spark

Spark的API在很大程度上依赖于在驱动程序中传递函数来在集群上运行。在Java中,函数由实现org.apache.spark.api.java.function包中的接口的类表示。有两种方法可以创建这样的函数:

- 在您自己的类中实现Function接口,无论是匿名内部类还是命名内部类,并将其实例传递给Spark。

- 使用lambda表达式来精确定义一个实现。

虽然本指南的大部分内容都使用lambda语法以达到简练性,但是很容易以长格式使用所有相同的api。例如,我们可以这样写上面的代码:

JavaRDD<String> lines = sc.textFile("data.txt");

JavaRDD<Integer> lineLengths = lines.map(new Function<String, Integer>() {

public Integer call(String s) { return s.length(); }

});

int totalLength = lineLengths.reduce(new Function2<Integer, Integer, Integer>() {

public Integer call(Integer a, Integer b) { return a + b; }

});

- 1

- 2

- 3

- 4

- 5

- 6

- 7

或者,如果编写内联函数是笨拙的:

class GetLength implements Function<String, Integer> {

public Integer call(String s) { return s.length(); }

}

class Sum implements Function2<Integer, Integer, Integer> {

public Integer call(Integer a, Integer b) { return a + b; }

}

JavaRDD<String> lines = sc.textFile("data.txt");

JavaRDD<Integer> lineLengths = lines.map(new GetLength());

int totalLength = lineLengths.reduce(new Sum());

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

注意,Java中的匿名内部类也可以访问外围作用域中的变量,只要它们被标记为final。Spark将把这些变量的副本传送到每个工作节点,就像它对其他语言所做的那样。

3.3 理解闭包

Spark的难点之一是理解跨集群执行代码时变量和方法的作用域和生命周期。在RDD范围之外修改变量的操作经常会引起混淆。在下面的示例中,我们将查看使用foreach()增加计数器的代码,但其他操作也可能出现类似的问题。

举例:

考虑下面的简单RDD元素和,它的行为可能会因执行是否发生在同一个JVM中而不同。一个常见的例子是在本地模式下运行Spark(——master = local[n])与在集群中部署Spark应用(例如通过Spark -submit to YARN):

int counter = 0;

JavaRDD<Integer> rdd = sc.parallelize(data);

// Wrong: Don't do this!!

rdd.foreach(x -> counter += x);

println("Counter value: " + counter);

- 1

- 2

- 3

- 4

- 5

- 6

- 7

Local vs. cluster modes

上述代码的行为是未定义的,可能无法按照预期工作。为了执行作业,Spark将RDD操作的处理分解为任务,每个任务由一个执行器执行。在执行之前,Spark会计算任务的闭包。闭包是执行器在RDD上执行计算时必须可见的变量和方法(在本例中为foreach())。这个闭包被序列化并发送给每个执行器。

闭包中发送给每个执行器的变量现在是副本,因此,当counter在foreach函数中被引用时,它不再是驱动程序节点上的计数器。驱动程序节点的内存中仍然有一个计数器,但对执行器不再可见!执行器只能看到序列化闭包的副本。因此,counter的最终值仍然为零,因为计数器上的所有操作都引用了序列化闭包中的值。

在本地模式下,在某些情况下,foreach函数实际上将在与驱动程序相同的JVM中执行,并将引用相同的原始计数器,并可能实际更新它。

为了确保在这些场景中定义良好的行为,应该使用累加器。Spark中的累加器专门用于提供一种机制,当执行在集群中的工作节点之间拆分时,可以安全地更新变量。本指南的“累加器”一节将对此进行更详细的讨论。

一般来说,闭包——像循环或局部定义的方法这样的构造,不应该用来改变某些全局状态。Spark不定义或保证从闭包外部引用的对象的突变行为。有些代码可能在本地模式下工作,但这只是偶然的,这样的代码在分布式模式下不会像预期的那样工作。如果需要一些全局聚合,则使用累加器。

打印RDD的元素

另一个常见的习惯用法是尝试使用RDD .foreach(println)或RDD .map(println)打印RDD的元素。在一台机器上,这将生成预期的输出并打印所有RDD的元素。然而,在集群模式下,执行器调用的到stdout的输出现在正在写入执行器的stdout,而不是驱动程序上的stdout,所以驱动程序上的stdout不会显示这些!要打印驱动程序上的所有元素,可以使用collect()方法首先将RDD带到驱动程序节点:RDD .collect().foreach(println)。但是,这可能会导致驱动程序耗尽内存,因为collect()将整个RDD获取到一台机器上;如果你只需要打印RDD的一些元素,一个更安全的方法是使用take(): RDD .take(100).foreach(println)。

3.4 使用键值对

虽然大多数Spark操作在包含任何类型对象的rdd上工作,但有一些特殊操作只在键值对的rdd上可用。最常见的是分布式“洗牌”操作,例如按键对元素进行分组或聚合。

在Java中,键值对使用scala表示。来自Scala标准库的Tuple2类。您可以简单地调用new Tuple2(a, b)来创建一个元组,然后使用tuple._1()和tuple._2()来访问它的字段。

键值对的rdd由JavaPairRDD类表示。可以使用特殊版本的映射操作(如mapToPair和flatMapToPair)从javardd构建javapairrdd。JavaPairRDD既有标准的RDD函数,也有特殊的键值函数。

例如,以下代码使用键值对上的reduceByKey操作来计算每行文本在文件中出现的次数:

JavaRDD<String> lines = sc.textFile("data.txt");

JavaPairRDD<String, Integer> pairs = lines.mapToPair(s -> new Tuple2(s, 1));

JavaPairRDD<String, Integer> counts = pairs.reduceByKey((a, b) -> a + b);

- 1

- 2

- 3

例如,我们也可以使用counts.sortByKey()来按字母顺序排序,最后使用counts.collect()将它们作为对象数组返回到驱动程序中。

**注意:**当在键值对操作中使用自定义对象作为键时,必须确保自定义equals()方法与匹配的hashCode()方法一起使用。有关详细信息,请参阅Object.hashCode()文档中概述的契约。

3.5 常见Transformations操作及Actions操作

常见Transformations操作

| Transformation | 含义 |

|---|---|

| map(func) | 对每个RDD元素应用func之后,构造成新的RDD |

| filter(func) | 对每个RDD元素应用func, 将func为true的元素构造成新的RDD |

| flatMap(func) | 和map类似,但是flatMap可以将一个输出元素映射成0个或多个元素。(也就是说func返回的是元素序列而不是单个元素). |

| mapPartitions(func) | 和map类似,但是在RDD的不同分区上独立执行。所以函数func的参数是一个Python迭代器,输出结果也应该是迭代器【即func作用为Iterator => Iterator】 |

| mapPartitionsWithIndex(func) | 和mapPartitions类似, but also provides func with an integer value representing the index of the partition, 但是还为函数func提供了一个正式参数,用来表示分区的编号。【此时func作用为(Int, Iterator) => Iterator 】 |

| sample(withReplacement, fraction, seed) | 抽样: fraction是抽样的比例0~1之间的浮点数; withRepacement表示是否有放回抽样, True是有放回, False是无放回;seed是随机种子。 |

| union(otherDataset) | 并集操作,重复元素会保留(可以通过distinct操作去重) |

| intersection(otherDataset) | 交集操作,结果不会包含重复元素 |

| distinct([numTasks])) | 去重操作 |

| groupByKey([numTasks]) | 把Key相同的数据放到一起【(K, V) => (K, Iterable)】,需要注意的问题:1. 如果分组(grouping)操作是为了后续的聚集(aggregation)操作(例如sum/average), 使用reduceByKey或者aggregateByKey更高效。2.默认情况下,并发度取决于分区数量。我们可以传入参数numTasks来调整并发任务数。 |

| reduceByKey(func, [numTasks]) | 首先按Key分组,然后将相同Key对应的所有Value都执行func操作得到一个值。func必须是(V, V) => V’的计算操作。numTasks作用跟上面提到的groupByKey一样。 |

| sortByKey([ascending], [numTasks]) | 按Key排序。通过第一个参数True/False指定是升序还是降序。 |

| join(otherDataset, [numTasks]) | 类似SQL中的连接(内连接),即(K, V) and (K, W) => (K, (V, W)),返回所有连接对。外连接通过:leftOUterJoin(左出现右无匹配为空)、rightOuterJoin(右全出现左无匹配为空)、fullOuterJoin实现(左右全出现无匹配为空)。 |

| cogroup(otherDataset, [numTasks]) | 对两个RDD做groupBy。即(K, V) and (K, W) => (K, Iterable, Iterable(W))。别名groupWith。 |

| pipe(command, [envVars]) | 将驱动程序中的RDD交给shell处理(外部进程),例如Perl或bash脚本。RDD元素作为标准输入传给脚本,脚本处理之后的标准输出会作为新的RDD返回给驱动程序。 |

| coalesce(numPartitions) | 将RDD的分区数减小到numPartitions。当数据集通过过滤减小规模时,使用这个操作可以提升性能。 |

| repartition(numPartitions) | 将数据重新随机分区为numPartitions个。这会导致整个RDD的数据在集群网络中洗牌。 |

| repartitionAndSortWithinPartitions(partitioner) | 使用partitioner函数充分去,并在分区内排序。这比先repartition然后在分区内sort高效,原因是这样迫使排序操作被移到了shuffle阶段。 |

常见Actions操作

| Action | 含义 |

|---|---|

| reduce(func) | 使用func函数聚集RDD中的元素(func接收两个参数返回一个值)。这个函数应该满足结合律和交换律以便能够正确并行计算。 |

| collect() | 将RDD转为数组返回给驱动程序。这个在执行filter等操作之后返回足够小的数据集是比较有用。 |

| count() | 返回RDD中的元素数量。 |

| first() | 返回RDD中的第一个元素。(同take(1)) |

| take(n) | 返回由RDD的前N个元素组成的数组。 |

| takeSample(withReplacement, num, [seed]) | 返回num个元素的数组,这些元素抽样自RDD,withReplacement表示是否有放回,seed是随机数生成器的种子)。 |

| takeOrdered(n, [ordering]) | 返回RDD的前N个元素,使用自然顺序或者通过ordering函数对将个元素转换为新的Key. |

| saveAsTextFile(path) | 将RDD元素写入文本文件。Spark自动调用元素的toString方法做字符串转换。 |

| saveAsSequenceFile(path)(Java and Scala) | 将RDD保存为Hadoop SequenceFile.这个过程机制如下:1. Pyrolite用来将序列化的Python RDD转为Java对象RDD;2. Java RDD中的Key/Value被转为Writable然后写到文件。 |

| countByKey() | 统计每个Key出现的次数,只对(K, V)类型的RDD有效,返回(K, int)词典。 |

| foreach(func) | 在所有RDD元素上执行函数func。 |

上图是关于Transformations和Actions的一个介绍图解,可以发现,Transformations操作过后的RDD依旧是RDD,而Actions过后的RDD都是非RDD。

四.RDD实例

4.1 初始化RDD

4.1.1 通过集合创建RDD

代码:

package org.example; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.api.java.JavaRDD; import org.apache.spark.SparkConf; import java.util.Arrays; import java.util.List; public class RDDTest1 { public static void main(String[] args){ SparkConf conf = new SparkConf().setAppName("RDDTest1").setMaster("local[*]"); JavaSparkContext sc = new JavaSparkContext(conf); //通过集合创建rdd List<Integer> data = Arrays.asList(1, 2, 3, 4, 5); JavaRDD<Integer> rdd = sc.parallelize(data); System.out.println("\n"); System.out.println("\n"); System.out.println("\n"); //查看list被分成了几部分 System.out.println(rdd.getNumPartitions() + "\n"); //查看分区状况 System.out.println(rdd.glom().collect() + "\n"); System.out.println(rdd.collect() + "\n"); System.out.println("\n"); System.out.println("\n"); System.out.println("\n"); sc.stop(); } }

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

测试记录:

[root@hp2 javaspark]# spark-submit \ > --class org.example.RDDTest1 \ > --master local[2] \ > /home/javaspark/SparkStudy-1.0-SNAPSHOT.jar 21/08/10 10:27:43 INFO spark.SparkContext: Running Spark version 2.4.0-cdh6.3.1 21/08/10 10:27:43 INFO logging.DriverLogger: Added a local log appender at: /tmp/spark-32205eb6-26e1-4a05-a3a3-6d9ef1de2758/__driver_logs__/driver.log 21/08/10 10:27:43 INFO spark.SparkContext: Submitted application: RDDTest1 21/08/10 10:27:43 INFO spark.SecurityManager: Changing view acls to: root 21/08/10 10:27:43 INFO spark.SecurityManager: Changing modify acls to: root 21/08/10 10:27:43 INFO spark.SecurityManager: Changing view acls groups to: 21/08/10 10:27:43 INFO spark.SecurityManager: Changing modify acls groups to: 21/08/10 10:27:43 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set() 21/08/10 10:27:43 INFO util.Utils: Successfully started service 'sparkDriver' on port 43299. 21/08/10 10:27:43 INFO spark.SparkEnv: Registering MapOutputTracker 21/08/10 10:27:43 INFO spark.SparkEnv: Registering BlockManagerMaster 21/08/10 10:27:43 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information 21/08/10 10:27:43 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up 21/08/10 10:27:43 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-205a05e3-46e2-43f8-8cbc-db73a53678a9 21/08/10 10:27:43 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB 21/08/10 10:27:43 INFO spark.SparkEnv: Registering OutputCommitCoordinator 21/08/10 10:27:43 INFO util.log: Logging initialized @1527ms 21/08/10 10:27:43 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: 2018-09-05T05:11:46+08:00, git hash: 3ce520221d0240229c862b122d2b06c12a625732 21/08/10 10:27:43 INFO server.Server: Started @1604ms 21/08/10 10:27:43 INFO server.AbstractConnector: Started ServerConnector@2c5d601e{HTTP/1.1,[http/1.1]}{0.0.0.0:4040} 21/08/10 10:27:43 INFO util.Utils: Successfully started service 'SparkUI' on port 4040. 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5489c777{/jobs,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6bc28a83{/jobs/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@324c64cd{/jobs/job,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5bd73d1a{/jobs/job/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@aec50a1{/stages,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2555fff0{/stages/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70d2e40b{/stages/stage,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@702ed190{/stages/stage/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@173b9122{/stages/pool,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7c18432b{/stages/pool/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7646731d{/storage,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70e29e14{/storage/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3b1bb3ab{/storage/rdd,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a4bef8{/storage/rdd/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@40bffbca{/environment,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2449cff7{/environment/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@42a9a63e{/executors,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@62da83ed{/executors/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5d8445d7{/executors/threadDump,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@37d80fe7{/executors/threadDump/json,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@384fc774{/static,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5bd1ceca{/,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@30c31dd7{/api,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@241a53ef{/jobs/job/kill,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@344344fa{/stages/stage/kill,null,AVAILABLE,@Spark} 21/08/10 10:27:43 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hp2:4040 21/08/10 10:27:43 INFO spark.SparkContext: Added JAR file:/home/javaspark/SparkStudy-1.0-SNAPSHOT.jar at spark://hp2:43299/jars/SparkStudy-1.0-SNAPSHOT.jar with timestamp 1628562463771 21/08/10 10:27:43 INFO executor.Executor: Starting executor ID driver on host localhost 21/08/10 10:27:43 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 40851. 21/08/10 10:27:43 INFO netty.NettyBlockTransferService: Server created on hp2:40851 21/08/10 10:27:43 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy 21/08/10 10:27:43 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hp2, 40851, None) 21/08/10 10:27:43 INFO storage.BlockManagerMasterEndpoint: Registering block manager hp2:40851 with 366.3 MB RAM, BlockManagerId(driver, hp2, 40851, None) 21/08/10 10:27:43 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hp2, 40851, None) 21/08/10 10:27:43 INFO storage.BlockManager: external shuffle service port = 7337 21/08/10 10:27:43 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, hp2, 40851, None) 21/08/10 10:27:44 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@512d4583{/metrics/json,null,AVAILABLE,@Spark} 21/08/10 10:27:44 INFO scheduler.EventLoggingListener: Logging events to hdfs://nameservice1/user/spark/applicationHistory/local-1628562463816 21/08/10 10:27:44 INFO spark.SparkContext: Registered listener com.cloudera.spark.lineage.NavigatorAppListener 21/08/10 10:27:44 INFO logging.DriverLogger$DfsAsyncWriter: Started driver log file sync to: /user/spark/driverLogs/local-1628562463816_driver.log 4 21/08/10 10:27:45 INFO spark.SparkContext: Starting job: collect at RDDTest1.java:24 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Got job 0 (collect at RDDTest1.java:24) with 4 output partitions 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (collect at RDDTest1.java:24) 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Parents of final stage: List() 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Missing parents: List() 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[2] at glom at RDDTest1.java:24), which has no missing parents 21/08/10 10:27:45 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 2.6 KB, free 366.3 MB) 21/08/10 10:27:45 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1578.0 B, free 366.3 MB) 21/08/10 10:27:45 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hp2:40851 (size: 1578.0 B, free: 366.3 MB) 21/08/10 10:27:45 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1164 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Submitting 4 missing tasks from ResultStage 0 (MapPartitionsRDD[2] at glom at RDDTest1.java:24) (first 15 tasks are for partitions Vector(0, 1, 2, 3)) 21/08/10 10:27:45 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 4 tasks 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7723 bytes) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7723 bytes) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, localhost, executor driver, partition 2, PROCESS_LOCAL, 7723 bytes) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, localhost, executor driver, partition 3, PROCESS_LOCAL, 7725 bytes) 21/08/10 10:27:45 INFO executor.Executor: Running task 2.0 in stage 0.0 (TID 2) 21/08/10 10:27:45 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1) 21/08/10 10:27:45 INFO executor.Executor: Running task 3.0 in stage 0.0 (TID 3) 21/08/10 10:27:45 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0) 21/08/10 10:27:45 INFO executor.Executor: Fetching spark://hp2:43299/jars/SparkStudy-1.0-SNAPSHOT.jar with timestamp 1628562463771 21/08/10 10:27:45 INFO client.TransportClientFactory: Successfully created connection to hp2/10.31.1.124:43299 after 35 ms (0 ms spent in bootstraps) 21/08/10 10:27:45 INFO util.Utils: Fetching spark://hp2:43299/jars/SparkStudy-1.0-SNAPSHOT.jar to /tmp/spark-32205eb6-26e1-4a05-a3a3-6d9ef1de2758/userFiles-06ce7bc2-3bc9-4c0d-8cc0-3c345e08cc24/fetchFileTemp2728528362571858765.tmp 21/08/10 10:27:45 INFO executor.Executor: Adding file:/tmp/spark-32205eb6-26e1-4a05-a3a3-6d9ef1de2758/userFiles-06ce7bc2-3bc9-4c0d-8cc0-3c345e08cc24/SparkStudy-1.0-SNAPSHOT.jar to class loader 21/08/10 10:27:45 INFO executor.Executor: Finished task 2.0 in stage 0.0 (TID 2). 778 bytes result sent to driver 21/08/10 10:27:45 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 778 bytes result sent to driver 21/08/10 10:27:45 INFO executor.Executor: Finished task 3.0 in stage 0.0 (TID 3). 780 bytes result sent to driver 21/08/10 10:27:45 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 778 bytes result sent to driver 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 229 ms on localhost (executor driver) (1/4) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 228 ms on localhost (executor driver) (2/4) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 224 ms on localhost (executor driver) (3/4) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 253 ms on localhost (executor driver) (4/4) 21/08/10 10:27:45 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool 21/08/10 10:27:45 INFO scheduler.DAGScheduler: ResultStage 0 (collect at RDDTest1.java:24) finished in 0.616 s 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Job 0 finished: collect at RDDTest1.java:24, took 0.684849 s [[1], [2], [3], [4, 5]] 21/08/10 10:27:45 INFO spark.SparkContext: Starting job: collect at RDDTest1.java:25 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Got job 1 (collect at RDDTest1.java:25) with 4 output partitions 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Final stage: ResultStage 1 (collect at RDDTest1.java:25) 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Parents of final stage: List() 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Missing parents: List() 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Submitting ResultStage 1 (ParallelCollectionRDD[0] at parallelize at RDDTest1.java:16), which has no missing parents 21/08/10 10:27:45 INFO memory.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 1688.0 B, free 366.3 MB) 21/08/10 10:27:45 INFO memory.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 1083.0 B, free 366.3 MB) 21/08/10 10:27:45 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on hp2:40851 (size: 1083.0 B, free: 366.3 MB) 21/08/10 10:27:45 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1164 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Submitting 4 missing tasks from ResultStage 1 (ParallelCollectionRDD[0] at parallelize at RDDTest1.java:16) (first 15 tasks are for partitions Vector(0, 1, 2, 3)) 21/08/10 10:27:45 INFO scheduler.TaskSchedulerImpl: Adding task set 1.0 with 4 tasks 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 1.0 (TID 4, localhost, executor driver, partition 0, PROCESS_LOCAL, 7723 bytes) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 1.0 (TID 5, localhost, executor driver, partition 1, PROCESS_LOCAL, 7723 bytes) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 2.0 in stage 1.0 (TID 6, localhost, executor driver, partition 2, PROCESS_LOCAL, 7723 bytes) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Starting task 3.0 in stage 1.0 (TID 7, localhost, executor driver, partition 3, PROCESS_LOCAL, 7725 bytes) 21/08/10 10:27:45 INFO executor.Executor: Running task 2.0 in stage 1.0 (TID 6) 21/08/10 10:27:45 INFO executor.Executor: Running task 0.0 in stage 1.0 (TID 4) 21/08/10 10:27:45 INFO executor.Executor: Running task 3.0 in stage 1.0 (TID 7) 21/08/10 10:27:45 INFO executor.Executor: Running task 1.0 in stage 1.0 (TID 5) 21/08/10 10:27:45 INFO executor.Executor: Finished task 2.0 in stage 1.0 (TID 6). 669 bytes result sent to driver 21/08/10 10:27:45 INFO executor.Executor: Finished task 1.0 in stage 1.0 (TID 5). 669 bytes result sent to driver 21/08/10 10:27:45 INFO executor.Executor: Finished task 3.0 in stage 1.0 (TID 7). 671 bytes result sent to driver 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 1.0 (TID 5) in 26 ms on localhost (executor driver) (1/4) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 3.0 in stage 1.0 (TID 7) in 21 ms on localhost (executor driver) (2/4) 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 2.0 in stage 1.0 (TID 6) in 24 ms on localhost (executor driver) (3/4) 21/08/10 10:27:45 INFO executor.Executor: Finished task 0.0 in stage 1.0 (TID 4). 669 bytes result sent to driver 21/08/10 10:27:45 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 1.0 (TID 4) in 37 ms on localhost (executor driver) (4/4) 21/08/10 10:27:45 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool 21/08/10 10:27:45 INFO scheduler.DAGScheduler: ResultStage 1 (collect at RDDTest1.java:25) finished in 0.045 s 21/08/10 10:27:45 INFO scheduler.DAGScheduler: Job 1 finished: collect at RDDTest1.java:25, took 0.048805 s [1, 2, 3, 4, 5] 21/08/10 10:27:45 INFO spark.SparkContext: Invoking stop() from shutdown hook 21/08/10 10:27:45 INFO server.AbstractConnector: Stopped Spark@2c5d601e{HTTP/1.1,[http/1.1]}{0.0.0.0:4040} 21/08/10 10:27:45 INFO ui.SparkUI: Stopped Spark web UI at http://hp2:4040 21/08/10 10:27:46 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped! 21/08/10 10:27:46 INFO memory.MemoryStore: MemoryStore cleared 21/08/10 10:27:46 INFO storage.BlockManager: BlockManager stopped 21/08/10 10:27:46 INFO storage.BlockManagerMaster: BlockManagerMaster stopped 21/08/10 10:27:46 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped! 21/08/10 10:27:46 INFO spark.SparkContext: Successfully stopped SparkContext 21/08/10 10:27:46 INFO util.ShutdownHookManager: Shutdown hook called 21/08/10 10:27:46 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-42d5a411-6727-4c4b-9f06-f9a57d581150 21/08/10 10:27:46 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-32205eb6-26e1-4a05-a3a3-6d9ef1de2758 [root@hp2 javaspark]#

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

4.1.2 通过文件创建rdd

读取一个idcard.txt,获取年龄和性别。

代码:

package org.example; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.api.java.JavaRDD; import org.apache.spark.SparkConf; import org.apache.spark.api.java.function.VoidFunction; import java.util.Calendar; public class RDDTest2 { public static void main(String[] args){ SparkConf conf = new SparkConf().setAppName("RDDTest2").setMaster("local[2]"); JavaSparkContext sc = new JavaSparkContext(conf); JavaRDD<String> rdd1 = sc.textFile("file:///home/pyspark/idcard.txt"); System.out.println("\n" + "\n" + "\n"); rdd1.foreach(new VoidFunction<String>() { @Override public void call(String s) throws Exception { //System.out.println(s + "\n"); Calendar cal = Calendar.getInstance(); int yearNow = cal.get(Calendar.YEAR); int monthNow = cal.get(Calendar.MONTH)+1; int dayNow = cal.get(Calendar.DATE); int year = Integer.valueOf(s.substring(6, 10)); int month = Integer.valueOf(s.substring(10,12)); int day = Integer.valueOf(s.substring(12,14)); String age; if ((month < monthNow) || (month == monthNow && day<= dayNow) ){ age = String.valueOf(yearNow - year); }else { age = String.valueOf(yearNow - year-1); } int sexType = Integer.valueOf(s.substring(16).substring(0, 1)); String sex = ""; if (sexType%2 == 0) { sex = "女"; } else { sex = "男"; } System.out.println("年龄: " + age + "," + "性别:" + sex + ";"); } }); sc.stop(); } }

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

测试记录:

[root@hp2 javaspark]# spark-submit \ > --class org.example.RDDTest2 \ > --master local[2] \ > /home/javaspark/SparkStudy-1.0-SNAPSHOT.jar 21/08/10 11:19:17 INFO spark.SparkContext: Running Spark version 2.4.0-cdh6.3.1 21/08/10 11:19:17 INFO logging.DriverLogger: Added a local log appender at: /tmp/spark-7bb3e4ce-ce8f-420f-98cf-636f83d36185/__driver_logs__/driver.log 21/08/10 11:19:17 INFO spark.SparkContext: Submitted application: RDDTest2 21/08/10 11:19:17 INFO spark.SecurityManager: Changing view acls to: root 21/08/10 11:19:17 INFO spark.SecurityManager: Changing modify acls to: root 21/08/10 11:19:17 INFO spark.SecurityManager: Changing view acls groups to: 21/08/10 11:19:17 INFO spark.SecurityManager: Changing modify acls groups to: 21/08/10 11:19:17 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set() 21/08/10 11:19:17 INFO util.Utils: Successfully started service 'sparkDriver' on port 42332. 21/08/10 11:19:17 INFO spark.SparkEnv: Registering MapOutputTracker 21/08/10 11:19:17 INFO spark.SparkEnv: Registering BlockManagerMaster 21/08/10 11:19:17 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information 21/08/10 11:19:17 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up 21/08/10 11:19:17 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-5e61909d-7341-46a0-ae56-7990cf63f7fd 21/08/10 11:19:17 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB 21/08/10 11:19:17 INFO spark.SparkEnv: Registering OutputCommitCoordinator 21/08/10 11:19:17 INFO util.log: Logging initialized @1659ms 21/08/10 11:19:17 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: 2018-09-05T05:11:46+08:00, git hash: 3ce520221d0240229c862b122d2b06c12a625732 21/08/10 11:19:17 INFO server.Server: Started @1739ms 21/08/10 11:19:17 INFO server.AbstractConnector: Started ServerConnector@2d72f75e{HTTP/1.1,[http/1.1]}{0.0.0.0:4040} 21/08/10 11:19:17 INFO util.Utils: Successfully started service 'SparkUI' on port 4040. 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5c77053b{/jobs,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@41200e0c{/jobs/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@40f33492{/jobs/job,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6bc28a83{/jobs/job/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@324c64cd{/stages,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@13579834{/stages/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@24be2d9c{/stages/stage,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2555fff0{/stages/stage/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70d2e40b{/stages/pool,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@120f38e6{/stages/pool/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7a0e1b5e{/storage,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@702ed190{/storage/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@173b9122{/storage/rdd,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7c18432b{/storage/rdd/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7646731d{/environment,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70e29e14{/environment/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3b1bb3ab{/executors,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a4bef8{/executors/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@40bffbca{/executors/threadDump,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2449cff7{/executors/threadDump/json,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@42a9a63e{/static,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1a15b789{/,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@57f791c6{/api,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@30c31dd7{/jobs/job/kill,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@499b2a5c{/stages/stage/kill,null,AVAILABLE,@Spark} 21/08/10 11:19:17 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hp2:4040 21/08/10 11:19:17 INFO spark.SparkContext: Added JAR file:/home/javaspark/SparkStudy-1.0-SNAPSHOT.jar at spark://hp2:42332/jars/SparkStudy-1.0-SNAPSHOT.jar with timestamp 1628565557741 21/08/10 11:19:17 INFO executor.Executor: Starting executor ID driver on host localhost 21/08/10 11:19:17 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 43944. 21/08/10 11:19:17 INFO netty.NettyBlockTransferService: Server created on hp2:43944 21/08/10 11:19:17 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy 21/08/10 11:19:17 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hp2, 43944, None) 21/08/10 11:19:17 INFO storage.BlockManagerMasterEndpoint: Registering block manager hp2:43944 with 366.3 MB RAM, BlockManagerId(driver, hp2, 43944, None) 21/08/10 11:19:17 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hp2, 43944, None) 21/08/10 11:19:17 INFO storage.BlockManager: external shuffle service port = 7337 21/08/10 11:19:17 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, hp2, 43944, None) 21/08/10 11:19:18 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1ddd3478{/metrics/json,null,AVAILABLE,@Spark} 21/08/10 11:19:18 INFO scheduler.EventLoggingListener: Logging events to hdfs://nameservice1/user/spark/applicationHistory/local-1628565557783 21/08/10 11:19:18 INFO spark.SparkContext: Registered listener com.cloudera.spark.lineage.NavigatorAppListener 21/08/10 11:19:18 INFO logging.DriverLogger$DfsAsyncWriter: Started driver log file sync to: /user/spark/driverLogs/local-1628565557783_driver.log 21/08/10 11:19:19 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 290.4 KB, free 366.0 MB) 21/08/10 11:19:19 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 28.4 KB, free 366.0 MB) 21/08/10 11:19:19 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hp2:43944 (size: 28.4 KB, free: 366.3 MB) 21/08/10 11:19:19 INFO spark.SparkContext: Created broadcast 0 from textFile at RDDTest2.java:15 21/08/10 11:19:19 INFO mapred.FileInputFormat: Total input files to process : 1 21/08/10 11:19:19 INFO spark.SparkContext: Starting job: foreach at RDDTest2.java:18 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Got job 0 (foreach at RDDTest2.java:18) with 2 output partitions 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (foreach at RDDTest2.java:18) 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Parents of final stage: List() 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Missing parents: List() 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (file:///home/pyspark/idcard.txt MapPartitionsRDD[1] at textFile at RDDTest2.java:15), which has no missing parents 21/08/10 11:19:19 INFO memory.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 3.5 KB, free 366.0 MB) 21/08/10 11:19:19 INFO memory.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.1 KB, free 366.0 MB) 21/08/10 11:19:19 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on hp2:43944 (size: 2.1 KB, free: 366.3 MB) 21/08/10 11:19:19 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1164 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (file:///home/pyspark/idcard.txt MapPartitionsRDD[1] at textFile at RDDTest2.java:15) (first 15 tasks are for partitions Vector(0, 1)) 21/08/10 11:19:19 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks 21/08/10 11:19:19 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7889 bytes) 21/08/10 11:19:19 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7889 bytes) 21/08/10 11:19:19 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0) 21/08/10 11:19:19 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1) 21/08/10 11:19:19 INFO executor.Executor: Fetching spark://hp2:42332/jars/SparkStudy-1.0-SNAPSHOT.jar with timestamp 1628565557741 21/08/10 11:19:19 INFO client.TransportClientFactory: Successfully created connection to hp2/10.31.1.124:42332 after 32 ms (0 ms spent in bootstraps) 21/08/10 11:19:19 INFO util.Utils: Fetching spark://hp2:42332/jars/SparkStudy-1.0-SNAPSHOT.jar to /tmp/spark-7bb3e4ce-ce8f-420f-98cf-636f83d36185/userFiles-24e1e0f6-8248-46e5-8d05-cfb4fc65f603/fetchFileTemp212516759783248335.tmp 21/08/10 11:19:19 INFO executor.Executor: Adding file:/tmp/spark-7bb3e4ce-ce8f-420f-98cf-636f83d36185/userFiles-24e1e0f6-8248-46e5-8d05-cfb4fc65f603/SparkStudy-1.0-SNAPSHOT.jar to class loader 21/08/10 11:19:19 INFO rdd.HadoopRDD: Input split: file:/home/pyspark/idcard.txt:0+104 21/08/10 11:19:19 INFO rdd.HadoopRDD: Input split: file:/home/pyspark/idcard.txt:104+105 年龄: 65,性别:男; 年龄: 51,性别:男; 年龄: 52,性别:男; 年龄: 40,性别:男; 年龄: 42,性别:男; 年龄: 52,性别:男; 年龄: 52,性别:女; 年龄: 30,性别:男; 年龄: 45,性别:男; 年龄: 57,性别:女; 年龄: 41,性别:男; 21/08/10 11:19:19 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 752 bytes result sent to driver 21/08/10 11:19:19 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 752 bytes result sent to driver 21/08/10 11:19:19 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 201 ms on localhost (executor driver) (1/2) 21/08/10 11:19:19 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 218 ms on localhost (executor driver) (2/2) 21/08/10 11:19:19 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool 21/08/10 11:19:19 INFO scheduler.DAGScheduler: ResultStage 0 (foreach at RDDTest2.java:18) finished in 0.302 s 21/08/10 11:19:19 INFO scheduler.DAGScheduler: Job 0 finished: foreach at RDDTest2.java:18, took 0.387116 s 21/08/10 11:19:19 INFO server.AbstractConnector: Stopped Spark@2d72f75e{HTTP/1.1,[http/1.1]}{0.0.0.0:4040} 21/08/10 11:19:19 INFO ui.SparkUI: Stopped Spark web UI at http://hp2:4040 21/08/10 11:19:19 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped! 21/08/10 11:19:19 INFO memory.MemoryStore: MemoryStore cleared 21/08/10 11:19:19 INFO storage.BlockManager: BlockManager stopped 21/08/10 11:19:19 INFO storage.BlockManagerMaster: BlockManagerMaster stopped 21/08/10 11:19:19 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped! 21/08/10 11:19:19 INFO spark.SparkContext: Successfully stopped SparkContext 21/08/10 11:19:19 INFO util.ShutdownHookManager: Shutdown hook called 21/08/10 11:19:19 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-7bb3e4ce-ce8f-420f-98cf-636f83d36185 21/08/10 11:19:19 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-a8bc97f8-33cb-44c1-8d55-5a156b85add7 [root@hp2 javaspark]#

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

4.2 RDD的map操作

求txt文档文本总长度

代码:

package org.example; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.api.java.JavaRDD; import org.apache.spark.SparkConf; import org.apache.spark.api.java.function.Function; import org.apache.spark.api.java.function.VoidFunction; public class RDDTest3 { public static <integer> void main(String[] args){ SparkConf conf = new SparkConf().setAppName("RDDTest3").setMaster("local[2]"); JavaSparkContext sc = new JavaSparkContext(conf); JavaRDD<String> rdd1 = sc.textFile("file:///home/pyspark/idcard.txt"); JavaRDD<Integer> rdd2 = rdd1.map(s -> s.length()); Integer total = rdd2.reduce((a, b) -> a + b); System.out.println("\n" + "\n" + "\n"); System.out.println(total); } }

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

测试记录

[root@hp2 javaspark]# spark-submit \ > --class org.example.RDDTest3 \ > --master local[2] \ > /home/javaspark/SparkStudy-1.0-SNAPSHOT.jar 21/08/10 14:11:59 INFO spark.SparkContext: Running Spark version 2.4.0-cdh6.3.1 21/08/10 14:11:59 INFO logging.DriverLogger: Added a local log appender at: /tmp/spark-42222c27-84c0-43f6-ae47-36be1aa8bb42/__driver_logs__/driver.log 21/08/10 14:11:59 INFO spark.SparkContext: Submitted application: RDDTest3 21/08/10 14:11:59 INFO spark.SecurityManager: Changing view acls to: root 21/08/10 14:11:59 INFO spark.SecurityManager: Changing modify acls to: root 21/08/10 14:11:59 INFO spark.SecurityManager: Changing view acls groups to: 21/08/10 14:11:59 INFO spark.SecurityManager: Changing modify acls groups to: 21/08/10 14:11:59 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set() 21/08/10 14:11:59 INFO util.Utils: Successfully started service 'sparkDriver' on port 35539. 21/08/10 14:11:59 INFO spark.SparkEnv: Registering MapOutputTracker 21/08/10 14:11:59 INFO spark.SparkEnv: Registering BlockManagerMaster 21/08/10 14:11:59 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information 21/08/10 14:11:59 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up 21/08/10 14:11:59 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-a84fcf89-efde-4c5d-814d-957a8737f526 21/08/10 14:11:59 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB 21/08/10 14:11:59 INFO spark.SparkEnv: Registering OutputCommitCoordinator 21/08/10 14:11:59 INFO util.log: Logging initialized @1525ms 21/08/10 14:11:59 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: 2018-09-05T05:11:46+08:00, git hash: 3ce520221d0240229c862b122d2b06c12a625732 21/08/10 14:11:59 INFO server.Server: Started @1602ms 21/08/10 14:11:59 INFO server.AbstractConnector: Started ServerConnector@5400db36{HTTP/1.1,[http/1.1]}{0.0.0.0:4040} 21/08/10 14:11:59 INFO util.Utils: Successfully started service 'SparkUI' on port 4040. 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@21a5fd96{/jobs,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@72efb5c1{/jobs/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6d511b5f{/jobs/job,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4fbdc0f0{/jobs/job/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2ad3a1bb{/stages,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6bc28a83{/stages/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@324c64cd{/stages/stage,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5bd73d1a{/stages/stage/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@aec50a1{/stages/pool,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2555fff0{/stages/pool/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70d2e40b{/storage,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@120f38e6{/storage/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7a0e1b5e{/storage/rdd,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@702ed190{/storage/rdd/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@173b9122{/environment,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7c18432b{/environment/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7646731d{/executors,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@70e29e14{/executors/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3b1bb3ab{/executors/threadDump,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a4bef8{/executors/threadDump/json,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@40bffbca{/static,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@69adf72c{/,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@797501a{/api,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6c4f9535{/jobs/job/kill,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5bd1ceca{/stages/stage/kill,null,AVAILABLE,@Spark} 21/08/10 14:11:59 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hp2:4040 21/08/10 14:11:59 INFO spark.SparkContext: Added JAR file:/home/javaspark/SparkStudy-1.0-SNAPSHOT.jar at spark://hp2:35539/jars/SparkStudy-1.0-SNAPSHOT.jar with timestamp 1628575919713 21/08/10 14:11:59 INFO executor.Executor: Starting executor ID driver on host localhost 21/08/10 14:11:59 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 46347. 21/08/10 14:11:59 INFO netty.NettyBlockTransferService: Server created on hp2:46347 21/08/10 14:11:59 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy 21/08/10 14:11:59 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hp2, 46347, None) 21/08/10 14:11:59 INFO storage.BlockManagerMasterEndpoint: Registering block manager hp2:46347 with 366.3 MB RAM, BlockManagerId(driver, hp2, 46347, None) 21/08/10 14:11:59 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hp2, 46347, None) 21/08/10 14:11:59 INFO storage.BlockManager: external shuffle service port = 7337 21/08/10 14:11:59 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, hp2, 46347, None) 21/08/10 14:12:00 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@189b5fb1{/metrics/json,null,AVAILABLE,@Spark} 21/08/10 14:12:00 INFO scheduler.EventLoggingListener: Logging events to hdfs://nameservice1/user/spark/applicationHistory/local-1628575919757 21/08/10 14:12:00 INFO spark.SparkContext: Registered listener com.cloudera.spark.lineage.NavigatorAppListener 21/08/10 14:12:00 INFO logging.DriverLogger$DfsAsyncWriter: Started driver log file sync to: /user/spark/driverLogs/local-1628575919757_driver.log 21/08/10 14:12:01 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 290.4 KB, free 366.0 MB) 21/08/10 14:12:01 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 28.4 KB, free 366.0 MB) 21/08/10 14:12:01 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hp2:46347 (size: 28.4 KB, free: 366.3 MB) 21/08/10 14:12:01 INFO spark.SparkContext: Created broadcast 0 from textFile at RDDTest3.java:15 21/08/10 14:12:01 INFO mapred.FileInputFormat: Total input files to process : 1 21/08/10 14:12:01 INFO spark.SparkContext: Starting job: collect at RDDTest3.java:17 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Got job 0 (collect at RDDTest3.java:17) with 2 output partitions 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (collect at RDDTest3.java:17) 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Parents of final stage: List() 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Missing parents: List() 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[2] at map at RDDTest3.java:16), which has no missing parents 21/08/10 14:12:01 INFO memory.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.5 KB, free 366.0 MB) 21/08/10 14:12:01 INFO memory.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.6 KB, free 366.0 MB) 21/08/10 14:12:01 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on hp2:46347 (size: 2.6 KB, free: 366.3 MB) 21/08/10 14:12:01 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1164 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[2] at map at RDDTest3.java:16) (first 15 tasks are for partitions Vector(0, 1)) 21/08/10 14:12:01 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7889 bytes) 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7889 bytes) 21/08/10 14:12:01 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1) 21/08/10 14:12:01 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0) 21/08/10 14:12:01 INFO executor.Executor: Fetching spark://hp2:35539/jars/SparkStudy-1.0-SNAPSHOT.jar with timestamp 1628575919713 21/08/10 14:12:01 INFO client.TransportClientFactory: Successfully created connection to hp2/10.31.1.124:35539 after 30 ms (0 ms spent in bootstraps) 21/08/10 14:12:01 INFO util.Utils: Fetching spark://hp2:35539/jars/SparkStudy-1.0-SNAPSHOT.jar to /tmp/spark-42222c27-84c0-43f6-ae47-36be1aa8bb42/userFiles-04d965d1-4884-4055-a54a-d219f83bc4dd/fetchFileTemp1205702625799865151.tmp 21/08/10 14:12:01 INFO executor.Executor: Adding file:/tmp/spark-42222c27-84c0-43f6-ae47-36be1aa8bb42/userFiles-04d965d1-4884-4055-a54a-d219f83bc4dd/SparkStudy-1.0-SNAPSHOT.jar to class loader 21/08/10 14:12:01 INFO rdd.HadoopRDD: Input split: file:/home/pyspark/idcard.txt:104+105 21/08/10 14:12:01 INFO rdd.HadoopRDD: Input split: file:/home/pyspark/idcard.txt:0+104 21/08/10 14:12:01 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 808 bytes result sent to driver 21/08/10 14:12:01 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 763 bytes result sent to driver 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 203 ms on localhost (executor driver) (1/2) 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 220 ms on localhost (executor driver) (2/2) 21/08/10 14:12:01 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool 21/08/10 14:12:01 INFO scheduler.DAGScheduler: ResultStage 0 (collect at RDDTest3.java:17) finished in 0.298 s 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Job 0 finished: collect at RDDTest3.java:17, took 0.384907 s [18, 18, 18, 18, 18, 18, 18, 18, 18, 18, 18] 21/08/10 14:12:01 INFO spark.SparkContext: Starting job: reduce at RDDTest3.java:18 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Got job 1 (reduce at RDDTest3.java:18) with 2 output partitions 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Final stage: ResultStage 1 (reduce at RDDTest3.java:18) 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Parents of final stage: List() 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Missing parents: List() 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Submitting ResultStage 1 (MapPartitionsRDD[2] at map at RDDTest3.java:16), which has no missing parents 21/08/10 14:12:01 INFO memory.MemoryStore: Block broadcast_2 stored as values in memory (estimated size 4.8 KB, free 366.0 MB) 21/08/10 14:12:01 INFO memory.MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 2.7 KB, free 366.0 MB) 21/08/10 14:12:01 INFO storage.BlockManagerInfo: Added broadcast_2_piece0 in memory on hp2:46347 (size: 2.7 KB, free: 366.3 MB) 21/08/10 14:12:01 INFO spark.SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1164 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 1 (MapPartitionsRDD[2] at map at RDDTest3.java:16) (first 15 tasks are for partitions Vector(0, 1)) 21/08/10 14:12:01 INFO scheduler.TaskSchedulerImpl: Adding task set 1.0 with 2 tasks 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 1.0 (TID 2, localhost, executor driver, partition 0, PROCESS_LOCAL, 7889 bytes) 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 1.0 (TID 3, localhost, executor driver, partition 1, PROCESS_LOCAL, 7889 bytes) 21/08/10 14:12:01 INFO executor.Executor: Running task 0.0 in stage 1.0 (TID 2) 21/08/10 14:12:01 INFO executor.Executor: Running task 1.0 in stage 1.0 (TID 3) 21/08/10 14:12:01 INFO rdd.HadoopRDD: Input split: file:/home/pyspark/idcard.txt:104+105 21/08/10 14:12:01 INFO rdd.HadoopRDD: Input split: file:/home/pyspark/idcard.txt:0+104 21/08/10 14:12:01 INFO executor.Executor: Finished task 0.0 in stage 1.0 (TID 2). 755 bytes result sent to driver 21/08/10 14:12:01 INFO executor.Executor: Finished task 1.0 in stage 1.0 (TID 3). 755 bytes result sent to driver 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 20 ms on localhost (executor driver) (1/2) 21/08/10 14:12:01 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 1.0 (TID 3) in 20 ms on localhost (executor driver) (2/2) 21/08/10 14:12:01 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool 21/08/10 14:12:01 INFO scheduler.DAGScheduler: ResultStage 1 (reduce at RDDTest3.java:18) finished in 0.032 s 21/08/10 14:12:01 INFO scheduler.DAGScheduler: Job 1 finished: reduce at RDDTest3.java:18, took 0.034827 s 198 21/08/10 14:12:01 INFO spark.SparkContext: Invoking stop() from shutdown hook 21/08/10 14:12:01 INFO server.AbstractConnector: Stopped Spark@5400db36{HTTP/1.1,[http/1.1]}{0.0.0.0:4040} 21/08/10 14:12:01 INFO ui.SparkUI: Stopped Spark web UI at http://hp2:4040 21/08/10 14:12:02 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped! 21/08/10 14:12:02 INFO memory.MemoryStore: MemoryStore cleared 21/08/10 14:12:02 INFO storage.BlockManager: BlockManager stopped 21/08/10 14:12:02 INFO storage.BlockManagerMaster: BlockManagerMaster stopped 21/08/10 14:12:02 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped! 21/08/10 14:12:02 INFO spark.SparkContext: Successfully stopped SparkContext 21/08/10 14:12:02 INFO util.ShutdownHookManager: Shutdown hook called 21/08/10 14:12:02 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-db4c777c-23e1-4bb4-9c97-22cfdbfd6435 21/08/10 14:12:02 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-42222c27-84c0-43f6-ae47-36be1aa8bb42 [root@hp2 javaspark]#

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

4.3 RDD使用函数

上一例,只是将len函数封装成一个函数。

代码:

package org.example; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.api.java.JavaRDD; import org.apache.spark.SparkConf; import org.apache.spark.api.java.function.Function; import org.apache.spark.api.java.function.VoidFunction; public class RDDTest4 { public static void main(String[] args){ SparkConf conf = new SparkConf().setAppName("RDDTest3").setMaster("local[2]"); JavaSparkContext sc = new JavaSparkContext(conf); JavaRDD<String> rdd1 = sc.textFile("file:///home/pyspark/idcard.txt"); JavaRDD<Integer> rdd2 = rdd1.map(s -> len(s)); Integer total = rdd2.reduce((a, b) -> a + b); System.out.println("\n" + "\n" + "\n"); System.out.println(total); } public static int len(String s){ int str_length; str_length = s.length(); return str_length; } }

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

测试记录: