Spark: Structured Streaming in Practice ★★★★

Author: 羊村懒王 | 2024-06-12 22:46:36

http://spark.apache.org/docs/latest/structured-streaming-programming-guide.html#overview

Source (data sources)

- file source

- kafka source

  - used in real development

- socket source

  - used for the examples here

- rate source
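The rate source is handy for local testing because it needs no external system. The following is a minimal sketch, assuming a local Spark installation; the object name `RateSourceDemo`, the helper `rateStream`, and the default of 5 rows per second are illustrative choices, not part of the original article.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object RateSourceDemo {
  // Build a streaming DataFrame from the built-in "rate" source.
  // Each generated row has two columns: `timestamp` and `value` (an increasing Long).
  // The default rowsPerSecond of 5 is an arbitrary illustrative value.
  def rateStream(spark: SparkSession, rowsPerSecond: Long = 5L): DataFrame =
    spark.readStream
      .format("rate")
      .option("rowsPerSecond", rowsPerSecond)
      .load()

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.appName("rate-demo").master("local[*]").getOrCreate()
    spark.sparkContext.setLogLevel("WARN")

    // Print every new row to the console until the job is stopped manually.
    rateStream(spark).writeStream
      .format("console")
      .outputMode("append")
      .option("truncate", "false")
      .start()
      .awaitTermination()
  }
}
```

Swapping `format("rate")` for `format("socket")` plus the `host` and `port` options gives the socket source used in the rest of this article.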

The following example reads lines from the socket source and computes a running word count:

    package cn.hanjiaxiaozhi.structedstream

    import org.apache.spark.SparkContext
    import org.apache.spark.sql.streaming.Trigger
    import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

    /**
     * Author hanjiaxiaozhi
     * Date 2020/7/26 16:35
     * Desc Read socket data with Structured Streaming and run a WordCount
     */
    object WordCount {
      def main(args: Array[String]): Unit = {
        // 1. Prepare the Structured Streaming execution environment
        //    SparkContext     -> RDD
        //    SparkSession     -> DataFrame/Dataset
        //    StreamingContext -> DStream
        //    Use SparkSession here, because the Structured Streaming API is still DataFrame/Dataset
        val spark: SparkSession = SparkSession.builder.appName("wc").master("local[*]").getOrCreate()
        val sc: SparkContext = spark.sparkContext
        sc.setLogLevel("WARN")

        // 2. Read data from node01:9999
        val df: DataFrame = spark.readStream // stream processing with DataFrame/Dataset
          .option("host", "node01") // host to connect to
          .option("port", 9999)     // port to connect to
          .format("socket")         // use the socket source
          .load()                   // define the streaming DataFrame

        // 3. WordCount
        import spark.implicits._
        val ds: Dataset[String] = df.as[String]
        val result: Dataset[Row] = ds.flatMap(_.split(" ")).groupBy("value").count().sort($"count".desc)

        // 4. Output the result
        // result.show() // Wrong: this is batch output, but this is a streaming query. It fails with:
        // "Queries with streaming sources must be executed with writeStream.start()"
        result.writeStream
          .format("console")                  // write to the console
          .outputMode("complete")             // complete: output the whole result table on every trigger
          .trigger(Trigger.ProcessingTime(0)) // trigger interval; 0 means run as fast as possible
          .start()                            // start the query
          .awaitTermination()                 // block until the query terminates
        // The WordCount runs and is automatically aggregated with historical data;
        // the API is simpler to use than Spark Streaming (DStream).
      }
    }

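Operation

- According to the official documentation, the Structured Streaming API for processing streaming data is used in essentially the same way as the DataFrame/Dataset API covered earlier.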
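To illustrate that point, here is a small sketch in which one transformation is written once and can be applied to either a static or a streaming `Dataset[String]`. The object `StreamingOps` and the function `wordCounts` are hypothetical names introduced for this example; the `main` method runs it on static data only.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, SparkSession}
import org.apache.spark.sql.functions.{col, explode, split}

object StreamingOps {
  // Split each line on whitespace, drop empty tokens, and count each word.
  // Nothing here is streaming-specific: the same code accepts a batch or a streaming Dataset.
  def wordCounts(lines: Dataset[String]): DataFrame =
    lines.toDF("value")
      .select(explode(split(col("value"), "\\s+")).as("word"))
      .where(col("word") =!= "")
      .groupBy("word")
      .count()

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.appName("ops-demo").master("local[*]").getOrCreate()
    import spark.implicits._

    // Batch usage: an ordinary in-memory Dataset.
    wordCounts(Seq("hello spark", "hello").toDS()).show()

    spark.stop()
  }
}
```

For streaming input, the same function could be called as `StreamingOps.wordCounts(df.as[String])` on a DataFrame returned by `spark.readStream`; the result then has to be written with `writeStream` rather than shown with `show()`.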

Output

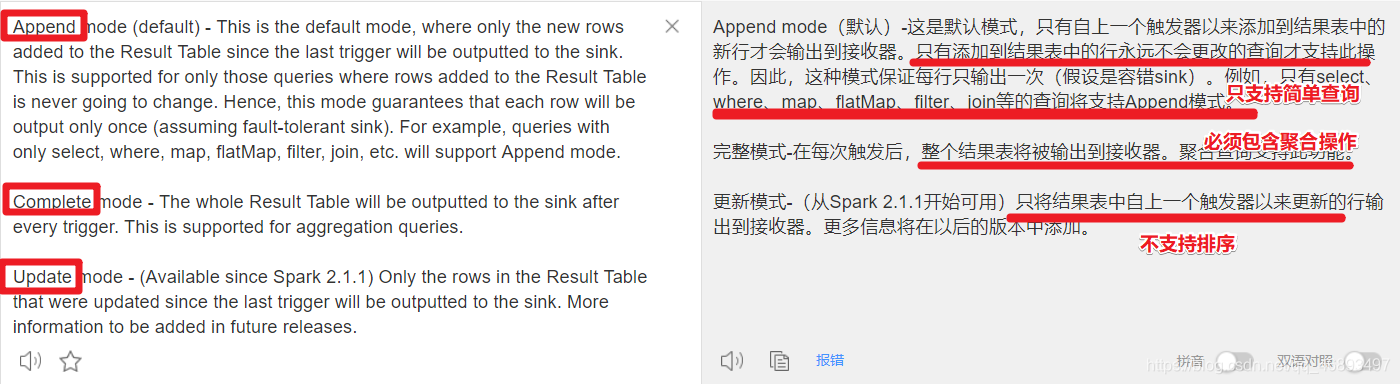

OutputModes (output modes)

    package cn.hanjiaxiaozhi.structedstream

    import org.apache.spark.SparkContext
    import org.apache.spark.sql.streaming.Trigger
    import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

    /**
     * Author hanjiaxiaozhi
     * Date 2020/7/26 16:35
     * Desc Read socket data with Structured Streaming and run a WordCount
     */
    object WordCount {
      def main(args: Array[String]): Unit = {
        // 1. Prepare the Structured Streaming execution environment
        //    SparkContext     -> RDD
        //    SparkSession     -> DataFrame/Dataset
        //    StreamingContext -> DStream
        //    Use SparkSession here, because the Structured Streaming API is still DataFrame/Dataset
        val spark: SparkSession = SparkSession.builder.appName("wc").master("local[*]").getOrCreate()
        val sc: SparkContext = spark.sparkContext
        sc.setLogLevel("WARN")

        // 2. Read data from node01:9999
        val df: DataFrame = spark.readStream // stream processing with DataFrame/Dataset
          .option("host", "node01") // host to connect to
          .option("port", 9999)     // port to connect to
          .format("socket")         // use the socket source
          .load()                   // define the streaming DataFrame

        // 3. WordCount
        import spark.implicits._
        val ds: Dataset[String] = df.as[String]
        val result: Dataset[Row] = ds.flatMap(_.split(" ")).groupBy("value").count().sort($"count".desc)

        // 4. Output the result
        // result.show() // Wrong: this is batch output, but this is a streaming query. It fails with:
        // "Queries with streaming sources must be executed with writeStream.start()"
        result.writeStream
          .format("console") // write to the console
          // complete: output the whole result table on every trigger; the query must contain an aggregation
          .outputMode("complete")
          // append (the default): output only newly added rows; fine for simple queries,
          // but not supported for streaming aggregations without a watermark
          //.outputMode("append")
          // update: output only the rows that changed; sorting is not supported in this mode
          // ("Sorting is not supported on streaming DataFrames/Datasets")
          //.outputMode("update")
          .trigger(Trigger.ProcessingTime(0)) // trigger interval; 0 means run as fast as possible
          .start()                            // start the query
          .awaitTermination()                 // block until the query terminates
        // The WordCount runs and is automatically aggregated with historical data;
        // the API is simpler to use than Spark Streaming (DStream).
      }
    }

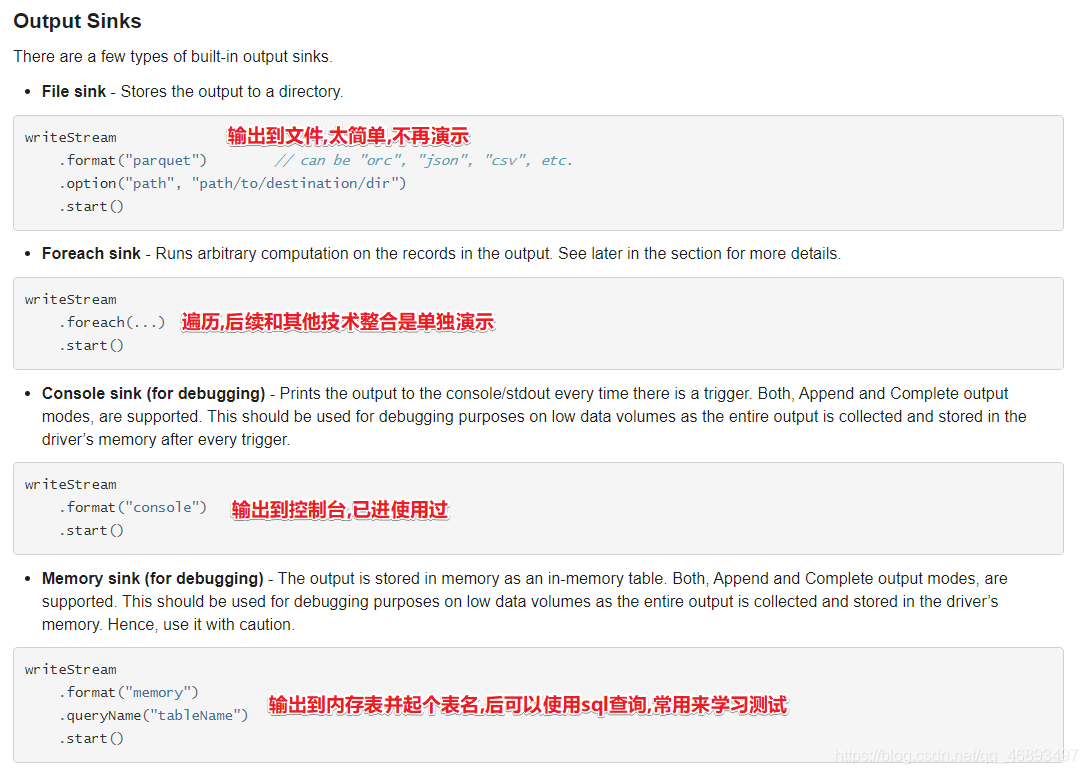
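Since update mode rejects sorting, a query that wants to emit only the changed rows has to drop the `sort` step. The sketch below shows one way this might look, reusing the `node01:9999` socket from the article; the object `UpdateModeDemo` and the helper `counts` are hypothetical names introduced here.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, SparkSession}

object UpdateModeDemo {
  // Word count without a sort, so the result is compatible with update mode.
  def counts(spark: SparkSession, lines: Dataset[String]): DataFrame = {
    import spark.implicits._
    lines.flatMap(_.split(" ")).groupBy("value").count()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.appName("update-demo").master("local[*]").getOrCreate()
    spark.sparkContext.setLogLevel("WARN")
    import spark.implicits._

    val lines: Dataset[String] = spark.readStream
      .format("socket")
      .option("host", "node01")
      .option("port", 9999)
      .load()
      .as[String]

    // update: each trigger prints only the words whose count changed in that batch.
    counts(spark, lines).writeStream
      .format("console")
      .outputMode("update")
      .start()
      .awaitTermination()
  }
}
```

Compared with complete mode, the console output stays small as the number of distinct words grows, at the cost of no longer seeing the full, ordered table on each trigger.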

OutputSinks (output sinks)

    package cn.hanjiaxiaozhi.structedstream

    import org.apache.spark.SparkContext
    import org.apache.spark.sql.streaming.{StreamingQuery, Trigger}
    import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

    /**
     * Author hanjiaxiaozhi
     * Date 2020/7/26 16:35
     * Desc Read socket data with Structured Streaming and run a WordCount
     */
    object WordCount2 {
      def main(args: Array[String]): Unit = {
        // 1. Prepare the Structured Streaming execution environment
        //    SparkContext     -> RDD
        //    SparkSession     -> DataFrame/Dataset
        //    StreamingContext -> DStream
        //    Use SparkSession here, because the Structured Streaming API is still DataFrame/Dataset
        val spark: SparkSession = SparkSession.builder.appName("wc").master("local[*]").getOrCreate()
        val sc: SparkContext = spark.sparkContext
        sc.setLogLevel("WARN")

        // 2. Read data from node01:9999
        val df: DataFrame = spark.readStream // stream processing with DataFrame/Dataset
          .option("host", "node01") // host to connect to
          .option("port", 9999)     // port to connect to
          .format("socket")         // use the socket source
          .load()                   // define the streaming DataFrame

        // 3. WordCount
        import spark.implicits._
        val ds: Dataset[String] = df.as[String]
        val result: Dataset[Row] = ds.flatMap(_.split(" ")).groupBy("value").count().sort($"count".desc)

        // 4. Output the result to an in-memory table so it can be queried with SQL afterwards
        val query: StreamingQuery = result.writeStream
          .format("memory")      // write to an in-memory table
          .queryName("t_memory") // name of that table
          .outputMode("complete")
          //.trigger(Trigger.ProcessingTime(0)) // if omitted, the default is also 0
          .start()               // start the query
        // Do not call awaitTermination() here: it blocks, so the SQL queries below would never run.

        // Poll the in-memory table for as long as the streaming query is running.
        // (The original looped on `while (true)`, which made the final awaitTermination() unreachable.)
        while (query.isActive) {
          Thread.sleep(5000) // query the in-memory table every 5 s, convenient for testing
          spark.sql("select * from t_memory").show()
        }
        query.awaitTermination() // returns once the query has stopped, or rethrows its failure
      }
    }
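Besides the console and memory sinks used so far, results can also be written to files. The following is a sketch only: the object `FileSinkDemo`, the helper `startFileSink`, and the `/tmp/wordcount/...` paths are assumptions made for illustration. The file sink works in append mode, so this example writes the split words themselves rather than aggregated counts.

```scala
import org.apache.spark.sql.{Dataset, SparkSession}
import org.apache.spark.sql.streaming.StreamingQuery

object FileSinkDemo {
  // Split lines into words and append them to Parquet files under outputDir.
  // The file sink needs a checkpoint location to track what has been written.
  def startFileSink(spark: SparkSession,
                    lines: Dataset[String],
                    outputDir: String,
                    checkpointDir: String): StreamingQuery = {
    import spark.implicits._
    lines.flatMap(_.split(" "))
      .writeStream
      .format("parquet")
      .option("path", outputDir)
      .option("checkpointLocation", checkpointDir)
      .outputMode("append")
      .start()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.appName("file-sink-demo").master("local[*]").getOrCreate()
    spark.sparkContext.setLogLevel("WARN")
    import spark.implicits._

    val lines: Dataset[String] = spark.readStream
      .format("socket")
      .option("host", "node01")
      .option("port", 9999)
      .load()
      .as[String]

    // Hypothetical local paths; replace with directories that suit your environment.
    startFileSink(spark, lines, "/tmp/wordcount/out", "/tmp/wordcount/checkpoint")
      .awaitTermination()
  }
}
```

The written files can later be read back as a normal batch DataFrame with `spark.read.parquet(...)` pointed at the same output directory.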
