- 1PyTorch版YOLOv4训练自己的数据集---基于Google Colab_whisper训练自己数据

- 2神经网络基础 —— Seq2Seq的应用_seq1的优先级高于seq2怎么用

- 3Python实战:史上最全| 圣诞树画法_python 3d粒子圣诞树

- 4springboot2.3.x整合JWT_springboot 整合jwt

- 5大语言模型原理与工程实践:适配器微调

- 6数据分析软件之SPSS、Stata、Matlab_spss和stata

- 7【Vue3】Axios简易二次封装+token+跨域问题+接口同步调用使用。_vue3 axios token

- 8ERR! configure error gyp ERR! stack Error: Can't find Python executable "python"_gyp err! configure error gyp err! stack error: can

- 9个人博客系统_个人博客应该有哪些功能

- 10【网路安全 --- pikachu靶场安装】超详细的pikachu靶场安装教程(提供靶场代码及工具)

机器学习笔记(7)——决策树&随机森林代码_决策树代码

赞

踩

机器学习笔记(7)——决策树&随机森林代码

本文部分图片与文字来源网络或学术论文,仅供学习使用,持续修改完善中。

目录

1、决策树

决策树(Decision Tree)是一种非参数的有监督学习方法,它能够从一系列有特征和标签的数据中总结出决策规 则,并用树状图的结构来呈现这些规则,以解决分类和回归问题。

python写决策树

- import math

- import random

- from collections import Counter

- import pandas as pd

- import numpy as np

- import pygraphviz as pyg

-

- def calAccuracy(pred,label):

- """

- calAccuracy函数:计算准确率

- :param pred:预测值

- :param label:真实值

- :returns acc:正确率

- :returns p:查准率

- :returns r:查全率

- :returns f1:F1度量

- """

- num = len(pred)#样本个数

- TP = 0

- FN = 0

- FP = 0

- TN = 0

- '''分别计算TP FP FN TN'''

- for i in range(num):

- if pred[i] == 1 and label[i]==1:

- TP += 1

- elif pred[i] == 1 and label[i]==0:

- FP += 1

- elif pred[i] == 0 and label[i]==1:

- FN += 1

- elif pred[i] == 0 and label[i]==0:

- TN += 1

- '''计算准确率 查准率 查全率 F1度量'''

- acc = (TN+TP) / num

- if TP+FP==0:

- p = 0

- else:

- p = TP/(TP+FP)

- if TP+FN == 0:

- r = 0

- else:

- r = TP/(TP+FN)

- if p+r==0:

- f1 = 0

- else:

- f1 = 2*p*r/(p+r)

- return acc,p,r,f1

-

- def get_dataset(filename ="watermelon.txt"):

- """

- get_dataset:从文件中读取数据,将其转化为指定格式的dataset和col_name

- :returns dataset:数据+标签 col_name:列名,即有哪些属性

- """

- df = pd.read_csv(filename)

- #下面分别提取标签和数据:一行对应一个数据

- dataset = df.values.tolist()

- col_name = df.columns.values[0:-1].tolist()#剔除最后一列

- return dataset,col_name

-

- class Node(object):

- #决策树节点类

- def __init__(self):

- self.attr = None # 决策节点属性

- self.value = None # 节点与父节点的连接属性

- self.isLeaf = False # 是否为叶节点 T or F

- self.label = 0 # 叶节点对应标签

- self.parent = None # 父节点

- self.children = [] # 子列表

-

- @staticmethod

- def set_leaf(self, label):

- """

- :param self:

- :param label: 叶子节点分类值 int

- :return:

- """

- self.isLeaf = True

- self.label= label

-

- class DataSet(object):

- """

- 数据集类

- """

- def __init__(self, data_set, col_name):

- """

- :param col_name 列名 list[]

- :param data_set 数据集 list[list[]]:

- """

- self.num = len(data_set) #获得数据集大小

- self.data_set = data_set

- self.data = [example[:-1] for example in data_set if self.data_set[0]] #提取数据剔除标签

- self.labels = [int(example[-1]) for example in data_set if self.data_set[0]] # 类别向量集合

- self.col_name = col_name # 特征向量对应的标签

-

- def get_mostLabel(self):

- """

- 得到最多的类别

- """

- class_dict = Counter(self.labels)

- return max(class_dict)

-

- def is_OneClass(self):

- """

- 判断当前的data_set是否属于同一类别,如果是返回类别,如果不是,返回-1

- :return: class or -1

- """

- if len(np.unique(self.labels))==1:#只有一个类别

- return self.labels[0]

- else:

- return -1

-

- def group_feature(self, index, feature):

- """

- group_feature:将 data[index] 等于 feature的值放在一起,同时去除该列,保证不会重复计算

- :param index:当前处理特征的索引

- :param feature:处理的特征值

- :return:聚集后的数据经过打包返回

- """

- grouped_data_set = [example[:index]+example[index+1:] for example in self.data_set if example[index] == feature]

- sub_col = self.col_name.copy() # list 传参可能改变原参数值,故需要复制出新的labels避免影响原参数

- del(sub_col[index]) #删除划分属性

- return DataSet(grouped_data_set, sub_col)

-

- def is_sameFeature(self):

- """

- 判断数据集的所有特征是否相同

- :return: Ture or False

- """

- example = self.data[0]

- for index in range(self.num):

- if self.data[index] != example:

- return False

- return True

-

- def cal_ent(self):

- """

- 计算信息熵

- :return:float 信息熵

- """

- class_dict = Counter(self.labels)

- # 计算信息熵

- ent = 0

- for key in class_dict.keys():

- p = float(class_dict[key]) / self.num

- ent -= p*math.log(p, 2)

- return ent

-

- def split_continuous_feature(self, index, mid):

- """

- 划分连续型数据

- :param index:是哪一列特征

- :param mid:考察的中位数

- :return:经过DataSet类封装的数据集

- """

- left_feature_list = []

- right_feature_list = []

- for example in self.data_set:#遍历每一行数据

- if example[index] <= mid:

- left_feature_list.append(example) # 此处不用del第axis个标签,连续变量的属性可以作为后代节点的划分属性

- else:

- right_feature_list.append(example)

- return DataSet(left_feature_list, self.col_name), DataSet(right_feature_list, self.col_name)

-

- def choose_byGain(self):

- """

- 根据信息增益选择最佳列属性划分

- :return: 最佳划分属性索引,int

- """

- num_features = len(self.data[0])#特征数量

- max_Gain = -999#最大增益率

- best_feature_index = -1

- best_col = ""

- for index in range(num_features):

- new_ent = 0

- feature_list = [example[index] for example in self.data]#提取每一行特征

- if type(feature_list[0]).__name__ == "float": # 如果是连续值

- sorted_feature_list = sorted(feature_list)#对连续值排序

- split_list = []

- for k in range(self.num - 1):#考察选取哪一个中位数点

- mid_feature = (sorted_feature_list[k] + sorted_feature_list[k+1])/2#计算中位数

- split_list.append(mid_feature)

- left_feature_list, right_feature_list = self.split_continuous_feature(index, mid_feature)

- p_left = left_feature_list.num / float(self.num)

- p_right = right_feature_list.num / float(self.num)

- new_ent = p_left*left_feature_list.cal_ent() + p_right*right_feature_list.cal_ent()#划分后只有两个类别

- gain = self.cal_ent() - new_ent # 计算增益率

- if gain > max_Gain:

- max_Gain = gain

- best_feature_index = index

- best_col = self.col_name[best_feature_index] + "<=" + str(mid_feature)

- else:

- unique_feature = np.unique(feature_list)#剔除重复出现的特征减少计算量

- for feature in unique_feature:

- sub_data_set = self.group_feature(index,feature)

- p = sub_data_set.num/float(self.num)#同一个个数比例

- new_ent += p*sub_data_set.cal_ent()

- gain = self.cal_ent()-new_ent#计算增益率

- if gain > max_Gain:

- max_Gain = gain

- best_feature_index = index

- best_col = self.col_name[best_feature_index]

- return best_feature_index, best_col

-

- def choose_byGain_ratio(self):

- """

- 根据信息增益率选择最佳列属性划分

- :return: 最佳划分属性索引,int

- """

- gain_list = []#记录各个属性的信息增益

- gainRatio_list = []#记录各个属性的增益率

- col_list = []

- num_features = len(self.data[0])#特征数量

- best_col = ""

- for index in range(num_features):

- new_ent = 0

- feature_list = [example[index] for example in self.data]#提取每一行特征

- if type(feature_list[0]).__name__ == "float": # 如果是连续值

- sorted_feature_list = sorted(feature_list)#对连续值排序

- split_list = []

- maxGain = -999

- for k in range(self.num - 1):#考察选取哪一个中位数点

- mid_feature = (sorted_feature_list[k] + sorted_feature_list[k+1])/2#计算中位数

- split_list.append(mid_feature)

- left_feature_list, right_feature_list = self.split_continuous_feature(index, mid_feature)

- p_left = left_feature_list.num / float(self.num)

- p_right = right_feature_list.num / float(self.num)

- new_ent = p_left*left_feature_list.cal_ent() + p_right*right_feature_list.cal_ent()#划分后只有两个类别

- gain = self.cal_ent() - new_ent # 计算

- if gain>maxGain:

- maxGain = gain

- best_feature_index = index

- best_col = self.col_name[best_feature_index] + "<=" + str(mid_feature)

- IV =p_left*math.log(p_left,2)+math.log(p_right,2)*p_right

- IV = 0-IV#取相反数

- Gain_ratio = gain/IV#计算信息增益

- gain_list.append(maxGain)

- gainRatio_list.append(Gain_ratio)

- col_list.append(best_col)

- else:

- unique_feature = np.unique(feature_list)#剔除重复出现的特征减少计算量

- IV = 0

- for feature in unique_feature:

- sub_data_set = self.group_feature(index,feature)

- p = sub_data_set.num/float(self.num)#同一个个数比例

- new_ent += p*sub_data_set.cal_ent()

- IV += p*math.log(p,2)

- gain = self.cal_ent()-new_ent#计算信息增益

- if IV==0:

- IV=0.001

- IV = 0 - IV # 取相反数

- Gain_ratio = gain / IV#计算增益率

- gain_list.append(gain)

- gainRatio_list.append(Gain_ratio)

- col_list.append(self.col_name[index])

- av_gain = np.average(gain_list)#求均值

- dic_table = {}#信息增益和增益率的对照字典,key为增益率,值为信息增益和index的元祖

- for i in range(num_features):

- dic_table[gainRatio_list[i]] = (gain_list[i],i)

- gainRatio_list.sort(reverse=True)#对增益率排序

- for ratio in gainRatio_list:

- if dic_table[ratio][0]>=av_gain:#高于平均值

- index = dic_table[ratio][1]

- return index, col_list[index]

- else:

- continue

-

-

- from pygraphviz import AGraph

-

- class DecisionTree(object):

- """

- 决策树

- """

- def __init__(self, data_set:DataSet,strategy):

- self.root = self.bulidTree(data_set,strategy)#头结点

- def bulidTree(self,data_set:DataSet,strategy):

- """

- :param data_set: 建立树用到的数据集

- :param strategy:选择建立树的策略包含--信息增益:gain --增益率gain-ratio

- :return:建立树的头结点

- """

- node = Node()

- if data_set.is_OneClass() != -1: # 如果数据集中样本属于同一类别

- node.isLeaf = True # 标记为叶子节点

- node.label = data_set.is_OneClass() # 标记叶子节点的类别为此类

- return node

- if len(data_set.data) == 0 or data_set.is_sameFeature(): # 如果数据集为空或者数据集的特征向量相同

- node.isLeaf = True # 标记为叶子节点

- node.label = data_set.get_mostLabel() # 标记叶子节点的类别为数据集中样本数最多的类

- return node

- if strategy=='gain_ratio':

- best_feature_index, best_col = data_set.choose_byGain_ratio() # 最佳划分数据集的标签索引,最佳划分数据集的列名

- elif strategy=='gain':

- best_feature_index, best_col = data_set.choose_byGain()

- else:

- print("输入策略有误!!!")

- return node

- node.attr = best_col # 设置非叶节点的属性为最佳划分数据集的列名

- if u'<=' in node.attr: #如果当前属性是连续属性

- mid_value = float(best_col.split('<=')[1]) # 获得比较值

- left_data_set, right_data_set = data_set.split_continuous_feature(best_feature_index, mid_value)

- left_node = Node()

- right_node = Node()

- '''分别求左右子树'''

- if left_data_set.num == 0: # 如果子数据集为空

- left_node.isLeaf = True # 标记新节点为叶子节点

- left_node.label = data_set.get_mostLabel() # 类别为父数据集中样本数最多的类

- else:

- left_node = self.bulidTree(left_data_set,strategy)#递归生成新的树

- if right_data_set.num == 0: # 如果子数据集为空

- right_node.isLeaf = True # 标记新节点为叶子节点

- right_node.label = data_set.get_mostLabel() # 类别为父数据集中样本数最多的类

- else:

- right_node = self.bulidTree(right_data_set,strategy)

- left_node.value = '小于等于'

- right_node.value = '大于'

- node.children.append(left_node)#记录孩子

- node.children.append(right_node)#记录又孩子

- else:

- feature_set = np.unique([example[best_feature_index] for example in data_set.data]) #最佳划分标签的可取值集合

- for feature in feature_set:

- new_node = Node()

- sub_data_set = data_set.group_feature(best_feature_index, feature) # 划分数据集并返回子数据集

- if sub_data_set.num == 0:# 如果子数据集为空

- new_node.isLeaf = True # 标记新节点为叶子节点

- new_node.label = data_set.get_mostLabel() # 类别为父数据集中样本数最多的类

- else:

- new_node = self.bulidTree(sub_data_set,strategy)

- new_node.value = feature # 设置节点与父节点间的连接属性

- new_node.parent = node # 设置父节点

- node.children.append(new_node)

- return node

-

- def save(self, filename):

- g = pyg.AGraph(strict=False, directed=True)

- g.add_node(self.root.attr, fontname="Microsoft YaHei")

- self._save(g, self.root)

- g.layout(prog='dot')

- g.draw(filename)

-

- def _save(self, graph, root):

- if len(root.children) > 0:

- for node in root.children:

- if node.attr != None:

- graph.add_node(node.attr, fontname="Microsoft YaHei")

- graph.add_edge(root.attr, node.attr, label=node.value, fontname="Microsoft YaHei")

- else:

- graph.add_node(node.label, shape="box", fontname="Microsoft YaHei")

- graph.add_edge(root.attr, node.label, label=node.value, fontname="Microsoft YaHei")

- self._save(graph, node)

-

- def predict_one(self, test_x, node, col_name) -> int:

- if (len(node.children) == 0):

- return node.label

- else:

- if u'<=' not in node.attr: # 处理离散数据

- index = col_name.index(node.attr)

- for chil in node.children:

- if chil.value == None: # 是一个叶子节点

- return chil.label

- if chil.value == test_x[index]:

- return self.predict_one(test_x, chil, col_name)

- else:

- col_ = node.attr.split("<=")[0]

- value = eval(node.attr.split("<=")[1])

- index = col_name.index(col_) # 这个元素的索引是啥

- if test_x[index] <= value: # 小于划分节点,在左子树

- return self.predict_one(test_x, node.children[0], col_name)

- else:

- return self.predict_one(test_x, node.children[1], col_name)

-

- def predict(self, test_data, col_name):

- pred = []

- for data in test_data:

- pred_y = self.predict_one(data, self.root, col_name)

- pred.append(pred_y)

- return pred

-

-

- dataset,col_name = get_dataset()

- # print(dataset)

- # print(col_name)

-

- random.shuffle(dataset) #将序列中的元素随机打乱

- train_data, test_data = dataset[0:12],dataset[12:17] #前12列为训练数据,13-17的5列为测试数据

-

- test_label = [test_data[i][-1] for i in range(len(test_data))] #5个测试数据的label

-

- Train_Data_set = DataSet(train_data,col_name)

-

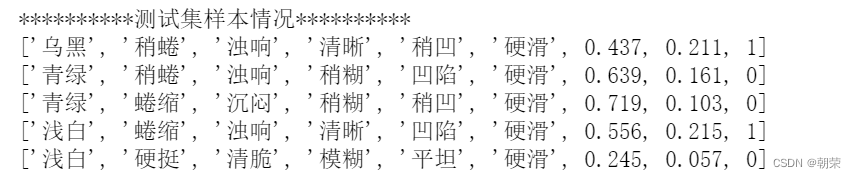

- print("*" *10 + "测试集样本情况"+ "*" *10)

- for data in test_data:

- print(data)

-

-

ID4.5 iterative dichotomiser 迭代二分器,使用的信息增益率划分

- print("*"*10+"信息增益率"+"*"*10)

- decisionTree = DecisionTree(Train_Data_set,strategy ='gain_ratio')

- decisionTree.save('watermelon_gainRatio.jpg')

- pred = decisionTree.predict(test_data,col_name)

-

- print("预测值:",pred)

- acc, p, r, f1 = calAccuracy(pred,test_label)

- print("正确率:{:.2%}\t查准率:{:.4f}\t查全率:{:.4f}\tF1:{:.4f}".format(acc,p,r,f1))

ID3 使用的信息增益划分

-

- print("*"*10+"信息增益"+"*"*10)

- decisionTree = DecisionTree(Train_Data_set,strategy ='gain')

- decisionTree.save('watermelon_gain.jpg')

- pred = decisionTree.predict(test_data,col_name)

-

- print("预测值:",pred)

- acc, p, r, f1 = calAccuracy(pred,test_label)

- print("正确率:{:.2%}\t查准率:{:.4f}\t查全率:{:.4f}\tF1:{:.4f}".format(acc,p,r,f1))

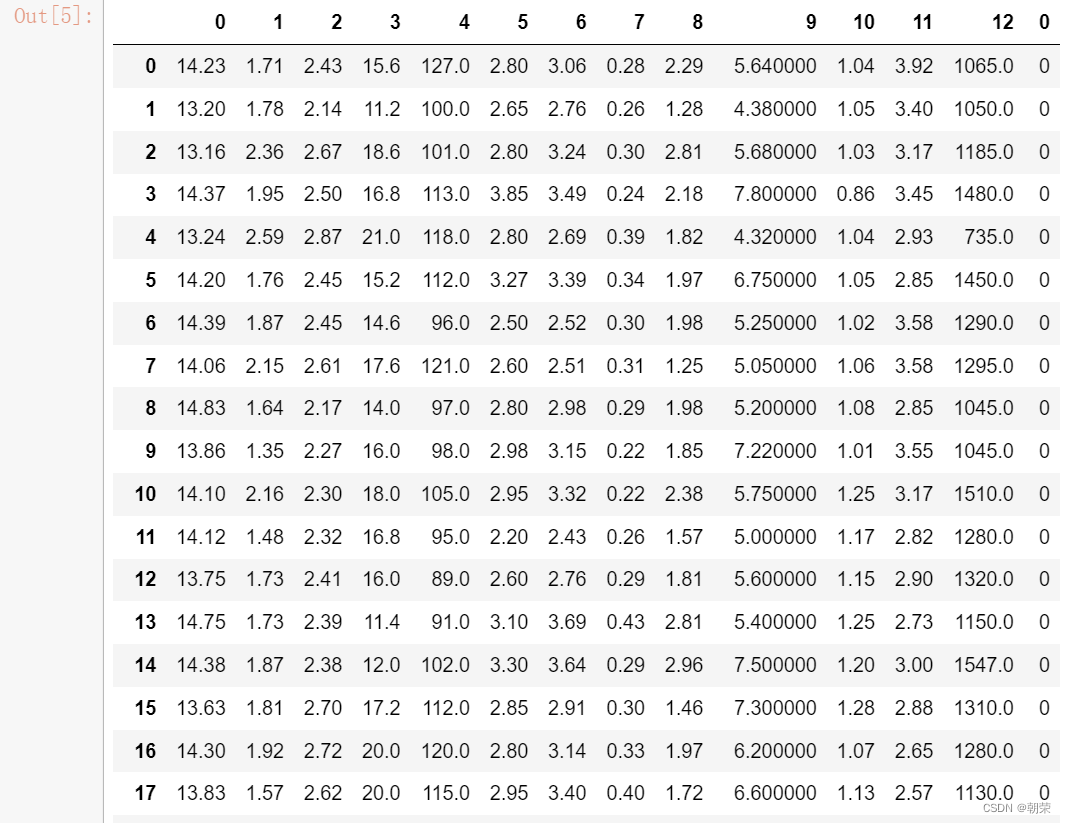

sklearn实现决策树分类器

- from sklearn import tree

- from sklearn.datasets import load_wine

- from sklearn.model_selection import train_test_split

-

-

- wine = load_wine()

- wine.data.shape

- wine.target

-

- import pandas as pd

- pd.concat([pd.DataFrame(wine.data),pd.DataFrame(wine.target)],axis=1)

- Xtrain, Xtest, Ytrain, Ytest = train_test_split(wine.data,wine.target,test_size=0.3)

-

- clf = tree.DecisionTreeClassifier(criterion="entropy")

- clf = clf.fit(Xtrain, Ytrain)

- score = clf.score(Xtest, Ytest) #返回预测的准确度accuracy

-

- feature_name = ['酒精','苹果酸','灰','灰的碱性','镁','总酚','类黄酮','非黄烷类酚类','花青素','颜色强度','色调','od280/od315稀释葡萄酒','脯氨酸']

-

- import graphviz

- dot_data = tree.export_graphviz(clf, out_file="Tree.dot"

- ,feature_names = feature_name

- ,class_names=["琴酒","雪莉","贝尔摩德"]

- ,filled=True

- ,rounded=True

- )

- clf.feature_importances_

-

- [*zip(feature_name,clf.feature_importances_)]

-

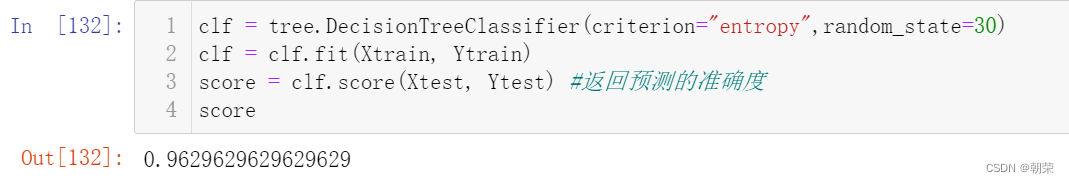

- clf = tree.DecisionTreeClassifier(criterion="entropy",random_state=30)

- clf = clf.fit(Xtrain, Ytrain)

- score = clf.score(Xtest, Ytest) #返回预测的准确度

- score

参数:

random_state:用来设置分枝中的随机模式的参数,默认None,在高维度时随机性会表现更明显,低维度的数据 (比如鸢尾花数据集),随机性几乎不会显现。输入任意整数,会一直长出同一棵树,让模型稳定下来。

splitter:也是用来控制决策树中的随机选项的,有两种输入值,输入”best",决策树在分枝时虽然随机,但是还是会 优先选择更重要的特征进行分枝(重要性可以通过属性feature_importances_查看),输入“random",决策树在 分枝时会更加随机,树会因为含有更多的不必要信息而更深更大,并因这些不必要信息而降低对训练集的拟合。这 也是防止过拟合的一种方式。

剪枝操作:

max_depth: 限制树的最大深度,超过设定深度的树枝全部剪掉 这是用得最广泛的剪枝参数,在高维度低样本量时非常有效。决策树多生长一层,对样本量的需求会增加一倍,所 以限制树深度能够有效地限制过拟合。在集成算法中也非常实用。实际使用时,建议从=3开始尝试,看看拟合的效 果再决定是否增加设定深度。

min_samples_leaf限定:一个节点在分枝后的每个子节点都必须包含至少min_samples_leaf个训练样本,否则分 枝就不会发生,或者,分枝会朝着满足每个子节点都包含min_samples_leaf个样本的方向去发生。

- clf = tree.DecisionTreeClassifier(criterion="entropy"

- ,random_state=30

- ,splitter="random"

- ,max_depth=3

- # ,min_samples_leaf=10

- # ,min_samples_split=25

- )

- clf = clf.fit(Xtrain, Ytrain)

- dot_data = tree.export_graphviz(clf

- ,feature_names= feature_name

- ,class_names=["琴酒","雪莉","贝尔摩德"]

- ,filled=True

- ,rounded=True

- )

- graph = graphviz.Source(dot_data)

- graph

-

- score = clf.score(Xtest, Ytest)

- score

-

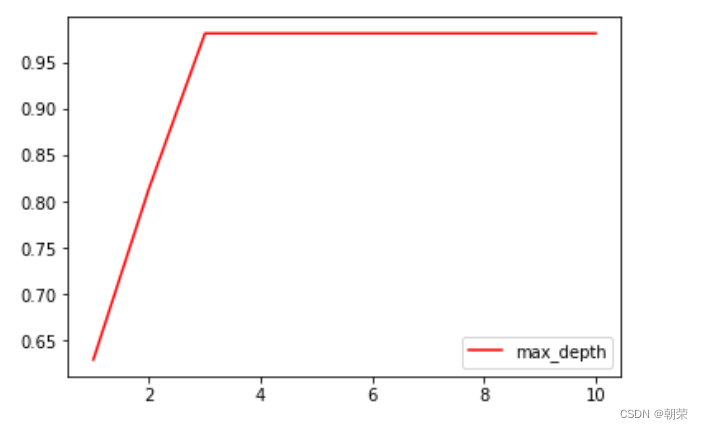

- import matplotlib.pyplot as plt

- test = []

- for i in range(10):

- clf = tree.DecisionTreeClassifier(max_depth=i+1

- ,criterion="entropy"

- ,random_state=30

- ,splitter="random"

- )

- clf = clf.fit(Xtrain, Ytrain)

- score = clf.score(Xtest, Ytest)

- test.append(score)

- plt.plot(range(1,11),test,color="red",label="max_depth")

- plt.legend()

- plt.show()

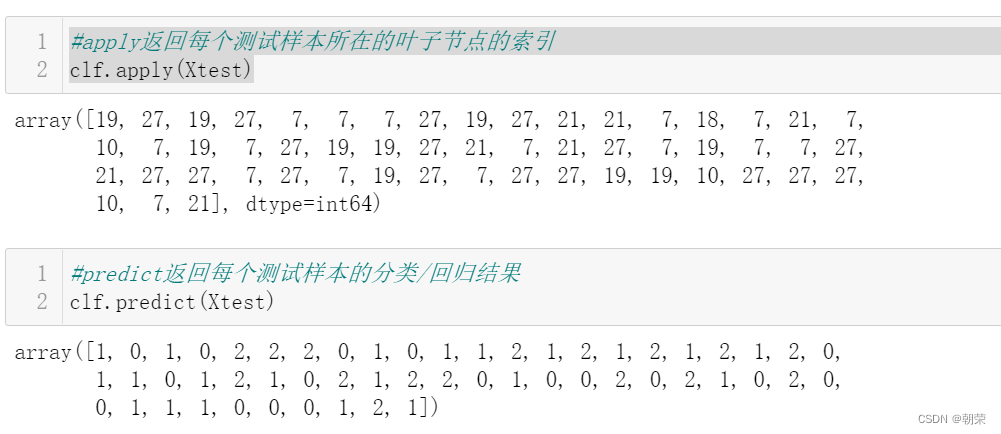

- #apply返回每个测试样本所在的叶子节点的索引

- clf.apply(Xtest)

-

-

- #predict返回每个测试样本的分类/回归结果

- clf.predict(Xtest)

sklearn实现决策树回归器

- from sklearn.datasets import load_boston

- from sklearn.model_selection import cross_val_score

- from sklearn.tree import DecisionTreeRegressor

-

- boston = load_boston()

-

- #regressor = DecisionTreeRegressor(random_state=0) #实例化

- #cross_val_score(regressor, boston.data, boston.target, cv=10).mean()

-

-

- regressor = DecisionTreeRegressor(random_state=0)

- cross_val_score(regressor, boston.data, boston.target, cv=10,

- scoring = "neg_mean_squared_error"

- )

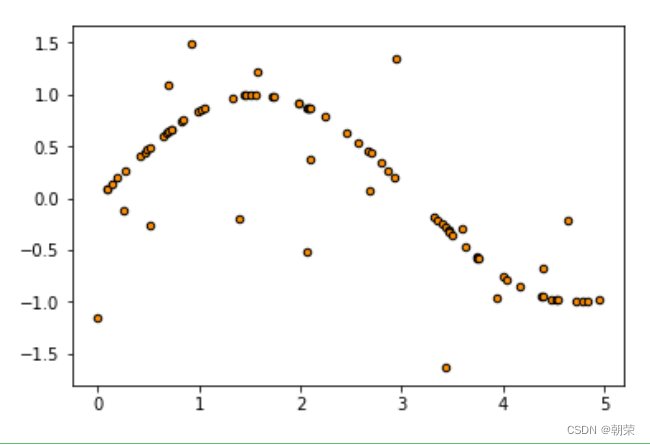

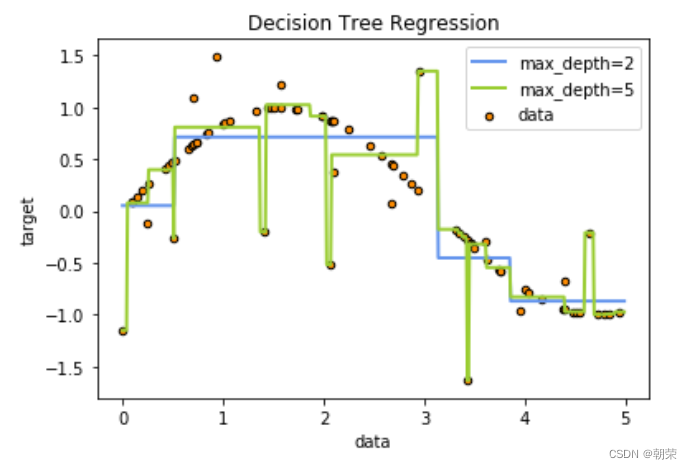

一维回归的图像绘制:

- import numpy as np

- from sklearn.tree import DecisionTreeRegressor

- import matplotlib.pyplot as plt

-

- rng = np.random.RandomState(1) #随机数种子

- X = np.sort(5 * rng.rand(80,1), axis=0) #生成0~5之间随机的x的取值

- y = np.sin(X).ravel() #生成正弦曲线

- y[::5] += 3 * (0.5 - rng.rand(16)) #在正弦曲线上加噪声

-

- plt.figure()

- plt.scatter(X, y, s=20, edgecolor="black",c="darkorange", label="data")

- regr_1 = DecisionTreeRegressor(max_depth=2)

- regr_2 = DecisionTreeRegressor(max_depth=5)

- regr_1.fit(X, y)

- regr_2.fit(X, y)

-

- X_test = np.arange(0.0, 5.0, 0.01)[:, np.newaxis]

-

- y_1 = regr_1.predict(X_test)

- y_2 = regr_2.predict(X_test)

-

- plt.figure()

- plt.scatter(X, y, s=20, edgecolor="black",c="darkorange", label="data")

- plt.plot(X_test, y_1, color="cornflowerblue",label="max_depth=2", linewidth=2)

- plt.plot(X_test, y_2, color="yellowgreen", label="max_depth=5", linewidth=2)

- plt.xlabel("data")

- plt.ylabel("target")

- plt.title("Decision Tree Regression")

- plt.legend()

- plt.show()

2、随机森林

随机森林是非常具有代表性的Bagging集成算法,它的所有基评估器都是决策树,分类树组成的森林就叫做随机森 林分类器,回归树所集成的森林就叫做随机森林回归器。

控制基评估器的参数:

criterion 不纯度的衡量指标,有基尼系数和信息熵两种选择。

max_depth 树的最大深度,超过最大深度的树枝都会被剪掉。

min_samples_leaf 一个节点在分枝后的每个子节点都必须包含至少min_samples_leaf个训练样 本,否则分枝就不会发生。

min_samples_split 一个节点必须要包含至少min_samples_split个训练样本,这个节点才允许被分 枝,否则分枝就不会发生。

max_features max_features限制分枝时考虑的特征个数,超过限制个数的特征都会被舍弃, 默认值为总特征个数开平方取整。

min_impurity_decrease 限制信息增益的大小,信息增益小于设定数值的分枝不会发生。

n_estimators参数:森林中树木的数量,即基评估器的数量。这个参数对随机森林模型的精确性影响是单调的,n_estimators越 大,模型的效果往往越好。但是相应的,任何模型都有决策边界,n_estimators达到一定的程度之后,随机森林的 精确性往往不在上升或开始波动,并且,n_estimators越大,需要的计算量和内存也越大,训练的时间也会越来越 长。对于这个参数,我们是渴望在训练难度和模型效果之间取得平衡。

sklearn实现随机森林分类器

- %matplotlib inline

- from sklearn.tree import DecisionTreeClassifier

- from sklearn.ensemble import RandomForestClassifier

- from sklearn.datasets import load_wine

-

- wine = load_wine()

-

- #实例化

- #训练集带入实例化的模型去进行训练,使用的接口是fit

- #使用其他接口将测试集导入我们训练好的模型,去获取我们希望过去的结果(score.Y_test)

- from sklearn.model_selection import train_test_split

- Xtrain, Xtest, Ytrain, Ytest = train_test_split(wine.data,wine.target,test_size=0.3)

-

- clf = DecisionTreeClassifier(random_state=0)

- rfc = RandomForestClassifier(random_state=0)

- clf = clf.fit(Xtrain,Ytrain)

- rfc = rfc.fit(Xtrain,Ytrain)

- score_c = clf.score(Xtest,Ytest)

- score_r = rfc.score(Xtest,Ytest)

-

- print("Single Tree:{}".format(score_c)

- ,"Random Forest:{}".format(score_r))

-

-

- #交叉验证:是数据集划分为n分,依次取每一份做测试集,每n-1份做训练集,多次训练模型以观测模型稳定性的方法

-

- from sklearn.model_selection import cross_val_score

- import matplotlib.pyplot as plt

-

- rfc = RandomForestClassifier(n_estimators=25)

- rfc_s = cross_val_score(rfc,wine.data,wine.target,cv=10)

-

- clf = DecisionTreeClassifier()

- clf_s = cross_val_score(clf,wine.data,wine.target,cv=10)

-

- plt.plot(range(1,11),rfc_s,label = "RandomForest")

- plt.plot(range(1,11),clf_s,label = "Decision Tree")

- plt.legend()

- plt.show()

-

- #====================一种更加有趣也更简单的写法===================#

-

- """

- label = "RandomForest"

- for model in [RandomForestClassifier(n_estimators=25),DecisionTreeClassifier()]:

- score = cross_val_score(model,wine.data,wine.target,cv=10)

- print("{}:".format(label)),print(score.mean())

- plt.plot(range(1,11),score,label = label)

- plt.legend()

- label = "DecisionTree"

-

- """

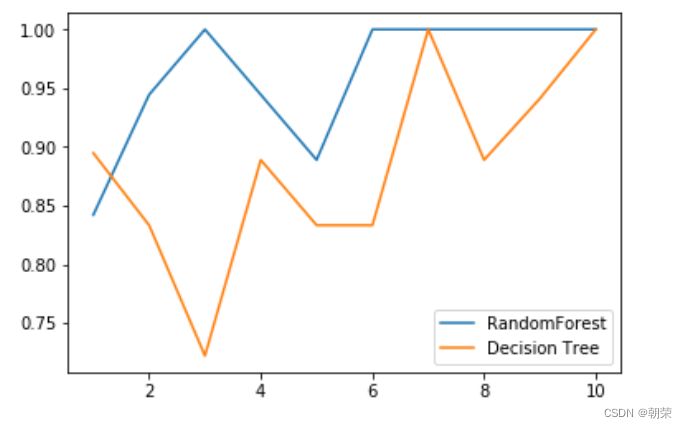

画出随机森林和决策树在十组交叉验证下的效果对比:

- rfc_l = []

- clf_l = []

-

- for i in range(10):

- rfc = RandomForestClassifier(n_estimators=25)

- rfc_s = cross_val_score(rfc,wine.data,wine.target,cv=10).mean()

- rfc_l.append(rfc_s)

- clf = DecisionTreeClassifier()

- clf_s = cross_val_score(clf,wine.data,wine.target,cv=10).mean()

- clf_l.append(clf_s)

-

- plt.plot(range(1,11),rfc_l,label = "Random Forest")

- plt.plot(range(1,11),clf_l,label = "Decision Tree")

- plt.legend()

- plt.show()

-

- #是否有注意到,单个决策树的波动轨迹和随机森林一致?

- #再次验证了我们之前提到的,单个决策树的准确率越高,随机森林的准确率也会越高

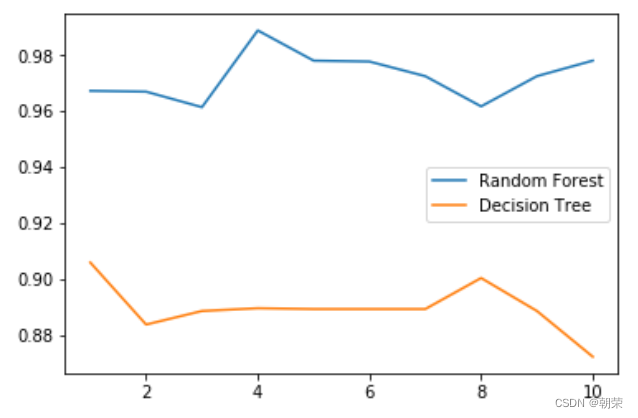

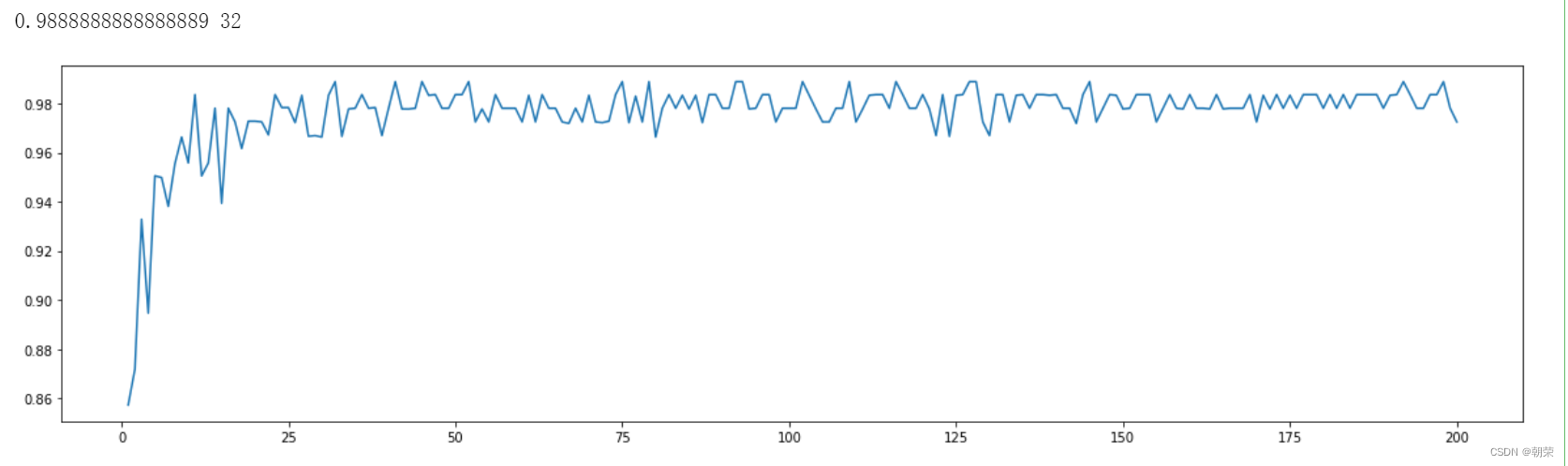

n_estimators的学习曲线:

- superpa = []

- for i in range(200):

- rfc = RandomForestClassifier(n_estimators=i+1,n_jobs=-1)

- rfc_s = cross_val_score(rfc,wine.data,wine.target,cv=10).mean()

- superpa.append(rfc_s)

- print(max(superpa),superpa.index(max(superpa))+1)#打印出:最高精确度取值,max(superpa))+1指的是森林数目的数量n_estimators

- plt.figure(figsize=[20,5])

- plt.plot(range(1,201),superpa)

- plt.show()

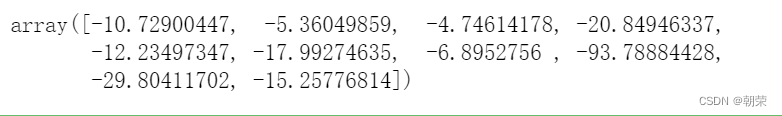

sklearn实现随机森林回归器

- from sklearn.datasets import load_boston#一个标签是连续西变量的数据集

- from sklearn.model_selection import cross_val_score#导入交叉验证模块

- from sklearn.ensemble import RandomForestRegressor#导入随机森林回归系

-

- boston = load_boston()

- regressor = RandomForestRegressor(n_estimators=100,random_state=0)#实例化

- cross_val_score(regressor, boston.data, boston.target, cv=10

- ,scoring = "neg_mean_squared_error"#如果不写scoring,回归评估默认是R平方

- )

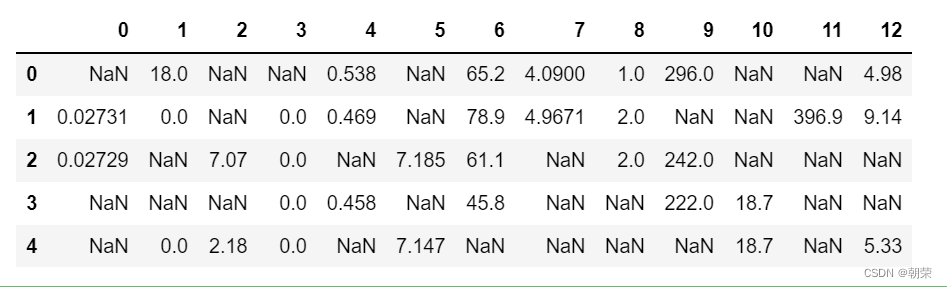

sklearn用随机森林回归填补缺失值

- import numpy as np

- import pandas as pd

- import matplotlib.pyplot as plt

- from sklearn.datasets import load_boston

- from sklearn.impute import SimpleImputer#填补缺失值的类

- from sklearn.ensemble import RandomForestRegressor

- from sklearn.model_selection import cross_val_score

-

- dataset = load_boston()

- dataset#是一个字典

- dataset.target#查看数据标签

- dataset.data#数据的特征矩阵

- dataset.data.shape#数据的结构

- #总共506*13=6578个数据

-

- X_full, y_full = dataset.data, dataset.target

- n_samples = X_full.shape[0]#506

- n_features = X_full.shape[1]#13

-

-

- #首先确定我们希望放入的缺失数据的比例,在这里我们假设是50%,那总共就要有3289个数据缺失

-

- rng = np.random.RandomState(0)#设置一个随机种子,方便观察

- missing_rate = 0.5

- n_missing_samples = int(np.floor(n_samples * n_features * missing_rate))#3289

- #np.floor向下取整,返回.0格式的浮点数

-

- #所有数据要随机遍布在数据集的各行各列当中,而一个缺失的数据会需要一个行索引和一个列索引

- #如果能够创造一个数组,包含3289个分布在0~506中间的行索引,和3289个分布在0~13之间的列索引,那我们就可以利用索引来为数据中的任意3289个位置赋空值

- #然后我们用0,均值和随机森林来填写这些缺失值,然后查看回归的结果如何

-

- missing_features = rng.randint(0,n_features,n_missing_samples)#randint(下限,上限,n)指在下限和上限之间取出n个整数

- len(missing_features)#3289

- missing_samples = rng.randint(0,n_samples,n_missing_samples)

- len(missing_samples)#3289

-

- #missing_samples = rng.choice(n_samples,n_missing_samples,replace=False)

- #我们现在采样了3289个数据,远远超过我们的样本量506,所以我们使用随机抽取的函数randint。

- # 但如果我们需要的数据量小于我们的样本量506,那我们可以采用np.random.choice来抽样,choice会随机抽取不重复的随机数,

- # 因此可以帮助我们让数据更加分散,确保数据不会集中在一些行中!

- #这里我们不采用np.random.choice,因为我们现在采样了3289个数据,远远超过我们的样本量506,使用np.random.choice会报错

-

- X_missing = X_full.copy()

- y_missing = y_full.copy()

-

- X_missing[missing_samples,missing_features] = np.nan

-

- X_missing = pd.DataFrame(X_missing)

- #转换成DataFrame是为了后续方便各种操作,numpy对矩阵的运算速度快到拯救人生,但是在索引等功能上却不如pandas来得好用

- X_missing.head()

-

- #并没有对y_missing进行缺失值填补,原因是有监督学习,不能缺标签啊

-

- #使用均值进行填补

- from sklearn.impute import SimpleImputer

- imp_mean = SimpleImputer(missing_values=np.nan, strategy='mean')#实例化

- X_missing_mean = imp_mean.fit_transform(X_missing)#特殊的接口fit_transform = 训练fit + 导出predict

- #pd.DataFrame(X_missing_mean).isnull()#但是数据量大的时候还是看不全

- #布尔值False = 0, True = 1

- # pd.DataFrame(X_missing_mean).isnull().sum()#如果求和为0可以彻底确认是否有NaN

-

- #使用0进行填补

- imp_0 = SimpleImputer(missing_values=np.nan, strategy="constant",fill_value=0)#constant指的是常数

- X_missing_0 = imp_0.fit_transform(X_missing)

-

- X_missing_reg = X_missing.copy()

-

- #找出数据集中,缺失值从小到大排列的特征们的顺序,并且有了这些的索引

- sortindex = np.argsort(X_missing_reg.isnull().sum(axis=0)).values#np.argsort()返回的是从小到大排序的顺序所对应的索引

-

- for i in sortindex:

-

- #构建我们的新特征矩阵(没有被选中去填充的特征 + 原始的标签)和新标签(被选中去填充的特征)

- df = X_missing_reg

- fillc = df.iloc[:,i]#新标签

- df = pd.concat([df.iloc[:,df.columns != i],pd.DataFrame(y_full)],axis=1)#新特征矩阵

-

- #在新特征矩阵中,对含有缺失值的列,进行0的填补

- df_0 =SimpleImputer(missing_values=np.nan,strategy='constant',fill_value=0).fit_transform(df)

-

- #找出我们的训练集和测试集

- Ytrain = fillc[fillc.notnull()]# Ytrain是被选中要填充的特征中(现在是我们的标签),存在的那些值:非空值

- Ytest = fillc[fillc.isnull()]#Ytest 是被选中要填充的特征中(现在是我们的标签),不存在的那些值:空值。注意我们需要的不是Ytest的值,需要的是Ytest所带的索引

- Xtrain = df_0[Ytrain.index,:]#在新特征矩阵上,被选出来的要填充的特征的非空值所对应的记录

- Xtest = df_0[Ytest.index,:]#在新特征矩阵上,被选出来的要填充的特征的空值所对应的记录

-

- #用随机森林回归来填补缺失值

- rfc = RandomForestRegressor(n_estimators=100)#实例化

- rfc = rfc.fit(Xtrain, Ytrain)#导入训练集进行训练

- Ypredict = rfc.predict(Xtest)#用predict接口将Xtest导入,得到我们的预测结果(回归结果),就是我们要用来填补空值的这些值

-

- #将填补好的特征返回到我们的原始的特征矩阵中

- X_missing_reg.loc[X_missing_reg.iloc[:,i].isnull(),i] = Ypredict

-

- #检验是否有空值

- X_missing_reg.isnull().sum()

-

-

- #对所有数据进行建模,取得MSE结果

-

- X = [X_full,X_missing_mean,X_missing_0,X_missing_reg]

-

- mse = []

- std = []

- for x in X:

- estimator = RandomForestRegressor(random_state=0, n_estimators=100)#实例化

- scores = cross_val_score(estimator,x,y_full,scoring='neg_mean_squared_error', cv=5).mean()

- mse.append(scores * -1)

-

-

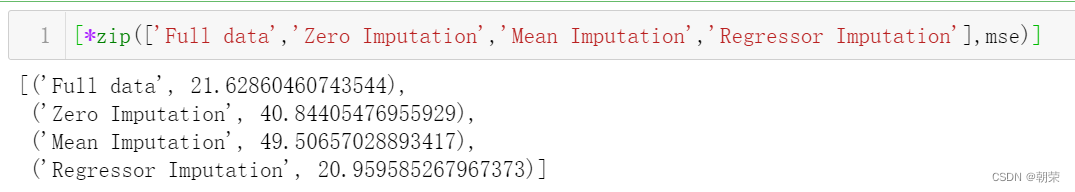

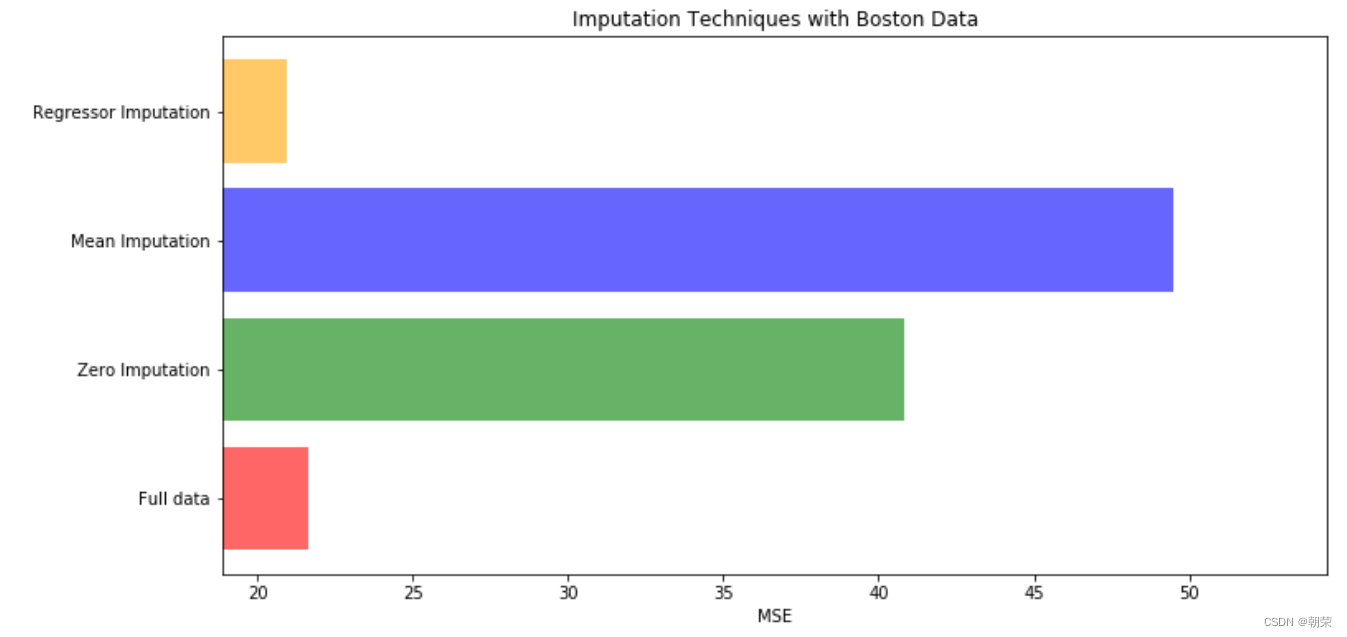

- [*zip(['Full data','Zero Imputation','Mean Imputation','Regressor Imputation'],mse)]

-

-

- x_labels = ['Full data',

- 'Zero Imputation',

- 'Mean Imputation',

- 'Regressor Imputation']

- colors = ['r', 'g', 'b', 'orange']

-

- plt.figure(figsize=(12, 6))#画出画布

- ax = plt.subplot(111)#添加子图

- for i in np.arange(len(mse)):

- ax.barh(i, mse[i],color=colors[i], alpha=0.6, align='center')#bar为条形图,barh为横向条形图,alpha表示条的粗度

- ax.set_title('Imputation Techniques with Boston Data')

- ax.set_xlim(left=np.min(mse) * 0.9,

- right=np.max(mse) * 1.1)#设置x轴取值范围

- ax.set_yticks(np.arange(len(mse)))

- ax.set_xlabel('MSE')

- ax.set_yticklabels(x_labels)

- plt.show()