
[Deep Learning in Practice (31)] Model Architecture: CSPDarknet


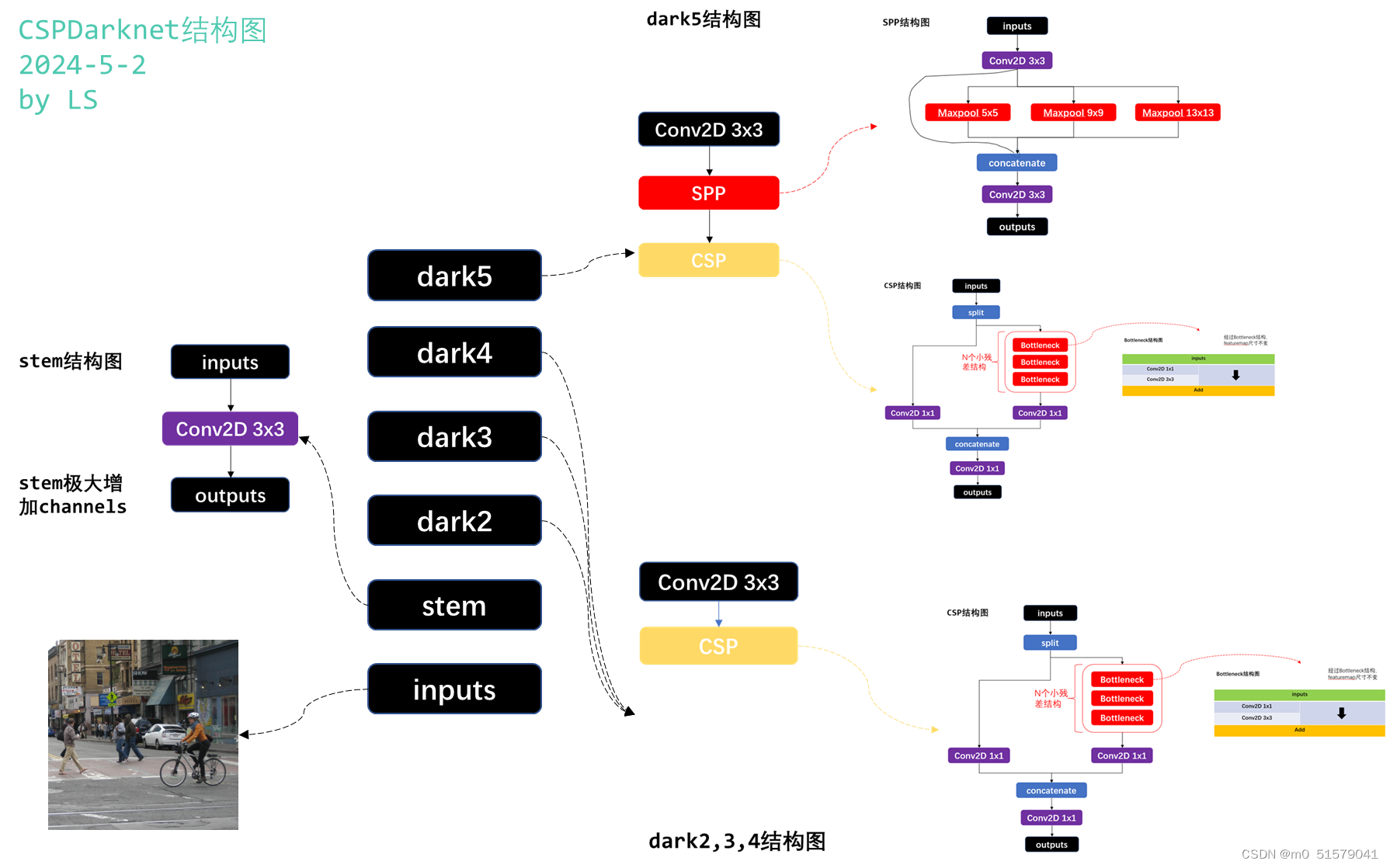

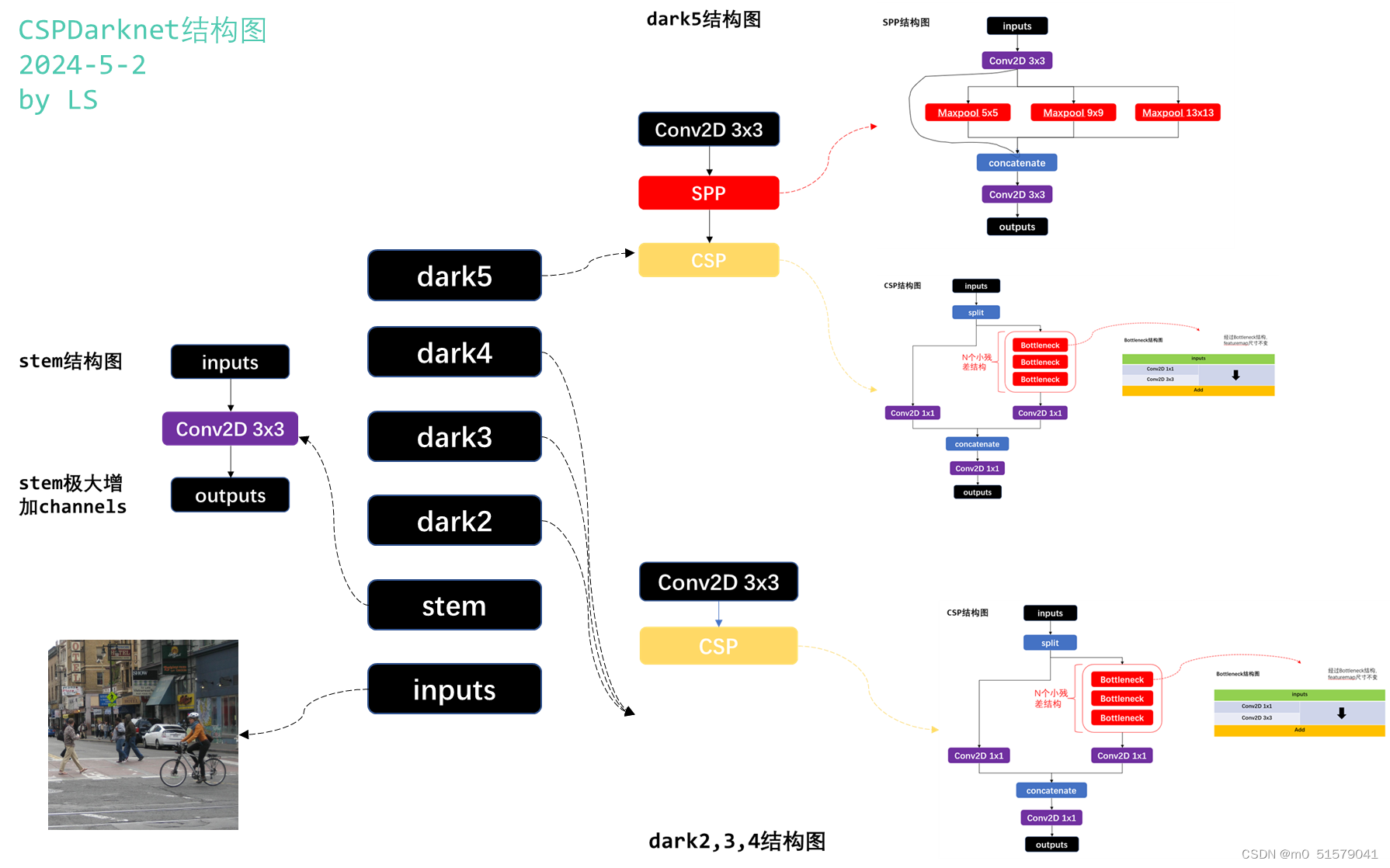

1. Overall structure of CSPDarknet

CSPDarknet is largely based on the Darknet53 backbone used in YOLOv3, and improves on it with the SPP and CSPNet structures.

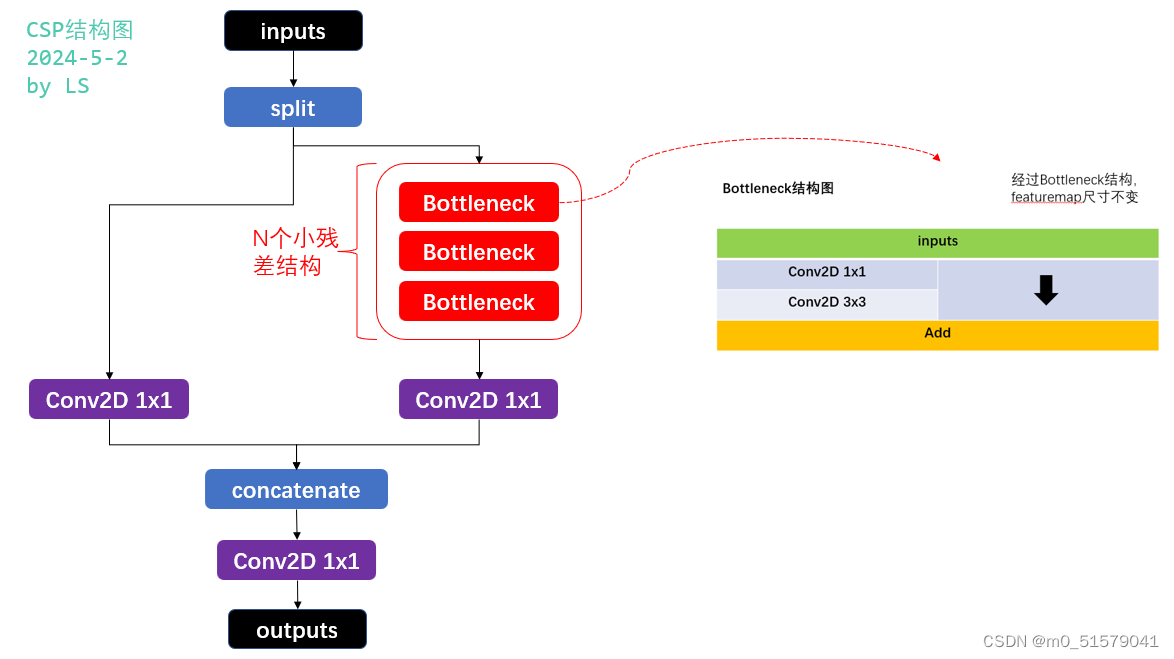

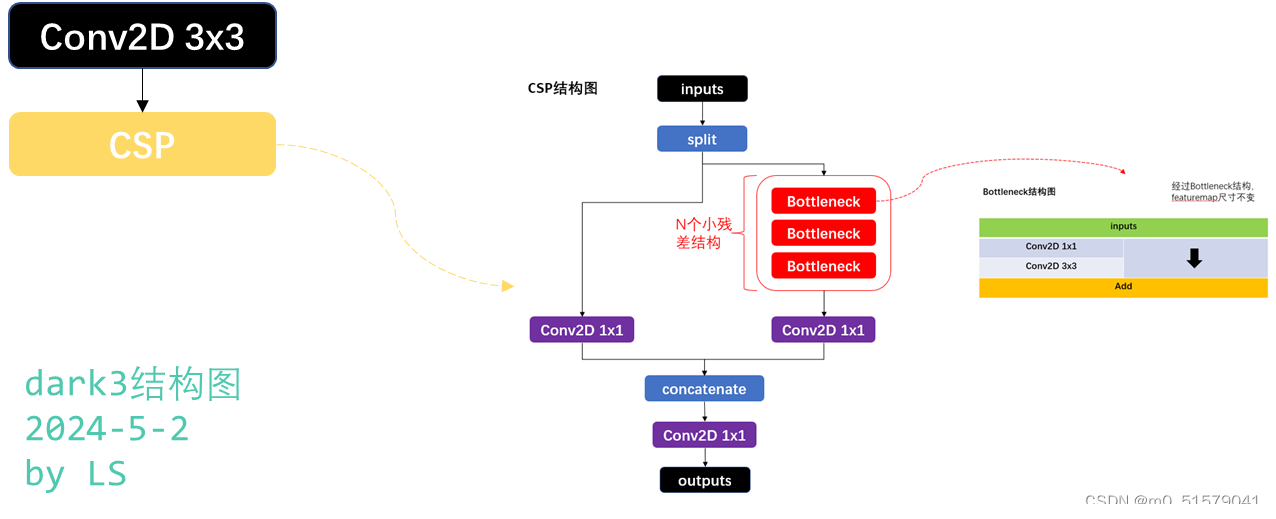

2. CSPNet structure

Paper: https://arxiv.org/pdf/1911.11929

CSPNet consists of an inner small residual structure, the Bottleneck, and an outer large residual structure, the CSP layer.

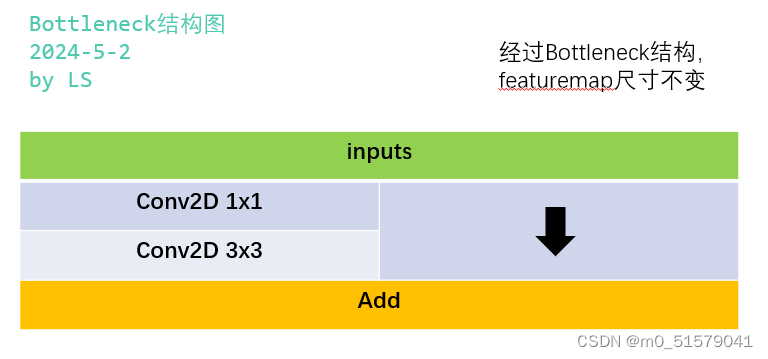

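2.1 Inner small residual structure: Bottleneck

In the Bottleneck block, the Conv2D layer can be configured either as a standard convolution (BaseConv) or as a depthwise separable convolution (DWConv), and the activation function can be ReLU or LeakyReLU.

Implementation: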

```python
from torch import nn

#--------------------------------------------------#
#   Activation function
#--------------------------------------------------#
def get_activation(name="lrelu", inplace=True):
    if name == "relu":
        module = nn.ReLU(inplace=inplace)
    elif name == "lrelu":
        module = nn.LeakyReLU(0.1, inplace=inplace)
    else:
        raise AttributeError("Unsupported act type: {}".format(name))
    return module

#--------------------------------------------------#
#   BaseConv: Conv2d + BatchNorm2d + activation
#--------------------------------------------------#
class BaseConv(nn.Module):
    def __init__(self, in_channels, out_channels, ksize, stride, groups=1, bias=False, act="lrelu"):
        super().__init__()
        pad = (ksize - 1) // 2
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size=ksize, stride=stride, padding=pad, groups=groups, bias=bias)
        self.bn = nn.BatchNorm2d(out_channels, eps=0.001, momentum=0.03)
        self.act = get_activation(act, inplace=True)

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        # Used after the BatchNorm has been fused into the convolution weights
        return self.act(self.conv(x))

#--------------------------------------------------#
#   DWConv: depthwise conv followed by a pointwise 1x1 conv
#--------------------------------------------------#
class DWConv(nn.Module):
    def __init__(self, in_channels, out_channels, ksize, stride=1, act="lrelu"):
        super().__init__()
        self.dconv = BaseConv(in_channels, in_channels, ksize=ksize, stride=stride, groups=in_channels, act=act)
        self.pconv = BaseConv(in_channels, out_channels, ksize=1, stride=1, groups=1, act=act)

    def forward(self, x):
        x = self.dconv(x)
        return self.pconv(x)

#--------------------------------------------------#
#   Small residual structure: Bottleneck
#--------------------------------------------------#
class Bottleneck(nn.Module):
    # Standard bottleneck
    def __init__(self, in_channels, out_channels, shortcut=True, expansion=0.5, depthwise=False, act="lrelu"):
        super().__init__()
        hidden_channels = int(out_channels * expansion)
        Conv = DWConv if depthwise else BaseConv
        #--------------------------------------------------#
        #   1x1 conv reduces the number of channels (usually by 50%)
        #--------------------------------------------------#
        self.conv1 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=act)
        #--------------------------------------------------#
        #   3x3 conv expands the channels again and extracts features
        #--------------------------------------------------#
        self.conv2 = Conv(hidden_channels, out_channels, 3, stride=1, act=act)
        # The residual add is only possible when input and output channels match
        self.use_add = shortcut and in_channels == out_channels

    def forward(self, x):
        y = self.conv2(self.conv1(x))
        if self.use_add:
            y = y + x
        return y
```
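The practical difference between `BaseConv` and `DWConv` is the number of parameters. The sketch below is plain arithmetic, assuming bias-free convolutions followed by `BatchNorm2d` (whose weight and bias add two learnable parameters per output channel), as `BaseConv` is written above; the helper names `conv_bn_params` and `dwconv_params` are hypothetical.

```python
def conv_bn_params(in_ch, out_ch, k, groups=1):
    """Learnable parameters of Conv2d(bias=False) + BatchNorm2d, as in BaseConv."""
    conv = k * k * (in_ch // groups) * out_ch
    bn = 2 * out_ch  # BatchNorm2d weight and bias; running stats are buffers, not parameters
    return conv + bn


def dwconv_params(in_ch, out_ch, k):
    """DWConv = depthwise k x k BaseConv (groups=in_ch) + pointwise 1x1 BaseConv."""
    depthwise = conv_bn_params(in_ch, in_ch, k, groups=in_ch)
    pointwise = conv_bn_params(in_ch, out_ch, 1)
    return depthwise + pointwise


if __name__ == "__main__":
    for ch in (32, 128, 256):
        std = conv_bn_params(ch, ch, 3)
        dw = dwconv_params(ch, ch, 3)
        print(f"{ch:>4} channels, 3x3: BaseConv={std:,}  DWConv={dw:,}  ratio={std / dw:.1f}x")
```

For the 32-channel 3×3 convolution inside dark2's Bottleneck, `conv_bn_params(32, 32, 3)` returns 9,280, i.e. the 9,216 + 64 listed for Conv2d-21 and BatchNorm2d-22 in the model summary at the end of the article, while the depthwise separable version needs only 1,440.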


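2.2 Outer large residual structure: CSP

CSPLayer is the outer large residual structure. Two 1×1 convolutions split the input into a main branch and a large residual edge; the main branch passes through n stacked Bottlenecks, and the two branches are then concatenated along the channel dimension and fused by a final 1×1 convolution.

Implementation: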

```python
import torch
from torch import nn
# BaseConv and Bottleneck are defined in Section 2.1

#--------------------------------------------------#
#   CSP
#--------------------------------------------------#
class CSPLayer(nn.Module):
    def __init__(self, in_channels, out_channels, n=1, shortcut=True, expansion=0.5, depthwise=False, act="lrelu"):
        # ch_in, ch_out, number of Bottlenecks, shortcut, expansion, depthwise, activation
        super().__init__()
        hidden_channels = int(out_channels * expansion)
        #--------------------------------------------------#
        #   First convolution of the main branch
        #--------------------------------------------------#
        self.conv1 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=act)
        #--------------------------------------------------#
        #   First convolution of the large residual edge
        #--------------------------------------------------#
        self.conv2 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=act)
        #-----------------------------------------------#
        #   Convolution applied to the concatenated result
        #-----------------------------------------------#
        self.conv3 = BaseConv(2 * hidden_channels, out_channels, 1, stride=1, act=act)
        #--------------------------------------------------#
        #   Stack n Bottleneck residual blocks
        #--------------------------------------------------#
        module_list = [Bottleneck(hidden_channels, hidden_channels, shortcut, 1.0, depthwise, act=act) for _ in range(n)]
        self.m = nn.Sequential(*module_list)

    def forward(self, x):
        #-------------------------------#
        #   x_1 is the main branch
        #-------------------------------#
        x_1 = self.conv1(x)
        #-------------------------------#
        #   x_2 is the large residual edge
        #-------------------------------#
        x_2 = self.conv2(x)
        #-----------------------------------------------#
        #   The main branch keeps extracting features through the stacked Bottlenecks
        #-----------------------------------------------#
        x_1 = self.m(x_1)
        #-----------------------------------------------#
        #   Concatenate the main branch and the large residual edge along the channels
        #-----------------------------------------------#
        x = torch.cat((x_1, x_2), 1)
        #-----------------------------------------------#
        #   Fuse the concatenated result with a 1x1 convolution
        #-----------------------------------------------#
        return self.conv3(x)
```
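The channel bookkeeping of CSPLayer can be checked by hand. The following sketch counts its learnable parameters branch by branch; it assumes `depthwise=False` and bias-free convolutions followed by `BatchNorm2d`, as in `BaseConv`, and the helper names are hypothetical. The `shortcut` flag plays no role because the residual add has no parameters.

```python
def conv_bn_params(in_ch, out_ch, k):
    """Bias-free Conv2d + BatchNorm2d (weight and bias), as in BaseConv."""
    return k * k * in_ch * out_ch + 2 * out_ch


def bottleneck_params(ch):
    """Bottleneck(ch, ch, expansion=1.0): 1x1 conv then 3x3 conv, hidden width = ch."""
    return conv_bn_params(ch, ch, 1) + conv_bn_params(ch, ch, 3)


def csp_layer_params(in_ch, out_ch, n, expansion=0.5):
    hidden = int(out_ch * expansion)
    conv1 = conv_bn_params(in_ch, hidden, 1)          # entry of the main branch
    conv2 = conv_bn_params(in_ch, hidden, 1)          # large residual edge
    blocks = n * bottleneck_params(hidden)            # n stacked Bottlenecks on the main branch
    conv3 = conv_bn_params(2 * hidden, out_ch, 1)     # fusion after concatenation
    return conv1 + conv2 + blocks + conv3


if __name__ == "__main__":
    print(csp_layer_params(64, 64, n=1))
    print(csp_layer_params(128, 128, n=3))
```

With 64 input and output channels and n = 1 this gives 18,816, the sum of the rows from Conv2d-9 through BaseConv-29 in the model summary at the end of the article (the CSPLayer of dark2 when wid_mul = 0.5).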


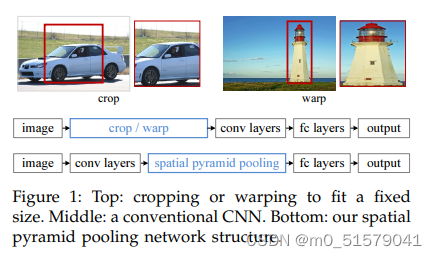

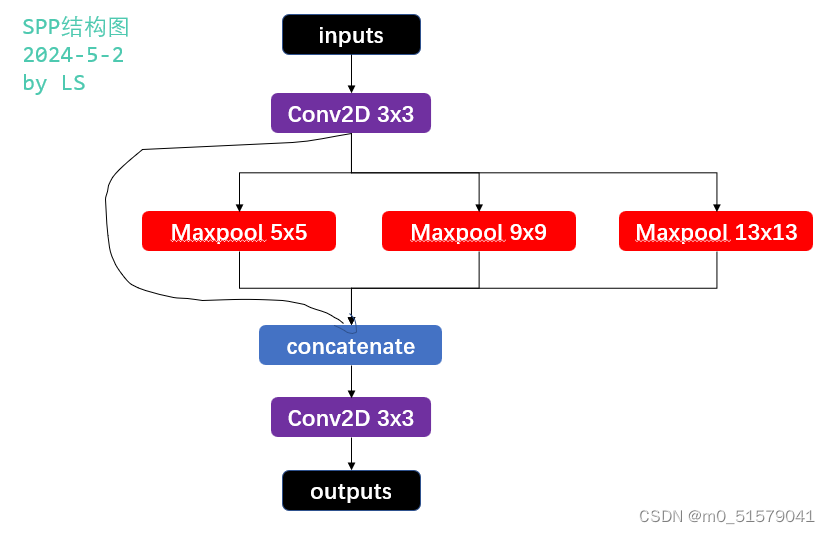

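3. SPP structure

In a typical CNN, the convolutional layers are followed by fully connected layers. Because a fully connected layer expects a fixed number of input features, the network input must have a fixed size. Real-world images rarely match that size, so they are usually cropped or warped (stretched) to fit.

Neither approach is ideal: both change the aspect ratio or the size of the input image and thereby distort the original content. Kaiming He et al. proposed the SPP (Spatial Pyramid Pooling) layer to solve this problem. It is usually placed after the last convolutional layer, where it pools feature maps of any size into a fixed-length vector. The SPPBottleneck used in CSPDarknet borrows the multi-scale pooling idea but works differently: it applies stride-1 max pooling with kernel sizes 5, 9 and 13 (padded so that the spatial size is unchanged) and concatenates the results along the channel dimension, fusing features from several receptive fields rather than producing a fixed-length vector.

Paper: https://arxiv.org/pdf/1406.4729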
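To illustrate why the original SPP layer produces a fixed-length output, the pure-Python sketch below max-pools one channel of an arbitrary H×W feature map into a pyramid of 1×1, 2×2 and 4×4 bins. The pyramid levels and the floor/ceil bin boundaries (the way adaptive pooling splits a map) are assumptions chosen for illustration, not necessarily the exact configuration of the SPP paper; the function name is hypothetical.

```python
def spatial_pyramid_max_pool(fmap, levels=(1, 2, 4)):
    """Max-pool one H x W channel (list of lists) into n x n bins for every pyramid level n."""
    h, w = len(fmap), len(fmap[0])
    features = []
    for n in levels:
        for i in range(n):
            # Row range of bin i: [floor(i*h/n), ceil((i+1)*h/n)), never empty
            r0, r1 = (i * h) // n, -((-(i + 1) * h) // n)
            for j in range(n):
                c0, c1 = (j * w) // n, -((-(j + 1) * w) // n)
                features.append(max(fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)))
    return features


if __name__ == "__main__":
    small = [[r * 10 + c for c in range(5)] for r in range(7)]      # 7 x 5 map
    large = [[(r * 13 + c) % 17 for c in range(9)] for r in range(13)]  # 13 x 9 map
    print(len(spatial_pyramid_max_pool(small)), len(spatial_pyramid_max_pool(large)))
```

Whatever the input size, each channel yields 1 + 4 + 16 = 21 values, so a C-channel feature map always becomes a vector of length 21·C that a fully connected layer can consume.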

Implementation:

```python
import torch
from torch import nn
# BaseConv is defined in Section 2.1

#--------------------------------------------------#
#   SPP
#--------------------------------------------------#
class SPPBottleneck(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_sizes=(5, 9, 13), activation="lrelu"):
        super().__init__()
        hidden_channels = in_channels // 2
        self.conv1 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=activation)
        # Stride-1 max pooling with padding ks // 2 keeps the spatial size unchanged
        self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=ks, stride=1, padding=ks // 2) for ks in kernel_sizes])
        conv2_channels = hidden_channels * (len(kernel_sizes) + 1)
        self.conv2 = BaseConv(conv2_channels, out_channels, 1, stride=1, act=activation)

    def forward(self, x):
        x = self.conv1(x)
        # Concatenate the input with every pooled branch along the channel dimension
        x = torch.cat([x] + [m(x) for m in self.m], dim=1)
        x = self.conv2(x)
        return x
```
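Inside SPPBottleneck every pooling branch uses stride 1 and padding k // 2, so for odd kernel sizes the spatial size is preserved and the branches can be concatenated along the channel axis. The arithmetic sketch below checks both the sizes and the resulting channel counts; it assumes `nn.MaxPool2d` with dilation 1 and `ceil_mode=False`, and the helper names are hypothetical.

```python
def pool_out_size(size, kernel, stride=1, padding=None):
    """Output length of nn.MaxPool2d along one axis (dilation 1, ceil_mode=False)."""
    if padding is None:
        padding = kernel // 2              # same padding rule as SPPBottleneck
    return (size + 2 * padding - kernel) // stride + 1


def spp_bottleneck_channels(in_channels, kernel_sizes=(5, 9, 13)):
    hidden = in_channels // 2                      # conv1 halves the channels
    concat = hidden * (len(kernel_sizes) + 1)      # identity branch + one branch per kernel
    return hidden, concat


if __name__ == "__main__":
    for size in (10, 20, 40):
        print(size, [pool_out_size(size, k) for k in (5, 9, 13)])
    print(spp_bottleneck_channels(512))
```

For the 512-channel input of dark5 with wid_mul = 0.5, conv1 reduces the map to 256 channels and the concatenation has 1,024, which is why the summary lists 524,288 = 1,024 × 512 weights for Conv2d-130. An even kernel such as 4 would give `pool_out_size(20, 4) == 21`, so only odd sizes keep the branches aligned.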


4. CSPDarknet structure

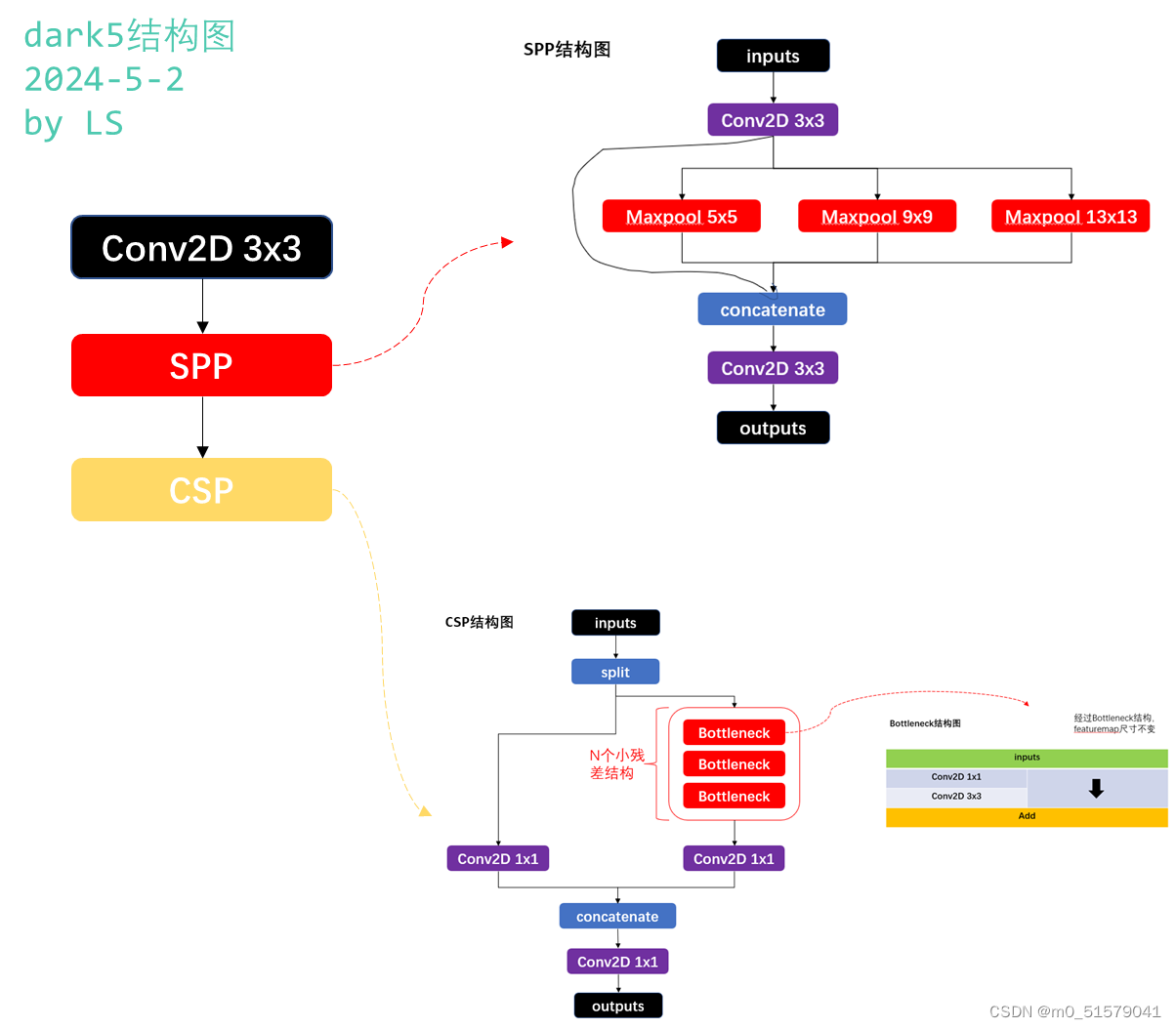

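Following the Darknet53 structure of YOLOv3 and combining the CSP and SPP structures from Sections 2 and 3, we can build the complete CSPDarknet network. The body of CSPDarknet consists of input, stem, dark2, dark3, dark4 and dark5. dark2, dark3 and dark4 share the same structure (a stride-2 convolution followed by a CSPLayer), while stem and dark5 differ slightly: the stem is a single 6×6 stride-2 convolution, and dark5 inserts an SPPBottleneck before a CSPLayer without shortcuts.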

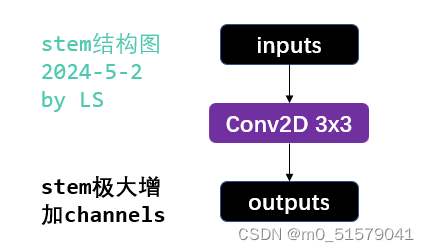

4.1 stem

Implementation:

```python
# Excerpt from CSPDarknet.__init__ (the full class is given in 4.4)
#-----------------------------------------------#
#   The input image is 640, 640, 3
#   The base number of channels is 64 (wid_mul = 1)
#-----------------------------------------------#
base_channels = int(wid_mul * 64)  # 64
base_depth = max(round(dep_mul * 3), 1)  # 3
#-----------------------------------------------#
#   Feature extraction with a 6x6 stride-2 convolution
#   640, 640, 3 -> 320, 320, 64
#-----------------------------------------------#
self.stem = Conv(3, base_channels, 6, 2, act=act)
```
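The output size of the stem follows the standard convolution formula out = ⌊(in + 2p − k) / s⌋ + 1, where BaseConv sets p = (k − 1) // 2, i.e. p = 2 for the 6×6 kernel. The arithmetic sketch below reproduces the 640 → 320 comment and the weight count of the stem convolution; it assumes a bias-free convolution as in BaseConv, and the helper names are hypothetical.

```python
def conv_out_size(size, kernel, stride, padding):
    """Output length of nn.Conv2d along one axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def stem_summary(input_size, wid_mul=1.0, in_channels=3, kernel=6, stride=2):
    """Return (output size, output channels, conv weights) of the 6x6 stride-2 stem."""
    base_channels = int(wid_mul * 64)
    padding = (kernel - 1) // 2                              # BaseConv's rule: 2 for k = 6
    out_size = conv_out_size(input_size, kernel, stride, padding)
    weights = kernel * kernel * in_channels * base_channels  # bias-free Conv2d
    return out_size, base_channels, weights


if __name__ == "__main__":
    print(stem_summary(640))               # (320, 64, 6912)
    print(stem_summary(320, wid_mul=0.5))  # (160, 32, 3456)
```

The second call uses the settings of the model summary in the full-code section, and its 3,456 weights match the Conv2d-1 row there.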


4.2 dark2, dark3 and dark4 (using dark3 as an example)

Implementation:

```python
# Excerpt from CSPDarknet.__init__
#-----------------------------------------------#
#   After the conv:     160, 160, 128 -> 80, 80, 256
#   After the CSPLayer: 80, 80, 256 -> 80, 80, 256
#-----------------------------------------------#
self.dark3 = nn.Sequential(
    Conv(base_channels * 2, base_channels * 4, 3, 2, act=act),
    CSPLayer(base_channels * 4, base_channels * 4, n=base_depth * 3, depthwise=depthwise, act=act),
)
```
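dark3 and dark4 stack `base_depth * 3` Bottlenecks, while dark2 and dark5 stack `base_depth`, where `base_depth = max(round(dep_mul * 3), 1)`. The sketch below simply evaluates that rule for a given depth multiplier; the function name is hypothetical.

```python
def stage_depths(dep_mul):
    """Number of Bottlenecks in the CSPLayer of each stage, mirroring CSPDarknet.__init__."""
    base_depth = max(round(dep_mul * 3), 1)  # never fewer than one Bottleneck
    return {
        "dark2": base_depth,
        "dark3": base_depth * 3,
        "dark4": base_depth * 3,
        "dark5": base_depth,
    }


if __name__ == "__main__":
    for dep_mul in (0.33, 0.67, 1.0):
        print(dep_mul, stage_depths(dep_mul))
```

For dep_mul = 0.33 this gives 1, 3, 3 and 1, which is exactly the number of Bottleneck rows per stage in the model summary at the end of the article.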


4.3 dark5

Implementation:

```python
# Excerpt from CSPDarknet.__init__
#-----------------------------------------------#
#   After the conv:     40, 40, 512 -> 20, 20, 1024
#   After the SPP:      20, 20, 1024 -> 20, 20, 1024
#   After the CSPLayer: 20, 20, 1024 -> 20, 20, 1024
#-----------------------------------------------#
self.dark5 = nn.Sequential(
    Conv(base_channels * 8, base_channels * 16, 3, 2, act=act),
    SPPBottleneck(base_channels * 16, base_channels * 16, activation=act),
    # SPPF(base_channels * 16, base_channels * 16, activation=act),
    CSPLayer(base_channels * 16, base_channels * 16, n=base_depth, shortcut=False, depthwise=depthwise, act=act),
)
```
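The commented-out `SPPF` line refers to a module that this article does not define. As an assumption, the sketch below follows the YOLOv5-style SPPF design: instead of three parallel 5×5, 9×9 and 13×13 max pools, one 5×5 pool is applied three times in series. This yields the same pooled maps, because two stacked stride-1 max pools of size 5 cover a 9×9 window and three cover 13×13. The helper `conv_bn_act` is a hypothetical stand-in for `BaseConv` so that the block runs on its own.

```python
import torch
from torch import nn


def conv_bn_act(in_channels, out_channels, activation="lrelu"):
    """1x1 Conv2d (no bias) + BatchNorm2d + activation, mirroring BaseConv(ksize=1)."""
    act = nn.ReLU(inplace=True) if activation == "relu" else nn.LeakyReLU(0.1, inplace=True)
    return nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=1, bias=False),
        nn.BatchNorm2d(out_channels, eps=0.001, momentum=0.03),
        act,
    )


class SPPF(nn.Module):
    """Hypothetical SPPF sketch: one k x k max pool applied three times in series."""

    def __init__(self, in_channels, out_channels, k=5, activation="lrelu"):
        super().__init__()
        hidden_channels = in_channels // 2
        self.conv1 = conv_bn_act(in_channels, hidden_channels, activation)
        # Stride 1 with padding k // 2 keeps H and W unchanged
        self.pool = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)
        # Identity + three pooled maps are concatenated, as in SPPBottleneck
        self.conv2 = conv_bn_act(hidden_channels * 4, out_channels, activation)

    def forward(self, x):
        x = self.conv1(x)
        y1 = self.pool(x)   # 5x5 window (with k = 5)
        y2 = self.pool(y1)  # same result as a 9x9 pool
        y3 = self.pool(y2)  # same result as a 13x13 pool
        return self.conv2(torch.cat([x, y1, y2, y3], dim=1))


if __name__ == "__main__":
    x = torch.randn(1, 8, 20, 20)
    p5 = nn.MaxPool2d(5, stride=1, padding=2)
    print(torch.equal(p5(p5(x)), nn.MaxPool2d(9, stride=1, padding=4)(x)))
    print(torch.equal(p5(p5(p5(x))), nn.MaxPool2d(13, stride=1, padding=6)(x)))
    print(SPPF(64, 64)(torch.randn(2, 64, 20, 20)).shape)
```

The two `torch.equal` checks confirm the equivalence on a random tensor. Since the serial version reuses already-pooled maps instead of running three large pools, it is usually described as the faster of the two.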


4.4 Overall CSPDarknet structure

Implementation:

```python
from torch import nn
# BaseConv, DWConv, CSPLayer and SPPBottleneck are defined in Sections 2 and 3

#--------------------------------------------------#
#   CSPDarknet
#--------------------------------------------------#
class CSPDarknet(nn.Module):
    def __init__(self, dep_mul, wid_mul, out_features=("dark3", "dark4", "dark5"), depthwise=False, act="lrelu"):
        super().__init__()
        assert out_features, "please provide output features of Darknet"
        self.out_features = out_features
        Conv = DWConv if depthwise else BaseConv
        #-----------------------------------------------#
        #   The input image is 640, 640, 3
        #   The base number of channels is 64 (wid_mul = 1)
        #-----------------------------------------------#
        base_channels = int(wid_mul * 64)  # 64
        base_depth = max(round(dep_mul * 3), 1)  # 3
        #-----------------------------------------------#
        #   Feature extraction with a 6x6 stride-2 convolution
        #   640, 640, 3 -> 320, 320, 64
        #-----------------------------------------------#
        self.stem = Conv(3, base_channels, 6, 2, act=act)
        #-----------------------------------------------#
        #   After the conv:     320, 320, 64 -> 160, 160, 128
        #   After the CSPLayer: 160, 160, 128 -> 160, 160, 128
        #-----------------------------------------------#
        self.dark2 = nn.Sequential(
            Conv(base_channels, base_channels * 2, 3, 2, act=act),
            CSPLayer(base_channels * 2, base_channels * 2, n=base_depth, depthwise=depthwise, act=act),
        )
        #-----------------------------------------------#
        #   After the conv:     160, 160, 128 -> 80, 80, 256
        #   After the CSPLayer: 80, 80, 256 -> 80, 80, 256
        #-----------------------------------------------#
        self.dark3 = nn.Sequential(
            Conv(base_channels * 2, base_channels * 4, 3, 2, act=act),
            CSPLayer(base_channels * 4, base_channels * 4, n=base_depth * 3, depthwise=depthwise, act=act),
        )
        #-----------------------------------------------#
        #   After the conv:     80, 80, 256 -> 40, 40, 512
        #   After the CSPLayer: 40, 40, 512 -> 40, 40, 512
        #-----------------------------------------------#
        self.dark4 = nn.Sequential(
            Conv(base_channels * 4, base_channels * 8, 3, 2, act=act),
            CSPLayer(base_channels * 8, base_channels * 8, n=base_depth * 3, depthwise=depthwise, act=act),
        )
        #-----------------------------------------------#
        #   After the conv:     40, 40, 512 -> 20, 20, 1024
        #   After the SPP:      20, 20, 1024 -> 20, 20, 1024
        #   After the CSPLayer: 20, 20, 1024 -> 20, 20, 1024
        #-----------------------------------------------#
        self.dark5 = nn.Sequential(
            Conv(base_channels * 8, base_channels * 16, 3, 2, act=act),
            SPPBottleneck(base_channels * 16, base_channels * 16, activation=act),
            # SPPF(base_channels * 16, base_channels * 16, activation=act),
            CSPLayer(base_channels * 16, base_channels * 16, n=base_depth, shortcut=False, depthwise=depthwise, act=act),
        )

    def forward(self, x):
        outputs = {}
        x = self.stem(x)
        outputs["stem"] = x
        x = self.dark2(x)
        outputs["dark2"] = x
        #-----------------------------------------------#
        #   dark3 outputs 80, 80, 256: one of the output feature maps
        #-----------------------------------------------#
        x = self.dark3(x)
        outputs["dark3"] = x
        #-----------------------------------------------#
        #   dark4 outputs 40, 40, 512: one of the output feature maps
        #-----------------------------------------------#
        x = self.dark4(x)
        outputs["dark4"] = x
        #-----------------------------------------------#
        #   dark5 outputs 20, 20, 1024: one of the output feature maps
        #-----------------------------------------------#
        x = self.dark5(x)
        outputs["dark5"] = x
        return [v for k, v in outputs.items() if k in self.out_features]
```
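Each stage begins with a stride-2 convolution, and the CSPLayer and SPPBottleneck that follow keep height, width and channel count unchanged, so the output shapes can be traced with integer arithmetic alone. A sketch, assuming a square input and BaseConv's padding rule; the helper names are hypothetical:

```python
def conv_out_size(size, kernel, stride):
    padding = (kernel - 1) // 2  # BaseConv's padding rule
    return (size + 2 * padding - kernel) // stride + 1


def trace_cspdarknet(input_size=640, wid_mul=1.0):
    """Return (name, H, W, C) after each stage of CSPDarknet for a square input."""
    base = int(wid_mul * 64)
    stages = [("stem", 6, base), ("dark2", 3, base * 2), ("dark3", 3, base * 4),
              ("dark4", 3, base * 8), ("dark5", 3, base * 16)]
    size, shapes = input_size, []
    for name, kernel, channels in stages:
        size = conv_out_size(size, kernel, stride=2)  # every stage starts with a stride-2 conv
        shapes.append((name, size, size, channels))   # CSPLayer / SPP keep H, W and C
    return shapes


if __name__ == "__main__":
    for row in trace_cspdarknet(640, wid_mul=1.0):
        print(row)
```

For a 640×640 input this reproduces the shape comments above. dark3, dark4 and dark5 sit at 1/8, 1/16 and 1/32 of the input resolution, and these are the three feature maps selected by the default `out_features`.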


5. Full code

```python
#!/usr/bin/env python3
# -*- coding:utf-8 -*-
# Copyright (c) Megvii, Inc. and its affiliates.

import torch
from torch import nn
from torchsummary import summary  # third-party package: pip install torchsummary

#--------------------------------------------------#
#   Activation function
#--------------------------------------------------#
def get_activation(name="lrelu", inplace=True):
    if name == "relu":
        module = nn.ReLU(inplace=inplace)
    elif name == "lrelu":
        module = nn.LeakyReLU(0.1, inplace=inplace)
    else:
        raise AttributeError("Unsupported act type: {}".format(name))
    return module

#--------------------------------------------------#
#   BaseConv (CBL: Conv + BN + LeakyReLU)
#--------------------------------------------------#
class BaseConv(nn.Module):
    def __init__(self, in_channels, out_channels, ksize, stride, groups=1, bias=False, act="lrelu"):
        super().__init__()
        pad = (ksize - 1) // 2
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size=ksize, stride=stride, padding=pad, groups=groups, bias=bias)
        self.bn = nn.BatchNorm2d(out_channels, eps=0.001, momentum=0.03)
        self.act = get_activation(act, inplace=True)

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        # Used after the BatchNorm has been fused into the convolution weights
        return self.act(self.conv(x))

#--------------------------------------------------#
#   DWConv: depthwise conv followed by a pointwise 1x1 conv
#--------------------------------------------------#
class DWConv(nn.Module):
    def __init__(self, in_channels, out_channels, ksize, stride=1, act="lrelu"):
        super().__init__()
        self.dconv = BaseConv(in_channels, in_channels, ksize=ksize, stride=stride, groups=in_channels, act=act)
        self.pconv = BaseConv(in_channels, out_channels, ksize=1, stride=1, groups=1, act=act)

    def forward(self, x):
        x = self.dconv(x)
        return self.pconv(x)

#--------------------------------------------------#
#   SPP
#--------------------------------------------------#
class SPPBottleneck(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_sizes=(5, 9, 13), activation="lrelu"):
        super().__init__()
        hidden_channels = in_channels // 2
        self.conv1 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=activation)
        self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=ks, stride=1, padding=ks // 2) for ks in kernel_sizes])
        conv2_channels = hidden_channels * (len(kernel_sizes) + 1)
        self.conv2 = BaseConv(conv2_channels, out_channels, 1, stride=1, act=activation)

    def forward(self, x):
        x = self.conv1(x)
        x = torch.cat([x] + [m(x) for m in self.m], dim=1)
        x = self.conv2(x)
        return x

#--------------------------------------------------#
#   Small residual structure: Bottleneck
#--------------------------------------------------#
class Bottleneck(nn.Module):
    # Standard bottleneck
    def __init__(self, in_channels, out_channels, shortcut=True, expansion=0.5, depthwise=False, act="lrelu"):
        super().__init__()
        hidden_channels = int(out_channels * expansion)
        Conv = DWConv if depthwise else BaseConv
        # 1x1 conv reduces the number of channels (usually by 50%)
        self.conv1 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=act)
        # 3x3 conv expands the channels again and extracts features
        self.conv2 = Conv(hidden_channels, out_channels, 3, stride=1, act=act)
        self.use_add = shortcut and in_channels == out_channels

    def forward(self, x):
        y = self.conv2(self.conv1(x))
        if self.use_add:
            y = y + x
        return y

#--------------------------------------------------#
#   CSP
#--------------------------------------------------#
class CSPLayer(nn.Module):
    def __init__(self, in_channels, out_channels, n=1, shortcut=True, expansion=0.5, depthwise=False, act="lrelu"):
        # ch_in, ch_out, number of Bottlenecks, shortcut, expansion, depthwise, activation
        super().__init__()
        hidden_channels = int(out_channels * expansion)
        # First convolution of the main branch
        self.conv1 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=act)
        # First convolution of the large residual edge
        self.conv2 = BaseConv(in_channels, hidden_channels, 1, stride=1, act=act)
        # Convolution applied to the concatenated result
        self.conv3 = BaseConv(2 * hidden_channels, out_channels, 1, stride=1, act=act)
        # Stack n Bottleneck residual blocks
        module_list = [Bottleneck(hidden_channels, hidden_channels, shortcut, 1.0, depthwise, act=act) for _ in range(n)]
        self.m = nn.Sequential(*module_list)

    def forward(self, x):
        x_1 = self.conv1(x)             # main branch
        x_2 = self.conv2(x)             # large residual edge
        x_1 = self.m(x_1)               # stacked Bottlenecks on the main branch
        x = torch.cat((x_1, x_2), 1)    # concatenate along the channels
        return self.conv3(x)            # fuse with a 1x1 convolution

#--------------------------------------------------#
#   CSPDarknet
#--------------------------------------------------#
class CSPDarknet(nn.Module):
    def __init__(self, dep_mul, wid_mul, out_features=("dark3", "dark4", "dark5"), depthwise=False, act="lrelu"):
        super().__init__()
        assert out_features, "please provide output features of Darknet"
        self.out_features = out_features
        Conv = DWConv if depthwise else BaseConv
        # Input image 640, 640, 3; base channels 64 and base depth 3 when wid_mul = dep_mul = 1
        base_channels = int(wid_mul * 64)
        base_depth = max(round(dep_mul * 3), 1)
        # 640, 640, 3 -> 320, 320, 64
        self.stem = Conv(3, base_channels, 6, 2, act=act)
        # Conv: 320, 320, 64 -> 160, 160, 128; CSPLayer keeps 160, 160, 128
        self.dark2 = nn.Sequential(
            Conv(base_channels, base_channels * 2, 3, 2, act=act),
            CSPLayer(base_channels * 2, base_channels * 2, n=base_depth, depthwise=depthwise, act=act),
        )
        # Conv: 160, 160, 128 -> 80, 80, 256; CSPLayer keeps 80, 80, 256
        self.dark3 = nn.Sequential(
            Conv(base_channels * 2, base_channels * 4, 3, 2, act=act),
            CSPLayer(base_channels * 4, base_channels * 4, n=base_depth * 3, depthwise=depthwise, act=act),
        )
        # Conv: 80, 80, 256 -> 40, 40, 512; CSPLayer keeps 40, 40, 512
        self.dark4 = nn.Sequential(
            Conv(base_channels * 4, base_channels * 8, 3, 2, act=act),
            CSPLayer(base_channels * 8, base_channels * 8, n=base_depth * 3, depthwise=depthwise, act=act),
        )
        # Conv: 40, 40, 512 -> 20, 20, 1024; SPP and CSPLayer keep 20, 20, 1024
        self.dark5 = nn.Sequential(
            Conv(base_channels * 8, base_channels * 16, 3, 2, act=act),
            SPPBottleneck(base_channels * 16, base_channels * 16, activation=act),
            # SPPF(base_channels * 16, base_channels * 16, activation=act),
            CSPLayer(base_channels * 16, base_channels * 16, n=base_depth, shortcut=False, depthwise=depthwise, act=act),
        )

    def forward(self, x):
        outputs = {}
        x = self.stem(x)
        outputs["stem"] = x
        x = self.dark2(x)
        outputs["dark2"] = x
        x = self.dark3(x)      # 80, 80, 256: output feature map
        outputs["dark3"] = x
        x = self.dark4(x)      # 40, 40, 512: output feature map
        outputs["dark4"] = x
        x = self.dark5(x)      # 20, 20, 1024: output feature map
        outputs["dark5"] = x
        return [v for k, v in outputs.items() if k in self.out_features]

if __name__ == '__main__':
    # dep_mul = 0.33 and wid_mul = 0.50 reproduce the summary shown below
    dep_mul = 0.33
    wid_mul = 0.50
    net = CSPDarknet(dep_mul, wid_mul, out_features=("dark3", "dark4", "dark5"), depthwise=False, act="lrelu")
    summary(net, input_size=(3, 320, 320), batch_size=2, device="cpu")
```


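Running the script prints the complete layer-by-layer structure of CSPDarknet. The output below corresponds to a depth multiplier of 0.33, a width multiplier of 0.50 (the stem outputs 32 channels), a 320×320 input and a batch size of 2: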

```text
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1          [2, 32, 160, 160]           3,456
       BatchNorm2d-2          [2, 32, 160, 160]              64
         LeakyReLU-3          [2, 32, 160, 160]               0
          BaseConv-4          [2, 32, 160, 160]               0
            Conv2d-5            [2, 64, 80, 80]          18,432
       BatchNorm2d-6            [2, 64, 80, 80]             128
         LeakyReLU-7            [2, 64, 80, 80]               0
          BaseConv-8            [2, 64, 80, 80]               0
            Conv2d-9            [2, 32, 80, 80]           2,048
      BatchNorm2d-10            [2, 32, 80, 80]              64
        LeakyReLU-11            [2, 32, 80, 80]               0
         BaseConv-12            [2, 32, 80, 80]               0
           Conv2d-13            [2, 32, 80, 80]           2,048
      BatchNorm2d-14            [2, 32, 80, 80]              64
        LeakyReLU-15            [2, 32, 80, 80]               0
         BaseConv-16            [2, 32, 80, 80]               0
           Conv2d-17            [2, 32, 80, 80]           1,024
      BatchNorm2d-18            [2, 32, 80, 80]              64
        LeakyReLU-19            [2, 32, 80, 80]               0
         BaseConv-20            [2, 32, 80, 80]               0
           Conv2d-21            [2, 32, 80, 80]           9,216
      BatchNorm2d-22            [2, 32, 80, 80]              64
        LeakyReLU-23            [2, 32, 80, 80]               0
         BaseConv-24            [2, 32, 80, 80]               0
       Bottleneck-25            [2, 32, 80, 80]               0
           Conv2d-26            [2, 64, 80, 80]           4,096
      BatchNorm2d-27            [2, 64, 80, 80]             128
        LeakyReLU-28            [2, 64, 80, 80]               0
         BaseConv-29            [2, 64, 80, 80]               0
         CSPLayer-30            [2, 64, 80, 80]               0
           Conv2d-31           [2, 128, 40, 40]          73,728
      BatchNorm2d-32           [2, 128, 40, 40]             256
        LeakyReLU-33           [2, 128, 40, 40]               0
         BaseConv-34           [2, 128, 40, 40]               0
           Conv2d-35            [2, 64, 40, 40]           8,192
      BatchNorm2d-36            [2, 64, 40, 40]             128
        LeakyReLU-37            [2, 64, 40, 40]               0
         BaseConv-38            [2, 64, 40, 40]               0
           Conv2d-39            [2, 64, 40, 40]           8,192
      BatchNorm2d-40            [2, 64, 40, 40]             128
        LeakyReLU-41            [2, 64, 40, 40]               0
         BaseConv-42            [2, 64, 40, 40]               0
           Conv2d-43            [2, 64, 40, 40]           4,096
      BatchNorm2d-44            [2, 64, 40, 40]             128
        LeakyReLU-45            [2, 64, 40, 40]               0
         BaseConv-46            [2, 64, 40, 40]               0
           Conv2d-47            [2, 64, 40, 40]          36,864
      BatchNorm2d-48            [2, 64, 40, 40]             128
        LeakyReLU-49            [2, 64, 40, 40]               0
         BaseConv-50            [2, 64, 40, 40]               0
       Bottleneck-51            [2, 64, 40, 40]               0
           Conv2d-52            [2, 64, 40, 40]           4,096
      BatchNorm2d-53            [2, 64, 40, 40]             128
        LeakyReLU-54            [2, 64, 40, 40]               0
         BaseConv-55            [2, 64, 40, 40]               0
           Conv2d-56            [2, 64, 40, 40]          36,864
      BatchNorm2d-57            [2, 64, 40, 40]             128
        LeakyReLU-58            [2, 64, 40, 40]               0
         BaseConv-59            [2, 64, 40, 40]               0
       Bottleneck-60            [2, 64, 40, 40]               0
           Conv2d-61            [2, 64, 40, 40]           4,096
      BatchNorm2d-62            [2, 64, 40, 40]             128
        LeakyReLU-63            [2, 64, 40, 40]               0
         BaseConv-64            [2, 64, 40, 40]               0
           Conv2d-65            [2, 64, 40, 40]          36,864
      BatchNorm2d-66            [2, 64, 40, 40]             128
        LeakyReLU-67            [2, 64, 40, 40]               0
         BaseConv-68            [2, 64, 40, 40]               0
       Bottleneck-69            [2, 64, 40, 40]               0
           Conv2d-70           [2, 128, 40, 40]          16,384
      BatchNorm2d-71           [2, 128, 40, 40]             256
        LeakyReLU-72           [2, 128, 40, 40]               0
         BaseConv-73           [2, 128, 40, 40]               0
         CSPLayer-74           [2, 128, 40, 40]               0
           Conv2d-75           [2, 256, 20, 20]         294,912
      BatchNorm2d-76           [2, 256, 20, 20]             512
        LeakyReLU-77           [2, 256, 20, 20]               0
         BaseConv-78           [2, 256, 20, 20]               0
           Conv2d-79           [2, 128, 20, 20]          32,768
      BatchNorm2d-80           [2, 128, 20, 20]             256
        LeakyReLU-81           [2, 128, 20, 20]               0
         BaseConv-82           [2, 128, 20, 20]               0
           Conv2d-83           [2, 128, 20, 20]          32,768
      BatchNorm2d-84           [2, 128, 20, 20]             256
        LeakyReLU-85           [2, 128, 20, 20]               0
         BaseConv-86           [2, 128, 20, 20]               0
           Conv2d-87           [2, 128, 20, 20]          16,384
      BatchNorm2d-88           [2, 128, 20, 20]             256
        LeakyReLU-89           [2, 128, 20, 20]               0
         BaseConv-90           [2, 128, 20, 20]               0
           Conv2d-91           [2, 128, 20, 20]         147,456
      BatchNorm2d-92           [2, 128, 20, 20]             256
        LeakyReLU-93           [2, 128, 20, 20]               0
         BaseConv-94           [2, 128, 20, 20]               0
       Bottleneck-95           [2, 128, 20, 20]               0
           Conv2d-96           [2, 128, 20, 20]          16,384
      BatchNorm2d-97           [2, 128, 20, 20]             256
        LeakyReLU-98           [2, 128, 20, 20]               0
         BaseConv-99           [2, 128, 20, 20]               0
          Conv2d-100           [2, 128, 20, 20]         147,456
     BatchNorm2d-101           [2, 128, 20, 20]             256
       LeakyReLU-102           [2, 128, 20, 20]               0
        BaseConv-103           [2, 128, 20, 20]               0
      Bottleneck-104           [2, 128, 20, 20]               0
          Conv2d-105           [2, 128, 20, 20]          16,384
     BatchNorm2d-106           [2, 128, 20, 20]             256
       LeakyReLU-107           [2, 128, 20, 20]               0
        BaseConv-108           [2, 128, 20, 20]               0
          Conv2d-109           [2, 128, 20, 20]         147,456
     BatchNorm2d-110           [2, 128, 20, 20]             256
       LeakyReLU-111           [2, 128, 20, 20]               0
        BaseConv-112           [2, 128, 20, 20]               0
      Bottleneck-113           [2, 128, 20, 20]               0
          Conv2d-114           [2, 256, 20, 20]          65,536
     BatchNorm2d-115           [2, 256, 20, 20]             512
       LeakyReLU-116           [2, 256, 20, 20]               0
        BaseConv-117           [2, 256, 20, 20]               0
        CSPLayer-118           [2, 256, 20, 20]               0
          Conv2d-119           [2, 512, 10, 10]       1,179,648
     BatchNorm2d-120           [2, 512, 10, 10]           1,024
       LeakyReLU-121           [2, 512, 10, 10]               0
        BaseConv-122           [2, 512, 10, 10]               0
          Conv2d-123           [2, 256, 10, 10]         131,072
     BatchNorm2d-124           [2, 256, 10, 10]             512
       LeakyReLU-125           [2, 256, 10, 10]               0
        BaseConv-126           [2, 256, 10, 10]               0
       MaxPool2d-127           [2, 256, 10, 10]               0
       MaxPool2d-128           [2, 256, 10, 10]               0
       MaxPool2d-129           [2, 256, 10, 10]               0
          Conv2d-130           [2, 512, 10, 10]         524,288
     BatchNorm2d-131           [2, 512, 10, 10]           1,024
       LeakyReLU-132           [2, 512, 10, 10]               0
        BaseConv-133           [2, 512, 10, 10]               0
   SPPBottleneck-134           [2, 512, 10, 10]               0
          Conv2d-135           [2, 256, 10, 10]         131,072
     BatchNorm2d-136           [2, 256, 10, 10]             512
       LeakyReLU-137           [2, 256, 10, 10]               0
        BaseConv-138           [2, 256, 10, 10]               0
          Conv2d-139           [2, 256, 10, 10]         131,072
     BatchNorm2d-140           [2, 256, 10, 10]             512
       LeakyReLU-141           [2, 256, 10, 10]               0
        BaseConv-142           [2, 256, 10, 10]               0
          Conv2d-143           [2, 256, 10, 10]          65,536
     BatchNorm2d-144           [2, 256, 10, 10]             512
       LeakyReLU-145           [2, 256, 10, 10]               0
        BaseConv-146           [2, 256, 10, 10]               0
          Conv2d-147           [2, 256, 10, 10]         589,824
     BatchNorm2d-148           [2, 256, 10, 10]             512
       LeakyReLU-149           [2, 256, 10, 10]               0
        BaseConv-150           [2, 256, 10, 10]               0
      Bottleneck-151           [2, 256, 10, 10]               0
          Conv2d-152           [2, 512, 10, 10]         262,144
     BatchNorm2d-153           [2, 512, 10, 10]           1,024
       LeakyReLU-154           [2, 512, 10, 10]               0
        BaseConv-155           [2, 512, 10, 10]               0
        CSPLayer-156           [2, 512, 10, 10]               0
================================================================
Total params: 4,212,672
Trainable params: 4,212,672
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 2.34
Forward/backward pass size (MB): 303.91
Params size (MB): 16.07
Estimated Total Size (MB): 322.32
----------------------------------------------------------------
```
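The total parameter count in the summary can be reproduced by hand from the layer definitions. The sketch below assumes `depthwise=False`, bias-free convolutions followed by `BatchNorm2d` (two learnable parameters per channel) and the configuration used for the summary (dep_mul = 0.33, wid_mul = 0.50); the function names are hypothetical. The `shortcut` flag does not matter here because the residual add has no parameters.

```python
def cbl(in_ch, out_ch, k):
    """Parameters of BaseConv: bias-free Conv2d + BatchNorm2d (weight and bias)."""
    return k * k * in_ch * out_ch + 2 * out_ch


def csp(in_ch, out_ch, n):
    h = out_ch // 2                                   # expansion = 0.5
    bottleneck = cbl(h, h, 1) + cbl(h, h, 3)          # Bottleneck with expansion = 1.0
    return cbl(in_ch, h, 1) + cbl(in_ch, h, 1) + n * bottleneck + cbl(2 * h, out_ch, 1)


def spp(in_ch, out_ch, num_pools=3):
    h = in_ch // 2
    return cbl(in_ch, h, 1) + cbl(h * (num_pools + 1), out_ch, 1)  # pooling has no parameters


def cspdarknet_params(dep_mul, wid_mul):
    c = int(wid_mul * 64)
    d = max(round(dep_mul * 3), 1)
    total = cbl(3, c, 6)                                                          # stem
    total += cbl(c, 2 * c, 3) + csp(2 * c, 2 * c, d)                              # dark2
    total += cbl(2 * c, 4 * c, 3) + csp(4 * c, 4 * c, 3 * d)                      # dark3
    total += cbl(4 * c, 8 * c, 3) + csp(8 * c, 8 * c, 3 * d)                      # dark4
    total += cbl(8 * c, 16 * c, 3) + spp(16 * c, 16 * c) + csp(16 * c, 16 * c, d)  # dark5
    return total


if __name__ == "__main__":
    n = cspdarknet_params(0.33, 0.5)
    print(f"{n:,} parameters, {n * 4 / 1024 ** 2:.2f} MB as float32")
```

`cspdarknet_params(0.33, 0.5)` evaluates to 4,212,672, matching "Total params", and 4,212,672 × 4 bytes ≈ 16.07 MB matches "Params size" for float32 weights.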
