热门标签

热门文章

- 1【Opencv项目实战】背景替换:帮你的证件照换个背景色_backgroundremover

- 2”雷云嘴中出低吟声

- 3我的一些学习经验:WIFI_hostapd 提高无线传输效率

- 4CA位置注意力机制_ca注意力机制

- 5MMSegmentation的用法(手把手入门教程)搭配Colab,对自己的数据进行训练_mmsegmentation miou其他类为0

- 6failed to update store for object type *libnetwork.endpointCnt: Key not found in store

- 7ArrayList和LinkedList_arraylist向第一个位置插入元素

- 8真实评测:华为mate30epro和mate30的区别-哪个好_mate30对比mate30epro

- 9安装和使用pyltp

- 10Android 应用启动过程

当前位置: article > 正文

python爬虫返回百度安全验证_python爬虫百度安全验证

作者:花生_TL007 | 2024-04-10 10:24:06

赞

踩

python爬虫百度安全验证

我一开始用的是requests库,header加了accept和user-agent,这是一开始的代码:

- import requests

-

- headers = {

- 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

- 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.0.0 Safari/537.36',

- }

-

- url = "https://www.baidu.com/s?wd=python"

- response = requests.get(url, headers=headers)

- response.encoding = 'utf-8'

- print(response.text)

返回结果:

然后我用urllib.request试了一下

- import urllib.request

-

- headers = {

- 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.97 Safari/537.36 Edg/83.0.478.50',

- 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9'

- }

-

- url = "https://www.baidu.com/s?wd=python"

-

- req = urllib.request.Request(url=url, headers=headers)

- html = urllib.request.urlopen(req).read().decode('UTF-8')

-

- # with open(r'new.html', mode='w',encoding='utf-8') as f:

- # f.write(html)

- print(html)

-

- # with open('new.html', mode='rb') as f:

- # #f.read()

- # html = f.read().decode("utf-8")

- # soup = BeautifulSoup(html, 'html.parser')

- # bs = soup.select('#content_left')

- # print(bs)

- # f.close()

就可以返回了...

大概因为requests是第三方库比较容易被识别?哪位大神知道可以在评论区告诉我一下怎么用requests绕过吗(〃'▽'〃)

再一个问题,爬虫是不是也跟浏览器的版本有关系?我和上面这个header的区别也就是浏览器版本不一样吧

-

- #这个是我自己浏览器的header,不管是requests还是urllib.request都是拿不到的

- headers = {

- 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

- 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.0.0 Safari/537.36',

- # }

ps:如果请求参数想加中文的话,可以用quote

- from urllib.parse import quote

- text = quote("从万米高空降临", 'utf-8') #把中文进行url编码

- url = "https://www.baidu.com/s?wd="+ text

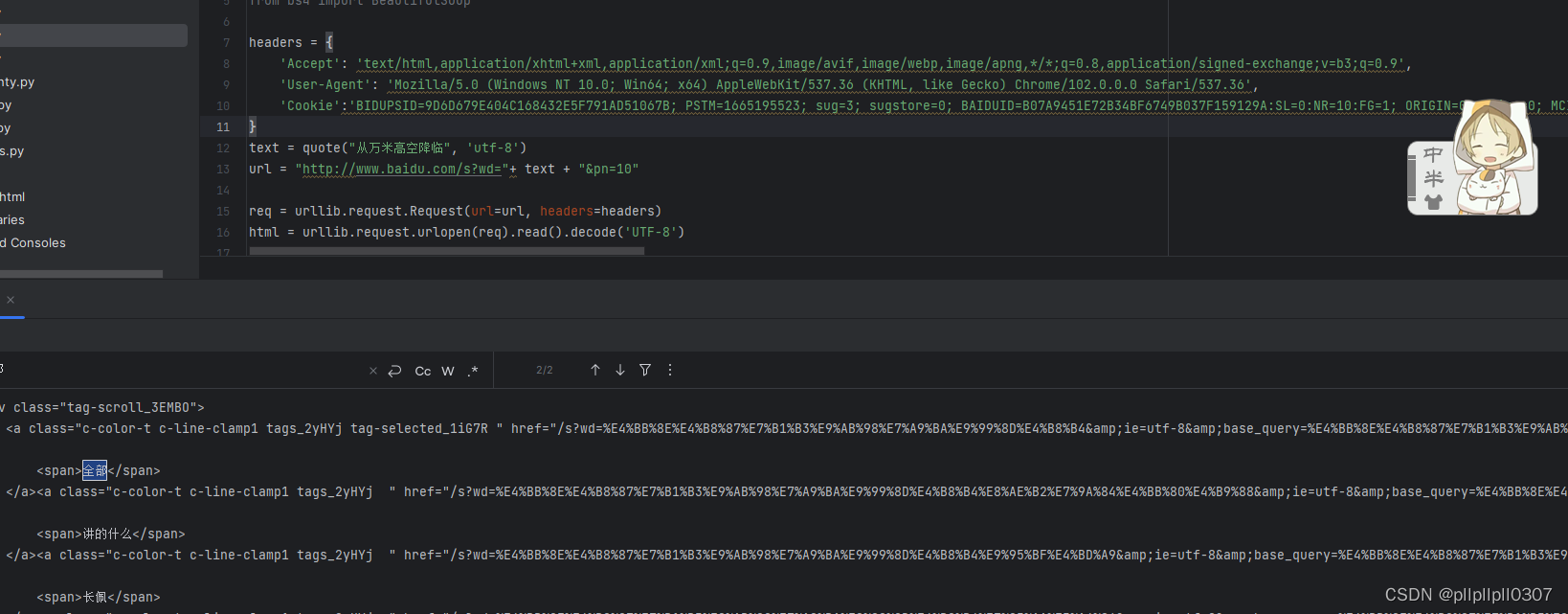

===更新==============2023.07.02=====================

感谢评论区友友的提示!我这边尝试了一下直接换http协议,不行;想起了之前我试过的headers里面加Cookie,用http协议请求成功(这个我直接用的高版本chrome的User-Agent)~~

实现如下:

urllib.request同理,headers里面加Cookie,用http请求,User-Agent高版本同样可行。如图:

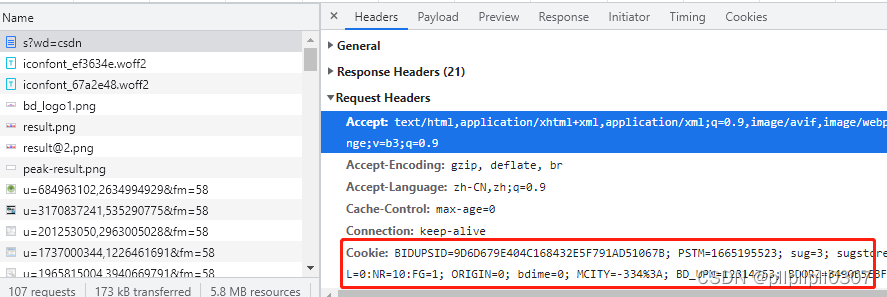

Cookie是浏览器f12随手拿的:

之前查过很多文章都说加cookie就可以了,但是我怎么试都不行,没想到换个http协议就ok了,果然我还是学的太浅了

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/花生_TL007/article/detail/398134

推荐阅读

相关标签