
[Paper Notes] Pai-megatron Qwen1.5-14B-CT continued pretraining: pitfalls log

Author: 花生_TL007 | 2024-04-21 15:14:38


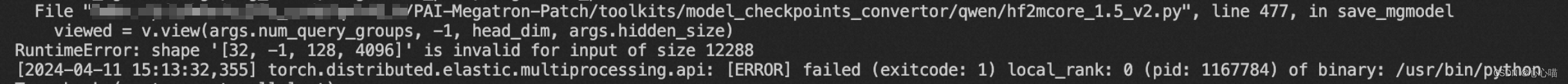

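1. Model weight conversion fails in hf2mcore_1.5_v2.py

The error is raised in:

/mnt/cpfs/kexin/dlc_code/qwen1.5/PAI-Megatron-Patch/toolkits/model_checkpoints_convertor/qwen/hf2mcore_1.5_v2.py

The corrected replacement is shown below. Line 477 was changed to drop the args.hidden_size dimension, so the conversion also works when tp > 1: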

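    elif 'linear_qkv.bias' in k and 'norm' not in k:
        # original (raw): the bias is 1-D and has no hidden_size dimension
        # viewed = v.view(args.num_query_groups, -1, head_dim, args.hidden_size)
        # changed: view the bias per query group without hidden_size
        viewed = v.view(args.num_query_groups, -1, head_dim)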
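To see why the hidden_size dimension has to go for the bias, it helps to count elements. The sketch below is not part of the conversion script: `infer_view_shape` is a hypothetical pure-Python helper that imitates how a tensor view resolves a single -1, and the sizes (hidden_size 5120, 40 heads, 40 query groups) are illustrative values assumed for a 14B-class model rather than read from the actual checkpoint.

```python
from math import prod


def infer_view_shape(numel, shape):
    """Hypothetical helper: resolve a single -1 in `shape` for a tensor with
    `numel` elements, roughly as a tensor view would, or raise ValueError."""
    if shape.count(-1) > 1:
        raise ValueError("only one dimension can be inferred")
    known = prod(d for d in shape if d != -1)
    if -1 in shape:
        if known == 0 or numel % known != 0:
            raise ValueError(f"shape {shape} is invalid for input of size {numel}")
        return tuple(numel // known if d == -1 else d for d in shape)
    if known != numel:
        raise ValueError(f"shape {shape} is invalid for input of size {numel}")
    return tuple(shape)


# Illustrative sizes (assumptions, not taken from the real config).
hidden_size = 5120
num_heads = 40
num_query_groups = 40
head_dim = hidden_size // num_heads

# Fused QKV: the weight is 2-D (rows x hidden_size), the bias is 1-D (rows).
qkv_rows = (num_heads + 2 * num_query_groups) * head_dim
weight_numel = qkv_rows * hidden_size
bias_numel = qkv_rows

# The weight really has a hidden_size axis, so a 4-D view is valid.
weight_shape = infer_view_shape(
    weight_numel, (num_query_groups, -1, head_dim, hidden_size)
)

# The bias only supports the 3-D view used in the fix.
bias_shape = infer_view_shape(bias_numel, (num_query_groups, -1, head_dim))

# Applying the weight's 4-D view to the bias cannot work.
try:
    infer_view_shape(bias_numel, (num_query_groups, -1, head_dim, hidden_size))
    bad_view_error = None
except ValueError as exc:
    bad_view_error = str(exc)

print(weight_shape, bias_shape, bad_view_error)
```

Under these assumed sizes the bias has far fewer elements than a view with a trailing hidden_size axis would need, which matches the fix of viewing it only as (num_query_groups, -1, head_dim).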

Replace the file with:

    import os
    import re
    import json
    import torch
    import transformers
    import torch.nn as nn
    from functools import partial
    from collections import defaultdict
    from transformers import (
        AutoConfig,
        AutoModelForCausalLM,
        AutoTokenizer,
    )
    from transformers.models.mixtral

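Disclaimer: This article was contributed by a user and does not represent the position of 【wpsshop博客】. Copyright belongs to the original author, and this site assumes no legal liability. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/花生_TL007/article/detail/463691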
