
java.sql.SQLException: No suitable driver -- loading MySQL data from spark-shell fails, unresolved (but the Properties approach works)

Author: 花生_TL007 | 2024-05-19 14:45:56


Reading data in Spark with the jdbc format

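Copy the driver jar into Spark's jars directory.

Pay attention to whether Spark runs on a single node or as a cluster:

on a cluster, the jar must be copied to every node.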

There are several ways to load the jar:

1. Add --jars when starting spark-shell

```shell
[root@hadoop01 spark-2.2.0-bin-hadoop2.7]# bin/spark-shell --jars mysql-connector-java-5.1.7-bin.jar --driver-class-path mysql-connector-java-5.1.7-bin.jar
```

The paths must be written in full:

```shell
bin/spark-shell --jars /usr/local/spark-2.2.0-bin-hadoop2.7/mysql-connector-java-5.1.7-bin.jar --driver-class-path /usr/local/spark-2.2.0-bin-hadoop2.7/mysql-connector-java-5.1.7-bin.jar
```
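To check whether a launch like this actually made the driver class visible, you can try loading the class by name from inside spark-shell. The helper below is a minimal sketch. `DriverCheck` is a hypothetical name and not part of Spark. It assumes the jars added at launch are reachable through the thread context class loader. `com.mysql.jdbc.Driver` is the class name used throughout this post.

```scala
import scala.util.Try

object DriverCheck {
  // Returns true if `className` can be loaded (without running its static initializer)
  // through `loader`. Defaults to the thread context class loader, assumed here to be
  // the loader that sees jars added via --jars / --driver-class-path.
  def driverOnClasspath(
      className: String,
      loader: ClassLoader = Thread.currentThread().getContextClassLoader()): Boolean =
    Try(Class.forName(className, false, loader)).isSuccess
}
```

`DriverCheck.driverOnClasspath("com.mysql.jdbc.Driver")` only checks the driver process. Executors on other nodes load classes separately. An expression such as `sc.parallelize(1 to 4, 4).map(_ => scala.util.Try(Class.forName("com.mysql.jdbc.Driver", false, Thread.currentThread().getContextClassLoader())).isSuccess).collect()` runs the same check inside tasks. However, Spark does not guarantee that those tasks land on every node.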

2. Set the driver with option

```scala
val jdbcDF = spark.read.format("jdbc")
  .option("driver", "com.mysql.jdbc.Driver")
  // Note: MySQL JDBC URLs normally begin with "jdbc:mysql://"; this one has no colon after "mysql"
  .option("url", "jdbc:mysql//hadoop01:3306/test")
  .option("dbtable", "u")
  .option("user", "root")
  .option("password", "root")
  .load()
```

But in the end, none of this helped.

Running the following command fails:

```scala
scala> val jdbcDF = spark.read.format("jdbc").option("url", "jdbc:mysql//hadoop01:3306/test").option("dbtable", "u").option("user","root").option("password","root").load()
```

Error:

```text
java.sql.SQLException: No suitable driver
  at java.sql.DriverManager.getDriver(DriverManager.java:315)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions$$anonfun$7.apply(JDBCOptions.scala:84)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions$$anonfun$7.apply(JDBCOptions.scala:84)
  at scala.Option.getOrElse(Option.scala:121)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:83)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:34)
  at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:32)
  at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:306)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:178)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:146)
  ... 48 elided
```

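Then I copied the MySQL jar into Spark's jars directory.

But after restarting, I got the same error again.

With .option("driver","com.mysql.jdbc.Driver") the driver still could not be found, and this variant threw a NullPointerException outright. Time to try another approach: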

```scala
val jdbcDF = spark.read.format("jdbc")
  .option("driver", "com.mysql.jdbc.Driver")
  .option("url", "jdbc:mysql//hadoop01:3306/test")
  .option("dbtable", "u")
  .option("user", "root")
  .option("password", "root")
  .load()
```
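One plausible explanation that these commands do not rule out: the URL here is `jdbc:mysql//hadoop01:3306/test`, with no colon between `mysql` and `//`. The Properties call later in this post, which works, uses `jdbc:mysql://hadoop01:3306/test`. Without a `driver` option, `java.sql.DriverManager.getDriver` asks each registered driver whether it accepts the URL (`acceptsURL`) and throws `SQLException("No suitable driver")` when none does. The `Option.getOrElse` frame in the stack trace above is where Spark falls back to that lookup. With a `driver` option, Spark skips the lookup and calls the named driver's `connect`. The JDBC contract says `connect` returns null for a URL the driver does not handle, which would fit the NullPointerException mentioned above.

The sketch below reproduces both behaviours with a hypothetical stand-in driver, `PrefixCheckingDriver`. It accepts only URLs beginning with `jdbc:mysql://`, a simplification of the prefix check a real MySQL driver performs. It never contacts MySQL.

```scala
import java.sql.{Connection, Driver, DriverManager, DriverPropertyInfo, SQLException, SQLFeatureNotSupportedException}
import java.util.Properties
import java.util.logging.Logger

// Hypothetical stand-in driver: accepts only URLs starting with "jdbc:mysql://".
class PrefixCheckingDriver extends Driver {
  private val prefix = "jdbc:mysql://"

  override def acceptsURL(url: String): Boolean =
    url != null && url.startsWith(prefix)

  // JDBC contract: return null (rather than throw) for a URL this driver does not handle.
  // For accepted URLs this stand-in cannot open a real connection, so it throws instead.
  override def connect(url: String, info: Properties): Connection =
    if (!acceptsURL(url)) null
    else throw new SQLException(s"stand-in driver does not open real connections: $url")

  override def getPropertyInfo(url: String, info: Properties): Array[DriverPropertyInfo] =
    Array.empty[DriverPropertyInfo]
  override def getMajorVersion(): Int = 1
  override def getMinorVersion(): Int = 0
  override def jdbcCompliant(): Boolean = false
  override def getParentLogger(): Logger = throw new SQLFeatureNotSupportedException()
}

object NoSuitableDriverDemo {
  val badUrl  = "jdbc:mysql//hadoop01:3306/test"  // form used in the failing format("jdbc") calls
  val goodUrl = "jdbc:mysql://hadoop01:3306/test" // form used in the working Properties call

  private val driver = new PrefixCheckingDriver
  DriverManager.registerDriver(driver)

  // Roughly the no-"driver"-option path: DriverManager picks a driver by URL.
  def lookup(url: String): Either[String, String] =
    try Right(DriverManager.getDriver(url).getClass.getName)
    catch { case e: SQLException => Left(e.getMessage) }

  // Roughly the "driver"-option path: the named driver's connect is called directly.
  def connectDirectly(url: String): Option[Connection] =
    Option(driver.connect(url, new Properties()))
}
```

With this stand-in, `lookup(badUrl)` yields `Left("No suitable driver")`, the same message as in the trace, while `goodUrl` resolves to a driver. `connectDirectly(badUrl)` yields `None`, the null that Spark would then dereference.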

So on the second launch I used spark-shell --jars:

```shell
[root@hadoop01 spark-2.2.0-bin-hadoop2.7]# bin/spark-shell --jars mysql-connector-java-5.1.7-bin.jar
```

Error:

```text
java.io.FileNotFoundException: Jar /usr/local/spark-2.2.0-bin-hadoop2.7/mysql-connector-java-5.1.7-bin.jar not found
```

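It turned out that Spark was looking for the jar in the Spark root directory, not in the jars directory.

So I copied the MySQL driver jar into the Spark root directory as well.

Launching bin/spark-shell --jars mysql-connector-java-5.1.7-bin.jar again then succeeded.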
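A relative `--jars` path appears to be resolved against the directory spark-shell was started from, here the Spark root according to the shell prompt. It is not resolved against `jars/`. That matches the path in the FileNotFoundException above. The snippet below is a small sketch of a pre-launch check that uses only `java.nio.file` and rests on that assumption. `JarPath` is a hypothetical helper name.

```scala
import java.nio.file.{Files, Path, Paths}

object JarPath {
  // Resolves a path against the JVM's current working directory, the same base
  // a relative local --jars entry is assumed to be resolved against.
  def resolve(jar: String): Path = Paths.get(jar).toAbsolutePath().normalize()

  // Some(message) if the resolved jar is missing, None if it exists as a regular file.
  def check(jar: String): Option[String] = {
    val p = resolve(jar)
    if (Files.isRegularFile(p)) None else Some(s"Jar $p not found")
  }
}
```

Passing the absolute path from `JarPath.resolve` to `--jars` gives the full-path form used earlier in this post, and it no longer depends on where spark-shell is launched.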

Reading the data again, however, still failed with the no-driver error.

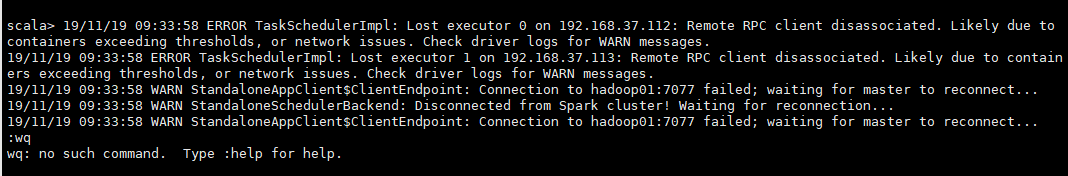

Finally, when shutting down Spark, I noticed the following.

Note that it refers to node 112, whereas I had started spark-shell on node 111:

```text
scala> 19/11/19 09:33:58 ERROR TaskSchedulerImpl: Lost executor 0 on 192.168.37.112: Remote RPC client disassociated. Likely due to containers exceeding thresholds, or network issues. Check driver logs for WARN messages.
```

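So:

I had started the Spark cluster and only then launched spark-shell, so what it connected to may not have been the Spark on this machine

but the Spark on other nodes,

and those other nodes had no MySQL driver.

That is why

it kept failing no matter what I did on node 111.

A nasty trap.

Even after fixing that, it still fails! Unresolved.

Of the two ways Spark SQL can read over JDBC, the first one fails no matter what I change, and I don't know what else to try: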

```scala
// Variant 1: with an explicit driver class
val jdbcDF = spark.read.format("jdbc").option("driver","com.mysql.jdbc.Driver").option("url", "jdbc:mysql//hadoop01:3306/test").option("dbtable", "u").option("user","root").option("password","root").load()

// Variant 2: without the driver option
val jdbcDF = spark.read.format("jdbc").option("url", "jdbc:mysql//hadoop01:3306/test").option("dbtable", "u").option("user","root").option("password","root").load()

// Variant 3: variant 2 split across lines
val jdbcDF = spark.read.format("jdbc")
  .option("url", "jdbc:mysql//hadoop01:3306/test")
  .option("dbtable", "u")
  .option("user", "root")
  .option("password", "root")
  .load()
```

But this Properties-based approach does work!

```scala
val connectionProperties = new java.util.Properties()
connectionProperties.put("user", "root")
connectionProperties.put("password", "root")
val jdbcDF2 = spark.read.jdbc("jdbc:mysql://hadoop01:3306/test", "u", connectionProperties)
```
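One difference between the working and failing calls shows up in the commands themselves. The Properties call uses `jdbc:mysql://hadoop01:3306/test`, while every failing `format("jdbc")` call uses `jdbc:mysql//hadoop01:3306/test`, which has no colon after `mysql`. Below is a hedged sketch of the options-based read with the URL written in the standard form. `MysqlJdbcOptions` is a hypothetical helper. The host, port, database, table and credentials are the ones from this post.

```scala
object MysqlJdbcOptions {
  // Hypothetical helper: builds the option map for spark.read.format("jdbc").options(...).
  // The URL is assembled as "jdbc:mysql://host:port/db" (colon before the slashes).
  def apply(host: String, port: Int, db: String, table: String,
            user: String, password: String): Map[String, String] = Map(
    "url"      -> s"jdbc:mysql://$host:$port/$db",
    "driver"   -> "com.mysql.jdbc.Driver",
    "dbtable"  -> table,
    "user"     -> user,
    "password" -> password
  )
}
```

In spark-shell this would be used as `spark.read.format("jdbc").options(MysqlJdbcOptions("hadoop01", 3306, "test", "u", "root", "root")).load()`. This has not been verified against the cluster described here.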

```text
Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181)
Type in expressions to have them evaluated.
Type :help for more information.

scala> val jdbcDF = spark.read.format("jdbc").option("url", "jdbc:mysql//hadoop01:3306/test").option("dbtable", "u").option("user","root").option("password","root").load()
java.sql.SQLException: No suitable driver
  at java.sql.DriverManager.getDriver(DriverManager.java:315)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions$$anonfun$7.apply(JDBCOptions.scala:84)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions$$anonfun$7.apply(JDBCOptions.scala:84)
  at scala.Option.getOrElse(Option.scala:121)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:83)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:34)
  at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:32)
  at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:306)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:178)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:146)
  ... 48 elided

scala> val jdbcDF = spark.read.format("jdbc").option("driver","com.mysql.jdbc.Driver").option("url", "jdbc:mysql//hadoop01:3306/test").option("dbtable", "u").option("user","root").option("password","root").load()
java.lang.NullPointerException
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD$.resolveTable(JDBCRDD.scala:72)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCRelation.<init>(JDBCRelation.scala:113)
  at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:47)
  at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:306)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:178)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:146)
  ... 48 elided

scala> val connectionProperties = new java.util.Properties()
connectionProperties: java.util.Properties = {}

scala> connectionProperties.put("user", "root")
res0: Object = null

scala> connectionProperties.put("password", "root")
res1: Object = null

scala> val jdbcDF2 = spark.read.jdbc("jdbc:mysql://hadoop01:3306/test", "u", connectionProperties)
jdbcDF2: org.apache.spark.sql.DataFrame = [id: int, name: string]

scala> val jdbcDF = spark.read.format("jdbc")
jdbcDF: org.apache.spark.sql.DataFrameReader = org.apache.spark.sql.DataFrameReader@399ac1a3

scala> .option("url", "jdbc:mysql//hadoop01:3306/test")
res2: org.apache.spark.sql.DataFrameReader = org.apache.spark.sql.DataFrameReader@399ac1a3

scala> .option("dbtable", "u")
res3: org.apache.spark.sql.DataFrameReader = org.apache.spark.sql.DataFrameReader@399ac1a3

scala> .option("user","root")
res4: org.apache.spark.sql.DataFrameReader = org.apache.spark.sql.DataFrameReader@399ac1a3

scala> .option("password","root")
res5: org.apache.spark.sql.DataFrameReader = org.apache.spark.sql.DataFrameReader@399ac1a3

scala> .load()
java.sql.SQLException: No suitable driver
  at java.sql.DriverManager.getDriver(DriverManager.java:315)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions$$anonfun$7.apply(JDBCOptions.scala:84)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions$$anonfun$7.apply(JDBCOptions.scala:84)
  at scala.Option.getOrElse(Option.scala:121)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:83)
  at org.apache.spark.sql.execution.datasources.jdbc.JDBCOptions.<init>(JDBCOptions.scala:34)
  at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:32)
  at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:306)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:178)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:146)
  ... 48 elided

scala>
```


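Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog (wpsshop博客). Copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/花生_TL007/article/detail/593374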
