

Windows 11: API errors after deploying langchain-chatchat locally

Author: 菜鸟追梦旅行 | 2024-04-09 15:09:39


Error: `APIError: 获取ChatCompletion时出错:Invalid response object from API` ("error while fetching ChatCompletion: invalid response object from API")

Langchain-chatchat

Note: this error appears when testing the API endpoints even though the Langchain service has started normally and the swagger-ui page loads fine.

Preface

The failing requests produce the following log:

```
2023-11-03 15:23:50 | INFO | stdout | INFO: 127.0.0.1:53907 - "POST /v1/chat/chat HTTP/1.1" 404 Not Found
2023-11-03 15:24:01 | INFO | stdout | INFO: 127.0.0.1:53920 - "POST /v1 HTTP/1.1" 404 Not Found
2023-11-03 15:24:23 | INFO | stdout | INFO: 127.0.0.1:53938 - "GET /v1 HTTP/1.1" 404 Not Found
INFO: 127.0.0.1:54053 - "GET / HTTP/1.1" 307 Temporary Redirect
INFO: 127.0.0.1:54053 - "GET /docs HTTP/1.1" 200 OK
openai.api_key='EMPTY' openai.api_base='http://127.0.0.1:20000/v1' model='chatglm2-6b' messages=[OpenAiMessage(role='user', content='hello')] temperature=0.7 n=1 max_tokens=0 stop=[] stream=False presence_penalty=0 frequency_penalty=0
INFO: 127.0.0.1:54064 - "POST /chat/fastchat HTTP/1.1" 200 OK
2023-11-03 15:26:58 | INFO | stdout | INFO: 127.0.0.1:54065 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:26:58,486 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:26:58,487 - openai_chat.py[line:52] - ERROR: APIError: 获取ChatCompletion时出错:Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400)
openai.api_key='EMPTY' openai.api_base='http://127.0.0.1:20000/v1' model='chatglm2-6b' messages=[OpenAiMessage(role='user', content='hello')] temperature=0.7 n=1 max_tokens=0 stop=[] stream=False presence_penalty=0 frequency_penalty=0
INFO: 127.0.0.1:54064 - "POST /chat/fastchat HTTP/1.1" 200 OK
2023-11-03 15:27:02 | INFO | stdout | INFO: 127.0.0.1:54071 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:02,322 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:02,323 - openai_chat.py[line:52] - ERROR: APIError: 获取ChatCompletion时出错:Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400)
openai.api_key='EMPTY' openai.api_base='http://127.0.0.1:20000/v1' model='chatglm2-6b' messages=[OpenAiMessage(role='user', content='hello')] temperature=0.7 n=1 max_tokens=0 stop=[] stream=False presence_penalty=0 frequency_penalty=0
INFO: 127.0.0.1:54064 - "POST /chat/fastchat HTTP/1.1" 200 OK
2023-11-03 15:27:02 | INFO | stdout | INFO: 127.0.0.1:54073 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:02,968 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:02,970 - openai_chat.py[line:52] - ERROR: APIError: 获取ChatCompletion时出错:Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400)
123
INFO: 127.0.0.1:54096 - "POST /chat/chat HTTP/1.1" 200 OK
{'cache': None, 'verbose': True, 'callbacks': [<langchain.callbacks.streaming_aiter.AsyncIteratorCallbackHandler object at 0x000001DE719FE610>], 'callback_manager': None, 'tags': None, 'metadata': None, 'client': <class 'openai.api_resources.chat_completion.ChatCompletion'>, 'model_name': 'chatglm2-6b', 'temperature': 0.7, 'model_kwargs': {}, 'openai_api_key': 'EMPTY', 'openai_api_base': 'http://127.0.0.1:20000/v1', 'openai_organization': '', 'openai_proxy': '', 'request_timeout': None, 'max_retries': 6, 'streaming': True, 'n': 1, 'max_tokens': 0, 'tiktoken_model_name': None}
2023-11-03 15:27:36 | INFO | stdout | INFO: 127.0.0.1:54097 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:36,492 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:36,493 - before_sleep.py[line:65] - WARNING: Retrying langchain.chat_models.openai.acompletion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised APIError: Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400).
2023-11-03 15:27:40 | INFO | stdout | INFO: 127.0.0.1:54103 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:40,498 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:40,499 - before_sleep.py[line:65] - WARNING: Retrying langchain.chat_models.openai.acompletion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised APIError: Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400).
2023-11-03 15:27:44 | INFO | stdout | INFO: 127.0.0.1:54109 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:44,521 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:44,524 - before_sleep.py[line:65] - WARNING: Retrying langchain.chat_models.openai.acompletion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised APIError: Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400).
2023-11-03 15:27:48 | INFO | stdout | INFO: 127.0.0.1:54113 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:48,537 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:48,537 - before_sleep.py[line:65] - WARNING: Retrying langchain.chat_models.openai.acompletion_with_retry.<locals>._completion_with_retry in 8.0 seconds as it raised APIError: Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400).
2023-11-03 15:27:56 | INFO | stdout | INFO: 127.0.0.1:54124 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:27:56,572 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:27:56,574 - before_sleep.py[line:65] - WARNING: Retrying langchain.chat_models.openai.acompletion_with_retry.<locals>._completion_with_retry in 10.0 seconds as it raised APIError: Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400).
2023-11-03 15:28:06 | INFO | stdout | INFO: 127.0.0.1:54138 - "POST /v1/chat/completions HTTP/1.1" 400 Bad Request
2023-11-03 15:28:06,598 - util.py[line:67] - INFO: message='OpenAI API response' path=http://127.0.0.1:20000/v1/chat/completions processing_ms=None request_id=None response_code=400
2023-11-03 15:28:06,604 - utils.py[line:26] - ERROR: APIError: Caught exception: Invalid response object from API: '{"object":"error","message":"0 is less than the minimum of 1 - \'max_tokens\'","code":40302}' (HTTP response code was 400)
```


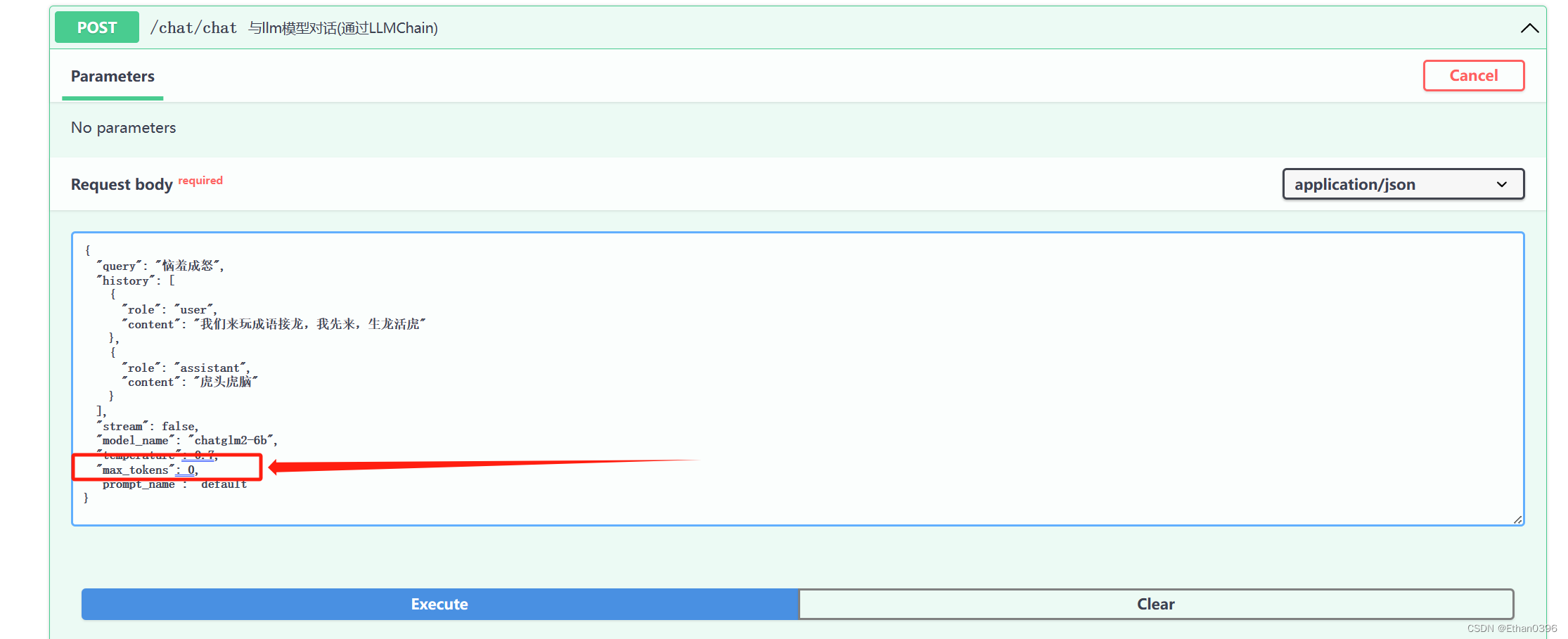

Problem analysis

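The request body comes with `max_tokens` already filled in. When it is 0 (rather than null), the value is forwarded to the OpenAI-compatible endpoint, and as the log shows, that endpoint (`http://127.0.0.1:20000/v1/chat/completions`) rejects it with HTTP 400 and the message `0 is less than the minimum of 1 - 'max_tokens'`. LangChain then retries several times and finally gives up with the same error. The fix is simply to delete this parameter from the request body, after which requests go through normally. This problem cost me two days; it is really counterintuitive.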
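The log pins down the cause: every `POST /v1/chat/completions` to the local endpoint returns 400 with `"0 is less than the minimum of 1 - 'max_tokens'"` and code `40302`. The sketch below mimics that rule so the failure mode is easy to see. It is a hypothetical illustration, not FastChat's actual validation code; the function name `check_max_tokens` is made up, and only the message text and the error code are taken from the log.

```python
import json
from typing import Optional

# Hypothetical re-creation of the server-side rule seen in the log:
# max_tokens may be omitted (None), but if present it must be >= 1.
MIN_MAX_TOKENS = 1


def check_max_tokens(max_tokens: Optional[int]) -> Optional[dict]:
    """Return an error object shaped like the one in the log, or None if valid."""
    if max_tokens is None:
        # Field absent: assume the server falls back to its own default.
        return None
    if max_tokens < MIN_MAX_TOKENS:
        return {
            "object": "error",
            "message": f"{max_tokens} is less than the minimum of {MIN_MAX_TOKENS} - 'max_tokens'",
            "code": 40302,
        }
    return None


# 0 reproduces the logged error; None (field removed) and 512 pass the check.
for value in (0, None, 512):
    error = check_max_tokens(value)
    print(value, "->", json.dumps(error) if error else "accepted")
```

This is why retrying never helps: the request is rejected for its content, so LangChain's retry loop in the log keeps receiving the same 400.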

Hope this helps.

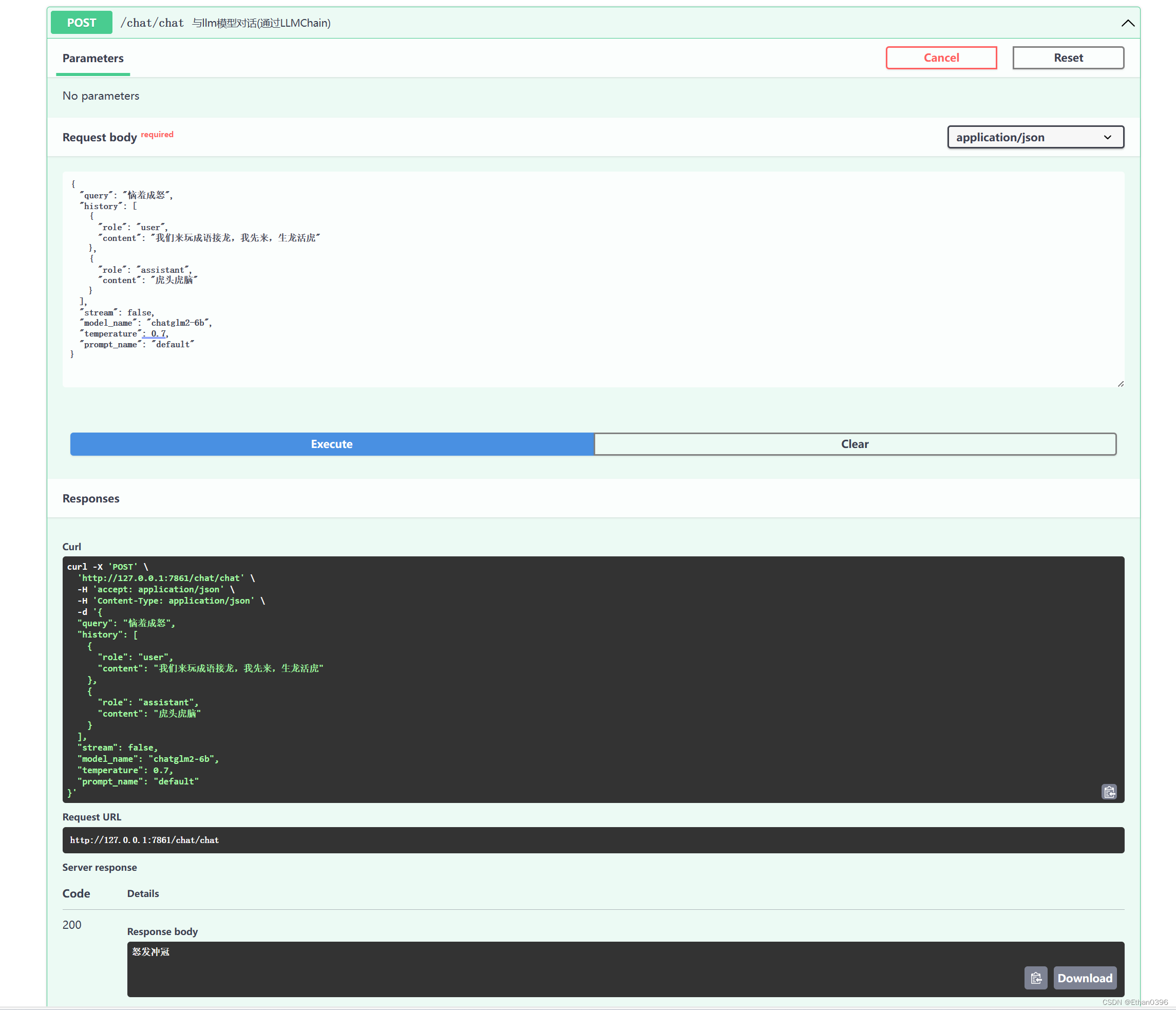

Solution

Remove the `max_tokens` parameter from the request body.
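A minimal client-side sketch of this fix, assuming you call the API from Python instead of swagger-ui: drop `max_tokens` from the JSON body when it is missing or below 1, then build the POST request. The field names come from the `/chat/fastchat` request printed in the log; the base URL `http://127.0.0.1:7861` and the helper names `sanitize_payload` and `build_request` are hypothetical placeholders, so adjust them to your deployment.

```python
import json
import urllib.request
from typing import Any, Dict

# Hypothetical address of the Langchain-Chatchat API server; adjust to your setup.
API_BASE = "http://127.0.0.1:7861"


def sanitize_payload(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of payload without max_tokens when it is None or < 1."""
    cleaned = dict(payload)  # shallow copy so the caller's dict is untouched
    max_tokens = cleaned.get("max_tokens")
    if max_tokens is None or max_tokens < 1:
        cleaned.pop("max_tokens", None)
    return cleaned


def build_request(path: str, payload: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request with the sanitized body."""
    body = json.dumps(sanitize_payload(payload)).encode("utf-8")
    return urllib.request.Request(
        API_BASE + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Request body as printed in the log, including the offending max_tokens=0.
payload = {
    "model": "chatglm2-6b",
    "messages": [{"role": "user", "content": "hello"}],
    "temperature": 0.7,
    "n": 1,
    "max_tokens": 0,
    "stop": [],
    "stream": False,
    "presence_penalty": 0,
    "frequency_penalty": 0,
}

req = build_request("/chat/fastchat", payload)
print(req.full_url)
print(req.data.decode("utf-8"))  # the body no longer contains max_tokens
# To actually send it against a running server: urllib.request.urlopen(req)
```

Filtering on "None or below 1" instead of always deleting the key keeps an explicit limit such as `max_tokens=512` intact. Going by the "minimum of 1" in the error, any value of 1 or more should pass the server's check, although the original fix was only verified by removing the field.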

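Disclaimer: this article was contributed by a user and does not represent the position of the wpsshop blog (wpsshop博客); copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/菜鸟追梦旅行/article/detail/393557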
