热门标签

热门文章

- 1C# RAM Stable Diffusion 提示词反推 Onnx Demo_ram.onnx

- 2Linux性能统计工具_内存统计工具

- 3Redis 集群hash分片算法(槽位定位算法)_redis集群槽位计算

- 4CentOS7安装ZooKeeper完整步骤

- 5论文初审就被拒,较常见的10类负面意见 (拒稿理由)_desk rejection

- 62023保研经验贴(低rank的浙软、软件所、东南、哈深之旅)_浙软好保吗

- 7数据结构知识点总结:排序_数据排序时,是否类型数据的是大于否

- 8数据中台产品核心功能_数据中台设计方式

- 9Linux:文件系统与日志分析

- 10vue 使用element-ui的el-dialog时 由于滚动条隐藏和出现导致页面抖动问题的解决_vue切换页面时滚动条会先隐藏再出现导致页面抖动

当前位置: article > 正文

Pytorch实战——MNIST数据集手写数字识别(CNN卷积神经网络)_pytorch mnist

作者:酷酷是懒虫 | 2024-07-05 16:26:32

赞

踩

pytorch mnist

原视频链接:轻松学Pytorch手写字体识别MNIST

1.加载必要的库

#1 加载必要的库

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets,transforms

- 1

- 2

- 3

- 4

- 5

- 6

2.定义超参数

#2 定义超参数

BATCH_SIZE= 64 #每次训练的数据的个数

DEVICE=torch.device("cuda"if torch.cuda.is_available()else"cpu")#做一个判断,如果有gpu就用gpu,如果没有的话就用cpu

EPOCHS=20 #训练轮数

- 1

- 2

- 3

- 4

3.构建pipeline,对图像做处理

#3 构建pipeline,对图像做处理

pipeline=transforms.Compose([

transforms.ToTensor(),#将图片转换成tensor类型

transforms.Normalize((0.1307,),(0.3081,))#正则化,模型过拟合时,降低模型复杂度

])

- 1

- 2

- 3

- 4

- 5

4.下载、加载数据集

#下载数据集

train_set=datasets.MNIST("data",train=True,download=True,transform=pipeline)#文件夹,需要训练,需要下载,要转换成tensor

test_set=datasets.MNIST("data",train=False,download=True,transform=pipeline)

#加载数据

train_loader=DataLoader(train_set,batch_size=BATCH_SIZE,shuffle=True)#加载训练集,数据个数为64,需要打乱

test_loader=DataLoader(test_set,batch_size=BATCH_SIZE,shuffle=True)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

下载完毕之后,看一眼数据集内的图片

with open("MNIST的绝对路径","rb") as f:

file=f.read()

image1=[int(str(item).encode('ascii'),10)for item in file[16: 16+784]]

print(image1)

import cv2

import numpy as np

image1_np=np.array(image1,dtype=np.uint8).reshape(28,28,1)

print(image1_np.shape)

cv2.imwrite("digit.jpg",image1_np)#保存图片

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

输出结果

5.构建网络模型

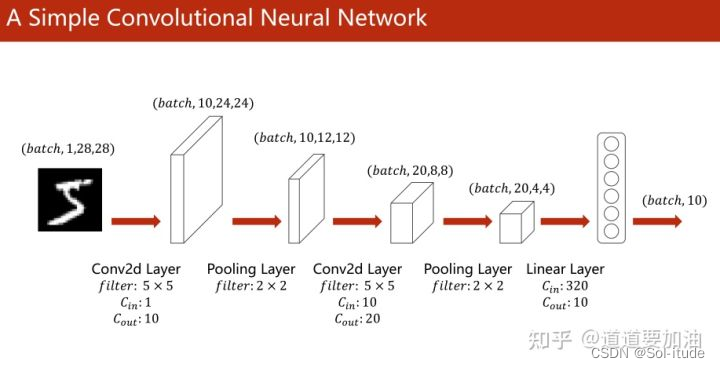

#5 构建网络模型 class Digit(nn.Module): def __init__(self): super().__init__() self.conv1= nn.Conv2d(1,10,kernel_size=5) self.conv2=nn.Conv2d(10,20,kernel_size=3) self.fc1=nn.Linear(20*10*10,500) self.fc2=nn.Linear(500,10) def forward(self,x): input_size=x.size(0) x=self.conv1(x)#输入batch_size 1*28*28 ,输出batch 10*24*24(28-5+1=24) x=F.relu(x) #激活函数,保持shape不变 x=F.max_pool2d(x,2,2)#2是池化步长的大小,输出batch20*12*12 x=self.conv2(x)#输入batch20*12*12 输出batch 20*10*10 (12-3+1=10) x=F.relu(x) x=x.view(input_size,-1)#拉平,-1自动计算维度 20*10*10=2000 x=self.fc1(x)#输入batch 2000 输出batch 500 x=F.relu(x) x=self.fc2(x)#输出batch 500 输出batch 10 ouput=F.log_softmax(x,dim=1)#计算分类后每个数字概率 return ouput

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

6.定义优化器

model =Digit().to(device)

optimizer=optim.Adam(model.parameters())##选择adam优化器

- 1

- 2

- 3

7.定义训练方法

#7 定义训练方法 def train_model(model,device,train_loader,optimizer,epoch): #模型训练 model.train() for batch_index,(data,target) in enumerate(train_loader): #部署到DEVICE上去 data,target=data.to(device), target.to(device) #梯度初始化为0 optimizer.zero_grad() #预测,训练后结果 output=model(data) #计算损失 loss = F.cross_entropy(output,target)#多分类用交叉验证 #反向传播 loss.backward() #参数优化 optimizer.step() if batch_index%3000==0: print("Train Epoch :{} \t Loss :{:.6f}".format(epoch,loss.item()))

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

运行

8.定义测试方法

#8 定义测试方法 def test_model(model,device,test_loader): #模型验证 model.eval() #正确率 corrcet=0.0 #测试损失 test_loss=0.0 with torch.no_grad(): #不会计算梯度,也不会进行反向传播 for data,target in test_loader: #部署到device上 data,target=data.to(device),target.to(device) #测试数据 output=model(data) #计算测试损失 test_loss+=F.cross_entropy(output,target).item() #找到概率最大的下标 pred=output.argmax(1) #累计正确的值 corrcet+=pred.eq(target.view_as(pred)).sum().item() test_loss/=len(test_loader.dataset) print("Test--Average Loss:{:.4f},Accuarcy:{:.3f}\n".format(test_loss,100.0 * corrcet / len(test_loader.dataset)))

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

运行

9.调用方法

#9 调用方法

for epoch in range(1,EPOCHS+1):

train_model(model,DEVICE,train_loader,optimizer,epoch)

test_model(model,DEVICE,test_loader)

- 1

- 2

- 3

- 4

输出结果

Train Epoch :1 Loss :2.296158 Train Epoch :1 Loss :0.023645 Test--Average Loss:0.0027,Accuarcy:98.690 Train Epoch :2 Loss :0.035262 Train Epoch :2 Loss :0.002957 Test--Average Loss:0.0027,Accuarcy:98.750 Train Epoch :3 Loss :0.029884 Train Epoch :3 Loss :0.000642 Test--Average Loss:0.0032,Accuarcy:98.460 Train Epoch :4 Loss :0.002866 Train Epoch :4 Loss :0.003708 Test--Average Loss:0.0033,Accuarcy:98.720 Train Epoch :5 Loss :0.000039 Train Epoch :5 Loss :0.000145 Test--Average Loss:0.0026,Accuarcy:98.840 Train Epoch :6 Loss :0.000124 Train Epoch :6 Loss :0.035326 Test--Average Loss:0.0054,Accuarcy:98.450 Train Epoch :7 Loss :0.000014 Train Epoch :7 Loss :0.000001 Test--Average Loss:0.0044,Accuarcy:98.510 Train Epoch :8 Loss :0.001491 Train Epoch :8 Loss :0.000045 Test--Average Loss:0.0031,Accuarcy:99.140 Train Epoch :9 Loss :0.000428 Train Epoch :9 Loss :0.000000 Test--Average Loss:0.0056,Accuarcy:98.500 Train Epoch :10 Loss :0.000001 Train Epoch :10 Loss :0.000377 Test--Average Loss:0.0042,Accuarcy:98.930

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

总结和改进

看完视频之后,老师确实讲得好,但是却没有讲明白为什么网络结构为什么要这样搭建,于是我又去看了看CNN,这个网络结构也能实现

#5 构建网络模型 class Digit(nn.Module): def __init__(self): super().__init__() self.conv1= nn.Conv2d(1,10,5) self.conv2=nn.Conv2d(10,20,5) self.fc1=nn.Linear(20*4*4,10) def forward(self,x): input_size=x.size(0) x=self.conv1(x)#输入batch_size 1*28*28 ,输出batch 10*24*24(28-5+1=24) x=F.relu(x) #激活函数,保持shape不变 x=F.max_pool2d(x,2,2)#2是池化步长的大小,输出batch20*12*12 x=self.conv2(x)#输入batch20*12*12 输出batch 20*10*10 (12-3+1=10) x=F.relu(x) x=F.max_pool2d(x,2,2) x=x.view(input_size,-1)#拉平,-1自动计算维度 20*10*10=2000 x=self.fc1(x)#输入batch 2000 输出batch 500 x=F.relu(x) ouput=F.log_softmax(x,dim=1)#计算分类后每个数字概率 return ouput

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

用CNN之后,发现准确度一下子下降到了50%,百思不得其解,我猜可能是优化器的问题,就把优化器换成了SGD,结果果然效果更好

Train Epoch :17 Loss :0.014693

Train Epoch :17 Loss :0.000051

Test--Average Loss:0.0026,Accuarcy:99.010

- 1

- 2

- 3

在第17轮准确率居然到了99%,不知道为什么,先挖个坑,等我以后研究明白再来填

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/酷酷是懒虫/article/detail/790511

推荐阅读

相关标签