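Multi-node OpenStack Charms Deployment Guide 0.0.1.dev--42--Deploying the openstack-base-78 bundle, OpenStack networking on a single NIC, and a warning: never give a host a name that starts with a digit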

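Reference:

OpenStack Charms Deployment Guide-001dev416

I have recently been looking at the new openstack-base-78 and, while at it, read through OpenStack Charms Deployment Guide-001dev416. The network layout has changed considerably.

The Install MAAS chapter of OpenStack Charms Deployment Guide-001dev416 lists the following requirements:

- 1 x MAAS system: 8GiB RAM, 2 CPUs, 1 NIC, 1 x 40GiB storage

- 1 x Juju controller node: 4GiB RAM, 2 CPUs, 1 NIC, 1 x 40GiB storage

- 4 x cloud nodes: 8GiB RAM, 2 CPUs, 1 NIC, 3 x 80GiB storage

Note: for Openstack Base #78, three cloud nodes are actually enough, each with only two disks, and the Juju controller can run on LXD on the MAAS node.

Original note: The traditional requirement of two network interfaces on each of the four cloud nodes has been removed since support for Open vSwitch bridges was added to MAAS (v.2.9.2). This bridge type supports more sophisticated network topologies than Linux Bridge does, and for Charmed OpenStack in particular, ovn-chassis units no longer need a dedicated network device.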

1 Out-of-band IPMI management mode

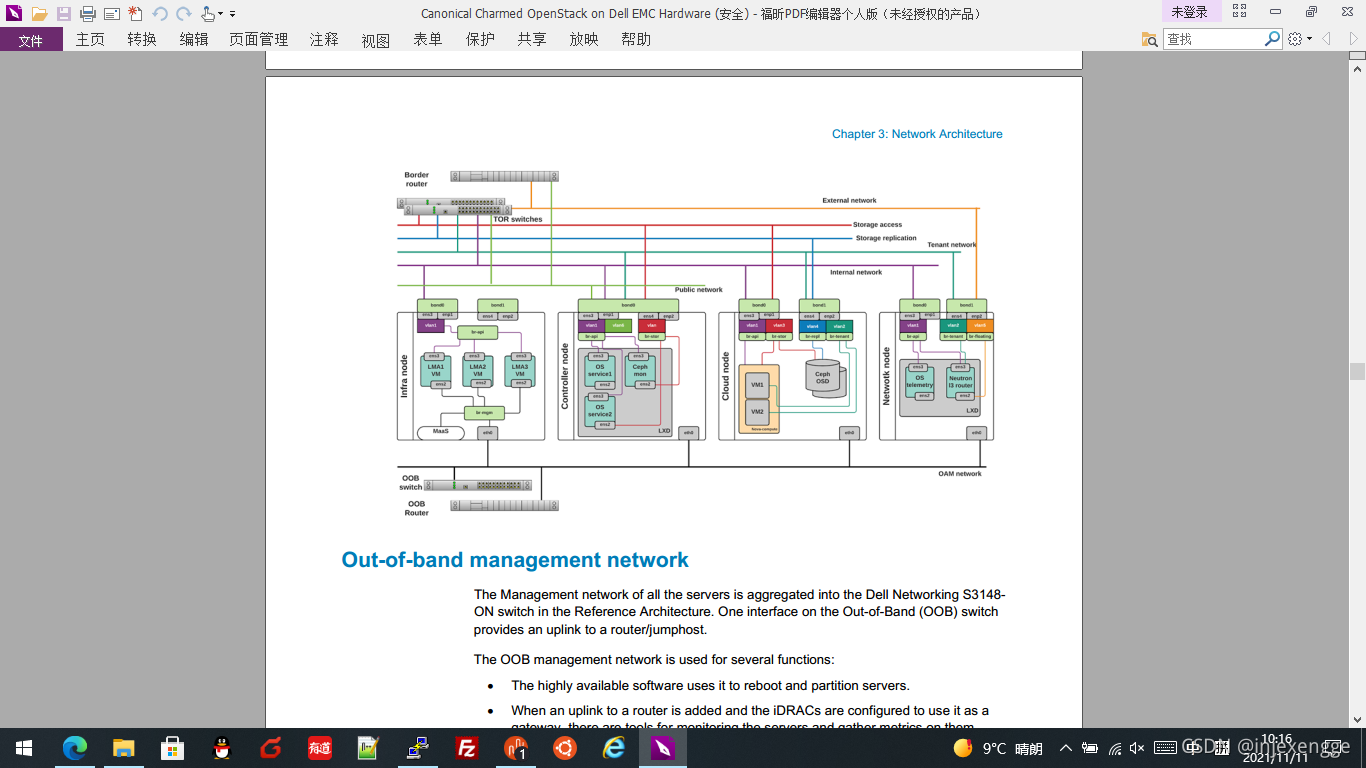

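The topology can be changed to something close to the one in the document jointly published by Dell EMC and Canonical, Reference Architecture—Canonical Charmed OpenStack (Ussuri) on Dell EMC Hardware

The eno1 port is connected to the out-of-band (OOB) management switch and reaches the internet through the OOB management router. It carries IPMI power management.

eno2 is connected to the TOR switch. OpenStack uses the 10.0.0.0/20 network, the external network (that is, the floating IPs) uses the 10.0.9.1-10.0.9.250 range within it, and the VMs running on top of OpenStack use the 172.16.0.0 network.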
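
As a quick sanity check on this addressing plan, the sketch below uses Python's standard `ipaddress` module to confirm that the floating-IP range sits inside the 10.0.0.0/20 network. The variable names are illustrative only, and the 172.16.0.0 VM network is left out because the text gives no prefix length for it.

```python
import ipaddress

# Addresses taken from the text above; variable names are illustrative.
openstack_net = ipaddress.ip_network("10.0.0.0/20")
fip_start = ipaddress.ip_address("10.0.9.1")
fip_end = ipaddress.ip_address("10.0.9.250")

# Both ends of the floating-IP range must sit inside the OpenStack network.
fip_in_net = fip_start in openstack_net and fip_end in openstack_net

# Number of addresses in the floating-IP range, both ends included.
fip_count = int(fip_end) - int(fip_start) + 1

print(f"network spans {openstack_net[0]} - {openstack_net[-1]}")
print(f"floating-IP range inside network: {fip_in_net}")
print(f"floating IPs available: {fip_count}")
```

A /20 covers 10.0.0.0 through 10.0.15.255, which is also why container addresses such as 10.0.10.x can appear in the same network.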

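2 In-band IPMI management mode:

In practice, however, I use in-band management, with all network traffic going through eno1.

This requires the following configuration changes:

1 Changes to the MAAS server configuration: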

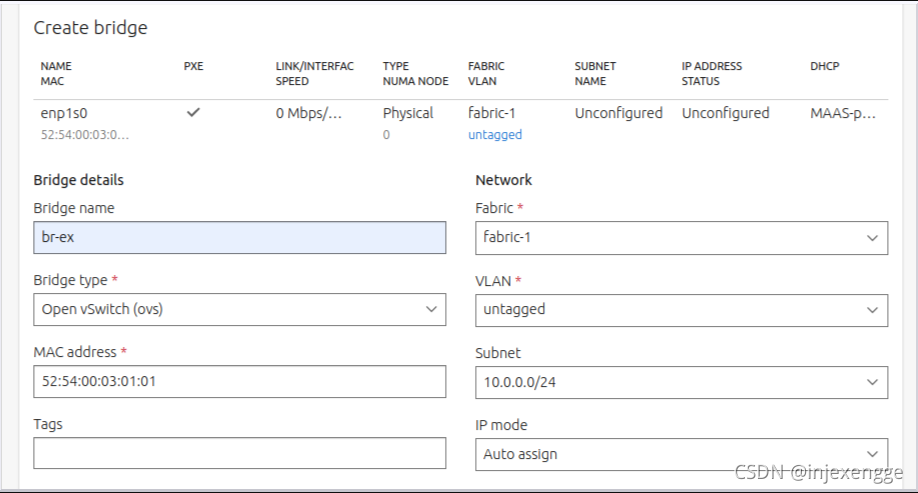

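After commissioning the nodes, renaming them, and tagging them, create an OVS bridge:

In the subnet configuration, the 10.0.0.0/20 subnet also has to be moved to fabric-1.

2 Changes to the Openstack Base #78 configuration.

First, git clone openstack-base-78: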

git clone https://github.com/openstack-charmers/openstack-bundles

Edit bundle.yaml

vim ~/openstack-bundles/stable/openstack-base/bundle.yaml

Under variables:, change data-port: &data-port br-ex:eno2 so that it uses eno1.

The ovn-chassis section must also be set to the eno1 port:

bridge-interface-mappings: br-ex:eno1
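
Both ovn-chassis options in the bundle are colon-separated pairs: `ovn-bridge-mappings: physnet1:br-ex` names a bridge for a physical network, and `bridge-interface-mappings: br-ex:eno1` attaches an interface to that bridge. A minimal sketch of a consistency check between the two follows. `parse_mappings` is a hypothetical helper written for this post, not part of Juju or the charm, and it assumes multiple pairs are separated by spaces.

```python
def parse_mappings(value):
    """Split a space-delimited list of 'key:value' pairs into a dict."""
    pairs = {}
    for item in value.split():
        # Split on the first colon only, so a MAC address on the right survives.
        key, _, val = item.partition(":")
        if not key or not val:
            raise ValueError(f"malformed mapping: {item!r}")
        pairs[key] = val
    return pairs

# Values as they appear in the edited bundle.
ovn_bridge_mappings = parse_mappings("physnet1:br-ex")
bridge_interface_mappings = parse_mappings("br-ex:eno1")

# Every bridge named in ovn-bridge-mappings should be backed by an interface.
missing = [bridge for bridge in ovn_bridge_mappings.values()
           if bridge not in bridge_interface_mappings]

print("bridges without an interface:", missing)
print("br-ex is attached to:", bridge_interface_mappings["br-ex"])
```

An empty `missing` list means the flat provider network physnet1 ends up on a bridge that really has eno1 behind it.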

For the detailed configuration, see Install OpenStack

The full file is as follows:

    # Please refer to the OpenStack Charms Deployment Guide for more information.
    # https://docs.openstack.org/project-deploy-guide/charm-deployment-guide
    #
    # NOTE: Please review the value for the configuration option
    #       `bridge-interface-mappings` for the `ovn-chassis` charm (see `data-port` variable).
    #       Refer to the [Open Virtual Network (OVN)](https://docs.openstack.org/project-deploy-guide/charm-deployment-guide/latest/app-ovn.html)
    #       section of the [OpenStack Charms Deployment Guide](https://docs.openstack.org/project-deploy-guide/charm-deployment-guide/latest/)
    #       for more information.

    series: focal
    variables:
      openstack-origin: &openstack-origin cloud:focal-xena
      data-port: &data-port br-ex:eno1
      worker-multiplier: &worker-multiplier 0.25
      osd-devices: &osd-devices /dev/sdb /dev/vdb
      expected-osd-count: &expected-osd-count 3
      expected-mon-count: &expected-mon-count 3
    machines:
      '0':
      '1':
      '2':
    relations:
    - - nova-compute:amqp
      - rabbitmq-server:amqp
    - - nova-cloud-controller:identity-service
      - keystone:identity-service
    - - glance:identity-service
      - keystone:identity-service
    - - neutron-api:identity-service
      - keystone:identity-service
    - - neutron-api:amqp
      - rabbitmq-server:amqp
    - - glance:amqp
      - rabbitmq-server:amqp
    - - nova-cloud-controller:image-service
      - glance:image-service
    - - nova-compute:image-service
      - glance:image-service
    - - nova-cloud-controller:cloud-compute
      - nova-compute:cloud-compute
    - - nova-cloud-controller:amqp
      - rabbitmq-server:amqp
    - - openstack-dashboard:identity-service
      - keystone:identity-service
    - - nova-cloud-controller:neutron-api
      - neutron-api:neutron-api
    - - cinder:image-service
      - glance:image-service
    - - cinder:amqp
      - rabbitmq-server:amqp
    - - cinder:identity-service
      - keystone:identity-service
    - - cinder:cinder-volume-service
      - nova-cloud-controller:cinder-volume-service
    - - cinder-ceph:storage-backend
      - cinder:storage-backend
    - - ceph-mon:client
      - nova-compute:ceph
    - - nova-compute:ceph-access
      - cinder-ceph:ceph-access
    - - ceph-mon:client
      - cinder-ceph:ceph
    - - ceph-mon:client
      - glance:ceph
    - - ceph-osd:mon
      - ceph-mon:osd
    - - ntp:juju-info
      - nova-compute:juju-info
    - - ceph-radosgw:mon
      - ceph-mon:radosgw
    - - ceph-radosgw:identity-service
      - keystone:identity-service
    - - placement:identity-service
      - keystone:identity-service
    - - placement:placement
      - nova-cloud-controller:placement
    - - keystone:shared-db
      - keystone-mysql-router:shared-db
    - - cinder:shared-db
      - cinder-mysql-router:shared-db
    - - glance:shared-db
      - glance-mysql-router:shared-db
    - - nova-cloud-controller:shared-db
      - nova-mysql-router:shared-db
    - - neutron-api:shared-db
      - neutron-mysql-router:shared-db
    - - openstack-dashboard:shared-db
      - dashboard-mysql-router:shared-db
    - - placement:shared-db
      - placement-mysql-router:shared-db
    - - vault:shared-db
      - vault-mysql-router:shared-db
    - - keystone-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - cinder-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - nova-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - glance-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - neutron-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - dashboard-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - placement-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - vault-mysql-router:db-router
      - mysql-innodb-cluster:db-router
    - - neutron-api-plugin-ovn:neutron-plugin
      - neutron-api:neutron-plugin-api-subordinate
    - - ovn-central:certificates
      - vault:certificates
    - - ovn-central:ovsdb-cms
      - neutron-api-plugin-ovn:ovsdb-cms
    - - neutron-api:certificates
      - vault:certificates
    - - ovn-chassis:nova-compute
      - nova-compute:neutron-plugin
    - - ovn-chassis:certificates
      - vault:certificates
    - - ovn-chassis:ovsdb
      - ovn-central:ovsdb
    - - vault:certificates
      - neutron-api-plugin-ovn:certificates
    - - vault:certificates
      - cinder:certificates
    - - vault:certificates
      - glance:certificates
    - - vault:certificates
      - keystone:certificates
    - - vault:certificates
      - nova-cloud-controller:certificates
    - - vault:certificates
      - openstack-dashboard:certificates
    - - vault:certificates
      - placement:certificates
    - - vault:certificates
      - ceph-radosgw:certificates
    - - vault:certificates
      - mysql-innodb-cluster:certificates
    applications:
      ceph-mon:
        annotations:
          gui-x: '790'
          gui-y: '1540'
        charm: cs:ceph-mon-61
        num_units: 3
        options:
          expected-osd-count: *expected-osd-count
          monitor-count: *expected-mon-count
          source: *openstack-origin
        to:
        - lxd:0
        - lxd:1
        - lxd:2
      ceph-osd:
        annotations:
          gui-x: '1065'
          gui-y: '1540'
        charm: cs:ceph-osd-315
        num_units: 3
        options:
          osd-devices: *osd-devices
          source: *openstack-origin
        to:
        - '0'
        - '1'
        - '2'
      ceph-radosgw:
        annotations:
          gui-x: '850'
          gui-y: '900'
        charm: cs:ceph-radosgw-300
        num_units: 1
        options:
          source: *openstack-origin
        to:
        - lxd:0
      cinder-mysql-router:
        annotations:
          gui-x: '900'
          gui-y: '1400'
        charm: cs:mysql-router-15
      cinder:
        annotations:
          gui-x: '980'
          gui-y: '1270'
        charm: cs:cinder-317
        num_units: 1
        options:
          block-device: None
          glance-api-version: 2
          worker-multiplier: *worker-multiplier
          openstack-origin: *openstack-origin
        to:
        - lxd:1
      cinder-ceph:
        annotations:
          gui-x: '1120'
          gui-y: '1400'
        charm: cs:cinder-ceph-268
        num_units: 0
      glance-mysql-router:
        annotations:
          gui-x: '-290'
          gui-y: '1400'
        charm: cs:mysql-router-15
      glance:
        annotations:
          gui-x: '-230'
          gui-y: '1270'
        charm: cs:glance-312
        num_units: 1
        options:
          worker-multiplier: *worker-multiplier
          openstack-origin: *openstack-origin
        to:
        - lxd:2
      keystone-mysql-router:
        annotations:
          gui-x: '230'
          gui-y: '1400'
        charm: cs:mysql-router-15
      keystone:
        annotations:
          gui-x: '300'
          gui-y: '1270'
        charm: cs:keystone-329
        num_units: 1
        options:
          worker-multiplier: *worker-multiplier
          openstack-origin: *openstack-origin
        to:
        - lxd:0
      neutron-mysql-router:
        annotations:
          gui-x: '505'
          gui-y: '1385'
        charm: cs:mysql-router-15
      neutron-api-plugin-ovn:
        annotations:
          gui-x: '690'
          gui-y: '1385'
        charm: cs:neutron-api-plugin-ovn-10
      neutron-api:
        annotations:
          gui-x: '580'
          gui-y: '1270'
        charm: cs:neutron-api-302
        num_units: 1
        options:
          neutron-security-groups: true
          flat-network-providers: physnet1
          worker-multiplier: *worker-multiplier
          openstack-origin: *openstack-origin
        to:
        - lxd:1
      placement-mysql-router:
        annotations:
          gui-x: '1320'
          gui-y: '1385'
        charm: cs:mysql-router-15
      placement:
        annotations:
          gui-x: '1320'
          gui-y: '1270'
        charm: cs:placement-31
        num_units: 1
        options:
          worker-multiplier: *worker-multiplier
          openstack-origin: *openstack-origin
        to:
        - lxd:2
      nova-mysql-router:
        annotations:
          gui-x: '-30'
          gui-y: '1385'
        charm: cs:mysql-router-15
      nova-cloud-controller:
        annotations:
          gui-x: '35'
          gui-y: '1270'
        charm: cs:nova-cloud-controller-361
        num_units: 1
        options:
          network-manager: Neutron
          worker-multiplier: *worker-multiplier
          openstack-origin: *openstack-origin
        to:
        - lxd:0
      nova-compute:
        annotations:
          gui-x: '190'
          gui-y: '890'
        charm: cs:nova-compute-337
        num_units: 3
        options:
          config-flags: default_ephemeral_format=ext4
          enable-live-migration: true
          enable-resize: true
          migration-auth-type: ssh
          openstack-origin: *openstack-origin
        to:
        - '0'
        - '1'
        - '2'
      ntp:
        annotations:
          gui-x: '315'
          gui-y: '1030'
        charm: cs:ntp-47
        num_units: 0
      dashboard-mysql-router:
        annotations:
          gui-x: '510'
          gui-y: '1030'
        charm: cs:mysql-router-15
      openstack-dashboard:
        annotations:
          gui-x: '585'
          gui-y: '900'
        charm: cs:openstack-dashboard-318
        num_units: 1
        options:
          openstack-origin: *openstack-origin
        to:
        - lxd:1
      rabbitmq-server:
        annotations:
          gui-x: '300'
          gui-y: '1550'
        charm: cs:rabbitmq-server-117
        num_units: 1
        to:
        - lxd:2
      mysql-innodb-cluster:
        annotations:
          gui-x: '535'
          gui-y: '1550'
        charm: cs:mysql-innodb-cluster-15
        num_units: 3
        to:
        - lxd:0
        - lxd:1
        - lxd:2
      ovn-central:
        annotations:
          gui-x: '70'
          gui-y: '1550'
        charm: cs:ovn-central-15
        num_units: 3
        options:
          source: *openstack-origin
        to:
        - lxd:0
        - lxd:1
        - lxd:2
      ovn-chassis:
        annotations:
          gui-x: '120'
          gui-y: '1030'
        charm: cs:ovn-chassis-21
        # Please update the `bridge-interface-mappings` to values suitable for the
        # hardware used in your deployment. See the referenced documentation at the
        # top of this file.
        options:
          ovn-bridge-mappings: physnet1:br-ex
          bridge-interface-mappings: br-ex:eno1
      vault-mysql-router:
        annotations:
          gui-x: '1535'
          gui-y: '1560'
        charm: cs:mysql-router-15
      vault:
        annotations:
          gui-x: '1610'
          gui-y: '1430'
        charm: cs:vault-54
        num_units: 1
        to:
        - lxd:0

3 Then deploy Openstack Base #78

juju deploy ~/openstack-bundles/stable/openstack-base/bundle.yaml --debug

Then I ran juju status and was surprised to find the nova-compute units in the blocked state:

    nova-compute/0    blocked  idle  0  10.0.0.159           Services not running that should be: nova-compute, nova-api-metadata
      ntp/2           active   idle     10.0.0.159  123/udp  chrony: Ready
      ovn-chassis/2   active   idle     10.0.0.159           Unit is ready
    nova-compute/1    blocked  idle  1  10.0.0.157           Services not running that should be: nova-compute, nova-api-metadata
      ntp/1           active   idle     10.0.0.157  123/udp  chrony: Ready
      ovn-chassis/1   active   idle     10.0.0.157           Unit is ready
    nova-compute/2*   blocked  idle  2  10.0.0.158           Services not running that should be: nova-compute, nova-api-metadata
      ntp/0*          active   idle     10.0.0.158  123/udp  chrony: Ready
      ovn-chassis/0*  active   idle     10.0.0.158           Unit is ready
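
In a longer listing, the blocked units are easy to miss. The sketch below filters them out of the plain-text status lines. It assumes the first three columns are unit, workload, and agent, as in the output above. `blocked_units` is a hypothetical helper written for this post, not a Juju command.

```python
# A few of the status lines from above, whitespace-separated.
status_text = """\
nova-compute/0 blocked idle 0 10.0.0.159 Services not running that should be: nova-compute, nova-api-metadata
ntp/2 active idle 10.0.0.159 123/udp chrony: Ready
ovn-chassis/2 active idle 10.0.0.159 Unit is ready
nova-compute/1 blocked idle 1 10.0.0.157 Services not running that should be: nova-compute, nova-api-metadata
nova-compute/2* blocked idle 2 10.0.0.158 Services not running that should be: nova-compute, nova-api-metadata
"""

def blocked_units(text):
    """Return the names of units whose workload column reads 'blocked'."""
    units = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == "blocked":
            # A trailing '*' marks the leader unit; drop it from the name.
            units.append(fields[0].rstrip("*"))
    return units

print(blocked_units(status_text))
```

For anything beyond a quick look, `juju status --format=json` gives structured output that is more robust to parse than the tabular text.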

I checked the logs.

Log in to nova-compute/0:

juju ssh nova-compute/0

Check the service status of nova-compute and the logs:

systemctl status nova-compute

systemctl status nova-api-metadata

journalctl -xe

Output:

    1 systemctl status nova-compute
    ● nova-compute.service - OpenStack Compute
         Loaded: loaded (/lib/systemd/system/nova-compute.service; enabled; vendor preset: enabled)
         Active: failed (Result: exit-code) since Tue 2021-11-09 06:13:26 UTC; 12s ago
        Process: 614284 ExecStart=/etc/init.d/nova-compute systemd-start (code=exited, status=1/FAILURE)
       Main PID: 614284 (code=exited, status=1/FAILURE)

    Nov 09 06:13:26 158 systemd[1]: nova-compute.service: Scheduled restart job, restart counter is at 5.
    Nov 09 06:13:26 158 systemd[1]: Stopped OpenStack Compute.
    Nov 09 06:13:26 158 systemd[1]: nova-compute.service: Start request repeated too quickly.
    Nov 09 06:13:26 158 systemd[1]: nova-compute.service: Failed with result 'exit-code'.
    Nov 09 06:13:26 158 systemd[1]: Failed to start OpenStack Compute.

    2 systemctl status nova-api-metadata
    ● nova-api-metadata.service - OpenStack Compute metadata API
         Loaded: loaded (/lib/systemd/system/nova-api-metadata.service; enabled; vendor preset: enabled)
         Active: failed (Result: exit-code) since Tue 2021-11-09 06:11:43 UTC; 2min 11s ago
           Docs: man:nova-api-metadata(1)
        Process: 612584 ExecStart=/etc/init.d/nova-api-metadata systemd-start (code=exited, status=1/FAILURE)
       Main PID: 612584 (code=exited, status=1/FAILURE)

    Nov 09 06:11:43 158 systemd[1]: nova-api-metadata.service: Scheduled restart job, restart counter is at 5.
    Nov 09 06:11:43 158 systemd[1]: Stopped OpenStack Compute metadata API.
    Nov 09 06:11:43 158 systemd[1]: nova-api-metadata.service: Start request repeated too quickly.
    Nov 09 06:11:43 158 systemd[1]: nova-api-metadata.service: Failed with result 'exit-code'.
    Nov 09 06:11:43 158 systemd[1]: Failed to start OpenStack Compute metadata API.

    3 less /var/log/nova/nova-compute.log
    /var/log/nova/nova-compute.log: No such file or directory
    less /var/log/nova/nova-api-metadata.log
    /var/log/nova/nova-api-metadata.log: No such file or directory

    4 journalctl -xe
    --
    -- The job identifier is 148372.
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: Traceback (most recent call last):
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/types.py", line 843, in __call__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     value = self.ip_address(value)
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/types.py", line 728, in __call__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     self.version_checker(value)
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/types.py", line 748, in _check_both_versions
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     raise ValueError("%s is not IPv4 or IPv6 address" % address)
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: ValueError: 158 is not IPv4 or IPv6 address
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: During handling of the above exception, another exception occurred:
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: Traceback (most recent call last):
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/types.py", line 846, in __call__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     value = self.hostname(value)
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/types.py", line 795, in __call__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     raise ValueError('%s contains no non-numeric characters in the '
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: ValueError: 158 contains no non-numeric characters in the top-level domain part of the host name and is invalid
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: During handling of the above exception, another exception occurred:
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: Traceback (most recent call last):
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/cfg.py", line 611, in _check_default
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     self.type(self.default)
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/types.py", line 848, in __call__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     raise ValueError(
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: ValueError: 158 is not a valid host address
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: During handling of the above exception, another exception occurred:
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: Traceback (most recent call last):
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/bin/neutron-ovn-metadata-agent", line 6, in <module>
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     from neutron.cmd.eventlet.agents.ovn_metadata import main
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/neutron/cmd/eventlet/agents/ovn_metadata.py", line 15, in <module>
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     from neutron.agent.ovn import metadata_agent
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/neutron/agent/ovn/metadata_agent.py", line 16, in <module>
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     from neutron.common import config
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/neutron/common/config.py", line 33, in <module>
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     from neutron.conf import common as common_config
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/neutron/conf/common.py", line 94, in <module>
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     cfg.HostAddressOpt('host', default=net.get_hostname(),
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/cfg.py", line 1173, in __init__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     super(HostAddressOpt, self).__init__(name,
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/cfg.py", line 595, in __init__
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     self._check_default()
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:   File "/usr/lib/python3/dist-packages/oslo_config/cfg.py", line 613, in _check_default
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]:     raise DefaultValueError("Error processing default value "
    Nov 09 06:22:47 158 neutron-ovn-metadata-agent[631648]: oslo_config.cfg.DefaultValueError: Error processing default value 158 for Opt type of HostAddress.
    root@158:~#
    root@158:~#
    root@158:~#
    root@158:~# journalctl -xe
    Nov 09 06:23:27 158 neutron-ovn-metadata-agent[632922]:     super(HostAddressOpt, self).__init__(name,
    Nov 09 06:23:27 158 neutron-ovn-metadata-agent[632922]:   File "/usr/lib/python3/dist-packages/oslo_config/cfg.py", line 595, in __init__
    Nov 09 06:23:27 158 neutron-ovn-metadata-agent[632922]:     self._check_default()
    Nov 09 06:23:27 158 neutron-ovn-metadata-agent[632922]:   File "/usr/lib/python3/dist-packages/oslo_config/cfg.py", line 613, in _check_default
    Nov 09 06:23:27 158 neutron-ovn-metadata-agent[632922]:     raise DefaultValueError("Error processing default value "
    Nov 09 06:23:27 158 neutron-ovn-metadata-agent[632922]: oslo_config.cfg.DefaultValueError: Error processing default value 158 for Opt type of HostAddress.
    Nov 09 06:23:27 158 systemd[1]: neutron-ovn-metadata-agent.service: Main process exited, code=exited, status=1/FAILURE
    -- Subject: Unit process exited
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- An ExecStart= process belonging to unit neutron-ovn-metadata-agent.service has exited.
    --
    -- The process' exit code is 'exited' and its exit status is 1.
    Nov 09 06:23:27 158 systemd[1]: neutron-ovn-metadata-agent.service: Failed with result 'exit-code'.
    -- Subject: Unit failed
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- The unit neutron-ovn-metadata-agent.service has entered the 'failed' state with result 'exit-code'.
    Nov 09 06:23:27 158 systemd[1]: neutron-ovn-metadata-agent.service: Scheduled restart job, restart counter is at 1845.
    -- Subject: Automatic restarting of a unit has been scheduled
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- Automatic restarting of the unit neutron-ovn-metadata-agent.service has been scheduled, as the result for
    -- the configured Restart= setting for the unit.
    Nov 09 06:23:27 158 systemd[1]: Stopped Neutron OVN Metadata Agent.
    -- Subject: A stop job for unit neutron-ovn-metadata-agent.service has finished
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- A stop job for unit neutron-ovn-metadata-agent.service has finished.
    --
    -- The job identifier is 149316 and the job result is done.
    Nov 09 06:23:27 158 systemd[1]: Started Neutron OVN Metadata Agent.
    -- Subject: A start job for unit neutron-ovn-metadata-agent.service has finished successfully
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- A start job for unit neutron-ovn-metadata-agent.service has finished successfully.
    --
    -- The job identifier is 149316.

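The answer from the forum was:

It seems that the hostnames of the metal you are deploying the bundle to are 157, 158, and 159.

The ovn metadata agent log shows that it does not handle this properly: it expects an alphabetic character in the first position if the value is a hostname, or a full dotted-quad address if it is an IPv4 address. This post explains that although RFC 1123 relaxed the RFC 952 constraint that hostnames must start with an alphabetic character, the RFC 1178 guidelines for choosing a valid hostname still advise, because of implementation concerns:

Don't use digits at the beginning of the name.

Many programs accept a numerical internet address as well as a name. Unfortunately, some programs do not correctly distinguish between the two and may be fooled, for example, by a string beginning with a decimal digit.

Names consisting entirely of hexadecimal digits, such as "beef", are also problematic, since they can be interpreted entirely as hexadecimal numbers as well as alphabetic strings.

I would recommend renaming the hosts to begin with a character between A and Z, to conform to hostname RFC 952, and then redeploying the bundle.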

So the cause was my own laziness: I had renamed the hosts in MAAS to plain numbers.
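
The traceback shows the name being rejected in two stages: first as an IP address, then as a host name whose last label is purely numeric. The sketch below mirrors those two checks with the standard library only, so node names can be screened before a deployment. It is an approximation written for illustration, not the oslo.config implementation, and `is_ip_address` and `is_usable_hostname` are hypothetical names. The leading-letter test follows the RFC 952 recommendation quoted above.

```python
import ipaddress
import re

# One DNS label: letters, digits, hyphens; no hyphen at either end; max 63 chars.
LABEL_RE = re.compile(r"^[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?$")

def is_ip_address(value):
    """True if value parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False

def is_usable_hostname(name):
    """Conservative host name check that also rejects a leading digit."""
    labels = name.rstrip(".").split(".")
    if not all(LABEL_RE.match(label) for label in labels):
        return False
    if labels[-1].isdigit():
        # Same situation as "158" in the journal: an all-numeric last label.
        return False
    return name[0].isalpha()  # RFC 952 style: start with a letter

for host in ["157", "158", "159", "node157"]:
    verdict = "ok" if is_usable_hostname(host) else "rename"
    print(f"{host}: ip={is_ip_address(host)} -> {verdict}")
```

The check does not cover the all-hexadecimal case mentioned in RFC 1178, so a name such as "beef" would still pass.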

After renaming the nodes in MAAS to names of the form node156, redeploying, and unsealing vault, juju status shows:

    Model      Controller       Cloud/Region    Version  SLA          Timestamp
    openstack  maas-controller  mymaas/default  2.9.18   unsupported  09:57:02+08:00

    App                     Version  Status  Scale  Charm                   Store       Channel  Rev  OS      Message
    ceph-mon                16.2.6   active  3      ceph-mon                charmstore  stable   61   ubuntu  Unit is ready and clustered
    ceph-osd                16.2.6   active  3      ceph-osd                charmstore  stable   315  ubuntu  Unit is ready (1 OSD)
    ceph-radosgw            16.2.6   active  1      ceph-radosgw            charmstore  stable   300  ubuntu  Unit is ready
    cinder                  19.0.0   active  1      cinder                  charmstore  stable   317  ubuntu  Unit is ready
    cinder-ceph             19.0.0   active  1      cinder-ceph             charmstore  stable   268  ubuntu  Unit is ready
    cinder-mysql-router     8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    dashboard-mysql-router  8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    glance                  23.0.0   active  1      glance                  charmstore  stable   312  ubuntu  Unit is ready
    glance-mysql-router     8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    keystone                20.0.0   active  1      keystone                charmstore  stable   329  ubuntu  Application Ready
    keystone-mysql-router   8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    mysql-innodb-cluster    8.0.27   active  3      mysql-innodb-cluster    charmstore  stable   15   ubuntu  Unit is ready: Mode: R/W, Cluster is ONLINE and can tolerate up to ONE failure.
    neutron-api             19.0.0   active  1      neutron-api             charmstore  stable   302  ubuntu  Unit is ready
    neutron-api-plugin-ovn  19.0.0   active  1      neutron-api-plugin-ovn  charmstore  stable   10   ubuntu  Unit is ready
    neutron-mysql-router    8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    nova-cloud-controller   24.0.0   active  1      nova-cloud-controller   charmstore  stable   361  ubuntu  Unit is ready
    nova-compute            24.0.0   active  3      nova-compute            charmstore  stable   337  ubuntu  Unit is ready
    nova-mysql-router       8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    ntp                     3.5      active  3      ntp                     charmstore  stable   47   ubuntu  chrony: Ready
    openstack-dashboard     20.1.0   active  1      openstack-dashboard     charmstore  stable   318  ubuntu  Unit is ready
    ovn-central             21.09.0  active  3      ovn-central             charmstore  stable   15   ubuntu  Unit is ready (leader: ovnnb_db, ovnsb_db northd: active)
    ovn-chassis             21.09.0  active  3      ovn-chassis             charmstore  stable   21   ubuntu  Unit is ready
    placement               6.0.0    active  1      placement               charmstore  stable   31   ubuntu  Unit is ready
    placement-mysql-router  8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready
    rabbitmq-server         3.8.2    active  1      rabbitmq-server         charmstore  stable   117  ubuntu  Unit is ready
    vault                   1.5.9    active  1      vault                   charmstore  stable   54   ubuntu  Unit is ready (active: true, mlock: disabled)
    vault-mysql-router      8.0.27   active  1      mysql-router            charmstore  stable   15   ubuntu  Unit is ready

    Unit                         Workload  Agent  Machine  Public address  Ports              Message
    ceph-mon/0*                  active    idle   0/lxd/0  10.0.9.255                         Unit is ready and clustered
    ceph-mon/1                   active    idle   1/lxd/0  10.0.0.254                         Unit is ready and clustered
    ceph-mon/2                   active    idle   2/lxd/0  10.0.10.9                          Unit is ready and clustered
    ceph-osd/0                   active    idle   0        10.0.0.157                         Unit is ready (1 OSD)
    ceph-osd/1*                  active    idle   1        10.0.0.158                         Unit is ready (1 OSD)
    ceph-osd/2                   active    idle   2        10.0.0.159                         Unit is ready (1 OSD)
    ceph-radosgw/0*              active    idle   0/lxd/1  10.0.9.254      80/tcp             Unit is ready
    cinder/0*                    active    idle   1/lxd/1  10.0.1.0        8776/tcp           Unit is ready
      cinder-ceph/0*             active    idle            10.0.1.0                           Unit is ready
      cinder-mysql-router/0*     active    idle            10.0.1.0                           Unit is ready
    glance/0*                    active    idle   2/lxd/1  10.0.10.8       9292/tcp           Unit is ready
      glance-mysql-router/0*     active    idle            10.0.10.8                          Unit is ready
    keystone/0*                  active    idle   0/lxd/2  10.0.10.0       5000/tcp           Unit is ready
      keystone-mysql-router/0*   active    idle            10.0.10.0                          Unit is ready
    mysql-innodb-cluster/0*      active    idle   0/lxd/3  10.0.10.3                          Unit is ready: Mode: R/W, Cluster is ONLINE and can tolerate up to ONE failure.
    mysql-innodb-cluster/1       active    idle   1/lxd/2  10.0.9.252                         Unit is ready: Mode: R/O, Cluster is ONLINE and can tolerate up to ONE failure.
    mysql-innodb-cluster/2       active    idle   2/lxd/2  10.0.10.6                          Unit is ready: Mode: R/O, Cluster is ONLINE and can tolerate up to ONE failure.
    neutron-api/0*               active    idle   1/lxd/3  10.0.0.253      9696/tcp           Unit is ready
      neutron-api-plugin-ovn/0*  active    idle            10.0.0.253                         Unit is ready
      neutron-mysql-router/0*    active    idle            10.0.0.253                         Unit is ready
    nova-cloud-controller/0*     active    idle   0/lxd/4  10.0.10.2       8774/tcp,8775/tcp  Unit is ready
      nova-mysql-router/0*       active    idle            10.0.10.2                          Unit is ready
    nova-compute/0               active    idle   0        10.0.0.157                         Unit is ready
      ntp/1                      active    idle            10.0.0.157      123/udp            chrony: Ready
      ovn-chassis/1              active    idle            10.0.0.157                         Unit is ready
    nova-compute/1*              active    idle   1        10.0.0.158                         Unit is ready
      ntp/0*                     active    idle            10.0.0.158      123/udp            chrony: Ready
      ovn-chassis/0*             active    idle            10.0.0.158                         Unit is ready
    nova-compute/2               active    idle   2        10.0.0.159                         Unit is ready
      ntp/2                      active    idle            10.0.0.159      123/udp            chrony: Ready
      ovn-chassis/2              active    idle            10.0.0.159                         Unit is ready
    openstack-dashboard/0*       active    idle   1/lxd/4  10.0.9.251      80/tcp,443/tcp     Unit is ready
      dashboard-mysql-router/0*  active    idle            10.0.9.251                         Unit is ready
    ovn-central/0*               active    idle   0/lxd/5  10.0.9.253      6641/tcp,6642/tcp  Unit is ready (leader: ovnnb_db, ovnsb_db northd: active)
    ovn-central/1                active    idle   1/lxd/5  10.0.0.255      6641/tcp,6642/tcp  Unit is ready
    ovn-central/2                active    idle   2/lxd/3  10.0.10.4       6641/tcp,6642/tcp  Unit is ready
    placement/0*                 active    idle   2/lxd/4  10.0.10.5       8778/tcp           Unit is ready
      placement-mysql-router/0*  active    idle            10.0.10.5                          Unit is ready
    rabbitmq-server/0*           active    idle   2/lxd/5  10.0.10.7       5672/tcp           Unit is ready
    vault/0*                     active    idle   0/lxd/6  10.0.10.1       8200/tcp           Unit is ready (active: true, mlock: disabled)
      vault-mysql-router/0*      active    idle            10.0.10.1                          Unit is ready

    Machine  State    DNS         Inst id              Series  AZ       Message
    0        started  10.0.0.157  node157              focal   default  Deployed
    0/lxd/0  started  10.0.9.255  juju-7569b7-0-lxd-0  focal   default  Container started
    0/lxd/1  started  10.0.9.254  juju-7569b7-0-lxd-1  focal   default  Container started
    0/lxd/2  started  10.0.10.0   juju-7569b7-0-lxd-2  focal   default  Container started
    0/lxd/3  started  10.0.10.3   juju-7569b7-0-lxd-3  focal   default  Container started
    0/lxd/4  started  10.0.10.2   juju-7569b7-0-lxd-4  focal   default  Container started
    0/lxd/5  started  10.0.9.253  juju-7569b7-0-lxd-5  focal   default  Container started
    0/lxd/6  started  10.0.10.1   juju-7569b7-0-lxd-6  focal   default  Container started
    1        started  10.0.0.158  node158              focal   default  Deployed
    1/lxd/0  started  10.0.0.254  juju-7569b7-1-lxd-0  focal   default  Container started
    1/lxd/1  started  10.0.1.0    juju-7569b7-1-lxd-1  focal   default  Container started
    1/lxd/2  started  10.0.9.252  juju-7569b7-1-lxd-2  focal   default  Container started
    1/lxd/3  started  10.0.0.253  juju-7569b7-1-lxd-3  focal   default  Container started
    1/lxd/4  started  10.0.9.251  juju-7569b7-1-lxd-4  focal   default  Container started
    1/lxd/5  started  10.0.0.255  juju-7569b7-1-lxd-5  focal   default  Container started
    2        started  10.0.0.159  node159              focal   default  Deployed
    2/lxd/0  started  10.0.10.9   juju-7569b7-2-lxd-0  focal   default  Container started
    2/lxd/1  started  10.0.10.8   juju-7569b7-2-lxd-1  focal   default  Container started
    2/lxd/2  started  10.0.10.6   juju-7569b7-2-lxd-2  focal   default  Container started
    2/lxd/3  started  10.0.10.4   juju-7569b7-2-lxd-3  focal   default  Container started
    2/lxd/4  started  10.0.10.5   juju-7569b7-2-lxd-4  focal   default  Container started
    2/lxd/5  started  10.0.10.7   juju-7569b7-2-lxd-5  focal   default  Container started
