
Naive Bayes for Natural-Language Semantic Analysis (Part 3): Review Sentiment Classification - Negative, Neutral, Positive

Author: 2023面试高手 | 2024-04-04 15:28:50


I. Requirements

(1) Application Background

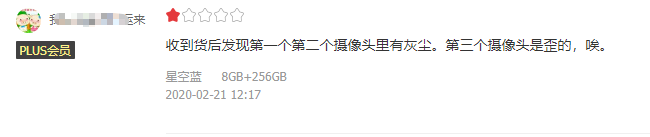

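When building user profiles, we need fact tags such as a user's review record for a given product or brand. This value is hard to obtain, because there is no obvious way to turn a single sentence of review text into a score.

We can define a rule for the review preference score:

- Positive review --> 5 points

- Neutral review --> 0 points

- Negative review --> -5 points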
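Here is a small sketch of this rule. The helper maps a classifier label to a preference score. It assumes the label encoding used by the training program later in this article (0.0 = negative, 1.0 = neutral, 2.0 = positive). `ReviewScore` and `scoreOf` are hypothetical names used only for illustration.

```scala
// Hypothetical helper: turns a predicted label into the preference score rule above.
// Assumed label encoding (from the training program): 0.0 = negative, 1.0 = neutral, 2.0 = positive.
object ReviewScore {
  def scoreOf(label: Double): Int = label match {
    case 2.0   => 5   // positive review
    case 1.0   => 0   // neutral review
    case 0.0   => -5  // negative review
    case other => throw new IllegalArgumentException(s"unknown label: $other")
  }

  def main(args: Array[String]): Unit = {
    val predicted = Seq(2.0, 0.0, 1.0, 2.0)
    println(predicted.map(scoreOf)) // List(5, -5, 0, 5)
  }
}
```

Keeping the score mapping outside the model means the 5 / 0 / -5 rule can change without retraining.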

(2) The Problem

The business system holds a large volume of user product reviews, stored in a product review table:

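| sku_id | user_id | comment |
|---|---|---|
| sku0001 | user0008 | Comfortable to wear; the seller shipped quickly and the service was good |
| sku0001 | user0006 | Good quality, great value, very light, comfortable and breathable; the item matches the description exactly. |
| sku0002 | user0003 | The cut looks nice and it fits well. Well worth it at this price! |
| sku0003 | user0012 | It broke after only a few days of wear, and customer service ignored me. Won't buy again! |
| … | … | … |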

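How can a program take a single review sentence as input and automatically decide whether it is positive, neutral, or negative?

Hand-written SQL rules cannot do this reliably. The task calls for a machine learning algorithm such as Naive Bayes!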

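II. Implementation Steps

- Manually collect and label a training sample set

- Develop the Naive Bayes model training program

- Develop the prediction program and evaluate the model on test data

- Use the tuned model to classify unseen data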

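III. Key Points

(1) Feature vectorization scheme

- Chinese word segmentation: the HanLP toolkit, plus denoising of the segmented words ★★★★★

- Mapping words to feature positions: hashing

- Choosing word feature values: TF-IDF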
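The sketch below makes the hashing and TF-IDF ideas concrete on already-segmented reviews, without Spark. It is an illustration under stated assumptions, not Spark's implementation:

- Bucket indices come from `String.hashCode`. Spark's `HashingTF` uses MurmurHash3, so its indices will differ.
- The IDF uses the smoothed form log((m + 1) / (df + 1)) documented for Spark ML's `IDF`. Here m is the number of documents and df is the number of documents that contain a bucket.

`TfIdfSketch` and its methods are hypothetical names.

```scala
// Spark-free sketch of hashing TF + smoothed IDF (hypothetical names).
// Assumption: String.hashCode replaces Spark's MurmurHash3, so bucket indices differ from Spark's.
object TfIdfSketch {
  // Hash each word into a bucket in [0, numFeatures) and count occurrences per bucket (TF).
  def hashingTf(words: Seq[String], numFeatures: Int): Map[Int, Double] =
    words
      .groupBy(w => math.floorMod(w.hashCode, numFeatures))
      .map { case (idx, ws) => idx -> ws.size.toDouble }

  // Smoothed IDF: log((m + 1) / (df + 1)), m = #documents, df = #documents containing the bucket.
  def idf(tfs: Seq[Map[Int, Double]]): Map[Int, Double] = {
    val m = tfs.size.toDouble
    val df = tfs.flatMap(_.keys).groupBy(identity).map { case (idx, occ) => idx -> occ.size }
    df.map { case (idx, d) => idx -> math.log((m + 1) / (d + 1)) }
  }

  // TF-IDF weight of each bucket = TF * IDF.
  def tfIdf(tf: Map[Int, Double], idfs: Map[Int, Double]): Map[Int, Double] =
    tf.map { case (idx, f) => idx -> f * idfs.getOrElse(idx, 0.0) }

  def main(args: Array[String]): Unit = {
    val docs = Seq(
      Seq("物流", "快", "满意"),
      Seq("质量", "不错", "满意"),
      Seq("坏", "客服", "不理")
    )
    val numFeatures = 1 << 10
    val tfs = docs.map(hashingTf(_, numFeatures))
    val idfs = idf(tfs)
    tfs.map(tfIdf(_, idfs)).foreach(println)
  }
}
```

A word that occurs in every document gets an IDF of log(1) = 0, so it carries no weight. Hashing avoids building a vocabulary, but colliding words share one feature. That is why the training program later in this article uses a large `setNumFeatures(1000000)`.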

(2) Classification algorithm

- Naive Bayes algorithm

- Prediction evaluation ★★
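Below is a minimal sketch of a multinomial Naive Bayes classifier with additive (Laplace) smoothing, written in plain Scala so the arithmetic is visible. Spark ML's `NaiveBayes` defaults to the multinomial model type, but this is not Spark's code. The sketch makes these assumptions:

- Each class score is log P(class) plus the sum of log P(word | class).
- Both estimates are smoothed by `lambda`, the role `setSmoothing(1.0)` plays in the Spark program.
- It uses raw word counts instead of TF-IDF weights.
- It ignores words never seen in training.

All names are hypothetical.

```scala
// Minimal multinomial Naive Bayes with additive smoothing (hypothetical names, illustration only).
object NaiveBayesSketch {
  // log P(class) per label, and log P(word | class) per label and vocabulary word
  final case class Model(logPrior: Map[Double, Double],
                         logLikelihood: Map[Double, Map[String, Double]])

  // samples: (label, segmented words); lambda: additive smoothing constant
  def train(samples: Seq[(Double, Seq[String])], lambda: Double = 1.0): Model = {
    val vocab = samples.flatMap(_._2).distinct
    val byLabel = samples.groupBy(_._1)
    val numDocs = samples.size.toDouble
    val numLabels = byLabel.size.toDouble

    // Smoothed prior: (docs in class + lambda) / (all docs + numLabels * lambda)
    val logPrior = byLabel.map { case (c, docs) =>
      c -> math.log((docs.size + lambda) / (numDocs + numLabels * lambda))
    }

    // Smoothed likelihood: (word count in class + lambda) / (total words in class + |V| * lambda)
    val logLikelihood = byLabel.map { case (c, docs) =>
      val words = docs.flatMap(_._2)
      val counts = words.groupBy(identity).map { case (w, ws) => w -> ws.size.toDouble }
      val denom = words.size + vocab.size * lambda
      c -> vocab.map(w => w -> math.log((counts.getOrElse(w, 0.0) + lambda) / denom)).toMap
    }
    Model(logPrior, logLikelihood)
  }

  // Per-class log score (analogous to Spark's rawPrediction); words outside the vocabulary add 0.
  def rawScores(model: Model, words: Seq[String]): Map[Double, Double] =
    model.logPrior.map { case (c, lp) =>
      c -> (lp + words.map(w => model.logLikelihood(c).getOrElse(w, 0.0)).sum)
    }

  // The predicted label is the class with the highest score.
  def predict(model: Model, words: Seq[String]): Double =
    rawScores(model, words).maxBy(_._2)._1

  def main(args: Array[String]): Unit = {
    val samples = Seq(
      (2.0, Seq("舒服", "快", "满意")),
      (2.0, Seq("质量", "不错")),
      (1.0, Seq("一般", "还行")),
      (0.0, Seq("坏", "不理", "差"))
    )
    val model = train(samples)
    println(predict(model, Seq("满意", "不错"))) // 2.0
    println(predict(model, Seq("坏", "差")))     // 0.0
  }
}
```

Working in log space turns the product of many small probabilities into a sum, which avoids underflow. This is also why the `rawPrediction` values printed by the Spark program are large negative numbers.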

IV. Start Coding

(1) Add the HanLP dependency

HanLP documentation -> official site: http://www.hanlp.com/

```xml
<dependency>
    <groupId>com.hankcs</groupId>
    <artifactId>hanlp</artifactId>
    <version>portable-1.7.4</version>
</dependency>
```


(2) Don't chicken out: the full program

```scala
package cn.ianlou.bayes

import java.util

import com.hankcs.hanlp.HanLP
import com.hankcs.hanlp.seg.common.Term
import org.apache.log4j.{Level, Logger}
import org.apache.spark.broadcast.Broadcast
import org.apache.spark.ml.classification.{NaiveBayes, NaiveBayesModel}
import org.apache.spark.ml.feature.{HashingTF, IDF, IDFModel}
import org.apache.spark.sql.{DataFrame, Dataset, SparkSession}

import scala.collection.JavaConverters._
import scala.collection.mutable

/**
 * @date: 2020/2/23 22:04
 * @site: www.ianlou.cn
 * @author: lekko 六水
 * @qq: 496208110
 * @description:
 */
object NLP_CommentClassify {

  def main(args: Array[String]): Unit = {
    Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

    val spark: SparkSession = SparkSession
      .builder()
      .appName(this.getClass.getSimpleName)
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    // Load the stop words / sensitive words, collect them to the driver and broadcast them
    val stopWords: Dataset[String] = spark.read.textFile("userProfile/data/comment/stopwords")
    val sw: Set[String] = stopWords.collect().toSet
    val bc: Broadcast[Set[String]] = spark.sparkContext.broadcast(sw)

    // 1. Load the sample data and union the three labelled sets
    //    0.0 -> negative, 1.0 -> neutral, 2.0 -> positive
    val hp: Dataset[String] = spark.read.textFile("userProfile/data/comment/good")
    val zp: Dataset[String] = spark.read.textFile("userProfile/data/comment/general")
    val cp: Dataset[String] = spark.read.textFile("userProfile/data/comment/poor")
    val sample: Dataset[(Double, String)] =
      hp.map(e => (2.0, e)) union zp.map(e => (1.0, e)) union cp.map(e => (0.0, e))

    // 2. Segment each sample into words and filter out noise
    val sampleWcDF: DataFrame = sample.map(ds => {
      val label: Double = ds._1
      val str: String = ds._2
      // HanLP returns a java.util.List; convert it to a Scala collection for easier use
      val terms: util.List[Term] = HanLP.segment(str)
      val filtered: mutable.Buffer[Term] = terms.asScala
        .filter(term => {
          // each Term carries the word and its part-of-speech tag (nature)
          !term.nature.startsWith("p") && // drop prepositions
            !term.nature.startsWith("u") && // drop auxiliary words
            !term.nature.startsWith("y") && // drop modal particles
            !term.nature.startsWith("w")    // drop punctuation
        })
        .filter(term => !bc.value.contains(term.word)) // drop stop words

      // turn the Term buffer into an Array of word strings
      val wcArr: Array[String] = filtered.map(term => term.word).toArray
      (label, wcArr)
    }).toDF("label", "wcArr")

    /** Part of the segmented output:
     * +-----+-----------------------------------------------------------------------------+
     * |label|wcArr                                                                        |
     * +-----+-----------------------------------------------------------------------------+
     * |3.0  |[不错, 家居, 用品, 推荐, 这家, 微, 店]                                       |
     * |3.0  |[物流, 也, 是, 很, 快, 价格, 实惠, 而且, 卖家, 服务, 态度, 很好]            |
     * |3.0  |[不说, 话]                                                                   |
     * |3.0  |[手机, 有, 一, 段, 时间, 总体, 感觉, 还, 可以, 就, 是, 指纹, 解锁, 感觉, 不, 太, 好] |
     * |3.0  |[穿, 身上, 挺, 舒适, 物流, 也, 快, 很, 满意]                                 |
     * |3.0  |[你好, 好看, 穿, 上, 很, 有, 型, 非常, 满意]                                 |
     * | ..  | ..........................                                                  |
     * +-----+-----------------------------------------------------------------------------+
     */

    // 3. Turn each word array into a TF vector
    val tfBean: HashingTF = new HashingTF()
      .setNumFeatures(1000000) // length of the TF vector
      .setInputCol("wcArr")
      .setOutputCol("tf_vec")
    val tfDF: DataFrame = tfBean.transform(sampleWcDF)

    // 4. Fit the IDF and compute the final TF-IDF vectors
    val idfBean: IDF = new IDF()
      .setInputCol("tf_vec")
      .setOutputCol("tfidf_vec")
    val tf_model: IDFModel = idfBean.fit(tfDF)
    val tf_idf: DataFrame = tf_model.transform(tfDF)

    // 5. Randomly split the feature vectors into a training set and a test set
    //    The pattern binds by position: 0.9 -> train, 0.1 -> test
    val Array(train, test) = tf_idf.randomSplit(Array(0.9, 0.1))

    // 6. Train the model with the Naive Bayes algorithm
    val bayes: NaiveBayes = new NaiveBayes()
      .setLabelCol("label")
      .setFeaturesCol("tfidf_vec")
      .setSmoothing(1.0)
    val bayes_model: NaiveBayesModel = bayes.fit(train)
    // Save the model
    // bayes_model.save("userProfile/data/comment/cmt_classfiy_model")

    // 7. Load the model and predict on the test data
    // val import_model: NaiveBayesModel = NaiveBayesModel.load("userProfile/data/comment/cmt_classfiy_model")
    val predict: DataFrame = bayes_model.transform(test)
    predict.drop("wcArr", "tf_vec", "tfidf_vec", "probability")
      .show(20, false)

    /** Sample predictions:
     * +-----+-------------------------------------------------------------+----------+
     * |label|rawPrediction                                                |prediction|
     * +-----+-------------------------------------------------------------+----------+
     * |2.0  |[-747.637914564428,-731.2594545039569,-670.607711511326]     |2.0       |
     * |2.0  |[-272.37994460222103,-282.57209202292,-311.7303961540079]    |0.0       |
     * |2.0  |[-5767.454153488989,-5535.821898484342,-5800.9303308607905]  |1.0       |
     * |2.0  |[-2684.464068025356,-2708.624329110813,-2583.469344023015]   |2.0       |
     * |2.0  |[-277.9643926287218,-268.26311309671814,-227.48341719673542] |2.0       |
     * |2.0  |[-284.10668520829034,-274.7353536977782,-231.0692541159483]  |2.0       |
     * |2.0  |[-6215.519311631931,-6073.2560110828745,-6310.240365291973]  |1.0       |
     * |2.0  |[-6931.386427418773,-6714.489964773973,-6948.950297510344]   |1.0       |
     * |2.0  |[-4373.763017497593,-4413.6535762004105,-4422.23896893744]   |0.0       |
     * |2.0  |[-372.6616634591026,-370.30136899146345,-342.6704036063473]  |2.0       |
     * | ... | ........................                                    | ......   |
     * +-----+-------------------------------------------------------------+----------+
     */

    // 8. Evaluate the prediction accuracy of the model
    val correctCnts: DataFrame = predict.selectExpr(
      "count(1) as total",
      "count(if(label = prediction, 1, null)) as correct")
    correctCnts.show(10, false)

    /**
     * +------+-------+
     * |total |correct|
     * +------+-------+
     * |245733|204328 |
     * +------+-------+
     *
     * Model prediction accuracy:
     *   correct ÷ total × 100% = 83.15%
     */

    // 9. Next, read the unlabelled data to be classified, then segment it,
    //    build the features, and call the model to predict its class.
    //    The logic mirrors the steps above, so it is not written out here.
    spark.stop()
  }
}
```
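Two details of steps 7 and 8 can be checked by hand.

- With default settings (no `thresholds` set), the `prediction` column should be the index of the largest entry of `rawPrediction`, the per-class log scores. The sample rows above are consistent with that.
- The accuracy is simply correct ÷ total.

The plain-Scala sketch below reproduces both from the numbers printed by the program. `EvaluateSketch`, `argmax` and `accuracy` are hypothetical names.

```scala
// Hypothetical helpers that recompute the prediction and accuracy shown in the sample output.
object EvaluateSketch {
  // Predicted label = index of the largest per-class log score in rawPrediction.
  def argmax(raw: Array[Double]): Double = raw.indices.maxBy(i => raw(i)).toDouble

  // Fraction of (label, prediction) pairs that agree.
  def accuracy(pairs: Seq[(Double, Double)]): Double =
    pairs.count { case (label, pred) => label == pred }.toDouble / pairs.size

  def main(args: Array[String]): Unit = {
    // First two rows of the sample prediction output
    val row1 = Array(-747.637914564428, -731.2594545039569, -670.607711511326)
    val row2 = Array(-272.37994460222103, -282.57209202292, -311.7303961540079)
    println(argmax(row1)) // 2.0
    println(argmax(row2)) // 0.0

    // Accuracy from the reported counts: 204328 correct out of 245733
    println(f"${204328.0 / 245733 * 100}%.2f%%") // 83.15%
  }
}
```

Overall accuracy hides how each class performs. The second sample row, for example, is a positive review predicted as negative. Grouping the same `predict` DataFrame by `label` and `prediction` would show which classes get confused.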


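Disclaimer: This article was contributed by a user and does not represent the position of [wpsshop Blog]. Copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/2023面试高手/article/detail/359532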
