
Deploying Qwen-7B-Chat on Ascend 910B with Streaming Output [PyTorch Framework]: NPU Inference

Author: AllinToyou | 2024-04-03 02:45:12


Preparation

Refer to my previous article, "Running ChatGLM3-6B with Streaming Output on the 910B [PyTorch Framework]".

Pitfalls

- Following the official documentation, I ran qwen-7b-base and qwen-7b-chat under the MindSpore framework. The output of both models had serious problems:

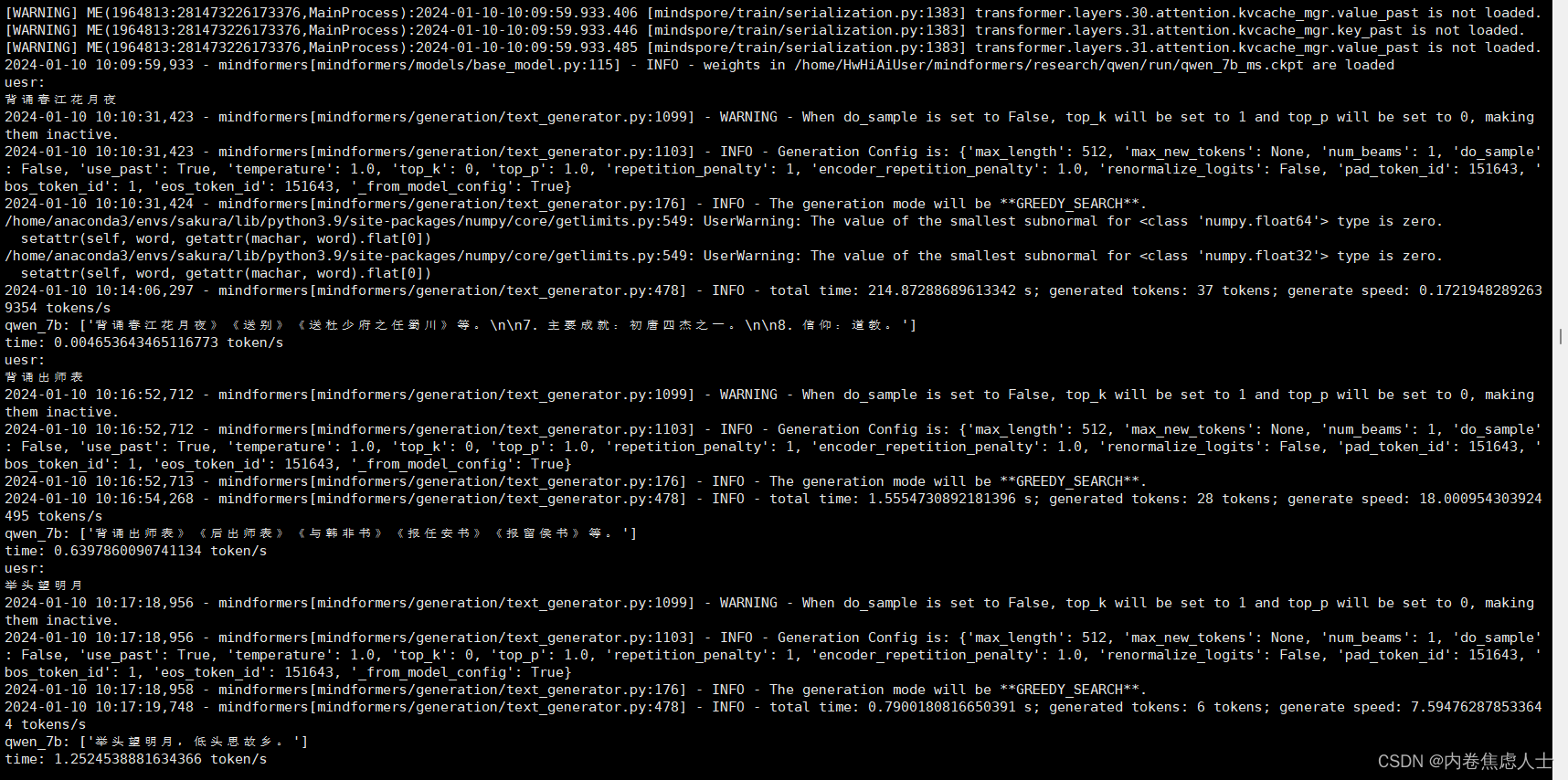

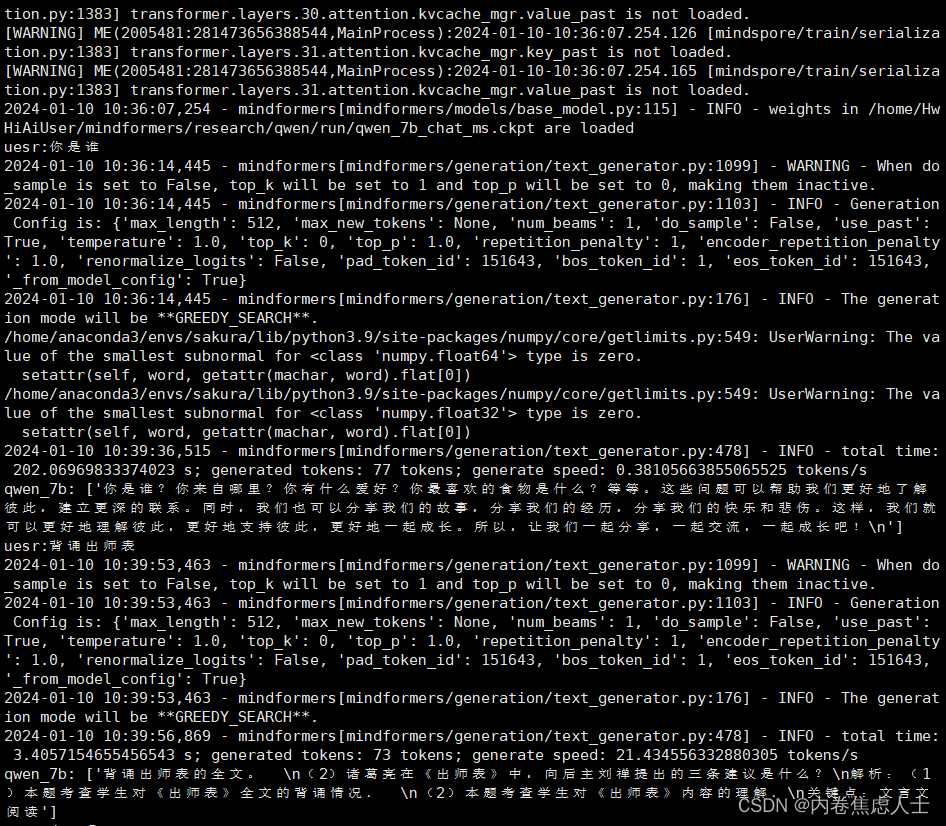

Output of qwen-7b-base

Output of qwen-7b-chat

- Adding the same code I used for ChatGLM3 raises an error:

'torch_npu._C._NPUDeviceProperties' object has no attribute 'major'

    Traceback (most recent call last):
      File "/home/HwHiAiUser/Qwen/cli_demo.py", line 228, in <module>
        main()
      File "/home/HwHiAiUser/Qwen/cli_demo.py", line 130, in main
        model, tokenizer, config = _load_model_tokenizer(args)
      File "/home/HwHiAiUser/Qwen/cli_demo.py", line 68, in _load_model_tokenizer
        model = AutoModelForCausalLM.from_pretrained(
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/transformers/models/auto/auto_factory.py", line 553, in from_pretrained
        model_class = get_class_from_dynamic_module(
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/transformers/dynamic_module_utils.py", line 500, in get_class_from_dynamic_module
        return get_class_in_module(class_name, final_module.replace(".py", ""))
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/transformers/dynamic_module_utils.py", line 200, in get_class_in_module
        module = importlib.import_module(module_path)
      File "/home/anaconda3/envs/sakura/lib/python3.9/importlib/__init__.py", line 127, in import_module
        return _bootstrap._gcd_import(name[level:], package, level)
      File "<frozen importlib._bootstrap>", line 1030, in _gcd_import
      File "<frozen importlib._bootstrap>", line 1007, in _find_and_load
      File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked
      File "<frozen importlib._bootstrap>", line 680, in _load_unlocked
      File "<frozen importlib._bootstrap_external>", line 790, in exec_module
      File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed
      File "/root/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/modeling_qwen.py", line 38, in <module>
        SUPPORT_BF16 = SUPPORT_CUDA and torch.cuda.is_bf16_supported()
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/cuda/__init__.py", line 154, in is_bf16_supported
        torch.cuda.get_device_properties(torch.cuda.current_device()).major >= 8
    AttributeError: 'torch_npu._C._NPUDeviceProperties' object has no attribute 'major'
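The last two frames show what goes wrong. modeling_qwen.py decides bf16 support at import time by calling `torch.cuda.is_bf16_supported()`. That function reads the CUDA compute capability (`.major >= 8`) from the device properties. Here, though, the properties object is torch_npu's `_NPUDeviceProperties`, which has no `major` attribute. This is presumably because the code added for ChatGLM3 routes `torch.cuda` calls to the NPU.

The plain-Python sketch below reproduces that failure. `FakeNPUDeviceProperties`, `cuda_style_bf16_check` and `defensive_bf16_check` are hypothetical stand-ins for illustration, not torch or torch_npu code.

```python
class FakeNPUDeviceProperties:
    """Hypothetical stand-in for torch_npu._C._NPUDeviceProperties: has a name, but no CUDA-style 'major'."""

    def __init__(self, name: str) -> None:
        self.name = name


def cuda_style_bf16_check(props) -> bool:
    # Mirrors the expression in the traceback: get_device_properties(...).major >= 8
    return props.major >= 8


def defensive_bf16_check(props) -> bool:
    # Hypothetical tolerant variant: a missing 'major' is treated as "no bf16 via this check"
    return getattr(props, "major", 0) >= 8


props = FakeNPUDeviceProperties("Ascend910B")
try:
    cuda_style_bf16_check(props)
except AttributeError as exc:
    print(f"AttributeError: {exc}")  # same shape as the error above

print(defensive_bf16_check(props))
```

The `getattr` default is just one way to make such a check tolerate non-CUDA devices. The fix actually used in this article is to hard-code the flags in modeling_qwen.py.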


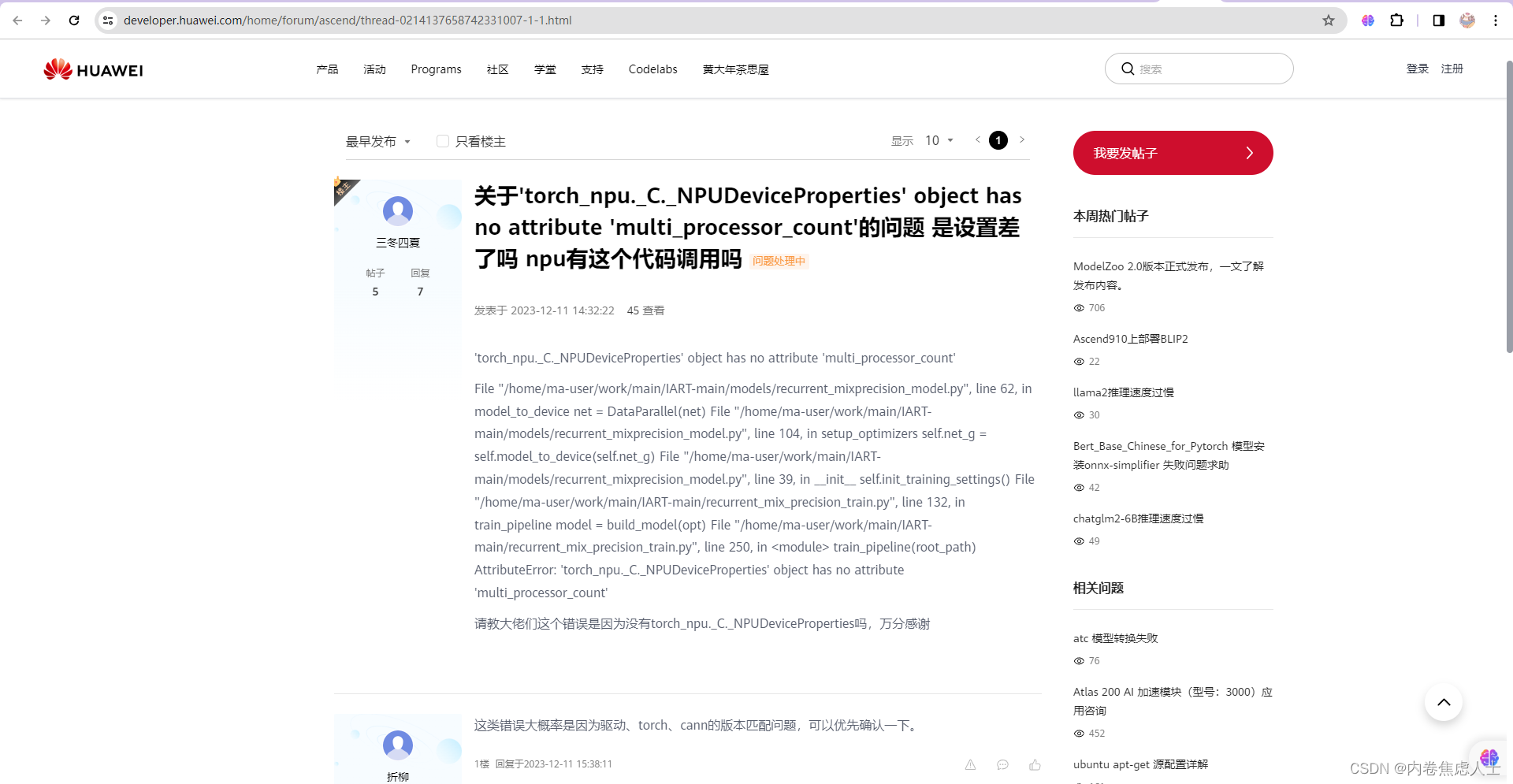

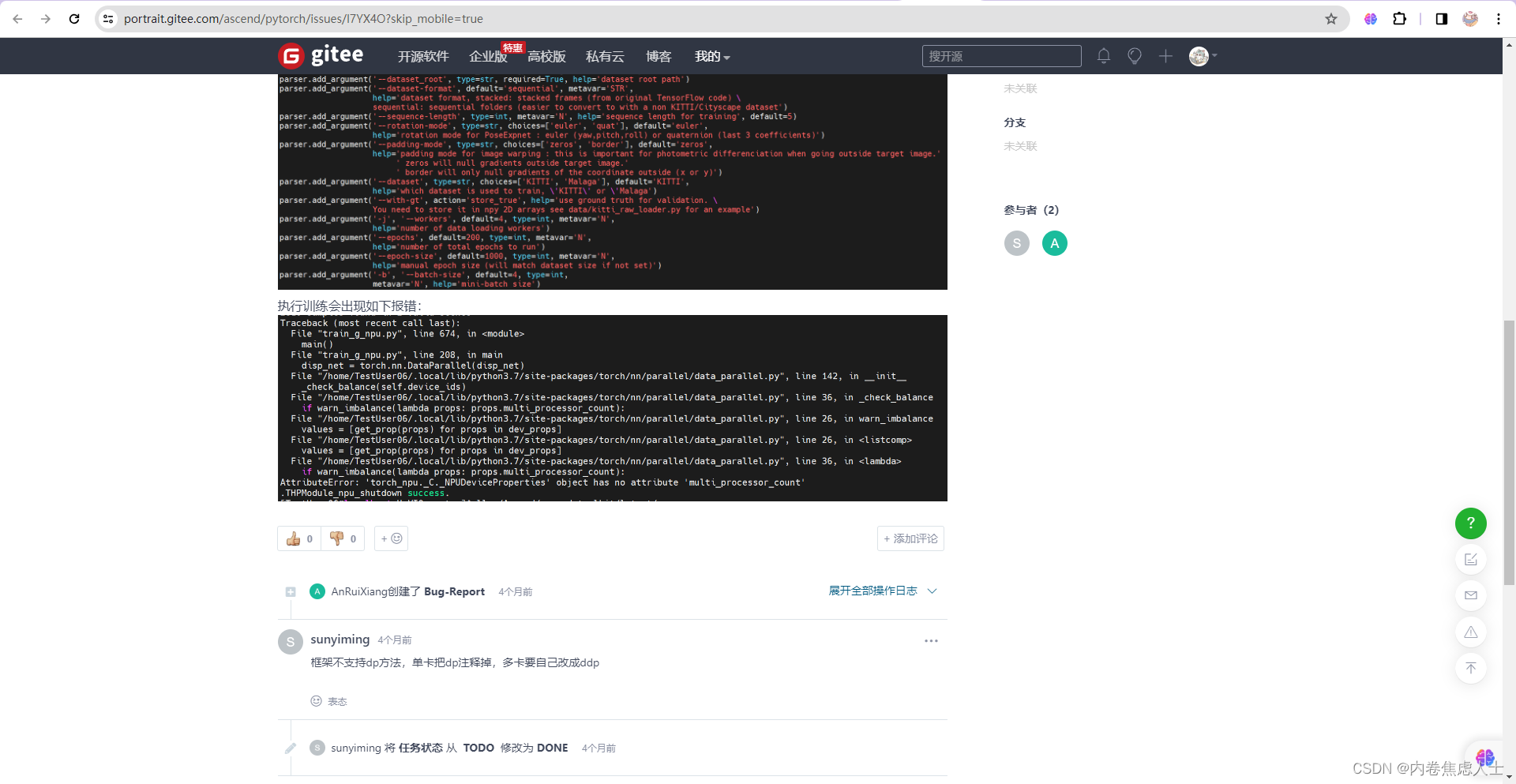

Searching for this error turns up the following results:

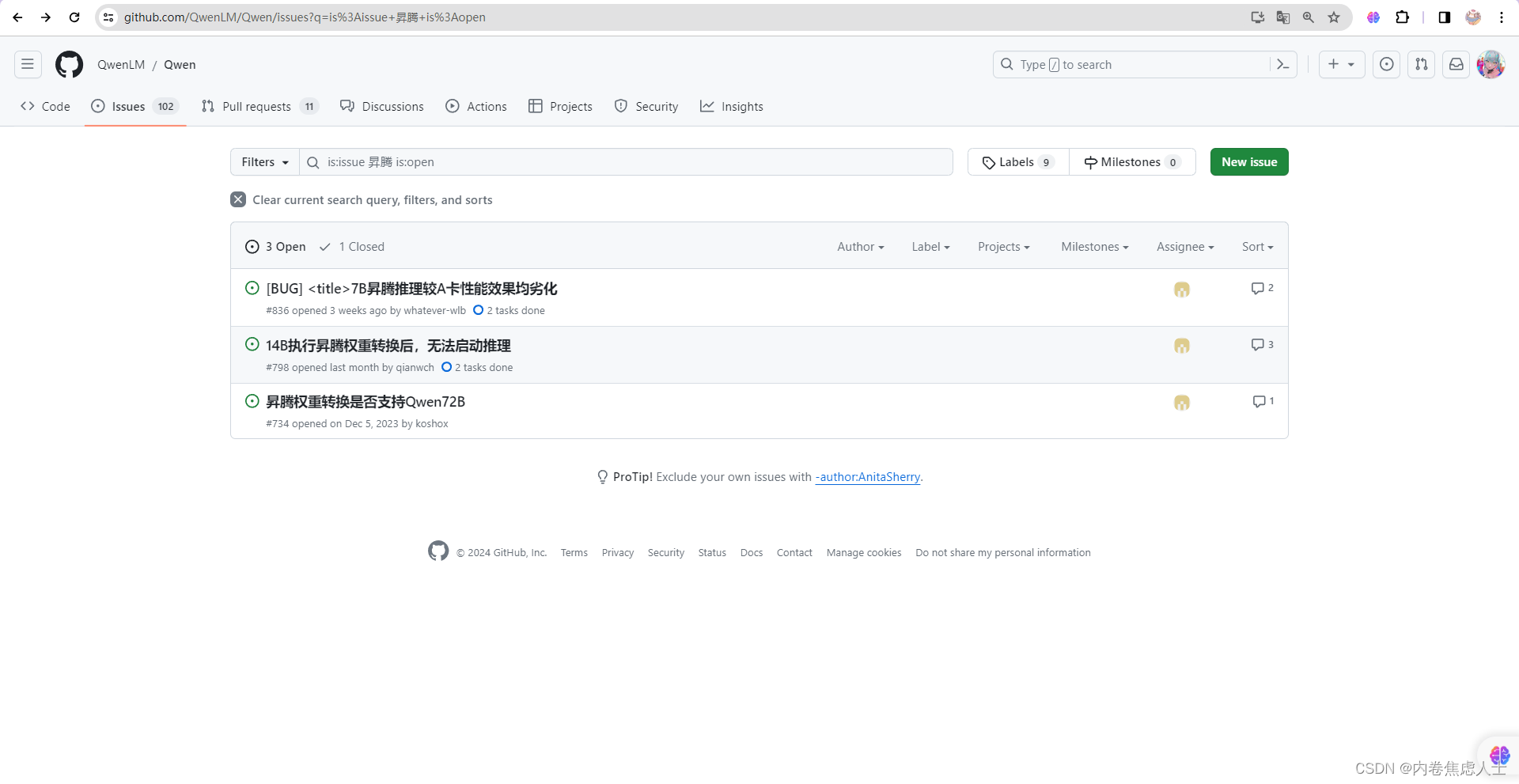

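- Huawei's official forum: the answers are mostly evasive, or the question simply goes unanswered.

- The Gitee issue: the error message is the same, but it was reached through a different process, so it is of no help here.

- GitHub: the inference examples there all run under MindSpore, so they are of no help either.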

Solution

1. modeling_qwen.py

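Find lines 38 and 39 and replace them with the code below. Line 38 is `SUPPORT_BF16 = SUPPORT_CUDA and torch.cuda.is_bf16_supported()`, the line that fails in the traceback above.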

    SUPPORT_BF16 = False
    SUPPORT_FP16 = True
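If you would rather not edit the file by hand, the replacement can be scripted. This is a sketch, assuming the two flags are unindented module-level assignments, as the traceback suggests. It matches on the `SUPPORT_BF16 =` / `SUPPORT_FP16 =` prefixes instead of on line numbers, so it still works if your copy of the file is laid out slightly differently.

The path in `MODELING_FILE` is a hypothetical placeholder; point it at the modeling_qwen.py your model actually loads. The traceback shows the copy under /root/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/ being imported. I assume that copy is refreshed from the checkpoint directory, so patching the checkpoint's copy seems the safer target.

```python
import re
from pathlib import Path

# Hypothetical placeholder path: point this at the modeling_qwen.py you actually load.
MODELING_FILE = Path("Qwen-7B-Chat/modeling_qwen.py")

# Replacement lines taken from the fix described above.
REPLACEMENTS = {
    "SUPPORT_BF16": "SUPPORT_BF16 = False",
    "SUPPORT_FP16": "SUPPORT_FP16 = True",
}


def patch_precision_flags(path: Path) -> int:
    """Rewrite unindented SUPPORT_BF16 / SUPPORT_FP16 assignments; return how many lines changed."""
    lines = path.read_text(encoding="utf-8").splitlines(keepends=True)
    changed = 0
    for i, line in enumerate(lines):
        body = line.rstrip("\r\n")
        ending = line[len(body):]  # keep the original line ending (\n or \r\n)
        for name, new_line in REPLACEMENTS.items():
            # Only match module-level assignments such as "SUPPORT_BF16 = ..." at column 0
            if re.match(rf"{name}\s*=", body) and body != new_line:
                lines[i] = new_line + ending
                changed += 1
                break
    path.write_text("".join(lines), encoding="utf-8")
    return changed
```

Calling `patch_precision_flags(MODELING_FILE)` returns the number of lines it rewrote. A second call returns 0, because the lines already match.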

I also tried `SUPPORT_BF16 = True`, which fails with:

    Traceback (most recent call last):
      File "/home/HwHiAiUser/Qwen/cli_demo.py", line 228, in <module>
        main()
      File "/home/HwHiAiUser/Qwen/cli_demo.py", line 212, in main
        for response in model.chat_stream(tokenizer, query, history=history, generation_config=config):
      File "/root/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/modeling_qwen.py", line 1216, in stream_generator
        for token in self.generate_stream(
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/utils/_contextlib.py", line 35, in generator_context
        response = gen.send(None)
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/transformers_stream_generator/main.py", line 931, in sample_stream
        outputs = self(
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1518, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1527, in _call_impl
        return forward_call(*args, **kwargs)
      File "/root/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/modeling_qwen.py", line 1045, in forward
        transformer_outputs = self.transformer(
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1518, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1527, in _call_impl
        return forward_call(*args, **kwargs)
      File "/root/.cache/huggingface/modules/transformers_modules/Qwen-7B-Chat/modeling_qwen.py", line 824, in forward
        inputs_embeds = self.wte(input_ids)
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1518, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1527, in _call_impl
        return forward_call(*args, **kwargs)
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/modules/sparse.py", line 162, in forward
        return F.embedding(
      File "/home/anaconda3/envs/sakura/lib/python3.9/site-packages/torch/nn/functional.py", line 2233, in embedding
        return torch.embedding(weight, input, padding_idx, scale_grad_by_freq, sparse)
    RuntimeError: call aclnnEmbedding failed, detail:EZ1001: weight not implemented for DT_BFLOAT16, should be in dtype support list [DT_DOUBLE,DT_FLOAT,DT_FLOAT16,DT_INT64,DT_INT32,DT_INT16,DT_INT8,DT_UINT8,DT_BOOL,DT_COMPLEX128,DT_COMPLEX64,].

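Why `SUPPORT_BF16 = False` plus `SUPPORT_FP16 = True` works: as far as I can tell from the Qwen-7B-Chat modeling_qwen.py, these flags drive an automatic precision choice. When none of bf16/fp16/fp32 is set in the config, the model picks bf16 if `SUPPORT_BF16` is true, otherwise fp16 if `SUPPORT_FP16` is true, otherwise fp32, and casts its weights accordingly. The error above says the NPU embedding kernel (aclnnEmbedding) does not accept DT_BFLOAT16 weights.

The plain-Python sketch below models that fallback and checks each outcome against the dtype list copied from the error. `autoset_precision` and the `PRECISION_TO_DT` mapping are simplified, hypothetical stand-ins, not Qwen's code.

```python
# Weight dtypes accepted by aclnnEmbedding, copied from the error message above.
EMBEDDING_WEIGHT_DTYPES = {
    "DT_DOUBLE", "DT_FLOAT", "DT_FLOAT16", "DT_INT64", "DT_INT32", "DT_INT16",
    "DT_INT8", "DT_UINT8", "DT_BOOL", "DT_COMPLEX128", "DT_COMPLEX64",
}

# Assumed correspondence between Qwen's precision names and the dtype names in the error.
PRECISION_TO_DT = {"bf16": "DT_BFLOAT16", "fp16": "DT_FLOAT16", "fp32": "DT_FLOAT"}


def autoset_precision(support_bf16: bool, support_fp16: bool) -> str:
    """Simplified bf16 -> fp16 -> fp32 fallback driven by the SUPPORT_* flags."""
    if support_bf16:
        return "bf16"
    if support_fp16:
        return "fp16"
    return "fp32"


for bf16, fp16 in [(True, True), (False, True), (False, False)]:
    precision = autoset_precision(bf16, fp16)
    ok = PRECISION_TO_DT[precision] in EMBEDDING_WEIGHT_DTYPES
    print(f"SUPPORT_BF16={bf16}, SUPPORT_FP16={fp16} -> {precision}, embedding supported: {ok}")
```

Only the first combination lands on an unsupported dtype. That is consistent with the two runs above: forcing bf16 fails inside `self.wte(input_ids)`, while the fp16 setting runs.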

2. cli_demo.py

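Comment out the `resume_download` argument where the model is loaded:

    model = AutoModelForCausalLM.from_pretrained(
        args.checkpoint_path,
        device_map=device_map,
        trust_remote_code=True,
        # resume_download=True,
    ).npu().eval()  # .npu() (provided by torch_npu) moves the model to the Ascend NPU; .eval() switches to inference mode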


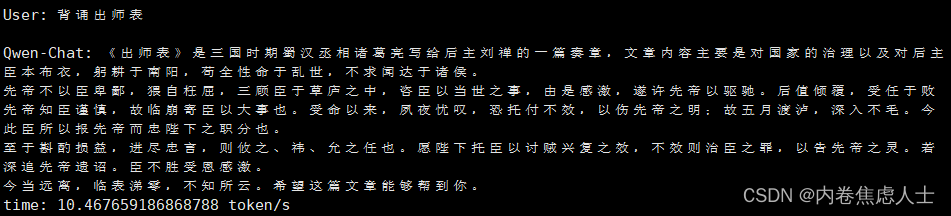

Results

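- Inference is slow, roughly twice as slow as under MindSpore. At least the model now produces normal output, even though the answer is still wrong.

- Amusingly, when asked to recite the Chu Shi Biao (出师表), ChatGLM3 under MindSpore recited only the first half. Qwen here recited only the second half, and ChatGLM3 under PyTorch recited the whole text.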
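To put a number on "roughly twice as slow", one option is to time the stream directly. This is a minimal sketch, assuming each value yielded by `model.chat_stream(...)` corresponds to one generated token and is the cumulative response so far, so chunks per second approximates tokens per second. `time_stream` and `fake_stream` are hypothetical helpers, not part of the Qwen code.

```python
import time
from typing import Iterable, Optional


def time_stream(chunks: Iterable[str]) -> dict:
    """Consume a streaming generator; report time to first chunk, total time and chunks per second."""
    start = time.perf_counter()
    first: Optional[float] = None
    count = 0
    final_text = ""
    for text in chunks:
        if first is None:
            first = time.perf_counter() - start  # latency until the first chunk arrives
        count += 1
        final_text = text  # assumed: each yield is the cumulative response so far
    total = time.perf_counter() - start
    return {
        "chunks": count,
        "time_to_first_chunk_s": first,
        "total_s": total,
        "chunks_per_s": count / total if total > 0 else 0.0,
        "final_text": final_text,
    }


def fake_stream():
    """Hypothetical stand-in for model.chat_stream(...): yields a growing response."""
    text = ""
    for piece in ["Hello", ",", " world"]:
        time.sleep(0.01)  # pretend each token takes about 10 ms
        text += piece
        yield text


stats = time_stream(fake_stream())
print(stats["chunks"], stats["final_text"])
```

In cli_demo.py, the same helper could wrap the existing call, for example `stats = time_stream(model.chat_stream(tokenizer, query, history=history, generation_config=config))`. A comparable measurement on the MindSpore side would then make the speed comparison concrete.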

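Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and the site assumes no legal responsibility for it. If you find infringing content, please contact the site. When reposting, please credit the source: https://www.wpsshop.cn/w/AllinToyou/article/detail/354278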
