
Implementing Real-Time Face Recognition with GPUImage

Author: AllinToyou | 2024-06-17 06:08:27


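Recently I have been researching how to implement biometric liveness detection in Objective-C. I found that the SDK already ships with classes that provide this functionality, so I investigated them and built an implementation.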

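During implementation I ran into a particularly nasty pitfall: the GPUImage framework captures video in YUV format, and YUV frames cannot be used with the Objective-C libraries to perform real-time recognition.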

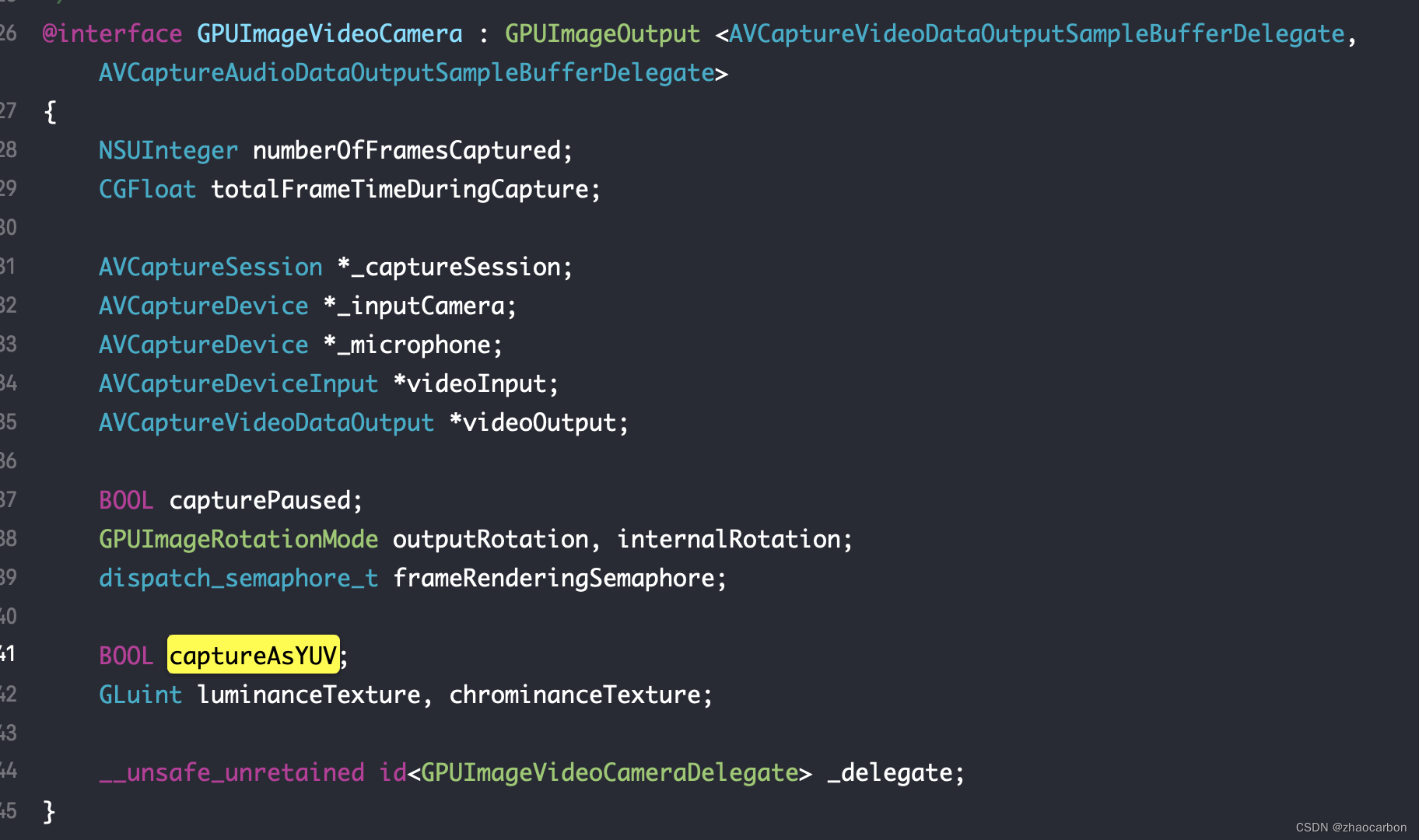
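To make the pitfall concrete: with YUV capture enabled, each camera frame arrives as a bi-planar 4:2:0 buffer (the `kCVPixelFormatType_420YpCbCr8BiPlanar...` formats selected in the initializer shown later), not as packed RGB. The sketch below is plain C, which also compiles as Objective-C, and shows what reading one pixel from such a buffer and converting it to RGB involves. It is a minimal CPU-side illustration, not GPUImage's code: the function names are hypothetical, it uses the standard BT.709 coefficients rather than GPUImage's exact shader constants, and it assumes you already have each plane's base address and bytes per row (on iOS these would come from `CVPixelBufferGetBaseAddressOfPlane` and `CVPixelBufferGetBytesPerRowOfPlane` after calling `CVPixelBufferLockBaseAddress`).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: read the Y, Cb and Cr samples for pixel (x, y) of a
   bi-planar 4:2:0 frame. Plane 0 holds one Y byte per pixel; plane 1 holds
   interleaved Cb,Cr pairs, one pair per 2x2 block of pixels. The strides are
   bytes per row, which may exceed the width because of row padding. */
static void yuv420bp_sample(const uint8_t *y_plane, size_t y_stride,
                            const uint8_t *cbcr_plane, size_t cbcr_stride,
                            size_t x, size_t y,
                            uint8_t *out_y, uint8_t *out_cb, uint8_t *out_cr) {
    assert(y_plane && cbcr_plane && out_y && out_cb && out_cr);
    *out_y = y_plane[y * y_stride + x];
    /* Each Cb/Cr pair covers a 2x2 block, so halve both coordinates. */
    const uint8_t *pair = cbcr_plane + (y / 2) * cbcr_stride + (x / 2) * 2;
    *out_cb = pair[0];
    *out_cr = pair[1];
}

/* Round and clamp a float to the 0..255 range. */
static uint8_t clamp_u8(float v) {
    if (v <= 0.0f) return 0;
    if (v >= 255.0f) return 255;
    return (uint8_t)(v + 0.5f);
}

/* Hypothetical helper: convert one YCbCr sample to RGB with the standard
   BT.709 coefficients.
   full_range = 1: Y, Cb, Cr use 0..255 (the ...FullRange format).
   full_range = 0: Y uses 16..235 and Cb/Cr 16..240 (the ...VideoRange format). */
static void ycbcr709_to_rgb(uint8_t y, uint8_t cb, uint8_t cr, int full_range,
                            uint8_t *r, uint8_t *g, uint8_t *b) {
    float yf  = (float)y;
    float cbf = (float)cb - 128.0f;
    float crf = (float)cr - 128.0f;
    if (!full_range) {
        /* Stretch the video ("studio") range to full range first. */
        yf  = (yf - 16.0f) * (255.0f / 219.0f);
        cbf = cbf * (255.0f / 224.0f);
        crf = crf * (255.0f / 224.0f);
    }
    *r = clamp_u8(yf + 1.5748f * crf);
    *g = clamp_u8(yf - 0.1873f * cbf - 0.4681f * crf);
    *b = clamp_u8(yf + 1.8556f * cbf);
}
```

Doing this per pixel on the CPU would likely be too slow for real-time use; GPUImage performs the equivalent conversion in a fragment shader, which is what the `yuvConversionProgram` in its initializer sets up.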

Now let's take apart the implementation of GPUImageVideoCamera:

```objc
@interface GPUImageVideoCamera : GPUImageOutput <AVCaptureVideoDataOutputSampleBufferDelegate, AVCaptureAudioDataOutputSampleBufferDelegate>

- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition;
```

As you can see, the class provides an initializer. Its implementation is as follows:

```objc
- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition;
{
    if (!(self = [super init]))
    {
        return nil;
    }

    cameraProcessingQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_HIGH,0);
    audioProcessingQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_LOW,0);

    frameRenderingSemaphore = dispatch_semaphore_create(1);

    _frameRate = 0; // This will not set frame rate unless this value gets set to 1 or above
    _runBenchmark = NO;
    capturePaused = NO;
    outputRotation = kGPUImageNoRotation;
    internalRotation = kGPUImageNoRotation;
    captureAsYUV = YES; // YUV capture is on by default: this is the pitfall described above
    _preferredConversion = kColorConversion709;

    // Grab the back-facing or front-facing camera
    _inputCamera = nil;
    NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
    for (AVCaptureDevice *device in devices)
    {
        if ([device position] == cameraPosition)
        {
            _inputCamera = device;
        }
    }

    if (!_inputCamera) {
        return nil;
    }

    // Create the capture session
    _captureSession = [[AVCaptureSession alloc] init];

    [_captureSession beginConfiguration];

    // Add the video input
    NSError *error = nil;
    videoInput = [[AVCaptureDeviceInput alloc] initWithDevice:_inputCamera error:&error];
    if ([_captureSession canAddInput:videoInput])
    {
        [_captureSession addInput:videoInput];
    }

    // Add the video frame output
    videoOutput = [[AVCaptureVideoDataOutput alloc] init];
    [videoOutput setAlwaysDiscardsLateVideoFrames:NO];

    // if (captureAsYUV && [GPUImageContext deviceSupportsRedTextures])
    if (captureAsYUV && [GPUImageContext supportsFastTextureUpload])
    {
        // Prefer full-range 4:2:0 YCbCr if the output offers it, otherwise use video range
        BOOL supportsFullYUVRange = NO;
        NSArray *supportedPixelFormats = videoOutput.availableVideoCVPixelFormatTypes;
        for (NSNumber *currentPixelFormat in supportedPixelFormats)
        {
            if ([currentPixelFormat intValue] == kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)
            {
                supportsFullYUVRange = YES;
            }
        }

        if (supportsFullYUVRange)
        {
            [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarFullRange] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
            isFullYUVRange = YES;
        }
        else
        {
            [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
            isFullYUVRange = NO;
        }
    }
    else
    {
        // Frames are delivered as BGRA only when YUV capture is off or fast texture upload is unavailable
        [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_32BGRA] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
    }

    runSynchronouslyOnVideoProcessingQueue(^{

        if (captureAsYUV)
        {
            [GPUImageContext useImageProcessingContext];
            // if ([GPUImageContext deviceSupportsRedTextures])
            // {
            //     yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVVideoRangeConversionForRGFragmentShaderString];
            // }
            // else
            // {
            // Choose the shader that converts YUV to RGB on the GPU for the selected range
            if (isFullYUVRange)
            {
                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVFullRangeConversionForLAFragmentShaderString];
            }
            else
            {
                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVVideoRangeConversionForLAFragmentShaderString];
            }

            // }

            if (!yuvConversionProgram.initialized)
            {
                [yuvConversionProgram addAttribute:@"position"];
                [yuvConversionProgram addAttribute:@"inputTextureCoordinate"];

                if (![yuvConversionProgram link])
                {
                    NSString *progLog = [yuvConversionProgram programLog];
                    NSLog(@"Program link log: %@", progLog);
                    NSString *fragLog = [yuvConversionProgram fragmentShaderLog];
                    NSLog(@"Fragment shader compile log: %@", fragLog);
                    NSString *vertLog = [yuvConversionProgram vertexShaderLog];
                    NSLog(@"Vertex shader compile log: %@", vertLog);
                    yuvConversionProgram = nil;
                    NSAssert(NO, @"Filter shader link failed");
                }
            }

            yuvConversionPositionAttribute = [yuvConversionProgram attributeIndex:@"position"];
            yuvConversionTextureCoordinateAttribute = [yuvConversionProgram attributeIndex:@"inputTextureCoordinate"];
            yuvConversionLuminanceTextureUniform = [yuvConversionProgram uniformIndex:@"luminanceTexture"];
            yuvConversionChrominanceTextureUniform = [yuvConversionProgram uniformIndex:@"chrominanceTexture"];
            yuvConversionMatrixUniform = [yuvConversionProgram uniformIndex:@"colorConversionMatrix"];

            [GPUImageContext setActiveShaderProgram:yuvConversionProgram];

            glEnableVertexAttribArray(yuvConversionPositionAttribute);
            glEnableVertexAttribArray(yuvConversionTextureCoordinateAttribute);
        }
    });

    [videoOutput setSampleBufferDelegate:self queue:cameraProcessingQueue];
    if ([_captureSession ...
```
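The pixel-format branch in the initializer boils down to a small decision function. The C sketch below mirrors that logic under stated assumptions: `choose_pixel_format` and the `PF_*` macros are hypothetical names, the FourCC values are those of the CoreVideo constants used in the listing ('420f', '420v' and 'BGRA'), and the `use_yuv` parameter stands in for `captureAsYUV && [GPUImageContext supportsFastTextureUpload]`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Pack four characters into a FourCC code, the way CoreVideo's
   pixel-format constants are defined. */
#define FOURCC(a, b, c, d) \
    (((uint32_t)(a) << 24) | ((uint32_t)(b) << 16) | \
     ((uint32_t)(c) << 8) | (uint32_t)(d))

/* Same codes as kCVPixelFormatType_420YpCbCr8BiPlanarFullRange ('420f'),
   kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange ('420v') and
   kCVPixelFormatType_32BGRA ('BGRA'). */
#define PF_420_FULL  FOURCC('4', '2', '0', 'f')
#define PF_420_VIDEO FOURCC('4', '2', '0', 'v')
#define PF_BGRA      FOURCC('B', 'G', 'R', 'A')

/* Hypothetical mirror of the initializer's branch: with YUV enabled, pick
   full-range 4:2:0 if the output lists it, otherwise video-range 4:2:0;
   with YUV disabled, use BGRA. *is_full_range plays the role of the
   isFullYUVRange flag. */
static uint32_t choose_pixel_format(int use_yuv,
                                    const uint32_t *available, size_t count,
                                    int *is_full_range) {
    assert(is_full_range != NULL);
    assert(count == 0 || available != NULL);
    *is_full_range = 0;
    if (!use_yuv) {
        return PF_BGRA;
    }
    for (size_t i = 0; i < count; i++) {
        if (available[i] == PF_420_FULL) {
            *is_full_range = 1;
            return PF_420_FULL;
        }
    }
    return PF_420_VIDEO;
}
```

Seen this way, the only path that hands the sample-buffer delegate BGRA frames is the one where YUV capture is turned off (or fast texture upload is unavailable). With the default `captureAsYUV = YES`, frames arrive as YCbCr and are converted to RGB only on the GPU.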

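Disclaimer: This article was contributed by a user and does not represent the views of the wpsshop blog. Copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/AllinToyou/article/detail/729930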
