Object Detection with the YOLOv8 Network (Part 3): Training on Your Own Dataset

Author: weixin_40725706 | 2024-02-15 14:37:30


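The previous article explained in detail how to build your own dataset and how to modify the model and dataset configuration files. This article covers how to train on your own dataset and how to validate the result.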

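VOC2012 dataset download page:

http://host.robots.ox.ac.uk/pascal/VOC/voc2012/

Full COCO dataset download (this link is the 2017 train/val annotations archive):

http://images.cocodataset.org/annotations/annotations_trainval2017.zip

The image below is used as the prediction target throughout this article.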

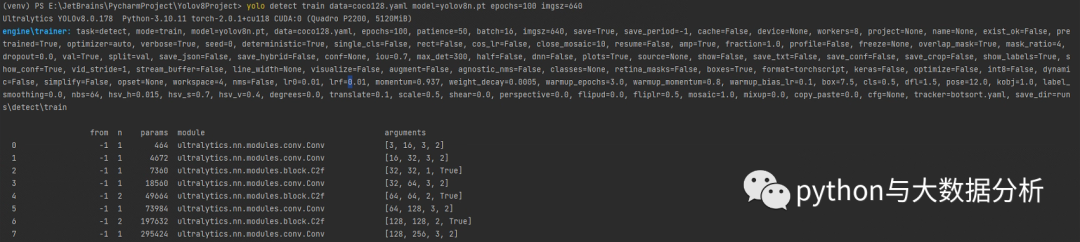

I. Train on the coco128 dataset. coco128.yaml already contains the download script; select the lightweight yolov8n model and start training.

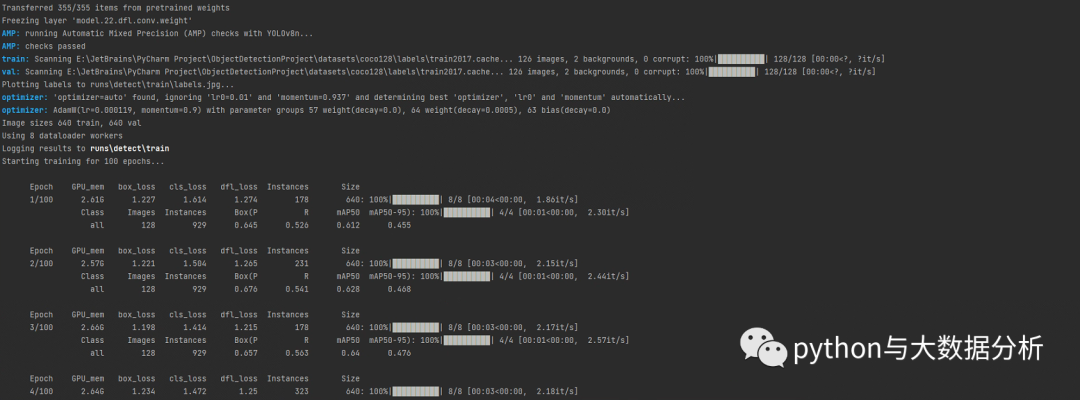

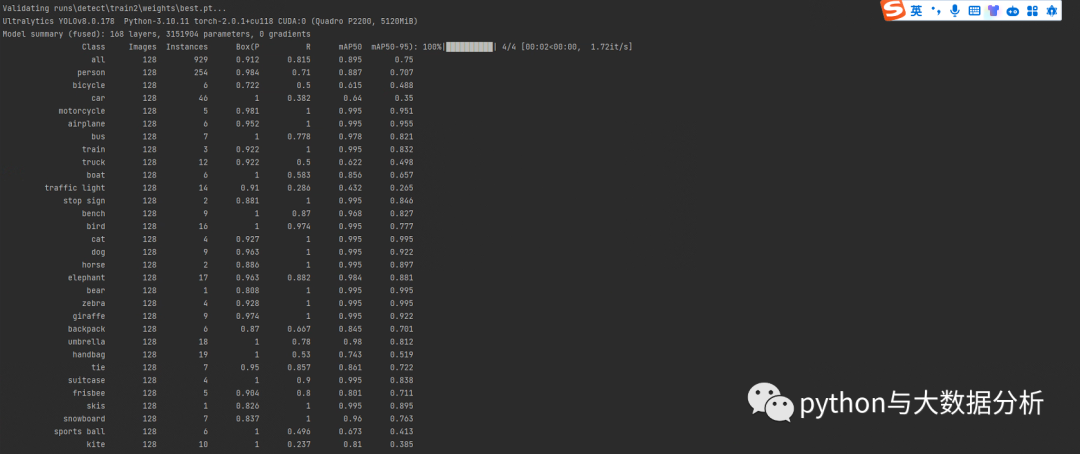

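`yolo detect train data=coco128.yaml model=model\yolov8n.pt epochs=100 imgsz=640`

The screenshots of the training run have three parts. The first part shows the expanded command-line arguments and the network structure.

The second part shows the progress of each training epoch.

The third part shows the validation results for each class label.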
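The same run can also be started from Python instead of the `yolo` command line. The following is a minimal sketch, assuming the `ultralytics` package is installed and the weights sit at `model/yolov8n.pt` as in the command above. The names `TRAIN_ARGS` and `train_coco128` are introduced here for illustration only.

```python
# Keyword arguments mirroring the CLI overrides used in this section.
TRAIN_ARGS = {"data": "coco128.yaml", "epochs": 100, "imgsz": 640}


def train_coco128(weights="model/yolov8n.pt"):
    """Train on coco128 through the ultralytics Python API.

    The import is done lazily so that this module can be loaded
    (and TRAIN_ARGS inspected) without ultralytics installed.
    """
    from ultralytics import YOLO

    model = YOLO(weights)             # load the pretrained yolov8n weights
    return model.train(**TRAIN_ARGS)  # counterpart of: yolo detect train data=... epochs=... imgsz=...
```

Calling `train_coco128()` starts the run; as with the CLI, the results should land in a new directory under `runs/detect/`.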

II. Train on the VOC2012 dataset using the two yaml configuration files defined earlier; select the lightweight yolov8n model and start training.

`yolo detect train data=E:\JetBrains\PycharmProject\Yolov8Project\venv\Lib\site-packages\ultralytics\cfg\datasets\VOC2012.yaml model=E:\JetBrains\PycharmProject\Yolov8Project\venv\Lib\site-packages\ultralytics\cfg\models\v8\VOC2012.yaml pretrained=model\yolov8n.pt epochs=10 imgsz=640`

The run log is reproduced below; its structure is the same as described above.

    (venv) PS E:\JetBrains\PycharmProject\Yolov8Project> yolo detect train data=E:\JetBrains\PycharmProject\Yolov8Project\venv\Lib\site-packages\ultralytics\cfg\datasets\VOC2012.yaml model=E:\JetBrains\PycharmProject\Yolov8Project\venv\Lib\site-packages\ultralytics\cfg\models\v8\VOC2012.yaml pretrained=model\yolov8n.pt epochs=10 imgsz=640
    WARNING no model scale passed. Assuming scale='n'.
        from          n  params  module                                arguments
     0  -1            1     464  ultralytics.nn.modules.conv.Conv      [3, 16, 3, 2]
     1  -1            1    4672  ultralytics.nn.modules.conv.Conv      [16, 32, 3, 2]
     2  -1            1    7360  ultralytics.nn.modules.block.C2f      [32, 32, 1, True]
     3  -1            1   18560  ultralytics.nn.modules.conv.Conv      [32, 64, 3, 2]
     4  -1            2   49664  ultralytics.nn.modules.block.C2f      [64, 64, 2, True]
     5  -1            1   73984  ultralytics.nn.modules.conv.Conv      [64, 128, 3, 2]
     6  -1            2  197632  ultralytics.nn.modules.block.C2f      [128, 128, 2, True]
     7  -1            1  295424  ultralytics.nn.modules.conv.Conv      [128, 256, 3, 2]
     8  -1            1  460288  ultralytics.nn.modules.block.C2f      [256, 256, 1, True]
     9  -1            1  164608  ultralytics.nn.modules.block.SPPF     [256, 256, 5]
    10  -1            1       0  torch.nn.modules.upsampling.Upsample  [None, 2, 'nearest']
    11  [-1, 6]       1       0  ultralytics.nn.modules.conv.Concat    [1]
    12  -1            1  148224  ultralytics.nn.modules.block.C2f      [384, 128, 1]
    13  -1            1       0  torch.nn.modules.upsampling.Upsample  [None, 2, 'nearest']
    14  [-1, 4]       1       0  ultralytics.nn.modules.conv.Concat    [1]
    15  -1            1   37248  ultralytics.nn.modules.block.C2f      [192, 64, 1]
    16  -1            1   36992  ultralytics.nn.modules.conv.Conv      [64, 64, 3, 2]
    17  [-1, 12]      1       0  ultralytics.nn.modules.conv.Concat    [1]
    18  -1            1  123648  ultralytics.nn.modules.block.C2f      [192, 128, 1]
    19  -1            1  147712  ultralytics.nn.modules.conv.Conv      [128, 128, 3, 2]
    20  [-1, 9]       1       0  ultralytics.nn.modules.conv.Concat    [1]
    21  -1            1  493056  ultralytics.nn.modules.block.C2f      [384, 256, 1]
    22  [15, 18, 21]  1  755212  ultralytics.nn.modules.head.Detect    [20, [64, 128, 256]]
    VOC2012 summary: 225 layers, 3014748 parameters, 3014732 gradients
    Transferred 319/355 items from pretrained weights
    Ultralytics YOLOv8.0.178 Python-3.10.11 torch-2.0.1+cu118 CUDA:0 (Quadro P2200, 5120MiB)
    engine\trainer: task=detect, mode=train, model=E:\JetBrains\PycharmProject\Yolov8Project\venv\Lib\site-packages\ultralytics\cfg\models\v8\VOC2012.yaml, data=E:\JetBrains\PycharmProject\Yolov8Project\venv\Lib\site-packages\ultralytics\cfg\datasets\VOC2012.yaml, epochs=10, patience=50, batch=16, imgsz=640, save=True, save_period=-1, cache=False, device=None, workers=8, project=None, name=None, exist_ok=False, pretrained=model\yolov8n.pt, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=10, resume=False, amp=True, fraction=1.0, profile=False, freeze=None, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, show=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, vid_stride=1, stream_buffer=False, line_width=None, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, boxes=True, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=None, workspace=4, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, label_smoothing=0.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0, cfg=None, tracker=botsort.yaml, save_dir=runs\detect\train8
    WARNING no model scale passed. Assuming scale='n'.
        from          n  params  module                                arguments
     0  -1            1     464  ultralytics.nn.modules.conv.Conv      [3, 16, 3, 2]
     1  -1            1    4672  ultralytics.nn.modules.conv.Conv      [16, 32, 3, 2]
     2  -1            1    7360  ultralytics.nn.modules.block.C2f      [32, 32, 1, True]

    train: Scanning E:\JetBrains\PyCharm Project\ObjectDetectionProject\datasets\VOC2012\labels\train.cache... 17125 images, 195 backgrounds, 0 corrupt: 100%|██████████| 17125/17125 [00:00<?, ?it/s]
    val: Scanning E:\JetBrains\PyCharm Project\ObjectDetectionProject\datasets\VOC2012\labels\train.cache... 17125 images, 195 backgrounds, 0 corrupt: 100%|██████████| 17125/17125 [00:00<?, ?it/s]
    Plotting labels to runs\detect\train8\labels.jpg...
    optimizer: 'optimizer=auto' found, ignoring 'lr0=0.01' and 'momentum=0.937' and determining best 'optimizer', 'lr0' and 'momentum' automatically...
    optimizer: AdamW(lr=0.000417, momentum=0.9) with parameter groups 57 weight(decay=0.0), 64 weight(decay=0.0005), 63 bias(decay=0.0)
    Image sizes 640 train, 640 val
    Using 8 dataloader workers
    Logging results to runs\detect\train8
    Starting training for 10 epochs...
    Closing dataloader mosaic

          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           1/10      2.41G     0.9156      2.572      1.244         10        640: 100%|██████████| 1071/1071 [07:06<00:00, 2.51it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:44<00:00, 3.26it/s]
            all      17125      34913      0.621      0.572      0.605      0.436
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           2/10      2.53G      1.006      1.869      1.311         10        640: 100%|██████████| 1071/1071 [07:06<00:00, 2.51it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:40<00:00, 3.35it/s]
            all      17125      34913      0.644       0.54      0.592      0.414
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           3/10      2.49G      1.038      1.661      1.344          9        640: 100%|██████████| 1071/1071 [07:02<00:00, 2.54it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:44<00:00, 3.25it/s]
            all      17125      34913      0.616      0.562      0.594      0.419
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           4/10      2.47G      1.021      1.493      1.331         12        640: 100%|██████████| 1071/1071 [07:00<00:00, 2.55it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:42<00:00, 3.29it/s]
            all      17125      34913      0.651      0.588      0.638      0.457
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           5/10      2.48G      1.005      1.403      1.318          4        640: 100%|██████████| 1071/1071 [07:00<00:00, 2.54it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:41<00:00, 3.31it/s]
            all      17125      34913      0.673      0.592       0.65      0.467
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           6/10      2.46G     0.9682      1.299       1.29          9        640: 100%|██████████| 1071/1071 [06:55<00:00, 2.58it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:29<00:00, 3.58it/s]
            all      17125      34913      0.709      0.623      0.693      0.511
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           7/10      2.48G      0.932      1.209      1.261          8        640: 100%|██████████| 1071/1071 [06:57<00:00, 2.56it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:39<00:00, 3.37it/s]
            all      17125      34913      0.721      0.661      0.722      0.542
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           8/10      2.49G     0.8961      1.127      1.232          9        640: 100%|██████████| 1071/1071 [07:00<00:00, 2.55it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:40<00:00, 3.35it/s]
            all      17125      34913      0.735       0.67      0.746      0.567
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
           9/10      2.47G     0.8565      1.058      1.202          8        640: 100%|██████████| 1071/1071 [06:58<00:00, 2.56it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:29<00:00, 3.59it/s]
            all      17125      34913      0.766      0.696      0.773      0.597
          Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
          10/10      2.45G     0.8278     0.9889      1.179         11        640: 100%|██████████| 1071/1071 [06:55<00:00, 2.58it/s]
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:28<00:00, 3.61it/s]
            all      17125      34913      0.777      0.718      0.795      0.621

    10 epochs completed in 1.620 hours.
    Optimizer stripped from runs\detect\train8\weights\last.pt, 6.2MB
    Optimizer stripped from runs\detect\train8\weights\best.pt, 6.2MB
    Validating runs\detect\train8\weights\best.pt...
    Ultralytics YOLOv8.0.178 Python-3.10.11 torch-2.0.1+cu118 CUDA:0 (Quadro P2200, 5120MiB)
    VOC2012 summary (fused): 168 layers, 3009548 parameters, 0 gradients
          Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100%|██████████| 536/536 [02:31<00:00, 3.54it/s]
            all      17125      34913      0.777      0.718      0.795      0.621
      aeroplane      17125        911      0.924      0.813      0.902      0.731
        bicycle      17125        753      0.765      0.578      0.737      0.582
           bird      17125       1169      0.894      0.757      0.862      0.651
           boat      17125        902      0.756      0.641      0.726      0.506
         bottle      17125       1329      0.723      0.594      0.679      0.489
            bus      17125        638      0.893      0.818      0.894      0.775
            car      17125       2105      0.786       0.69      0.799      0.618
            cat      17125       1266      0.852       0.88      0.921      0.763
          chair      17125       2443      0.706      0.561       0.66      0.482
            cow      17125        642      0.782      0.804      0.858      0.673
    diningtable      17125        635      0.591      0.718       0.69      0.517
            dog      17125       1571      0.846      0.795      0.883      0.727
          horse      17125        760      0.673      0.634       0.74       0.61
         person      17125      15753       0.79      0.839      0.875      0.691
    pottedplant      17125       1055      0.701      0.525      0.614      0.404
          sheep      17125        878      0.775      0.823      0.858      0.665
           sofa      17125        592      0.703      0.644       0.73      0.592
          train      17125        672      0.882      0.844      0.914      0.735
      tvmonitor      17125        839       0.73      0.677      0.765      0.595
    Speed: 0.2ms preprocess, 3.9ms inference, 0.0ms loss, 0.7ms postprocess per image
    Results saved to runs\detect\train8
    Learn more at https://docs.ultralytics.com/modes/train
    (venv) PS E:\JetBrains\PycharmProject\Yolov8Project>
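The `params` column in the model summary of the log can be cross-checked by hand. The sketch below assumes that an Ultralytics `Conv` module is a bias-free `Conv2d` followed by `BatchNorm2d`, so that its trainable parameters are the kernel weights plus one scale and one shift per output channel. The helper name `conv_params` is hypothetical; the argument lists follow the `[c_in, c_out, kernel, stride]` order printed in the log.

```python
def conv_params(c_in, c_out, kernel):
    """Trainable parameters of Conv2d(bias=False) followed by BatchNorm2d."""
    weights = c_in * c_out * kernel * kernel  # convolution kernel weights
    batchnorm = 2 * c_out                     # BatchNorm scale (gamma) and shift (beta)
    return weights + batchnorm


# Layer index -> [c_in, c_out, kernel, stride], copied from the model summary in the log.
conv_layers = {
    0: [3, 16, 3, 2],
    1: [16, 32, 3, 2],
    3: [32, 64, 3, 2],
    5: [64, 128, 3, 2],
    7: [128, 256, 3, 2],
}

# The stride does not affect the parameter count, so it is ignored here.
counts = {
    index: conv_params(c_in, c_out, kernel)
    for index, (c_in, c_out, kernel, _stride) in conv_layers.items()
}
for index, n_params in counts.items():
    print(f"layer {index}: {n_params} parameters")
```

Under that assumption the results are 464, 4672, 18560, 73984 and 295424, which agree with the values logged for layers 0, 1, 3, 5 and 7.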

III. Copy runs\detect\trainX\weights\best.pt into the model directory and rename it to a recognizable model name.

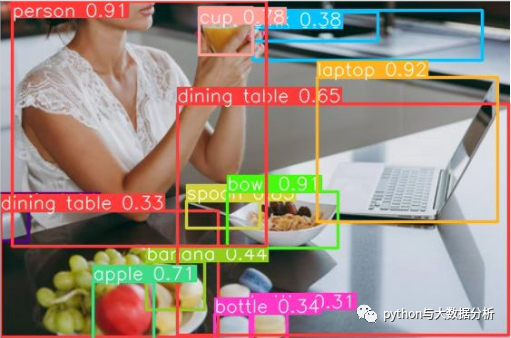
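The copy-and-rename step can also be scripted. This is a minimal sketch using only the standard library; the function name `export_best_weights` and its parameters are hypothetical, and the directory layout (`<run directory>/weights/best.pt`) is taken from the training log.

```python
import shutil
from pathlib import Path


def export_best_weights(run_dir, model_dir, new_name):
    """Copy <run_dir>/weights/best.pt to <model_dir>/<new_name>.pt and return the new path."""
    source = Path(run_dir) / "weights" / "best.pt"
    target_dir = Path(model_dir)
    target_dir.mkdir(parents=True, exist_ok=True)  # create the model directory if it is missing
    target = target_dir / f"{new_name}.pt"
    shutil.copy2(source, target)                   # the original best.pt stays in the run directory
    return target
```

For the VOC2012 run in the log this could be called as `export_best_weights("runs/detect/train8", "model", "VOC2012")`.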

IV. Run the test code to compare the predictions of the different models.

    from ultralytics import YOLO
    from PIL import Image

    # Image to run every model on (forward slashes avoid backslash-escape problems)
    filepath = 'test/eat.png'

    # Two official pretrained models, then the two models trained above
    model_names = ['yolov8x', 'yolov8n', 'coco128', 'VOC2012']

    for name in model_names:
        # Load the weights from the model directory
        model = YOLO(f'model/{name}.pt')
        # Run inference on the test image
        results = model(filepath)  # list of Results objects
        # Show and save the annotated predictions
        for r in results:
            im_array = r.plot()  # BGR numpy array with the predictions drawn on it
            im = Image.fromarray(im_array[..., ::-1])  # reverse channels to get an RGB PIL image
            im.show()  # show image
            im.save(f'{name}.jpg')  # save image

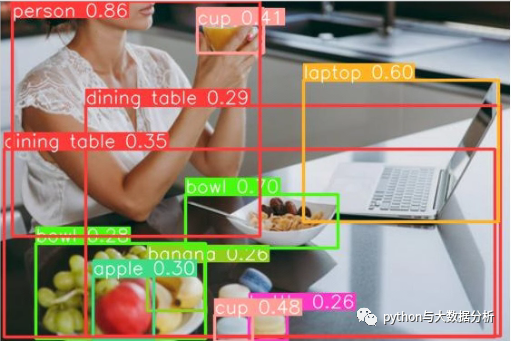
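To compare the models by what they detect rather than by eye, the class labels and confidences of each result can be tallied. The helper `summarize_detections` below is a hypothetical, pure-Python sketch. With Ultralytics results, its inputs would typically come from `r.names`, `r.boxes.cls.tolist()` and `r.boxes.conf.tolist()`. The sample values are made up for illustration and are not taken from the test image.

```python
from collections import defaultdict


def summarize_detections(names, class_ids, confidences):
    """Return {class name: (count, highest confidence)} for one image."""
    grouped = defaultdict(list)
    for class_id, conf in zip(class_ids, confidences):
        # Class ids may arrive as floats (e.g. from a tensor), so cast before the lookup.
        grouped[names[int(class_id)]].append(conf)
    return {name: (len(confs), max(confs)) for name, confs in grouped.items()}


# Made-up values for illustration only.
example_names = {0: "person", 46: "banana", 47: "apple"}
example_summary = summarize_detections(example_names, [0.0, 47.0, 0.0], [0.91, 0.55, 0.62])
print(example_summary)
```

Running the helper once per model gives one small dictionary per model, which makes missing classes easy to spot.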

Predictions from the pretrained yolov8x.pt model:

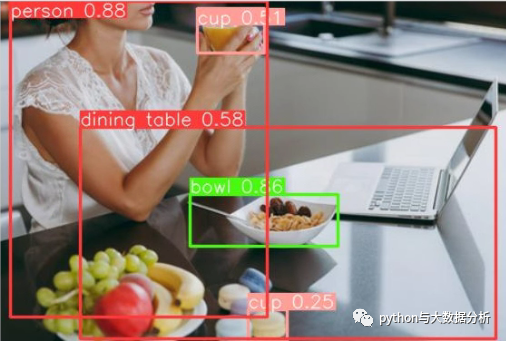

Predictions from the pretrained yolov8n.pt model:

Predictions from the model trained on the coco128 dataset:

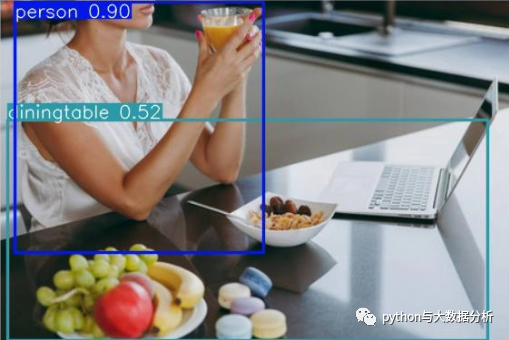

Predictions from the model trained on the VOC2012 dataset:

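Conclusions:

1. The pretrained yolov8x.pt model gives the most complete and most accurate predictions, but it is also the slowest.

2. The pretrained yolov8n.pt model reports somewhat different confidence scores from yolov8x and fails to recognize a very small number of the 80 classes, which is expected given how much the two networks differ in parameter count.

3. The model trained on coco128 predicts fewer classes than yolov8n; with only 128 training images, some labels are probably missing from the training data.

4. The model trained on VOC2012 predicts the fewest classes, since the dataset has only 20 classes. Its class set overlaps with COCO's but is not the same, and VOC2012 contains no fruit samples, so fruit cannot be recognized.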
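The last point can be checked against the class lists themselves. The sketch below hard-codes the 20 Pascal VOC class names (including `motorbike`, which does not appear in the per-class table of the log) and the three fruit classes that, to my knowledge, are among the 80 COCO class names. The variable names are illustrative.

```python
# The 20 Pascal VOC class names, in VOC spelling.
VOC_CLASSES = [
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat",
    "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

# Fruit classes among the 80 COCO class names.
COCO_FRUIT_CLASSES = {"banana", "apple", "orange"}

# Fruit classes that a VOC-trained model has no label for.
missing_in_voc = sorted(COCO_FRUIT_CLASSES - set(VOC_CLASSES))
print(len(VOC_CLASSES), missing_in_voc)
```

None of the fruit classes has a VOC counterpart, so a model trained only on VOC2012 has no label it could assign to fruit.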

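From here on, training all kinds of object detectors is straightforward; building the dataset and annotating it is what really takes the effort.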

Finally, you are welcome to follow the WeChat official account: python与大数据分析
