
Kubernetes (k8s) High Availability: Introduction and Installation


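I. Introduction

Kubernetes was open-sourced by Google in 2014 and builds on more than ten years of Google's experience running Borg, its large-scale container management system. It is a container cluster management system: an open-source platform for managing containerized applications across multiple hosts in a cloud environment. Its goal is to make deploying containerized applications simple and efficient, and it provides mechanisms for deploying, scheduling, updating, and maintaining applications.

A core feature of Kubernetes is that it manages containers autonomously so that the containers in the cluster keep running in the state the user expects. For example, if a user wants Apache to run continuously, the user does not need to care how that is achieved; Kubernetes monitors the containers and restarts or recreates them as needed so that Apache keeps serving. An administrator can load a microservice and let the scheduler find a suitable place for it. Kubernetes also keeps improving its tooling and usability so that users can deploy their own applications conveniently (for example with canary deployments).

Kubernetes currently focuses on continuously running services (such as web servers or cache servers) and cloud-native applications (such as NoSQL stores). In the near future it is expected to support all kinds of production workloads, such as batch jobs, workflows, and traditional databases.

What Kubernetes does: deploys applications quickly, scales applications quickly, rolls out new application features seamlessly, and saves resources by optimizing hardware utilization.

Kubernetes features:

Portable: supports public cloud, private cloud, hybrid cloud, and multi-cloud

Extensible: modular, pluggable, hookable, composable

Automated: automatic deployment, automatic restart, automatic replication, automatic scaling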

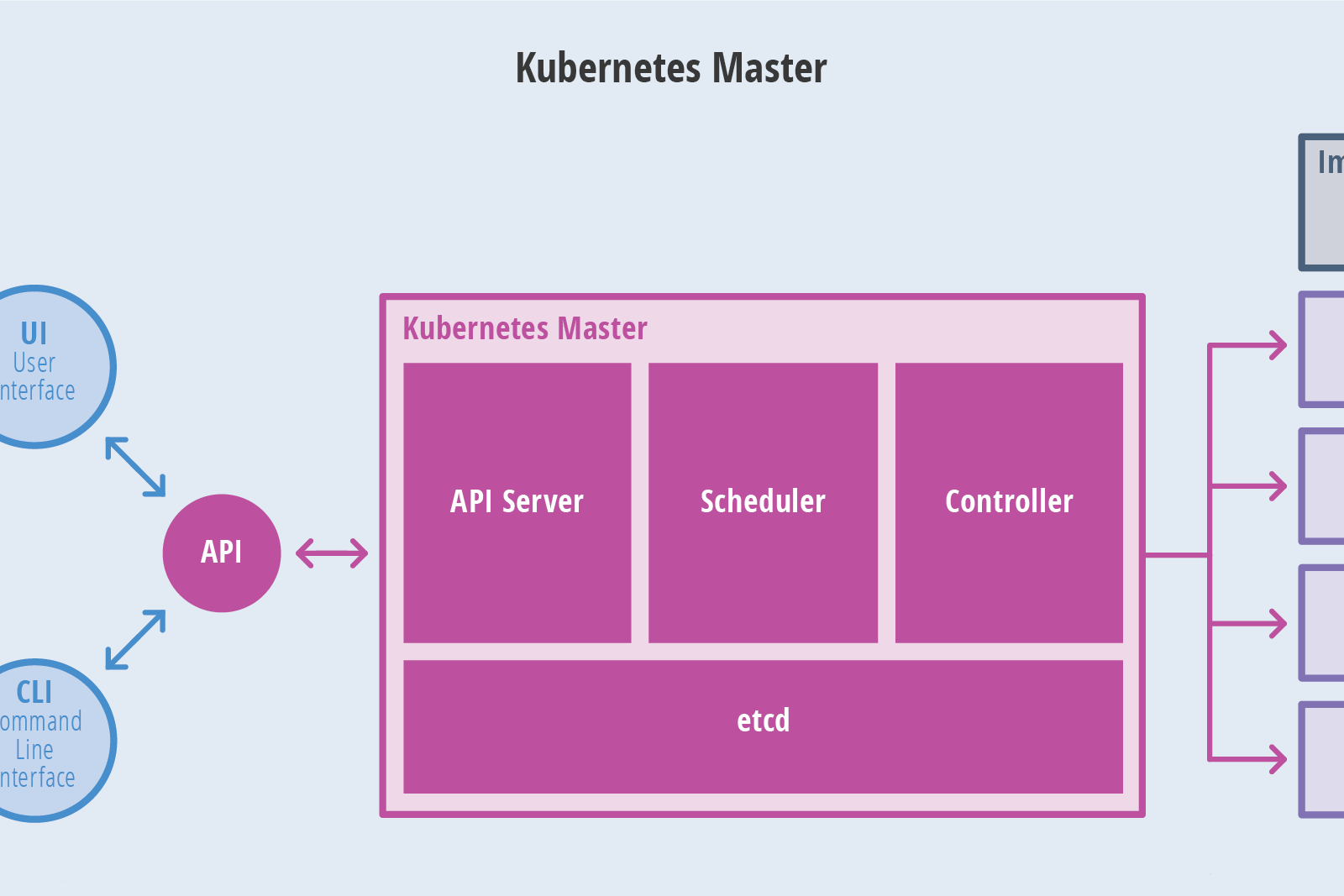

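Kubernetes architecture

A Kubernetes cluster consists of the node agent kubelet and the Master components (APIs, scheduler, etc.), all built on top of a distributed storage system. The diagram below shows the Kubernetes architecture.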

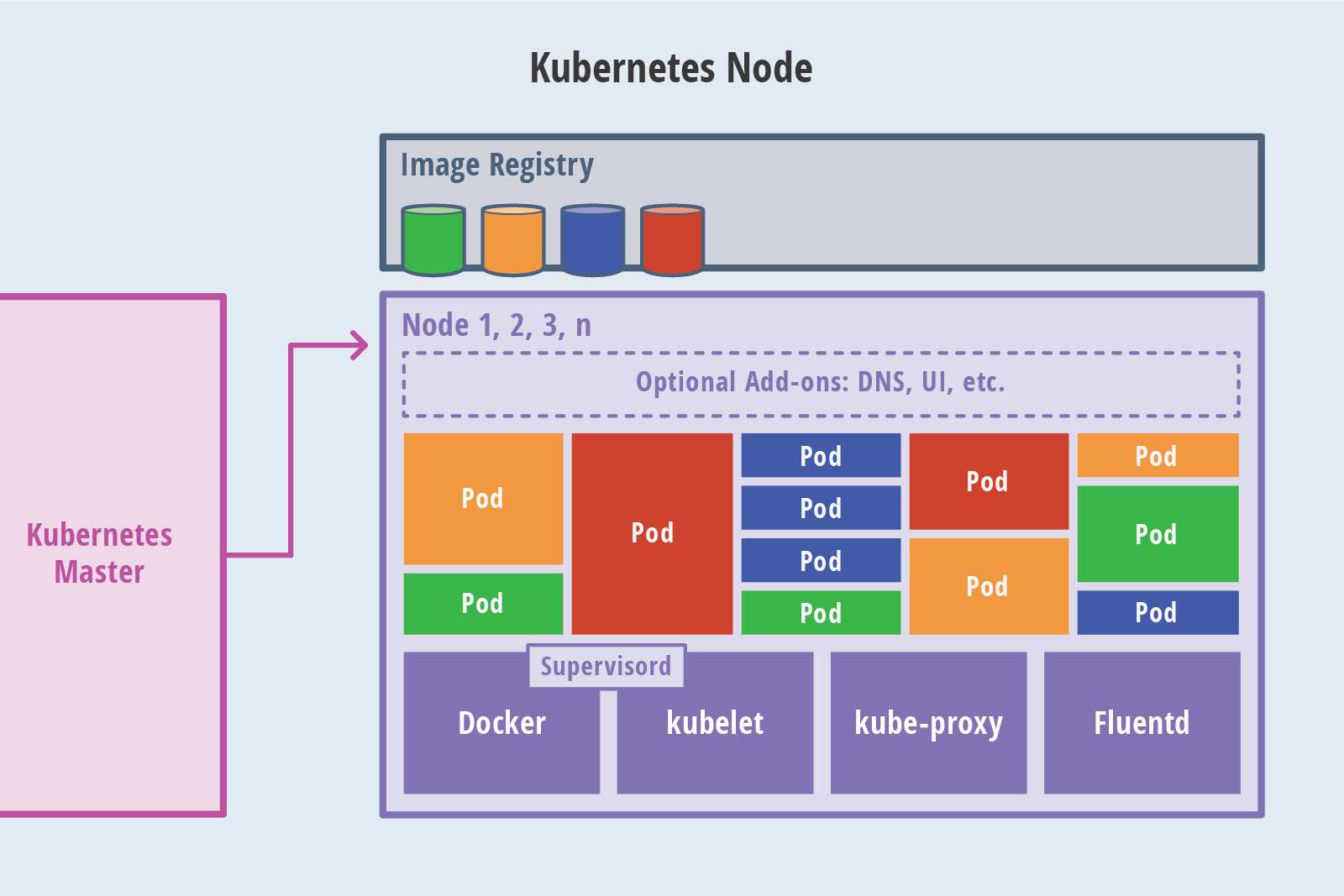

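Kubernetes nodes

In this architecture diagram, services are divided into those that run on worker nodes and those that make up the cluster-level control plane.

Kubernetes nodes run the services needed to run application containers, and all of them are controlled by the Master.

Every node also runs Docker, which handles the actual image downloads and container execution.

Kubernetes is mainly made up of the following core components:

etcd: stores the state of the entire cluster;

apiserver: the single entry point for operations on resources; it also provides authentication, authorization, access control, and API registration and discovery;

controller manager: maintains the state of the cluster, for example failure detection, autoscaling, and rolling updates;

scheduler: schedules resources, placing Pods on suitable machines according to the configured scheduling policy;

kubelet: maintains the container lifecycle and also manages volumes (CSI) and networking (CNI);

Container runtime: manages images and actually runs Pods and containers (CRI);

kube-proxy: provides in-cluster service discovery and load balancing for Services;

Besides the core components, there are some recommended add-ons:

kube-dns: provides DNS for the whole cluster

Ingress Controller: provides an external entry point for services

Heapster: provides resource monitoring

Dashboard: provides a GUI

Federation: provides clusters that span availability zones

Fluentd-elasticsearch: provides cluster log collection, storage, and querying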

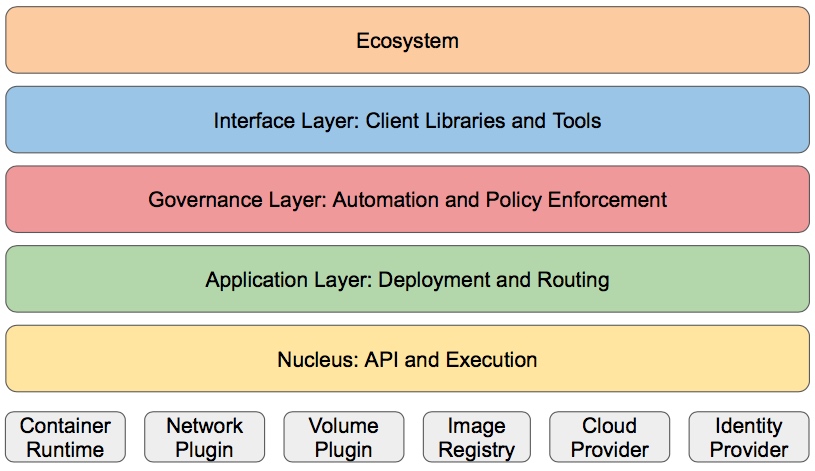

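Layered architecture

Kubernetes' design philosophy and functionality form a layered architecture similar to that of Linux, as shown in the figure.

Core layer: the most essential Kubernetes functionality; it exposes APIs outward for building higher-level applications and provides a pluggable application execution environment inward

Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

Management layer: system metrics (such as infrastructure, container, and network metrics), automation (such as autoscaling and dynamic provisioning), and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.)

Interface layer: the kubectl command-line tool, client SDKs, and cluster federation

Ecosystem: the large ecosystem for container cluster management and scheduling that sits above the interface layer; it falls into two categories

Outside Kubernetes: logging, monitoring, configuration management, CI, CD, Workflow, FaaS, OTS applications, ChatOps, etc.

Inside Kubernetes: CRI, CNI, CSI, image registries, Cloud Provider, configuration and management of the cluster itself, etc.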

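kubelet

kubelet manages pods and their containers, images, volumes, etc.

kube-proxy

Each node also runs a simple network proxy and load balancer (see the services FAQ). It reflects the services defined in the Kubernetes API (see the services doc) on each node and can do simple round-robin TCP and UDP stream forwarding across a set of backends.

Service endpoints are currently found through DNS or through environment variables (both Docker-links-compatible variables and the Kubernetes {FOO}_SERVICE_HOST and {FOO}_SERVICE_PORT variables are supported). These variables resolve to ports managed by the service proxy.

Kubernetes control plane

The Kubernetes control plane is split into several components. Currently they all run on a single master node, but this has to change in order to achieve high availability. The components work together to provide a unified view of the cluster.

etcd

All persistent master state is stored in an instance of etcd, which is well suited to storing configuration data. With watch support, coordinating components are notified of changes very quickly.

Kubernetes API Server

The API server serves the Kubernetes API. It is intended to be a CRUD-style server, with most or all business logic implemented in separate components or plug-ins. It mainly processes REST operations, validates them, and updates the corresponding objects in etcd (where they are eventually stored).

Scheduler

The scheduler binds unscheduled pods to nodes through the binding API. The scheduler is pluggable; support for multiple cluster schedulers, and eventually for user-provided schedulers, is expected in the future.

Kubernetes controller manager server

All other cluster-level functions are currently performed by the controller manager. For example, Endpoints objects are created and updated by the endpoints controller. These functions may eventually be split into separate components so that each is independently pluggable.

The replicationcontroller is a mechanism layered on top of the simple pod API. The eventual plan is to port it to a generic plug-in mechanism, once one is implemented.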

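II. Installing Kubernetes

Kubernetes installation methods

1. kubeadm installation (officially recommended; suggested for learning and experimentation; components run as containers)

2. Binary installation (used in production; components run as processes)

3. Ansible installation

| OS | Resources | IP | Hostname |
| --- | --- | --- | --- |
| CentOS 7.4 | 4 GB RAM, 2 CPUs | 192.168.2.1 (virtual IP: 192.168.2.5) | k8s-master1 |
| CentOS 7.4 | 4 GB RAM, 2 CPUs | 192.168.2.2 (virtual IP: 192.168.2.5) | k8s-master2 |
| CentOS 7.4 | 2 GB RAM, 1 CPU | 192.168.2.3 | k8s-node1 |
| CentOS 7.4 | 2 GB RAM, 1 CPU | 192.168.2.4 | k8s-node2 |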

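1. Environment configuration

Note: every server needs a network interface that can reach the Internet

- # Configure the hosts file on master1, master2, node1, and node2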

- echo '

- 192.168.2.1 k8s-master1

- 192.168.2.2 k8s-master2

- 192.168.2.3 k8s-node1

- 192.168.2.4 k8s-node2

- '>> /etc/hosts
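Because the `echo ... >> /etc/hosts` form above appends the same block again every time it is re-run, a guarded variant can be handy. The following is a minimal sketch, not part of the original procedure: `add_host_entry` and `HOSTS_FILE` are hypothetical names, and the demo writes to a scratch file rather than `/etc/hosts`.

```shell
#!/usr/bin/env bash
# Append "ip name" to a hosts-style file only if the name is not already listed.
add_host_entry() {
  local ip="$1" name="$2" file="$3"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo target is a scratch file; on a real node set HOSTS_FILE=/etc/hosts.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry 192.168.2.1 k8s-master1 "$HOSTS_FILE"
add_host_entry 192.168.2.2 k8s-master2 "$HOSTS_FILE"
add_host_entry 192.168.2.3 k8s-node1   "$HOSTS_FILE"
add_host_entry 192.168.2.4 k8s-node2   "$HOSTS_FILE"
# Running an entry a second time changes nothing.
add_host_entry 192.168.2.1 k8s-master1 "$HOSTS_FILE"

cat "$HOSTS_FILE"
```

Run as shown, the scratch file ends up with exactly four entries no matter how often the script is repeated.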

Disable the firewall, SELinux, dnsmasq, and swap on all servers

-

- systemctl disable --now firewalld

-

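- systemctl disable --now NetworkManager # not required on CentOS 8

- Run this command on all servers

- [root@k8s-master1 ~]# swapoff -a && sysctl -w vm.swappiness=0 # turn off swap

- vm.swappiness = 0

- Run this command on all servers # leaving the swap entry uncommented may affect k8s performance

- [root@k8s-master1 ~]# sed -i '12 s/^/#/' /etc/fstab # note: the line number may differ on your system

- Run this command on all servers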

-

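- [root@k8s-master1 ~]# yum -y install ntpdate # install the time synchronization tool

-

- [root@k8s-master1 ~]# ntpdate time2.aliyun.com # synchronize the clock

- Run this command on all servers

-

- [root@k8s-master1 ~]# ulimit -SHn 65535 # raise the per-process open file limit to 65535

- Master01 logs in to the other nodes without a password. The configuration files and certificates produced during installation are generated on Master01, and the cluster is also managed from Master01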

-

- [root@k8s-master1 ~]# ssh-keygen -t rsa # generate the key pair (on master1 only)

-

- [root@k8s-master1 ~]# for i in k8s-master1 k8s-master2 k8s-node1 k8s-node2;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

- .......

- ...

- Run on all servers

-

- [root@k8s-master1 ~]# yum -y install wget

- .......

- ..

- [root@k8s-master1 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

- .............

- ........

- [root@k8s-master1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

- ........

- ...

- [root@k8s-master1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

- Run on all servers

- Configure the k8s yum repository

-

- [root@k8s-master1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

- [kubernetes]

- name=Kubernetes

- baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

- enabled=1

- gpgcheck=1

- repo_gpgcheck=1

- gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

- EOF

-

- [root@k8s-master1 ~]# sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

-

- [root@k8s-master1 ~]# yum makecache # build the cache

- ..........

- ...

- Run on all servers

-

- [root@k8s-master1 ~]# yum -y install wget psmisc vim net-tools telnet # install common tools
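The swap step earlier in this section comments out line 12 of `/etc/fstab`, and that line number differs from machine to machine. One way to avoid depending on it is to match the swap entry by its content. This is a sketch under that assumption; `comment_out_swap` is a hypothetical helper and the demo runs on a scratch file with made-up entries.

```shell
#!/usr/bin/env bash
# Comment out every active fstab entry that has "swap" as a whitespace-delimited field.
# sed keeps a backup of the original file next to it with a .bak suffix.
comment_out_swap() {
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demonstration on a scratch file; on a real node the argument would be /etc/fstab.
FSTAB_DEMO="$(mktemp)"
cat > "$FSTAB_DEMO" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
UUID=1234-abcd          /boot xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

comment_out_swap "$FSTAB_DEMO"
cat "$FSTAB_DEMO"
```

Lines that already start with `#` are skipped, so running the helper twice does not stack comment markers.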

2. Kernel configuration

Install ipvsadm on all nodes (ipvs generally performs better than iptables)

- Run on all servers

-

- [root@k8s-master1 ~]# yum -y install ipvsadm ipset sysstat conntrack libseccomp

- Configure the ipvs modules on all nodes

-

- [root@k8s-master1 ~]# modprobe -- ip_vs

- [root@k8s-master1 ~]# modprobe -- ip_vs_rr

- [root@k8s-master1 ~]# modprobe -- ip_vs_wrr

- [root@k8s-master1 ~]# modprobe -- ip_vs_sh

- [root@k8s-master1 ~]# modprobe -- nf_conntrack_ipv4

-

- ————————————————————————————————————

- modprobe -- ip_vs

- modprobe -- ip_vs_rr

- modprobe -- ip_vs_wrr

- modprobe -- ip_vs_sh

- modprobe -- nf_conntrack_ipv4

- Run on all servers

-

- [root@k8s-master1 ~]# vi /etc/modules-load.d/ipvs.conf # load the modules automatically at boot

-

- ip_vs

- ip_vs_rr

- ip_vs_wrr

- ip_vs_sh

- nf_conntrack_ipv4

- ip_tables

- ip_set

- xt_set

- ipt_set

- ipt_rpfilter

- ipt_REJECT

- ipip

-

- Save the file

-

- [root@k8s-master1 ~]# systemctl enable --now systemd-modules-load.service

- [root@k8s-master1 ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4 # check that the modules are loaded

- nf_conntrack_ipv4 15053 0

- nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

- ip_vs_sh 12688 0

- ip_vs_wrr 12697 0

- ip_vs_rr 12600 0

- ip_vs 141092 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

- nf_conntrack 133387 2 ip_vs,nf_conntrack_ipv4

- libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

- Configure the k8s kernel parameters on all nodes; copy the configuration below as is

-

- [root@k8s-master1 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

- net.ipv4.ip_forward = 1

- net.bridge.bridge-nf-call-iptables = 1

- fs.may_detach_mounts = 1

- vm.overcommit_memory=1

- vm.panic_on_oom=0

- fs.inotify.max_user_watches=89100

- fs.file-max=52706963

- fs.nr_open=52706963

- net.netfilter.nf_conntrack_max=2310720

- net.ipv4.tcp_keepalive_time = 600

- net.ipv4.tcp_keepalive_probes = 3

- net.ipv4.tcp_keepalive_intvl =15

- net.ipv4.tcp_max_tw_buckets = 36000

- net.ipv4.tcp_tw_reuse = 1

- net.ipv4.tcp_max_orphans = 327680

- net.ipv4.tcp_orphan_retries = 3

- net.ipv4.tcp_syncookies = 1

- net.ipv4.tcp_max_syn_backlog = 16384

- net.ipv4.ip_conntrack_max = 65536

- net.ipv4.tcp_max_syn_backlog = 16384

- net.ipv4.tcp_timestamps = 0

- net.core.somaxconn = 16384

- EOF

-

- [root@k8s-master1 ~]# sysctl --system
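A long hand-written sysctl file is easy to get subtly wrong, for instance by assigning the same key twice. Below is a small sketch of a check for repeated keys; `duplicate_sysctl_keys` is a hypothetical helper, and the demo file is a shortened stand-in, not the full `/etc/sysctl.d/k8s.conf`.

```shell
#!/usr/bin/env bash
# Print every key that is assigned more than once in a sysctl-style file.
duplicate_sysctl_keys() {
  awk -F= '
    /^[ \t]*(#|$)/ { next }                         # skip comments and blank lines
    { key = $1; gsub(/[ \t]/, "", key); count[key]++ }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$1" | sort
}

# Shortened demo file; on a real node pass /etc/sysctl.d/k8s.conf instead.
SYSCTL_DEMO="$(mktemp)"
cat > "$SYSCTL_DEMO" <<'EOF'
net.ipv4.ip_forward = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
EOF

duplicate_sysctl_keys "$SYSCTL_DEMO"
```

When the same key appears twice, the later assignment is the one that takes effect, so a duplicate with a different value can silently override the intended setting.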

3. Installing the components

- Install the latest Docker on all servers. k8s manages pods, and each pod contains one or more containers

-

- [root@k8s-master1 ~]# yum -y install docker-ce

- ..........

- ....

-

- [root@k8s-master1 ~]# yum list kubeadm.x86_64 --showduplicates | sort -r # list the available k8s versions

- .........

- ...

- .

- [root@k8s-master1 ~]# yum install -y kubeadm-1.19.3-0.x86_64 kubectl-1.19.3-0.x86_64 kubelet-1.19.3-0.x86_64

- ..........

- .....

- ..

-

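- kubeadm: the command used to bootstrap the cluster

- kubelet: runs on every node in the cluster and starts pods and containers

- kubectl: the command-line tool used to talk to the cluster

- Enable Docker at boot on all nodes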

-

- [root@k8s-master1 ~]# systemctl enable --now docker

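By default the pause image is pulled from gcr.io, which may be unreachable from mainland China, so kubelet is configured here to use the pause image hosted on Alibaba Cloud. Running as a container, the pause image reaps zombie processes for the containers in its pod

- Run on all servers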

-

- [root@k8s-master1 ~]# DOCKER_CGROUPS=$(docker info --format '{{.CgroupDriver}}')

- cat >/etc/sysconfig/kubelet<<EOF

- KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

- EOF

- Enable kubelet at boot on all servers

-

- systemctl daemon-reload

- systemctl enable --now kubelet

4. Installing the high-availability components

Note: install HAProxy and Keepalived with yum on all Master nodes

-

- [root@k8s-master1 ~]# yum -y install keepalived haproxy

Configure HAProxy on all Master nodes; the HAProxy configuration is identical on every Master

-

- [root@k8s-master1 ~]# vim /etc/haproxy/haproxy.cfg

-

- global

- maxconn 2000

- ulimit-n 16384

- log 127.0.0.1 local0 err

- stats timeout 30s

-

- defaults

- log global

- mode http

- option httplog

- timeout connect 5000

- timeout client 50000

- timeout server 50000

- timeout http-request 15s

- timeout http-keep-alive 15s

-

- frontend monitor-in

- bind *:33305

- mode http

- option httplog

- monitor-uri /monitor

-

- listen stats

- bind *:8006

- mode http

- stats enable

- stats hide-version

- stats uri /stats

- stats refresh 30s

- stats realm Haproxy\ Statistics

- stats auth admin:admin

-

- frontend k8s-master

- bind 0.0.0.0:16443

- bind 127.0.0.1:16443

- mode tcp

- option tcplog

- tcp-request inspect-delay 5s

- default_backend k8s-master

-

- backend k8s-master

- mode tcp

- option tcplog

- option tcp-check

- balance roundrobin

- default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

- server k8s-master1 192.168.2.1:6443 check # change to your IP

- server k8s-master2 192.168.2.2:6443 check # change to your IP

-

- Save the file
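The `server` lines at the end of the `backend k8s-master` section are the only part of the HAProxy configuration that changes with the number of masters. As a sketch, they could be generated from a list of name/IP pairs. `haproxy_backend_servers` is a hypothetical helper; the port 6443 and the `check` option are taken from the configuration above.

```shell
#!/usr/bin/env bash
# Emit one HAProxy "server" line per control-plane node.
# Each argument is a name=ip pair; 6443 is the kube-apiserver port used above.
haproxy_backend_servers() {
  local pair
  for pair in "$@"; do
    printf '    server %s %s:6443 check\n' "${pair%%=*}" "${pair#*=}"
  done
}

haproxy_backend_servers k8s-master1=192.168.2.1 k8s-master2=192.168.2.2
```

The output can be pasted under `backend k8s-master`; adding a third master then only means adding one more argument.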

Configure keepalived on the Master1 node only

-

- [root@k8s-master1 ~]# vim /etc/keepalived/keepalived.conf

- ! Configuration File for keepalived

- global_defs {

- router_id LVS_DEVEL

- }

- vrrp_script chk_apiserver {

- script "/etc/keepalived/check_apiserver.sh"

- interval 2

- weight -5

- fall 3

- rise 2

- }

- vrrp_instance VI_1 {

- state MASTER

- interface ens33 # change to your network interface name

- mcast_src_ip 192.168.2.1 # change to this node's IP address

- virtual_router_id 51

- priority 100

- advert_int 2

- authentication {

- auth_type PASS

- auth_pass K8SHA_KA_AUTH

- }

- virtual_ipaddress {

- 192.168.2.5 # the virtual IP

- }

- # track_script { # the health check is disabled for now; enable it once the cluster is up

- # chk_apiserver

- # }

- }

-

- Save the file

Configure keepalived on the Master2 node only

-

- [root@k8s-master2 ~]# vim /etc/keepalived/keepalived.conf

- ! Configuration File for keepalived

- global_defs {

- router_id LVS_DEVEL

- }

- vrrp_script chk_apiserver {

- script "/etc/keepalived/check_apiserver.sh"

- interval 2

- weight -5

- fall 3

- rise 2

- }

- vrrp_instance VI_1 {

- state BACKUP

- interface ens33 # change to your network interface name

- mcast_src_ip 192.168.2.2 # change to this node's IP address

- virtual_router_id 51

- priority 99

- advert_int 2

- authentication {

- auth_type PASS

- auth_pass K8SHA_KA_AUTH

- }

- virtual_ipaddress {

- 192.168.2.5 # the virtual IP

- }

- # track_script { # the health check is disabled for now; enable it once the cluster is up

- # chk_apiserver

- # }

- }

-

- Save the file

Configure the Keepalived health-check script on all Master nodes

-

- [root@k8s-master1 ~]# vim /etc/keepalived/check_apiserver.sh

-

- #!/bin/bash

-

- err=0

- for k in $(seq 1 5)

- do

- check_code=$(pgrep kube-apiserver)

- if [[ $check_code == "" ]]; then

- err=$(expr $err + 1)

- sleep 5

- continue

- else

- err=0

- break

- fi

- done

-

- if [[ $err != "0" ]]; then

- echo "systemctl stop keepalived"

- /usr/bin/systemctl stop keepalived

- exit 1

- else

- exit 0

- fi

-

- Save the file

-

- [root@k8s-master1 ~]# chmod a+x /etc/keepalived/check_apiserver.sh
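The health-check script above is essentially a retry loop: look for the `kube-apiserver` process up to five times, five seconds apart, and stop keepalived if it never appears. The same pattern can be written as a reusable function. This is a sketch rather than a drop-in replacement; `retry` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Run a command up to <attempts> times, sleeping <delay> seconds between tries.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}

# How the check above could be expressed with it (left commented out here):
#   retry 5 5 pgrep kube-apiserver >/dev/null || /usr/bin/systemctl stop keepalived

# Harmless demonstration with built-in commands:
retry 3 0 true  && echo "check passed"
retry 2 0 false || echo "check failed after 2 attempts"
```

Keeping the retry policy in one function makes it easier to swap the probe itself, for example for a different process name.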

Start haproxy and keepalived on the masters

-

- [root@k8s-master1 ~]# systemctl enable --now haproxy

- Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

- [root@k8s-master1 ~]# systemctl enable --now keepalived

- Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

Check that 192.168.2.5 is present

-

- [root@k8s-master1 ~]# ip a

- 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

- link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

- inet 127.0.0.1/8 scope host lo

- valid_lft forever preferred_lft forever

- inet6 ::1/128 scope host

- valid_lft forever preferred_lft forever

- 2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

- link/ether 00:0c:29:7b:b0:46 brd ff:ff:ff:ff:ff:ff

- inet 192.168.2.1/24 brd 192.168.2.255 scope global ens33

- valid_lft forever preferred_lft forever

- inet 192.168.2.5/32 scope global ens33 # the virtual IP

- valid_lft forever preferred_lft forever

- inet6 fe80::238:ba2d:81b5:920e/64 scope link

- valid_lft forever preferred_lft forever

- ......

- ...

-

- [root@k8s-master1 ~]# netstat -anptu |grep 16443

- tcp 0 0 127.0.0.1:16443 0.0.0.0:* LISTEN 105533/haproxy

- tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 105533/haproxy

- ————————————————————————————————————————————————————

- [root@k8s-master2 ~]# netstat -anptu |grep 16443

- tcp 0 0 127.0.0.1:16443 0.0.0.0:* LISTEN 96274/haproxy

- tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 96274/haproxy

List the images the masters need

-

- [root@k8s-master1 ~]# kubeadm config images list

- I0306 14:28:17.418780 104960 version.go:252] remote version is much newer: v1.23.4; falling back to: stable-1.18

- W0306 14:28:19.249961 104960 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

- k8s.gcr.io/kube-apiserver:v1.18.20

- k8s.gcr.io/kube-controller-manager:v1.18.20

- k8s.gcr.io/kube-scheduler:v1.18.20

- k8s.gcr.io/kube-proxy:v1.18.20

- k8s.gcr.io/pause:3.2

- k8s.gcr.io/etcd:3.4.3-0

- k8s.gcr.io/coredns:1.6.7

Perform the following steps on all masters

- Create the image download script on all masters

-

- [root@k8s-master1 ~]# vim alik8simages.sh

- #!/bin/bash

- list='kube-apiserver:v1.19.3

- kube-controller-manager:v1.19.3

- kube-scheduler:v1.19.3

- kube-proxy:v1.19.3

- pause:3.2

- etcd:3.4.13-0

- coredns:1.7.0'

- for item in ${list}

- do

-

- docker pull registry.aliyuncs.com/google_containers/$item && docker tag registry.aliyuncs.com/google_containers/$item k8s.gcr.io/$item && docker rmi registry.aliyuncs.com/google_containers/$item && docker pull jmgao1983/flannel

-

- done

-

- Save the file

-

- [root@k8s-master1 ~]# bash alik8simages.sh # run the script to download the images

- ————————————————————————————————————————————

- # What the loop above does for each image, for example:

- #docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.3

- #docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.3 k8s.gcr.io/kube-apiserver:v1.19.3

- #docker rmi registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.3
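The pull, retag, and remove sequence in the script above can also be wrapped in a function with a dry-run switch, which makes it possible to review the exact `docker` commands before anything is downloaded. This is a sketch: `run`, `mirror_image`, and `DRY_RUN` are hypothetical names, while the registry names and image tags are the ones used above.

```shell
#!/usr/bin/env bash
# Pull an image from a mirror registry and retag it under the name kubeadm expects.
# With DRY_RUN=1 (the default in this sketch) the docker commands are only printed.
MIRROR="registry.aliyuncs.com/google_containers"
TARGET="k8s.gcr.io"
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

mirror_image() {
  local item="$1"
  run docker pull "$MIRROR/$item" &&
    run docker tag "$MIRROR/$item" "$TARGET/$item" &&
    run docker rmi "$MIRROR/$item"
}

for item in kube-proxy:v1.19.3 pause:3.2; do
  mirror_image "$item"
done
```

Setting `DRY_RUN=0` executes the commands for real. Unlike the script above, this sketch does not pull the flannel image; that would be a separate `docker pull` outside the loop.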

Perform the following steps on all worker nodes

-

- [root@k8s-node1 ~]# vim alik8simages.sh

- #!/bin/bash

- list='kube-proxy:v1.19.3

- pause:3.2'

- for item in ${list}

- do

-

- docker pull registry.aliyuncs.com/google_containers/$item && docker tag registry.aliyuncs.com/google_containers/$item k8s.gcr.io/$item && docker rmi registry.aliyuncs.com/google_containers/$item && docker pull jmgao1983/flannel

-

- done

-

- Save the file

-

- [root@k8s-node1 ~]# bash alik8simages.sh

Enable kubelet at boot on all nodes

-

- [root@k8s-master1 ~]# systemctl enable --now kubelet

Initialize the Master1 node. Initialization generates the certificates and configuration files under /etc/kubernetes; after that, the other Master nodes simply join Master1

-

- --kubernetes-version=v1.19.3 # the k8s version

- --apiserver-advertise-address=192.168.2.1 # the address of master1

- --pod-network-cidr=10.244.0.0/16 # the pod network range (the container network)

- Run the following command:

- [root@k8s-master1 ~]# kubeadm init --kubernetes-version=v1.19.3 --apiserver-advertise-address=192.168.2.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16

- ......

- ...

- ..

- mkdir -p $HOME/.kube

- sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

- sudo chown $(id -u):$(id -g) $HOME/.kube/config

- ........

- ..... The command needed to join the cluster follows

- kubeadm join 192.168.2.1:6443 --token ona3p0.flcw3tmfl3fsfn5r \

- --discovery-token-ca-cert-hash sha256:8c74d27c94b5c6a1f2c226e93e605762df708b44129145791608e959d30aa36f

-

- ————————————————————————————————

- Run the commands shown in the prompt above

-

- [root@k8s-master1 ~]# mkdir -p $HOME/.kube

- [root@k8s-master1 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

- [root@k8s-master1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config
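The `kubeadm init` call above advertises master1's own address (192.168.2.1:6443). kubeadm also has a `--control-plane-endpoint` flag for pointing the cluster at a load-balanced address, and an `--upload-certs` flag that lets further control-plane nodes fetch the certificates when they join. The sketch below only assembles and prints such a command; using the keepalived VIP and HAProxy port from section 4 as the endpoint is an assumption of this example, not something the walkthrough above does.

```shell
#!/usr/bin/env bash
# Assumed values: the keepalived virtual IP and the HAProxy frontend port from section 4.
VIP="192.168.2.5"
LB_PORT="16443"

# Build the command as an array so every argument stays intact.
init_cmd=(
  kubeadm init
  --kubernetes-version=v1.19.3
  --control-plane-endpoint "${VIP}:${LB_PORT}"
  --upload-certs
  --pod-network-cidr=10.244.0.0/16
  --service-cidr=10.1.0.0/16
)

# Print the command for review; to execute it, run: "${init_cmd[@]}"
printf '%s ' "${init_cmd[@]}"
echo
```

When initialized this way, kubeadm prints a second join command containing `--control-plane` and `--certificate-key`, which is the one an additional master would use instead of the worker join command.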

On all Master nodes, set the environment variable used to access the Kubernetes cluster

-

- cat <<EOF >> /root/.bashrc

- export KUBECONFIG=/etc/kubernetes/admin.conf

- EOF

- source /root/.bashrc

Check the node status

-

- [root@k8s-master1 ~]# kubectl get nodes

- NAME STATUS ROLES AGE VERSION

- k8s-master1 NotReady master 7m7s v1.19.3

- Run the following command on all machines

-

- iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

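Join the other nodes to the cluster (the command is the same on node1 and node2; copy it as is)

- Join the cluster with the command printed above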

- [root@k8s-master2 ~]# kubeadm join 192.168.2.1:6443 --token ona3p0.flcw3tmfl3fsfn5r \

- > --discovery-token-ca-cert-hash sha256:8c74d27c94b5c6a1f2c226e93e605762df708b44129145791608e959d30aa36f

- ..............

- .....

-

- Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

-

-

- [root@k8s-master1 ~]# kubectl get nodes # check after the nodes have joined

- NAME STATUS ROLES AGE VERSION

- k8s-master1 NotReady master 18m v1.19.3

- k8s-master2 NotReady <none> 2m19s v1.19.3

- k8s-node1 NotReady <none> 2m16s v1.19.3

- k8s-node2 NotReady <none> 2m15s v1.19.3

-

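- Run this command on master1 only; it allows application workloads to be scheduled on the master

- We run it only because our lab resources are limited; it is not needed in a production environment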

-

- [root@k8s-master1 ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

- node/k8s-master1 untainted

- taint "node-role.kubernetes.io/master" not found

- taint "node-role.kubernetes.io/master" not found

- taint "node-role.kubernetes.io/master" not found

-

- [root@k8s-master1 ~]# kubectl describe nodes k8s-master1 | grep -E '(Roles|Taints)'

- Roles: master

- Taints: <none>

-

- ————————————————————————————————————————————————————————————————-

- Copy the file to master2

- [root@k8s-master1 ~]# scp /etc/kubernetes/admin.conf root@192.168.2.2:/etc/kubernetes/admin.conf

- admin.conf 100% 5567 4.6MB/s 00:00

-

-

- [root@k8s-master2 ~]# kubectl describe nodes k8s-master2 | grep -E '(Roles|Taints)'

- Roles: <none>

- Taints: <none>

flannel component configuration

-

- [root@k8s-master1 ~]# vim flannel.yml

- ---

- apiVersion: policy/v1beta1

- kind: PodSecurityPolicy

- metadata:

- name: psp.flannel.unprivileged

- annotations:

- seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

- seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

- apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

- apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

- spec:

- privileged: false

- volumes:

- - configMap

- - secret

- - emptyDir

- - hostPath

- allowedHostPaths:

- - pathPrefix: "/etc/cni/net.d"

- - pathPrefix: "/etc/kube-flannel"

- - pathPrefix: "/run/flannel"

- readOnlyRootFilesystem: false

- # Users and groups

- runAsUser:

- rule: RunAsAny

- supplementalGroups:

- rule: RunAsAny

- fsGroup:

- rule: RunAsAny

- # Privilege Escalation

- allowPrivilegeEscalation: false

- defaultAllowPrivilegeEscalation: false

- # Capabilities

- allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

- defaultAddCapabilities: []

- requiredDropCapabilities: []

- # Host namespaces

- hostPID: false

- hostIPC: false

- hostNetwork: true

- hostPorts:

- - min: 0

- max: 65535

- # SELinux

- seLinux:

- # SELinux is unused in CaaSP

- rule: 'RunAsAny'

- ---

- kind: ClusterRole

- apiVersion: rbac.authorization.k8s.io/v1

- metadata:

- name: flannel

- rules:

- - apiGroups: ['extensions']

- resources: ['podsecuritypolicies']

- verbs: ['use']

- resourceNames: ['psp.flannel.unprivileged']

- - apiGroups:

- - ""

- resources:

- - pods

- verbs:

- - get

- - apiGroups:

- - ""

- resources:

- - nodes

- verbs:

- - list

- - watch

- - apiGroups:

- - ""

- resources:

- - nodes/status

- verbs:

- - patch

- ---

- kind: ClusterRoleBinding

- apiVersion: rbac.authorization.k8s.io/v1

- metadata:

- name: flannel

- roleRef:

- apiGroup: rbac.authorization.k8s.io

- kind: ClusterRole

- name: flannel

- subjects:

- - kind: ServiceAccount

- name: flannel

- namespace: kube-system

- ---

- apiVersion: v1

- kind: ServiceAccount

- metadata:

- name: flannel

- namespace: kube-system

- ---

- kind: ConfigMap

- apiVersion: v1

- metadata:

- name: kube-flannel-cfg

- namespace: kube-system

- labels:

- tier: node

- app: flannel

- data:

- cni-conf.json: |

- {

- "name": "cbr0",

- "cniVersion": "0.3.1",

- "plugins": [

- {

- "type": "flannel",

- "delegate": {

- "hairpinMode": true,

- "isDefaultGateway": true

- }

- },

- {

- "type": "portmap",

- "capabilities": {

- "portMappings": true

- }

- }

- ]

- }

- net-conf.json: |

- {

- "Network": "10.244.0.0/16",

- "Backend": {

- "Type": "vxlan"

- }

- }

- ---

- apiVersion: apps/v1

- kind: DaemonSet

- metadata:

- name: kube-flannel-ds

- namespace: kube-system

- labels:

- tier: node

- app: flannel

- spec:

- selector:

- matchLabels:

- app: flannel

- template:

- metadata:

- labels:

- tier: node

- app: flannel

- spec:

- affinity:

- nodeAffinity:

- requiredDuringSchedulingIgnoredDuringExecution:

- nodeSelectorTerms:

- - matchExpressions:

- - key: kubernetes.io/os

- operator: In

- values:

- - linux

- hostNetwork: true

- priorityClassName: system-node-critical

- tolerations:

- - operator: Exists

- effect: NoSchedule

- serviceAccountName: flannel

- initContainers:

- - name: install-cni

- image: jmgao1983/flannel:latest

- command:

- - cp

- args:

- - -f

- - /etc/kube-flannel/cni-conf.json

- - /etc/cni/net.d/10-flannel.conflist

- volumeMounts:

- - name: cni

- mountPath: /etc/cni/net.d

- - name: flannel-cfg

- mountPath: /etc/kube-flannel/

- containers:

- - name: kube-flannel

- image: jmgao1983/flannel:latest

- command:

- - /opt/bin/flanneld

- args:

- - --ip-masq

- - --kube-subnet-mgr

- resources:

- requests:

- cpu: "100m"

- memory: "50Mi"

- limits:

- cpu: "100m"

- memory: "50Mi"

- securityContext:

- privileged: false

- capabilities:

- add: ["NET_ADMIN", "NET_RAW"]

- env:

- - name: POD_NAME

- valueFrom:

- fieldRef:

- fieldPath: metadata.name

- - name: POD_NAMESPACE

- valueFrom:

- fieldRef:

- fieldPath: metadata.namespace

- volumeMounts:

- - name: run

- mountPath: /run/flannel

- - name: flannel-cfg

- mountPath: /etc/kube-flannel/

- volumes:

- - name: run

- hostPath:

- path: /run/flannel

- - name: cni

- hostPath:

- path: /etc/cni/net.d

- - name: flannel-cfg

- configMap:

- name: kube-flannel-cfg

-

- Save the file. Make sure the versions match; if they differ, edit the file accordingly

Apply flannel.yml
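One value in the manifest that has to agree with the cluster is the `Network` field in `net-conf.json`, which above is 10.244.0.0/16, the same range passed to `kubeadm init` as `--pod-network-cidr`. A quick way to compare the two before applying the file is sketched below; `flannel_network` is a hypothetical helper and the demo uses a scratch file holding only the relevant fragment.

```shell
#!/usr/bin/env bash
# Print the first "Network" CIDR found in a flannel manifest.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# The pod CIDR passed to kubeadm init above.
POD_CIDR="10.244.0.0/16"

# Scratch file with just the net-conf.json fragment; on a real node use flannel.yml.
MANIFEST_DEMO="$(mktemp)"
cat > "$MANIFEST_DEMO" <<'EOF'
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
EOF

if [ "$(flannel_network "$MANIFEST_DEMO")" = "$POD_CIDR" ]; then
  echo "flannel Network matches the pod CIDR"
else
  echo "flannel Network differs from the pod CIDR" >&2
fi
```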

-

- [root@k8s-master1 ~]# kubectl apply -f flannel.yml

- podsecuritypolicy.policy/psp.flannel.unprivileged created

- clusterrole.rbac.authorization.k8s.io/flannel created

- clusterrolebinding.rbac.authorization.k8s.io/flannel created

- serviceaccount/flannel created

- configmap/kube-flannel-cfg created

- daemonset.apps/kube-flannel-ds created

Once it completes, check the pods running on the Master

-

- [root@k8s-master1 ~]# kubectl get -A pods -o wide

- NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

- kube-system coredns-f9fd979d6-cnrvb 1/1 Running 0 6m32s 10.244.0.3 k8s-master1 <none> <none>

- kube-system coredns-f9fd979d6-fxsdt 1/1 Running 0 6m32s 10.244.0.2 k8s-master1 <none> <none>

- kube-system etcd-k8s-master1 1/1 Running 0 6m43s 192.168.2.1 k8s-master1 <none> <none>

- kube-system kube-apiserver-k8s-master1 1/1 Running 0 6m43s 192.168.2.1 k8s-master1 <none> <none>

- kube-system kube-controller-manager-k8s-master1 1/1 Running 0 6m43s 192.168.2.1 k8s-master1 <none> <none>

- kube-system kube-flannel-ds-7rt9n 1/1 Running 0 52s 192.168.2.3 k8s-node1 <none> <none>

- kube-system kube-flannel-ds-brktl 1/1 Running 0 52s 192.168.2.1 k8s-master1 <none> <none>

- kube-system kube-flannel-ds-kj9hg 1/1 Running 0 52s 192.168.2.4 k8s-node2 <none> <none>

- kube-system kube-flannel-ds-ld7xj 1/1 Running 0 52s 192.168.2.2 k8s-master2 <none> <none>

- kube-system kube-proxy-4wbh9 1/1 Running 0 3m27s 192.168.2.2 k8s-master2 <none> <none>

- kube-system kube-proxy-crfmv 1/1 Running 0 3m24s 192.168.2.3 k8s-node1 <none> <none>

- kube-system kube-proxy-twttg 1/1 Running 0 6m32s 192.168.2.1 k8s-master1 <none> <none>

- kube-system kube-proxy-xdg6r 1/1 Running 0 3m24s 192.168.2.4 k8s-node2 <none> <none>

- kube-system kube-scheduler-k8s-master1 1/1 Running 0 6m42s 192.168.2.1 k8s-master1 <none> <none>

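Note: if the status shown above is not Running, you can re-initialize the Kubernetes cluster

Note: first check that the versions match; if they do not, re-initializing will not help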

-

- [root@k8s-master1 ~]# rm -rf /etc/kubernetes/*

- [root@k8s-master1 ~]# rm -rf ~/.kube/*

- [root@k8s-master1 ~]# rm -rf /var/lib/etcd/*

- [root@k8s-master1 ~]# kubeadm reset -f

-

- rm -rf /etc/kubernetes/*

- rm -rf ~/.kube/*

- rm -rf /var/lib/etcd/*

- kubeadm reset -f

- Initialize again as above

-

- [root@k8s-master1 ~]# kubeadm init --kubernetes-version=v1.19.3 --apiserver-advertise-address=192.168.2.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16

- .............

- .......

- ..

- [root@k8s-master1 ~]# mkdir -p $HOME/.kube

- [root@k8s-master1 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

- [root@k8s-master1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

-

- Join the cluster

-

- [root@k8s-master2 ~]# kubeadm join 192.168.2.1:6443 --token aqywqm.fcddl4o1sy2q1qgj \

- > --discovery-token-ca-cert-hash sha256:4d3b60e0801e9c307ae6d94507e1fac514a493e277c715dc873eeadb950e5215

- .........

- ...

-

-

- [root@k8s-master1 ~]# kubectl get nodes

- NAME STATUS ROLES AGE VERSION

- k8s-master1 Ready master 4m13s v1.19.3

- k8s-master2 Ready <none> 48s v1.19.3

- k8s-node1 Ready <none> 45s v1.19.3

- k8s-node2 Ready <none> 45s v1.19.3

-

-

- [root@k8s-master1 ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

- node/k8s-master1 untainted

- taint "node-role.kubernetes.io/master" not found

- taint "node-role.kubernetes.io/master" not found

- taint "node-role.kubernetes.io/master" not found

-

- [root@k8s-master1 ~]# kubectl describe nodes k8s-master1 | grep -E '(Roles|Taints)'

- Roles: master

- Taints: <none>

-

- [root@k8s-master1 ~]# kubectl apply -f flannel.yml

- ...........

- .....

- .

-

- [root@k8s-master1 ~]# kubectl get nodes

- NAME STATUS ROLES AGE VERSION

- k8s-master1 Ready master 43m v1.19.3

- k8s-master2 Ready <none> 40m v1.19.3

- k8s-node1 Ready <none> 40m v1.19.3

- k8s-node2 Ready <none> 40m v1.19.3

-

-

- [root@k8s-master1 ~]# systemctl enable haproxy

- [root@k8s-master1 ~]# systemctl enable keepalived

-

- ————————————————————————————————————————————————————

- [root@k8s-master2 ~]# systemctl enable haproxy

- [root@k8s-master2 ~]# systemctl enable keepalived

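5. Deploying Metrics

In recent Kubernetes versions, system resource usage is collected by metrics-server, which reports the CPU and memory usage of nodes and Pods

Metrics overview

Early versions of Kubernetes relied on Heapster for complete performance data collection and monitoring. Starting with version 1.8, performance data is exposed through a standardized interface, the Metrics API, and starting with version 1.10 Heapster is replaced by Metrics Server. In the new Kubernetes monitoring architecture, Metrics Server provides the core metrics, namely the CPU and memory usage of Nodes and Pods.

Monitoring of other, custom metrics is handled by components such as Prometheus

Upload the required image archives; they are needed on all servers

- Copy them to the other servers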

- [root@k8s-master1 ~]# scp metrics* root@192.168.2.2:$PWD

- metrics-scraper_v1.0.1.tar 100% 38MB 76.5MB/s 00:00

- metrics-server.tar.gz 100% 39MB 67.1MB/s 00:00

- [root@k8s-master1 ~]# scp metrics* root@192.168.2.3:$PWD

- metrics-scraper_v1.0.1.tar 100% 38MB 56.9MB/s 00:00

- metrics-server.tar.gz 100% 39MB 40.7MB/s 00:00

- [root@k8s-master1 ~]# scp metrics* root@192.168.2.4:$PWD

- metrics-scraper_v1.0.1.tar 100% 38MB 61.8MB/s 00:00

- metrics-server.tar.gz 100% 39MB 49.2MB/s 00:00

-

-

-

- Load the images; run on all servers

- [root@k8s-master1 ~]# docker load -i metrics-scraper_v1.0.1.tar

- .........

- ....

- [root@k8s-master1 ~]# docker load -i metrics-server.tar.gz

- .......

- ...

Upload the components.yaml file

- Run on master1

-

- [root@k8s-master1 ~]# kubectl apply -f components.yaml

- clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

- clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

- rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

- Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService

- apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

- serviceaccount/metrics-server created

- deployment.apps/metrics-server created

- service/metrics-server created

- clusterrole.rbac.authorization.k8s.io/system:metrics-server created

- clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

- ————————————————————————————————————

- Check the status

- [root@k8s-master1 ~]# kubectl -n kube-system get pods -l k8s-app=metrics-server

- NAME READY STATUS RESTARTS AGE

- metrics-server-5c98b8989-54npg 1/1 Running 0 9m55s

- metrics-server-5c98b8989-9w9dd 1/1 Running 0 9m55s

- View resource usage; run on master1

- [root@k8s-master1 ~]# kubectl top nodes # resource usage per node

- NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

- k8s-master1 166m 8% 1388Mi 36%

- k8s-master2 61m 3% 728Mi 18%

- k8s-node1 50m 5% 889Mi 47%

- k8s-node2 49m 4% 878Mi 46%

-

- [root@k8s-master1 ~]# kubectl top pods -A # resource usage per pod

- NAMESPACE NAME CPU(cores) MEMORY(bytes)

- kube-system coredns-f9fd979d6-cnrvb 2m 14Mi

- kube-system coredns-f9fd979d6-fxsdt 2m 16Mi

- kube-system etcd-k8s-master1 11m 72Mi

- kube-system kube-apiserver-k8s-master1 27m 298Mi

- kube-system kube-controller-manager-k8s-master1 11m 48Mi

- kube-system kube-flannel-ds-7rt9n 1m 9Mi

- kube-system kube-flannel-ds-brktl 1m 14Mi

- kube-system kube-flannel-ds-kj9hg 2m 14Mi

- kube-system kube-flannel-ds-ld7xj 1m 15Mi

- kube-system kube-proxy-4wbh9 1m 19Mi

- kube-system kube-proxy-crfmv 1m 11Mi

- kube-system kube-proxy-twttg 1m 20Mi

- kube-system kube-proxy-xdg6r 1m 13Mi

- kube-system kube-scheduler-k8s-master1 2m 24Mi

- kube-system metrics-server-5c98b8989-54npg 1m 10Mi

- kube-system metrics-server-5c98b8989-9w9dd 1m 12Mi
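The tabular output of `kubectl top nodes` is easy to post-process. As a sketch, the filter below lists the nodes whose MEMORY% column exceeds a threshold; `nodes_over_memory` is a hypothetical helper, and the sample input is the node output shown above.

```shell
#!/usr/bin/env bash
# List nodes whose MEMORY% column exceeds a threshold.
# Reads `kubectl top nodes` output on stdin; the header row is skipped.
nodes_over_memory() {
  awk -v limit="$1" 'NR > 1 { pct = $5; sub(/%/, "", pct); if (pct + 0 > limit) print $1 }'
}

# Sample taken from the output shown above; on a live cluster:
#   kubectl top nodes | nodes_over_memory 40
nodes_over_memory 40 <<'EOF'
NAME          CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master1   166m         8%     1388Mi          36%
k8s-master2   61m          3%     728Mi           18%
k8s-node1     50m          5%     889Mi           47%
k8s-node2     49m          4%     878Mi           46%
EOF
```

With a limit of 40 and the sample above, the filter prints k8s-node1 and k8s-node2, the two 2 GB worker nodes.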

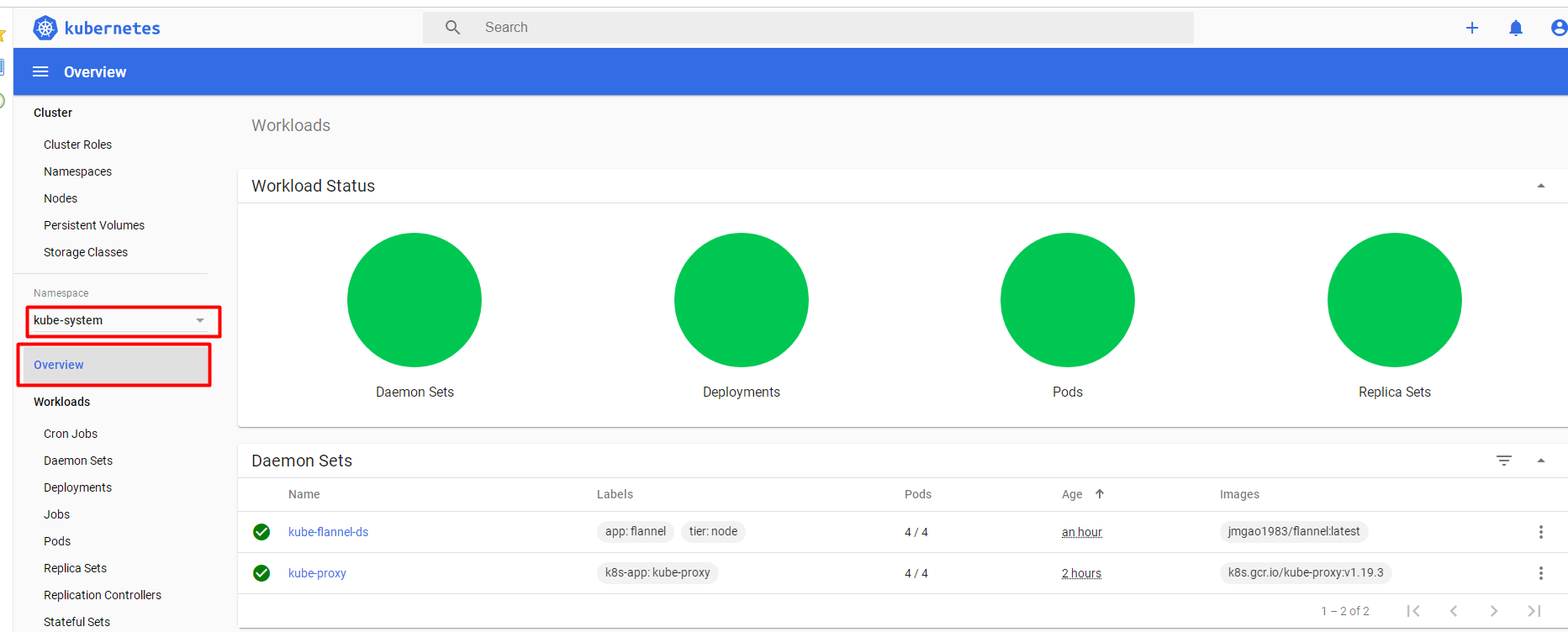

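6. Deploying the Dashboard

The Dashboard displays the various resources in the cluster. It can also be used to view Pod logs in real time and to run commands inside containers

Dashboard is the web-based Kubernetes user interface. You can use it to deploy containerized applications to a Kubernetes cluster, troubleshoot them, and manage cluster resources. It gives you an overview of the applications running in the cluster and lets you create or modify Kubernetes resources (such as Deployments, Jobs, and DaemonSets). For example, you can scale a Deployment, start a rolling update, restart a Pod, or create a new application with a wizard

Upload the dashboard.yaml file

- Run on master1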

- [root@k8s-master1 ~]# vim dashboard.yaml

- .......

- ...

- 44 nodePort: 30001 # the access port

- ......

- ..

-

- Save the file

- Run on master1

- [root@k8s-master1 ~]# kubectl create -f dashboard.yaml

- namespace/kubernetes-dashboard created

- serviceaccount/kubernetes-dashboard created

- secret/kubernetes-dashboard-certs created

- secret/kubernetes-dashboard-csrf created

- secret/kubernetes-dashboard-key-holder created

- configmap/kubernetes-dashboard-settings created

- .....

- ..

Confirm the status of the Dashboard pods and services. Note that kubernetes-dashboard pulls its images automatically, so make sure the nodes have network access

-

- [root@k8s-master1 ~]# kubectl get pod,svc -n kubernetes-dashboard

-

- NAME READY STATUS RESTARTS AGE

- pod/dashboard-metrics-scraper-7445d59dfd-9jwcw 1/1 Running 0 36m

- pod/kubernetes-dashboard-7d8466d688-mgfq9 1/1 Running 0 36m

-

- NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

- service/dashboard-metrics-scraper ClusterIP 10.1.70.163 <none> 8000/TCP 36m

- service/kubernetes-dashboard NodePort 10.1.158.233 <none> 443:30001/TCP 36m

Create a YAML file for the ServiceAccount and ClusterRoleBinding resources

-

- [root@k8s-master1 ~]# vim adminuser.yaml

-

- apiVersion: v1

- kind: ServiceAccount

- metadata:

- name: admin-user

- namespace: kubernetes-dashboard

- ---

- apiVersion: rbac.authorization.k8s.io/v1

- kind: ClusterRoleBinding

- metadata:

- name: admin-user

- roleRef:

- apiGroup: rbac.authorization.k8s.io

- kind: ClusterRole

- name: cluster-admin

- subjects:

- - kind: ServiceAccount

- name: admin-user

- namespace: kubernetes-dashboard

-

- Save the file

-

- [root@k8s-master1 ~]# kubectl create -f adminuser.yaml

- serviceaccount/admin-user created

- clusterrolebinding.rbac.authorization.k8s.io/admin-user created

Access test: https://192.168.2.2:30001

Get the token used to log in to the Dashboard UI

- [root@k8s-master1 ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}') # show the secret

- Name: admin-user-token-rwzng

- Namespace: kubernetes-dashboard

- Labels: <none>

- Annotations: kubernetes.io/service-account.name: admin-user

- kubernetes.io/service-account.uid: 194f1012-cbed-4c15-b8c2-2142332174a9

-

- Type: kubernetes.io/service-account-token

-

- Data

- ====

- token:

- Copy the following token:

- eyJhbGciOiJSUzI1NiIsImtpZCI6Imxad29JeHUyYVFucGJuQzBDNm5qYU1NVDVDUUItU0NqWUxvQTdtWjcyYW8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXJ3em5nIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIxOTRmMTAxMi1jYmVkLTRjMTUtYjhjMi0yMTQyMzMyMTc0YTkiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.nDvv2CevmtTBtpHqikXp5nRbzmJaMr13OSU5YCoBtMrOg1V6bMSn6Ctu5IdtxGExmDGY-69v4fBw7-DvJtLTon_rgsow6NA1LwUOuebMh8TwVrHSV0SW7yI0MCRFSMctC9NxIxyacxIDkDQ7eA7Rr9sQRKFpIWfjBgsC-k7z13IIuaAROXFrZKdqUUPd5hNTLhtFqtXOs7b_nMxzQTln9rSDIHozMTHbRMkL_oLm7McEGfrod7bO6RsTyPfn0TcK6pFCx5T9YA6AfoPMH3mNU0gsr-zbIYZyxIMr9FMpw2zpjP53BnaVhTQJ1M_c_Ptd774cRPk6vTWRPprul2U_OQ

Switch kube-proxy to ipvs mode. The ipvs setting was not enabled when the cluster was initialized, so it has to be changed manually

- [root@k8s-master1 ~]# curl 127.0.0.1:10249/proxyMode

- iptables

- [root@k8s-master1 ~]# kubectl edit cm kube-proxy -n kube-system

- ......

- 44 mode: "ipvs"

- ....

- ..

-

- Save the file

- Restart the kube-proxy Pods

- [root@k8s-master1 ~]# kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

- daemonset.apps/kube-proxy patched

-

- [root@k8s-master1 ~]# curl 127.0.0.1:10249/proxyMode

- ipvs

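Note: if you take virtual machine snapshots, you may find after the machines start again that the VIP has disappeared and port 30001 is unreachable. To fix this, restart all masters to restore the VIP, then re-initialize the cluster and re-apply the corresponding YAML files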