Reinforcement Learning Notes 1: the PPO algorithm

Reference: the Morvan Python (莫烦Python) tutorial videos.

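- Why PPO?

According to OpenAI's official blog, PPO has become their default reinforcement learning algorithm. In one sentence: PPO is a method proposed by OpenAI to address the difficulty of choosing a learning rate (step size) in Policy Gradient. If the step size is too large, the learned policy keeps jumping around and never converges; if it is too small, training takes hopelessly long. PPO uses the ratio between the new policy and the old policy to limit how far the new policy can move in a single update, which makes Policy Gradient less sensitive to a somewhat larger step size.

- Some further explanation, source: link.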

The meaning of on-policy and off-policy

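1. Off-policy learning can be understood through the idea of transfer: the agent that learns is not the agent that collects the data. Let t1 be the agent being trained and t2 a fixed agent used only for sampling.

2. We sample repeatedly from t2, which can be thought of as an expert. Because t2 is fixed, the probability of any action a in a pair (s, a) does not change while sampling.

3. In other words, the samples drawn from t2 can be treated as always correct, and the t1 model is trained up to the level of t2.

4. This seems to be how AlphaGo's early training worked, using recorded games: the game records play the role of t2, and the initial Go agent plays the role of t1.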

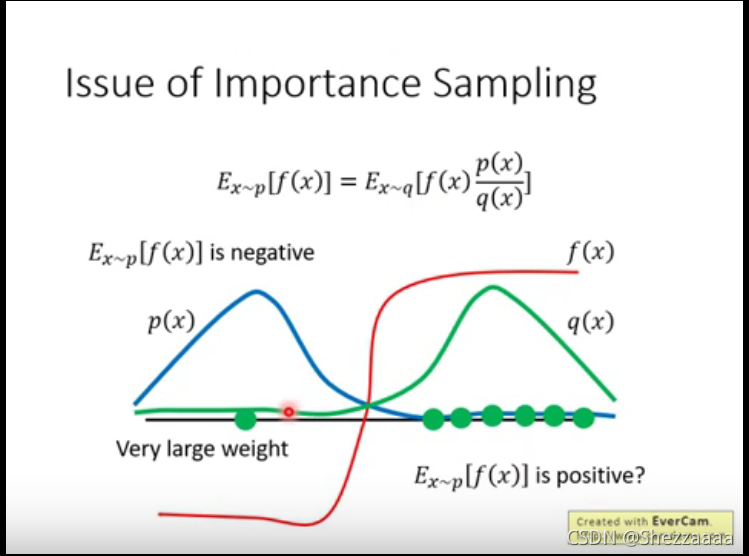

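Simply put, because it is hard to sample from $p(x)$ directly, we take a detour: draw samples from $q(x)$, compute the expectation over them, and multiply each term by a weight $p(x)/q(x)$.

If $p(x)$ and $q(x)$ differ a lot, many samples are needed; with too few samples the estimate will be wrong.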
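The weighting idea above can be tried numerically. The following is a minimal sketch, not taken from the original tutorial: the helper names `normal_pdf` and `importance_sampling_mean`, the choice of Gaussians for $p$ and $q$, and the test function $f(x) = x^2$ are all assumptions made for illustration.

```python
import math
import random


def normal_pdf(x, mean, std):
    """Density of a 1-D Gaussian N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def importance_sampling_mean(f, p_pdf, q_pdf, q_sampler, n):
    """Estimate E_{x~p}[f(x)] using n samples drawn from q."""
    total = 0.0
    for _ in range(n):
        x = q_sampler()
        weight = p_pdf(x) / q_pdf(x)  # importance weight p(x)/q(x)
        total += weight * f(x)
    return total / n


rng = random.Random(0)  # fixed seed so the run is repeatable

# Target p = N(0, 1); proposal q = N(0, 2^2). Both are illustrative choices.
estimate = importance_sampling_mean(
    f=lambda x: x * x,
    p_pdf=lambda x: normal_pdf(x, 0.0, 1.0),
    q_pdf=lambda x: normal_pdf(x, 0.0, 2.0),
    q_sampler=lambda: rng.gauss(0.0, 2.0),
    n=100_000,
)
print(estimate)  # should be close to the true value E_p[x^2] = 1
```

If the proposal is moved far away from the target (for example a mean of 5 instead of 0), most samples receive a weight near zero and a few receive a very large one, so the estimate becomes unreliable unless the sample count is increased considerably.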

Substitution principle

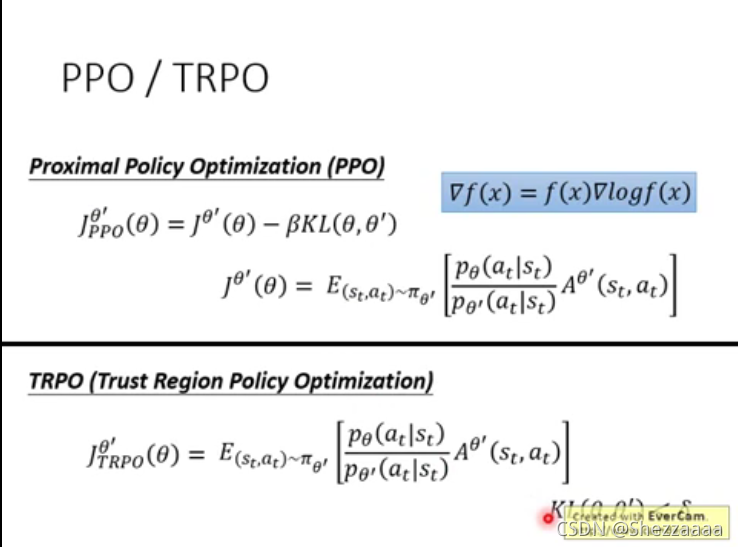

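This is the substitution principle applied to the original problem. When should the updates stop? PPO is introduced to keep the current network from drifting too far from the original one, that is, the two action distributions must not differ too much.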
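For reference, the substitution can be written out as below. This is a sketch in commonly used notation; the symbols $\theta$ (parameters being trained), $\theta'$ (parameters of the fixed sampling policy) and $A^{\theta'}$ (advantage estimated under the sampling policy) are assumptions and may differ from the notation in the figure.

```latex
\begin{align}
\mathbb{E}_{x \sim p}\left[ f(x) \right]
  &= \mathbb{E}_{x \sim q}\left[ f(x)\,\frac{p(x)}{q(x)} \right] \\
J^{\theta'}(\theta)
  &= \mathbb{E}_{(s_t, a_t) \sim \pi_{\theta'}}\left[
       \frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta'}(a_t \mid s_t)}\,
       A^{\theta'}(s_t, a_t) \right]
\end{align}
```

The first line is the importance sampling identity; the second applies it to the policy gradient objective, with the sampling policy $\pi_{\theta'}$ in the role of $q$. The estimate is only trustworthy while $\pi_{\theta}$ stays close to $\pi_{\theta'}$, which is the constraint PPO enforces.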

4. Algorithm pseudocode

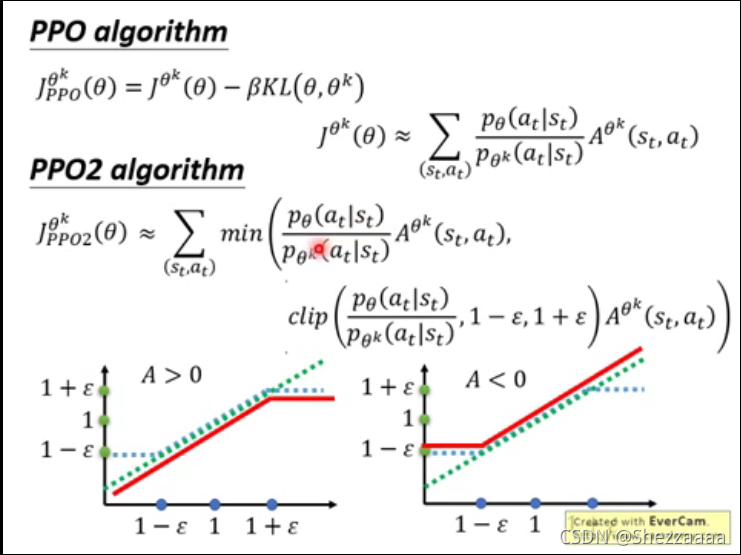

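PPO-Penalty: approximately solves a KL-constrained update like TRPO, but penalizes the KL divergence in the objective function instead of making it a hard constraint, and automatically adjusts the penalty coefficient during training so that it stays appropriately scaled.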
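The automatic adjustment of the penalty coefficient can be sketched as a small function. The halving and doubling thresholds and the clipping range mirror the rule used in the code later in this note; the function name `adapt_kl_penalty` and its parameter names are hypothetical.

```python
def adapt_kl_penalty(lam, kl, kl_target, lam_min=1e-4, lam_max=10.0):
    """Return the updated KL penalty coefficient after one policy update.

    lam       : current penalty coefficient
    kl        : measured mean KL divergence between old and new policy
    kl_target : desired KL divergence per update
    """
    if kl < kl_target / 1.5:
        lam /= 2.0  # policy moved too little: relax the penalty
    elif kl > kl_target * 1.5:
        lam *= 2.0  # policy moved too much: strengthen the penalty
    # Keep the coefficient in a bounded range so it cannot explode
    return max(lam_min, min(lam, lam_max))


print(adapt_kl_penalty(0.5, kl=0.001, kl_target=0.01))  # 0.25
print(adapt_kl_penalty(0.5, kl=0.05, kl_target=0.01))   # 1.0
```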

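PPO-Clip: has no KL divergence term in the objective and no constraint at all. Instead, it relies on specialized clipping of the objective function to reduce the difference between the new and old policies.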
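A minimal sketch of the clipped objective in plain Python follows. The function name `clipped_surrogate` is hypothetical, and the default `epsilon=0.2` is an assumed, commonly used value rather than one stated in this note.

```python
def clipped_surrogate(ratios, advantages, epsilon=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over a batch.

    ratios     : new-policy / old-policy probability ratio per sample
    advantages : advantage estimate per sample
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = max(1.0 - epsilon, min(r, 1.0 + epsilon))
        # Taking the minimum removes any gain from pushing r outside the range
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)


# Positive advantage, ratio already above 1 + eps: the gain is capped at 1.2
print(clipped_surrogate([1.5], [1.0]))
# Negative advantage, ratio above 1 + eps: no clipping, full penalty of -1.5
print(clipped_surrogate([1.5], [-1.0]))
```

The objective is maximized during training, so the loss passed to an optimizer is its negative.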

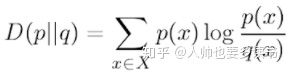

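The KL divergence is used to limit how far the new policy is updated (important).

PPO-Clip drops the KL divergence computation and only constrains the probability ratio. The author of the code below found that it works better.

Running multiple worker threads in parallel speeds up learning.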

5. Algorithm structure

- Code

```python
# Written for TensorFlow 1.x and the classic gym API
# (reset() returns the observation, step() returns a 4-tuple).
import gym
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf

# The hyperparameters are not listed in this note. The values below are
# assumptions (typical settings for this Pendulum example); adjust as needed.
EP_MAX = 1000          # number of episodes
EP_LEN = 200           # steps per episode
GAMMA = 0.9            # discount factor
A_LR = 0.0001          # actor learning rate
C_LR = 0.0002          # critic learning rate
BATCH = 32             # steps collected before each update
A_UPDATE_STEPS = 10    # actor updates per batch
C_UPDATE_STEPS = 10    # critic updates per batch
METHOD = [
    dict(name='kl_pen', kl_target=0.01, lam=0.5),  # KL penalty
    dict(name='clip', epsilon=0.2),                # clipped surrogate objective
][1]                   # index selects the optimization method


class PPO:
    def __init__(self):
        # Build the actor and critic networks in one graph
        self.sess = tf.Session()
        self.tfs = tf.placeholder(tf.float32, [None, S_DIM], 'state')  # states, shape [None, S_DIM]

        # Critic: builds self.v, the state-value estimate
        self._build_anet('Critic')
        with tf.variable_scope('closs'):
            self.tfdc_r = tf.placeholder(tf.float32, [None, 1], name='discounted_r')  # discounted return
            # Advantage estimate: bootstrapped discounted return minus V(s).
            # Similar in spirit to a TD error, but accumulated over several steps.
            self.adv = self.tfdc_r - self.v
            closs = tf.reduce_mean(tf.square(self.adv))  # critic loss
            self.ctrain = tf.train.AdamOptimizer(C_LR).minimize(closs)

        # Actor: the trainable pi network and the frozen oldpi network
        pi, pi_params = self._build_anet('pi', trainable=True)
        oldpi, oldpi_params = self._build_anet('oldpi', trainable=False)

        with tf.variable_scope('sample_action'):
            # Draw one action from pi and drop the leading sample dimension
            self.sample_op = tf.squeeze(pi.sample(1), axis=0)

        with tf.variable_scope('update_oldpi'):
            # Only defines the assign ops; they take effect when run with sess.run
            self.update_oldpi_op = [oldp.assign(p) for p, oldp in zip(pi_params, oldpi_params)]

        with tf.variable_scope('aloss'):
            self.tfa = tf.placeholder(dtype=tf.float32, shape=[None, A_DIM], name='action')  # actions
            self.tfadv = tf.placeholder(tf.float32, [None, 1], 'advantage')                  # advantages
            with tf.variable_scope('surrogate'):
                ratio = pi.prob(self.tfa) / oldpi.prob(self.tfa)  # ratio of probability densities
                surr = ratio * self.tfadv                         # ratio-weighted advantage
            if METHOD['name'] == 'kl_pen':
                self.tflam = tf.placeholder(tf.float32, None, 'lambda')
                kl = tf.distributions.kl_divergence(oldpi, pi)
                self.kl_mean = tf.reduce_mean(kl)
                self.aloss = -(tf.reduce_mean(surr - self.tflam * kl))
            else:
                # Clipping method (found to work better): limits how much the surrogate can change.
                # epsilon bounds the ratio; I am not sure how this parameter should be tuned.
                self.aloss = -tf.reduce_mean(tf.minimum(
                    surr,
                    tf.clip_by_value(ratio, 1. - METHOD['epsilon'], 1. + METHOD['epsilon']) * self.tfadv))

        self.atrain = tf.train.AdamOptimizer(A_LR).minimize(self.aloss)

        tf.summary.FileWriter('log/', self.sess.graph)  # write the graph for TensorBoard
        self.sess.run(tf.global_variables_initializer())

    def _build_anet(self, name, trainable=True):
        """Build the critic (sets self.v) or an actor (returns distribution and parameters)."""
        if name == 'Critic':
            with tf.variable_scope(name):
                # Two dense layers; the output self.v estimates the state value
                l1_critic = tf.layers.dense(self.tfs, 100, tf.nn.relu, trainable=trainable, name='l1')
                self.v = tf.layers.dense(l1_critic, 1, trainable=trainable, name='value_predict')
        else:
            # Actor: used for both the 'pi' and 'oldpi' networks
            with tf.variable_scope(name):
                l1_actor = tf.layers.dense(self.tfs, 100, tf.nn.relu, trainable=trainable, name='l1')
                # tanh output scaled by 2 to match the Pendulum action range [-2, 2]
                mu = 2 * tf.layers.dense(l1_actor, A_DIM, tf.nn.tanh, trainable=trainable, name='mu')
                sigma = tf.layers.dense(l1_actor, A_DIM, tf.nn.softplus, trainable=trainable, name='sigma')
                norm_dist = tf.distributions.Normal(loc=mu, scale=sigma)  # Gaussian policy
            params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope=name)  # network parameters
            return norm_dist, params

    def update(self, s, a, r):
        # Copy the current pi parameters into oldpi
        self.sess.run(self.update_oldpi_op)
        # The second argument is the feed_dict: it maps each placeholder to its batch of data
        adv = self.sess.run(self.adv, {self.tfdc_r: r, self.tfs: s})

        if METHOD['name'] == 'kl_pen':  # KL-penalty variant
            for _ in range(A_UPDATE_STEPS):
                _, kl = self.sess.run(
                    [self.atrain, self.kl_mean],
                    {self.tfa: a, self.tfadv: adv, self.tfs: s, self.tflam: METHOD['lam']})
                if kl > 4 * METHOD['kl_target']:  # early stop; this is in Google's paper
                    break
            if kl < METHOD['kl_target'] / 1.5:    # adaptive lambda; this is in OpenAI's paper
                METHOD['lam'] /= 2
            elif kl > METHOD['kl_target'] * 1.5:
                METHOD['lam'] *= 2
            METHOD['lam'] = np.clip(METHOD['lam'], 1e-4, 10)  # lambda sometimes explodes, so clip it
        else:
            # Train the actor with the clipped objective
            for _ in range(A_UPDATE_STEPS):
                self.sess.run(self.atrain, {self.tfs: s, self.tfa: a, self.tfadv: adv})

        # Train the critic
        for _ in range(C_UPDATE_STEPS):
            self.sess.run(self.ctrain, {self.tfs: s, self.tfdc_r: r})

    def choose_action(self, s):
        """Sample an action for a single state."""
        s = s[np.newaxis, :]
        a = self.sess.run(self.sample_op, {self.tfs: s})[0]
        return np.clip(a, -2, 2)

    def get_v(self, s):
        """Return the critic's state-value estimate."""
        if s.ndim < 2:
            s = s[np.newaxis, :]
        return self.sess.run(self.v, {self.tfs: s})


env = gym.make('Pendulum-v0').unwrapped
S_DIM = env.observation_space.shape[0]
A_DIM = env.action_space.shape[0]

ppo = PPO()
all_ep_r = []

# Interaction between PPO and the environment, for EP_MAX episodes
for ep in range(EP_MAX):
    s = env.reset()
    buffer_s, buffer_a, buffer_r = [], [], []
    ep_r = 0
    for t in range(EP_LEN):
        env.render()
        a = ppo.choose_action(s)
        s_, r, done, _ = env.step(a)
        # Store the transition in the buffer
        buffer_s.append(s)
        buffer_a.append(a)
        buffer_r.append((r + 8) / 8)  # rescale the reward to roughly [-1, 1]
        s = s_
        ep_r += r

        # Update when a full batch is collected or the episode ends
        if (t + 1) % BATCH == 0 or t == EP_LEN - 1:
            # Compute discounted returns, bootstrapping from V(s_)
            v_s_ = ppo.get_v(s_)
            discounted_r = []
            for r in buffer_r[::-1]:
                v_s_ = r + GAMMA * v_s_
                discounted_r.append(v_s_)
            discounted_r.reverse()

            bs, ba, br = np.vstack(buffer_s), np.vstack(buffer_a), np.vstack(discounted_r)
            buffer_s, buffer_a, buffer_r = [], [], []  # clear the buffer
            ppo.update(bs, ba, br)

    # Track an exponential moving average of the episode reward
    if ep == 0:
        all_ep_r.append(ep_r)
    else:
        all_ep_r.append(all_ep_r[-1] * 0.9 + ep_r * 0.1)
    print('Ep:%d | Ep_r:%f' % (ep, ep_r))

plt.plot(np.arange(len(all_ep_r)), all_ep_r)
plt.xlabel('Episode')
plt.ylabel('Moving averaged episode reward')
plt.show()
```
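The discounted-return step in the training loop is easy to get wrong, so here it is isolated as a small standalone sketch with a worked example. The function name `discounted_returns` is hypothetical; the logic follows the backward loop over `buffer_r` in the code above.

```python
def discounted_returns(rewards, bootstrap_value, gamma):
    """Compute the discounted return for every step of a reward sequence.

    rewards         : rewards collected in time order
    bootstrap_value : value estimate of the state after the last reward
    gamma           : discount factor
    """
    running = bootstrap_value
    returns = []
    # Walk backwards so each step reuses the return of the step after it
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()  # restore time order
    return returns


print(discounted_returns([1.0, 1.0, 1.0], bootstrap_value=0.0, gamma=0.5))
# [1.75, 1.5, 1.0]
```

Working backwards gives 1.0 for the last step, then 1 + 0.5 * 1.0 = 1.5, then 1 + 0.5 * 1.5 = 1.75.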

- Multi-threaded DPPO