Integrating SherpaNcnn on Android for Offline Speech Recognition (Chinese Supported, a Step-by-Step Guide Starting from Building the Shared Libraries)


After some trial and error, I finally got the result I wanted. It ran well in my tests, so I am writing the process down here.

Building the sherpa-ncnn shared libraries

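The first step is to download the sherpa-ncnn project and build it. You can download it directly from GitHub: sherpa-ncnn. If you cannot access GitHub or the download fails, I have put the relevant resources and the prebuilt shared libraries at the top of this article.

After downloading, follow the steps in the documentation at k2-fsa.github and build with the NDK. The documentation is already fairly clear, so following it is enough. I also record my own build process here: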

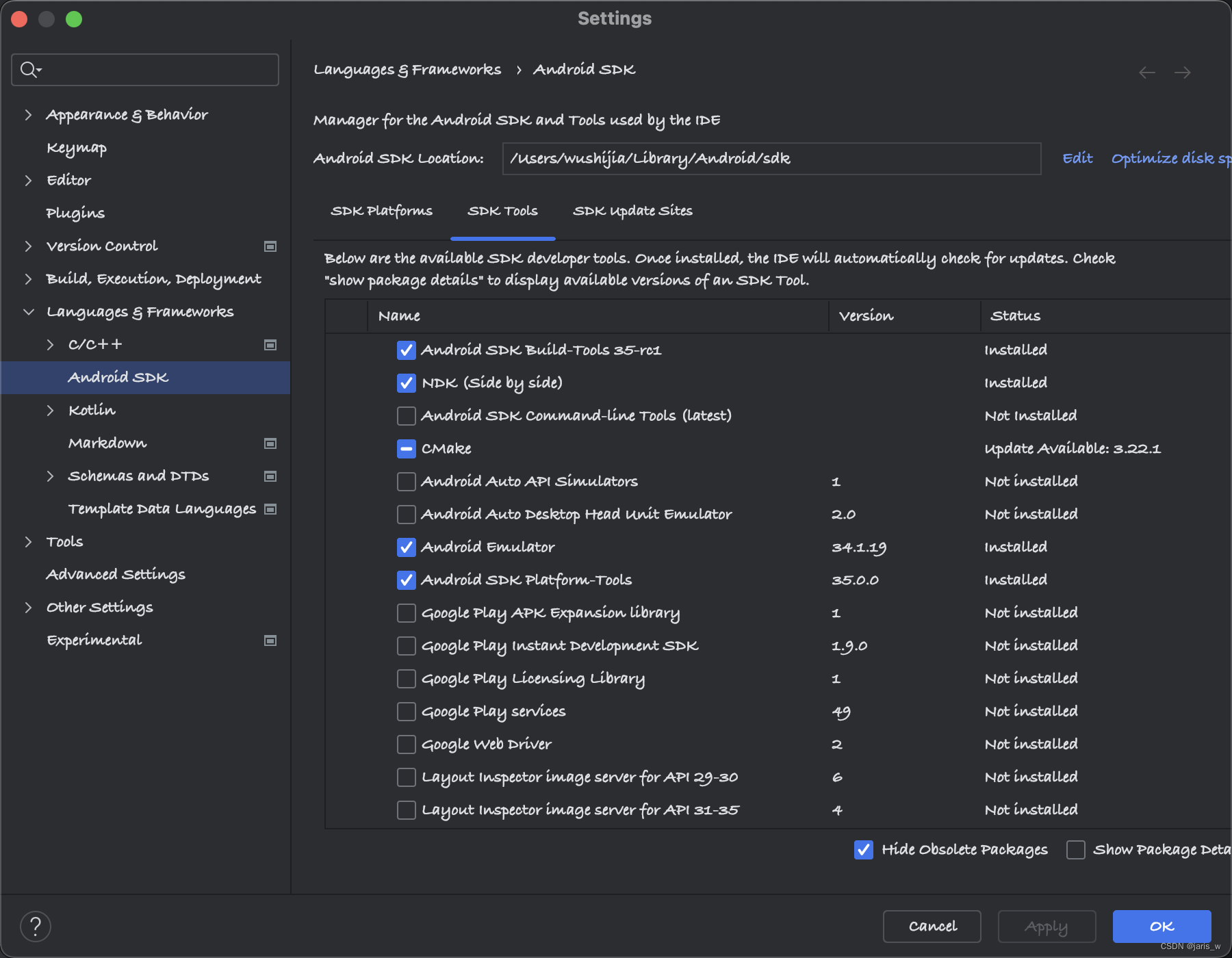

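First, install the NDK in Android Studio. Below is a screenshot of my installation:

The screenshot above shows the path where the NDK is installed; in my case it is /Users/wushijia/Library/Android/sdk/ndk. That directory lists the installed versions. Here is a screenshot of my folder; I have installed several NDK versions, so it looks like this:

Next, build the shared libraries by following the documentation. First, in a terminal, enter the sherpa-ncnn-master project directory and set the NDK environment variable. Of the NDK versions I have installed, I picked the newest one and ran the following command: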

    export ANDROID_NDK=/Users/wushijia/Library/Android/sdk/ndk/26.2.11394342

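The command above only sets the environment variable for the current terminal session; I did not modify any system files. The setting is lost once the session exits, so remember to set it again whenever you build the shared libraries.

With the environment variable set, run the following scripts one by one from the sherpa-ncnn-master project directory: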

    ./build-android-arm64-v8a.sh
    ./build-android-armv7-eabi.sh
    ./build-android-x86-64.sh
    ./build-android-x86.sh

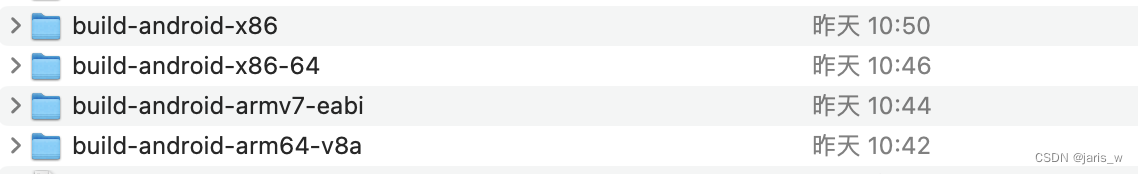

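After the commands succeed, the following folders appear in the same directory as the scripts:

Each folder contains the corresponding install/lib/*.so files, which are the shared libraries the Android project needs.

Integrating into the Android project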

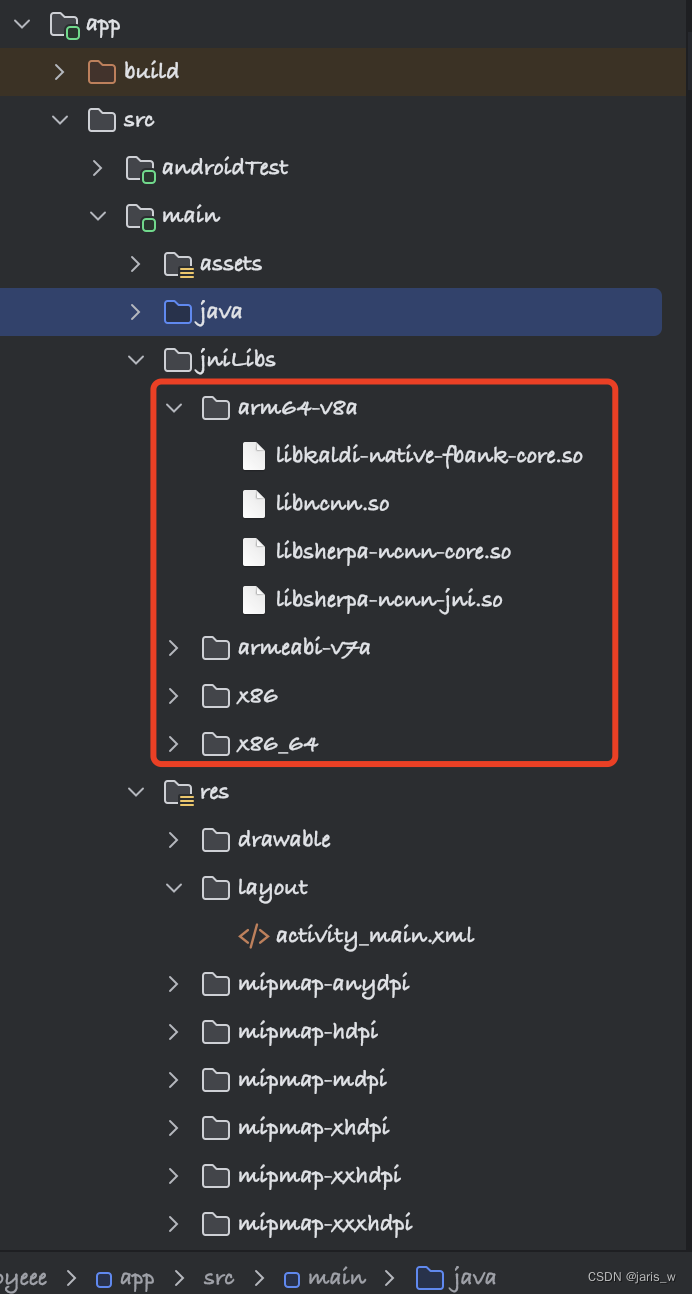

Next, copy the shared libraries into the matching directories under jniLibs in the Android Studio project. The result looks like this:

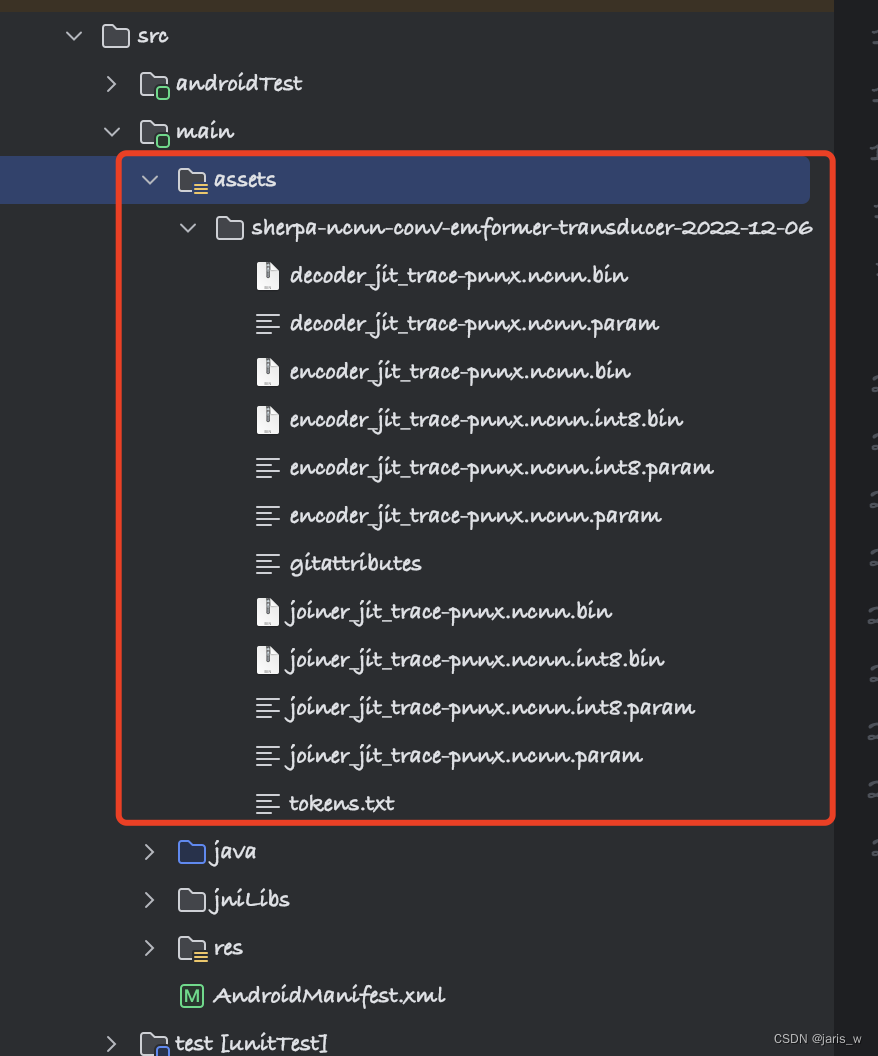
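As a rough sketch of the copy step, the plain-Java helper below maps each build output folder to its jniLibs ABI directory and copies the .so files across. The build folder names (build-android-arm64-v8a and so on) are assumed to mirror the script names, and JniLibsCopier and both paths passed to it are hypothetical; adjust them to your own checkout.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of sherpa-ncnn.
final class JniLibsCopier {
    // Assumed build folder name -> Android ABI directory name under jniLibs.
    static Map<String, String> abiByBuildDir() {
        Map<String, String> m = new LinkedHashMap<>();
        m.put("build-android-arm64-v8a", "arm64-v8a");
        m.put("build-android-armv7-eabi", "armeabi-v7a");
        m.put("build-android-x86-64", "x86_64");
        m.put("build-android-x86", "x86");
        return m;
    }

    // Copies <projectRoot>/<buildDir>/install/lib/*.so into <jniLibs>/<abi>/.
    // Returns the number of files copied; build folders that do not exist are skipped.
    static int copyAll(Path projectRoot, Path jniLibs) throws IOException {
        int copied = 0;
        for (Map.Entry<String, String> e : abiByBuildDir().entrySet()) {
            Path libDir = projectRoot.resolve(e.getKey()).resolve("install").resolve("lib");
            if (!Files.isDirectory(libDir)) {
                continue;
            }
            Path target = Files.createDirectories(jniLibs.resolve(e.getValue()));
            try (DirectoryStream<Path> libs = Files.newDirectoryStream(libDir, "*.so")) {
                for (Path lib : libs) {
                    Files.copy(lib, target.resolve(lib.getFileName().toString()),
                            StandardCopyOption.REPLACE_EXISTING);
                    copied++;
                }
            }
        }
        return copied;
    }
}
```

Calling `JniLibsCopier.copyAll(sherpaRoot, appModule.resolve("src/main/jniLibs"))` would then fill one directory per ABI; note that the armv7 build lands in `armeabi-v7a` and the x86-64 build in `x86_64`, which is where the folder names differ from the script names.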

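After that, copy the pretrained model into the Android project as well. The result looks like this:

Once this is done, we can start calling the library.

To read the model files I reused someone else's Kotlin code, so in my project Java code ends up calling Kotlin code. It is a bit messy, but it works. My java folder looks like this: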

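Note that on Android the native functions in a shared library are bound to the Java package name, so when we use someone else's shared library we must follow their package naming. SherpaNcnn.kt must therefore live in the package com.k2fsa.sherpa.ncnn; otherwise the app reports at runtime that the corresponding functions cannot be found in the library. Changing the library itself would mean modifying the C++ source and rebuilding, so keeping the original package name is the easiest option.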
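To see why the package name matters: for native methods that are not registered explicitly, the runtime looks up a C symbol whose name is derived from the fully qualified class name and the method name. The sketch below applies the JNI short-name mangling rules. JniNames and its methods are hypothetical names used only for illustration, the sketch ignores the long form used for overloaded native methods, and it assumes the prebuilt library exports its functions under this default naming.

```java
// Hypothetical illustration of JNI short-name mangling; not part of sherpa-ncnn.
final class JniNames {
    // Escapes a class or method name according to the JNI name-mangling rules.
    static String mangle(String s) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            if (c == '.' || c == '/') {
                sb.append('_');          // package separator
            } else if (c == '_') {
                sb.append("_1");         // literal underscore
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
                sb.append(c);
            } else {
                sb.append(String.format("_0%04x", (int) c)); // other characters as lower-case hex
            }
        }
        return sb.toString();
    }

    // Builds the symbol name looked up for a non-overloaded native method.
    static String shortName(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }
}
```

For example, `JniNames.shortName("com.k2fsa.sherpa.ncnn.SherpaNcnn", "newFromAsset")` yields `Java_com_k2fsa_sherpa_ncnn_SherpaNcnn_newFromAsset`. Moving the class to another package changes this symbol name, so the lookup in the prebuilt library would no longer succeed.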

Here is the complete SherpaNcnn.kt; you can also download it from the resources at the top of the article:

    package com.k2fsa.sherpa.ncnn

    import android.content.res.AssetManager

    data class FeatureExtractorConfig(
        var sampleRate: Float,
        var featureDim: Int,
    )

    data class ModelConfig(
        var encoderParam: String,
        var encoderBin: String,
        var decoderParam: String,
        var decoderBin: String,
        var joinerParam: String,
        var joinerBin: String,
        var tokens: String,
        var numThreads: Int = 1,
        var useGPU: Boolean = true, // If there is a GPU and useGPU true, we will use GPU
    )

    data class DecoderConfig(
        var method: String = "modified_beam_search", // valid values: greedy_search, modified_beam_search
        var numActivePaths: Int = 4, // used only by modified_beam_search
    )

    data class RecognizerConfig(
        var featConfig: FeatureExtractorConfig,
        var modelConfig: ModelConfig,
        var decoderConfig: DecoderConfig,
        var enableEndpoint: Boolean = true,
        var rule1MinTrailingSilence: Float = 2.4f,
        var rule2MinTrailingSilence: Float = 1.0f,
        var rule3MinUtteranceLength: Float = 30.0f,
        var hotwordsFile: String = "",
        var hotwordsScore: Float = 1.5f,
    )

    class SherpaNcnn(
        var config: RecognizerConfig,
        assetManager: AssetManager? = null,
    ) {
        private val ptr: Long

        init {
            if (assetManager != null) {
                ptr = newFromAsset(assetManager, config)
            } else {
                ptr = newFromFile(config)
            }
        }

        protected fun finalize() {
            delete(ptr)
        }

        fun acceptSamples(samples: FloatArray) =
            acceptWaveform(ptr, samples = samples, sampleRate = config.featConfig.sampleRate)

        fun isReady() = isReady(ptr)

        fun decode() = decode(ptr)

        fun inputFinished() = inputFinished(ptr)

        fun isEndpoint(): Boolean = isEndpoint(ptr)

        fun reset(recreate: Boolean = false) = reset(ptr, recreate = recreate)

        val text: String
            get() = getText(ptr)

        private external fun newFromAsset(
            assetManager: AssetManager,
            config: RecognizerConfig,
        ): Long

        private external fun newFromFile(
            config: RecognizerConfig,
        ): Long

        private external fun delete(ptr: Long)
        private external fun acceptWaveform(ptr: Long, samples: FloatArray, sampleRate: Float)
        private external fun inputFinished(ptr: Long)
        private external fun isReady(ptr: Long): Boolean
        private external fun decode(ptr: Long)
        private external fun isEndpoint(ptr: Long): Boolean
        private external fun reset(ptr: Long, recreate: Boolean)
        private external fun getText(ptr: Long): String

        companion object {
            init {
                System.loadLibrary("sherpa-ncnn-jni")
            }
        }
    }

    fun getFeatureExtractorConfig(
        sampleRate: Float,
        featureDim: Int
    ): FeatureExtractorConfig {
        return FeatureExtractorConfig(
            sampleRate = sampleRate,
            featureDim = featureDim,
        )
    }

    fun getDecoderConfig(method: String, numActivePaths: Int): DecoderConfig {
        return DecoderConfig(method = method, numActivePaths = numActivePaths)
    }

    /*
    @param type
    0 - https://huggingface.co/csukuangfj/sherpa-ncnn-2022-09-30
        This model supports only Chinese
    1 - https://huggingface.co/csukuangfj/sherpa-ncnn-conv-emformer-transducer-2022-12-06
        This model supports both English and Chinese
    2 - https://huggingface.co/csukuangfj/sherpa-ncnn-streaming-zipformer-bilingual-zh-en-2023-02-13
        This model supports both English and Chinese
    3 - https://huggingface.co/csukuangfj/sherpa-ncnn-streaming-zipformer-en-2023-02-13
        This model supports only English
    4 - https://huggingface.co/shaojieli/sherpa-ncnn-streaming-zipformer-fr-2023-04-14
        This model supports only French
    5 - https://github.com/k2-fsa/sherpa-ncnn/releases/download/models/sherpa-ncnn-streaming-zipformer-zh-14M-2023-02-23.tar.bz2
        This is a small model and supports only Chinese
    6 - https://github.com/k2-fsa/sherpa-ncnn/releases/download/models/sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16.tar.bz2
        This is a medium model and supports only Chinese

    Please follow
    https://k2-fsa.github.io/sherpa/ncnn/pretrained_models/index.html
    to add more pre-trained models
    */
    fun getModelConfig(type: Int, useGPU: Boolean): ModelConfig? {
        when (type) {
            0 -> {
                val modelDir = "sherpa-ncnn-2022-09-30"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 1,
                    useGPU = useGPU,
                )
            }

            1 -> {
                val modelDir = "sherpa-ncnn-conv-emformer-transducer-2022-12-06"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.int8.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.int8.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.int8.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.int8.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 1,
                    useGPU = useGPU,
                )
            }

            2 -> {
                val modelDir = "sherpa-ncnn-streaming-zipformer-bilingual-zh-en-2023-02-13"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 1,
                    useGPU = useGPU,
                )
            }

            3 -> {
                val modelDir = "sherpa-ncnn-streaming-zipformer-en-2023-02-13"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 1,
                    useGPU = useGPU,
                )
            }

            4 -> {
                val modelDir = "sherpa-ncnn-streaming-zipformer-fr-2023-04-14"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 1,
                    useGPU = useGPU,
                )
            }

            5 -> {
                val modelDir = "sherpa-ncnn-streaming-zipformer-zh-14M-2023-02-23"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 2,
                    useGPU = useGPU,
                )
            }

            6 -> {
                val modelDir = "sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/96"
                return ModelConfig(
                    encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                    encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                    decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                    decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                    joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                    joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                    tokens = "$modelDir/tokens.txt",
                    numThreads = 2,
                    useGPU = useGPU,
                )
            }
        }
        return null
    }
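Since every ModelConfig above points at seven files inside one model folder, a missing or misnamed file is an easy mistake when copying a model into the project. The plain-Java sketch below lists the paths used by type 1 and reports which of them are absent from a local assets directory. ModelFiles, type1Paths, and missing are hypothetical names, and checking the source tree on disk (rather than the packaged APK) is an assumption of this sketch.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical checker, not part of sherpa-ncnn.
final class ModelFiles {
    // Relative asset paths for model type 1, copied from getModelConfig.
    static List<String> type1Paths() {
        String d = "sherpa-ncnn-conv-emformer-transducer-2022-12-06";
        return Arrays.asList(
                d + "/encoder_jit_trace-pnnx.ncnn.int8.param",
                d + "/encoder_jit_trace-pnnx.ncnn.int8.bin",
                d + "/decoder_jit_trace-pnnx.ncnn.param",
                d + "/decoder_jit_trace-pnnx.ncnn.bin",
                d + "/joiner_jit_trace-pnnx.ncnn.int8.param",
                d + "/joiner_jit_trace-pnnx.ncnn.int8.bin",
                d + "/tokens.txt");
    }

    // Returns the relative paths that are not regular files under assetsDir.
    static List<String> missing(Path assetsDir, List<String> relativePaths) {
        List<String> result = new ArrayList<>();
        for (String p : relativePaths) {
            if (!Files.isRegularFile(assetsDir.resolve(p))) {
                result.add(p);
            }
        }
        return result;
    }
}
```

An empty list from `ModelFiles.missing(assetsDir, ModelFiles.type1Paths())` suggests the folder is complete; any returned entry names a file that still has to be copied.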


With all the steps above complete, everything we need from outside is ready. Victory is just ahead, haha. Next comes the call from my own MainActivity:

    package com.qimiaoren.aiemployee;

    import android.Manifest;
    import android.app.Activity;
    import android.app.AlertDialog;
    import android.content.DialogInterface;
    import android.content.Intent;
    import android.content.pm.PackageManager;
    import android.content.res.AssetManager;
    import android.media.AudioFormat;
    import android.media.AudioRecord;
    import android.media.MediaRecorder;
    import android.net.Uri;
    import android.os.Bundle;
    import android.provider.Settings;
    import android.util.Log;
    import android.view.View;
    import android.widget.Button;
    import android.widget.Toast;

    import androidx.annotation.NonNull;
    import androidx.core.app.ActivityCompat;
    import androidx.core.content.ContextCompat;

    import com.k2fsa.sherpa.ncnn.DecoderConfig;
    import com.k2fsa.sherpa.ncnn.FeatureExtractorConfig;
    import com.k2fsa.sherpa.ncnn.ModelConfig;
    import com.k2fsa.sherpa.ncnn.RecognizerConfig;
    import com.k2fsa.sherpa.ncnn.SherpaNcnn;
    import com.k2fsa.sherpa.ncnn.SherpaNcnnKt;

    import net.jaris.aiemployeee.R;

    import java.util.Arrays;

    public class MainActivity extends Activity {
        private static final String TAG = "AITag";
        private static final int MY_PERMISSIONS_REQUEST = 1;

        Button start;
        private AudioRecord audioRecord = null;
        private int audioSource = MediaRecorder.AudioSource.MIC;
        private int sampleRateInHz = 16000;
        private int channelConfig = AudioFormat.CHANNEL_IN_MONO;
        private int audioFormat = AudioFormat.ENCODING_PCM_16BIT;
        private boolean isRecording = false;
        boolean useGPU = true;
        private SherpaNcnn model;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);
            initView();
            checkPermission();
            initModel();
        }

        private void initView() {
            start = findViewById(R.id.start);
            start.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View view) {
                    Toast.makeText(MainActivity.this, "Start speaking", Toast.LENGTH_SHORT).show();
                    if (!isRecording) {
                        if (initMicrophone()) {
                            Log.d(TAG, "onClick: microphone initialized");
                            isRecording = true;
                            audioRecord.startRecording();
                            new Thread(new Runnable() {
                                @Override
                                public void run() {
                                    // Read the microphone in chunks of 0.1 s (1600 samples at 16 kHz).
                                    double interval = 0.1;
                                    int bufferSize = (int) (interval * sampleRateInHz);
                                    short[] buffer = new short[bufferSize];
                                    int silenceDuration = 0;
                                    while (isRecording) {
                                        int ret = audioRecord.read(buffer, 0, buffer.length);
                                        if (ret > 0) {
                                            // Sum of squares in a long so loud chunks cannot overflow.
                                            long energy = 0;
                                            int zeroCrossing = 0;
                                            for (int i = 0; i < ret; i++) {
                                                energy += (long) buffer[i] * buffer[i];
                                                // A zero crossing is a sign change between neighbouring samples.
                                                if (i > 0 && buffer[i] * buffer[i - 1] < 0) {
                                                    zeroCrossing++;
                                                }
                                            }
                                            if (energy < 100 && zeroCrossing < 3) {
                                                silenceDuration += bufferSize;
                                            } else {
                                                silenceDuration = 0;
                                            }
                                            // Skip chunks that are treated as a pause in speech.
                                            if (silenceDuration >= 500) {
                                                Log.d(TAG, "run: pause in speech");
                                                continue;
                                            }
                                            // Convert 16-bit PCM to floats in [-1, 1) for the model.
                                            float[] samples = new float[ret];
                                            for (int i = 0; i < ret; i++) {
                                                samples[i] = buffer[i] / 32768.0f;
                                            }
                                            model.acceptSamples(samples);
                                            while (model.isReady()) {
                                                model.decode();
                                            }
                                            boolean isEndpoint = model.isEndpoint();
                                            String text = model.getText();
                                            if (!"".equals(text)) {
                                                Log.d(TAG, "run: " + text);
                                            }
                                            if (isEndpoint) {
                                                Log.d(TAG, "isEndpoint: " + text);
                                                model.reset(false);
                                            }
                                        }
                                    }
                                }
                            }).start();
                        }
                    }
                }
            });
        }

        private void checkPermission() {
            if (ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.MODIFY_AUDIO_SETTINGS) != PackageManager.PERMISSION_GRANTED
                    || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED
                    || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.READ_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED
                    || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED
            ) {
                ActivityCompat.requestPermissions(MainActivity.this,
                        new String[]{
                                Manifest.permission.MODIFY_AUDIO_SETTINGS,
                                Manifest.permission.RECORD_AUDIO,
                                Manifest.permission.READ_EXTERNAL_STORAGE,
                                Manifest.permission.WRITE_EXTERNAL_STORAGE
                        },
                        MY_PERMISSIONS_REQUEST);
                Log.d(TAG, "checkPermission: requesting permissions");
            } else {
                Log.d(TAG, "checkPermission: permissions already granted");
            }
        }

        private void initModel() {
            FeatureExtractorConfig featConfig = SherpaNcnnKt.getFeatureExtractorConfig(16000.0f, 80);
            ModelConfig modelConfig = SherpaNcnnKt.getModelConfig(1, useGPU);
            DecoderConfig decoderConfig = SherpaNcnnKt.getDecoderConfig("greedy_search", 4);
            RecognizerConfig config = new RecognizerConfig(featConfig, modelConfig, decoderConfig,
                    true, 2.0f, 0.8f, 20.0f, "", 1.5f);
            AssetManager assetManager = getAssets();
            model = new SherpaNcnn(config, assetManager);
            Log.d(TAG, "model initialized");
        }

        private void permissionDialog(String title, String content) {
            AlertDialog alertDialog = new AlertDialog.Builder(this)
                    .setTitle(title)
                    .setMessage(content)
                    .setPositiveButton("OK", new DialogInterface.OnClickListener() {
                        @Override
                        public void onClick(DialogInterface dialog, int which) {
                            // Open this app's system settings page so the user can grant permissions.
                            Intent permissionIntent = new Intent();
                            permissionIntent.setAction(Settings.ACTION_APPLICATION_DETAILS_SETTINGS);
                            Uri uri = Uri.fromParts("package", getPackageName(), null);
                            permissionIntent.setData(uri);
                            startActivity(permissionIntent);
                        }
                    })
                    .create();
            alertDialog.show();
        }

        @Override
        public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {
            if (requestCode == MY_PERMISSIONS_REQUEST) {
                // grantResults can be empty if the request was interrupted, so check its length first.
                if (grantResults.length >= 4
                        && grantResults[0] == PackageManager.PERMISSION_GRANTED
                        && grantResults[1] == PackageManager.PERMISSION_GRANTED
                        && grantResults[2] == PackageManager.PERMISSION_GRANTED
                        && grantResults[3] == PackageManager.PERMISSION_GRANTED
                ) {
                    Log.d(TAG, "onRequestPermissionsResult: permissions granted");
                } else {
                    permissionDialog("Insufficient permissions", "Please make sure all permissions are granted first");
                }
            }
            super.onRequestPermissionsResult(requestCode, permissions, grantResults);
        }

        private boolean initMicrophone() {
            if (ActivityCompat.checkSelfPermission(this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {
                return false;
            }
            int numBytes = AudioRecord.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat);
            audioRecord = new AudioRecord(audioSource, sampleRateInHz, channelConfig, audioFormat, numBytes * 2);
            return true;
        }
    }


Note that some of the imports above come from "com.k2fsa.sherpa.ncnn".

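The core of the calling code is what runs in the new Thread after the start button is clicked. A while loop keeps reading audio data from the microphone, converts it, and passes it to the model's acceptSamples function to obtain the recognized text.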
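The conversion before acceptSamples amounts to two small pieces of arithmetic: sizing a 0.1 s chunk at 16 kHz and scaling 16-bit PCM samples to floats in [-1, 1). A standalone sketch of that arithmetic, runnable outside Android, is shown here; PcmUtil and its method names are hypothetical.

```java
// Hypothetical helper that isolates the arithmetic from the recording loop.
final class PcmUtil {
    // Number of samples in one chunk of the given duration.
    static int samplesPerChunk(double seconds, int sampleRateHz) {
        return (int) (seconds * sampleRateHz);
    }

    // Scales the first `count` 16-bit samples to floats in [-1.0, 1.0).
    static float[] toFloat(short[] pcm, int count) {
        float[] out = new float[count];
        for (int i = 0; i < count; i++) {
            out[i] = pcm[i] / 32768.0f;
        }
        return out;
    }
}
```

With the values used above, `PcmUtil.samplesPerChunk(0.1, 16000)` gives 1600 samples per read, and a sample of 16384 maps to 0.5f. Passing the count returned by the read call, rather than the buffer length, keeps a short read from feeding stale samples to the model.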

Inside the thread I wrote some logic of my own to detect pauses in speech; adapt it to your own needs.
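One possible way to structure pause detection is to measure energy and zero crossings per chunk and to accumulate silence in milliseconds rather than in samples. The sketch below is a hypothetical variant, not the code used in MainActivity: SilenceDetector, the mean-square energy threshold, the zero-crossing limit, and the pause length are all illustrative assumptions that would need tuning on real recordings.

```java
// Hypothetical pause detector; thresholds are placeholders, not tuned values.
final class SilenceDetector {
    private final int sampleRateHz;
    private final double energyThreshold; // mean-square amplitude below which a chunk counts as quiet
    private final int maxZeroCrossings;   // quiet chunks may have at most this many sign changes
    private final int pauseMs;            // accumulated silence that counts as a pause
    private int silentMs = 0;

    SilenceDetector(int sampleRateHz, double energyThreshold, int maxZeroCrossings, int pauseMs) {
        this.sampleRateHz = sampleRateHz;
        this.energyThreshold = energyThreshold;
        this.maxZeroCrossings = maxZeroCrossings;
        this.pauseMs = pauseMs;
    }

    // Average of squared samples; accumulated in a long to avoid overflow.
    static double meanSquare(short[] chunk, int count) {
        long sum = 0;
        for (int i = 0; i < count; i++) {
            sum += (long) chunk[i] * chunk[i];
        }
        return count == 0 ? 0.0 : (double) sum / count;
    }

    // Counts sign changes between neighbouring samples.
    static int zeroCrossings(short[] chunk, int count) {
        int n = 0;
        for (int i = 1; i < count; i++) {
            if ((chunk[i] >= 0) != (chunk[i - 1] >= 0)) {
                n++;
            }
        }
        return n;
    }

    // Feeds one chunk; returns true once the accumulated silence reaches pauseMs.
    boolean accept(short[] chunk, int count) {
        boolean quiet = meanSquare(chunk, count) < energyThreshold
                && zeroCrossings(chunk, count) <= maxZeroCrossings;
        if (quiet) {
            silentMs += count * 1000 / sampleRateHz;
        } else {
            silentMs = 0;
        }
        return silentMs >= pauseMs;
    }
}
```

With 1600-sample chunks at 16 kHz each quiet chunk adds 100 ms, so a detector built with a 500 ms pause length reports a pause on the fifth consecutive quiet chunk and starts over as soon as a louder chunk arrives.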

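Of the last two log statements in the thread, the first prints the text recognized in real time and the second prints the complete sentence returned after a pause. In most cases, using the text inside the isEndpoint check is enough.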
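To keep the two kinds of output apart, one option is to treat the text as a partial result until isEndpoint is true and only then hand it on as a finished sentence. The sketch below models this with a small hypothetical StreamingRecognizer interface that mirrors the SherpaNcnn methods called in MainActivity, so it can run without Android; UtteranceCollector is likewise an illustrative name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical interface mirroring the SherpaNcnn calls used in MainActivity.
interface StreamingRecognizer {
    void acceptSamples(float[] samples);
    boolean isReady();
    void decode();
    String getText();
    boolean isEndpoint();
    void reset(boolean recreate);
}

// Hypothetical helper that separates partial text from finished sentences.
final class UtteranceCollector {
    private final StreamingRecognizer recognizer;
    private final List<String> finished = new ArrayList<>();
    private String partial = "";

    UtteranceCollector(StreamingRecognizer recognizer) {
        this.recognizer = recognizer;
    }

    // Feeds one chunk, refreshes the partial text, and stores it as final at an endpoint.
    void feed(float[] samples) {
        recognizer.acceptSamples(samples);
        while (recognizer.isReady()) {
            recognizer.decode();
        }
        partial = recognizer.getText();
        if (recognizer.isEndpoint()) {
            if (!partial.isEmpty()) {
                finished.add(partial);
            }
            recognizer.reset(false);
            partial = "";
        }
    }

    String partialText() {
        return partial;
    }

    List<String> finishedSentences() {
        return finished;
    }
}
```

A UI could show `partialText()` while the user is still speaking and act only on entries added to `finishedSentences()`, which corresponds to using the text from the isEndpoint branch.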