Component version compatibility for a Hadoop cluster installation: Spark and Hadoop version mapping

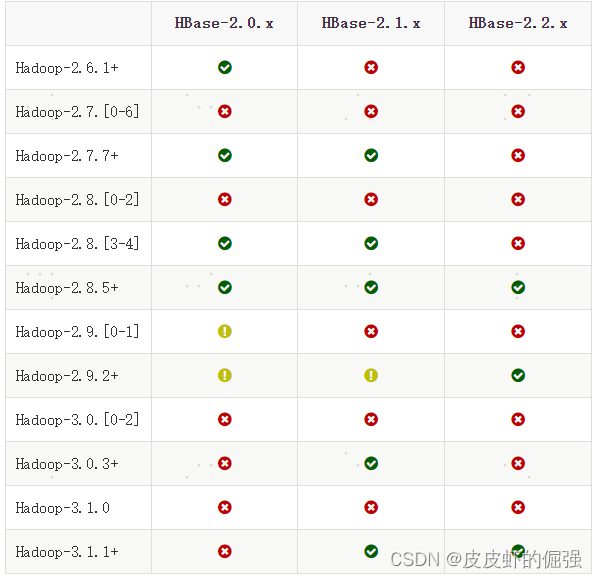

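Version compatibility between HBase and Hadoop

Image source: the official HBase reference guide, http://hbase.apache.org/book.html#hadoop

Version compatibility between Hive and Hadoop, and between Hive and Spark

The version information below comes from the pom.xml in the Hive source package (src.tar.gz):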

hive-3.1.2

<hadoop.version>3.1.0</hadoop.version>

<hbase.version>2.0.0-alpha4</hbase.version>

<spark.version>2.3.0</spark.version>

<scala.binary.version>2.11</scala.binary.version>

<scala.version>2.11.8</scala.version>

<zookeeper.version>3.4.6</zookeeper.version>

hive-2.3.6

<hadoop.version>2.7.2</hadoop.version>

<hbase.version>1.1.1</hbase.version>

<spark.version>2.0.0</spark.version>

<scala.binary.version>2.11</scala.binary.version>

<scala.version>2.11.8</scala.version>

<zookeeper.version>3.4.6</zookeeper.version>
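The property listings above can also be pulled out of a pom.xml programmatically rather than by reading the file by hand. The following is a minimal sketch, assuming the version entries sit directly under a `<properties>` element and have names ending in `.version`. The function name `read_version_properties` and the `SAMPLE_POM` string are illustrative only; the sample reuses the hive-3.1.2 values quoted above and is not the full Hive pom.xml.

```python
import xml.etree.ElementTree as ET


def read_version_properties(pom_text):
    """Return {property_name: value} for every *.version entry under <properties>."""
    root = ET.fromstring(pom_text)
    versions = {}
    for elem in root.iter():
        # Maven POMs are usually namespaced; drop the "{namespace}" prefix.
        tag = elem.tag.split("}", 1)[-1]
        if tag != "properties":
            continue
        for child in elem:
            name = child.tag.split("}", 1)[-1]
            if name.endswith(".version") and child.text:
                versions[name] = child.text.strip()
    return versions


# Hypothetical, trimmed-down POM built from the hive-3.1.2 values listed above.
SAMPLE_POM = """<project xmlns="http://maven.apache.org/POM/4.0.0">
  <properties>
    <hadoop.version>3.1.0</hadoop.version>
    <hbase.version>2.0.0-alpha4</hbase.version>
    <spark.version>2.3.0</spark.version>
    <scala.binary.version>2.11</scala.binary.version>
    <scala.version>2.11.8</scala.version>
    <zookeeper.version>3.4.6</zookeeper.version>
  </properties>
</project>"""

if __name__ == "__main__":
    for name, value in sorted(read_version_properties(SAMPLE_POM).items()):
        print(f"{name} = {value}")
```

To run it against a real source tree, read the file contents first (for example with `open("pom.xml", encoding="utf-8").read()`) and pass the text to the function.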

| Hive Version | Spark Version |
| --- | --- |
| 3.0.x | 2.3.0 |
| 2.3.x | 2.0.0 |
| 2.2.x | 1.6.0 |
| 2.1.x | 1.6.0 |
| 2.0.x | 1.5.0 |
| 1.2.x | 1.3.1 |
| 1.1.x | 1.2.0 |

apache-hive-1.2.2-src <spark.version>1.3.1</spark.version>

apache-hive-2.1.1-src <spark.version>1.6.0</spark.version>

apache-hive-2.3.3-src <spark.version>2.0.0</spark.version>

apache-hive-3.0.0-src <spark.version>2.3.0</spark.version>
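The Hive-to-Spark pairs listed above can be kept as a small lookup for use in install scripts. This is only a sketch: the dictionary copies the pairs from the list above, and the helper name `spark_for_hive` is hypothetical. It returns `None` for any Hive release line that the list does not cover, rather than guessing.

```python
# Hive release line -> Spark version that Hive was built against,
# copied from the Hive/Spark version list above.
HIVE_TO_SPARK = {
    "3.0": "2.3.0",
    "2.3": "2.0.0",
    "2.2": "1.6.0",
    "2.1": "1.6.0",
    "2.0": "1.5.0",
    "1.2": "1.3.1",
    "1.1": "1.2.0",
}


def spark_for_hive(hive_version):
    """Look up the Spark version for a Hive version such as '2.3.6'.

    Only the major.minor part is used; returns None if the release
    line is not in the table.
    """
    major_minor = ".".join(hive_version.split(".")[:2])
    return HIVE_TO_SPARK.get(major_minor)


if __name__ == "__main__":
    for hive in ("1.2.2", "2.1.1", "2.3.3", "3.0.0"):
        print(hive, "->", spark_for_hive(hive))
```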

A working combination reported on Stack Overflow:

spark 2.0.2 with hadoop 2.7.3 and hive 2.1

Reference: https://stackoverflow.com/questions/42281174/hive-2-1-1-on-spark-which-version-of-spark-should-i-use

A user in a QQ group reported this combination:

Hive 2.6 spark2.2.0

No compatibility problems have been found so far with the following versions:

apache-hive-3.0.0-bin

hadoop-3.0.3

spark-2.3.1-bin-hadoop2.7

Reference blog post:

https://blog.csdn.net/appleyuchi/article/details/81171785

Flink

The version information below comes from the pom.xml in the Flink source package:

flink-1.9.1

<hadoop.version>2.4.1</hadoop.version>

<scala.version>2.11.12</scala.version>

<scala.binary.version>2.11</scala.binary.version>

<zookeeper.version>3.4.10</zookeeper.version>

<hive.version>2.3.4</hive.version>

Reference:

https://blog.csdn.net/lucklydog123/article/details/109773865