- 1基础搜索算法篇---二分搜索_二分法查找次数怎么算

- 2Go语言操作mongodb_go mongo

- 3srp中阴影_srp shadow renderer feature

- 4平衡二叉树(AVL)【java实现+图解】_java平衡二叉树实现

- 5Rabbitmq取消预取机制配置,配置手动确认后仍然java.lang.IllegalStateException: Channel closed; cannot ack/nack的问题_rabbitmq java.lang.illegalstateexception: connecti

- 6Idea中使用Git的常用操作_git在idea中相关操作

- 7synopsys-SDC第六章——生成时钟_create generated clock

- 8记一次Spring Websocket后台服务器CPU占用率过高的问题排查过程_又服务器向应用推送cpu使用率

- 9Hadoop集群搭建教程(hadoop-3.1.3手把手教学)超详细!!!_hadoop313资料

- 10cmake———CXX_STANDARD is set to invalid value ‘17‘

【网安AIGC专题11.1】12 CODEIE用于NER和RE:顶刊OpenAI API调用、CodeX比chatgpt更好:提示工程设计+控制变量对比实验(格式一致性、模型忠实度、细粒度性能)

赞

踩

CODEIE: Large Code Generation Models are Better Few-Shot Information Extractors

- 写在最前面

- 汇报

- 补充

- 代码

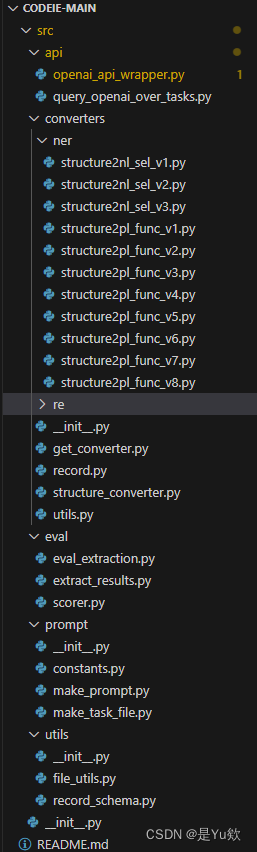

- 代码目录

- api

- openai_api_wrapper.py

- query_openai_over_tasks.py

- 分段解读 def run:记录任务的执行情况,保存任务的结果,以及对于失败任务的记录和保存

- def run_task:执行一个给定任务,与Codex进行交互,获取生成的代码,记录执行时间,并返回任务的结果

- def maintain_request_per_minute:控制每分钟的请求数以避免超出最大请求数

- def read_prompt:读取提示

- def load_cache:创建一个缓存以存储查询结果,以避免重复查询相同的输入

- def query_codex:向Codex(AI引擎)发出查询,以获取生成的代码

- def get_completed_code:从Codex的响应中提取生成的代码,并将其与任务的输入提示合并,以获得完整的生成代码

- def get_request_per_minute:根据已经发出的请求数和经过的时间来计算每分钟的请求速率,以便在维持请求速率时使用

- 主函数:(通用模版)从命令行传递参数来配置任务的执行,然后根据这些参数来执行任务

- converters

- eval

- prompt

- utils

写在最前面

这次该我汇报啦

许愿明天讲的顺利,问的都会

课堂讨论

讲+提问1个小时

但是在讨论的过程中,感觉逐步抽丝挖掘到了核心原理:

之前的理解:借助代码-LLM中的编码丰富结构化代码信息

最后的理解:如果能设置一个方法,让大模型能对自己输出的有所理解,那么效果会更好。这篇论文是通过代码结构和提示来实现这个的,理论上文字也可以

汇报

CODEIE:代码生成大模型能更好的进行少样本信息提取这项工作

接下来我将从这四个板块展开介绍

研究背景

这篇论文于2023年5月发布在arXiv,随后9月发表在ACL-NLP顶刊。其作者来自复旦大学和华师大学。

命名实体识别(NER)和关系抽取(RE)

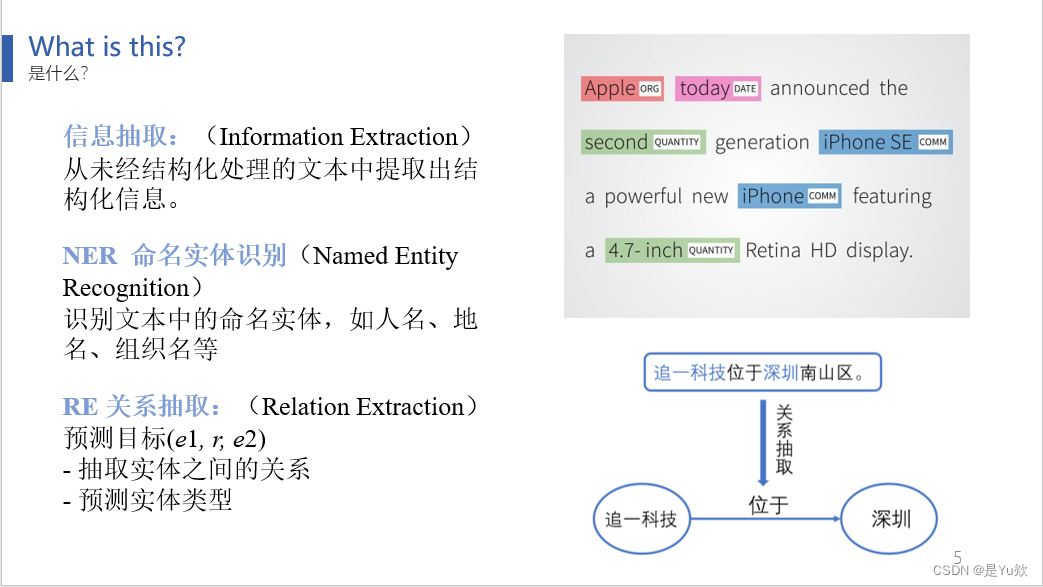

首先,让我们了解一些自然语言处理(NLP)的背景知识。

信息抽取的目标是从未经结构化处理的文本中提取出结构化信息。这个领域涵盖了各种任务,包括命名实体识别(NER)和关系抽取(RE)。

NER用于识别文本中的命名实体,例如人名、地名和组织名,

而RE则用于提取文本中实体之间的关系信息。

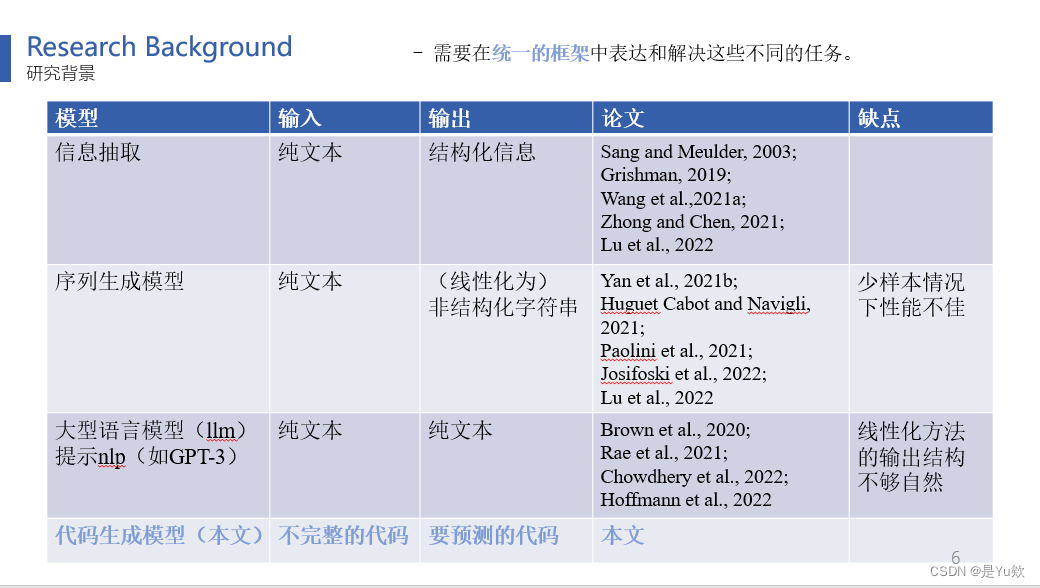

相关工作

- 为了在一个统一框架内处理这些不同的任务,近期的研究建议将输出结构线性化为非结构化字符串,并采用

序列生成模型来解决信息抽取(IE)任务。

尽管这种线性化方法在具有充足训练数据的情况下取得了良好的成果,但在少样本情况下性能不佳。 - 鉴于

大型语言模型具有强大的少样本学习能力,本论文旨在充分利用它们来解决少样本IE任务,特别是NER和RE任务。

通常,对于文本分类等NLP任务,以前的工作将任务重新构建为文本到文本生成的形式,并利用自然语言-llm(如GPT-3)来生成答案。 - 然而,由于IE任务具有复杂的内部结构,以往的线性化方法的输出结构通常显得不够自然,导致了预训练时的输出格式与推理时的输出格式不匹配。

因此,在使用这些扁平化方法时,通常需要复杂的解码策略来将输出后处理为有效的结构。

作者动机

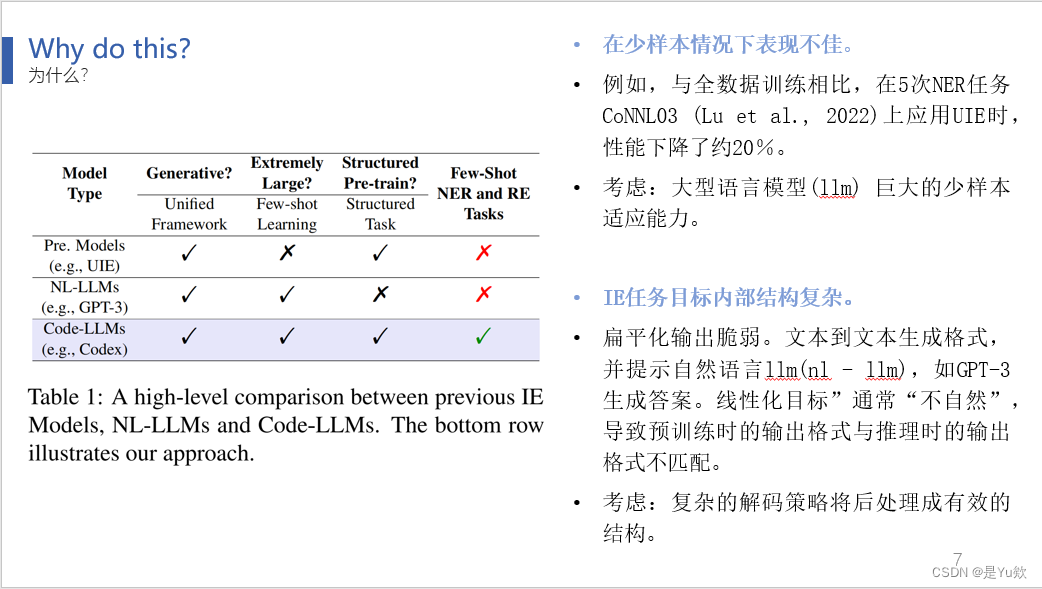

总结一下为什么作者选择了这一方法。

表1总结了用于IE任务的中等规模模型、NL-LLM和Code-LLM之间的高层次差异。

此前的工作未能在一个统一的框架下充分利用大型模型进行少样本学习,特别是在处理结构化任务方面。这篇论文的模型成功弥补了这两个限制。

为什么这两个限制会对结果产生重大影响呢?

首先,大型模型具有适应少样本数据的能力

其次,由于线性输出不够自然,通常需要更复杂的解码策略。

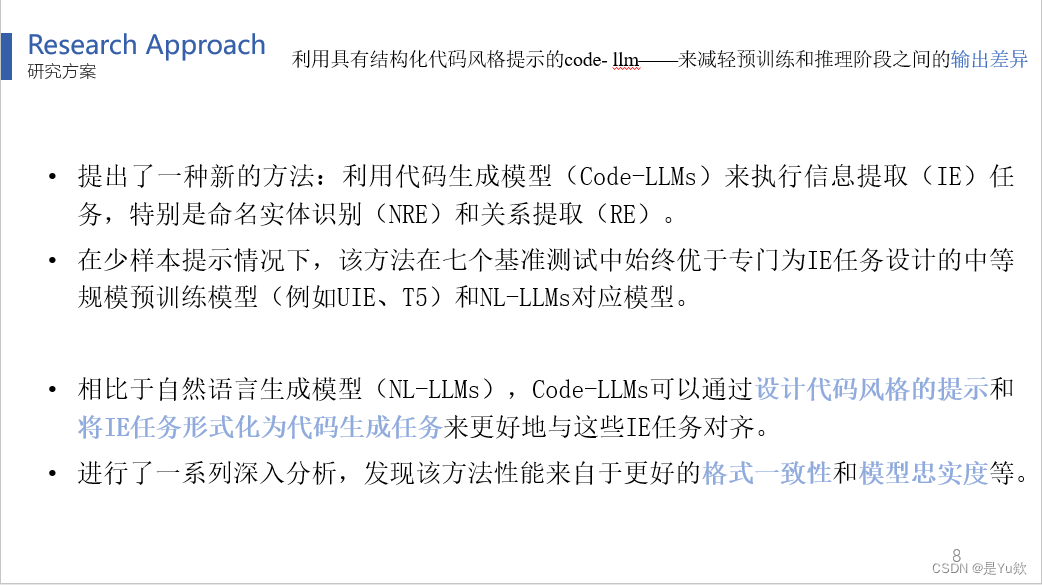

研究方案

因此,这篇论文提出了一种全新的思路,通过使用带有结构化代码风格提示的Code-LLM,来弥合预训练和推理阶段之间的输出差异,从而实现了IE任务的统一框架并获得更出色的结果。

这一方法的核心思想是将这两个IE任务框架化为代码生成任务,并借助代码-LLM中的编码丰富结构化代码信息,从而使这些IE任务有更好的结果。

实例

这是一个示例:

通过代码风格提示和Python字典的键,如“文本”和“类型”,可以组合它们成一个与NER(命名实体识别)示例等价的Python函数。

研究方案

下面我们对具体方案展开介绍。

方案预览

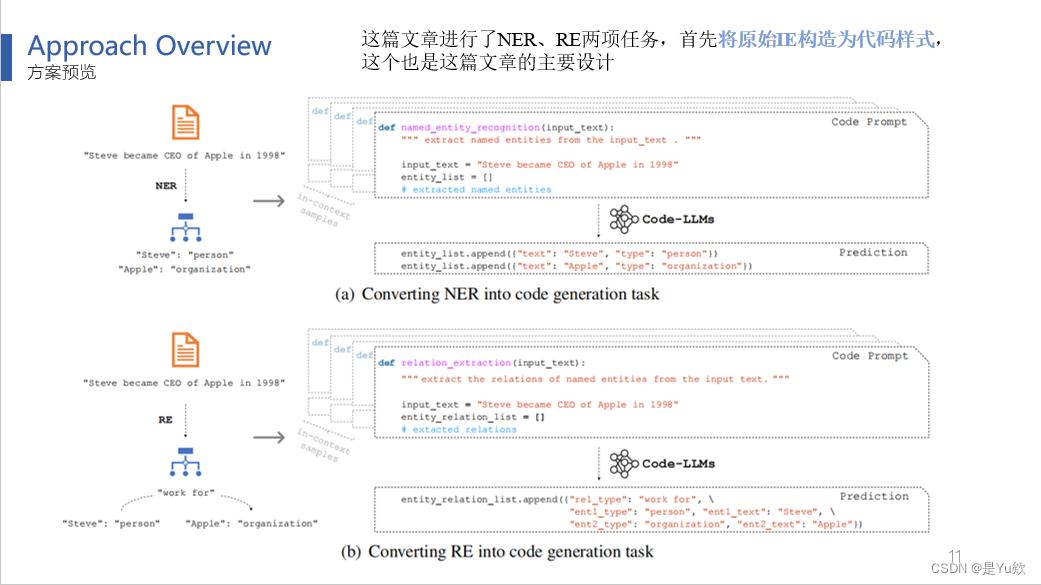

论文涵盖了NER和RE两项任务,

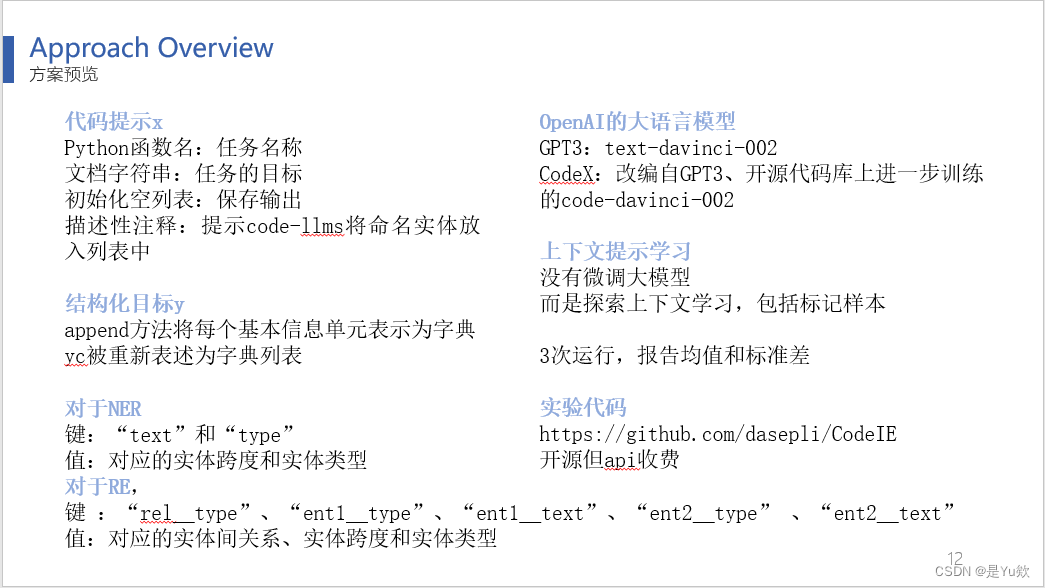

首先将原始IE任务转化为代码样式,其中(换PPT)Python函数名称表示任务,文档字符串说明任务目标,初始化空列表用于保存输出,描述性注释提供了提示以将命名实体放入列表中。(换PPT)这些元素被组合为“代码提示x”。

“结构化目标y”,将每个基本信息单元(NER的一对实体和RE的三元组)表示为Python字典,yc为字典列表。

对于NER任务,键包括“text”和“type”,值分别是实体跨度和实体类型。

对于RE任务,键包括实体类型和关系类型。然后,这些输入被传递给代码生成大模型,并得到输出。

左侧显示的文字是对前面图表的简明描述。

此外,GPT-3和CodeX都是OpenAI的模型,而CodeX是在GPT-3的基础上进行改进的,这两者有着相同的起源。

由于大型模型API的黑盒特性,无法对这些大型模型进行微调,因此这篇论文致力于探索上下文学习的方法,包括使用标记样本。

上下文提示学习是如何具体体现的呢?在这篇论文中,任务被转化为代码表示,然后将它们连接在一起,构建了一个上下文演示,其中包括x1y1x2y2直到xnyn,最后有一个准备预测的xc。这个上下文被输入到模型中,生成输出yc,其格式与y1y2yn相似,通常保持了Python语法,容易还原成原始结构。

鉴于少样本训练容易受到高方差的影响,该论文为每个实验采用不同的随机种子运行了三次,并报告了度量指标的均值和标准差。

这篇论文已经开源了这项研究的代码,如果有兴趣的朋友可以前去查看。

实验

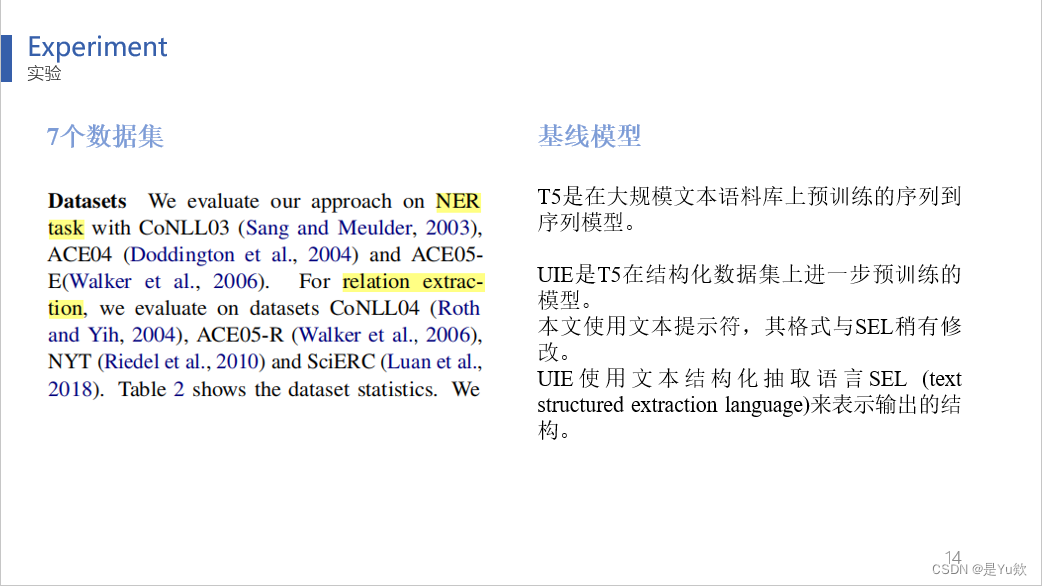

接下来,我们对这篇文章的实验部分进行梳理

数据集和基线模型

在实验结果部分,论文涵盖了七个NLP任务的数据集,采用了中等规模的预训练模型作为基线

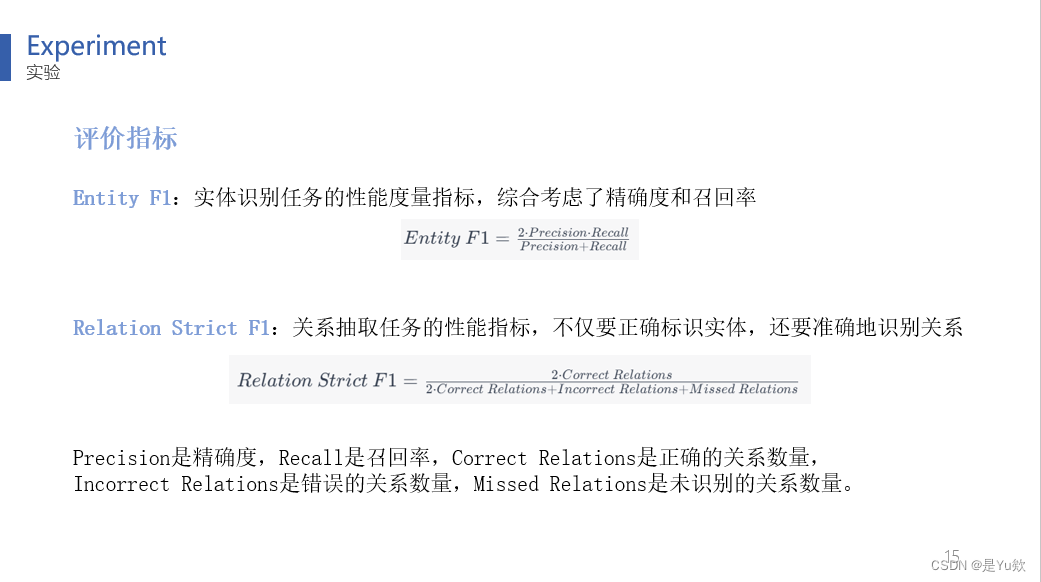

评价指标

评价指标是常规的NER和RE任务性能度量指标。

实体的偏移量和实体类型与黄金实体匹配,那么实体跨度预测就是正确的。

如果关系类型正确且其实体对应的偏移量和类型正确,则关系预测是正确的。

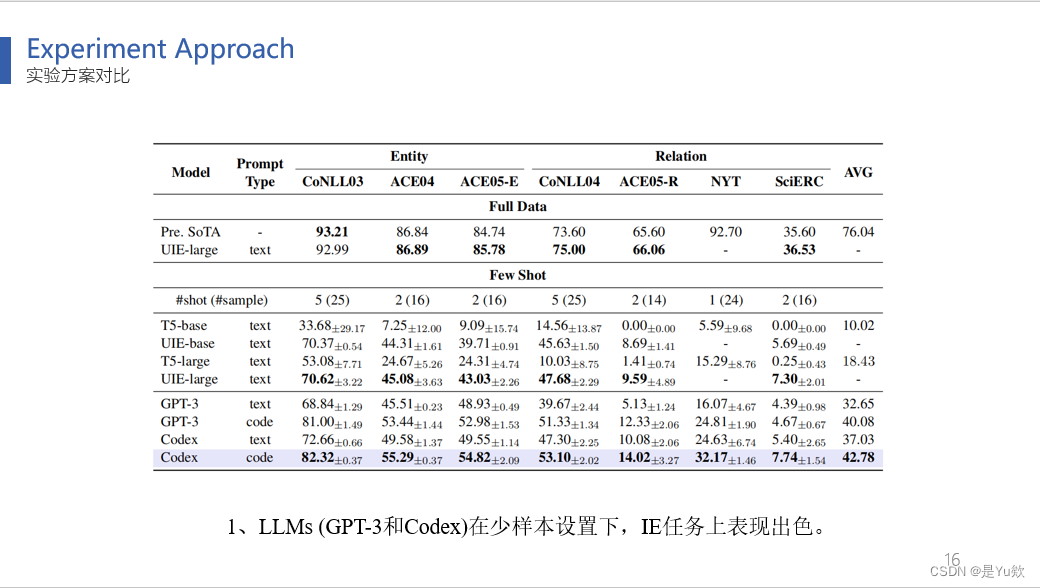

实验方案对比

结果表明:

1、(表3)LLMs (GPT-3和Codex)在少样本设置下,比中等大小的模型(T5和UIE)实现了优越的性能。

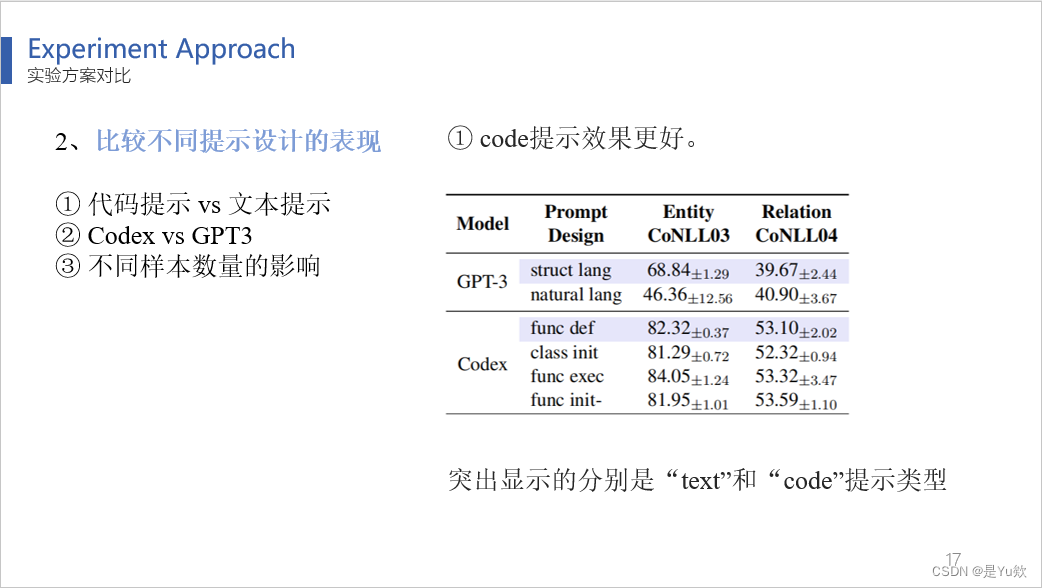

2、比较不同提示设计的效果

(表4)突出显示的分别是“text”和“code”提示类型,code提示效果更好。

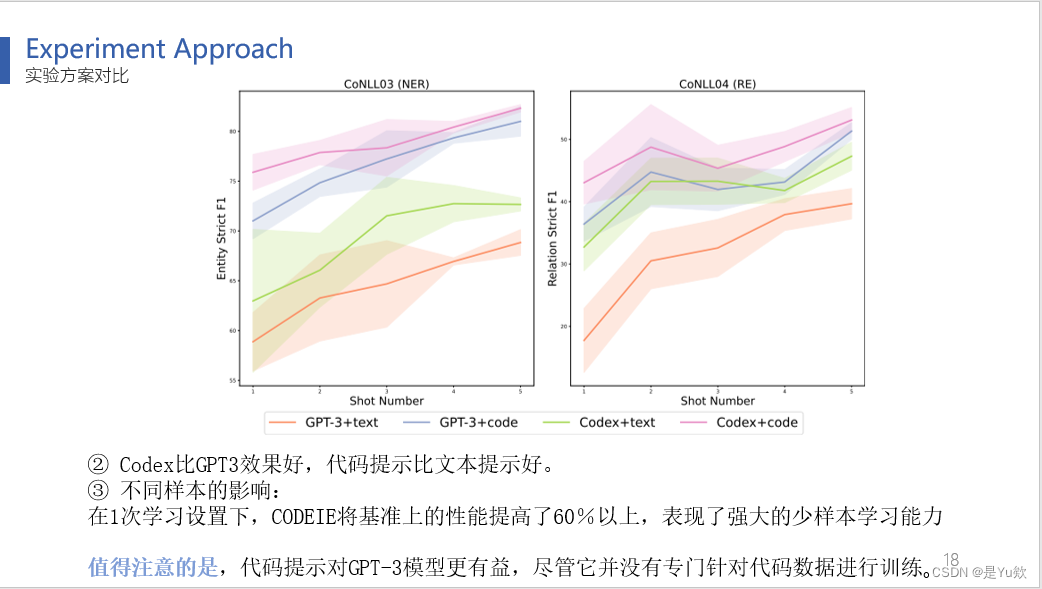

(图3)Codex胜过GPT-3,代码提示优于文本提示。Codex比GPT3效果好,代码提示比文本提示好。

并且在1次学习设置下,CODEIE将基准上的性能提高了60%以上,表现了强大的少样本学习能力

值得注意的是,代码提示对GPT-3更有益,尽管它并没有专门针对代码数据进行训练。

3、控制变量对比实验

作者进行了一些控制变量的对比实验,以探讨导致模型性能优越的因素。

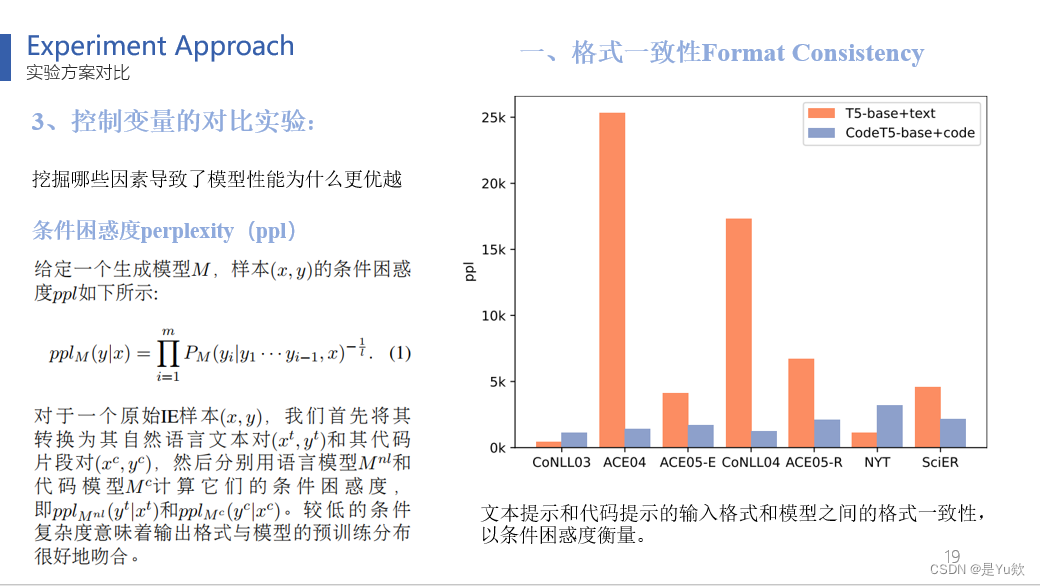

第一个是格式一致性Format Consistency

介绍下条件困惑度,这是一种衡量生成的文本在给定条件下生成文本的可预测性,也就是在给定上下文前缀的条件下,模型生成下一个字符的概率的准确性的度量。

较低的条件困惑度值表示生成的文本更符合所期望的条件。

图4,在7个数据集上,文本提示和代码提示的输入格式和模型之间的条件困惑度。

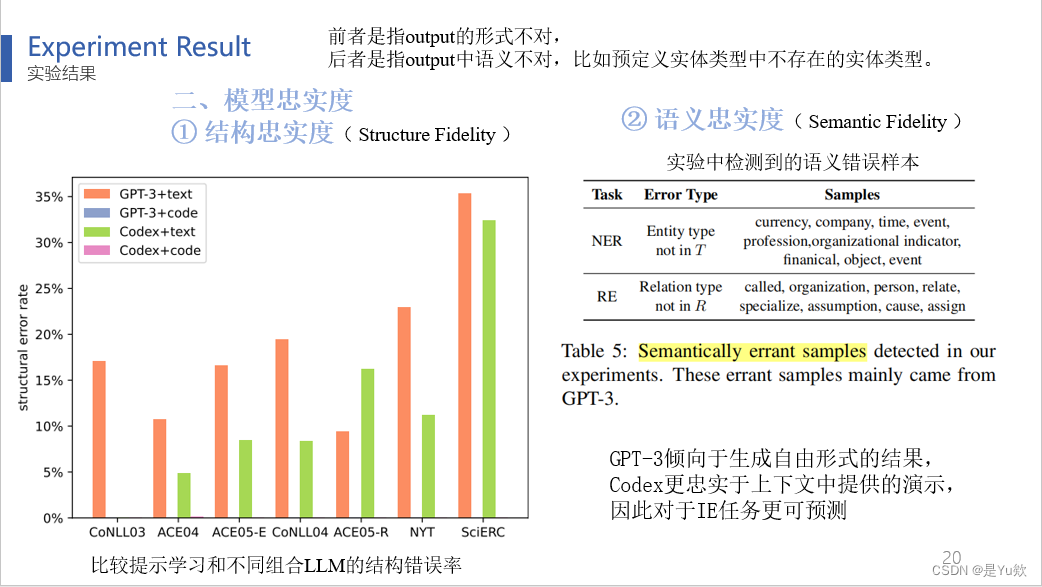

第二个是模型忠实度

分为两个指标:

1、一个是结构忠实度Structure Fidelity,顾名思义是生成文本的结构

图5:比较提示学习和不同组合LLM的结构错误率,output的形式不对

2、一个是语义忠实度Semantic Fidelity,生成文本的语义忠诚度

表5:实验中检测到的语义错误样本,output中语义不对,比如预定义实体类型中不存在的实体类型。

结果表明,GPT-3倾向于生成自由形式的结果,Codex更忠实于上下文中提供的演示,因此对于IE任务更可预测

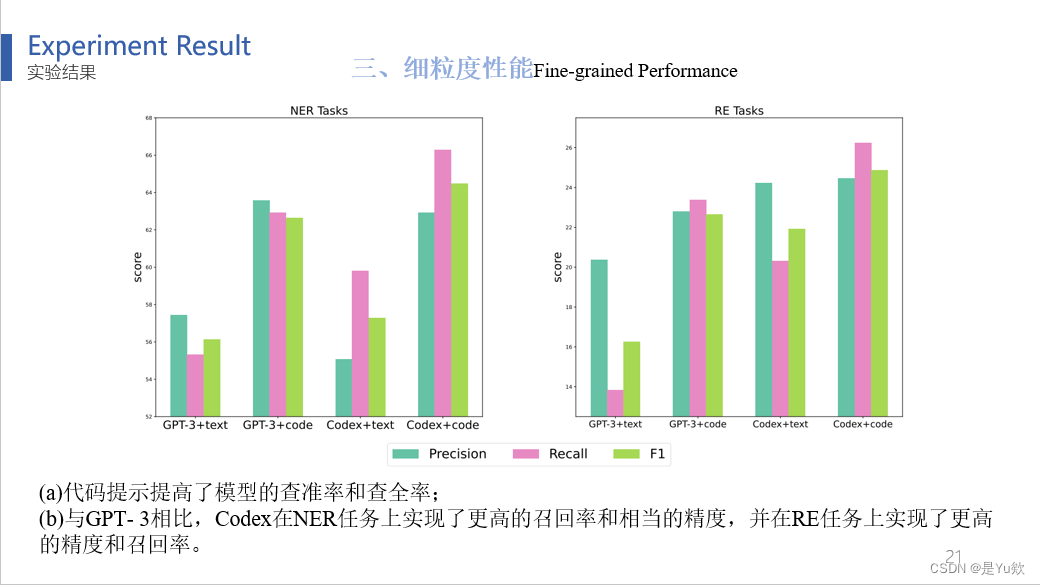

第三个,细粒度性能Fine-grained Performance

结果表明(a)代码提示提高了模型的查准率和查全率;

(b)与GPT- 3相比,Codex在NER任务上实现了更高的召回率和相当的精度,并在RE任务上实现了更高的精度和召回率。

研究总结

最后对这篇论文进行总结。

这篇论文提出的方法相对于其他顶刊论文来说,更加简单有效。它通过领域迁移,将文本生成转化为代码生成,设计上下文提示学习以替代仅提供API的大型模型微调。

未来的工作可考虑:

考虑设计更良好的代码格式提示。

目前是在黑盒模型GPT3和Codex上进行实验,之后可以在开源模型上进一步微调。

以及,在非英文数据集(如中文数据集)上探索本文模型的实用性。

这就是《CODEIE: Large Code Generation Models are Better Few-Shot Information Extractors》的主要内容和关键观点。感谢大家的聆听。

补充

条件困惑度

条件复杂度是一种用于评估模型在给定上下文下生成下一个标记的难度和质量的度量,

模型的目标是尽可能减少条件复杂度,以获得更高质量的生成结果。

语言模型的条件复杂度和代码模型的条件复杂度通常都基于困惑度(Perplexity)来计算,但有一些细微的差异,具体取决于模型的类型和应用领域。

1. 语言模型的条件复杂度:

语言模型的条件复杂度用于评估模型在给定上下文下生成下一个单词的质量。它通常采用以下方式计算:

- 给定一组文本数据(通常是测试集),将每个句子划分为多个标记(例如,单词或字符)。

- 模型接受一个前缀文本(通常是句子的一部分)作为输入,并尝试生成下一个标记。

- 通过将模型生成的标记与实际下一个标记进行比较,计算困惑度。困惑度通常使用交叉熵损失来计算。

数学表达式如下:

P e r p l e x i t y = exp ( − 1 N ∑ i = 1 N log P ( w i ∣ w 1 , w 2 , … , w i − 1 ) ) Perplexity = \exp\left(-\frac{1}{N} \sum_{i=1}^{N} \log P(w_i | w_1, w_2, \ldots, w_{i-1})\right) Perplexity=exp(−N1i=1∑NlogP(wi∣w1,w2,…,wi−1))

其中:

- P ( w i ∣ w 1 , w 2 , … , w i − 1 ) P(w_i | w_1, w_2, \ldots, w_{i-1}) P(wi∣w1,w2,…,wi−1)表示在给定前缀 w 1 , w 2 , … , w i − 1 w_1, w_2, \ldots, w_{i-1} w1,w2,…,wi−1的条件下,模型生成标记 w i w_i wi的概率。

- N N N表示测试集中的标记总数。

2. 代码模型的条件复杂度:

对于代码生成模型,条件复杂度的计算方式与语言模型类似,但有一些不同之处:

- 给定一组源代码或代码片段,将其分解为标记(例如,编程语言中的令牌或代码块)。

- 模型接受前缀代码或上下文,并尝试生成下一个代码标记。

- 使用交叉熵或其他损失函数计算条件复杂度,以评估模型在给定上下文下生成下一个代码标记的质量。

计算方式在代码模型中的具体实施可能因任务和模型架构而异,但基本原理与语言模型相似。

代码

https://github.com/artpli/CodeIE

我们的代码主要是从 UIE 和 CoCoGen 代码仓库修改而来的。

我们更新了源文件的初始版本。有关数据处理和代码的更多信息将在后续更新中提供。通知:

一个不太好的消息是,Codex 模型现在已被 OpenAI 弃用,这将对复制本文产生重大影响。一些可能的解决方案包括

申请 OpenAI的研究人员访问计划或访问 Azure OpenAI 服务上的 Codex。

由于我们使用的是 OpenAI 的闭源API,因此我们不知道它们背后的技术细节,例如使用的特定预训练语料库。因此,在评估我们的论文时可能存在潜在的数据污染问题。如果可能的话,我们会在更多的开源模型上评估我们的方法。

Codex被弃用了,但OpenAI建议所有用户从Codex切换到GPT-3.5 Turbo,它既可以完成编码任务,又可以补充灵活的自然语言功能。

代码目录

api

openai_api_wrapper.py

import os

from typing import Dict, Any

import openai

from src.prompt.constants import END, END_LINE

openai.api_key = os.getenv("OPENAI_API_KEY")

class OpenaiAPIWrapper:

@staticmethod

def call(prompt: str, max_tokens: int, engine: str) -> dict:

response = openai.Completion.create(

engine=engine,

prompt=prompt,

temperature=0.0,

max_tokens=max_tokens,

top_p=1,

frequency_penalty=0,

presence_penalty=0,

stop=[END, END_LINE],

# logprobs=3,

best_of=1

)

return response

@staticmethod

def parse_response(response) -> Dict[str, Any]:

text = response["choices"][0]["text"]

return text

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

这段代码是一个Python脚本,它包含了一个名为OpenaiAPIWrapper的类,该类提供了与OpenAI的GPT-3 API交互的功能。这个类的主要目的是通过给定的提示(prompt)来请求GPT-3生成文本,并解析生成的文本。

具体来说,这段代码的功能如下:

-

导入必要的模块和库,包括

os、openai,以及一些类型提示(typing模块)和其他自定义模块。 -

设置OpenAI API密钥,它是在环境变量中查找的,这个密钥用于身份验证并授权访问OpenAI的GPT-3服务。

-

定义了一个名为

OpenaiAPIWrapper的类,该类包含两静态方法。-

call方法接受三个参数:prompt(提示文本,用于生成文本的输入)、max_tokens(生成的文本的最大令牌数)、engine(指定GPT-3的引擎)。它使用这些参数通过OpenAI API发送请求,并返回生成的文本作为响应。 -

parse_response方法接受一个响应对象,从中提取生成的文本,并返回它作为字符串。

-

这个代码段的主要目的是:为了通过OpenAI的API与GPT-3交互,以便生成自然语言文本,然后将生成的文本提取出来以供后续处理或显示。

query_openai_over_tasks.py

给定一个提示文件(prompt file)和一个任务文件(task file),任务文件包含以下字段:

- input_prompt:用于提示Codex的代码。

- reference_code:期望的完成代码。

- reference_graph:期望的图形(可能是与代码相关的图形)。

对于每个input_prompt,运行Codex的推断,并将以下字段添加到输出文件:

- generated_code:生成的代码。

- generated_graph:生成的图形。

文件可以包含其他元数据,但上述字段是必需的。

"""

Given a prompt file and path to a task file with the following fields:

1. input_prompt: the code used to prompt codex

2. reference_code: expected completed code

3. reference_graph: expected graph

Runs inference over codex for each input_prompt, and adds the following fields to the output file:

4. generated_code: generated code

5. generated_graph: generated graph

The file can contain other metadata, but the fields above are required.

"""

import os

import sys

sys.path.append(os.getcwd())

from datetime import datetime

import shutil

import time

import openai

import pandas as pd

from tqdm import tqdm

import logging

import os

import pickle

from src.converters.structure_converter import StructureConverter

from src.converters.get_converter import ConverterFactory

from openai_api_wrapper import OpenaiAPIWrapper

from src.prompt.constants import END

from src.utils.file_utils import load_yaml,load_schema

logging.basicConfig(level=logging.INFO)

def run(task_file_path: str,

num_tasks: int,

start_idx: int,

output_file_path: str,

prompt_path: str,

keep_writing_output: bool,

engine: str,

max_tokens:int,

max_requests_per_min: int,

schema_path:str,

map_config_path:str,

start_cut_num:int):

tasks = pd.read_json(task_file_path, orient='records', lines=True)

converter = ConverterFactory.get_converter(args.job_type,schema_folder=schema_path, map_config_path=map_config_path)

if num_tasks != -1:

tasks = tasks.iloc[start_idx: start_idx+num_tasks]

fixed_prompt_text = read_prompt(prompt_path)

results = []

cache = load_cache(output_file_path)

num_requests = 0

time_begin = time.time()

failed_list = []

max_failed_time = 10

max_failed_taskes = 10

for task_idx, task in tqdm(tasks.iterrows(), total=len(tasks)):

is_success = False

tmp_failed_time = 0

while is_success is False and tmp_failed_time < max_failed_time:

cut_prompt_examples_list = [None, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

cut_prompt_examples_list = cut_prompt_examples_list[start_cut_num:]

for cut_prompt_examples in cut_prompt_examples_list:

try:

num_requests += 1

request_per_minute = maintain_request_per_minute(

num_requests=num_requests, time_begin=time_begin,

max_requests_per_min=max_requests_per_min, task_idx=task_idx)

logging.info("\n")

logging.info(

f"Task {task_idx} > request/minute = {request_per_minute:.2f}")

task_results = run_task(task=task, fixed_prompt_text=fixed_prompt_text,

cache=cache, converter=converter,

cut_prompt_examples=cut_prompt_examples, task_idx=task_idx,

engine=engine, max_tokens=max_tokens)

task_results['id'] = task_idx

results.append(task_results)

is_success = True

break

except openai.error.InvalidRequestError as e:

logging.info(

f"InvalidRequestError: {e}, trying with shorter prompt (cut_prompt_examples={cut_prompt_examples + 1 if cut_prompt_examples is not None else 1})")

# sleep for a bit to further avoid rate limit exceeded exceptions

if cut_prompt_examples != cut_prompt_examples_list[-1]:

time.sleep(5)

continue

else:

tmp_failed_time = max_failed_time

logging.info(f"Failed too many times: {tmp_failed_time}")

except Exception as e: # something else went wrong

logging.info(f"Task {task_idx} failed: {e}")

tmp_failed_time += 1

time.sleep(5 * tmp_failed_time)

logging.info(f"Restart task {task_idx}")

break

if is_success and keep_writing_output:

pd.DataFrame(results).to_json(

output_file_path, orient='records', lines=True)

if is_success == False:

failed_list.append(task_idx)

logging.info(f"Task {task_idx} failed {max_failed_time} times, skipped and recorded.")

if failed_list != []:

print ("failed list:\n", failed_list)

if len(failed_list) > max_failed_taskes:

print ("too many failed taskes. exit().")

exit(0)

print(

f"Ran {len(results)} out of {len(tasks)} tasks ({len(results) / len(tasks):.2%})")

pd.DataFrame(results).to_json(

output_file_path, orient='records', lines=True)

if failed_list != []:

print ("failed list:\n", failed_list)

output_path = output_file_path.rstrip('.jsonl') + '_failed_list.pkl'

with open(output_path,"w") as fout:

pickle.dump(failed_list,fout)

print ("failed list saved into: ", output_path)

def run_task(task: dict, fixed_prompt_text: str, cache: dict,

converter: StructureConverter, task_idx: int, engine: str,

max_tokens: int, cut_prompt_examples: int = None) -> dict:

"""Runs the task, and returns the results.

Args:

task (dict): The task input

fixed_prompt_text (str): Used for cases where the input prompt is fixed

cache (dict): cache of previous results

converter (GraphPythonConverter): A graph-python converter to parse results

cut_prompt_examples (int, optional): If provided, the first `cut_prompt_examples` examples are

deleted. Prevents 4096 errors. Defaults to None.

Returns:

dict: A dictionary with the results.

"""

start_time = time.time()

prompt_text = fixed_prompt_text if fixed_prompt_text is not None else task['prompt']

if cut_prompt_examples is not None:

prompt_text_parts = prompt_text.split(END)

prompt_text = END.join(prompt_text_parts[cut_prompt_examples:])

if task['input_prompt'] in cache:

logging.info(

f"Task {task_idx} > Using cached result for {task['input_prompt']}")

codex_response = cache[task['input_prompt']]["codex_response"]

else:

codex_response = query_codex(task, prompt_text, engine, max_tokens=max_tokens)

completed_code = get_completed_code(task, codex_response)

task_results = {k: v for (k, v) in task.items()}

task_results["codex_response"] = codex_response

task_results["generated_code"] = completed_code

task_results["elapsed_time"] = time.time() - start_time

return task_results

def maintain_request_per_minute(num_requests: int, time_begin: float, max_requests_per_min: int, task_idx: int) -> float:

request_per_minute = get_request_per_minute(num_requests, time_begin)

logging.info("\n")

while request_per_minute > max_requests_per_min:

logging.info(

f"Task {task_idx} > Sleeping! (Requests/minute = {request_per_minute:.2f} > {max_requests_per_min:.2f})")

time.sleep(1)

request_per_minute = get_request_per_minute(

num_requests, time_begin)

return request_per_minute

def read_prompt(prompt_path):

if prompt_path is None:

return None

with open(prompt_path, "r") as f:

prompt = f.read()

return prompt

def load_cache(output_file_path: str):

"""We don't want to query codex repeatedly for the same input. If an output file exists, this

function creates a "cache" of the results.

The cache is implemented as a hashmap keyed by `input_prompt`, and maps to the

entire output entry

Args:

output_file_path (str): _description_

"""

if not os.path.exists(output_file_path):

return {}

else:

# make a backup of the file already there

shutil.copyfile(output_file_path, output_file_path + "_" + datetime.now().strftime("%Y%m%d_%H%M%S"))

shutil.copy(output_file_path, output_file_path + ".bak")

cache_data = pd.read_json(

output_file_path, orient='records', lines=True)

cache = {row['input_prompt']: row.to_dict()

for _, row in cache_data.iterrows()}

return cache

def query_codex(task: dict, prompt_text: str, engine: str, max_tokens: int):

prompt = f"{prompt_text} {task['input_prompt']}"

response = OpenaiAPIWrapper.call(

prompt=prompt, max_tokens=max_tokens, engine=engine)

return response

def get_completed_code(task: dict, codex_response: dict) -> str:

completed_code = OpenaiAPIWrapper.parse_response(codex_response)

all_code = f"{task['input_prompt']}{completed_code}"

# NOTE: space is already taken care of, no need to add it again, otherwise indentation will be off

return all_code

def get_request_per_minute(num_request: int, begin_time: float) -> float:

elapsed_time = time.time() - begin_time

request_per_minute = (num_request / elapsed_time) * 60

return request_per_minute

if __name__ == "__main__":

import argparse

parser = argparse.ArgumentParser()

parser.add_argument("--task_file_path", type=str, required=True)

parser.add_argument("--num_tasks", type=int, required=True)

parser.add_argument("--start_idx", type=int, required=True)

parser.add_argument("--output_file_path", type=str, required=True)

parser.add_argument("--prompt_path", type=str,

required=False, default=None)

parser.add_argument("--job_type", type=str, required=True,

choices=ConverterFactory.supported_converters)

parser.add_argument("--keep_writing_output",

action="store_true", default=True)

parser.add_argument("--engine", type=str, required=True)

parser.add_argument("--max_requests_per_min", type=int, default=10)

parser.add_argument("--max_tokens", type=int, default=280)

parser.add_argument("--schema_path", type=str, required=True)

parser.add_argument("--map_config_path", type=str, required=True)

parser.add_argument("--start_cut_num", type=int, default=0)

args = parser.parse_args()

run(task_file_path=args.task_file_path, num_tasks=args.num_tasks,start_idx=args.start_idx,

output_file_path=args.output_file_path, prompt_path=args.prompt_path,

keep_writing_output=args.keep_writing_output, engine=args.engine,

max_requests_per_min=args.max_requests_per_min,

max_tokens=args.max_tokens,schema_path=args.schema_path,

map_config_path=args.map_config_path,start_cut_num=args.start_cut_num)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

- 228

- 229

- 230

- 231

- 232

- 233

- 234

- 235

- 236

- 237

- 238

- 239

- 240

- 241

- 242

- 243

- 244

- 245

- 246

- 247

- 248

- 249

- 250

- 251

- 252

- 253

- 254

- 255

- 256

- 257

- 258

- 259

- 260

- 261

- 262

- 263

- 264

- 265

- 266

- 267

分段解读 def run:记录任务的执行情况,保存任务的结果,以及对于失败任务的记录和保存

def run(task_file_path: str,

num_tasks: int,

start_idx: int,

output_file_path: str,

prompt_path: str,

keep_writing_output: bool,

engine: str,

max_tokens:int,

max_requests_per_min: int,

schema_path:str,

map_config_path:str,

start_cut_num:int):

tasks = pd.read_json(task_file_path, orient='records', lines=True)

converter = ConverterFactory.get_converter(args.job_type,schema_folder=schema_path, map_config_path=map_config_path)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

这段代码定义了一个名为run的Python函数,该函数接受多个参数,并执行一些任务。以下是每个参数的解释:

task_file_path(str):任务文件的路径,包含任务信息。num_tasks(int):任务的数量。start_idx(int):任务的起始索引。output_file_path(str):输出文件的路径,用于将结果写入。prompt_path(str):提示文件的路径,可能包含用于代码生成的输入提示。keep_writing_output(bool):一个布尔值,指示是否保持写入输出。可能用于控制写入的方式。engine(str):引擎名称,用于执行任务,可能与某种代码生成引擎相关。max_tokens(int):最大令牌数,可能用于限制生成的代码的长度。max_requests_per_min(int):每分钟的最大请求数。schema_path(str):模式文件的路径,可能包含任务的数据模式。map_config_path(str):映射配置文件的路径,用于数据转换或映射。start_cut_num(int):一个整数,可能用于指示某种切割操作的起始数量。

在函数内部,它首先读取了一个任务文件并将其存储在名为tasks的DataFrame中。然后,它使用ConverterFactory类中的get_converter方法来创建一个converter对象,该对象用于根据作业类型(args.job_type)执行某些转换任务,并使用给定的模式文件和映射配置文件。

if num_tasks != -1:

tasks = tasks.iloc[start_idx: start_idx+num_tasks]

fixed_prompt_text = read_prompt(prompt_path)

results = []

cache = load_cache(output_file_path)

num_requests = 0

time_begin = time.time()

failed_list = []

max_failed_time = 10

max_failed_taskes = 10

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

在给定的代码段中,如果num_tasks参数的值不等于-1,那么它会对任务进行切片操作,保留从start_idx到start_idx+num_tasks的子集。

然后,代码从一个名为prompt_path的文件中读取提示文本(这个提示文本包含用于代码生成的输入提示),并将其存储在fixed_prompt_text变量中。

接下来,代码初始化了一些变量,包括:

results:用于存储任务的结果的空列表。cache:通过加载一个输出文件来初始化的,这个文件用于缓存结果。num_requests:用于跟踪已经发出的请求数量的变量。time_begin:用于记录时间的变量,可能用于计算运行时间。

此外,还定义了以下变量:

failed_list:用于存储失败任务的列表。max_failed_time:最大失败时间,可能用于指示失败任务的最大允许时间。max_failed_taskes:最大失败任务数,可能用于指示允许的最大失败任务数量。

这些变量在接下来的代码中可能会用于跟踪和处理任务的执行以及失败的情况。接下来的代码段可能包括任务的执行和结果的收集,以及处理失败任务的逻辑。

for task_idx, task in tqdm(tasks.iterrows(), total=len(tasks)):

is_success = False

tmp_failed_time = 0

while is_success is False and tmp_failed_time < max_failed_time:

cut_prompt_examples_list = [None, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

cut_prompt_examples_list = cut_prompt_examples_list[start_cut_num:]

for cut_prompt_examples in cut_prompt_examples_list:

try:

num_requests += 1

request_per_minute = maintain_request_per_minute(

num_requests=num_requests, time_begin=time_begin,

max_requests_per_min=max_requests_per_min, task_idx=task_idx)

logging.info("\n")

logging.info(

f"Task {task_idx} > request/minute = {request_per_minute:.2f}")

task_results = run_task(task=task, fixed_prompt_text=fixed_prompt_text,

cache=cache, converter=converter,

cut_prompt_examples=cut_prompt_examples, task_idx=task_idx,

engine=engine, max_tokens=max_tokens)

task_results['id'] = task_idx

results.append(task_results)

is_success = True

break

except openai.error.InvalidRequestError as e:

logging.info(

f"InvalidRequestError: {e}, trying with shorter prompt (cut_prompt_examples={cut_prompt_examples + 1 if cut_prompt_examples is not None else 1})")

# sleep for a bit to further avoid rate limit exceeded exceptions

if cut_prompt_examples != cut_prompt_examples_list[-1]:

time.sleep(5)

continue

else:

tmp_failed_time = max_failed_time

logging.info(f"Failed too many times: {tmp_failed_time}")

except Exception as e: # something else went wrong

logging.info(f"Task {task_idx} failed: {e}")

tmp_failed_time += 1

time.sleep(5 * tmp_failed_time)

logging.info(f"Restart task {task_idx}")

break

if is_success and keep_writing_output:

pd.DataFrame(results).to_json(

output_file_path, orient='records', lines=True)

if is_success == False:

failed_list.append(task_idx)

logging.info(f"Task {task_idx} failed {max_failed_time} times, skipped and recorded.")

if failed_list != []:

print ("failed list:\n", failed_list)

if len(failed_list) > max_failed_taskes:

print ("too many failed taskes. exit().")

exit(0)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

这段代码是一个循环,用于处理任务列表中的每个任务。以下是代码的主要逻辑:

-

循环迭代任务列表中的每个任务(通过

for task_idx, task in tqdm(tasks.iterrows(), total=len(tasks))实现迭代)。 -

在每次迭代中,设置了一个

is_success标志,用于跟踪任务是否成功完成。还有一个tmp_failed_time变量,用于跟踪任务失败的时间。 -

在一个while循环内,当

is_success为False且tmp_failed_time小于max_failed_time时,会尝试执行任务。该while循环将重试直到任务成功或达到最大失败时间。 -

在内部循环中,任务会被分成多个部分,通过

cut_prompt_examples_list来控制。每个部分都会尝试执行。如果任务成功完成,is_success标志将设置为True,并跳出内部循环。如果任务失败,则会捕获异常,并根据不同的异常类型采取不同的措施,包括减少任务提示的长度、等待一段时间,以及记录失败的任务。 -

如果

is_success为True,将任务结果添加到results列表中,然后写入到输出文件中。 -

如果

is_success为False,表示任务在允许的失败时间内无法成功执行,将任务索引添加到failed_list列表中,并记录失败的任务。如果failed_list不为空,它会将失败的任务索引打印出来。如果失败的任务数超过了max_failed_taskes,则终止程序。

总之,这段代码负责循环处理任务列表中的每个任务,监视任务的成功与失败,并根据情况采取相应的措施,包括重试任务、记录失败的任务以及终止程序。这个循环是任务执行和处理失败任务的核心部分。

print(

f"Ran {len(results)} out of {len(tasks)} tasks ({len(results) / len(tasks):.2%})")

pd.DataFrame(results).to_json(

output_file_path, orient='records', lines=True)

if failed_list != []:

print ("failed list:\n", failed_list)

output_path = output_file_path.rstrip('.jsonl') + '_failed_list.pkl'

with open(output_path,"w") as fout:

pickle.dump(failed_list,fout)

print ("failed list saved into: ", output_path)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

这段代码是在处理所有任务后执行的一些收尾工作:

-

首先,它打印出已经运行了多少个任务,以及总共有多少任务,并以百分比形式表示已完成任务的比例。

-

然后,它将任务结果存储为JSON文件(output_file_path),使用

pd.DataFrame(results).to_json方法将results列表的内容写入文件。这个文件将包含每个任务的生成结果。 -

接下来,如果存在失败的任务(

failed_list不为空),它会打印出失败任务的索引,并将这个失败列表保存为一个.pkl文件。保存的文件名是在原始output_file_path的基础上,去掉扩展名.jsonl并添加_failed_list.pkl后缀。 -

最后,它会打印出失败任务列表已保存的文件路径。

这段代码用于记录任务的执行情况,保存任务的结果,以及对于失败任务的记录和保存。它提供了任务执行的总结信息,并保存了任务的结果和失败列表。

def run_task:执行一个给定任务,与Codex进行交互,获取生成的代码,记录执行时间,并返回任务的结果

def run_task(task: dict, fixed_prompt_text: str, cache: dict,

converter: StructureConverter, task_idx: int, engine: str,

max_tokens: int, cut_prompt_examples: int = None) -> dict:

"""Runs the task, and returns the results.

Args:

task (dict): The task input

fixed_prompt_text (str): Used for cases where the input prompt is fixed

cache (dict): cache of previous results

converter (GraphPythonConverter): A graph-python converter to parse results

cut_prompt_examples (int, optional): If provided, the first `cut_prompt_examples` examples are

deleted. Prevents 4096 errors. Defaults to None.

Returns:

dict: A dictionary with the results.

"""

start_time = time.time()

prompt_text = fixed_prompt_text if fixed_prompt_text is not None else task['prompt']

if cut_prompt_examples is not None:

prompt_text_parts = prompt_text.split(END)

prompt_text = END.join(prompt_text_parts[cut_prompt_examples:])

if task['input_prompt'] in cache:

logging.info(

f"Task {task_idx} > Using cached result for {task['input_prompt']}")

codex_response = cache[task['input_prompt']]["codex_response"]

else:

codex_response = query_codex(task, prompt_text, engine, max_tokens=max_tokens)

completed_code = get_completed_code(task, codex_response)

task_results = {k: v for (k, v) in task.items()}

task_results["codex_response"] = codex_response

task_results["generated_code"] = completed_code

task_results["elapsed_time"] = time.time() - start_time

return task_results

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

这段代码定义了一个名为run_task的函数,它用于运行一个任务并返回结果。以下是函数的主要参数和功能:

task(dict):任务的输入,是一个包含任务信息的字典。fixed_prompt_text(str):用于固定输入提示文本的字符串,如果不为None,则会使用它而不是任务字典中的prompt字段。cache(dict):包含先前结果的缓存字典。converter(StructureConverter):用于解析结果的转换器对象。cut_prompt_examples(int,可选):一个整数,如果提供,将删除提示文本的前cut_prompt_examples个示例以防止4096错误。默认为None。

函数的主要功能如下:

-

计时开始,记录开始时间。

-

根据输入参数,确定要使用的提示文本,如果

fixed_prompt_text不为None,则使用它,否则使用任务字典中的prompt字段。如果提供了cut_prompt_examples,则截取提示文本的一部分以防止4096错误。 -

检查缓存中是否已经有了任务的结果,如果有,则从缓存中获取Codex的响应,否则使用

query_codex函数查询Codex以获取响应。 -

获取Codex响应后,通过

get_completed_code函数获取生成的代码。 -

将任务结果存储在一个字典中,包括任务的所有输入信息、Codex的响应、生成的代码和任务执行所花费的时间。

-

最后,返回包含任务结果的字典。

这个函数的主要目的是执行一个给定任务,与Codex进行交互,获取生成的代码,记录执行时间,并返回任务的结果。

def maintain_request_per_minute:控制每分钟的请求数以避免超出最大请求数

def maintain_request_per_minute(num_requests: int, time_begin: float, max_requests_per_min: int, task_idx: int) -> float:

request_per_minute = get_request_per_minute(num_requests, time_begin)

logging.info("\n")

while request_per_minute > max_requests_per_min:

logging.info(

f"Task {task_idx} > Sleeping! (Requests/minute = {request_per_minute:.2f} > {max_requests_per_min:.2f})")

time.sleep(1)

request_per_minute = get_request_per_minute(

num_requests, time_begin)

return request_per_minute

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

这段代码定义了一个名为maintain_request_per_minute的函数,用于控制每分钟的请求数以避免超出最大请求数。以下是函数的主要参数和功能:

num_requests(int):已经发出的请求数。time_begin(float):开始计时的时间。max_requests_per_min(int):每分钟的最大请求数限制。task_idx(int):任务的索引,用于记录日志。

函数的主要功能如下:

-

计算当前每分钟的请求速率,通过调用

get_request_per_minute函数,传递已发出的请求数和开始计时的时间。 -

如果当前的请求速率超过了最大请求数限制,就会进入一个while循环。在循环中,它会记录日志,指示正在等待以减少请求速率。

-

在每次循环中,它会等待1秒,然后再次计算请求速率。这样,它会一直等待,直到请求速率不再超过最大请求数限制。

-

一旦请求速率在允许的范围内,它会返回当前请求速率。

这个函数的目的是确保不会超出每分钟的最大请求数限制,以遵守请求速率的规则。如果请求速率太高,它会等待一段时间,直到速率降到允许的水平。

def read_prompt:读取提示

def read_prompt(prompt_path):

if prompt_path is None:

return None

with open(prompt_path, "r") as f:

prompt = f.read()

return prompt

- 1

- 2

- 3

- 4

- 5

- 6

这段代码定义了一个名为read_prompt的函数,用于从文件中读取提示文本。以下是函数的主要参数和功能:

prompt_path:提示文本文件的路径。

函数的主要功能如下:

-

首先,它检查

prompt_path是否为None。如果prompt_path为None,则函数直接返回None,表示没有可用的提示文本。 -

如果

prompt_path不为None,则使用with语句打开文件,读取文件中的文本内容,并将其存储在prompt变量中。 -

最后,函数返回读取的提示文本。

这个函数的目的是从指定的文件中读取提示文本,并将其返回,以供后续任务使用。如果文件路径为None,则返回None表示没有可用的提示文本。

def load_cache:创建一个缓存以存储查询结果,以避免重复查询相同的输入

def load_cache(output_file_path: str):

"""We don't want to query codex repeatedly for the same input. If an output file exists, this

function creates a "cache" of the results.

The cache is implemented as a hashmap keyed by `input_prompt`, and maps to the

entire output entry

Args:

output_file_path (str): _description_

"""

if not os.path.exists(output_file_path):

return {}

else:

# make a backup of the file already there

shutil.copyfile(output_file_path, output_file_path + "_" + datetime.now().strftime("%Y%m%d_%H%M%S"))

shutil.copy(output_file_path, output_file_path + ".bak")

cache_data = pd.read_json(

output_file_path, orient='records', lines=True)

cache = {row['input_prompt']: row.to_dict()

for _, row in cache_data.iterrows()}

return cache

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

这段代码定义了一个名为load_cache的函数,用于创建一个缓存以存储查询结果,以避免重复查询相同的输入。以下是函数的主要参数和功能:

output_file_path:用于存储缓存的文件路径。

函数的主要功能如下:

-

首先,它检查是否存在指定的

output_file_path文件。如果文件不存在,它会返回一个空的缓存字典(空的哈希映射)。 -

如果文件存在,它会执行以下操作:

- 创建一个具有当前时间戳作为后缀的备份文件,以便保留文件的备份。

- 创建一个名为

output_file_path.bak的文件,作为原始文件的备份副本。 - 从

output_file_path文件中读取数据,以解析缓存的内容。 - 创建一个字典

cache,其中每个缓存条目的键是input_prompt,值是整个输出条目的字典表示。

-

最后,函数返回创建的缓存字典。

这个函数的目的是在指定的文件中创建一个缓存,用于存储查询的结果。如果文件不存在,它返回一个空的缓存字典。如果文件存在,它会创建一个包含缓存数据的字典,以便在以后的查询中可以快速查找和检索已缓存的结果。同时,它也会对原始文件进行备份,以便在需要时可以还原。

def query_codex:向Codex(AI引擎)发出查询,以获取生成的代码

def query_codex(task: dict, prompt_text: str, engine: str, max_tokens: int):

prompt = f"{prompt_text} {task['input_prompt']}"

response = OpenaiAPIWrapper.call(

prompt=prompt, max_tokens=max_tokens, engine=engine)

return response

- 1

- 2

- 3

- 4

- 5

这段代码定义了一个名为query_codex的函数,用于向Codex(可能是GPT-3或其他类似的AI引擎)发出查询以获取生成的代码。以下是函数的主要参数和功能:

task(dict):任务的输入,是一个包含任务信息的字典。prompt_text(str):用于查询的提示文本。engine(str):用于执行任务的引擎名称。max_tokens(int):生成的代码的最大令牌数。

函数的主要功能如下:

-

构建完整的提示文本,将输入提示(

task['input_prompt'])附加到传入的提示文本(prompt_text)后面。 -

使用

OpenaiAPIWrapper中的call方法,向Codex引擎发送查询请求,传递生成的提示文本、最大令牌数(max_tokens)和引擎名称(engine)。 -

函数将Codex的响应作为结果返回。

这个函数的主要目的是执行查询,以获取与给定任务和提示相关的生成代码。它使用提供的引擎和参数来发出查询请求,并将Codex的响应返回供后续处理。

def get_completed_code:从Codex的响应中提取生成的代码,并将其与任务的输入提示合并,以获得完整的生成代码

def get_completed_code(task: dict, codex_response: dict) -> str:

completed_code = OpenaiAPIWrapper.parse_response(codex_response)

all_code = f"{task['input_prompt']}{completed_code}"

# NOTE: space is already taken care of, no need to add it again, otherwise indentation will be off

return all_code

- 1

- 2

- 3

- 4

- 5

这段代码定义了一个名为get_completed_code的函数,用于从Codex的响应中提取生成的代码。以下是函数的主要参数和功能:

task(dict):任务的输入,是一个包含任务信息的字典。codex_response(dict):Codex的响应,可能包含生成的代码。

函数的主要功能如下:

-

使用

OpenaiAPIWrapper中的parse_response方法,从Codex的响应(codex_response)中提取生成的代码,并将其存储在completed_code变量中。 -

将生成的代码与任务的输入提示(

task['input_prompt'])合并,以获得完整的代码。这是通过将输入提示和生成的代码连接在一起来实现的,不需要额外的空格或缩进。 -

返回包含完整代码的字符串(

all_code)。

这个函数的目的是从Codex的响应中提取生成的代码,并将其与任务的输入提示合并,以获得完整的生成代码。这个生成的代码可以在后续任务中使用或记录下来。

def get_request_per_minute:根据已经发出的请求数和经过的时间来计算每分钟的请求速率,以便在维持请求速率时使用

def get_request_per_minute(num_request: int, begin_time: float) -> float:

elapsed_time = time.time() - begin_time

request_per_minute = (num_request / elapsed_time) * 60

return request_per_minute

- 1

- 2

- 3

- 4

- 5

这段代码定义了一个名为get_request_per_minute的函数,用于计算每分钟的请求速率。以下是函数的主要参数和功能:

num_request(int):已经发出的请求数。begin_time(float):开始计时的时间。

函数的主要功能如下:

-

计算从

begin_time开始到当前时间的经过的时间(elapsed_time),使用time.time()函数来获取当前时间戳。 -

使用已发出的请求数(

num_request)除以经过的时间(elapsed_time),然后乘以60,以得到每分钟的请求速率。 -

返回计算出的请求速率(

request_per_minute)。

这个函数的目的是根据已经发出的请求数和经过的时间来计算每分钟的请求速率,以便在维持请求速率时使用。

主函数:(通用模版)从命令行传递参数来配置任务的执行,然后根据这些参数来执行任务

if __name__ == "__main__":

import argparse

parser = argparse.ArgumentParser()

parser.add_argument("--task_file_path", type=str, required=True)

parser.add_argument("--num_tasks", type=int, required=True)

parser.add_argument("--start_idx", type=int, required=True)

parser.add_argument("--output_file_path", type=str, required=True)

parser.add_argument("--prompt_path", type=str,

required=False, default=None)

parser.add_argument("--job_type", type=str, required=True,

choices=ConverterFactory.supported_converters)

parser.add_argument("--keep_writing_output",

action="store_true", default=True)

parser.add_argument("--engine", type=str, required=True)

parser.add_argument("--max_requests_per_min", type=int, default=10)

parser.add_argument("--max_tokens", type=int, default=280)

parser.add_argument("--schema_path", type=str, required=True)

parser.add_argument("--map_config_path", type=str, required=True)

parser.add_argument("--start_cut_num", type=int, default=0)

args = parser.parse_args()

run(task_file_path=args.task_file_path, num_tasks=args.num_tasks,start_idx=args.start_idx,

output_file_path=args.output_file_path, prompt_path=args.prompt_path,

keep_writing_output=args.keep_writing_output, engine=args.engine,

max_requests_per_min=args.max_requests_per_min,

max_tokens=args.max_tokens,schema_path=args.schema_path,

map_config_path=args.map_config_path,start_cut_num=args.start_cut_num)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

这段代码是一个主程序入口,用于执行任务。它使用argparse模块来解析命令行参数,根据用户提供的参数来调用run函数,执行任务。以下是它的主要功能和参数:

-

使用

argparse创建一个命令行解析器(parser)。 -

添加一系列命令行参数,包括:

task_file_path:任务文件的路径。num_tasks:任务的数量。start_idx:任务的起始索引。output_file_path:输出文件的路径。prompt_path:提示文件的路径(可选参数,默认为None)。job_type:作业类型,从支持的作业类型中选择。keep_writing_output:一个布尔标志,指示是否保持写入输出(默认为True)。engine:用于执行任务的引擎名称。max_requests_per_min:每分钟的最大请求数(默认为10)。max_tokens:生成的代码的最大令牌数(默认为280)。schema_path:模式文件的路径。map_config_path:映射配置文件的路径。start_cut_num:一个整数,用于指示从输入提示中删除的示例数量(默认为0)。

-

使用

parser.parse_args()解析命令行参数,并将结果存储在args变量中。 -

调用

run函数,传递解析后的参数,以执行任务。

这个主程序入口的目的是允许用户从命令行传递参数来配置任务的执行,然后根据这些参数来执行任务。这是一个通用的模板,可以根据不同的需求和任务来定制。

converters

ner

structure2nl_sel_v1.py:将文本结构化成适用于自然语言处理任务的输入数据,然后将模型的输出结果转换回结构化的数据

import json

import re

from collections import OrderedDict

from typing import List, Union, Dict, Tuple

from src.converters.structure_converter import StructureConverter

from src.converters.record import EntityRecord, RelationRecord

from src.utils.file_utils import load_yaml,load_schema

from uie.sel2record.record import MapConfig

from uie.sel2record.sel2record import SEL2Record

class NLSELPromptCreator(StructureConverter):

def __init__(self, schema_folder=None, map_config_path=None):

self.schema_dict = SEL2Record.load_schema_dict(schema_folder)

self.decoding = 'spotasoc'

record_schema = self.schema_dict['record']

self.entity_schema = record_schema.type_list

self.relation_schema = record_schema.role_list

self.spot_asoc = record_schema.type_role_dict

self.map_config = MapConfig.load_from_yaml(map_config_path)

def structure_to_input(self, input_dict: dict, prompt_part_only: bool = False):

"""

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'spot': ['organization'],

"""

text = input_dict['text']

record = input_dict['record']

prompt = []

input = ['The text is : ',

"\"" + text + "\". ",

"The named entities in the text: "

]

prompt.extend(input)

if prompt_part_only:

return ''.join(prompt)

return ''.join(prompt) + '\n'

def output_to_structure(self, input_dict, output_str):

"""

sample:

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'tokens': ['CRICKET', '-', 'LEICESTERSHIRE', 'TAKE', 'OVER', 'AT', 'TOP', 'AFTER', 'INNINGS', 'VICTORY', '.'],

'record': '<extra_id_0> <extra_id_0> organization <extra_id_5> LEICESTERSHIRE <extra_id_1> <extra_id_1>',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'relation': [],

'event': [],

'spot': ['organization'],

'asoc': [],

'spot_asoc': [{'span': 'LEICESTERSHIRE', 'label': 'organization', 'asoc': []}]}

code:

The text is : "CRICKET -

LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .".

Find named entities such as organization, person,

miscellaneous, location in the text. The organization

"LEICESTERSHIRE" exist in the text.

:param sample:

:param code:

:return:

"""

text = input_dict['text']

tokens = input_dict['tokens']

sel2record = SEL2Record(

schema_dict=self.schema_dict,

decoding_schema=self.decoding,

map_config=self.map_config,

)

pattern = re.compile(r"The named entities in the text:\s*(.*)")

pred = re.search(pattern, output_str).group(1)

# print ("pred: ")

# print (pred)

pred_record = sel2record.sel2record(pred, text, tokens)

return pred_record

if __name__ == "__main__":

schema_folder = 'data/conll03'

map_config_path = 'config/offset_map/first_offset_en.yaml'

val_path = 'data/conll03/val.json'

with open(val_path) as fin:

line = fin.readline()

line = eval(line.strip())

data = line

# print ("dev data:\n", data)

converter = NLSELPromptCreator(schema_folder=schema_folder,

map_config_path=map_config_path)

# convert the whole sample

prompt = converter.structure_to_input(data, prompt_part_only=False)

# print ("prompt:\n", prompt)

# we have to provide the init state to the sample

# prompt = converter.generate_sample_head(data)

# print("sample head: ", prompt)

code = """

The text is : "CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .". The named entities in the text: <extra_id_0> <extra_id_0> organization <extra_id_5> LEICESTERSHIRE <extra_id_1> <extra_id_1>

"""

data = {"text":"Enterprises from domestic coastal provinces and cities increased , and there are altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting .","tokens":["Enterprises","from","domestic","coastal","provinces","and","cities","increased",",","and","there","are","altogether","30","enterprise","representatives","from","30","provinces",",","cities","and","autonomous","regions","coming","to","this","meeting","."],"entity":[{"type":"geographical social political","offset":[2,3,4,5,6],"text":"domestic coastal provinces and cities"},{"type":"geographical social political","offset":[17,18,19,20,21,22,23],"text":"30 provinces , cities and autonomous regions"},{"type":"organization","offset":[0,1,2,3,4,5,6],"text":"Enterprises from domestic coastal provinces and cities"},{"type":"person","offset":[12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27],"text":"altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting"}],"relation":[],"event":[],"spot":["person","organization","geographical social political"],"asoc":[],"spot_asoc":[{"span":"Enterprises from domestic coastal provinces and cities","label":"organization","asoc":[]},{"span":"domestic coastal provinces and cities","label":"geographical social political","asoc":[]},{"span":"altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting","label":"person","asoc":[]},{"span":"30 provinces , cities and autonomous regions","label":"geographical social political","asoc":[]}]}

code = r'The text is : \"Enterprises from domestic coastal provinces and cities increased , and there are altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting .\". The named entities in the text: <extra_id_0> <extra_id_0> organization <extra_id_5> Enterprises from domestic coastal provinces and cities <extra_id_1> <extra_id_0> geographical social political <extra_id_5> domestic coastal provinces and cities <extra_id_1> <extra_id_0> person <extra_id_5> altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions <extra_id_1> <extra_id_0> geographical social political <extra_id_5> provinces , cities and autonomous regions <extra_id_1> <extra_id_0> geographical social political <extra_id_5> provinces <extra_id_1> <extra_id_0> geographical social political <extra_id_5> cities <extra_id_1> <extra_id_0> geographical social political <extra_id_5> autonomous regions <extra_id_1> <extra_id_0> person <extra_id_5> this meeting <extra_id_1> <extra_id_1>\n'

# conver the prediction to the answers

predictions = converter.output_to_structure(data, code)

print (predictions)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

这段代码主要用于将文本结构化成适用于自然语言处理任务的输入数据,然后将模型的输出结果转换回结构化的数据。代码的核心部分包括以下功能:

-

导入必要的库和模块,包括

json、re、collections、typing等。还导入了一些自定义的模块,例如StructureConverter和其他用于处理数据的模块。 -

定义了一个名为

NLSELPromptCreator的类,该类继承自StructureConverter,用于将文本数据结构化为适用于自然语言处理任务的输入,并将模型的输出结果还原为结构化的数据。 -

类中的

__init__方法用于初始化类的属性,包括加载模式文件、解码模式、实体模式、关系模式和其他配置信息。 -

structure_to_input方法用于将输入数据结构化为适用于模型的输入格式。它接受输入字典和一个布尔参数prompt_part_only,根据输入数据生成用于模型的提示文本。 -

output_to_structure方法用于将模型的输出结果还原为结构化的数据。它接受输入字典和模型的输出字符串,并使用自定义的SEL2Record类进行解析,以还原结构化数据。 -

在

if __name__ == "__main__":部分,脚本展示了如何使用NLSELPromptCreator类来处理输入数据并还原模型的输出结果。具体来说,它加载了模式文件、配置文件和示例数据,然后调用structure_to_input方法生成模型输入的提示文本,接着将模型的输出结果传递给output_to_structure方法,以还原结构化数据。

总的来说,这段代码是用于数据处理和转换的工具,特别适用于将自然语言文本转化为适用于特定任务的输入格式,以及将模型的输出结果还原为结构化数据。这对于自然语言处理任务中的数据预处理和后处理非常有用。

structure2nl_sel_v2.py:将文本结构化成适用于自然语言处理任务的输入数据,然后将模型的输出结果转换回结构化的数据

import json

import re

from collections import OrderedDict

from typing import List, Union, Dict, Tuple

import numpy as np

from src.converters.structure_converter import StructureConverter

from src.converters.record import EntityRecord, RelationRecord

from src.utils.file_utils import load_yaml,load_schema

from uie.sel2record.record import MapConfig

from uie.sel2record.sel2record import SEL2Record

class NLSELPromptCreator(StructureConverter):

def __init__(self, schema_folder=None, map_config_path=None):

self.schema_dict = SEL2Record.load_schema_dict(schema_folder)

self.decoding = 'spotasoc'

record_schema = self.schema_dict['record']

self.entity_schema = record_schema.type_list

self.relation_schema = record_schema.role_list

self.spot_asoc = record_schema.type_role_dict

self.map_config = MapConfig.load_from_yaml(map_config_path)

def structure_to_input(self, input_dict: dict, prompt_part_only: bool = False):

"""

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'spot': ['organization'],

"""

text = input_dict['text']

record = input_dict['record']

prompt = []

input = ['The text is : ',

"\"" + text + "\". ",

"The named entities in the text: "

]

prompt.extend(input)

if prompt_part_only:

return ''.join(prompt)

record = record.replace('extra_id_','')

prompt.append(record)

return ''.join(prompt) + '\n'

def existing_nested(self, entity_dict_list):

entity_offset = []

for ent in entity_dict_list:

tmp_offset = ent['offset']

entity_offset.append(tmp_offset)

sorted_offset = sorted(entity_offset)

start = -1

end = -1

for so in sorted_offset:

temp_s, temp_e = so[0],so[-1]

if temp_s <= end:

return True

start = temp_s

end = temp_e

return False

def output_to_structure(self, input_dict, output_str):

"""

sample:

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'tokens': ['CRICKET', '-', 'LEICESTERSHIRE', 'TAKE', 'OVER', 'AT', 'TOP', 'AFTER', 'INNINGS', 'VICTORY', '.'],

'record': '<extra_id_0> <extra_id_0> organization <extra_id_5> LEICESTERSHIRE <extra_id_1> <extra_id_1>',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'relation': [],

'event': [],

'spot': ['organization'],

'asoc': [],

'spot_asoc': [{'span': 'LEICESTERSHIRE', 'label': 'organization', 'asoc': []}]}

code:

The text is : "CRICKET -

LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .".

Find named entities such as organization, person,

miscellaneous, location in the text. The organization

"LEICESTERSHIRE" exist in the text.

:param sample:

:param code:

:return:

"""

text = input_dict['text']

tokens = input_dict['tokens']

entity = input_dict['entity']

exist_nested = self.existing_nested(entity)

sel2record = SEL2Record(

schema_dict=self.schema_dict,

decoding_schema=self.decoding,

map_config=self.map_config,

)

pattern = re.compile(r"The named entities in the text:\s*(.*)")

pred = re.search(pattern, output_str).group(1)

pred = pred.strip()

#

# print ("text: ", text)

# print ("output_str: ", output_str)

# print ("pred: ", pred)

pred_record = sel2record.sel2record(pred, text, tokens)

pred_record['statistic']['complex'] = exist_nested

return pred_record

if __name__ == "__main__":

schema_folder = 'data/conll03'

map_config_path = 'config/offset_map/first_offset_en.yaml'

val_path = 'data/conll03/val.json'

with open(val_path) as fin:

line = fin.readline()

line = eval(line.strip())

data = line

# print ("dev data:\n", data)

converter = NLSELPromptCreator(schema_folder=schema_folder,

map_config_path=map_config_path)

# convert the whole sample

prompt = converter.structure_to_input(data, prompt_part_only=False)

print ("prompt:\n", prompt)

# we have to provide the init state to the sample

# prompt = converter.generate_sample_head(data)

# print("sample head: ", prompt)

# code = """

# The text is : "CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .". The named entities in the text: <extra_id_0> <extra_id_0> organization <extra_id_5> LEICESTERSHIRE <extra_id_1> <extra_id_1>

# """

# data = {"text":"Enterprises from domestic coastal provinces and cities increased , and there are altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting .","tokens":["Enterprises","from","domestic","coastal","provinces","and","cities","increased",",","and","there","are","altogether","30","enterprise","representatives","from","30","provinces",",","cities","and","autonomous","regions","coming","to","this","meeting","."],"entity":[{"type":"geographical social political","offset":[2,3,4,5,6],"text":"domestic coastal provinces and cities"},{"type":"geographical social political","offset":[17,18,19,20,21,22,23],"text":"30 provinces , cities and autonomous regions"},{"type":"organization","offset":[0,1,2,3,4,5,6],"text":"Enterprises from domestic coastal provinces and cities"},{"type":"person","offset":[12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27],"text":"altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting"}],"relation":[],"event":[],"spot":["person","organization","geographical social political"],"asoc":[],"spot_asoc":[{"span":"Enterprises from domestic coastal provinces and cities","label":"organization","asoc":[]},{"span":"domestic coastal provinces and cities","label":"geographical social political","asoc":[]},{"span":"altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting","label":"person","asoc":[]},{"span":"30 provinces , cities and autonomous regions","label":"geographical social political","asoc":[]}]}

# code = r'The text is : "CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .". The named entities in the text: <0> <0> organization <5> LEICESTERSHIRE <1> <1>\n'

code = repr(prompt)

# conver the prediction to the answers

predictions = converter.output_to_structure(data, code)

print (predictions)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

这段代码是一个Python脚本,与前一个代码段非常相似,也是用于将文本结构化为适用于自然语言处理任务的输入数据,并将模型的输出结果还原为结构化数据。不过在这个代码段中,有一些新增的功能和修改:

-

导入了一些额外的库和模块,如

numpy,用于在代码中进行一些数学运算。 -

NLSELPromptCreator类的构造函数中,引入了一个新的方法existing_nested,用于检测输入文本中是否存在嵌套的实体。 -

structure_to_input方法中,生成的prompt中还包括了从输入数据中提取的record信息。 -

output_to_structure方法中,通过新的existing_nested方法检测输入数据中是否存在嵌套实体,并将结果存储在生成的结构化数据中。 -

在

if __name__ == "__main__":部分,脚本加载了模式文件、配置文件和示例数据,然后调用NLSELPromptCreator类的方法,将输入数据结构化为适用于模型的提示文本,并将模型的输出结果还原为结构化数据。此外,还对输入数据进行了一些修改,以测试新功能。

总的来说,这段代码与前一个代码段非常相似,但在一些细节上进行了一些修改和新增功能。它仍然是用于处理和转换文本数据的工具,特别适用于自然语言处理任务中的数据预处理和后处理。

structure2nl_sel_v3.py

import json

import re

from collections import OrderedDict

from typing import List, Union, Dict, Tuple

from src.converters.structure_converter import StructureConverter

from src.converters.record import EntityRecord, RelationRecord

from src.utils.file_utils import load_yaml,load_schema

from uie.sel2record.record import MapConfig

from uie.sel2record.sel2record import SEL2Record

class NLSELPromptCreator(StructureConverter):

def __init__(self, schema_folder=None, map_config_path=None):

self.schema_dict = SEL2Record.load_schema_dict(schema_folder)

self.decoding = 'spotasoc'

record_schema = self.schema_dict['record']

self.entity_schema = record_schema.type_list

self.relation_schema = record_schema.role_list

self.spot_asoc = record_schema.type_role_dict

self.map_config = MapConfig.load_from_yaml(map_config_path)

def structure_to_input(self, input_dict: dict, prompt_part_only: bool = False):

"""

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'spot': ['organization'],

"""

text = input_dict['text']

record = input_dict['record']

prompt = []

input = ['The text is : ',

"\"" + text + "\". ",

"The named entities in the text: "

]

prompt.extend(input)

if prompt_part_only:

return ''.join(prompt)

record = record.replace('extra_id_','')

record = record.lstrip('<0>').rstrip('<1>').strip()

record = record.split('<1>')

record = [rec.strip().lstrip('<0>').strip() for rec in record]

record_new = []

for rec in record:

if rec != '':

temp_str = rec

temp_tuple = temp_str.split('<5>')

assert len(temp_tuple) == 2

temp_tuple = [tt.strip() for tt in temp_tuple]

new_str = f'"{temp_tuple[1]}" is "{temp_tuple[0]}" .'

record_new.append(new_str)

record = ' '.join(record_new)

prompt.append(record)

return ''.join(prompt) + '\n'

def output_to_structure(self, input_dict, output_str):

"""

sample:

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'tokens': ['CRICKET', '-', 'LEICESTERSHIRE', 'TAKE', 'OVER', 'AT', 'TOP', 'AFTER', 'INNINGS', 'VICTORY', '.'],

'record': '<extra_id_0> <extra_id_0> organization <extra_id_5> LEICESTERSHIRE <extra_id_1> <extra_id_1>',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'relation': [],

'event': [],

'spot': ['organization'],

'asoc': [],

'spot_asoc': [{'span': 'LEICESTERSHIRE', 'label': 'organization', 'asoc': []}]}

code:

The text is : "CRICKET -

LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .".

Find named entities such as organization, person,

miscellaneous, location in the text. The organization

"LEICESTERSHIRE" exist in the text.

:param sample:

:param code:

:return:

"""

text = input_dict['text']

tokens = input_dict['tokens']

sel2record = SEL2Record(

schema_dict=self.schema_dict,

decoding_schema=self.decoding,

map_config=self.map_config,

)

pattern = re.compile(r"The named entities in the text:\s*(.*)")

pred = re.search(pattern, output_str).group(1)

pattern = re.compile(r"\"(.*?)\"\sis\s\"(.*?)\"\s.")

pred = pattern.findall(pred)

pred = [(p[1],p[0]) for p in pred]

pred = [' <5> '.join(p) for p in pred]

pred = ['<0> ' + p + ' <1>' for p in pred]

pred = ' '.join(pred)

pred = '<0> ' + pred + ' <1>'

pred_record = sel2record.sel2record(pred, text, tokens)

return pred_record

if __name__ == "__main__":

schema_folder = 'data/conll03'

map_config_path = 'config/offset_map/first_offset_en.yaml'

val_path = 'data/conll03/val.json'

with open(val_path) as fin:

line = fin.readline()

line = fin.readline()

line = eval(line.strip())

data = line

# print ("dev data:\n", data)

converter = NLSELPromptCreator(schema_folder=schema_folder,

map_config_path=map_config_path)

# convert the whole sample

prompt = converter.structure_to_input(data, prompt_part_only=False)

print ("prompt:\n", prompt)

# we have to provide the init state to the sample

# prompt = converter.generate_sample_head(data)

# print("sample head: ", prompt)

# code = """

# The text is : "CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .". The named entities in the text: <extra_id_0> <extra_id_0> organization <extra_id_5> LEICESTERSHIRE <extra_id_1> <extra_id_1>

# """

# data = {"text":"Enterprises from domestic coastal provinces and cities increased , and there are altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting .","tokens":["Enterprises","from","domestic","coastal","provinces","and","cities","increased",",","and","there","are","altogether","30","enterprise","representatives","from","30","provinces",",","cities","and","autonomous","regions","coming","to","this","meeting","."],"entity":[{"type":"geographical social political","offset":[2,3,4,5,6],"text":"domestic coastal provinces and cities"},{"type":"geographical social political","offset":[17,18,19,20,21,22,23],"text":"30 provinces , cities and autonomous regions"},{"type":"organization","offset":[0,1,2,3,4,5,6],"text":"Enterprises from domestic coastal provinces and cities"},{"type":"person","offset":[12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27],"text":"altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting"}],"relation":[],"event":[],"spot":["person","organization","geographical social political"],"asoc":[],"spot_asoc":[{"span":"Enterprises from domestic coastal provinces and cities","label":"organization","asoc":[]},{"span":"domestic coastal provinces and cities","label":"geographical social political","asoc":[]},{"span":"altogether 30 enterprise representatives from 30 provinces , cities and autonomous regions coming to this meeting","label":"person","asoc":[]},{"span":"30 provinces , cities and autonomous regions","label":"geographical social political","asoc":[]}]}

# code = r'The text is : "CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .". The named entities in the text: <0> <0> organization <5> LEICESTERSHIRE <1> <1>\n'

code = repr(prompt)

# conver the prediction to the answers

predictions = converter.output_to_structure(data, code)

print (predictions)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

这段代码也是与前面的代码段非常相似,它仍然是用于将文本结构化为适用于自然语言处理任务的输入数据,并将模型的输出结果还原为结构化数据。在这个代码段中,主要的改变包括:

-

structure_to_input方法现在可以正确地处理record信息,将其从模型的输出中提取并格式化为更易读的文本。 -

output_to_structure方法在处理模型输出时,根据新的格式化规则,将模型的输出解析为结构化数据。这包括解析文本并将其还原为实体。 -

if __name__ == "__main__":部分加载了模式文件、配置文件和示例数据,然后调用NLSELPromptCreator类的方法,将输入数据结构化为适用于模型的提示文本,并将模型的输出结果还原为结构化数据。此外,还对输入数据进行了一些修改,以测试新功能。

总的来说,这段代码仍然是用于文本数据的处理和转换工具,特别适用于自然语言处理任务中的数据预处理和后处理。它提供了更复杂的处理能力,可以正确处理record信息,并按新的格式规则生成输出。

structure2pl_func_v1.py:结构转换器,用于将文本数据转换为适合训练和输入到模型的格式,以及将模型的输出结果还原为结构化数据

import json

import re

from collections import OrderedDict

from typing import List, Union, Dict, Tuple

from src.converters.structure_converter import StructureConverter

from src.utils.file_utils import load_yaml,load_schema

from uie.sel2record.record import EntityRecord, RelationRecord

from uie.sel2record.record import MapConfig

from uie.sel2record.sel2record import SEL2Record

class PLFuncPromptCreator(StructureConverter):

def __init__(self, schema_folder=None, map_config_path=None):

self.schema_dict = SEL2Record.load_schema_dict(schema_folder)

self.decoding = 'spotasoc'

record_schema = self.schema_dict['record']

self.entity_schema = record_schema.type_list

self.relation_schema = record_schema.role_list

self.spot_asoc = record_schema.type_role_dict

self.map_config = MapConfig.load_from_yaml(map_config_path)

def structure_to_input(self, input_dict: dict, prompt_part_only: bool = False):

"""

{'text': 'CRICKET - LEICESTERSHIRE TAKE OVER AT TOP AFTER INNINGS VICTORY .',

'entity': [{'type': 'organization', 'offset': [2], 'text': 'LEICESTERSHIRE'}],

'spot': ['organization'],

"""

text = input_dict['text']

entity_list = input_dict['entity']

spot_list = input_dict['spot']

prompt = []

goal = 'named entity extraction'

func_head = self.to_function_head(self.to_function_name(goal),input='input_text')

prompt.append(func_head)

docstring = '\t""" extract named entities from the input_text . """'

prompt.append(docstring)

input_text = f'\tinput_text = "{text}"'

prompt.append(input_text)

inline_annotation = '\t# extracted named entity list'

prompt.append(inline_annotation)

if prompt_part_only:

return self.list_to_str(prompt)

for spot in spot_list:

entity_list_name = self.to_function_name(spot) + '_list'

tmp_entity_text = []

for ent in entity_list:

if ent['type'] == spot:

ent_text = ent['text']

tmp_entity_text.append(f'"{ent_text}"')

prompt.append(f'\t{entity_list_name} = [' + ', '.join(tmp_entity_text) + ']')

prompt = self.list_to_str(prompt)

return prompt + '\n'

def output_to_structure(self, input_dict, output_str):

"""

input_dict:

{'text': 'West Indian all-rounder Phil Simmons took four for 38 on Friday as Leicestershire beat Somerset by an innings and 39 runs in two days to take over at the head of the county championship .',

'tokens': ['West', 'Indian', 'all-rounder', 'Phil', 'Simmons', 'took', 'four', 'for', '38', 'on', 'Friday', 'as', 'Leicestershire', 'beat', 'Somerset', 'by', 'an', 'innings', 'and', '39', 'runs', 'in', 'two', 'days', 'to', 'take', 'over', 'at', 'the', 'head', 'of', 'the', 'county', 'championship', '.'],

'record': '<extra_id_0> <extra_id_0> miscellaneous <extra_id_5> West Indian <extra_id_1> <extra_id_0> person <extra_id_5> Phil Simmons <extra_id_1> <extra_id_0> organization <extra_id_5> Leicestershire <extra_id_1> <extra_id_0> organization <extra_id_5> Somerset <extra_id_1> <extra_id_1>',

'entity': [{'type': 'organization', 'offset': [12], 'text': 'Leicestershire'}, {'type': 'person', 'offset': [3, 4], 'text': 'Phil Simmons'}, {'type': 'organization', 'offset': [14], 'text': 'Somerset'}, {'type': 'miscellaneous', 'offset': [0, 1], 'text': 'West Indian'}],

'relation': [], 'event': [], 'spot': ['person', 'organization', 'miscellaneous'], 'asoc': [],

'spot_asoc': [{'span': 'West Indian', 'label': 'miscellaneous', 'asoc': []}, {'span': 'Phil Simmons', 'label': 'person', 'asoc': []}, {'span': 'Leicestershire', 'label': 'organization', 'asoc': []}, {'span': 'Somerset', 'label': 'organization', 'asoc': []}]}

output_str:

def extract_named_entity(input_text):

# extract named entities from the input_text.

input_text = "West Indian all-rounder Phil Simmons took four for 38 on Friday as Leicestershire beat Somerset by an innings and 39 runs in two days to take over at the head of the county championship ."

# extracted named entity list

person_list = ["Phil Simmons"]

organization_list = ["Leicestershire", "Somerset"]

miscellaneous_list = ["West Indian"]

:return:

"""

tokens = input_dict['tokens']

sent_records = {}

sent_records['entity'] = []

for entity_s in self.entity_schema:

temp_entities = re.findall(f'{self.to_function_name(entity_s)}_list' + r' = \[(.*?)\]', output_str)

if len(temp_entities) != 0:

temp_entities = temp_entities[0].split(", ")

temp_entity_list = [

{'text': e.strip(r'\"'), 'type': entity_s} for e in temp_entities

]

sent_records['entity'].extend(temp_entity_list)

offset_records = {}

record_map = EntityRecord(map_config=self.map_config)

offset_records['offset'] = record_map.to_offset(

instance=sent_records.get('entity', []),

tokens=tokens,

)

offset_records['string'] = record_map.to_string(

sent_records.get('entity', []),

)

"""

{'offset': [('opinion', (10,)), ('aspect', (11, 12)), ('opinion', (32,)), ('aspect', (34,))],

'string': [('opinion', 'soft'), ('aspect', 'rubber enclosure'), ('opinion', 'break'), ('aspect', 'seal')]}

"""

return {"entity": offset_records,"relation": {"offset": [], "string": []},"event": {"offset": [], "string": []}}

if __name__ == "__main__":

schema_path = 'data/conll03'

map_config_path = 'config/offset_map/first_offset_en.yaml'

val_path = 'data/conll03/val.json'

with open(val_path) as fin:

line0 = fin.readline()

line1 = fin.readline()

line = fin.readline()

line = eval(line.strip())

data = line

converter = PLFuncPromptCreator(schema_folder=schema_path,

map_config_path=map_config_path)

# convert the whole sample

prompt = converter.structure_to_input(data, prompt_part_only=False)

# convert the whole sample

# prompt = converter.structure_to_input(data, prompt_part_only=True)

# print ("prompt:\n", prompt)

data = {"text":"Two goals from defensive errors in the last six minutes allowed Japan to come from behind and collect all three points from their opening meeting against Syria .","tokens":["Two","goals","from","defensive","errors","in","the","last","six","minutes","allowed","Japan","to","come","from","behind","and","collect","all","three","points","from","their","opening","meeting","against","Syria","."],"entity":[{"type":"location","offset":[26],"text":"Syria"},{"type":"location","offset":[11],"text":"Japan"}],"relation":[],"event":[],"spot":["location"],"asoc":[],"spot_asoc":[{"span":"Japan","label":"location","asoc":[]},{"span":"Syria","label":"location","asoc":[]}]}

code = r'def named_entity_extraction(input_text):\n\t\"\"\" extract named entities from the input_text . \"\"\"\n\tinput_text = \"Two goals from defensive errors in the last six minutes allowed Japan to come from behind and collect all three points from their opening meeting against Syria .\"\n\t# extracted named entity list\n\tlocation_list = [\"Syria\"]\n'

print (data)

print (code)

# conver the prediction to the answers

predictions = converter.output_to_structure(data, code)

print ("output: \n")

print (predictions)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

这段代码看起来是一个结构转换器,用于将文本数据转换为适合训练和输入到模型的格式,以及将模型的输出结果还原为结构化数据。在这个特定的示例中,它是为了从文本中提取命名实体而设计的。

以下是代码的一些关键部分:

-

structure_to_input方法:这个方法将输入文本数据转化为一个Python函数的形式,函数用于从输入文本中提取命名实体。它生成一个Python函数头,包括函数名和参数(input_text),然后生成一个文档字符串,指明函数的目的。接下来,它提供了输入文本,然后为每个命名实体类型生成一个Python列表,以存储从文本中提取的实体。最后,它将生成的代码合并成一个完整的Python函数。 -

output_to_structure方法:这个方法用于将模型生成的代码输出还原为结构化数据。它从输出字符串中提取每个命名实体类型的实体列表,并将它们映射回原始文本的偏移位置。最后,它返回包含命名实体信息的字典,其中包括偏移位置和字符串表示。 -

if __name__ == "__main__":部分加载了模式文件、配置文件和示例数据。然后,它使用PLFuncPromptCreator类将示例数据转化为模型输入的提示文本,并将模型的输出代码还原为结构化数据。这里还包括了一个硬编码的示例数据和相应的输出代码。

总的来说,这段代码是一个通用的结构转换器,可以用于将文本数据转化为适合输入到模型的格式,以及将模型的输出结果还原为结构化数据。这在自然语言处理任务中非常有用,特别是在需要处理命名实体提取的任务中。

structure2pl_func_v2.py:将结构化数据转化为模型的输入提示文本,从生成的代码中提取出结构化数据

import json

import re

from collections import OrderedDict

from typing import List, Union, Dict, Tuple

from src.converters.structure_converter import StructureConverter

from src.utils.file_utils import load_yaml,load_schema

from uie.sel2record.record import EntityRecord, RelationRecord

from uie.sel2record.record import MapConfig

from uie.sel2record.sel2record import SEL2Record

"""

def extract_named_entity(input_text):

# extract named entities from the input_text .

input_text = "Steve became CEO of Apple in 1998"

# extracted named entities