- 1Kafka的工作原理_kafka工作原理

- 2linux 修改 可执行文件 函数,linux下实现在程序运行时的函数替换(热补丁)

- 3【SR Works】使用HTC Vive Pro结合SteamVR在Unity中制作MR内容_vive srworks

- 4防止xss攻击 java_java接口防止XSS攻击的常用方法总结

- 5rtsp、rtmp、m3u8、flv、mkv、3gp、mp4直播流测试地址_flv测试地址

- 6论 $*、$@、"$*"、"$@"的区别

- 7java.lang.UnsatisfiedLinkError: 找不到指定的模块。_exception in thread "main" java.lang.unsatisfiedli

- 8activity的基本介绍(转)_应用入口activity

- 9模型加速之openvino_openvion加速

- 10K8S kubectl命令行常用命令参数_k8s 命令在后台执行参数

Coursera吴恩达机器学习专项课程02:Advanced Learning Algorithms 笔记 Week01

赞

踩

Advanced Learning Algorithms Week 01

笔者在2022年7月份取得这门课的证书,现在(2024年2月25日)才想起来将笔记发布到博客上。

Website: https://www.coursera.org/learn/advanced-learning-algorithms?specialization=machine-learning-introduction

Offered by: DeepLearning.AI and Stanford

课程地址:https://www.coursera.org/learn/machine-learning

本笔记包含字幕,quiz的答案以及作业的代码,仅供个人学习使用,如有侵权,请联系删除。

文章目录

- Advanced Learning Algorithms Week 01

- [01] Neural networks intuition

- Welcome

- Neurons and the brain

- Demand Prediction

- Example: Recognizing Images

- [02] Practice quiz: Neural networks intuition

- [03] Neural network model

- Neural network layer

- More complex neural networks

- Inference: making predictions (forward propagation)

- Lab: Neurons and Layers

- [04] Practice quiz: Neural network model

- [05] TensorFlow implementation

- Inference in Code

- Data in TensorFlow

- Building a neural network

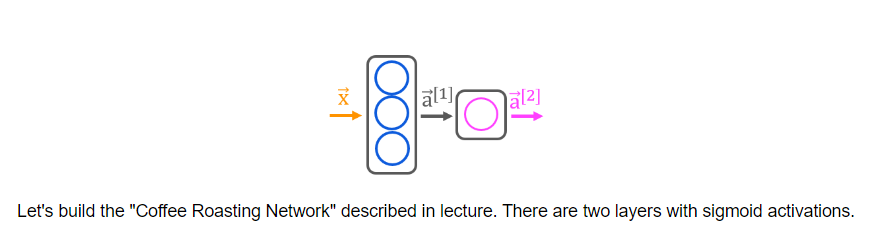

- Lab: Coffee Roasting in Tensorflow

- [06] Practice quiz: TensorFlow implementation

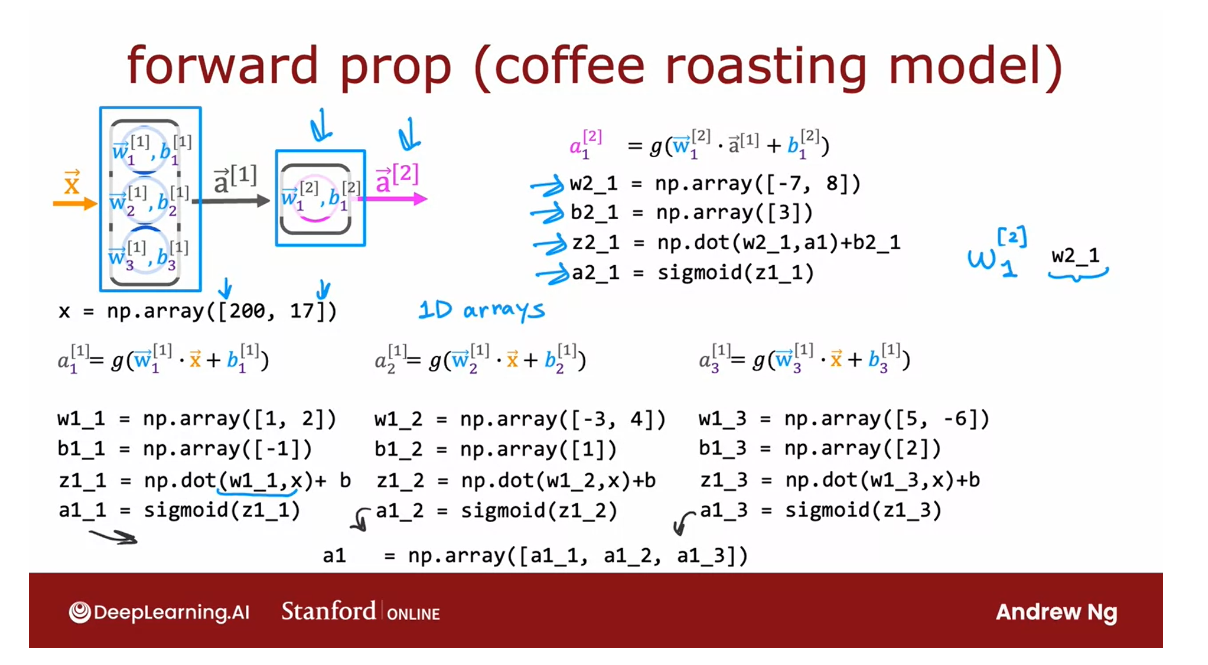

- [07] Neural network implementation in Python

- Forward prop in a single layer

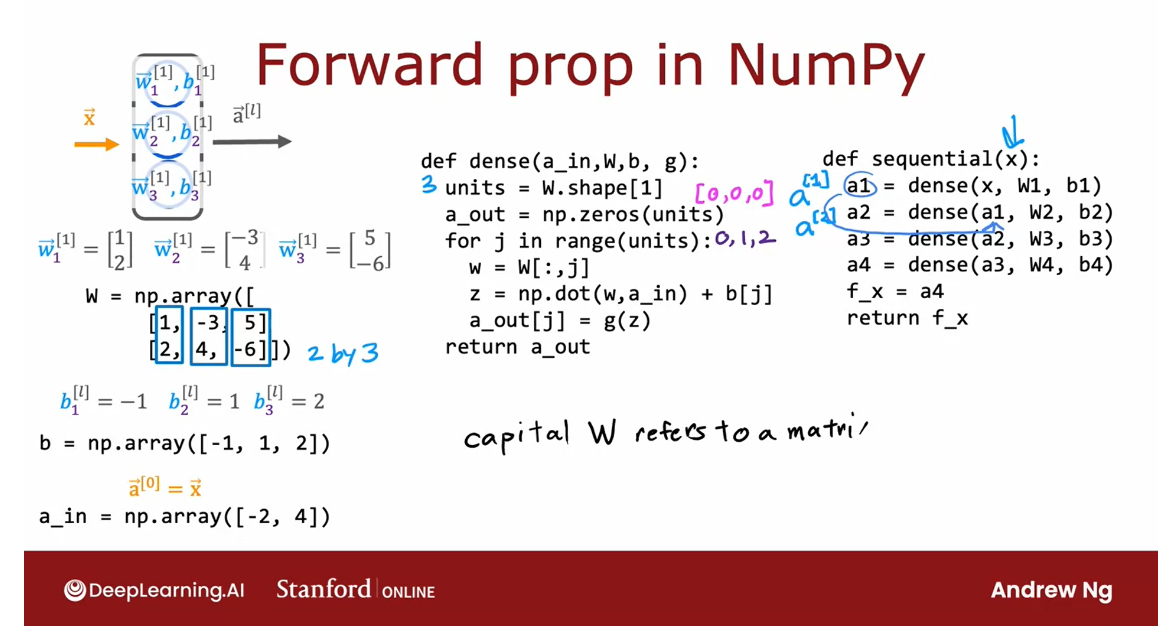

- General implementation of forward propagation

- Lab: CoffeeRoastingNumPy

- [08] Practice quiz: Neural network implementation in Python

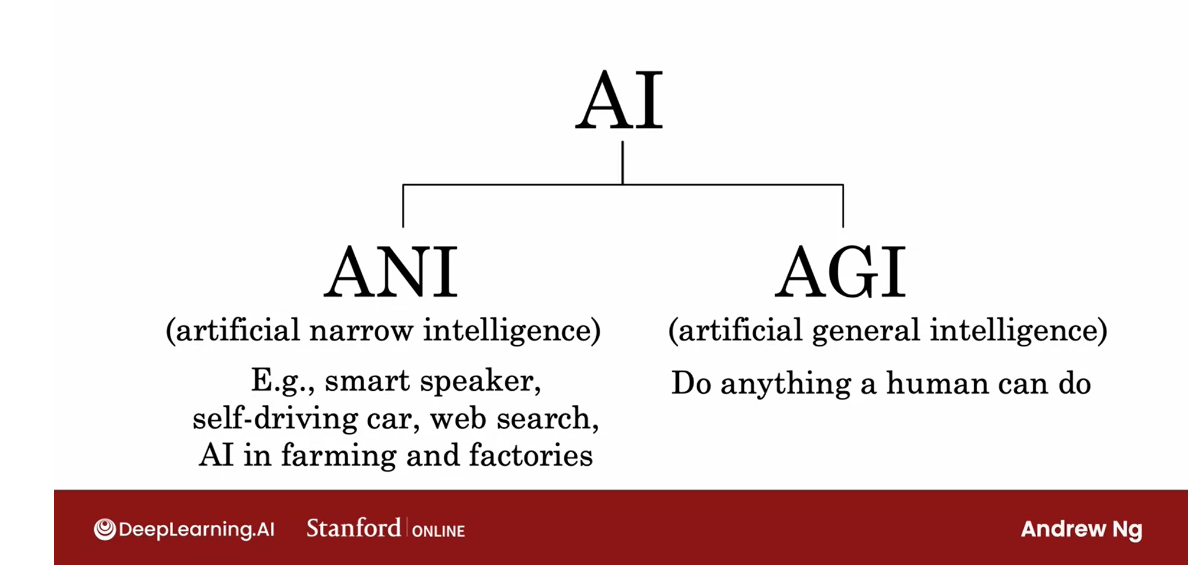

- [09] Speculations on artificial general intelligence (AGI)

- Is there a path to AGI?

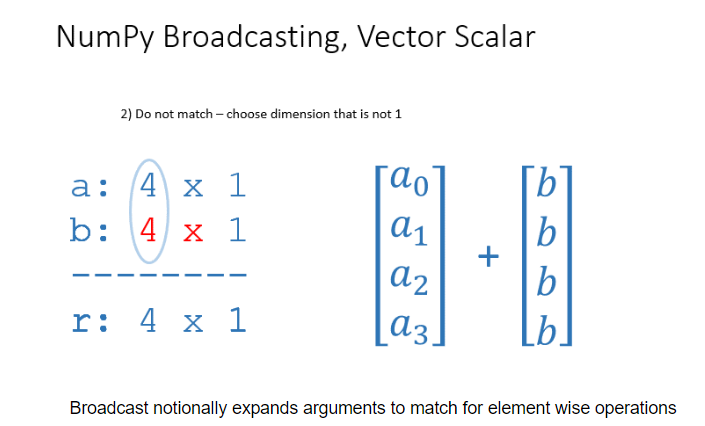

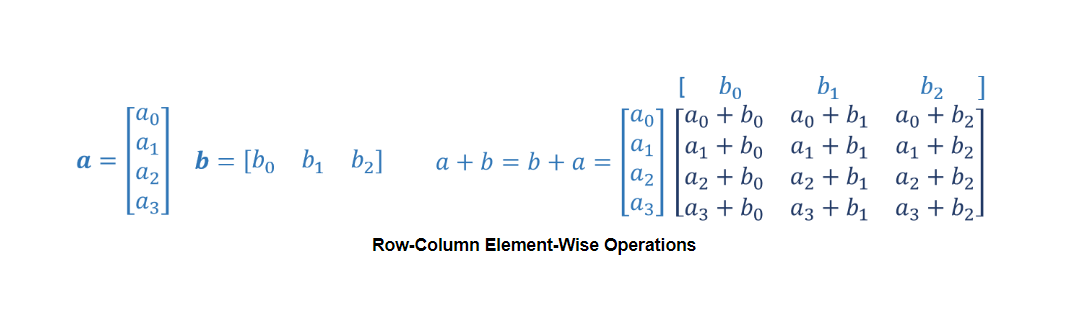

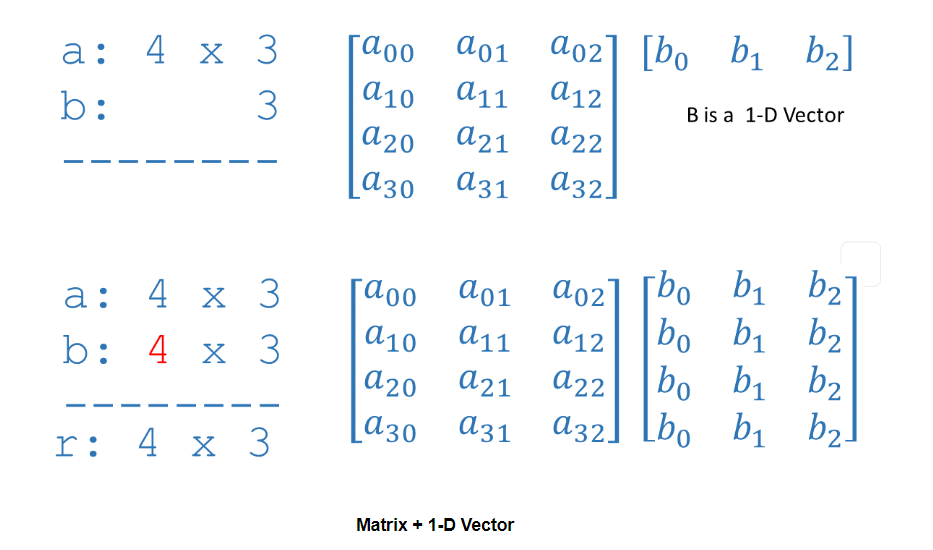

- [10] Vectorization (optional)

- How neural networks are implemented efficiently

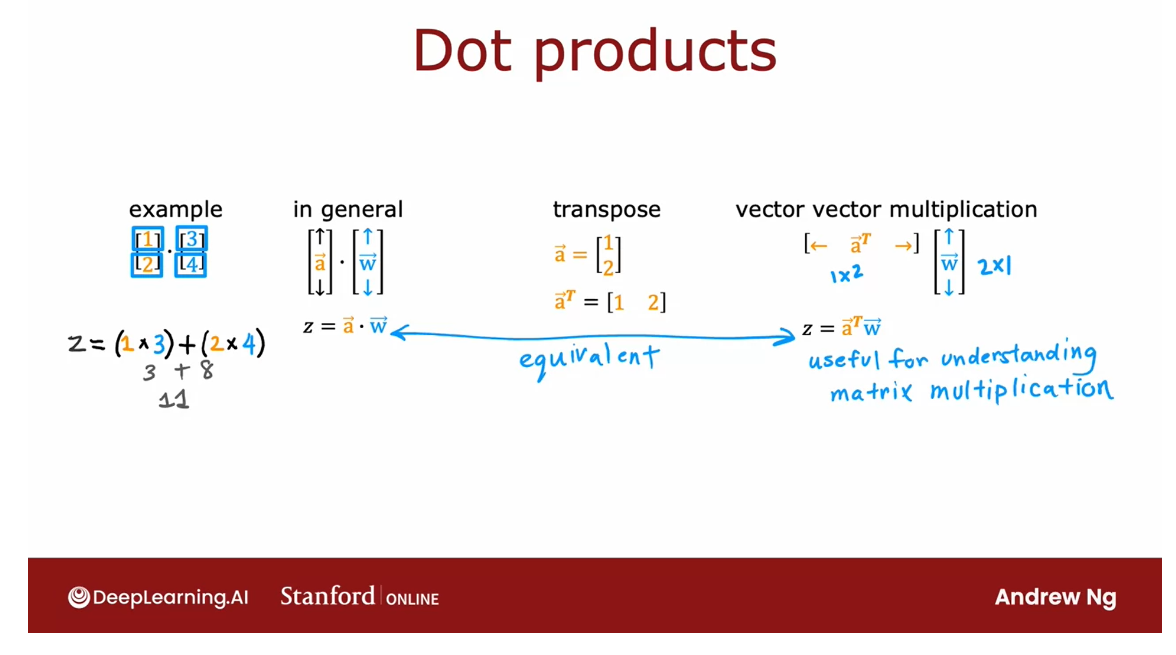

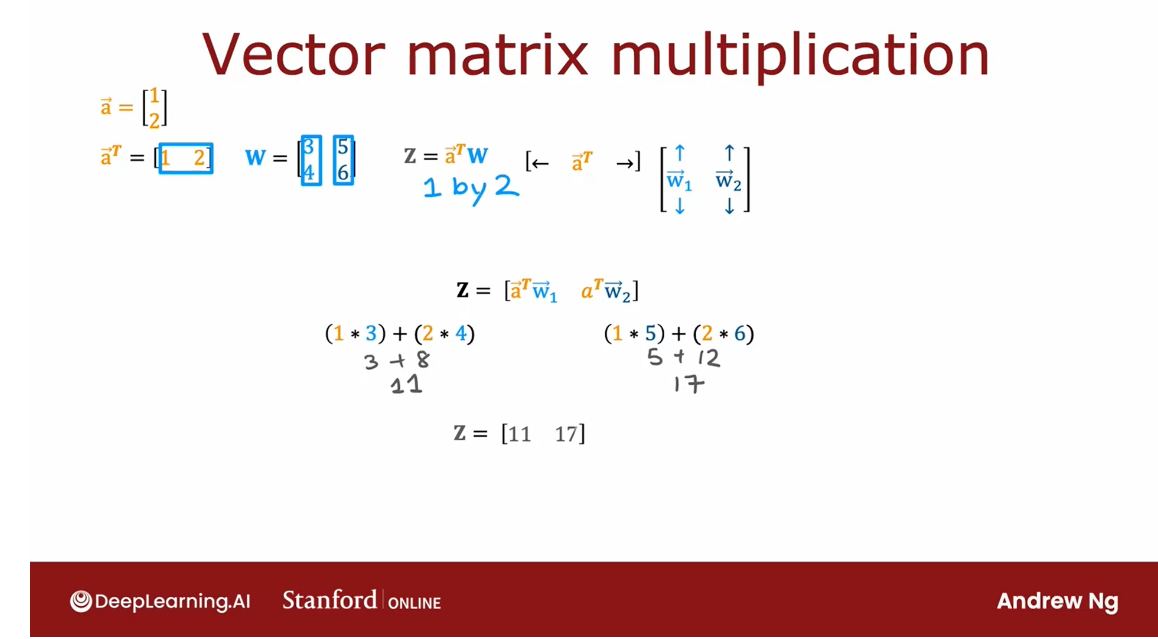

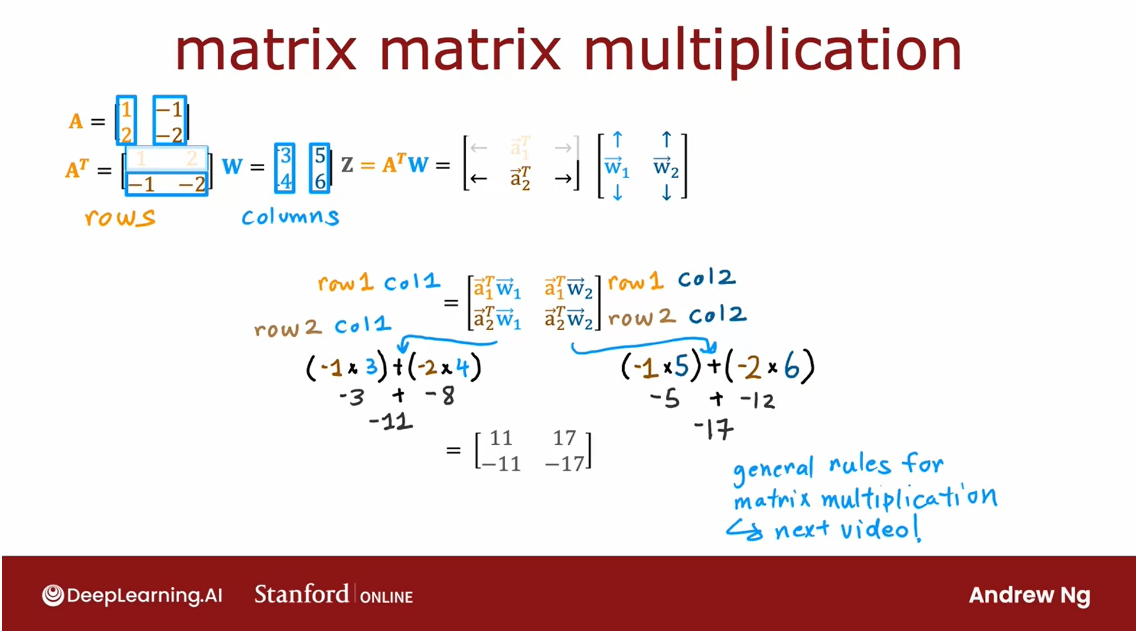

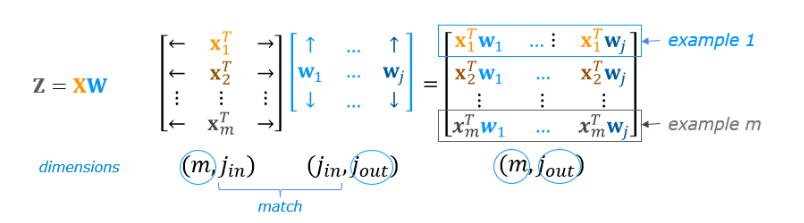

- Matrix multiplication

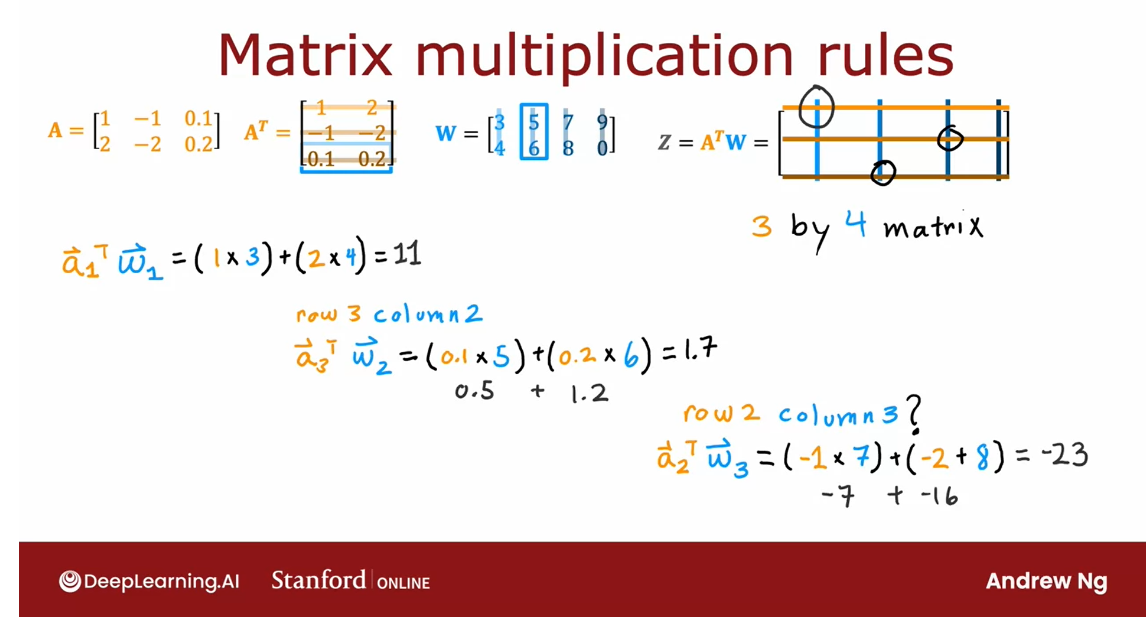

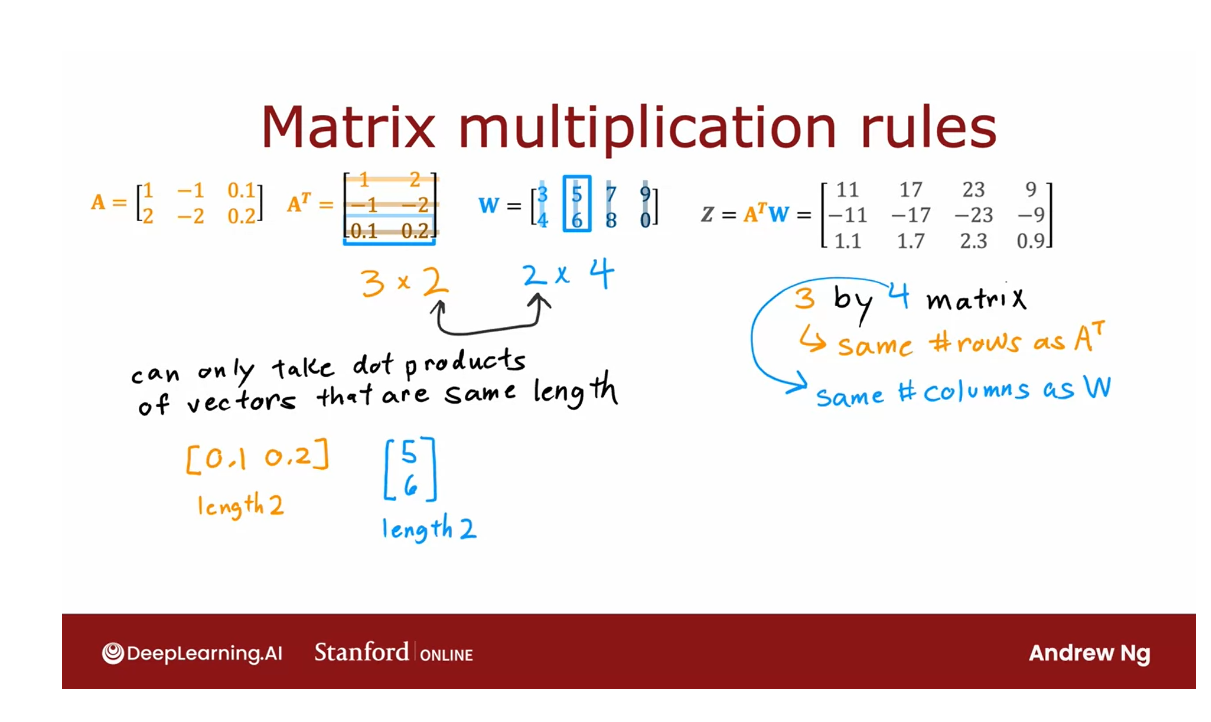

- Matrix multiplication rules

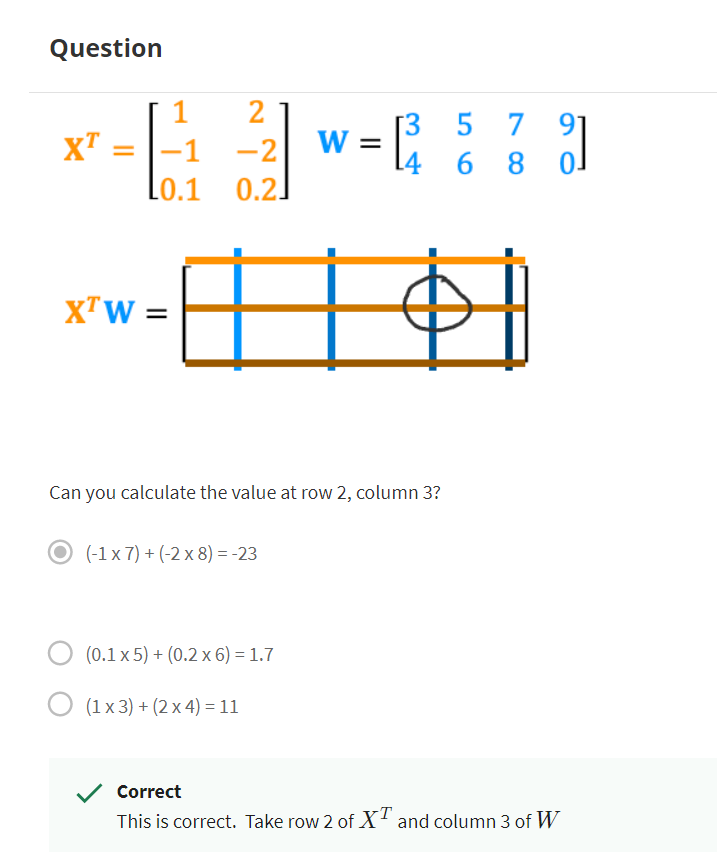

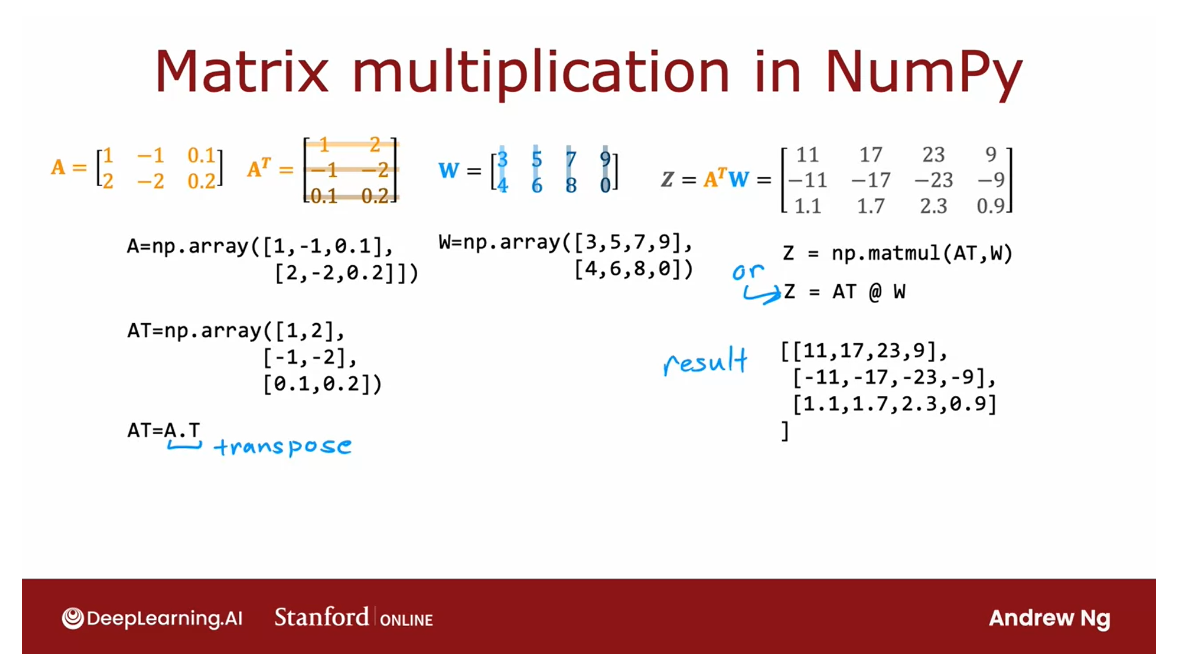

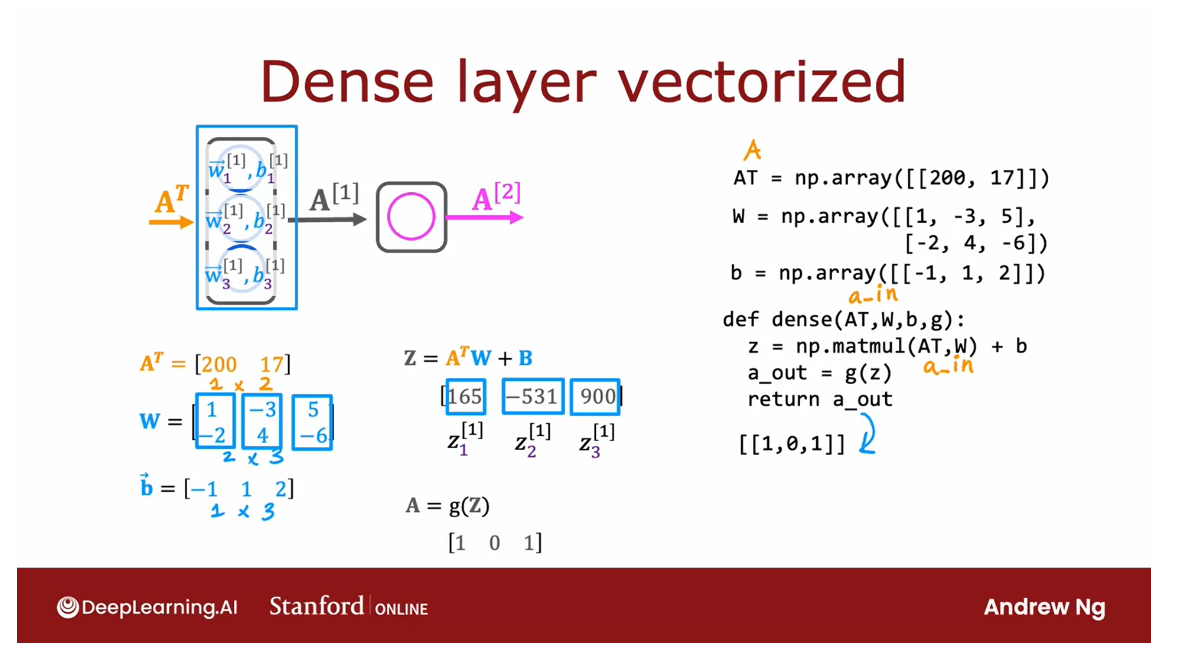

- Matrix multiplication code

- [11] Practice Lab: Neural networks

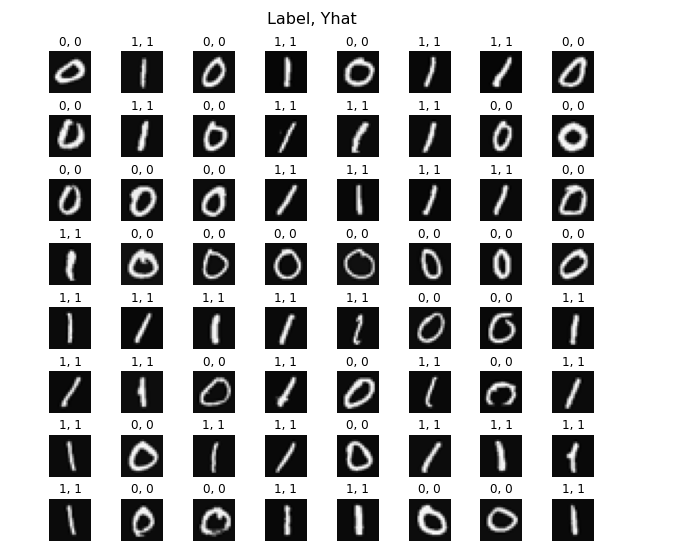

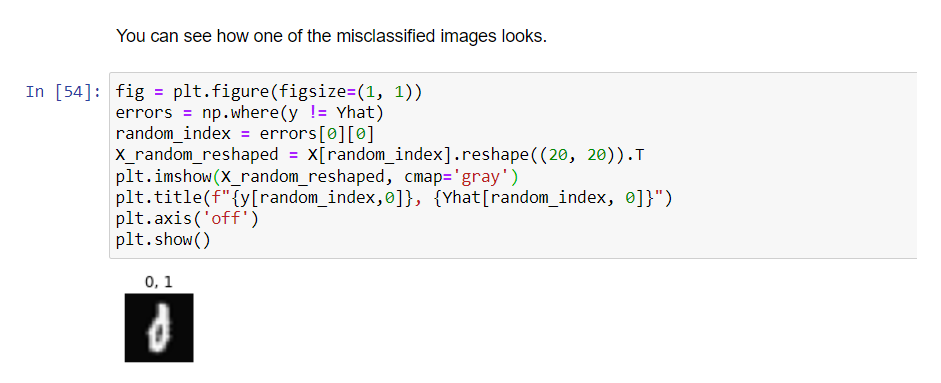

- Programming Assignment: Neural Networks for Binary Classification

- 1 - Packages

- 2 - Neural Networks

- 其他

- 英文发音

This week, you’ll learn about neural networks and how to use it for classification tasks. You’ll use the TensorFlow framework to build a neural network with just a few lines of code. Then, dive deeper by learning how to code up your own neural network in Python, “from scratch”. Optionally, you can learn more about how neural network computations are implemented efficiently use parallel processing (vectorization).

Learning Objectives

- Get familiar with the diagram and components of a neural network

- Understand the concept of a “layer” in a neural network

- Understand how neural networks learn new features.

- Understand how activations are calculated at each layer.

- Learn how a neural network can perform classification on an image.

- Use a framework, TensorFlow, to build a neural network for classification of an image.

- Learn how data goes into and out of a neural network layer in TensorFlow

- Build a neural network in regular Python code (from scratch) to make predictions.

- (Optional): Learn how neural networks use parallel processing (vectorization) to make computations faster.

[01] Neural networks intuition

Welcome

Welcome to Course 2 of this machine learning

specialization. In this course, you’ll learn

about neural networks, also called deep

learning algorithms, as well as decision trees.

These are some of the most powerful and widely

used machine learning algorithms and you’d get to implement them and get

them to work for yourself.

One of the things you see

also in this course is practical advice on how to build machine

learning systems. This part of the material is

quite unique to this course.

When you’re building a practical

machine learning system, there are a lot of

decisions you have to make, such as should you

spend more time collecting data or should you buy a much bigger GPU to build a much bigger

neural network?

Even today, when I visit a leading tech

company and talk to the team working there on a machine learning

application, unfortunately, sometimes I look at what

they’ve been doing for the last six months and go, gee, someone could have

told you maybe even six months ago that that approach wasn’t

going to work that well.

With some of the tips that

you learn in this course, I hope that you’ll

be one or the ones to not waste those six

months, but instead, be able to make more systematic

and better decisions about how to build practical working machine

learning applications.

With that, let’s dive in. In detail, this is what you see in the four weeks

of this course.

In Week 1, we’ll go over

neural networks and how to carry out

inference or prediction.

If you were to go

to the Internet and download the parameters of a neural network that

someone else had trained and whose parameters that

posted on the Internet, then to use that

neural network to make predictions would be

called inference, and you learned how

neural networks work, and how to do inference

in this week.

Next week, you’ll learn how to train your own neural network. In particular, if you have a training set of

labeled examples, X and Y, how do you train the parameters of a neural

network for yourself?

In the third week, we’ll then go into

practical advice for building machine learning

systems and I’ll share with you some tips that I think even highly paid engineers building machine learning

systems very successfully today don’t really always manage

to consistently apply and I think that will help you build systems yourself

efficiently and quickly.

Then in the final

week of this course, you learn about decision trees.

While decision trees don’t get

as much buzz in the media, there’s local less hype about decision trees compared

to neural networks. They are also one of the widely used and very

powerful learning algorithms that I think there’s

a good chance you end up using yourself if you end

up building an application.

With that, let’s jump into neural networks and we’re going to start by taking a quick

look at how the human brain, that is how the

biological brain works. Let’s go on to the next video.

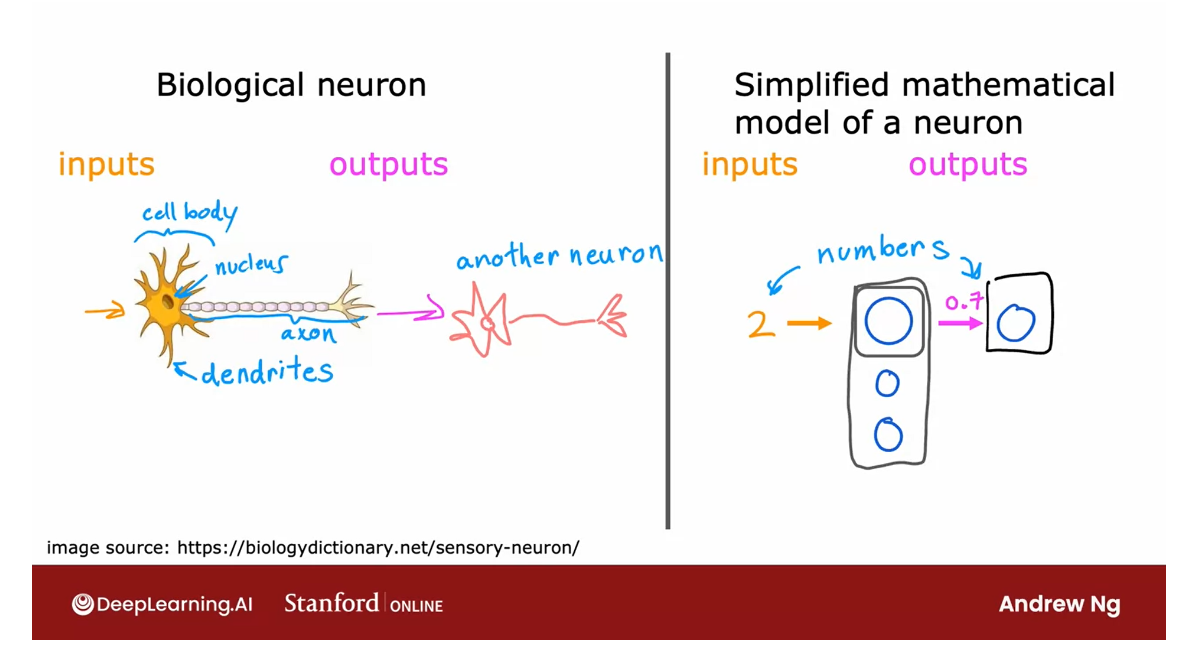

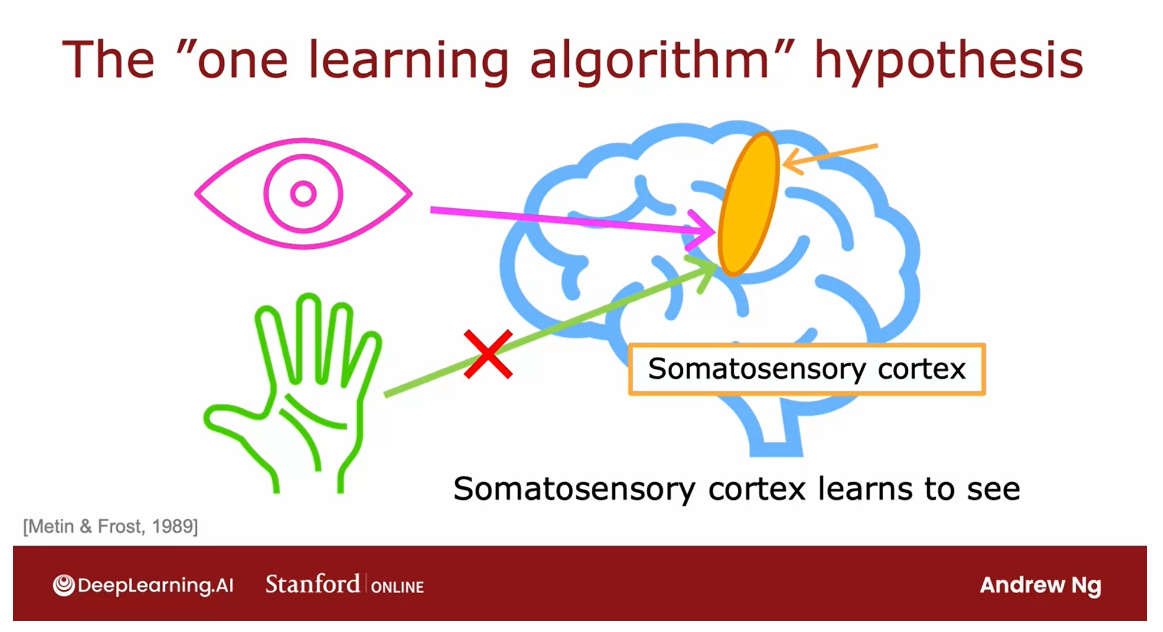

Neurons and the brain

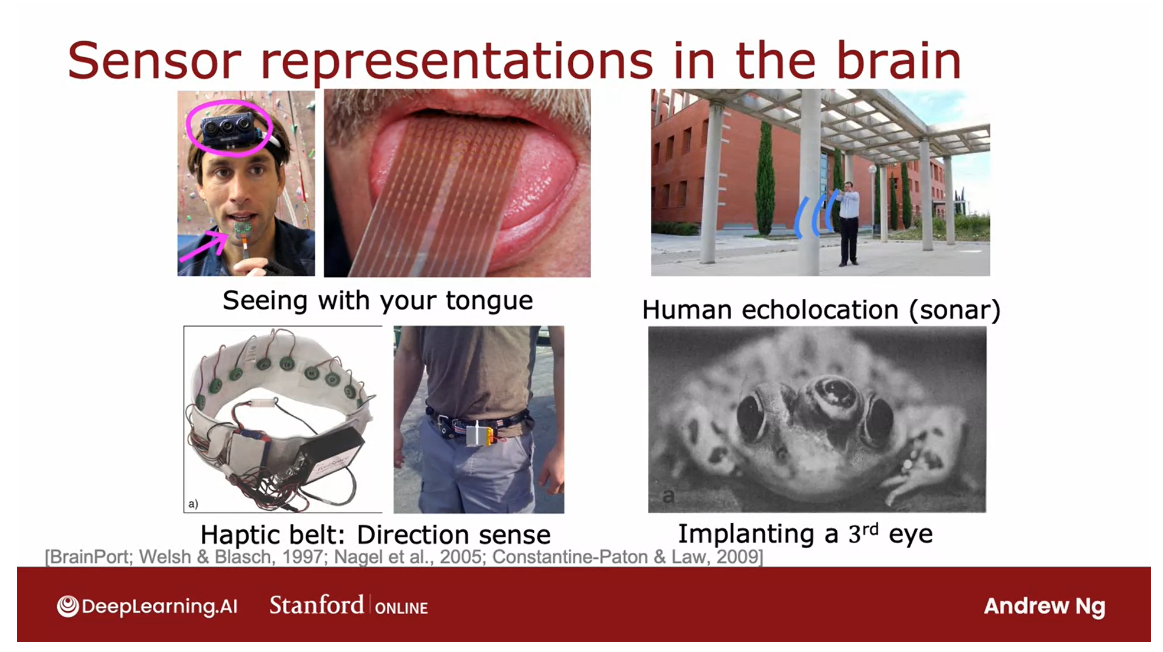

Original motivation: mimic how the human brain or how the biological brain learns and thinks

When neural networks were first invented many decades ago, the original motivation was to write software that could mimic how the human brain or how the biological brain

learns and thinks.

Even though today,

neural networks, sometimes also called

artificial neural networks, have become very

different than how any of us might think about how the brain actually

works and learns.

Some of the biological

motivations still remain in the way we think about artificial neural networks or computer neural

networks today.

Let’s start by taking a

look at how the brain works and how that relates

to neural networks.

The human brain, or

maybe more generally, the biological brain demonstrates

a higher level or more capable level of

intelligence and anything else would be

on the bill so far. So neural networks

has started with the motivation of

trying to build software to mimic the brain.

Work in neural networks had

started back in the 1950s, and then it fell out

of favor for a while.

Then in the 1980s

and early 1990s, they gained in popularity

again and showed tremendous traction

in some applications like handwritten

digit recognition, which were used

even backed then to read postal codes for routing mail and for reading dollar figures in

handwritten checks.

But then it fell out of favor

again in the late 1990s.

It was from about

2005 that it enjoyed a resurgence and also became re-branded little bit

with deep learning.

One of the things that

surprised me back then was deep learning and neural networks meant

very similar things.

But maybe under appreciated at the time that the

term deep learning, just sounds much better because it’s deep

and this learning. So that turned out

to be the brand that took off in the last decade

or decade and a half.

Since then, neural networks have revolutionized application

area after application area.

I think the first

application area that modern neural

networks or deep learning, had a huge impact on was

probably speech recognition, where we started to see much better speech

recognition systems due to modern deep learning

and authors such as [inaudible] and Geoff Hinton

were instrumental to this, and then it started to make

inroads into computer vision.

Sometimes people still speak of the ImageNet moments in 2012, and that was maybe a bigger

splash where then [inaudible] draw their imagination and had a big impact on

computer vision.

Then the next few years, it made us inroads into texts or into natural

language processing, and so on and so forth.

Now, neural networks are

used in everything from climate change to medical

imaging to online advertising.

So proudly, recommendations

and really lots of application areas

of machine learning now use neural networks.

Even though today’s

neural networks have almost nothing to do with

how the brain learns, there was the early

motivation of trying to build software

to mimic the brain.

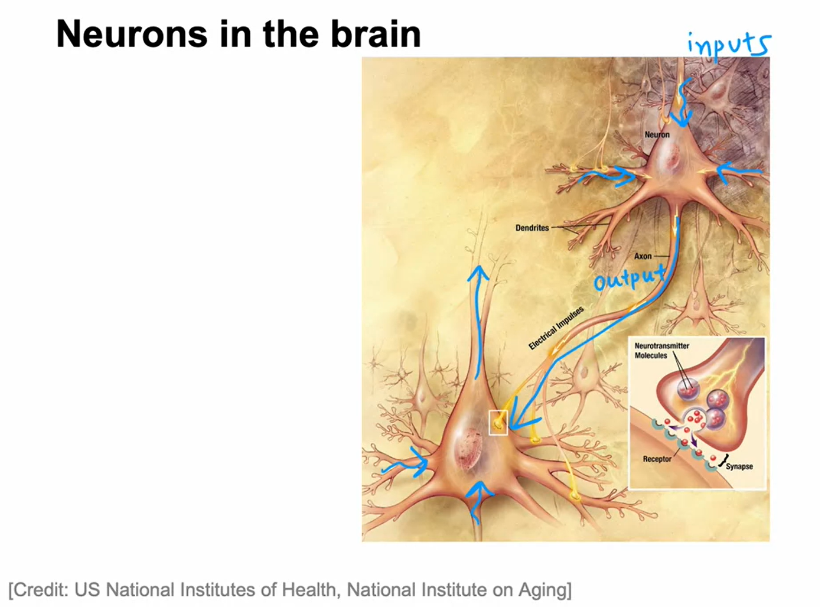

So how does the brain work?

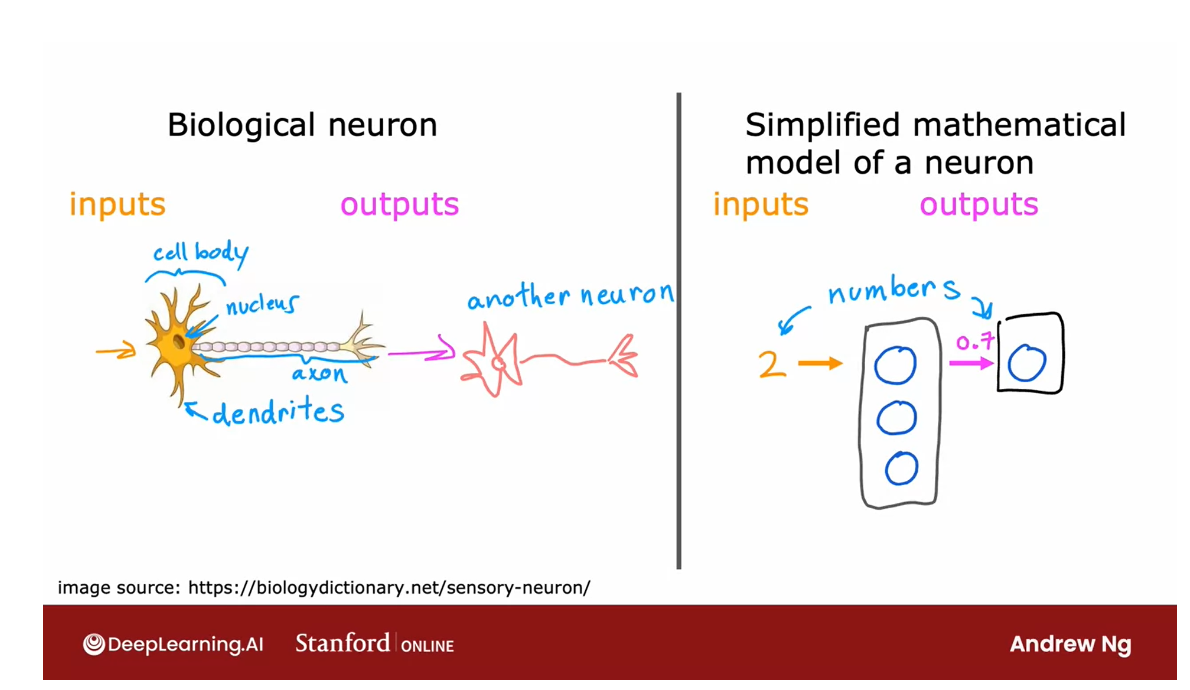

Here’s a diagram illustrating what neurons in a

brain look like.All of human thought is from neurons like this in

your brain and mine, sending electrical impulses and sometimes forming new

connections of other neurons.

The stuff of which human thought is made

Given a neuron like this one, it has a number of

inputs where it receives electrical impulses

from other neurons, and then this neuron that I’ve circled carries out

some computations and will then send this outputs to other neurons by this

electrical impulses, and this upper neuron’s

output in turn becomes the input to

this neuron down below, which again aggregates

inputs from multiple other neurons to then

maybe send its own output, to yet other neurons, and this is the stuff of

which human thought is made.

Here’s a simplified diagram

of a biological neuron.

Biological neuron:

nucleus of the neuron: 神经元核

dendrites: 树突 ˈdendrīt

axon:轴突 ˈakˌsän

A neuron comprises a cell

body shown here on the left, and if you have taken

a course in biology, you may recognize this to be

the nucleus of the neuron.

As we saw on the previous slide, the neuron has different inputs. In a biological neuron, the input wires are

called the dendrites, and it then occasionally

sends electrical impulses to other neurons via

the output wire, which is called the axon. Don’t worry about these

biological terms. If you saw them in

a biology class, you may remember them, but you don’t really need to memorize any of these terms for the purpose of building

artificial neural networks.

But this biological

neuron may then send electrical impulses that become the input to another neuron.

So the artificial

neural network uses a very simplified

Mathematical model of what a biological

neuron does.

I’m going to draw

a little circle here to denote a single neuron.

What a neuron does is

it takes some inputs, one or more inputs, which are just numbers. It does some computation and it outputs

some other number, which then could be an

input to a second neuron, shown here on the right.

Neurons in neural network: input a few numbers, carry out some computation, and output some other numbers.

When you’re building an

artificial neural network or deep learning algorithm, rather than building

one neuron at a time, you often want to simulate many such

neurons at the same time. In this diagram, I’m

drawing three neurons.What these neurons do collectively is

input a few numbers, carry out some computation, and output some other numbers.

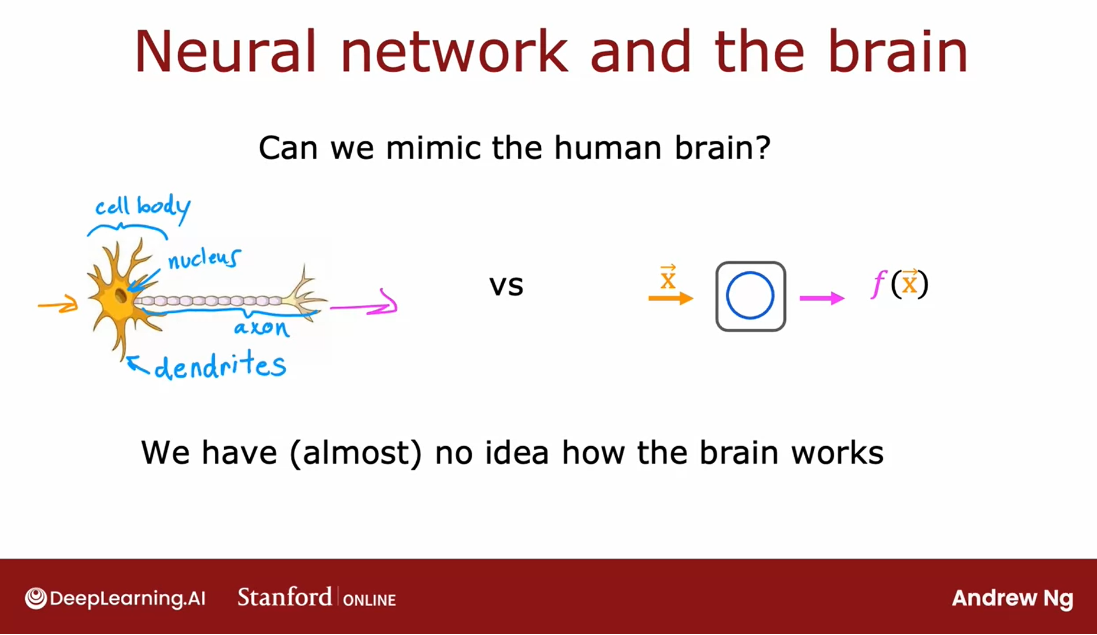

Now, at this point, I’d like to give one big caveat, which is that even though I made a loose analogy between biological neurons and

artificial neurons, I think that today we have almost no idea how the

human brain works.

In fact, every few years, neuroscientists make some

fundamental breakthrough about how the brain works. I think we’ll continue to do so for the foreseeable future.

That to me is a

sign that there are many breakthroughs

that are yet to be discovered about how the

brain actually works, and thus attempts to blindly mimic what we know of

the human brain today, which is frankly very little, probably won’t get us that far toward building

raw intelligence.

Certainly not with

our current level of knowledge in neuroscience. Having said that, even with these extremely simplified

models of a neuron, which we’ll talk about,

we’ll be able to build really powerful deep

learning algorithms.

So as you go deeper into neural networks and

into deep learning, even though the origins were

biologically motivated, don’t take the biological

motivation too seriously.

In fact, those of us that do research in deep learning have shifted away from looking to biological motivation that much. But instead, they’re just using engineering principles to figure out how to build algorithms

that are more effective.

But I think it might still

be fun to speculate and think about how

biological neurons work every now and then.

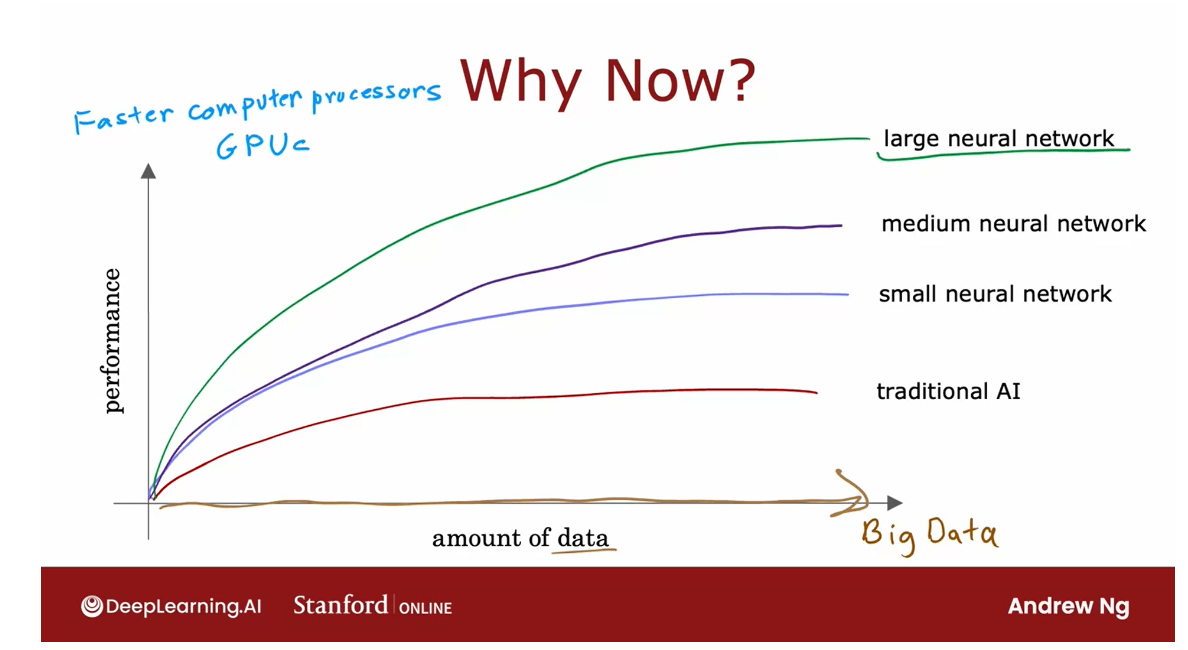

Why is it that only in the last handful of years that neural networks have really taken off?

The ideas of neural

networks have been around for many decades. A few people have asked me, “Hey Andrew, why now? Why is it that only

in the last handful of years that neural networks

have really taken off?”

This is a picture I draw for them when I’m

asked that question and that maybe you could draw for others as well if they

ask you that question.

Draw a picture:

- horizontal axis: the amount of data

- vertical axis: the performance (or the accuracy) of a learning algorithm

Let me plot on the

horizontal axis the amount of data you

have for a problem, and on the vertical axis, the performance or

the accuracy of a learning algorithm

applied to that problem.

In many application areas, the amount of digital data has exploded.

Over the last couple of decades, with the rise of the Internet, the rise of mobile phones, the digitalization

of our society, the amount of data

we have for a lot of applications has steadily

marched to the right.

Lot of records that

use P on paper, such as if you order something rather than it being

on a piece of paper, there’s much more likely

to be a digital record. Your health record,

if you see a doctor, is much more likely

to be digital now compared to on

pieces of paper.

So in many application areas, the amount of digital

data has exploded.

Traditional learning algorithm: won’t be able to scale with the amount of data

Meaning: Even if you fed those algorithms more data, it was very difficult to get the performance to keep on going up.

What we saw was with traditional machine-learning

algorithms, such as logistic regression

and linear regression, even as you fed those

algorithms more data, it was very difficult to get the performance to

keep on going up.

So it was as if the traditional learning

algorithms like linear regression and

logistic regression, they just weren’t able to scale with the amount of data

we could now feed it and they weren’t able to

take effective advantage of all this data we had for

different applications.

Train neural network with different size

What AI researchers

started to observe was that if you were to train a small neural network

on this dataset, then the performance

maybe looks like this.

If you were to train a

medium-sized neural network, meaning one with

more neurons in it, its performance may

look like that.

If you were to train a

very large neural network, meaning one with a lot of

these artificial neurons, then for some applications the performance will

just keep on going up.

So this meant two things, it meant that for

a certain class of applications where you

do have a lot of data, sometimes you hear the

term big data toss around, if you’re able to train a very large neural

network to take advantage of that huge amount

of data you have, then you could attain

performance on anything ranging from speech recognition,

to image recognition, to natural language processing

applications and many more, they just were not possible with earlier generations of

learning algorithms.

This caused deep learning

algorithms to take off, and this too is why faster

computer processes, including the rise of GPUs

or graphics processor units.

This is hardware

originally designed to generate nice-looking

computer graphics, but turned out to be really powerful for deep

learning as well.That was also a major force in allowing deep

learning algorithms to become what it is today.

That’s how neural

networks got started, as well as why they took off so quickly in the

last several years. Let’s now dive more deeply into the details of how neural

network actually works. Please go on to the next video.

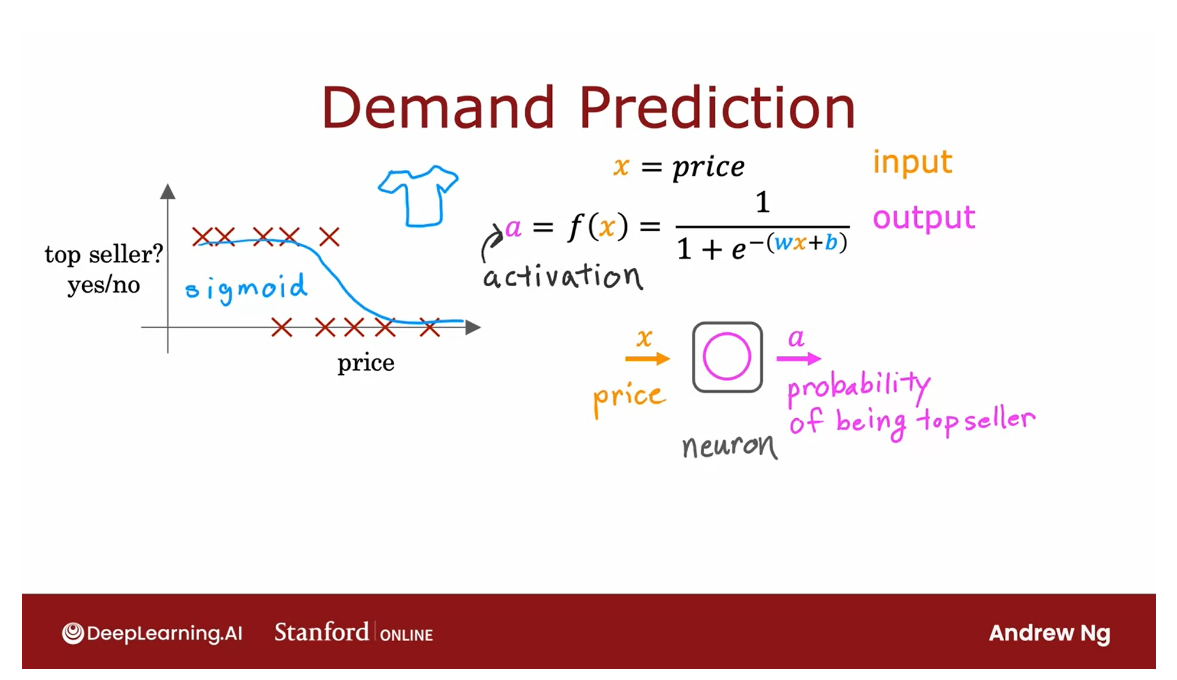

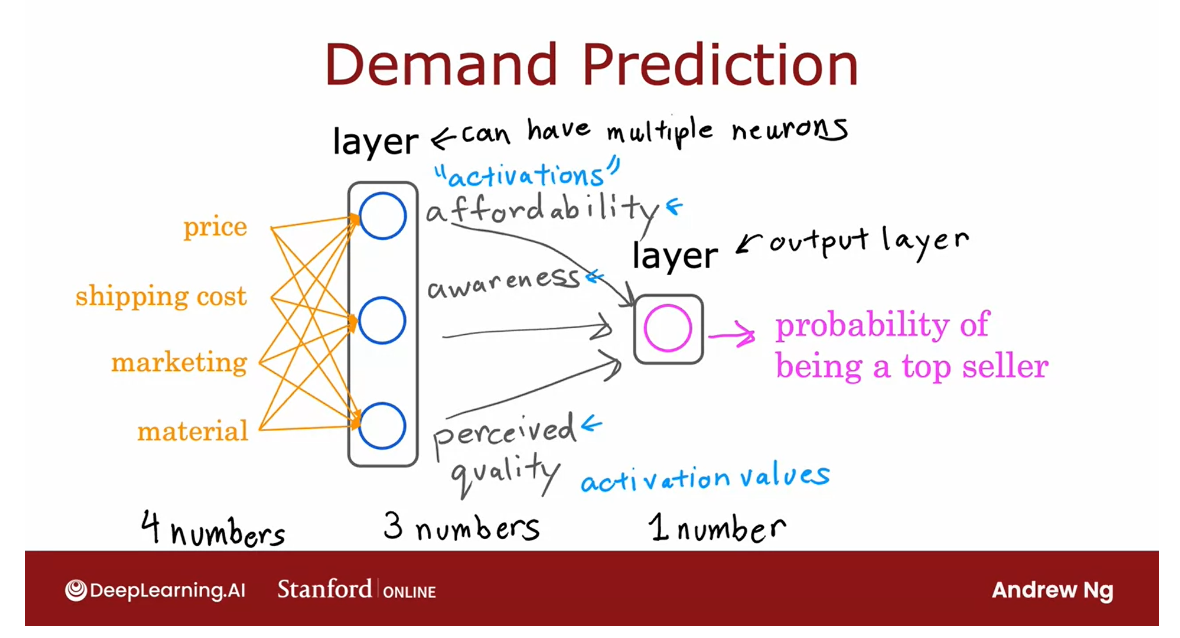

Demand Prediction

To illustrate how

neural networks work, let’s start with an example.We’ll use an example from demand prediction in which you look at the product

and try to predict, will this product be

a top seller or not? Let’s take a look.

Predict a product is a top seller or not

In this example, you’re

selling T-shirts and you would like to know if a particular T-shirt

will be a top seller, yes or no, and you

have collected data of different t-shirts that

were sold at different prices, as well as which ones

became a top seller.

Used by retailers today

This type of application is used by retailers today in order to plan better inventory levels as well as marketing campaigns. If you know what’s likely to be a top seller, you would plan, for example, to just purchase more of that

stock in advance.

In this example,

the input feature x is the price of the T-shirt, and so that’s the input to

the learning algorithm.

If you apply logistic

regression to fit a sigmoid function to the data that might

look like that then the outputs of your prediction

might look like this, 1/1 plus e to the

negative wx plus b.

Previously, we had

written this as f of x as the output of

the learning algorithm.

In order to set us up to

build a neural network, I’m going to switch the

terminology a little bit and use the alphabet a to denote the output of this logistic

regression algorithm.

The term a stands

for activation, and it’s actually a

term from neuroscience, and it refers to how

much a neuron is sending a high output to other

neurons downstream from it.

It turns out that this logistic regression units or this little logistic

regression algorithm, can be thought of as a very simplified model of a

single neuron in the brain.

Where what the neuron does is it takes us

input the price x, and then it computes

this formula on top, and it outputs the number a, which is computed

by this formula, and it outputs the probability of this T-shirt

being a top seller.

Another way to think

of a neuron is as a tiny little computer whose only job is to input

one number or a few numbers, such as a price, and then

to output one number or maybe a few other

numbers which in this case is the probability of the T-shirt

being a top seller.

As I alluded in the

previous video, a logistic regression

algorithm is much simpler than what any biological neuron in your

brain or mine does. Which is why the artificial

neural network is such a vastly oversimplified

model of the human brain.

Even though in

practice, as you know, deep learning algorithms

do work very well.

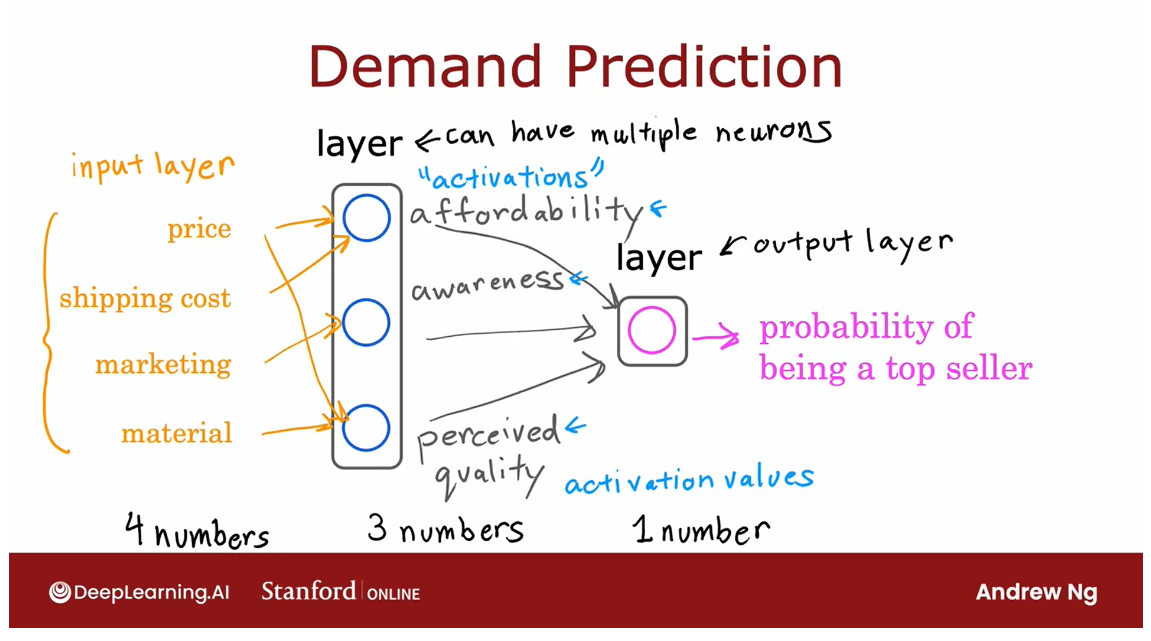

Given this description

of a single neuron, building a neural network now it just requires taking a bunch of these neurons and wiring them together or putting

them together.

Let’s now look at a

more complex example of demand prediction.In this example, we’re

going to have four features to predict whether or not

a T-shirt is a top seller. The features are the

price of the T-shirt, the shipping costs, the amounts of marketing of that

particular T-shirt, as well as the material quality, is this a high-quality, thick cotton versus maybe

a lower quality material?

Now, you might suspect

that whether or not a T-shirt becomes a top seller actually depends

on a few factors.

First, one is the

affordability of this T-shirt.

Second is, what’s the degree of awareness of this T-shirt

that potential buyers have?Third is perceived quality to bias or potential bias saying this is a

high-quality T-shirt.

What I’m going to do is create

one artificial neuron to try to estimate the

probability that this T-shirt is perceive

as highly affordable.

Affordability is mainly a

function of price and shipping costs because the

total amount of the pay is some of the price

plus the shipping costs.We’re going to use a

little neuron here, a logistic regression unit

to input price and shipping costs and predict do people

think this is affordable?

Second, I’m going to create another artificial

neuron here to estimate, is there high awareness of this? Awareness in this case is mainly a function of the

marketing of the T-shirt.

Finally, going to create

another neuron to estimate do people perceive

this to be of high quality, and that may mainly

be a function of the price of the T-shirt and

of the material quality.

Price is a factor here because fortunately

or unfortunately, if there’s a very

high priced T-shirt, people will sometimes perceive that to be of high

quality because it is very expensive than maybe people think it’s going

to be of high-quality.

Given these estimates of

affordability, awareness, and perceived quality we

then wire the outputs of these three neurons to another

neuron here on the right, that then there’s another

logistic regression unit.

That finally inputs

those three numbers and outputs the probability of this t-shirt being a top seller. In the terminology

of neural networks, we’re going to group these three neurons together

into what’s called a layer.

A layer is a grouping

of neurons which takes us input the same

or similar features, and that in turn outputs

a few numbers together.

These three neurons on the left form one layer which is why I drew them

on top of each other, and this single neuron on

the right is also one layer. The layer on the left

has three neurons, so a layer can have multiple

neurons or it can also have a single neuron as in the case of this

layer on the right.

This layer on the

right is also called the output layer

because the outputs of this final neuron is the output probability predicted

by the neural network.

Activation: refer to the degree that the biological neuron is sending a high output value (or sending many electronical impulses) to other neurons to the downstream from it.

In the terminology of neural networks we’re

also going to call affordability

awareness and perceive quality to be activations.

The term activations comes

from biological neurons, and it refers to the degree that the biological

neuron is sending a high output value or sending many electrical impulses to other neurons to the

downstream from it.

These numbers on

affordability, awareness, and perceived quality are the activations of these

three neurons in this layer, and also this output

probability is the activation of this neuron

shown here on the right.

This particular neural network therefore carries out

computations as follows.

It inputs four numbers then this layer of the

neural network uses those four numbers to compute the new numbers also

called activation values.

Then the final layer, the output layer of the

neural network used those three numbers to

compute one number.

In a neural network this list of four numbers is also

called the input layer, and that’s just a

list of four numbers. Now, there’s one simplification I’d like make to

this neural network.

The way I’ve

described it so far, we had to go through the

neurons one at a time and decide what inputs it would

take from the previous layer.

For example, we said

affordability is a function of just price and shipping

costs and awareness is a function of just

marketing and so on, but if you’re building

a large neural network it’d be a lot of work

to go through and manually decide which neurons should take which

features as inputs.

Difficult to go through and manually decide which neurons should take which features as inputs.

In practice: layer in the middle will have access to every feature, to every value from the previous layer.

The way a neural network

is implemented in practice each neuron

in a certain layer;

say this layer in the middle, will have access

to every feature, to every value from

the previous layer, from the input layer which is

why I’m now drawing arrows from every input

feature to every one of these neurons shown

here in the middle.

You can imagine that if

you’re trying to predict affordability and it knows what’s the price shipping

cost marketing and material, may be you’ll learn to ignore marketing and material

and just figure out through setting the

parameters appropriately to only focus on the subset

of features that are most relevant to affordability.

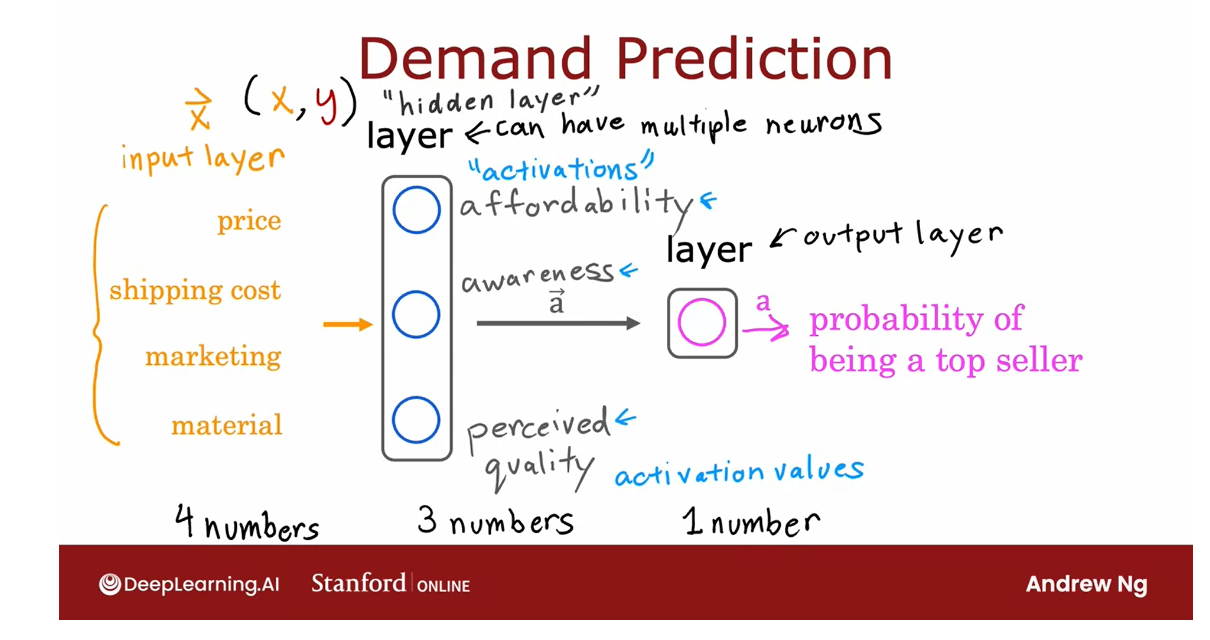

Input features comprise feature vector

To further simplify

the notation and the description of this

neural network I’m going to take these four

input features and write them as a vector x, and we’re going to view the

neural network as having four features that comprise

this feature vector x.

This feature vector is

fed to this layer in the middle which then computes

three activation values. That is these numbers and these three activation values in turn becomes

another vector which is fed to this final

output layer that finally outputs the probability of this t-shirt to

being a top seller. That’s all a neural network is.

It has a few layers

where each layer inputs a vector and outputs

another vector of numbers.

For example, this layer

in the middle inputs four numbers x and outputs three numbers

corresponding to affordability, awareness, and

perceived quality.

To add a little bit

more terminology, you’ve seen that this

layer is called the output layer and this layer is

called the input layer. To give the layer in the

middle a name as well, this layer in the middle

is called a hidden layer. I know that this is

maybe not the best or the most intuitive name but that terminology comes from that’s when you have

a training set.

In a training set, you get to observe both x and y. Your data set tells you

what is x and what is y, and so you get data that tells you what are the correct inputs

and the correct outputs.

But your dataset

doesn’t tell you what are the correct values

for affordability, awareness, and

perceived quality. The correct values

for those are hidden.You don’t see them

in the training set, which is why this layer in the middle is called

a hidden layer.

I’d like to share with you

another way of thinking about neural networks

that I’ve found useful for building my

intuition about it.

Cover up the left half of the diagram

Just let me cover up the

left half of this diagram, and see what we’re left with.

What you see here

is that there is a logistic regression

algorithm or logistic regression unit

that is taking as input, affordability, awareness, and perceived

quality of a t-shirt, and using these three

features to estimate the probability of the

t-shirt being a top seller. This is just

logistic regression.

But the cool thing about this is rather than using

the original features, price, shipping cost,

marketing, and so on, is using maybe better set of features,

affordability, awareness, and perceived quality,

that are hopefully more predictive of whether or not this t-shirt will

be a top seller.

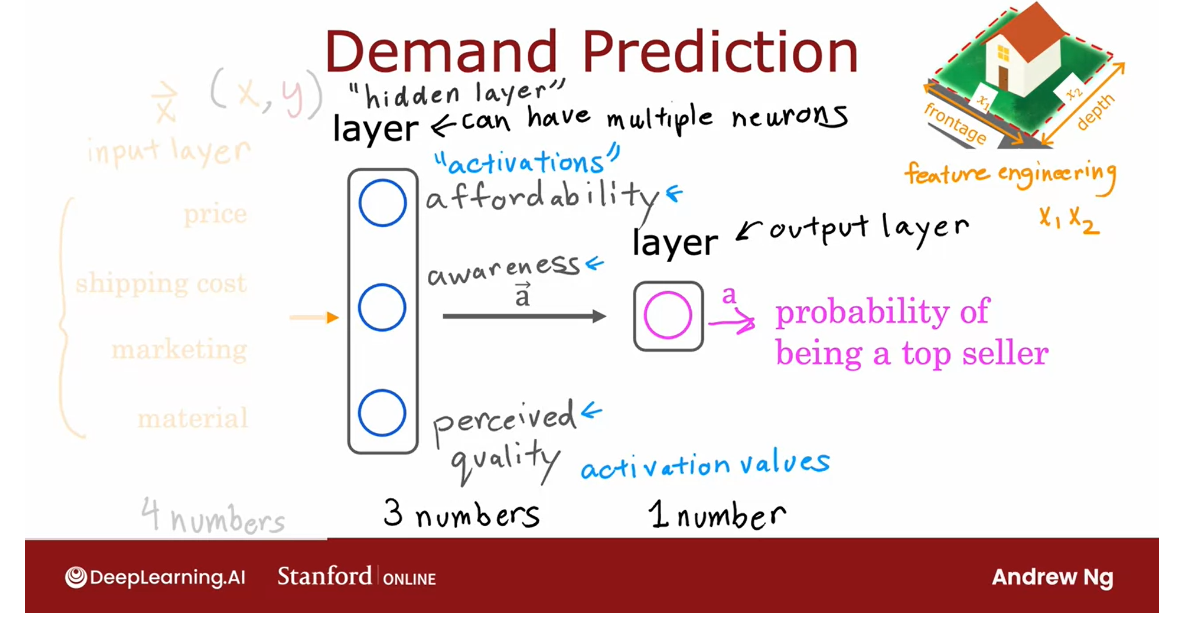

One way to think of this neural network is logistic regression: learn its own features

One way to think of

this neural network is, just logistic regression. But as a version of

logistic regression, they can learn its

own features that makes it easier to make

accurate predictions.

In fact, you might remember

from the previous week, this housing example

where we said that if you want to predict

the price of the house, you might take the frontage or the width of lots

and multiply that by the depth of a

lot to construct a more complex feature, x_1 times x_2, which was the size of the lawn.

There we were doing manual

feature engineering where we had to look

at the features x_1 and x_2 and decide by

hand how to combine them together to come up

with better features.

What the neural network

does is instead of you needing to manually

engineer the features, it can learn, as

you’ll see later, its on features to make the learning problem

easier for itself. This is what makes neural networks one of the most powerful learning

algorithms in the world today.

To summarize, a neural network, does this, the input layer

has a vector of features, four numbers in this example, it is input to the hidden layer, which outputs three numbers.

I’m going to use a

vector to denote this vector of activations that this hidden layer outputs.Then the output layer

takes its input to three numbers and

outputs one number, which would be the

final activation, or the final prediction

of the neural network.

Property of neural network: don’t need to go in to explicitly decide what features the NN should compute

One note, even

though I previously described this neural network

as computing affordability, awareness, and

perceived quality, one of the really nice

properties of a neural network is when you train it from data, you don’t need to go in to explicitly decide

what other features, such as affordability and so on, that the neural network should compute instead or

figure out all by itself what are the features it wants to use in

this hidden layer.

That’s what makes it such a

powerful learning algorithm.You’ve seen here one example

of a neural network and this neural network has a single layer that

is a hidden layer.

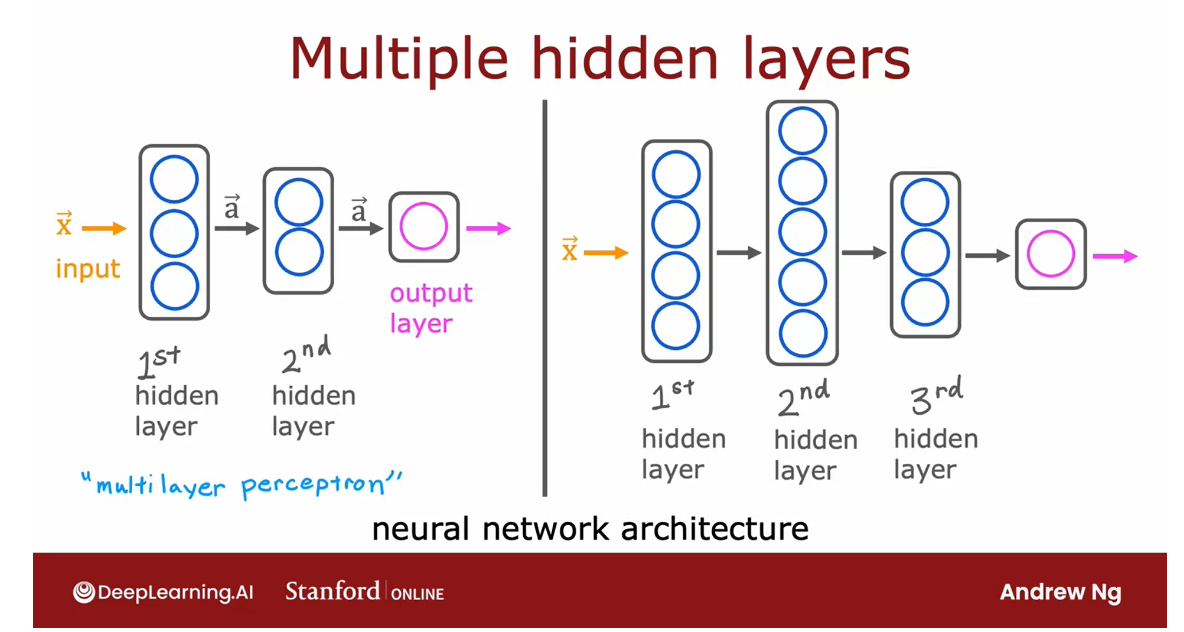

Let’s take a look at

some other examples of neural networks,

specifically, examples with more

than one hidden layer. Here’s an example.

This neural network has an input feature vector X that is fed to one hidden layer. I’m going to call this

the first hidden layer.If this hidden layer

has three neurons, it will then output a vector

of three activation values.

These three numbers can then be input to the second

hidden layer.

If the second hidden layer has two neurons to logistic units, then this second

hidden there will output another vector of now two activation values

that maybe goes to the output layer that then outputs the neural

network’s final prediction.

Here’s another example. Here’s a neural network that it’s input goes to

the first hidden layer, the output of the

first hidden layer goes to the second hidden layer, goes to the third hidden layer, and then finally to

the output layer.

The architecture of the neural network: how many hidden layers and how many neurons per hidden layer is.

When you’re building

your own neural network, one of the decisions

you need to make is how many hidden layers do you want and how many neurons do you want each hidden

layer to have.

This question of how

many hidden layers and how many neurons

per hidden layer is a question of the architecture

of the neural network.

You’ll learn later in

this course some tips for choosing an appropriate

architecture for a neural network.

But choosing the right number of hidden layers and number of hidden units per layer can have an impact on the performance of a learning algorithm as well.

Later in this course,

you’ll learn how to choose a good architecture for your

neural network as well.

Neural network with multi layers: Multilayer perceptron 多层感知机

By the way, in some

of the literature, you see this type of

neural network with multiple layers like this

called a multilayer perceptron.

If you see that, that just

refers to a neural network that looks like what you’re

seeing here on the slide. That’s a neural network.

I know we went through

a lot in this video. Thank you for sticking with me. But you now know how a

neural network works.

In the next video, let’s take a look

at how these ideas can be applied to other

applications as well. In particular, we’ll

take a look at the computer vision application

of face recognition. Let’s go on to the next video.

Example: Recognizing Images

In the last video, you saw how a neural network works in a

demand prediction example.

Let’s take a look at how you

can apply a similar type of idea to computer vision

application.

Let’s dive in. If you’re building a face

recognition application, you might want to train a neural network that takes

as input a picture like this and outputs the identity of the person in the picture.

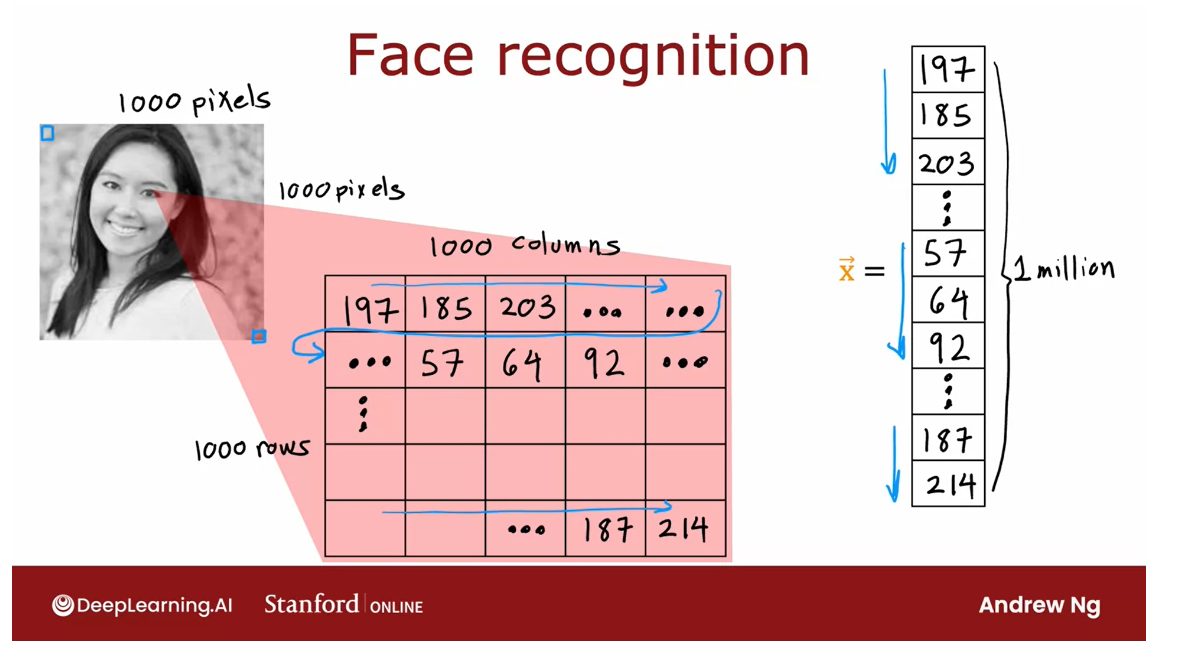

This image is 1,000

by 1,000 pixels. Its representation

in the computer is actually as 1,000 by 1,000 grid, or also called 1,000 by 1,000 matrix of pixel

intensity values.

In this example, my

pixel intensity values or pixel brightness values, goes from 0-255 and so 197 here would be the brightness of the pixel in the very upper

left of the image, 185 is brightness of the

pixel, one pixel over, and so on down to 214 would be the lower

right corner of this image.

Take pixel intensity values and unroll them into a vector

NN: Takes as input a feature vector with xxx pixel brightness values

NN: Output the identity of a person in the picture

If you were to take these pixel intensity values and unroll them into a vector, you end up with a

list or a vector of a million pixel

intensity values. One million because 1,000 by 1,000 square gives you

a million numbers. The face recognition problem is, can you train a neural network that takes as input a

feature vector with a million pixel

brightness values and outputs the identity of

the person in the picture.

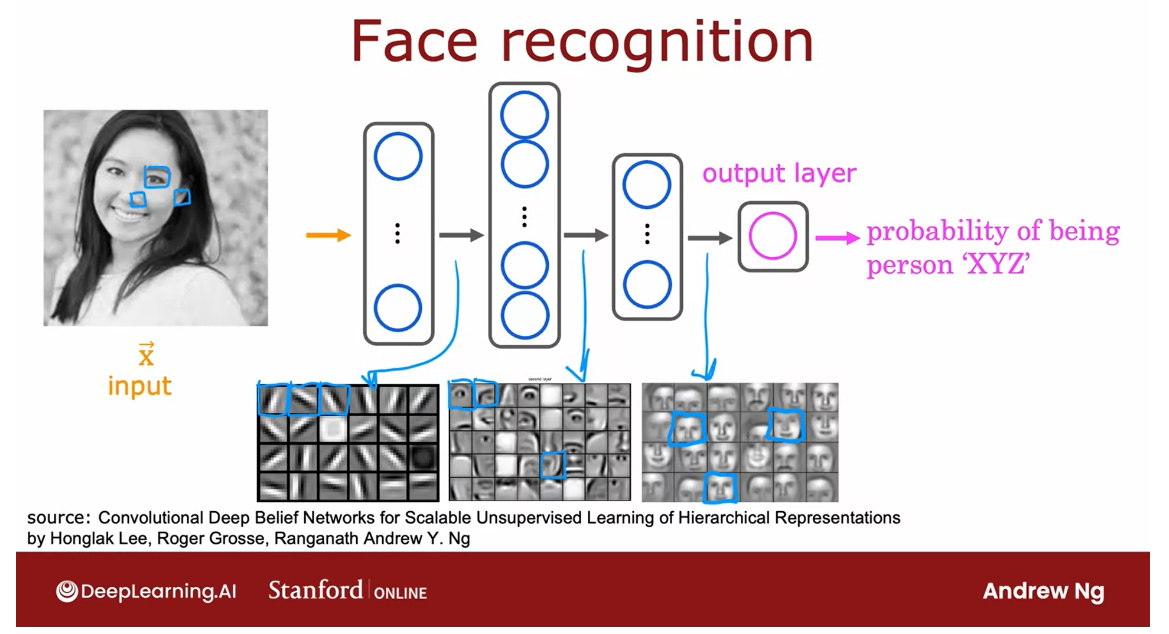

This is how you might build a neural network to

carry out this task.The input image X is fed

to this layer of neurons. This is the first hidden layer, which then extract

some features.

The upwards of this

first hidden layer is fed to a second hidden layer and that output is fed to a third layer and then

finally to the upper layer, which then estimates, say the probability of this

being a particular person.

Peer at the different neurons in the hidden layers to figure out what they may be computing.

One interesting

thing would be if you look at a neural network

that’s been trained on a lot of images of

faces and to try to visualize what are these hidden layers,

trying to compute.

It turns out that when you train a system like this

on a lot of pictures of faces and you peer at the different neurons

in the hidden layers to figure out what they may be computing this is

what you might find.

In the first hidden layer: Neurons are looking for very short lines or edges

In the first hidden layer, you might find one

neuron that is looking for the low vertical line or

a vertical edge like that.

A second neuron looking for a oriented line or

oriented edge like that.The third neuron

looking for a line at that orientation, and so on.

In the earliest layers

of a neural network, you might find that the

neurons are looking for very short lines or very

short edges in the image.

In the second hidden layer: Learn to group lots of short lines to look for parts of faces.

If you look at the

next hidden layer, you find that these neurons

might learn to group together lots of little short lines and little short edge segments in order to look for

parts of faces.

For example, each of these

little square boxes is a visualization of what that

neuron is trying to detect.This first neuron

looks like it’s trying to detect the presence or absence of an eye in a certain

position of the image.

The second neuron,

looks like it’s trying to detect like a corner of a nose and maybe

this neuron over here is trying to detect

the bottom of a nose.Then as you look

at the next hidden layer in this example, the neural network

is aggregating different parts of faces to then try to detect presence

or absence of larger, coarser face shapes.

Then finally, detecting how much the face corresponds to

different face shapes creates a rich set of features

that then helps the output layer try to determine the identity

of the person picture.

NN: feature detectors at the different hidden layers learn all by themselves.

A remarkable thing about the neural network

is you can learn these feature detectors at the different hidden

layers all by itself.

In this example, no

one ever told it to look for short little

edges in the first layer, and eyes and noses

and face parts in the second layer and then more complete face shapes

at the third layer.

The neural network is able

to figure out these things all by itself from data.

Just one note, in

this visualization, the neurons in the

first hidden layer are shown looking at relatively small windows

to look for these edges.

In the second hidden layer

is looking at bigger window, and the third hidden layer is looking at even bigger window.These little neurons

visualizations actually correspond

to differently sized regions in the image.

Just for fun, let’s see

what happens if you were to train this neural network

on a different dataset, say on lots of pictures of cars, picture on the side. The same learning algorithm

is asked to detect cars, will then learn edges

in the first layer.

Pretty similar but then they’ll learn to detect parts of cars in the second hidden

layer and then more complete car shapes in

the third hidden layer.

Just by feeding it

different data, the neural network

automatically learns to detect very different features

so as to try to make the predictions

of car detection or person recognition

or whether there’s a particular given task

that is trained on.

That’s how a neural

network works for computer vision application.

In fact, later this week, you’ll see how you can build a neural network

yourself and apply it to a handwritten digit

recognition application.

So far we’ve been going

over the description of intuitions of neural networks to give you a feel

for how they work. In the next video, let’s look more deeply into

the concrete mathematics and a concrete implementation

of details of how you actually build one or more

layers of a neural network, and therefore how

you can implement one of these things yourself. Let’s go on to the next video.

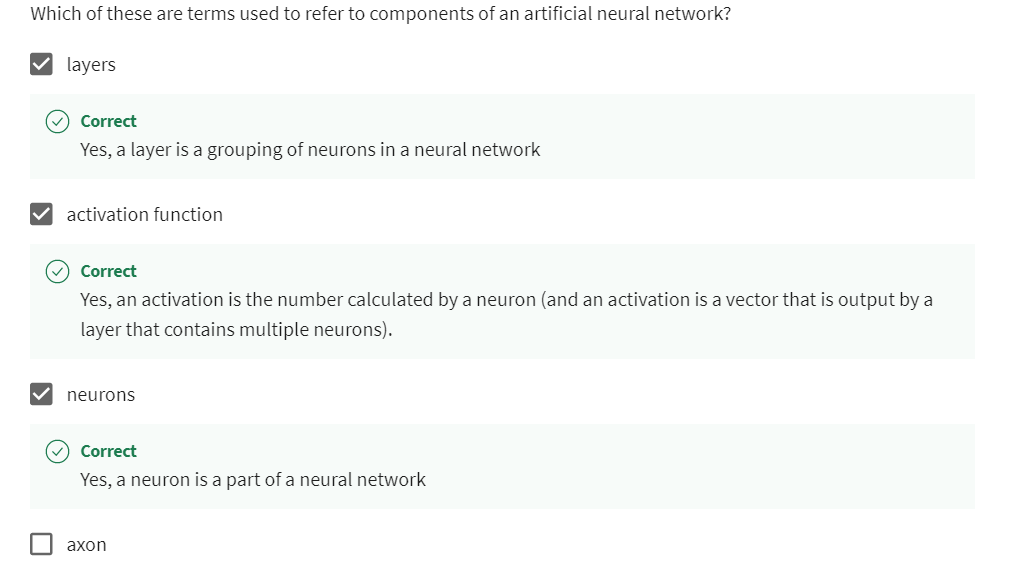

[02] Practice quiz: Neural networks intuition

Practice quiz: Neural networks intuition

Latest Submission Grade 100%

[03] Neural network model

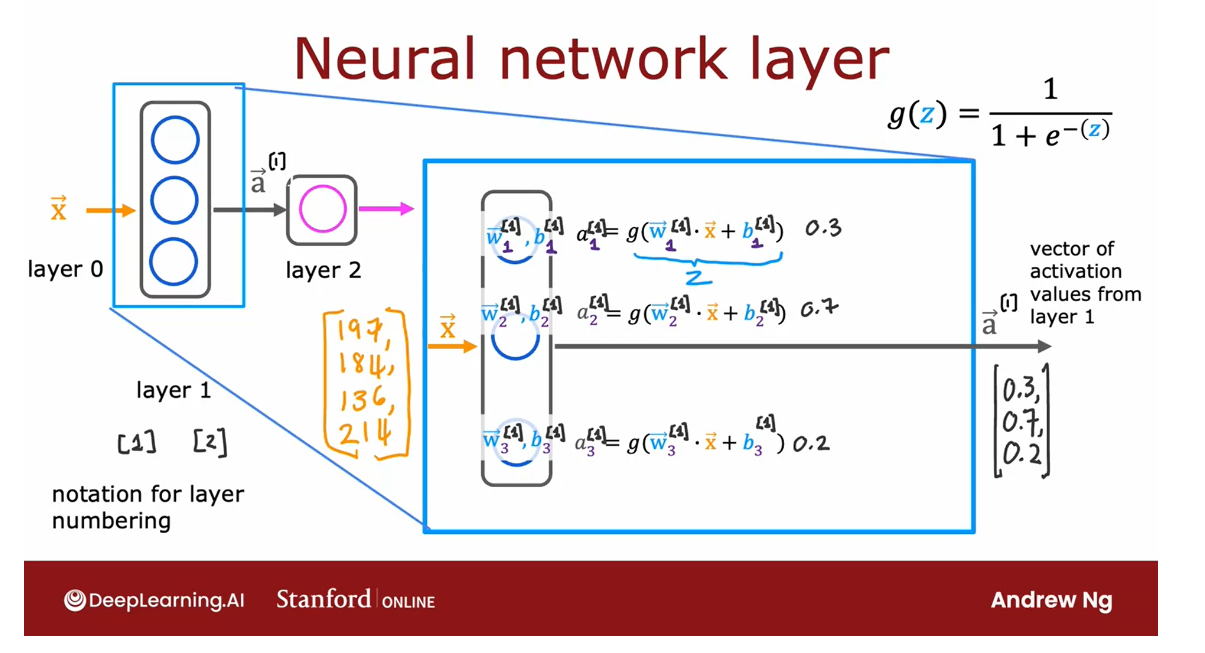

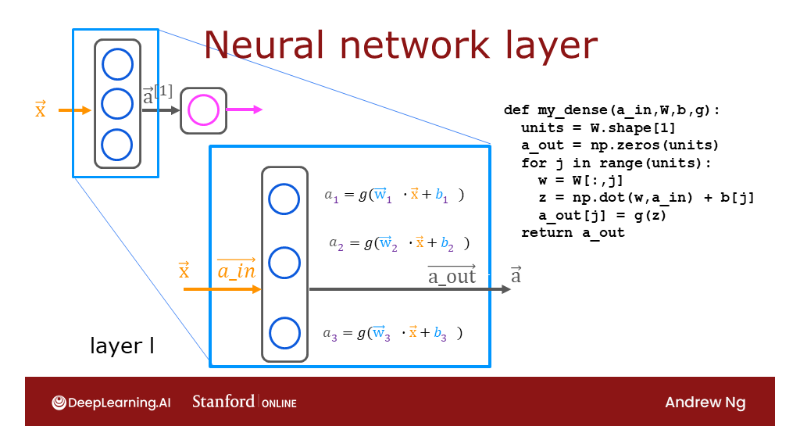

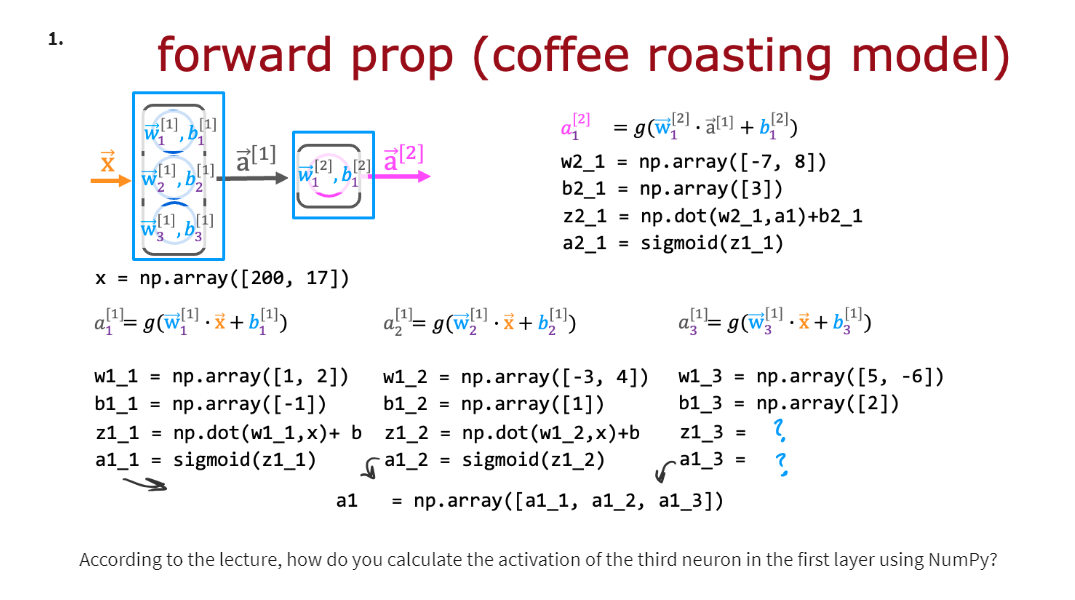

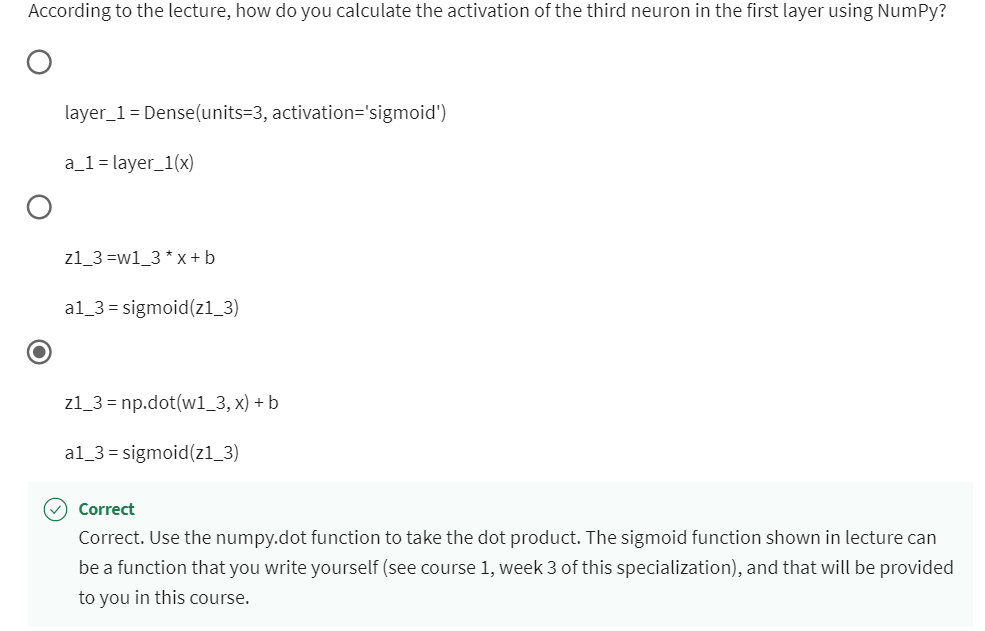

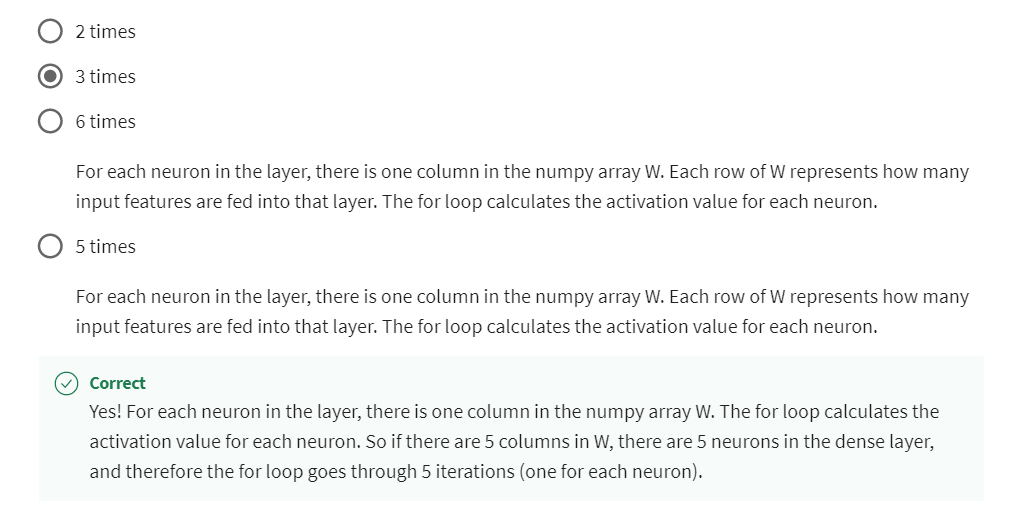

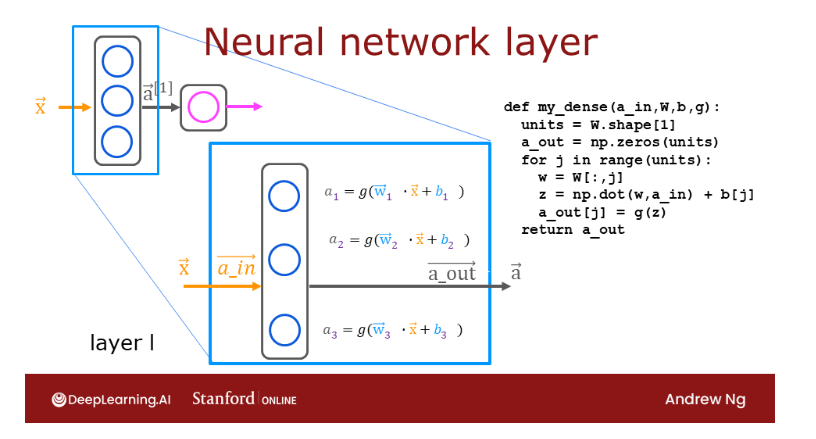

Neural network layer

The fundamental

building block of most modern neural networks

is a layer of neurons.

In this video, you’ll

learn how to construct a layer of neurons and

once you have that down, you’d be able to take those

building blocks and put them together to form a

large neural network.

Let’s take a look at how

a layer of neurons works.

Here’s the example we had from the demand prediction

example where we had four input features

that were set to this layer of three neurons

in the hidden layer that then sends its output to this output layer

with just one neuron.

Let’s zoom in to the hidden layer to look

at its computations.

This hidden layer

inputs four numbers and these four numbers are inputs

to each of three neurons.

Each of these three neurons

is just implementing a little logistic

regression unit or a little bit logistic

regression function.

Take this first neuron. It has two parameters, w and b. In fact, to denote that, this is the first hidden unit, I’m going to subscript

this as w_1, b_1.

What it does is I’ll output

some activation value a, which is g of w_1 in a

product with x plus b_1, where this is the

familiar z value that you have learned about in logistic regression in

the previous course, and g of z is the familiar

logistic function, 1 over 1 plus e to

the negative z.

Maybe this ends up

being a number 0.3 and that’s the activation value

a of the first neuron.To denote that this

is the first neuron, I’m also going to add a

subscript a_1 over here, and so a_1 may be

a number like 0.3.

There’s a 0.3 chance of this being highly affordable

based on the input features.

Now let’s look at

the second neuron.

The second neuron has

parameters w_2 and b_2, and these w, b or w_2, b_2 are the parameters of

the second logistic unit.

It computes a_2 equals the

logistic function g applied to w_2 dot product x plus b_2 and this may be some

other number, say 0.7. Because in this example, there’s a 0.7 chance that we think the potential buyers

will be aware of this t-shirt.

Similarly, the third neuron has a third set of

parameters w_3, b_3.

Similarly, it computes an activation value

a_3 equals g of w_3 dot product x plus b_3

and that may be say, 0.2.

In this example, these

three neurons output 0.3, 0.7, and 0.2, and this vector of three numbers becomes the vector of

activation values a, that is then passed to the final output layer

of this neural network.

Give the layers different numbers

Now, when you build neural networks with

multiple layers, it’ll be useful to give the

layers different numbers.

By convention, this layer

is called layer 1 of the neural network

and this layer is called layer 2 of

the neural network.

The input layer

is also sometimes called layer 0 and today, there are neural

networks that can have dozens or even

hundreds of layers.

But in order to

introduce notation to help us distinguish

between the different layers, I’m going to use

superscript square bracket 1 to index into

different layers.

In particular, a superscript in square brackets

1, I’m going to use, that’s a notation to

denote the output of layer 1 of this hidden layer

of this neural network, and similarly, w_1, b_1 here are the parameters of the first unit in layer

1 of the neural network, so I’m also going to add a superscript in

square brackets 1 here, and w_2, b_2 are the parameters of the second hidden unit or the second hidden

neuron in layer 1.

Its parameters are also

denoted here w1 like so.

Similarly, I can add superscripts square

brackets like so to denote that these are the activation values of the hidden units of layer

1 of this neural network.

I know maybe this notation is getting a little

bit cluttered.

But the thing to

remember is whenever you see this superscript

square bracket 1, that just refers to a quantity that is associated with layer

1 of the neural network.

If you see superscript

square bracket 2, that refers to a quantity

associated with layer 2 of the neural network and similarly for

other layers as well, including layer 3, layer 4 and so on for neural

networks with more layers.

That’s the computation of layer

1 of this neural network. Its output is this

activation vector, a2 and I’m going to

copy this over here because this output a_1

becomes the input to layer 2.

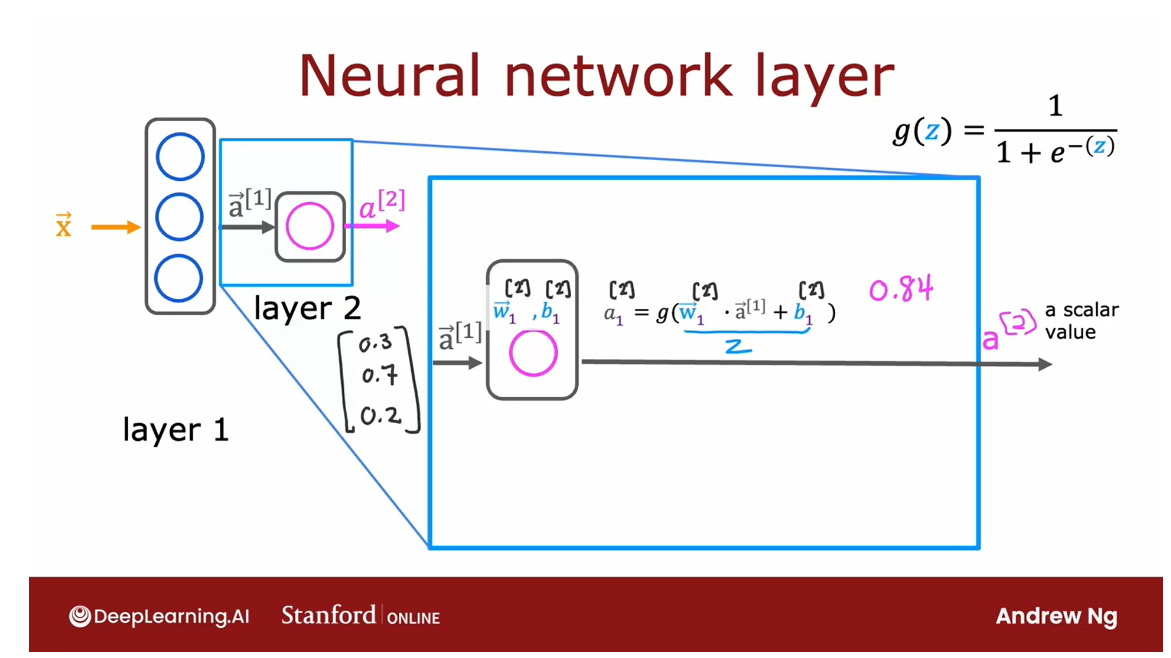

Now let’s zoom into the computation of layer

2 of this neural network, which is also the output layer. The input to layer 2 is

the output of layer 1, so a_1 is this vector 0.3, 0.7, 0.2 that we just computed on the previous

part of this slide.

Because the output layer

has just a single neuron, all it does is it

computes a_1 that is the output of this first

and only neuron, as g, the sigmoid function

applied to w _1 in a product with a3, so this is the input

into this layer, and then plus b_1.

Here, this is the quantity

z that you familiar with and g as before is the sigmoid function

that you apply to this. If this results in

a number, say 0.84, then that becomes the output

layer of the neural network.

In this example, because the output layer has

just a single neuron, this output is just a scalar, is a single number rather

than a vector of numbers.

Sticking with our notational

convention from before, we’re going to use a superscript

in square brackets 2, to denote the quantities associated with layer 2

of this neural network, so a4 is the

output of this layer, and so I’m going

to also copy this here as the final output

of the neural network.

To make the notation consistent, you can also add these

superscripts square bracket 2s to denote that these are the parameters and

activation values associated with layer 2

of the neural network.

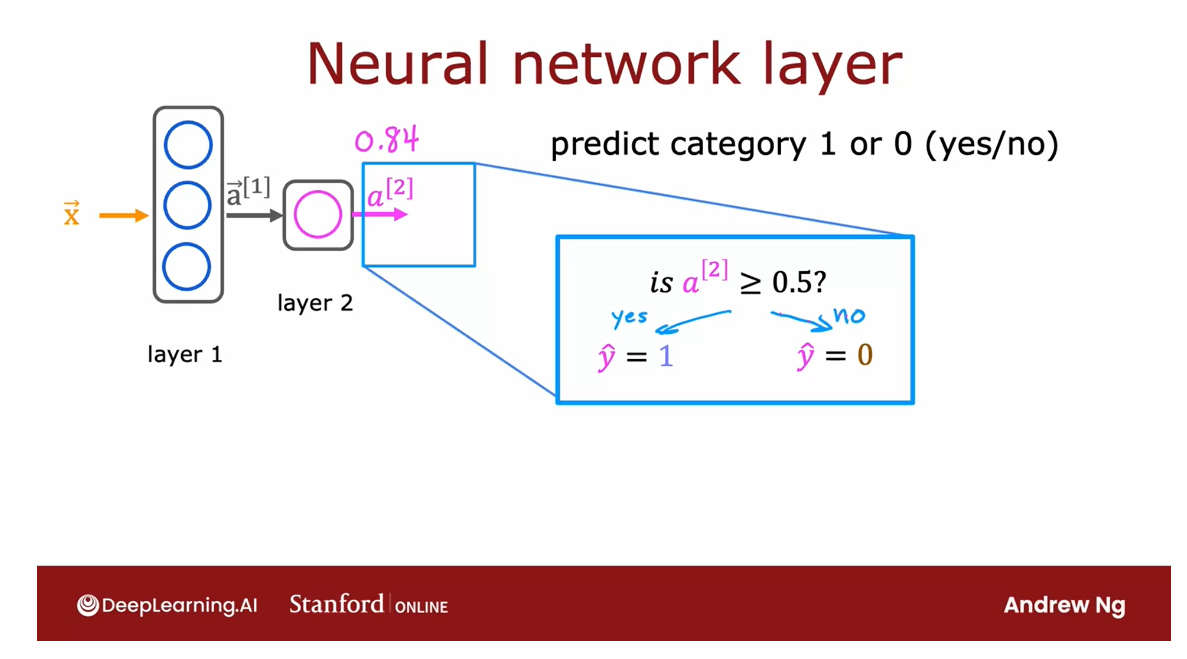

Once the neural network

has computed a_2, there’s one final

optional step that you can choose to implement or not, which is if you want

a binary prediction, 1 or 0, is this a top seller? Yes or no? As you

can take the number a superscript square

brackets 2 subscript 1, and this is the number

0.84 that we computed, and threshold this at 0.5. If it’s greater than 0.5, you can predict y hat equals 1 and if it

is less than 0.5, then predict your

y hat equals 0.

We saw this thresholding as

well when you learned about logistic regression in the first course of

the specialization. If you wish, this then gives you the final prediction y hat

as either one or zero, if you don’t want

just the probability of it being a top seller. So that’s how a

neural network works.

Every layer inputs a

vector of numbers and applies a bunch of logistic

regression units to it, and then computes

another vector of numbers that then

gets passed from layer to layer until you get to the final output

layers computation, which is the prediction

of the neural network.

Then you can either

threshold at 0.5 or not to come up with

the final prediction.

With that, let’s go on to

use this foundation we’ve built now to look at

some even more complex, even larger neural

network models. I hope that by seeing

more examples, this concept of layers

and how to put them together to build

a neural network will become even clearer. So let’s go on to

the next video.

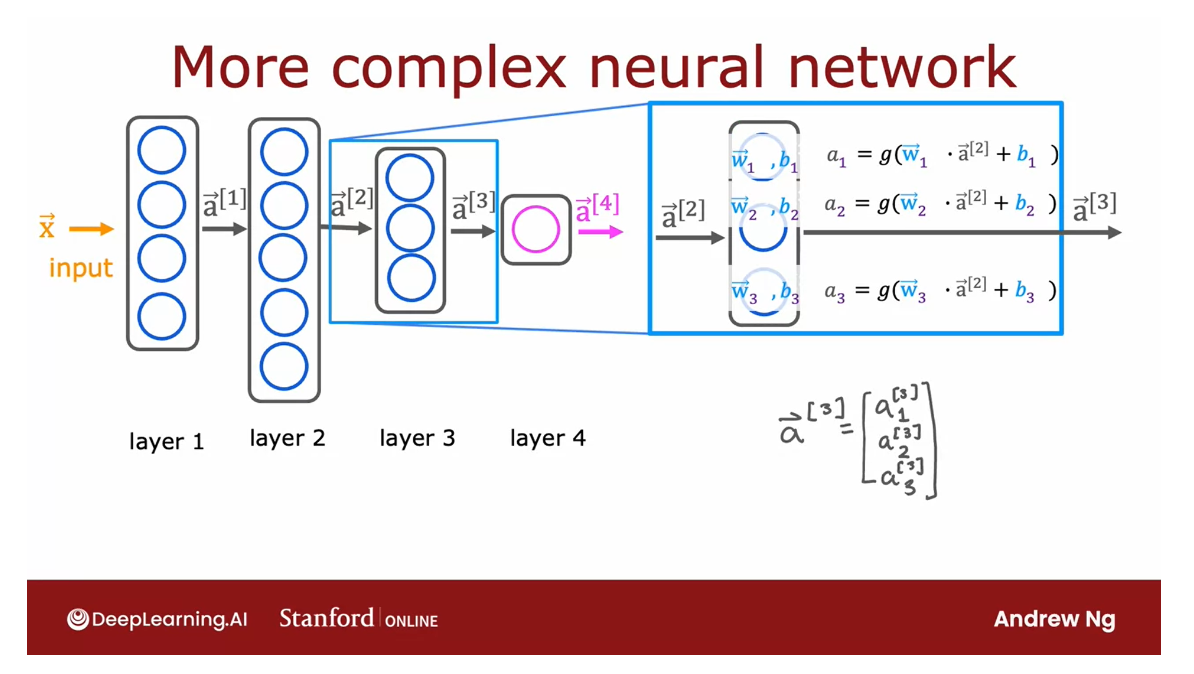

More complex neural networks

In the last video, you learned about the neural

network layer and how that takes this inputs a

vector of numbers and in turn, outputs another

vector of numbers.

In this video, let’s use that layer to build a more

complex neural network.

Through this, I hope that the notation that

we’re using for neural networks

will become clearer and more concrete as

well. Let’s take a look.

Four layers

This is the running example that I’m going to use throughout this video as an example of a more complex

neural network.

This network has four layers, not counting the input layer, which is also called Layer 0, where layers 1, 2, and 3 are hidden layers, and Layer 4 is the output layer, and Layer 0, as usual, is the input layer.

By convention, when we say that a neural network

has four layers, that includes all the hidden

layers in the output layer, but we don’t count

the input layer. This is a neural network

with four layers in the conventional way of

counting layers in the network.

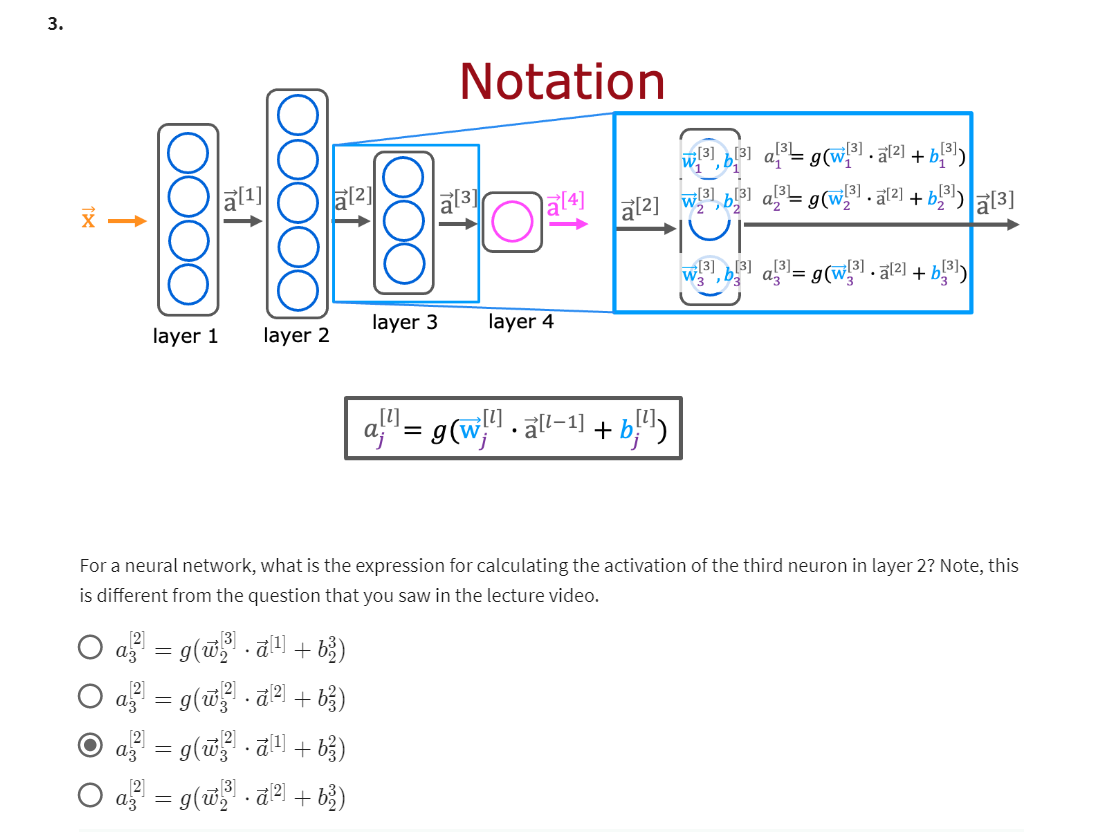

Let’s zoom in to Layer 3, which is the third and

final hidden layer to look at the computations

of that layer.

Layer 3 inputs a vector, a superscript square bracket 2 that was computed by

the previous layer, and it outputs a_3, which is another vector.

What is the computation that

Layer 3 does in order to go from a_2 to a_3?

If it has three neurons or we

call it three hidden units, then it has parameters w_1, b_1, w_2, b_2, and w_3, b_3 and it computes a_1

equals sigmoid of w_1. product with this input

to the layer plus b_1, and it computes a_2

equals sigmoid of w_2. product with again a_2, the input to the layer plus

b_2 and so on to get a_3.Then the output of this layer is a vector comprising a_1, a_2, and a_3.

Again, by convention, if we want to more explicitly denote

that all of these are quantities associated

with Layer 3 then we add in all of

these superscript, square brackets 3 here, to denote that these parameters w and b are the parameters

associated with neurons in Layer 3 and that these activations are

activations with Layer 3.

Notice that this term here is w_1 superscript

square bracket 3, meaning the parameters

associated with Layer 3. product with a superscript

square bracket 2, which was the output of Layer 2, which became the

input to Layer 3.

That’s why it has a_3

here because it’s a parameter associator of

Layer 3. product with, and there’s a_2 there because

is the output of Layer 2.

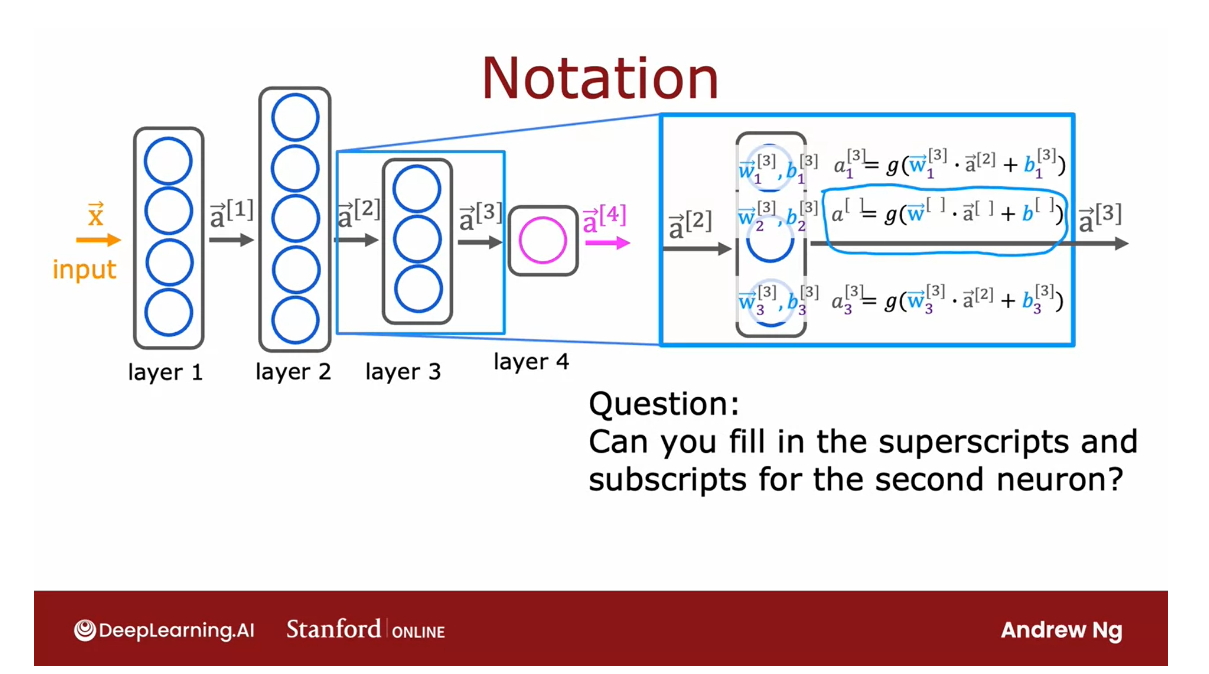

Now, let’s just do a quick double check on

our understanding of this. I’m going to hide the

superscripts and subscripts associated with

the second neuron and without rewinding

this video, go ahead and rewind if you want, but prefer you not.

But without rewinding

this video, are you able to think

through what are the missing superscripts and subscripts in this equation

and fill them in yourself?

Once you take a look at the end video quiz and

see if you can figure out what are the appropriate

superscripts and subscripts for this

equation over here.

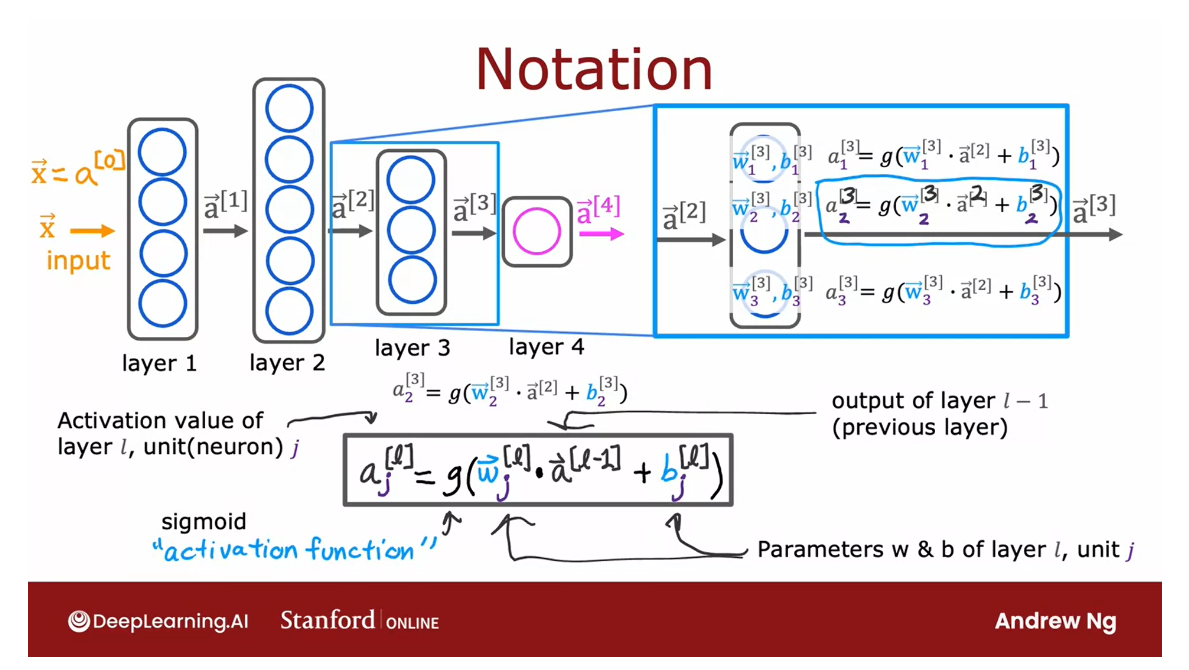

To recap, a_3 is activation associated

with Layer 3 for the second neuron hence, this a_2 is a parameter

associated with the third layer.

For the second neuron, this is a_2, same as above and then plus b_3 too. Hopefully,

that makes sense.

Just the more general

form of this equation for an arbitrary Layer 0 and

for an arbitrary unit j, which is that a deactivation

outputs of layer l, unit j, like a32, that’s going to be

the sigmoid function applied to this term, which is the wave

vector of layer l, such as Layer 3 for the jth

unit so there’s a_2 again, in the example

above, and so that’s dot-producted with a

deactivation value.

Notice, this is not l, this is l minus 1, like a_2 above here

because you’re dot-producting with

the output from the previous layer

and then plus b, the parameter for this

layer for that unit j.

This gives you the activation

of layer l unit j, where the superscript in

square brackets l denotes layer l and a subscript

j denotes unit j.

When building neural networks, unit j refers to the jth neuron, so we use those

terms a little bit interchangeably where each unit is a single neuron in the layer.

Activation function: outputs activation value

G here is the sigmoid function. In the context of

a neural network, g has another name, which is also called the

activation function, because g outputs this

activation value.

When I say activation function, I mean this function g here.

So far, the only activation

function you’ve seen, this is a sigmoid

function but next week, we’ll look at when

other functions, then the sigmoid function can be plugged in place of g as well…

The activation function

is just that function that outputs these

activation values.

Just one last piece of notation. In order to make all this

notation consistent, I’m also going to give the input vector X and

another name which is a_0, so this way, the same equation also works for the first layer, where when l is equal to 1, the activations of

the first layer, that is a_1, would be the sigmoid times the weights

dot-product with a_0, which is just this

input feature vector X.

With this notation, you

now know how to compute the activation values

of any layer in a neural network

as a function of the parameters as well as the activations of

the previous layer.

You now know how to

compute the activations of any layer given the activations

of the previous layer.

Let’s put this into an inference algorithm

for a neural network. In other words, how to get a neural network to

make predictions. Let’s go see that

in the next video.

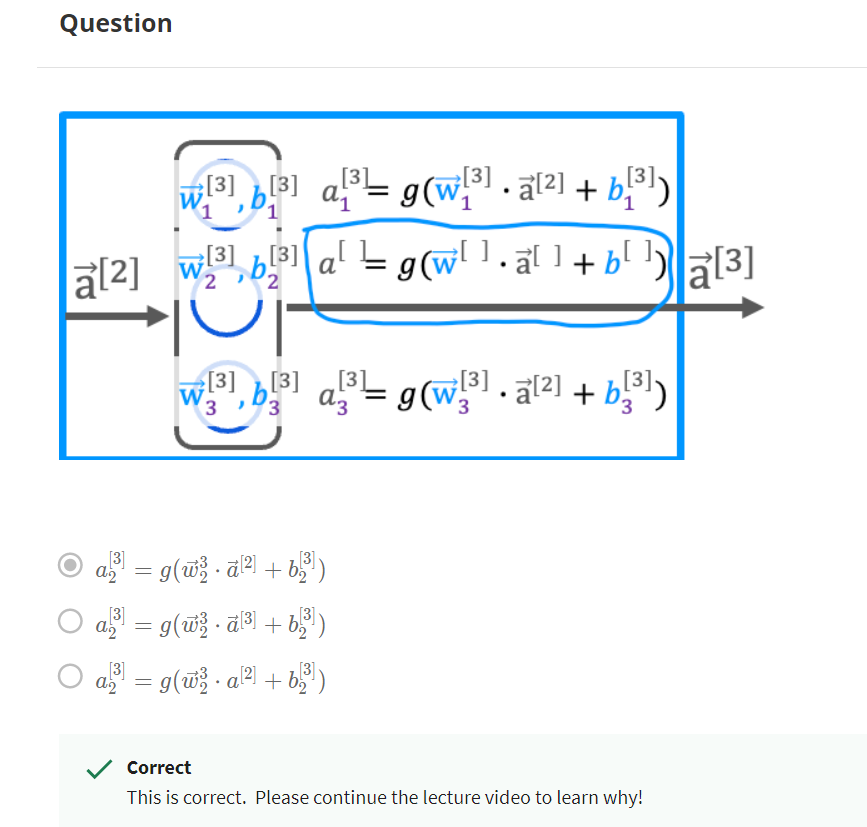

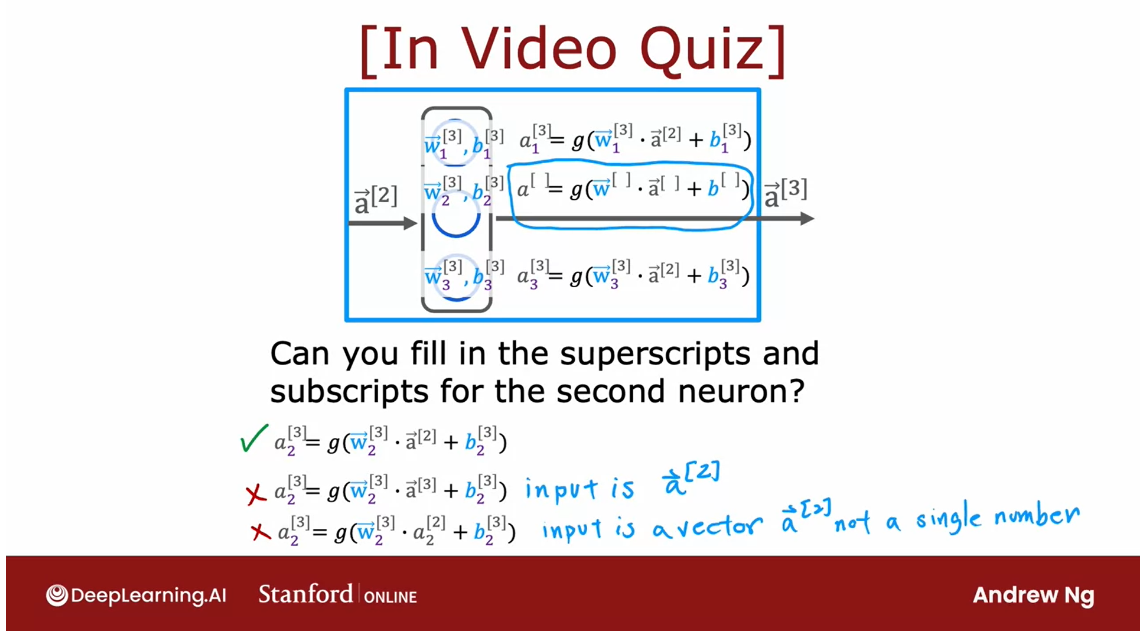

Quiz

Can you fill in the superscripts and subscripts for the second neuron?

answer

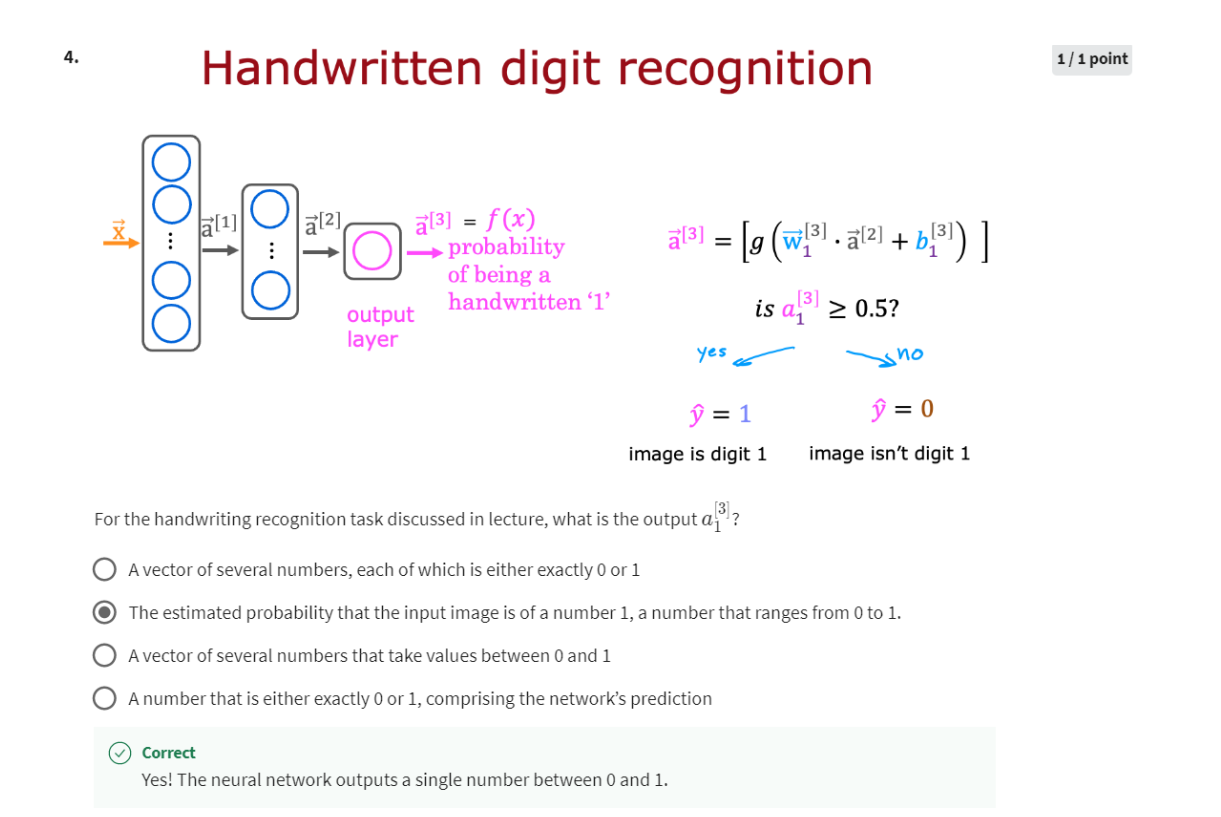

Inference: making predictions (forward propagation)

Let’s take what we’ve learned and put it

together into an algorithm to let your neural network make inferences or

make predictions.

Forward propagation

This will be an algorithm

called forward propagation. Let’s take a look.

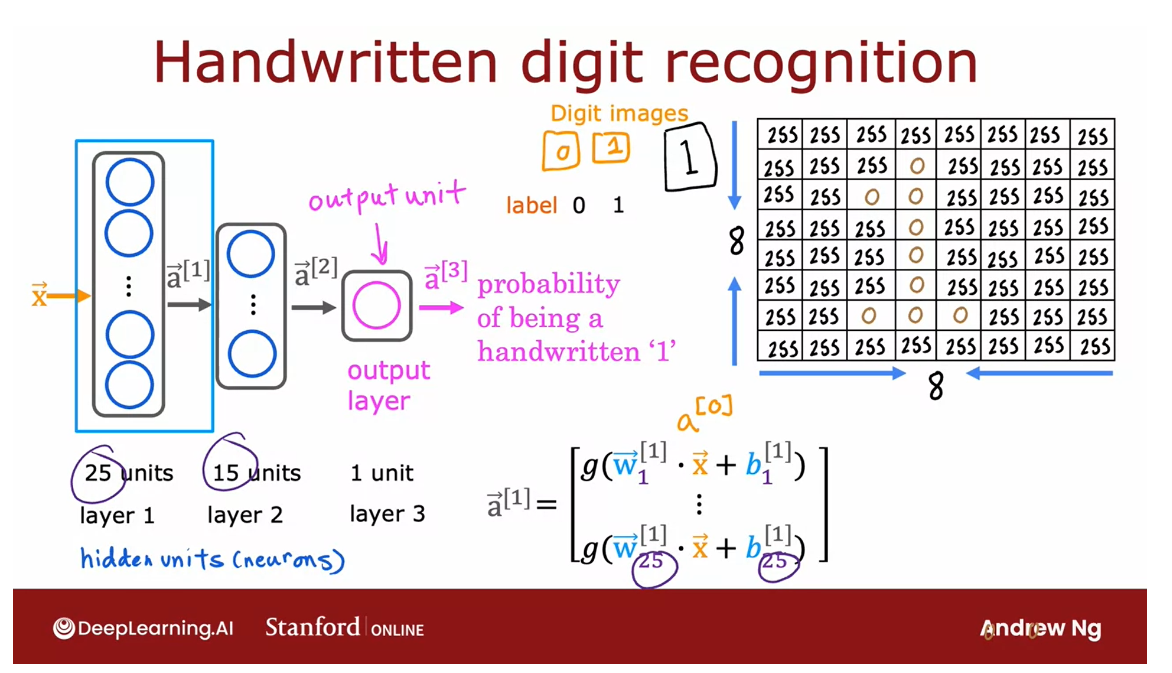

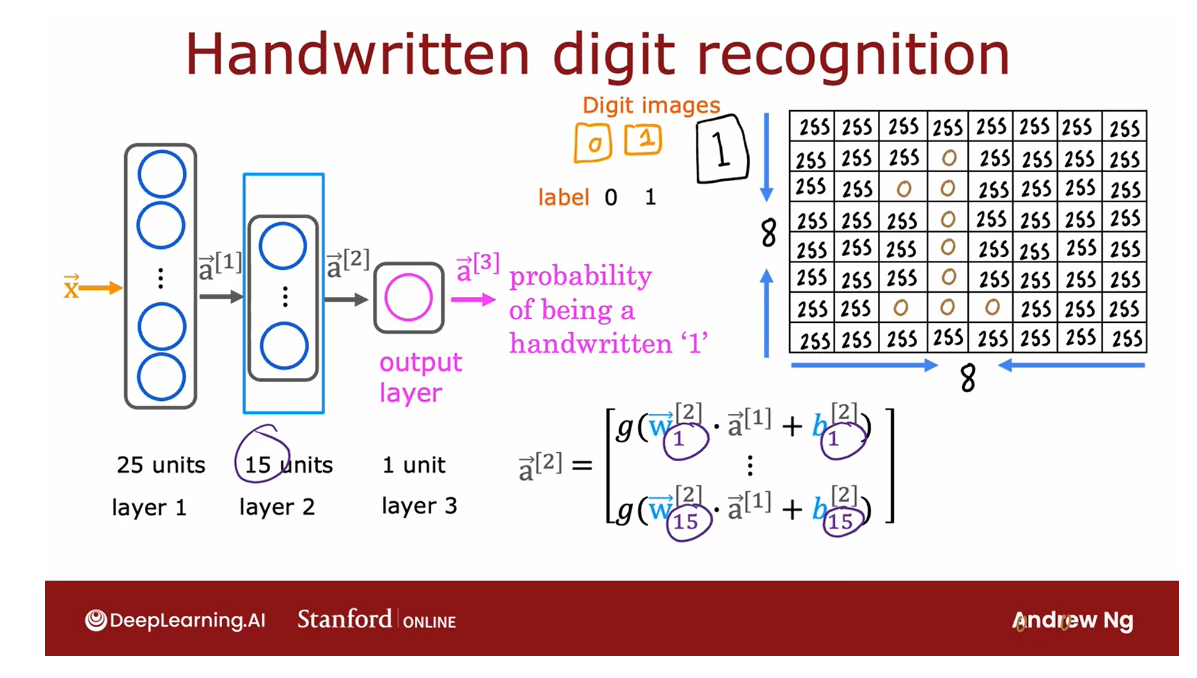

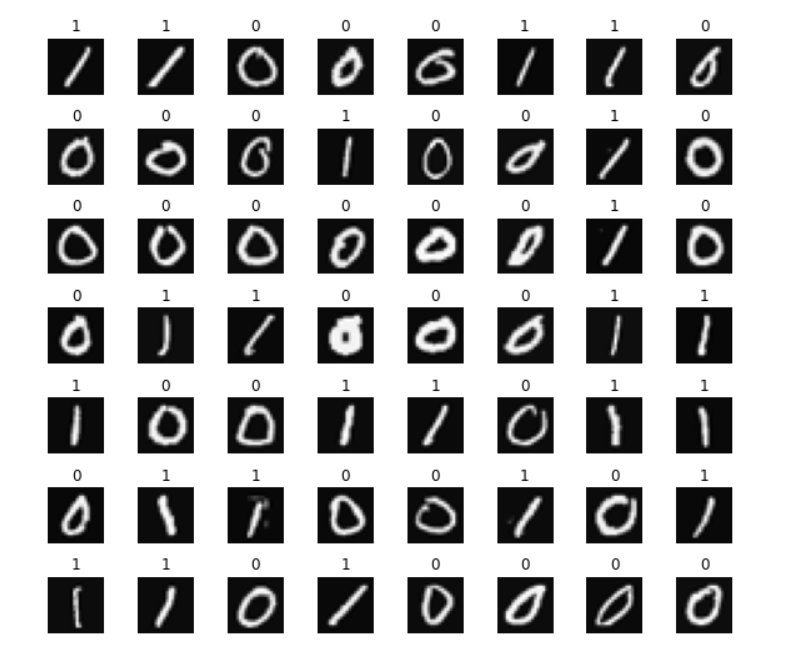

Binary classification

I’m going to use as a motivating example,

handwritten digit recognition.

And for simplicity we are just

going to distinguish between the handwritten digits zero and one.

So it’s just a binary classification

problem where we’re going to input an image and classify,

is this the digit zero or the digit one?

And you get to play with this yourself

later this week in the practice lab as well.

For the example of the slide,

I’m going to use an eight by eight image. And so this image of a one is this grid or

matrix of eight by eight or 64 pixel intensity values where 255

denotes a bright white pixel and zero would denote a black pixel.

And different numbers

are different shades of gray in between the shades of black and white.

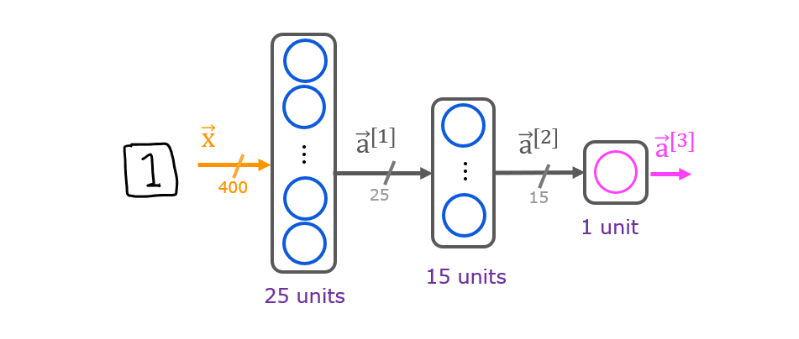

Given these 64 input features, we’re going to use the neural

network with two hidden layers.

Where the first hidden layer

has 25 neurons or 25 units.Second hidden layer has 15 neurons or

15 units.

And then finally the output layer or

outputs unit, what’s the chance of

this being 1 versus 0?.

So let’s step through the sequence

of computations that in your neural network will need to

make to go from the input X, this eight by eight or 64 numbers

to the predicted probability a3.

The first computation is

to go from X to a1, and that’s what the first layer of

the first hidden layer does.

It carries out a computation of

a super strip square bracket 1 equals this formula on the right.Notice that a one has 25 numbers

because this hidden layer has 25 units. Which is why the parameters go from w1

through w25 as well as b1 through b25.

And I’ve written x here but I could also

have written a0 here because by convention the activation of layer zero, that is a0

is equal to the input feature value x.So let’s just compute a1.

The next step is to compute a2. Looking at the second hidden layer, it then carries out this womputation

where a2 is a function of a1 and it’s computed as the safe point

activation function applied to w dot product a1 plus

the corresponding value of b.

Notice that layer two has 15 neurons or

15 units, which is why the parameters Here run

from w1 through w15 and b1 through b15. Now we’ve computed a2.

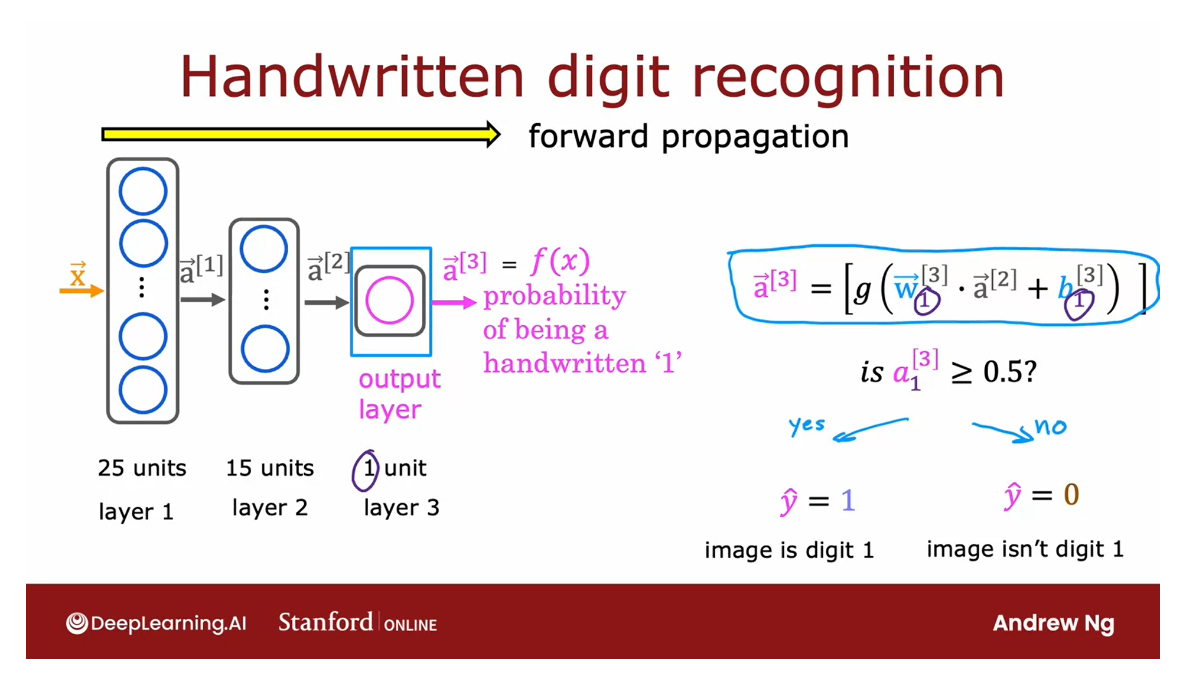

The Final step is then to compute a3 and

we do so using a very similar computation. Only now, this third layer,

the output layer has just one unit, which is why there’s just one output here.

So a3 is just a scalar. And finally you can optionally

take a3 subscript one and threshold it at 4.5 to come up with

a binary classification label. Is this the digit 1? Yes or no? So the sequence of computations first

takes x and then computes a1, and then computes a2, and then computes a3, which

is also the output of the neural networks.

You can also write that as f(x). So remember when we learned about linear

regression and logistic regression, we use f(x) to denote the output of

linear regression or logistic regression.

So we can also use f(x)

to denote the function computed by the neural

network as a function of x.

Computation goes from left to right: propagating the activations of the neurons

Because this computation goes from left to

right, you start from e and compute a1, then a2, then a3. This album is also called forward

propagation because you’re propagating the activations

of the neurons.

So you’re making these computations in

the four directions from left to right.And this is in contrast to a different

algorithm called backward propagation or back propagation,

which is used for learning. And that’s something you

learn about next week.

NN architecture: the number of hidden units decreases as you get closer to the output layer

And by the way, this type of neural

network architecture where you have more hidden units initially and then the number of hidden units decreases

as you get closer to the output layer.

There’s also a pretty typical choice when

choosing neural network architectures. And you see more examples of this

in the practice lab as well.

So that’s neural network inference using

the forward propagation algorithm.

And with this, you’d be able to download

the parameters of a neural network that someone else had trained and

posted on the Internet. And you’d be able to carry out

inference on your new data using their neural network.

Now that you’ve seen the math and

the algorithm, let’s take a look at how you can

actually implement this in tensorflow. Specifically, let’s take a look

at this in the next video.

Lab: Neurons and Layers

Examples of Neurons and Layers

Optional Lab - Neurons and Layers

In this lab we will explore the inner workings of neurons/units and layers. In particular, the lab will draw parallels to the models you have mastered in Course 1, the regression/linear model and the logistic model. The lab will introduce Tensorflow and demonstrate how these models are implemented in that framework.

Packages

Tensorflow and Keras

Tensorflow is a machine learning package developed by Google. In 2019, Google integrated Keras into Tensorflow and released Tensorflow 2.0. Keras is a framework developed independently by François Chollet that creates a simple, layer-centric interface to Tensorflow. This course will be using the Keras interface.

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow.keras.layers import Dense, Input

from tensorflow.keras import Sequential

from tensorflow.keras.losses import MeanSquaredError, BinaryCrossentropy

from tensorflow.keras.activations import sigmoid

from lab_utils_common import dlc

from lab_neurons_utils import plt_prob_1d, sigmoidnp, plt_linear, plt_logistic

plt.style.use('./deeplearning.mplstyle')

import logging

logging.getLogger("tensorflow").setLevel(logging.ERROR)

tf.autograph.set_verbosity(0)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

Neuron without activation - Regression/Linear Model

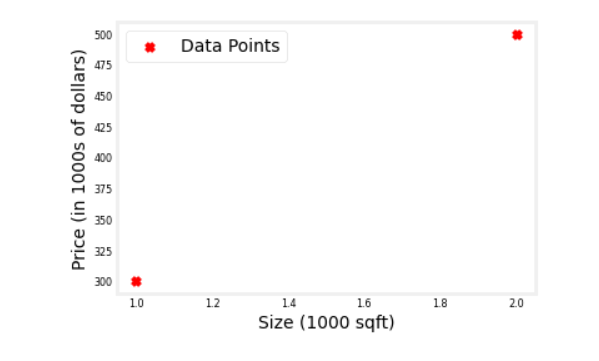

DataSet

We’ll use an example from Course 1, linear regression on house prices.

X_train = np.array([[1.0], [2.0]], dtype=np.float32) #(size in 1000 square feet)

Y_train = np.array([[300.0], [500.0]], dtype=np.float32) #(price in 1000s of dollars)

fig, ax = plt.subplots(1,1)

ax.scatter(X_train, Y_train, marker='x', c='r', label="Data Points")

ax.legend( fontsize='xx-large')

ax.set_ylabel('Price (in 1000s of dollars)', fontsize='xx-large')

ax.set_xlabel('Size (1000 sqft)', fontsize='xx-large')

plt.show()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

Output

Regression/Linear Model

The function implemented by a neuron with no activation is the same as in Course 1, linear regression:

f

w

,

b

(

x

(

i

)

)

=

w

⋅

x

(

i

)

+

b

(1)

f_{\mathbf{w},b}(x^{(i)}) = \mathbf{w}\cdot x^{(i)} + b \tag{1}

fw,b(x(i))=w⋅x(i)+b(1)

We can define a layer with one neuron or unit and compare it to the familiar linear regression function.

Let’s examine the weights.

linear_layer = tf.keras.layers.Dense(units=1, activation = 'linear', )

linear_layer.get_weights()

- 1

- 2

There are no weights as the weights are not yet instantiated. Let’s try the model on one example in X_train. This will trigger the instantiation of the weights. Note, the input to the layer must be 2-D, so we’ll reshape it.

a1 = linear_layer(X_train[0].reshape(1,1))

print(a1)

- 1

- 2

Output

这里的 1.39 是 w的值,是随机初始化得到的,而 b的初始值为0,这并并没有给出

tf.Tensor([[1.39]], shape=(1, 1), dtype=float32)

- 1

- 2

The result is a tensor (another name for an array) with a shape of (1,1) or one entry.

Now let’s look at the weights and bias. These weights are randomly initialized to small numbers and the bias defaults to being initialized to zero.

w, b= linear_layer.get_weights()

print(f"w = {w}, b={b}")

- 1

- 2

Output

w = [[1.39]], b=[0.]

- 1

A linear regression model (1) with a single input feature will have a single weight and bias. This matches the dimensions of our linear_layer above.

The weights are initialized to random values so let’s set them to some known values.

set_w = np.array([[200]])

set_b = np.array([100])

# set_weights takes a list of numpy arrays

linear_layer.set_weights([set_w, set_b])

print(linear_layer.get_weights())

- 1

- 2

- 3

- 4

- 5

- 6

Output

[array([[200.]], dtype=float32), array([100.], dtype=float32)]

- 1

- 2

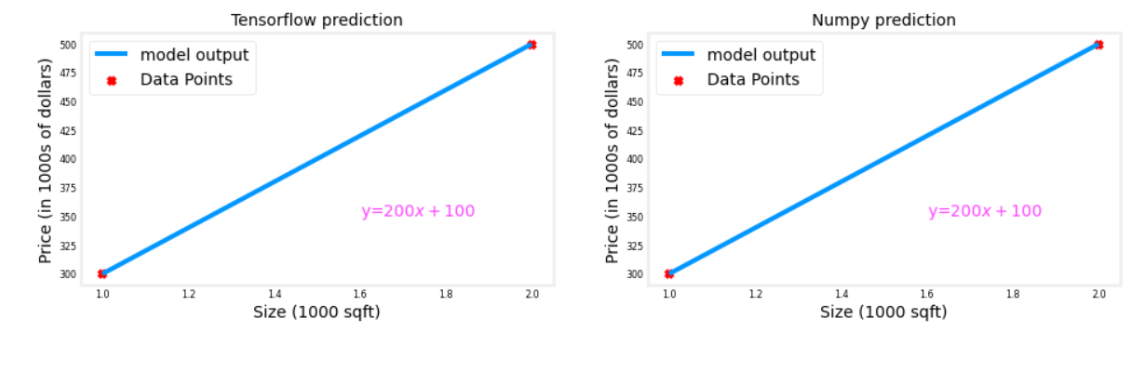

Let’s compare equation (1) to the layer output.

a1 = linear_layer(X_train[0].reshape(1,1))

print(a1)

alin = np.dot(set_w,X_train[0].reshape(1,1)) + set_b

print(alin)

- 1

- 2

- 3

- 4

Output

tf.Tensor([[300.]], shape=(1, 1), dtype=float32)

[[300.]]

- 1

- 2

They produce the same values!

Now, we can use our linear layer to make predictions on our training data.

prediction_tf = linear_layer(X_train)

prediction_np = np.dot( X_train, set_w) + set_b

plt_linear(X_train, Y_train, prediction_tf, prediction_np)

- 1

- 2

- 3

- 4

Output

Neuron with Sigmoid activation

The function implemented by a neuron/unit with a sigmoid activation is the same as in Course 1, logistic regression:

f

w

,

b

(

x

(

i

)

)

=

g

(

w

x

(

i

)

+

b

)

(2)

f_{\mathbf{w},b}(x^{(i)}) = g(\mathbf{w}x^{(i)} + b) \tag{2}

fw,b(x(i))=g(wx(i)+b)(2)

where

g ( x ) = s i g m o i d ( x ) g(x) = sigmoid(x) g(x)=sigmoid(x)

Let’s set w w w and b b b to some known values and check the model.

DataSet

We’ll use an example from Course 1, logistic regression.

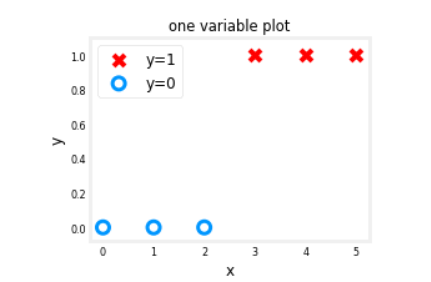

X_train = np.array([0., 1, 2, 3, 4, 5], dtype=np.float32).reshape(-1,1) # 2-D Matrix

Y_train = np.array([0, 0, 0, 1, 1, 1], dtype=np.float32).reshape(-1,1) # 2-D Matrix

pos = Y_train == 1

neg = Y_train == 0

X_train[pos]

- 1

- 2

- 3

- 4

- 5

- 6

- 7

Output

array([3., 4., 5.], dtype=float32)

- 1

pos = Y_train == 1

neg = Y_train == 0

fig,ax = plt.subplots(1,1,figsize=(4,3))

ax.scatter(X_train[pos], Y_train[pos], marker='x', s=80, c = 'red', label="y=1")

ax.scatter(X_train[neg], Y_train[neg], marker='o', s=100, label="y=0", facecolors='none',

edgecolors=dlc["dlblue"],lw=3)

ax.set_ylim(-0.08,1.1)

ax.set_ylabel('y', fontsize=12)

ax.set_xlabel('x', fontsize=12)

ax.set_title('one variable plot')

ax.legend(fontsize=12)

plt.show()

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

Output

Logistic Neuron

We can implement a ‘logistic neuron’ by adding a sigmoid activation. The function of the neuron is then described by (2) above.

This section will create a Tensorflow Model that contains our logistic layer to demonstrate an alternate method of creating models. Tensorflow is most often used to create multi-layer models. The Sequential model is a convenient means of constructing these models.

model = Sequential(

[

tf.keras.layers.Dense(1, input_dim=1, activation = 'sigmoid', name='L1')

]

)

- 1

- 2

- 3

- 4

- 5

model.summary() shows the layers and number of parameters in the model. There is only one layer in this model and that layer has only one unit. The unit has two parameters,

w

w

w and

b

b

b.

model.summary()

- 1

Output

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

L1 (Dense) (None, 1) 2

=================================================================

Total params: 2

Trainable params: 2

Non-trainable params: 0

_________________________________________________________________

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

logistic_layer = model.get_layer('L1')

w,b = logistic_layer.get_weights()

print(w,b)

print(w.shape,b.shape)

- 1

- 2

- 3

- 4

Output

[[1.19]] [0.]

(1, 1) (1,)

- 1

- 2

Let’s set the weight and bias to some known values.

set_w = np.array([[2]])

set_b = np.array([-4.5])

# set_weights takes a list of numpy arrays

logistic_layer.set_weights([set_w, set_b])

print(logistic_layer.get_weights())

- 1

- 2

- 3

- 4

- 5

Output

[array([[2.]], dtype=float32), array([-4.5], dtype=float32)]

- 1

- 2

Let’s compare equation (2) to the layer output.

a1 = model.predict(X_train[0].reshape(1,1))

print(a1)

alog = sigmoidnp(np.dot(set_w,X_train[0].reshape(1,1)) + set_b)

print(alog)

- 1

- 2

- 3

- 4

Output

[[0.01]]

[[0.01]]

- 1

- 2

They produce the same values!

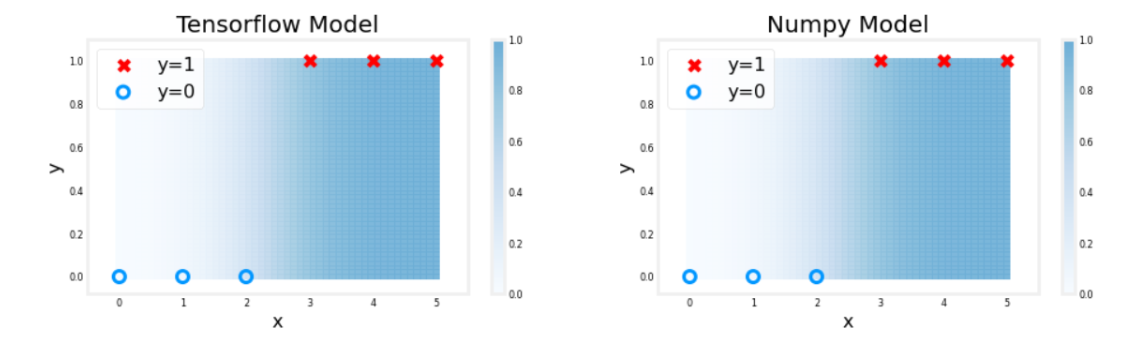

Now, we can use our logistic layer and NumPy model to make predictions on our training data.

plt_logistic(X_train, Y_train, model, set_w, set_b, pos, neg)

- 1

Output

The shading above reflects the output of the sigmoid which varies from 0 to 1.

Congratulations!

You built a very simple neural network and have explored the similarities of a neuron to the linear and logistic regression from Course 1.

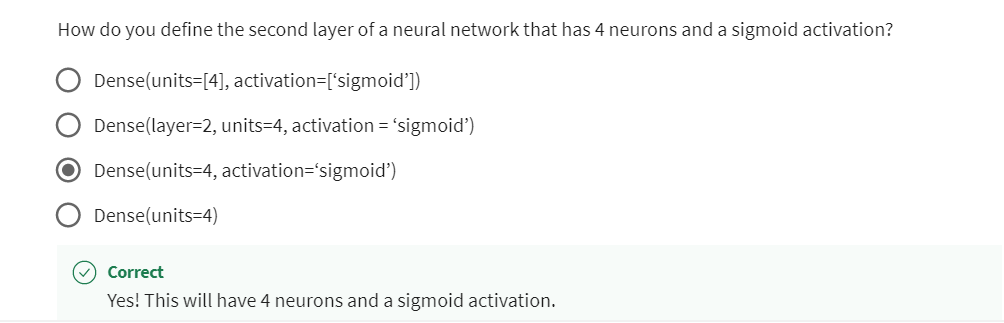

[04] Practice quiz: Neural network model

Practice quiz: Neural network model

Latest Submission Grade 93.75%

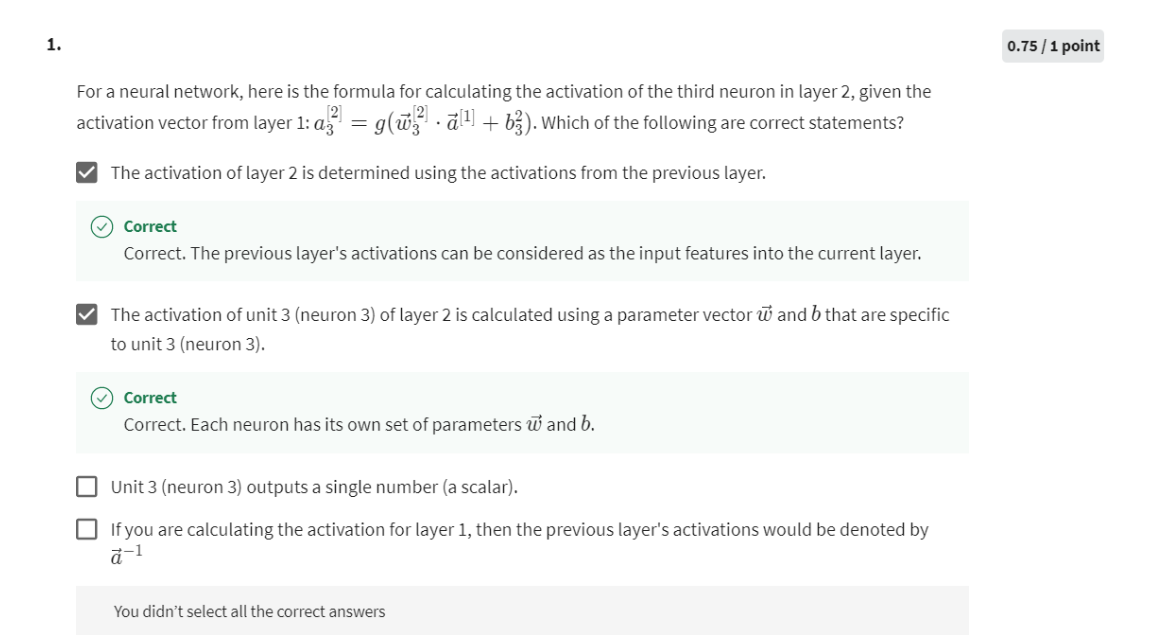

第一题第三个也要选的, Unit3 outputs a single number (a scalar) 这句话是对的

Yes! The superscript [3] refers to layer 3. The subscript 2 refers to the neuron in that layer. The input to layer 2 is the activation vector from layer 1.

[05] TensorFlow implementation

Inference in Code

TensorFlow: One of the leading framework

TensorFlow is one of the leading frameworks to implementing deep

learning algorithms.

When I’m building projects, TensorFlow is actually a tool

that I use the most often. The other popular

tool is PyTorch.

But we’re going to focus in this specialization

on TensorFlow.

In this video, let’s take a

look at how you can implement inferencing code using

TensorFlow. Let’s dive in.

One of the remarkable things

about neural networks is the same algorithm

can be applied to so many different

applications.

For this video and in

some of the labs for you to see what the neural

network is doing, I’m going to use another example

to illustrate inference.

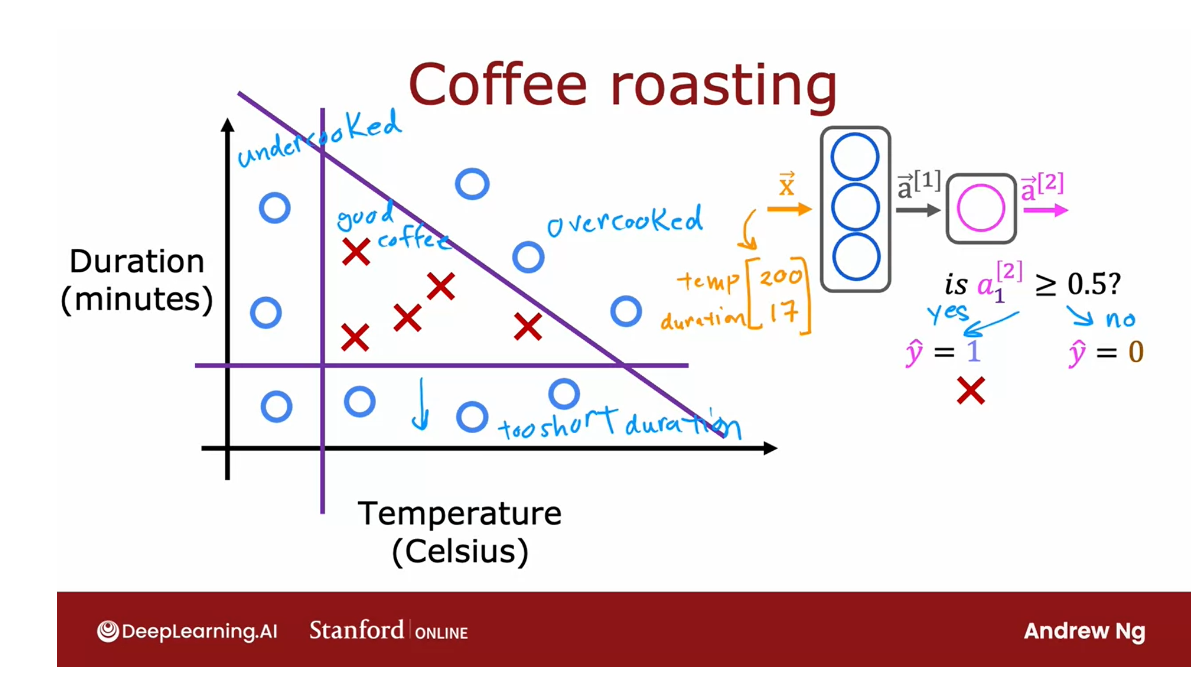

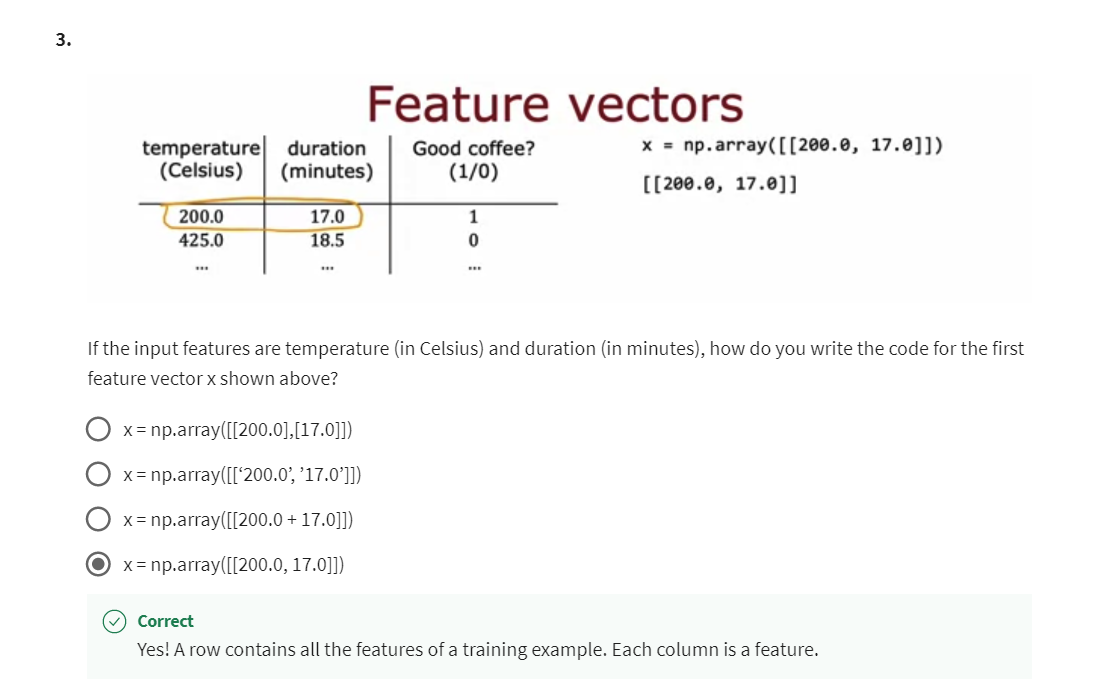

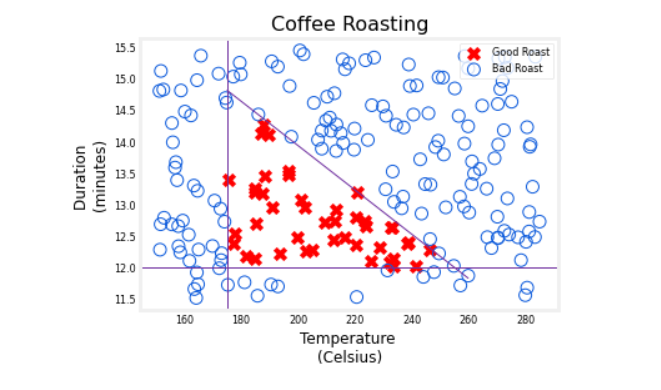

Coffee roasting

Sometimes I do like to roast

coffee beans myself at home. My favorite is actually

Colombian coffee beans.

Can the learning

algorithm help optimize the quality of the beans you get from a roasting

process like this?

When you’re roasting coffee, two parameters you

get to control are the temperature at

which you’re heating up the raw coffee beans to turn them into nicely

roasted coffee beans, as well as the duration or how long are you going

to roast the beans.

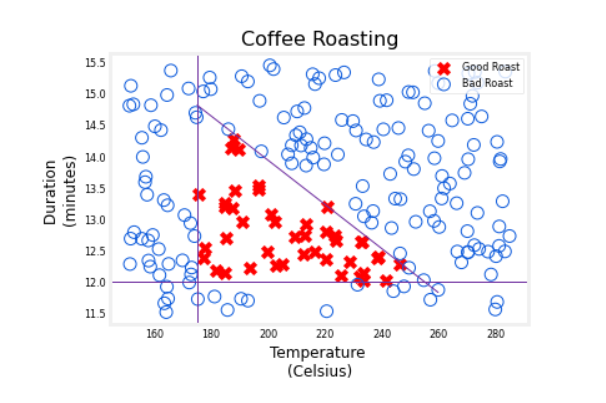

In this slightly

simplified example, we’ve created the datasets of different temperatures

and different durations, as well as labels

showing whether the coffee you roasted

is good-tasting coffee.

Where cross here, the positive cross y equals 1

corresponds to good coffee, and all the negative cross

corresponds to bad coffee.

It looks like a reasonable

way to think of this dataset is if you cook

it at too lower temperature, it doesn’t get roasted and

it ends up undercooked.

If you cook it, not

for long enough, the duration is too short, it’s also not a nicely

roasted set of beans.

Finally, if you were to cook it either for too long or for

too higher temperature, then you end up with

overcooked beans. They’re a little

bit burnt beans. There’s not good coffee either. It’s only points within this little triangle here that corresponds to good coffee.

This example is simplified a bit from actual coffee roasting.

Even though this example is a simplified one for the

purpose of illustration, there have actually

been serious projects using machine learning to optimize coffee

roasting as well.

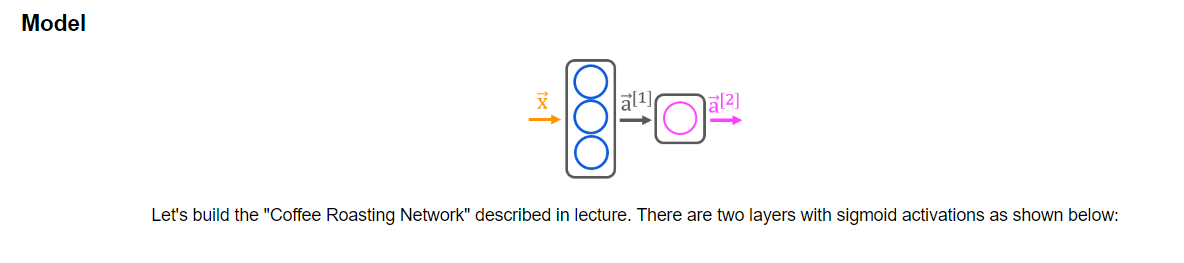

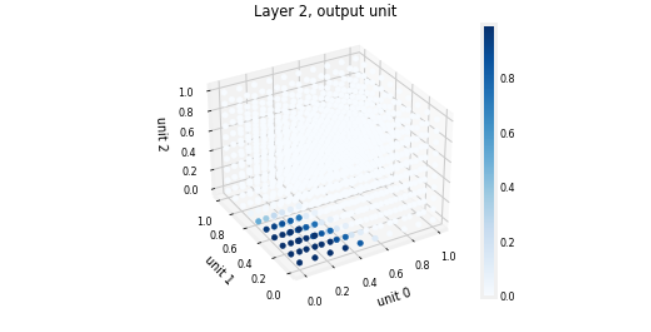

The task is given a feature vector x with both

temperature and duration, say 200 degrees Celsius

for 17 minutes, how can we do inference in a neural network to

get it to tell us whether or not this temperature

and duration setting will result in good

coffee or not? It looks like this.

We’re going to set x to be

an array of two numbers. The input features 200 degrees

celsius and 17 minutes. This here, Layer 1 equals dense

units 3 activation equals sigmoid creates a hidden layer of neurons with

three hidden units, and using as the

activation function, the sigmoid function, and dense here is just

the name of this layer.

Then finally, to compute

the activation values a1, you would write

a1 equals Layer 1 applied to the input features x.Then you create Layer 1 as this first hidden

layer, the neural network, as dense open

parenthesis units 3, that means three units

or three hidden units in this layer using as the activation function,

the sigmoid function.

Dense is another name for the layers of a neural network that we’ve learned about so far. As you learn more

about neural networks, you learn about other

types of layers as well.

But for now, we’ll just

use the dense layer, which is the layer type

you’ve learned about in the last few videos for

all of our examples.

Next, you compute a1

by taking Layer 1, which is actually a function, and applying this function

Layer 1 to the values of x.

That’s how you get a1, which is going to be a

list of three numbers because Layer 1 had three units. So a1 here may, just for the sake

of illustration, be 0.2, 0.7, 0.3.

Next, for the second

hidden layer, Layer 2, would be dense. Now this time it

has one unit and again to sigmoid

activation function, and you can then

compute a2 by applying this Layer 2 function to the activation values

from Layer 1 to a1. That will give you

the value of a2, which for the sake of

illustration is maybe 0.8.

Finally, if you wish to

threshold it at 0.5, then you can just test if a2 is greater and equal to 0.5 and set y-hat equals to one or zero positive or

negative cross accordingly.

That’s how you do inference in the neural network

using TensorFlow.

There are some

additional details that I didn’t go over here, such as how to load the TensorFlow library

and how to also load the parameters w and

b of the neural network.

But we’ll go over

that in the lab. Please be sure to take

a look at the lab. But these are the key

steps for propagation in how you compute a1 and a2

and optionally threshold a2.

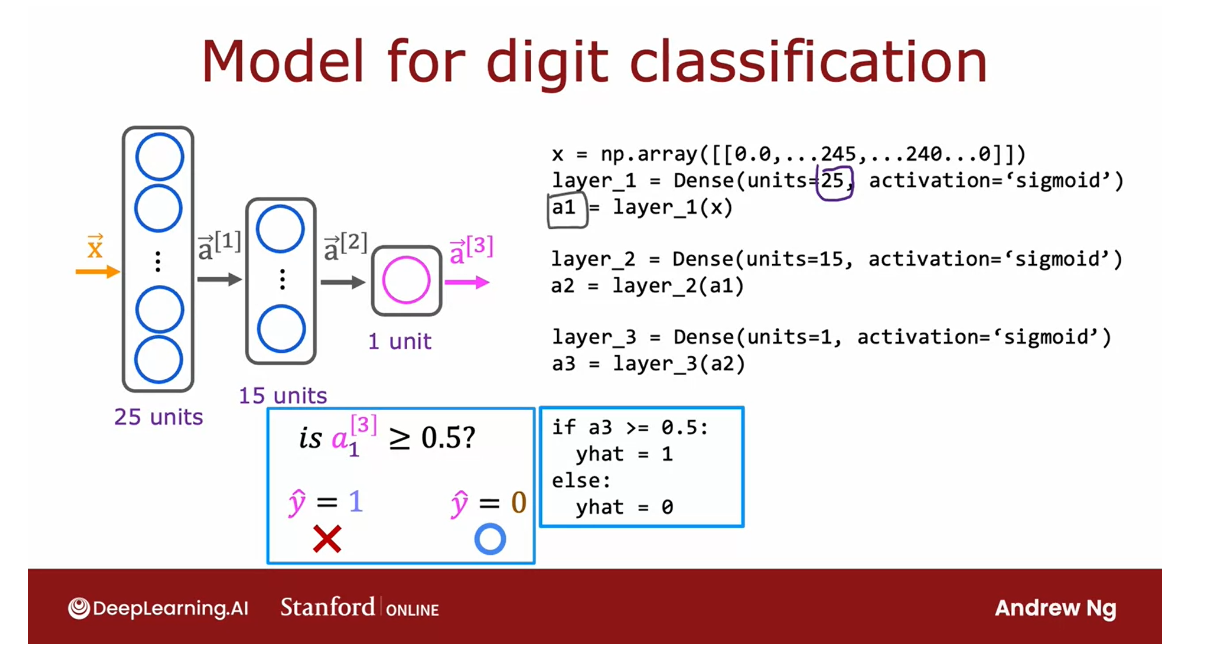

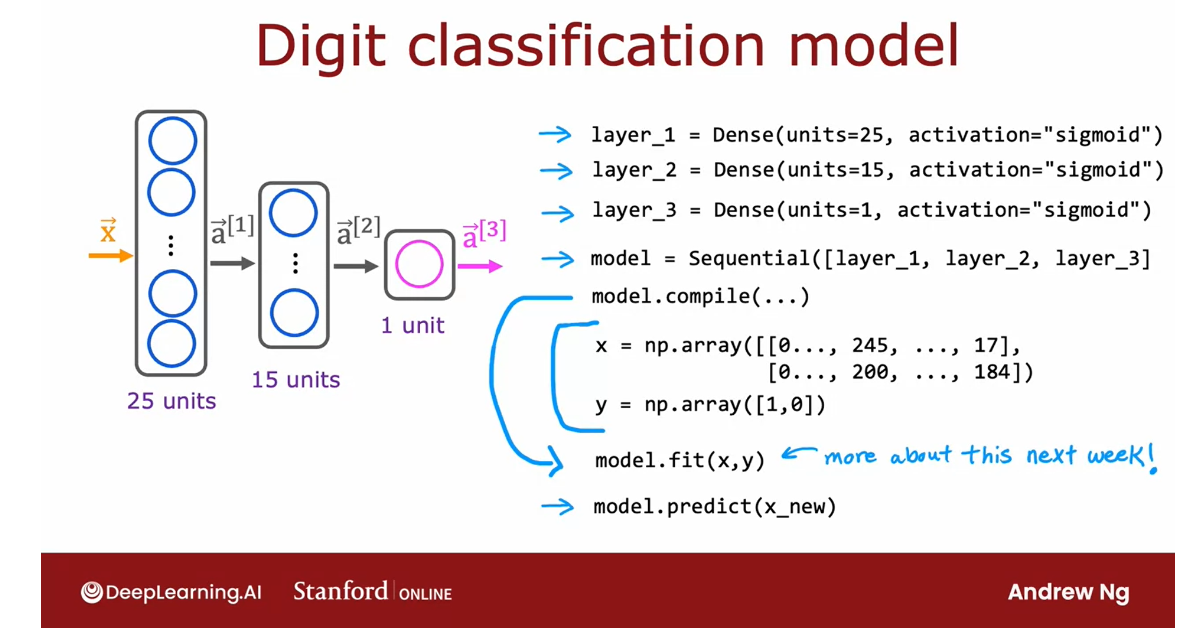

Let’s look at one more example and we’re going to go back to the handwritten digit

classification problem.

In this example, x is a list of the pixel

intensity values. So x is equal to a numpy array of this list

of pixel intensity values.

Then to initialize and carry out one step of

forward propagation, Layer 1 is a dense layer with 25 units and the

sigmoid activation function. You then compute a1 equals the Layer 1

function applied to x.

To build and carry out inference through the

second layer, similarly, you set up Layer 2 as follows, and then computes a2 as

Layer 2 applied to a1. Then finally, Layer 3 is the

third and final dense layer.

Then finally, you can

optionally threshold a3 to come up with a binary

prediction for y-hat.

That’s the syntax for carrying out interference in TensorFlow. One thing I briefly

alluded to is the structure of

the numpy arrays. TensorFlow treats data in a certain way that is

important to get right.

In the next video, let’s take a look at how

TensorFlow handles data.

Data in TensorFlow

Numpy

In this video, I want to step through with

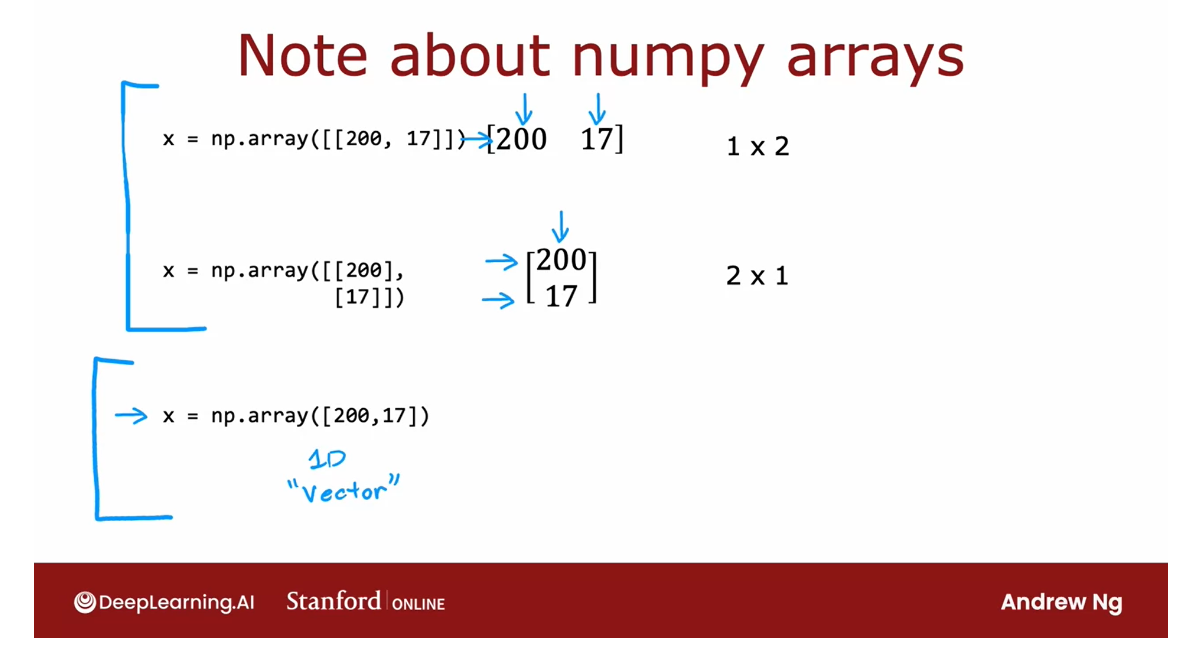

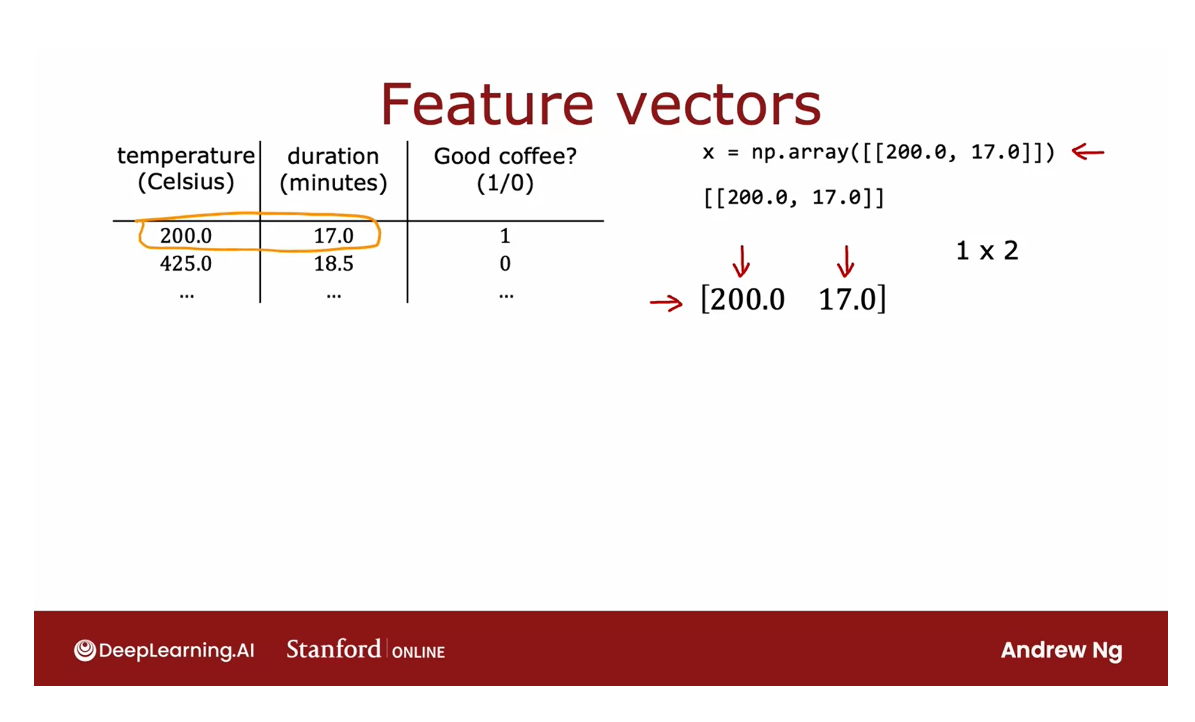

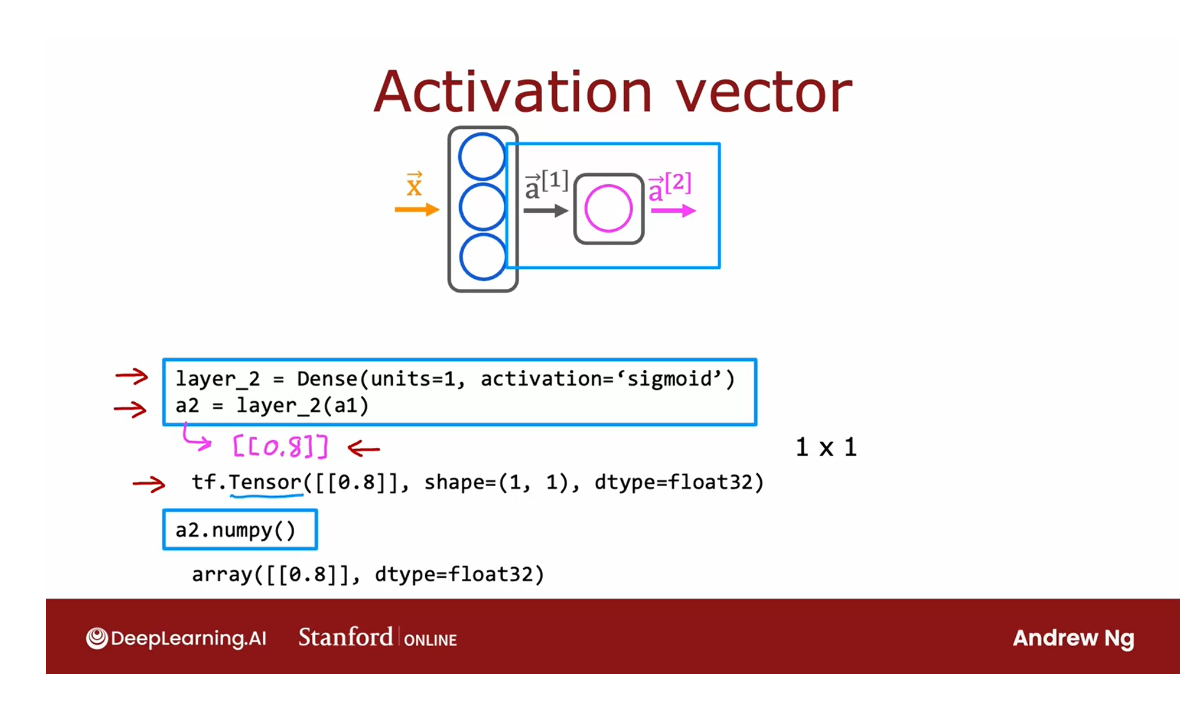

you how data is represented in NumPy and in TensorFlow.