July's Paper-Review GPT, Versions 2.5 and 3: Fine-Tuning GPT-3.5 and Llama 2 13B to Widen the Lead over GPT-4

Preface

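Since I set up and led our large-model project team in July of last year, our company has grown to five project groups:

- The first group's AIGC fashion-model generation system is already live on the July website

- The second group's paper-review GPT will be released publicly in March or April of this year

- Version 1 of the third group's RAG knowledge-base Q&A system was ready before the Spring Festival

- The fourth and fifth groups' projects, a large-model robot and an Agent, are still being iterated on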

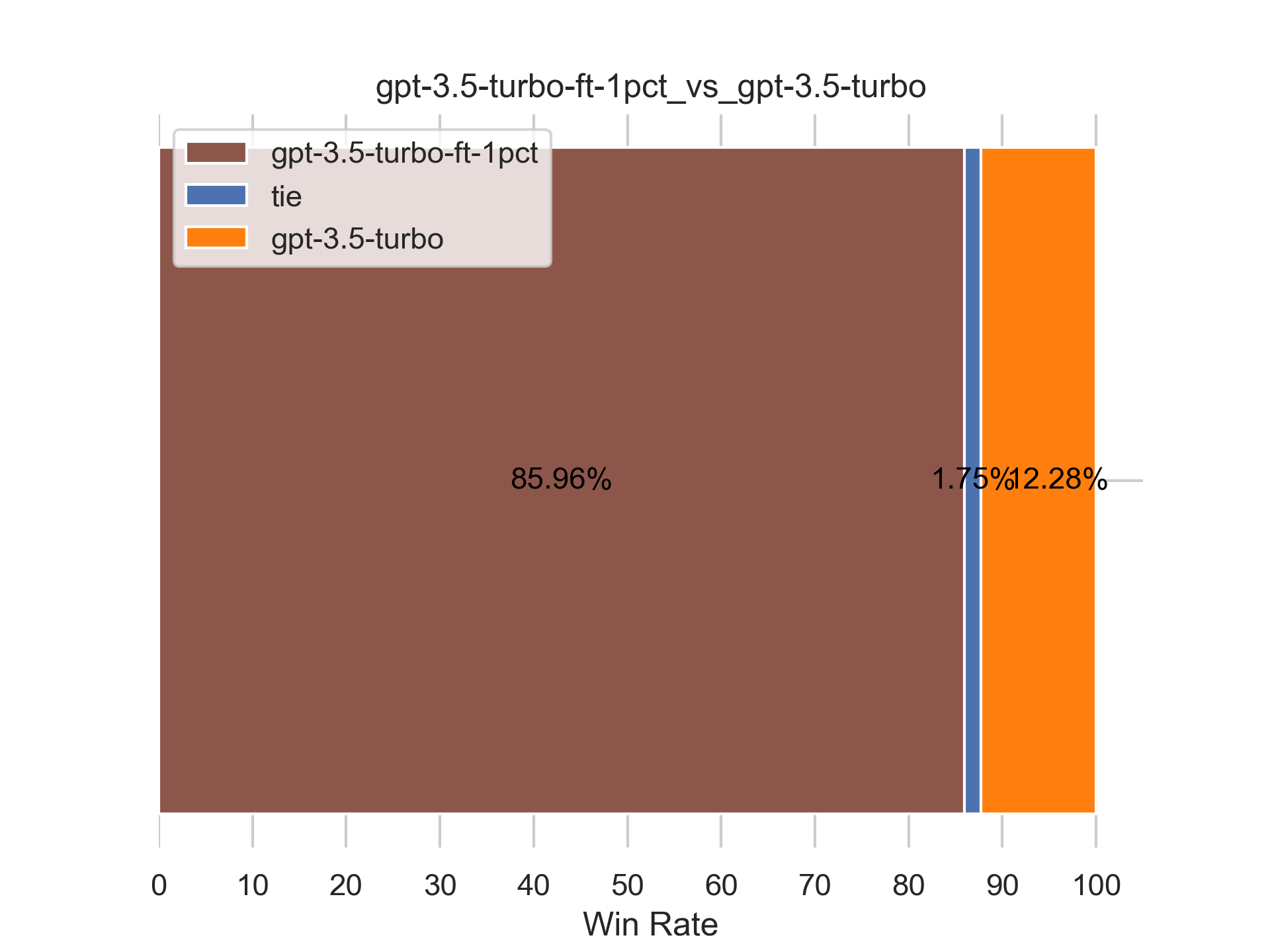

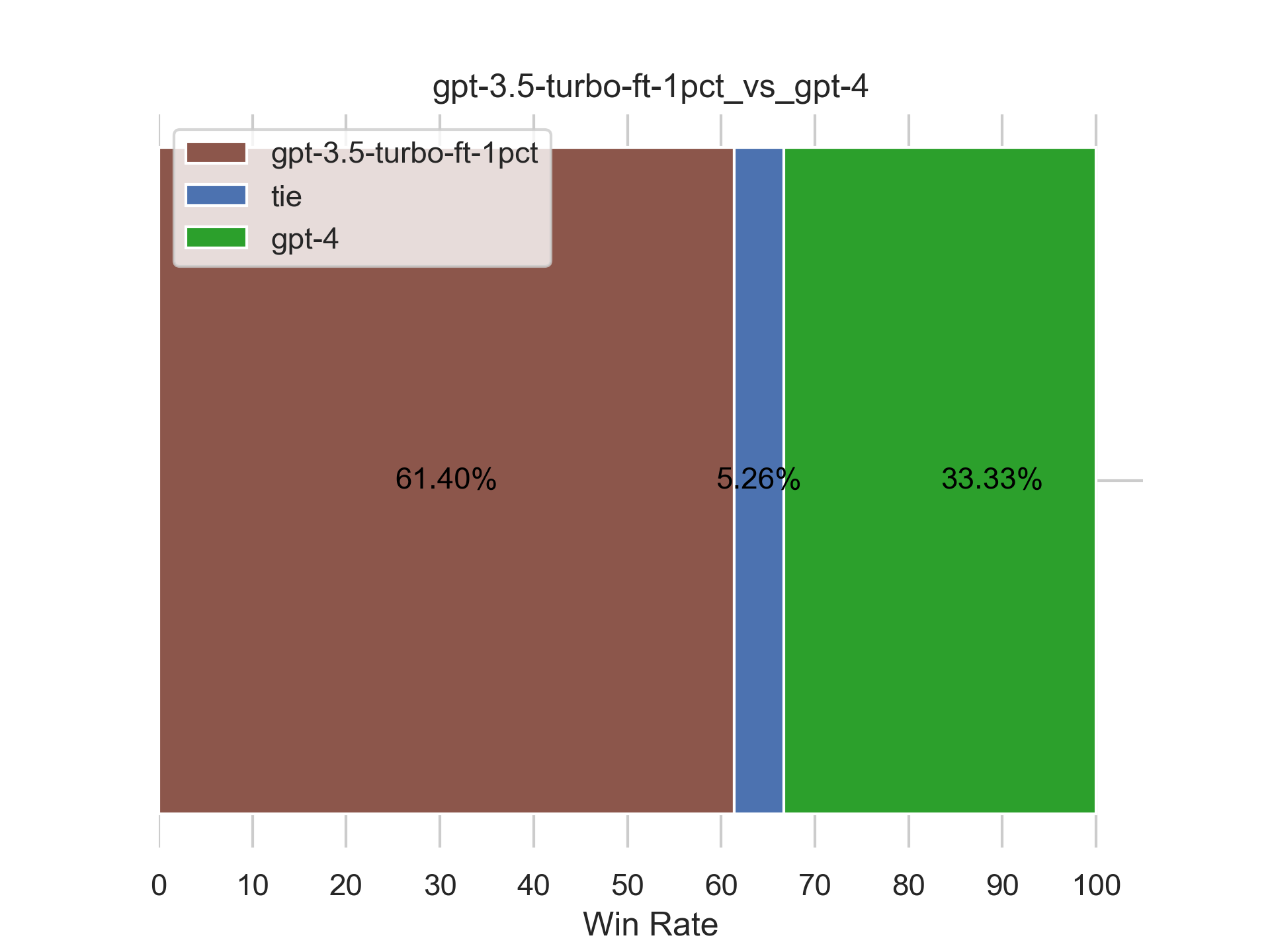

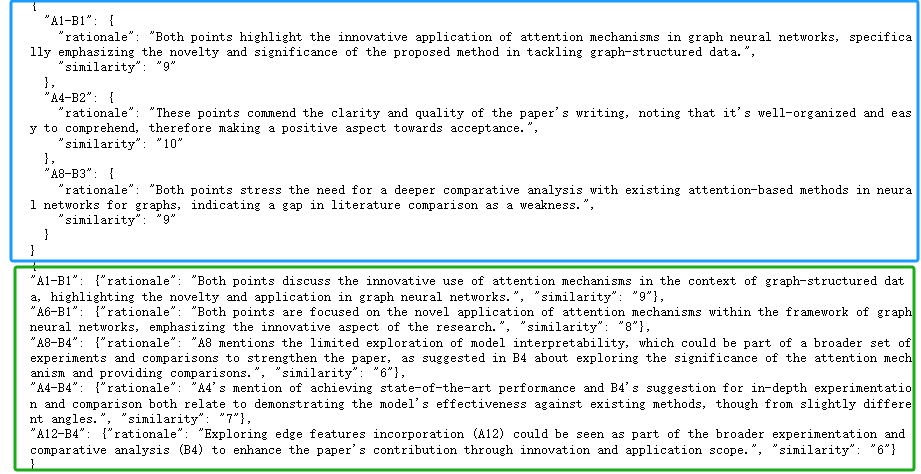

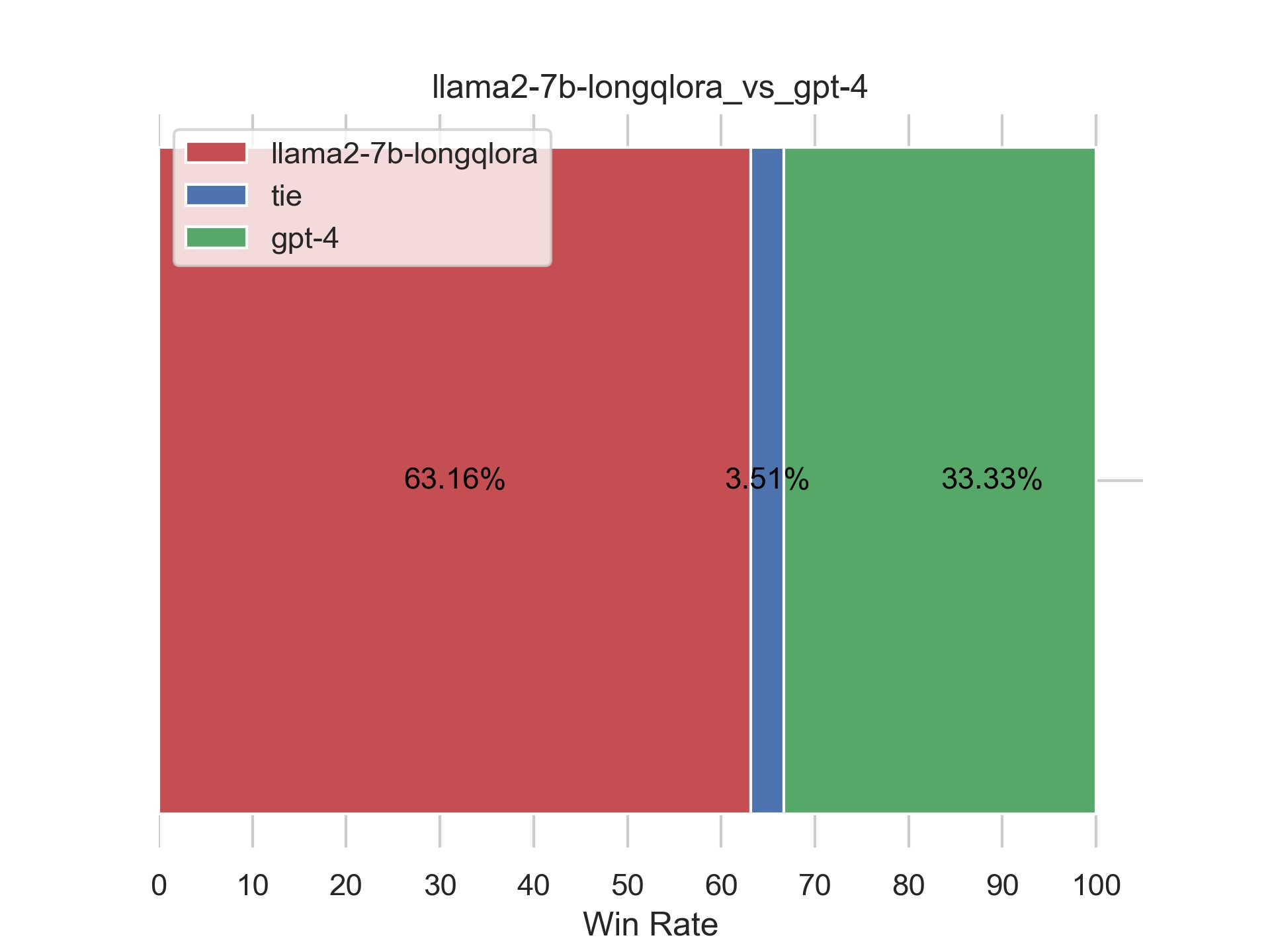

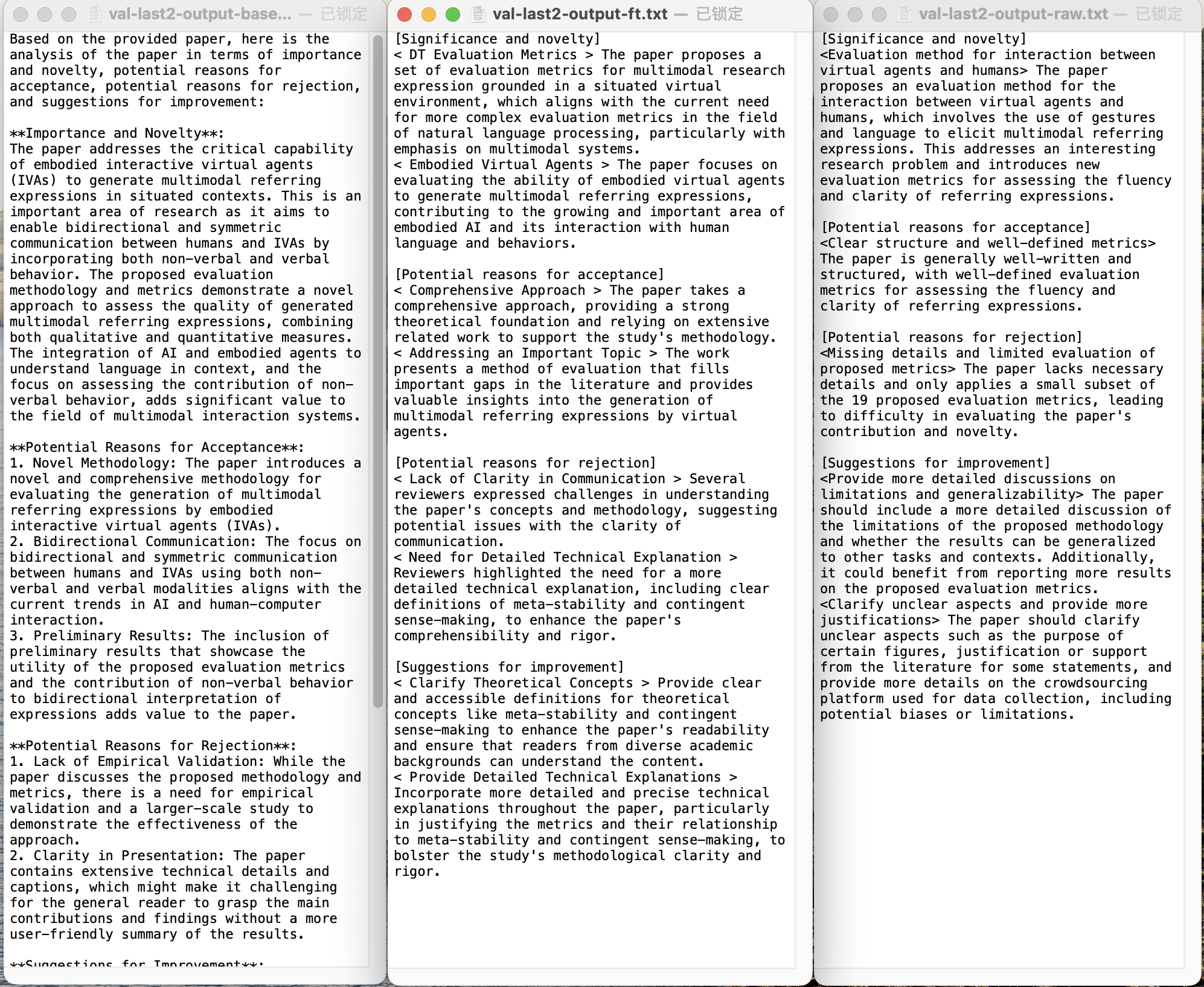

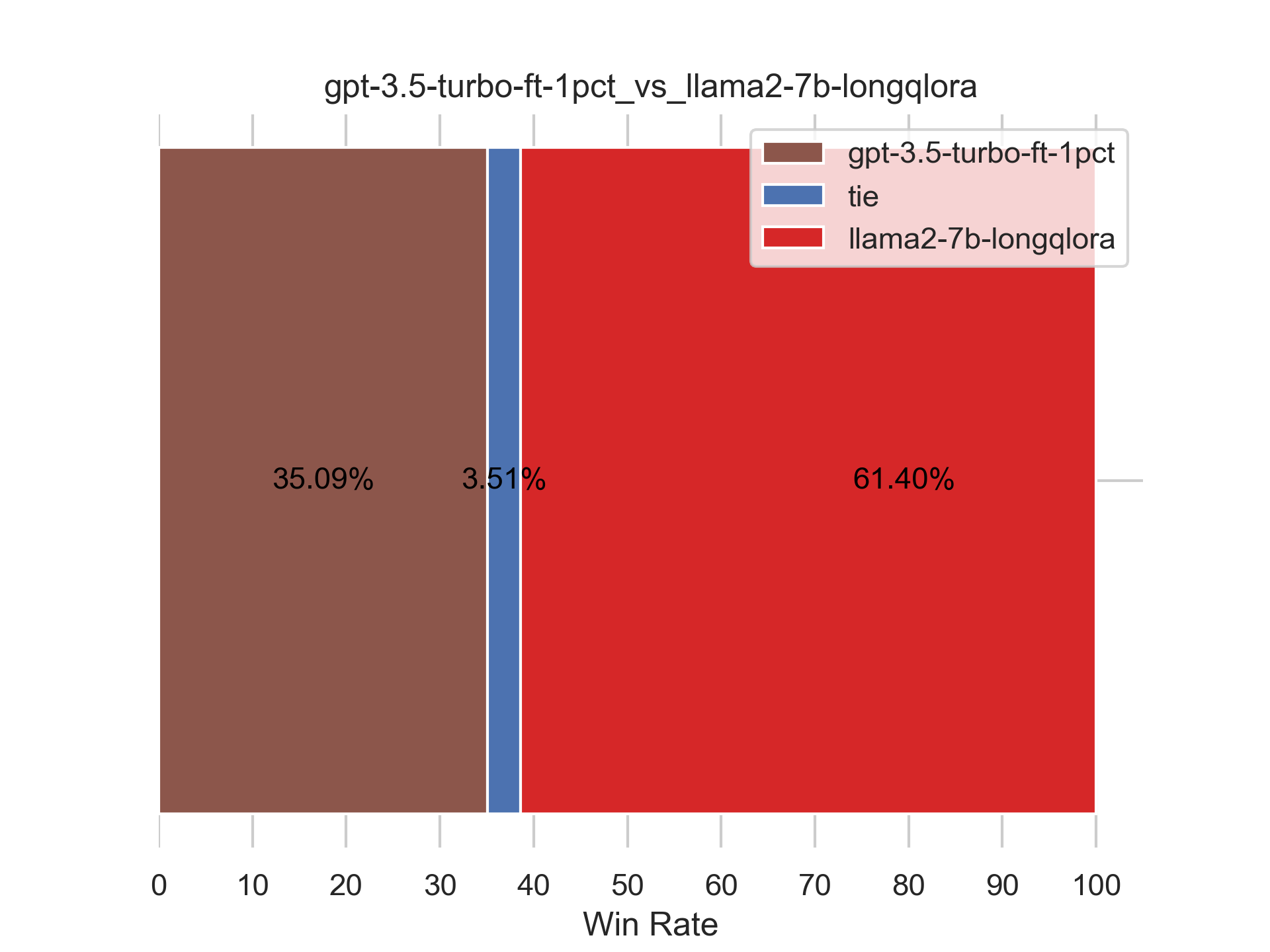

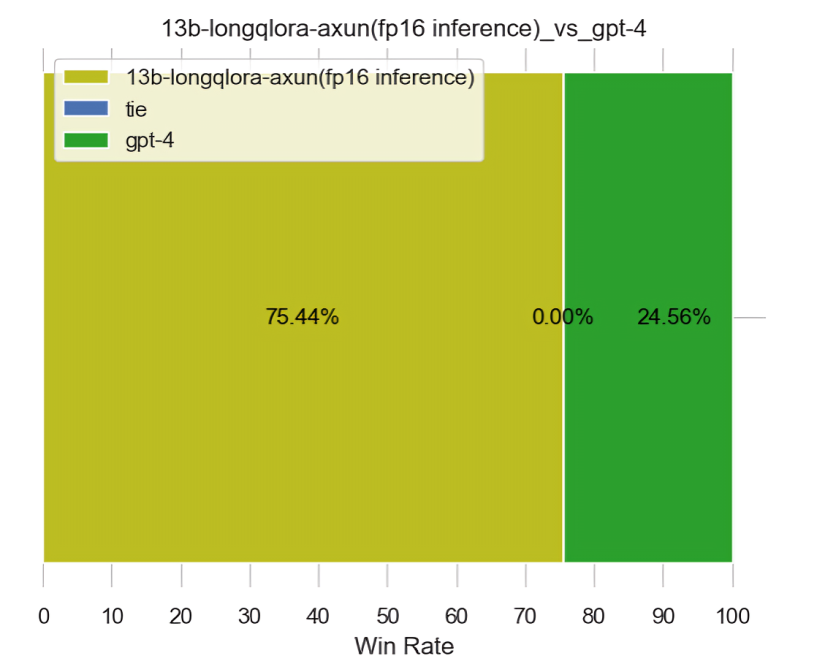

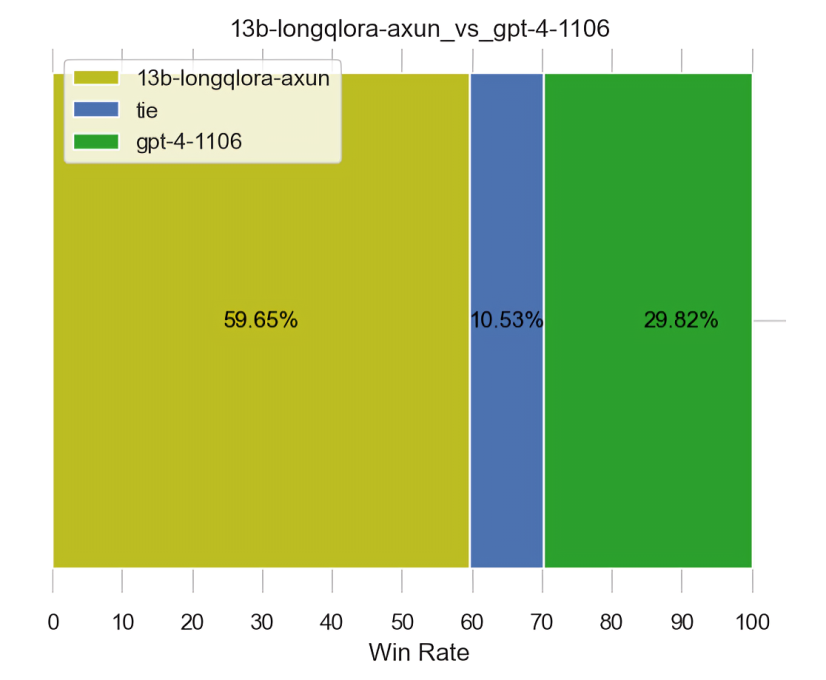

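All of these are commercial projects that will be released publicly. The paper-review GPT has gone through two versions over the past six months, and the second version even outperformed GPT-4 (see "July's Paper-Review GPT, Version 2: Fine-Tuning LLaMA 2 on Over Ten Thousand Paper-Review Records to Finally Overtake GPT-4"; all model evaluation in this post uses the method described in Part 6 of that article). To keep widening our lead over the original GPT-4, we are now iterating on version 2.5, which covers fine-tuning GPT-3.5 Turbo 16K and fine-tuning Llama 2 13B. This post documents that work.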

Part 1: Version 2.5, Fine-Tuning GPT-3.5 Turbo 16K

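When we fine-tuned the first version, we also considered fine-tuning ChatGPT. However, the context length of the fine-tuning interface available then (only 4K as of late October 2023) was too short for most papers, so we did not get to it at the time. Fortunately, on November 6, 2023, at its first developer conference, OpenAI announced that fine-tuning would be opened for GPT-3.5 16K.

So in version 2.5 we can fine-tune ChatGPT: we are trying to fine-tune GPT-3.5 16K on the paper-review dataset of more than ten thousand records that we crawled ourselves, and will then pit the models against one another to see which comes out on top.

However, given the risk that the data could be exposed to OpenAI, we plan to fine-tune on a small portion of the data first, to check whether this route works end to end and to see how the win rates compare: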

- If it beats our fine-tuned open-source model, then ChatGPT really is strong

- If it does not, we will move on to the full dataset
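A small trial run of this kind could be prepared roughly as follows. This is only a sketch under stated assumptions, not the pipeline actually used: it assumes each crawled record is a JSON object with `input` and `output` string fields, and it maps each record to the chat-style `messages` format that OpenAI's fine-tuning guide describes for gpt-3.5-turbo. The system prompt, the helper names, and the subset size are hypothetical.

```python
import json
import random

# Hypothetical system prompt; the post does not state the one actually used.
SYSTEM_PROMPT = "You are a reviewer. Write a structured review of the given paper."


def to_chat_example(record):
    """Map one {"input", "output"} record to the chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": record["input"]},
            {"role": "assistant", "content": record["output"]},
        ]
    }


def sample_subset(records, k, seed=0):
    """Pick k records reproducibly; k is capped at the dataset size."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))


def to_jsonl(records):
    """Serialize chat examples as JSONL: one JSON object per line."""
    return "\n".join(
        json.dumps(to_chat_example(r), ensure_ascii=False) for r in records
    )


# Toy stand-in data, not real paper-review records.
toy_records = [
    {"input": f"[TITLE]\nPaper {i}", "output": f"[Significance and novelty]\nReview {i}"}
    for i in range(10)
]

subset = sample_subset(toy_records, k=3)
jsonl_text = to_jsonl(subset)
print(jsonl_text.split("\n")[0])
```

Fixing the random seed keeps the subset reproducible, so a later full-data run can be compared against exactly the same trial records.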

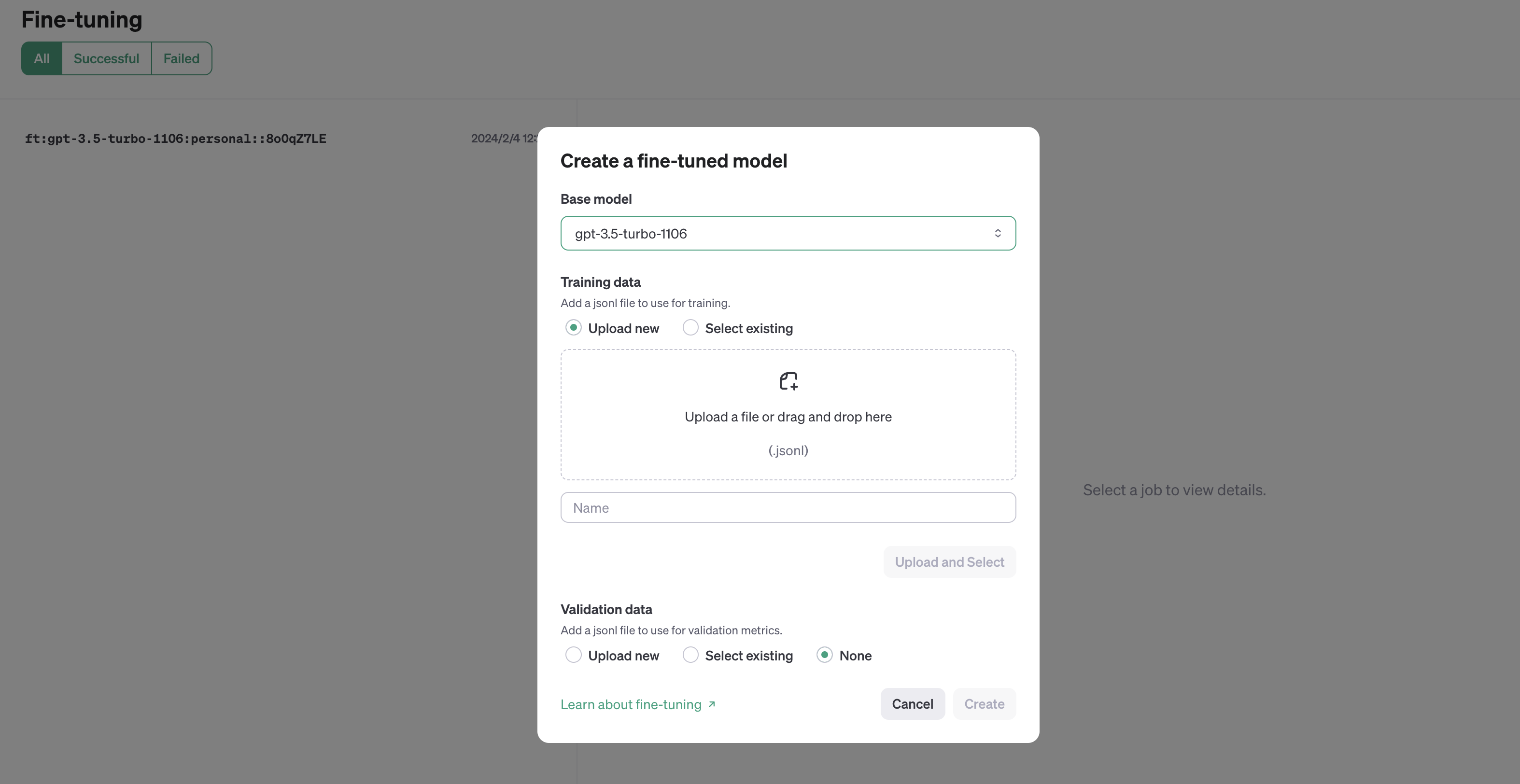

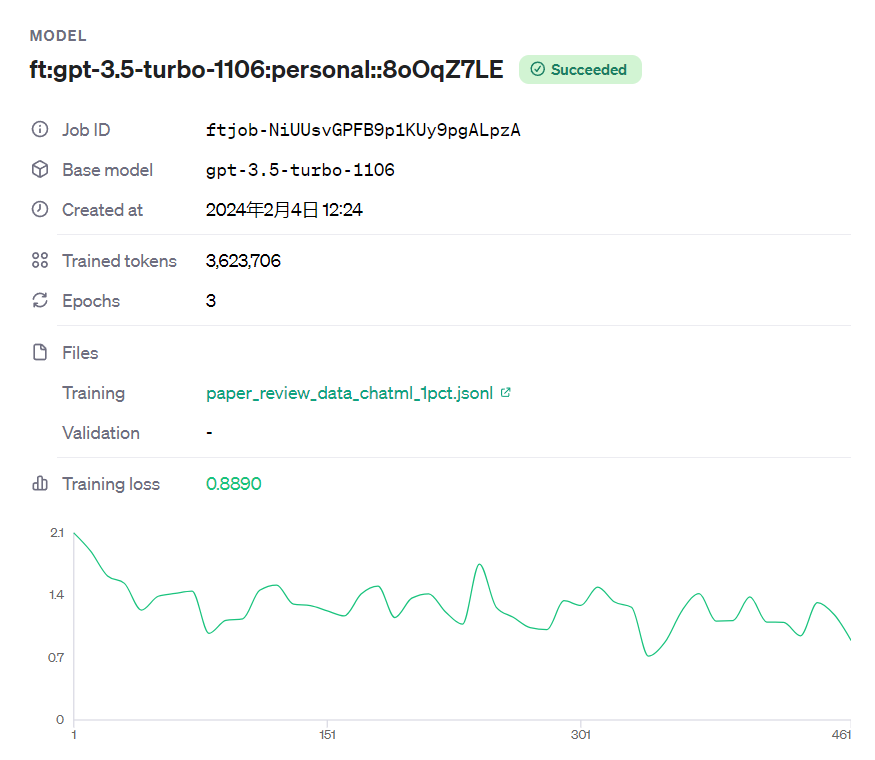

1.1 Model training: fine-tuning GPT-3.5 Turbo 16K

1.1.1 Preliminary research for fine-tuning GPT-3.5: cost, fine-tuning workflow, format conversion, and so on

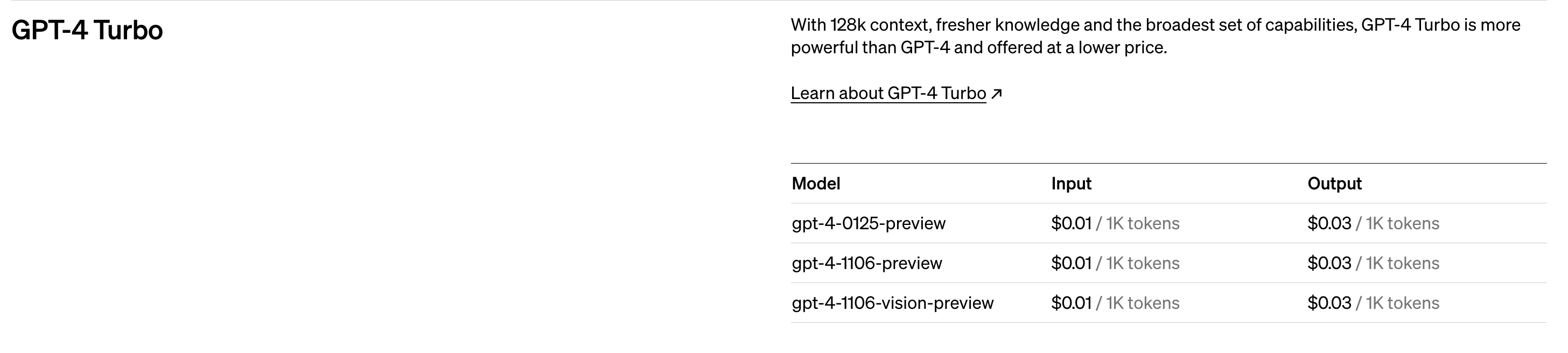

- First, estimate the cost of fine-tuning GPT

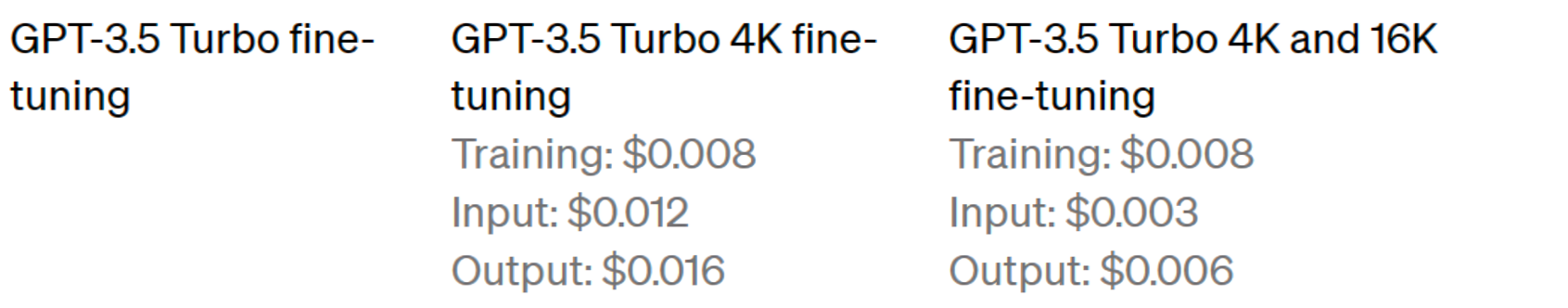

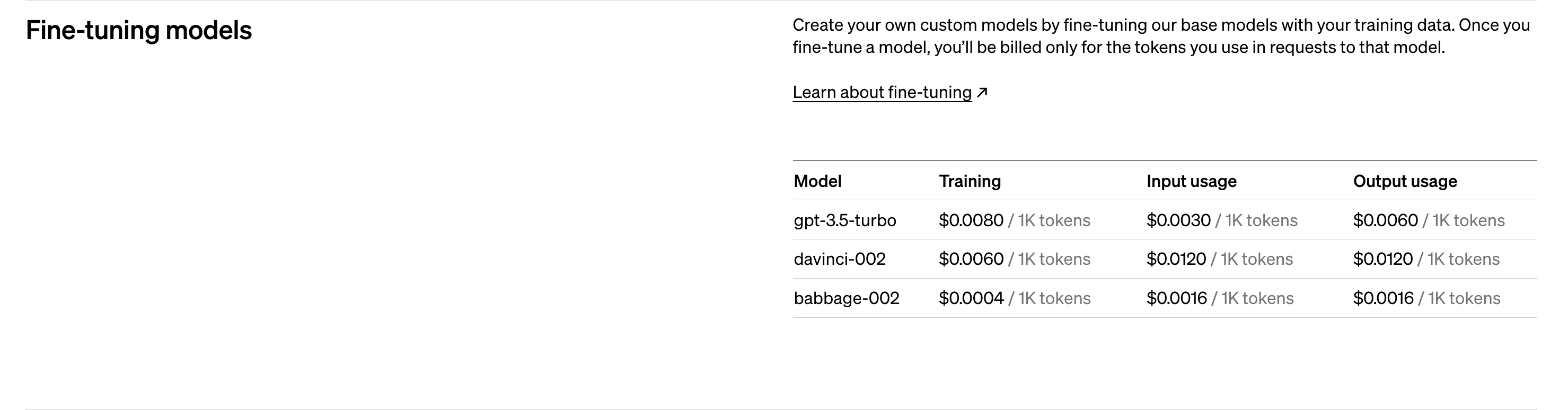

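The 15,566-record paper-review dataset that we crawled contains 118,689,950 tokens in total.

Given OpenAI's pricing for fine-tuning gpt-3.5-turbo (Pricing),

the estimated training cost for the full dataset, assuming 2 epochs, is: 118,689,950 tokens × 2 epochs ÷ 1,000 × $0.008 per 1K tokens × 7.18 CNY/USD ≈ 13,635 CNY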
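The estimate can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this post and reads the 0.008 as a price in USD per 1K training tokens, which is the reading under which the quoted total works out; the function and constant names are illustrative.

```python
# Figures quoted in the post.
NUM_SAMPLES = 15_566
TOTAL_TOKENS = 118_689_950
EPOCHS = 2
USD_PER_1K_TRAINING_TOKENS = 0.008  # assumption: price is per 1K tokens
CNY_PER_USD = 7.18


def training_cost_cny(total_tokens, epochs, usd_per_1k, cny_per_usd):
    """Estimated training cost: every token is billed once per epoch."""
    billed_tokens = total_tokens * epochs
    cost_usd = billed_tokens / 1000 * usd_per_1k
    return cost_usd * cny_per_usd


cost = training_cost_cny(
    TOTAL_TOKENS, EPOCHS, USD_PER_1K_TRAINING_TOKENS, CNY_PER_USD
)
avg_tokens = TOTAL_TOKENS / NUM_SAMPLES

print(f"estimated training cost: {cost:,.0f} CNY")       # 13,635 CNY
print(f"average tokens per sample: {avg_tokens:,.0f}")   # 7,625
```

The average of roughly 7.6K tokens per sample is also consistent with the earlier observation that a 4K context window is too short for most papers, while 16K leaves headroom.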

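- Next, this is the fine-tuning page: https://platform.openai.com/finetune

In addition, this is the fine-tuning tutorial on the OpenAI website: https://platform.openai.com/docs/guides/fine-tuning/fine-tuning-examples

- Then, following the hints given in OpenAI's fine-tuning tutorial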

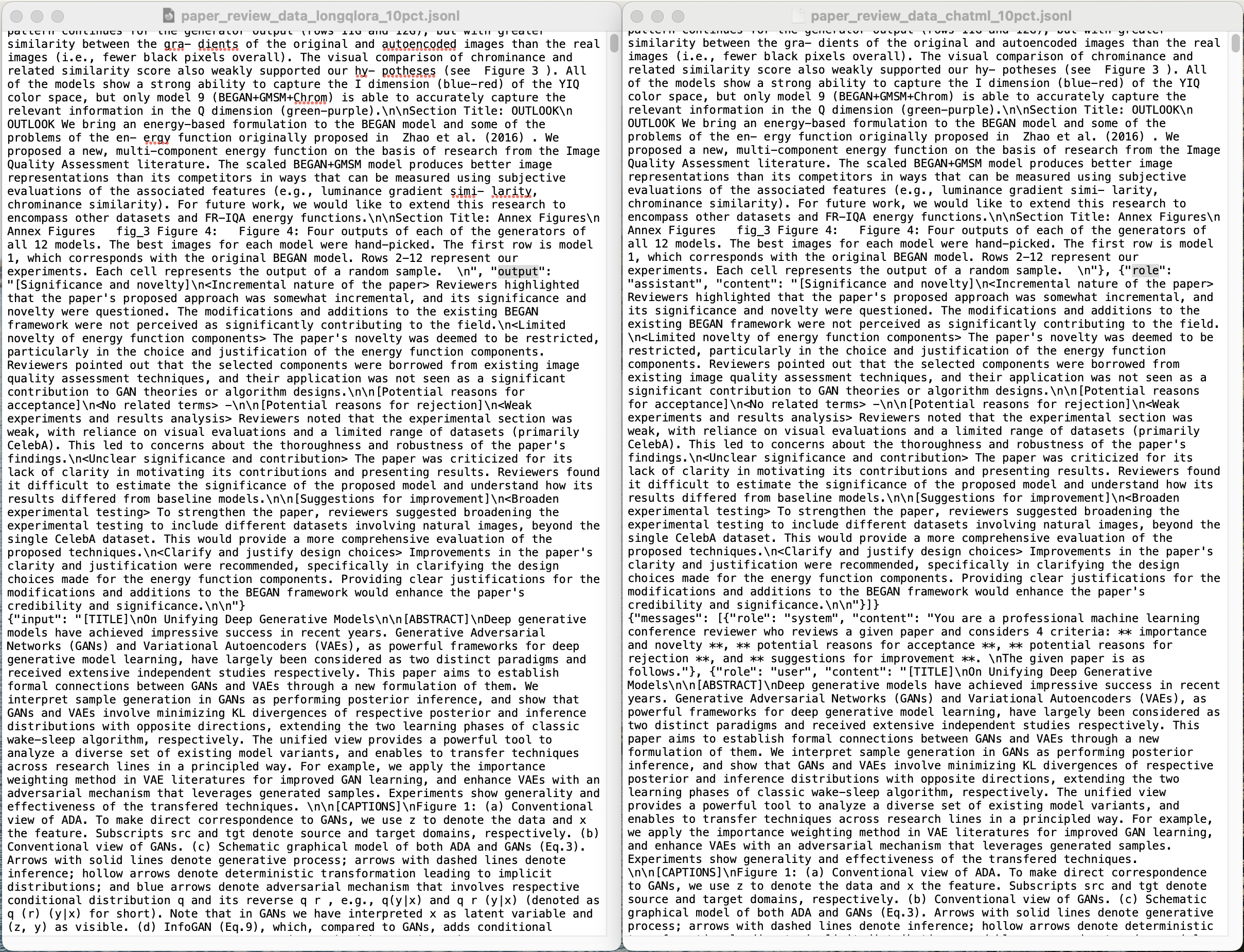

we take the data that we crawled ourselves, for example:

- {"input": "[TITLE]\nImage Quality Assessment Techniques Improve Training and Evaluation of Energy-Based Generative Adversarial Networks\n\n[ABSTRACT]\nWe propose a new, multi-component energy function for energy-based Generative Adversarial Networks (GANs) based on methods from the image quality assessment literature. Our approach expands on the Boundary Equilibrium Generative Adversarial Network (BEGAN) by outlining some of the short-comings of the original energy and loss functions. We address these short-comings by incorporating an l1 score, the Gradient Magnitude Similarity score, and a chrominance score into the new energy function. We then provide a set of systematic experiments that explore its hyper-parameters. We show that each of the energy function's components is able to represent a slightly different set of features, which require their own evaluation criteria to assess whether they have been adequately learned. We show that models using the new energy function are able to produce better image representations than the BEGAN model in predicted ways.\n\n[CAPTIONS]\nFigure 1: From left to right, the images are the original image, a contrast stretched image, an image with impulsive noise contamination, and a Gaussian smoothed image. Although these images differ greatly in quality, they all have the same MSE from the original image (about 400), suggesting that MSE is a limited technique for measuring image quality.\nFigure 2: Comparison of the gradient (edges in the image) for models 11 (BEGAN) and 12 (scaled BEGAN+GMSM), where O is the original image, A is the autoencoded image, OG is the gradient of the original image, AG is the gradient of the autoencoded image, and S is the gradient magnitude similarity score for the discriminator (D) and generator (G). 
White equals greater similarity (better performance) and black equals lower similarity for the final column.\nFigure 3: Comparison of the chrominance for models 9 (BEGAN+GMSM+Chrom), 11 (BEGAN) and 12 (scaled BEGAN+GMSM), where O is the original image, OC is the original image in the corresponding color space, A is the autoencoded image in the color space, and S is the chrominance similarity score. I and Q indicate the (blue-red) and (green-purple) color dimensions, respectively. All images were normalized relative to their maximum value to increase luminance. Note that pink and purple approximate a similarity of 1, and green and blue approximate a similarity of 0 for I and Q dimensions, respectively. The increased gradient 'speckling' of model 12Q suggests an inverse relationship between the GMSM and chrominance distance functions.\nTable 1: Models and their corresponding model distance function parameters. The l 1 , GMSM, and Chrom parameters are their respective β d values from Equation 8.\nTable 2: Lists the models, their discriminator mean error scores, and their standard deviations for the l 1 , GMSM, and chrominance distance functions over all training epochs. Bold values show the best scores for similar models. Double lines separate sets of similar models. Values that are both bold and italic indicate the best scores overall, excluding models that suffered from modal collapse. These results suggest that model training should be customized to emphasize the relevant components.\n\n[CONTENT]\nSection Title: INTRODUCTION\n INTRODUCTION\n\nSection Title: IMPROVING LEARNED REPRESENTATIONS FOR GENERATIVE MODELING\n IMPROVING LEARNED REPRESENTATIONS FOR GENERATIVE MODELING Radford et al. (2015) demonstrated that Generative Adversarial Networks (GANs) are a good unsu- pervised technique for learning representations of images for the generative modeling of 2D images. Since then, a number of improvements have been made. First, Zhao et al. 
(2016) modified the error signal of the deep neural network from the original, single parameter criterion to a multi-parameter criterion using auto-encoder reconstruction loss. Berthelot et al. (2017) then further modified the loss function from a hinge loss to the Wasserstein distance between loss distributions. For each modification, the proposed changes improved the resulting output to visual inspection (see Ap- pendix A Figure 4 , Row 1 for the output of the most recent, BEGAN model). We propose a new loss function, building on the changes of the BEGAN model (called the scaled BEGAN GMSM) that further modifies the loss function to handle a broader range of image features within its internal representation.\n\nSection Title: GENERATIVE ADVERSARIAL NETWORKS\n GENERATIVE ADVERSARIAL NETWORKS Generative Adversarial Networks are a form of two-sample or hypothesis testing that uses a classi- fier, called a discriminator, to distinguish between observed (training) data and data generated by the model or generator. Training is then simplified to a competing (i.e., adversarial) objective between the discriminator and generator, where the discriminator is trained to better differentiate training from generated data, and the generator is trained to better trick the discriminator into thinking its generated data is real. The convergence of a GAN is achieved when the generator and discriminator reach a Nash equilibrium, from a game theory point of view (Zhao et al., 2016). In the original GAN specification, the task is to learn the generator's distribution p G over data x ( Goodfellow et al., 2014 ). To accomplish this, one defines a generator function G(z; θ G ), which produces an image using a noise vector z as input, and G is a differentiable function with param- eters θ G . The discriminator is then specified as a second function D(x; θ D ) that outputs a scalar representing the probability that x came from the data rather than p G . 
D is then trained to maxi- mize the probability of assigning the correct labels to the data and the image output of G while G is trained to minimize the probability that D assigns its output to the fake class, or 1 − D(G(z)). Although G and D can be any differentiable functions, we will only consider deep convolutional neural networks in what follows. Zhao et al. (2016) initially proposed a shift from the original single-dimensional criterion-the scalar class probability-to a multidimensional criterion by constructing D as an autoencoder. The image output by the autoencoder can then be directly compared to the output of G using one of the many standard distance functions (e.g., l 1 norm, mean square error). However, Zhao et al. (2016) also proposed a new interpretation of the underlying GAN architecture in terms of an energy-based model ( LeCun et al., 2006 ).\n\nSection Title: ENERGY-BASED GENERATIVE ADVERSARIAL NETWORKS\n ENERGY-BASED GENERATIVE ADVERSARIAL NETWORKS The basic idea of energy-based models (EBMs) is to map an input space to a single scalar or set of scalars (called its \"energy\") via the construction of a function ( LeCun et al., 2006 ). Learning in this framework modifies the energy surface such that desirable pairings get low energies while undesir- able pairings get high energies. This framework allows for the interpretation of the discriminator (D) as an energy function that lacks any explicit probabilistic interpretation (Zhao et al., 2016). In this view, the discriminator is a trainable cost function for the generator that assigns low energy val- ues to regions of high data density and high energy to the opposite. The generator is then interpreted as a trainable parameterized function that produces samples in regions assigned low energy by the discriminator. To accomplish this setup, Zhao et al. (2016) first define the discriminator's energy function as the mean square error of the reconstruction loss of the autoencoder, or: Zhao et al. 
(2016) then define the loss function for their discriminator using a form of margin loss. L D (x, z) = E D (x) + [m − E D (G(z))] + (2) where m is a constant and [·] + = max(0, ·). They define the loss function for their generator: The authors then prove that, if the system reaches a Nash equilibrium, then the generator will pro- duce samples that cannot be distinguished from the dataset. Problematically, simple visual inspec- tion can easily distinguish the generated images from the dataset.\n\nSection Title: DEFINING THE PROBLEM\n DEFINING THE PROBLEM It is clear that, despite the mathematical proof of Zhao et al. (2016) , humans can distinguish the images generated by energy-based models from real images. There are two direct approaches that could provide insight into this problem, both of which are outlined in the original paper. The first approach that is discussed by Zhao et al. (2016) changes Equation 2 to allow for better approxima- tions than m. The BEGAN model takes this approach. The second approach addresses Equation 1, but was only implicitly addressed when (Zhao et al., 2016) chose to change the original GAN to use the reconstruction error of an autoencoder instead of a binary logistic energy function. We chose to take the latter approach while building on the work of BEGAN. Our main contributions are as follows: • An energy-based formulation of BEGAN's solution to the visual problem. • An energy-based formulation of the problems with Equation 1. • Experiments that explore the different hyper-parameters of the new energy function. • Evaluations that provide greater detail into the learned representations of the model. 
• A demonstration that scaled BEGAN+GMSM can be used to generate better quality images from the CelebA dataset at 128x128 pixel resolution than the original BEGAN model in quantifiable ways.\n\nSection Title: BOUNDARY EQUILIBRIUM GENERATIVE ADVERSARIAL NETWORKS\n BOUNDARY EQUILIBRIUM GENERATIVE ADVERSARIAL NETWORKS The Boundary Equilibrium Generative Adversarial Network (BEGAN) makes a number of modi- fications to the original energy-based approach. However, the most important contribution can be summarized in its changes to Equation 2. In place of the hinge loss, Berthelot et al. (2017) use the Wasserstein distance between the autoencoder reconstruction loss distributions of G and D. They also add three new hyper-parameters in place of m: k t , λ k , and γ. Using an energy-based approach, we get the following new equation: The value of k t is then defined as: k t+1 = k t + λ k (γE D (x) − E D (G(z))) for each t (5) where k t ∈ [0, 1] is the emphasis put on E(G(z)) at training step t for the gradient of E D , λ k is the learning rate for k, and γ ∈ [0, 1]. Both Equations 2 and 4 are describing the same phenomenon: the discriminator is doing well if either 1) it is properly reconstructing the real images or 2) it is detecting errors in the reconstruction of the generated images. Equation 4 just changes how the model achieves that goal. In the original equation (Equation 2), we punish the discriminator (L D → ∞) when the generated input is doing well (E D (G(z)) → 0). In Equation 4, we reward the discriminator (L D → 0) when the generated input is doing poorly (E D (G(z)) → ∞). What is also different between Equations 2 and 4 is the way their boundaries function. In Equation 2, m only acts as a one directional boundary that removes the impact of the generated input on the discriminator if E D (G(z)) > m. In Equation 5, γE D (x) functions in a similar but more complex way by adding a dependency to E D (x). 
Instead of 2 conditions on either side of the boundary m, there are now four: The optimal condition is condition 1 Berthelot et al. (2017) . Thus, the BEGAN model tries to keep the energy of the generated output approaching the limit of the energy of the real images. As the latter will change over the course of learning, the resulting boundary dynamically establishes an equilibrium between the energy state of the real and generated input. It is not particularly surprising that these modifications to Equation 2 show improvements. Zhao et al. (2016) devote an appendix section to the correct selection of m and explicitly mention that the \"balance between... real and fake samples[s]\" (italics theirs) is crucial to the correct selection of m. Unsurprisingly, a dynamically updated parameter that accounts for this balance is likely to be the best instantiation of the authors' intuitions and visual inspection of the resulting output supports this (see Berthelot et al., 2017 ). We chose a slightly different approach to improving the proposed loss function by changing the original energy function (Equation 1).\n\nSection Title: FINDING A NEW ENERGY FUNCTION VIA IMAGE QUALITY ASSESSMENT\n FINDING A NEW ENERGY FUNCTION VIA IMAGE QUALITY ASSESSMENT In the original description of the energy-based approach to GANs, the energy function was defined as the mean square error (MSE) of the reconstruction loss of the autoencoder (Equation 1). Our first insight was a trivial generalization of Equation 1: E(x) = δ(D(x), x) (6) where δ is some distance function. This more general equation suggests that there are many possible distance functions that could be used to describe the reconstruction error and that the selection of δ is itself a design decision for the resulting energy and loss functions. Not surprisingly, an entire field of study exists that focuses on the construction of similar δ functions in the image domain: the field of image quality assessment (IQA). 
The field of IQA focuses on evaluating the quality of digital images ( Wang & Bovik, 2006 ). IQA is a rich and diverse field that merits substantial further study. However, for the sake of this paper, we want to emphasize three important findings from this field. First, distance functions like δ are called full-reference IQA (or FR-IQA) functions because the reconstruction (D(x)) has a 'true' or undistorted reference image (x) which it can be evaluated from Wang et al. (2004) . Second, IQA researchers have known for a long time that MSE is a poor indicator of image quality ( Wang & Bovik, 2006 ). And third, there are numerous other functions that are better able to indicate image quality. We explain each of these points below. One way to view the FR-IQA approach is in terms of a reference and distortion vector. In this view, an image is represented as a vector whose dimensions correspond with the pixels of the image. The reference image sets up the initial vector from the origin, which defines the original, perfect image. The distorted image is then defined as another vector defined from the origin. The vector that maps the reference image to the distorted image is called the distortion vector and FR-IQA studies how to evaluate different types of distortion vectors. In terms of our energy-based approach and Equation 6, the distortion vector is measured by δ and it defines the surface of the energy function. MSE is one of the ways to measure distortion vectors. It is based in a paradigm that views the loss of quality in an image in terms of the visibility of an error signal, which MSE quantifies. Problem- atically, it has been shown that MSE actually only defines the length of a distortion vector not its type ( Wang & Bovik, 2006 ). For any given reference image vector, there are an entire hypersphere of other image vectors that can be reached by a distortion vector of a given size (i.e., that all have the same MSE from the reference image; see Figure 1 ). 
A number of different measurement techniques have been created that improve upon MSE (for a review, see Chandler, 2013 ). Often these techniques are defined in terms of the similarity (S) between the reference and distorted image, where δ = 1−S. One of the most notable improvements is the Structural Similarity Index (SSIM), which measures the similarity of the luminance, contrast, and structure of the reference and distorted image using the following similarity function: 2 S(v d , v r ) = 2v d v r + C v 2 d + v 2 r + C (7) where v d is the distorted image vector, v r is the reference image vector, C is a constant, and all multiplications occur element-wise Wang & Bovik (2006) . 3 This function has a number of desirable features. It is symmetric (i.e., S(v d , v r ) = S(v r , v d ), bounded by 1 (and 0 for x > 0), and it has a unique maximum of 1 only when v d = v r . Although we chose not to use SSIM as our energy function (δ) as it can only handle black-and-white images, its similarity function (Equation 7) informs our chosen technique. The above discussion provides some insights into why visual inspection fails to show this correspon- dence between real and generated output of the resulting models, even though Zhao et al. (2016) proved that the generator should produce samples that cannot be distinguished from the dataset. The original proof by Zhao et al. (2016) did not account for Equation 1. Thus, when Zhao et al. (2016) show that their generated output should be indistinguishable from real images, what they are actu- ally showing is that it should be indistinguishable from the real images plus some residual distortion vector described by δ. Yet, we have just shown that MSE (the author's chosen δ) can only constrain the length of the distortion vector, not its type. 
Consequently, it is entirely possible for two systems using MSE for δ to have both reached a Nash equilibrium, have the same energy distribution, and yet have radically different internal representations of the learned images. The energy function is as important as the loss function for defining the data distribution.\n\nSection Title: A NEW ENERGY FUNCTION\n A NEW ENERGY FUNCTION Rather than assume that any one distance function would suffice to represent all of the various features of real images, we chose to use a multi-component approach for defining δ. In place of the luminance, contrast, and structural similarity of SSIM, we chose to evaluate the l 1 norm, the gradient magnitude similarity score (GMS), and a chrominance similarity score (Chrom). We outline the latter two in more detail below. The GMS score and chrom scores derive from an FR-IQA model called the color Quality Score (cQS; Gupta et al., 2017 ). The cQS uses GMS and chrom as its two components. First, it converts images to the YIQ color space model. In this model, the three channels correspond to the luminance information (Y) and the chrominance information (I and Q). Second, GMS is used to evaluate the local gradients across the reference and distorted images on the luminance dimension in order to compare their edges. This is performed by convolving a 3 × 3 Sobel filter in both the horizontal and vertical directions of each image to get the corresponding gradients. The horizontal and vertical gradients are then collapsed to the gradient magnitude of each image using the Euclidean distance. 4 The similarity between the gradient magnitudes of the reference and distorted image are then com- pared using Equation 7. Third, Equation 7 is used to directly compute the similarity between the I and Q color dimensions of each image. The mean is then taken of the GMS score (resulting in the GMSM score) and the combined I and Q scores (resulting in the Chrom score). 
In order to experimentally evaluate how each of the different components contribute to the underly- ing image representations, we defined the following, multi-component energy function: E D = δ∈D δ(D(x), x)β d δ∈D β d (8) where β d is the weight that determines the proportion of each δ to include for a given model, and D includes the l 1 norm, GMSM, and the chrominance part of cQS as individual δs. In what follows, we experimentally evaluate each of the energy function components(β) and some of their combinations.\n\nSection Title: EXPERIMENTS\n EXPERIMENTS\n\nSection Title: METHOD\n METHOD We conducted extensive quantitative and qualitative evaluation on the CelebA dataset of face images Liu et al. (2015) . This dataset has been used frequently in the past for evaluating GANs Radford et al. (2015) ; Zhao et al. (2016) ; Chen et al. (2016) ; Liu & Tuzel (2016) . We evaluated 12 different models in a number of combinations (see Table 1 ). They are as follows. Models 1, 7, and 11 are the original BEGAN model. Models 2 and 3 only use the GMSM and chrominance distance functions, respectively. Models 4 and 8 are the BEGAN model plus GMSM. Models 5 and 9 use all three Under review as a conference paper at ICLR 2018 distance functions (BEGAN+GMSM+Chrom). Models 6, 10, and 12 use a 'scaled' BEGAN model (β l1 = 2) with GMSM. All models with different model numbers but the same β d values differ in their γ values or the output image size.\n\nSection Title: SETUP\n SETUP All of the models we evaluate in this paper are based on the architecture of the BEGAN model Berthelot et al. (2017) . 5 We trained the models using Adam with a batch size of 16, β 1 of 0.9, β 2 of 0.999, and an initial learning rate of 0.00008, which decayed by a factor of 2 every 100,000 epochs. Parameters k t and k 0 were set at 0.001 and 0, respectively (see Equation 5). The γ parameter was set relative to the model (see Table 1 ). 
Most of our experiments were performed on 64 × 64 pixel images with a single set of tests run on 128 × 128 images. The number of convolution layers were 3 and 4, respectively, with a constant down-sampled size of 8 × 8. We found that the original size of 64 for the input vector (N z ) and hidden state (N h ) resulted in modal collapse for the models using GMSM. However, we found that this was fixed by increasing the input size to 128 and 256 for the 64 and 128 pixel images, respectively. We used N z = 128 for all models except 12 (scaled BEGAN+GMSM), which used 256. N z always equaled N h in all experiments. Models 2-3 were run for 18,000 epochs, 1 and 4-10 were run for 100,000 epochs, and 11-12 were run for 300,000 epochs. Models 2-4 suffered from modal collapse immediately and 5 (BE- GAN+GMSM+Chrom) collapsed around epoch 65,000 (see Appendix A Figure 4 rows 2-5).\n\nSection Title: EVALUATIONS\n EVALUATIONS We performed two evaluations. First, to evaluate whether and to what extent the models were able to capture the relevant properties of each associated distance function, we compared the mean and standard deviation of the error scores. We calculated them for each distance function over all epochs of all models. We chose to use the mean rather than the minimum score as we were interested in how each model performs as a whole, rather than at some specific epoch. All calculations use the distance, or one minus the corresponding similarity score, for both the gradient magnitude and chrominance values. Reduced pixelation is an artifact of the intensive scaling for image presentation (up to 4×). All images in the qualitative evaluations were upscaled from their original sizes using cubic image sampling so that they can be viewed at larger sizes. Consequently, the apparent smoothness of the scaled images is not a property of the model.\n\nSection Title: RESULTS\n RESULTS GANs are used to generate different types of images. 
Which image components are important depends on the domain of these images. Our results suggest that models used in any particular GAN application should be customized to emphasize the relevant components-there is not a one-size- fits-all component choice. We discuss the results of our four evaluations below.\n\nSection Title: MEANS AND STANDARD DEVIATIONS OF ERROR SCORES\n MEANS AND STANDARD DEVIATIONS OF ERROR SCORES Results were as expected: the three different distance functions captured different features of the underlying image representations. We compared all of the models in terms of their means and standard deviations of the error score of the associated distance functions (see Table 2 ). In particular, each of models 1-3 only used one of the distance functions and had the lowest error for the associated function (e.g., model 2 was trained with GMSM and has the lowest GMSM error score). Models 4-6 expanded on the first three models by examining the distance functions in different combinations. Model 5 (BEGAN+GMSM+Chrom) had the lowest chrominance error score and Model 6 (scaled BEGAN+GMSM) had the lowest scores for l 1 and GMSM of any model using a γ of 0.5. For the models with γ set at 0.7, models 7-9 showed similar results to the previous scores. Model 8 (BEGAN+GMSM) scored the lowest GMSM score overall and model 9 (BEGAN+GMSM+Chrom) scored the lowest chrominance score of the models that did not suffer from modal collapse. For the two models that were trained to generate 128 × 128 pixel images, model 12 (scaled BE- GAN+GMSM) had the lowest error scores for l 1 and GMSM, and model 11 (BEGAN) had the lowest score for chrominance. 
Model 12 had the lowest l 1 score, overall.\n\nSection Title: VISUAL COMPARISON OF SIMILARITY SCORES\n VISUAL COMPARISON OF SIMILARITY SCORES Subjective visual comparison of the gradient magnitudes in column S of Figure 2 shows there are more black pixels for model 11 (row 11D) when comparing real images before and after autoencod- ing. This indicates a lower similarity in the autoencoder. Model 12 (row 12D) has a higher similarity between the original and autoencoded real images as indicated by fewer black pixels. This pattern continues for the generator output (rows 11G and 12G), but with greater similarity between the gra- dients of the original and autoencoded images than the real images (i.e., fewer black pixels overall). The visual comparison of chrominance and related similarity score also weakly supported our hy- potheses (see Figure 3 ). All of the models show a strong ability to capture the I dimension (blue-red) of the YIQ color space, but only model 9 (BEGAN+GMSM+Chrom) is able to accurately capture the relevant information in the Q dimension (green-purple).\n\nSection Title: OUTLOOK\n OUTLOOK We bring an energy-based formulation to the BEGAN model and some of the problems of the en- ergy function originally proposed in Zhao et al. (2016) . We proposed a new, multi-component energy function on the basis of research from the Image Quality Assessment literature. The scaled BEGAN+GMSM model produces better image representations than its competitors in ways that can be measured using subjective evaluations of the associated features (e.g., luminance gradient simi- larity, chrominance similarity). For future work, we would like to extend this research to encompass other datasets and FR-IQA energy functions.\n\nSection Title: Annex Figures\n Annex Figures fig_3 Figure 4: Figure 4: Four outputs of each of the generators of all 12 models. The best images for each model were hand-picked. The first row is model 1, which corresponds with the original BEGAN model. 
Rows 2-12 represent our experiments. Each cell represents the output of a random sample. \n", "output": "[Significance and novelty]\n<Incremental nature of the paper> Reviewers highlighted that the paper's proposed approach was somewhat incremental, and its significance and novelty were questioned. The modifications and additions to the existing BEGAN framework were not perceived as significantly contributing to the field.\n<Limited novelty of energy function components> The paper's novelty was deemed to be restricted, particularly in the choice and justification of the energy function components. Reviewers pointed out that the selected components were borrowed from existing image quality assessment techniques, and their application was not seen as a significant contribution to GAN theories or algorithm designs.\n\n[Potential reasons for acceptance]\n<No related terms> -\n\n[Potential reasons for rejection]\n<Weak experiments and results analysis> Reviewers noted that the experimental section was weak, with reliance on visual evaluations and a limited range of datasets (primarily CelebA). This led to concerns about the thoroughness and robustness of the paper's findings.\n<Unclear significance and contribution> The paper was criticized for its lack of clarity in motivating its contributions and presenting results. Reviewers found it difficult to estimate the significance of the proposed model and understand how its results differed from baseline models.\n\n[Suggestions for improvement]\n<Broaden experimental testing> To strengthen the paper, reviewers suggested broadening the experimental testing to include different datasets involving natural images, beyond the single CelebA dataset. This would provide a more comprehensive evaluation of the proposed techniques.\n<Clarify and justify design choices> Improvements in the paper's clarity and justification were recommended, specifically in clarifying the design choices made for the energy function components. 
Providing clear justifications for the modifications and additions to the BEGAN framework would enhance the paper's credibility and significance.\n\n"}

- {"input": "[TITLE]\nOn Unifying Deep Generative Models\n\n[ABSTRACT]\nDeep generative models have achieved impressive success in recent years. Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), as powerful frameworks for deep generative model learning, have largely been considered as two distinct paradigms and received extensive independent studies respectively. This paper aims to establish formal connections between GANs and VAEs through a new formulation of them. We interpret sample generation in GANs as performing posterior inference, and show that GANs and VAEs involve minimizing KL divergences of respective posterior and inference distributions with opposite directions, extending the two learning phases of classic wake-sleep algorithm, respectively. The unified view provides a powerful tool to analyze a diverse set of existing model variants, and enables to transfer techniques across research lines in a principled way. For example, we apply the importance weighting method in VAE literatures for improved GAN learning, and enhance VAEs with an adversarial mechanism that leverages generated samples. Experiments show generality and effectiveness of the transfered techniques. \n\n[CAPTIONS]\nFigure 1: (a) Conventional view of ADA. To make direct correspondence to GANs, we use z to denote the data and x the feature. Subscripts src and tgt denote source and target domains, respectively. (b) Conventional view of GANs. (c) Schematic graphical model of both ADA and GANs (Eq.3). Arrows with solid lines denote generative process; arrows with dashed lines denote inference; hollow arrows denote deterministic transformation leading to implicit distributions; and blue arrows denote adversarial mechanism that involves respective conditional distribution q and its reverse q r , e.g., q(y|x) and q r (y|x) (denoted as q (r) (y|x) for short). Note that in GANs we have interpreted x as latent variable and (z, y) as visible. 
(d) InfoGAN (Eq.9), which, compared to GANs, adds conditional generation of code z with distribution qη(z|x, y). (e) VAEs (Eq.12), which is obtained by swapping the generation and inference processes of InfoGAN, i.e., in terms of the schematic graphical model, swapping solid-line arrows (generative process) and dashed-line arrows (inference) of (d).\nFigure 2: One optimization step of the parameter θ through Eq.(6) at point θ0. The posterior q r (x|y) is a mixture of p θ 0 (x|y = 0) (blue) and p θ 0 (x|y = 1) (red in the left panel) with the mixing weights induced from q r φ 0 (y|x). Minimizing the KLD drives p θ (x|y = 0) towards the respective mixture q r (x|y = 0) (green), resulting in a new state where p θ new (x|y = 0) = pg θ new (x) (red in the right panel) gets closer to p θ 0 (x|y = 1) = p data (x). Due to the asymmetry of KLD, pg θ new (x) missed the smaller mode of the mixture q r (x|y = 0) which is a mode of p data (x).\nFigure 3: Symmetric view of generation and inference. There is little difference of the two processes in terms of formulation: with implicit distribution modeling, both processes only need to perform simulation through black-box neural transformations between the latent and visible spaces.\nTable 1: Correspondence between different approaches in the proposed formulation. The label \"[G]\" in bold indicates the respective component is involved in the generative process within our interpretation, while \"[I]\" indicates inference process. This is also expressed in the schematic graphical models in Figure 1.\nTable 2: Left: Inception scores of GANs and the importance weighted extension. Middle: Classification accuracy of the generations by conditional GANs and the IW extension. 
Right: Classification accuracy of semi-supervised VAEs and the AA extension on MNIST test set, with 1% and 10% real labeled training data.\nTable 3: Variational lower bounds on MNIST test set, trained on 1%, 10%, and 100% training data, respectively.\n\n[CONTENT]\nSection Title: INTRODUCTION\n INTRODUCTION Deep generative models define distributions over a set of variables organized in multiple layers. Early forms of such models dated back to works on hierarchical Bayesian models (Neal, 1992) and neural network models such as Helmholtz machines (Dayan et al., 1995), originally studied in the context of unsupervised learning, latent space modeling, etc. Such models are usually trained via an EM style framework, using either a variational inference (Jordan et al., 1999) or a data augmentation (Tanner & Wong, 1987) algorithm. Of particular relevance to this paper is the classic wake-sleep algorithm dates by Hinton et al. (1995) for training Helmholtz machines, as it explored an idea of minimizing a pair of KL divergences in opposite directions of the posterior and its approximation. In recent years there has been a resurgence of interests in deep generative modeling. The emerging approaches, including Variational Autoencoders (VAEs) (Kingma & Welling, 2013), Generative Adversarial Networks (GANs) (Goodfellow et al., 2014), Generative Moment Matching Networks (GMMNs) (Li et al., 2015; Dziugaite et al., 2015), auto-regressive neural networks (Larochelle & Murray, 2011; Oord et al., 2016), and so forth, have led to impressive results in a myriad of applications, such as image and text generation (Radford et al., 2015; Hu et al., 2017; van den Oord et al., 2016), disentangled representation learning (Chen et al., 2016; Kulkarni et al., 2015), and semi-supervised learning (Salimans et al., 2016; Kingma et al., 2014). The deep generative model literature has largely viewed these approaches as distinct model training paradigms. 
For instance, GANs aim to achieve an equilibrium between a generator and a discrimi- nator; while VAEs are devoted to maximizing a variational lower bound of the data log-likelihood. A rich array of theoretical analyses and model extensions have been developed independently for GANs (Arjovsky & Bottou, 2017; Arora et al., 2017; Salimans et al., 2016; Nowozin et al., 2016) and VAEs (Burda et al., 2015; Chen et al., 2017; Hu et al., 2017), respectively. A few works attempt to combine the two objectives in a single model for improved inference and sample gener- ation (Mescheder et al., 2017; Larsen et al., 2015; Makhzani et al., 2015; Sønderby et al., 2017). Despite the significant progress specific to each method, it remains unclear how these apparently divergent approaches connect to each other in a principled way. In this paper, we present a new formulation of GANs and VAEs that connects them under a unified view, and links them back to the classic wake-sleep algorithm. We show that GANs and VAEs involve minimizing opposite KL divergences of respective posterior and inference distributions, and extending the sleep and wake phases, respectively, for generative model learning. More specifically, we develop a reformulation of GANs that interprets generation of samples as performing posterior inference, leading to an objective that resembles variational inference as in VAEs. As a counterpart, VAEs in our interpretation contain a degenerated adversarial mechanism that blocks out generated samples and only allows real examples for model training. The proposed interpretation provides a useful tool to analyze the broad class of recent GAN- and VAE- based algorithms, enabling perhaps a more principled and unified view of the landscape of generative modeling. 
For instance, one can easily extend our formulation to subsume InfoGAN (Chen et al., 2016) that additionally infers hidden representations of examples, VAE/GAN joint models (Larsen et al., 2015; Che et al., 2017a) that offer improved generation and reduced mode missing, and adver- sarial domain adaptation (ADA) (Ganin et al., 2016; Purushotham et al., 2017) that is traditionally framed in the discriminative setting. The close parallelisms between GANs and VAEs further ease transferring techniques that were originally developed for improving each individual class of models, to in turn benefit the other class. We provide two examples in such spirit: 1) Drawn inspiration from importance weighted VAE (IWAE) (Burda et al., 2015), we straightforwardly derive importance weighted GAN (IWGAN) that maximizes a tighter lower bound on the marginal likelihood compared to the vanilla GAN. 2) Motivated by the GAN adversarial game we activate the originally degenerated discriminator in VAEs, resulting in a full-fledged model that adaptively leverages both real and fake examples for learning. Empirical results show that the techniques imported from the other class are generally applicable to the base model and its variants, yielding consistently better performance.\n\nSection Title: RELATED WORK\n RELATED WORK There has been a surge of research interest in deep generative models in recent years, with remarkable progress made in understanding several class of algorithms. The wake-sleep algorithm (Hinton et al., 1995) is one of the earliest general approaches for learning deep generative models. The algorithm incorporates a separate inference model for posterior approximation, and aims at maximizing a variational lower bound of the data log-likelihood, or equivalently, minimizing the KL divergence of the approximate posterior and true posterior. 
However, besides the wake phase that minimizes the KL divergence w.r.t the generative model, the sleep phase is introduced for tractability that minimizes instead the reversed KL divergence w.r.t the inference model. Recent approaches such as NVIL (Mnih & Gregor, 2014) and VAEs (Kingma & Welling, 2013) are developed to maximize the variational lower bound w.r.t both the generative and inference models jointly. To reduce the variance of stochastic gradient estimates, VAEs leverage reparametrized gradients. Many works have been done along the line of improving VAEs. Burda et al. (2015) develop importance weighted VAEs to obtain a tighter lower bound. As VAEs do not involve a sleep phase-like procedure, the model cannot leverage samples from the generative model for model training. Hu et al. (2017) combine VAEs with an extended sleep procedure that exploits generated samples for learning. Another emerging family of deep generative models is the Generative Adversarial Networks (GANs) (Goodfellow et al., 2014), in which a discriminator is trained to distinguish between real and generated samples and the generator to confuse the discriminator. The adversarial approach can be alternatively motivated in the perspectives of approximate Bayesian computation (Gutmann et al., 2014) and density ratio estimation (Mohamed & Lakshminarayanan, 2016). The original objective of the generator is to minimize the log probability of the discriminator correctly recognizing a generated sample as fake. This is equivalent to minimizing a lower bound on the Jensen-Shannon divergence (JSD) of the generator and data distributions (Goodfellow et al., 2014; Nowozin et al., 2016; Huszar, 2016; Li, 2016). Besides, the objective suffers from vanishing gradient with strong discriminator. Thus in practice people have used another objective which maximizes the log probability of the discriminator recognizing a generated sample as real (Goodfellow et al., 2014; Arjovsky & Bottou, 2017). 
The second objective has the same optimal solution as with the original one. We base our analysis of GANs on the second objective as it is widely used in practice yet few theoretic analysis has been done on it. Numerous extensions of GANs have been developed, including combination with VAEs for improved generation (Larsen et al., 2015; Makhzani et al., 2015; Che et al., 2017a), and generalization of the objectives to minimize other f-divergence criteria beyond JSD (Nowozin et al., 2016; Sønderby et al., 2017). The adversarial principle has gone beyond the generation setting and been applied to other contexts such as domain adaptation (Ganin et al., 2016; Purushotham et al., 2017), and Bayesian inference (Mescheder et al., 2017; Tran et al., 2017; Huszár, 2017; Rosca et al., 2017) which uses implicit variational distributions in VAEs and leverage the adversarial approach for optimization. This paper starts from the basic models of GANs and VAEs, and develops a general formulation that reveals underlying connections of different classes of approaches including many of the above variants, yielding a unified view of the broad set of deep generative modeling.\n\nSection Title: BRIDGING THE GAP\n BRIDGING THE GAP The structures of GANs and VAEs are at the first glance quite different from each other. VAEs are based on the variational inference approach, and include an explicit inference model that reverses the generative process defined by the generative model. On the contrary, in traditional view GANs lack an inference model, but instead have a discriminator that judges generated samples. In this paper, a key idea to bridge the gap is to interpret the generation of samples in GANs as performing inference, and the discrimination as a generative process that produces real/fake labels. The resulting new formulation reveals the connections of GANs to traditional variational inference. 
The reversed generation-inference interpretations between GANs and VAEs also expose their correspondence to the two learning phases in the classic wake-sleep algorithm. For ease of presentation and to establish a systematic notation for the paper, we start with a new interpretation of Adversarial Domain Adaptation (ADA) (Ganin et al., 2016), the application of adversarial approach in the domain adaptation context. We then show GANs are a special case of ADA, followed with a series of analysis linking GANs, VAEs, and their variants in our formulation.\n\nSection Title: ADVERSARIAL DOMAIN ADAPTATION (ADA)\n ADVERSARIAL DOMAIN ADAPTATION (ADA) ADA aims to transfer prediction knowledge learned from a source domain to a target domain, by learning domain-invariant features (Ganin et al., 2016). That is, it learns a feature extractor whose output cannot be distinguished by a discriminator between the source and target domains. We first review the conventional formulation of ADA. Figure 1(a) illustrates the computation flow. Let z be a data example either in the source or target domain, and y ∈ {0, 1} the domain indicator with y = 0 indicating the target domain and y = 1 the source domain. The data distributions conditioning on the domain are then denoted as p(z|y). The feature extractor G θ parameterized with θ maps z to feature x = G θ (z). To enforce domain invariance of feature x, a discriminator D φ is learned. Specifically, D φ (x) outputs the probability that x comes from the source domain, and the discriminator is trained to maximize the binary classification accuracy of recognizing the domains: The feature extractor G θ is then trained to fool the discriminator: Please see the supplementary materials for more details of ADA. With the background of conventional formulation, we now frame our new interpretation of ADA. 
The data distribution p(z|y) and deterministic transformation G θ together form an implicit distribution over x, denoted as p θ (x|y), which is intractable to evaluate likelihood but easy to sample from. Let p(y) be the distribution of the domain indicator y, e.g., a uniform distribution as in Eqs.(1)-(2). The discriminator defines a conditional distribution q φ (y|x) = D φ (x). Let q r φ (y|x) = q φ (1 − y|x) be the reversed distribution over domains. The objectives of ADA are therefore rewritten as (omitting the constant scale factor 2): Note that z is encapsulated in the implicit distribution p θ (x|y). The only difference of the objectives of θ from φ is the replacement of q(y|x) with q r (y|x). This is where the adversarial mechanism comes about. We defer deeper interpretation of the new objectives in the next subsection.\n\nSection Title: GENERATIVE ADVERSARIAL NETWORKS (GANS)\n GENERATIVE ADVERSARIAL NETWORKS (GANS) GANs (Goodfellow et al., 2014) can be seen as a special case of ADA. Taking image generation for example, intuitively, we want to transfer the properties of real image (source domain) to generated image (target domain), making them indistinguishable to the discriminator. Figure 1(b) shows the conventional view of GANs. Formally, x now denotes a real example or a generated sample, z is the respective latent code. For the generated sample domain (y = 0), the implicit distribution p θ (x|y = 0) is defined by the prior of z and the generator G θ (z), which is also denoted as p g θ (x) in the literature. For the real example domain (y = 1), the code space and generator are degenerated, and we are directly presented with a fixed distribution p(x|y = 1), which is just the real data distribution p data (x). Note that p data (x) is also an implicit distribution and allows efficient empirical sampling. 
In summary, the conditional distribution over x is constructed as Here, free parameters θ are only associated with p g θ (x) of the generated sample domain, while p data (x) is constant. As in ADA, discriminator D φ is simultaneously trained to infer the probability that x comes from the real data domain. That is, q φ (y = 1|x) = D φ (x). With the established correspondence between GANs and ADA, we can see that the objectives of GANs are precisely expressed as Eq.(3). To make this clearer, we recover the classical form by unfolding over y and plugging in conventional notations. For instance, the objective of the generative parameters θ in Eq.(3) is translated into where p(y) is uniform and results in the constant scale factor 1/2. As noted in sec.2, we focus on the unsaturated objective for the generator (Goodfellow et al., 2014), as it is commonly used in practice yet still lacks systematic analysis.\n\nSection Title: New Interpretation\n New Interpretation Let us take a closer look into the form of Eq.(3). It closely resembles the data reconstruction term of a variational lower bound by treating y as visible variable while x as latent (as in ADA). That is, we are essentially reconstructing the real/fake indicator y (or its reverse 1 − y) with the \"generative distribution\" q φ (y|x) and conditioning on x from the \"inference distribution\" p θ (x|y). Figure 1(c) shows a schematic graphical model that illustrates such generative and inference processes. (Sec.D in the supplementary materials gives an example of translating a given schematic graphical model into mathematical formula.) We go a step further to reformulate the objectives and reveal more insights to the problem. In particular, for each optimization step of p θ (x|y) at point (θ 0 , φ 0 ) in the parameter space, we have: \" # = 1 = ()*) ( ) \" # = 0 = . / # ( ) 1 ( | = 0) \" 345 = 0 = . / 345 ( ) missed mode where KL(· ·) and JSD(· ·) are the KL and Jensen-Shannon Divergences, respectively. 
Proofs are in the supplements (sec.B). Eq.(6) offers several insights into the GAN generator learning: • Resemblance to variational inference. As above, we see x as latent and p θ (x|y) as the inference distribution. The p θ0 (x) is fixed to the starting state of the current update step, and can naturally be seen as the prior over x. By definition q r (x|y) that combines the prior p θ0 (x) and the generative distribution q r φ0 (y|x) thus serves as the posterior. Therefore, optimizing the generator G θ is equivalent to minimizing the KL divergence between the inference distribution and the posterior (a standard from of variational inference), minus a JSD between the distributions p g θ (x) and p data (x). The interpretation further reveals the connections to VAEs, as discussed later. • Training dynamics. By definition, p θ0 (x) = (p g θ 0 (x)+p data (x))/2 is a mixture of p g θ 0 (x) and p data (x) with uniform mixing weights, so the posterior q r (x|y) ∝ q r φ0 (y|x)p θ0 (x) is also a mix- ture of p g θ 0 (x) and p data (x) with mixing weights induced from the discriminator q r φ0 (y|x). For the KL divergence to minimize, the component with y = 1 is KL (p θ (x|y = 1) q r (x|y = 1)) = KL (p data (x) q r (x|y = 1)) which is a constant. The active component for optimization is with y = 0, i.e., KL (p θ (x|y = 0) q r (x|y = 0)) = KL (p g θ (x) q r (x|y = 0)). Thus, minimizing the KL divergence in effect drives p g θ (x) to a mixture of p g θ 0 (x) and p data (x). Since p data (x) is fixed, p g θ (x) gets closer to p data (x). Figure 2 illustrates the training dynamics schematically. • The JSD term. The negative JSD term is due to the introduction of the prior p θ0 (x). This term pushes p g θ (x) away from p data (x), which acts oppositely from the KLD term. However, we show that the JSD term is upper bounded by the KLD term (sec.C). Thus, if the KLD term is sufficiently minimized, the magnitude of the JSD also decreases. 
Note that we do not mean the JSD is insignificant or negligible. Instead conclusions drawn from Eq.(6) should take the JSD term into account. • Explanation of missing mode issue. JSD is a symmetric divergence measure while KLD is non-symmetric. The missing mode behavior widely observed in GANs (Metz et al., 2017; Che et al., 2017a) is thus explained by the asymmetry of the KLD which tends to concentrate p θ (x|y) to large modes of q r (x|y) and ignore smaller ones. See Figure 2 for the illustration. Concentration to few large modes also facilitates GANs to generate sharp and realistic samples. • Optimality assumption of the discriminator. Previous theoretical works have typically assumed (near) optimal discriminator (Goodfellow et al., 2014; Arjovsky & Bottou, 2017): q φ 0 (y|x) ≈ p θ 0 (x|y = 1) p θ 0 (x|y = 0) + p θ 0 (x|y = 1) = p data (x) pg θ 0 (x) + p data (x) , (7) which can be unwarranted in practice due to limited expressiveness of the discriminator (Arora et al., 2017). In contrast, our result does not rely on the optimality assumptions. Indeed, our result is a generalization of the previous theorem in (Arjovsky & Bottou, 2017), which is recovered by plugging Eq.(7) into Eq.(6): ∇ θ − E p θ (x|y)p(y) log q r φ 0 (y|x) θ=θ 0 = ∇ θ 1 2 KL (pg θ p data ) − JSD (pg θ p data ) θ=θ 0 , (8) which gives simplified explanations of the training dynamics and the missing mode issue only when the discriminator meets certain optimality criteria. Our generalized result enables understanding of broader situations. For instance, when the discriminator distribution q φ0 (y|x) gives uniform guesses, or when p g θ = p data that is indistinguishable by the discriminator, the gradients of the KL and JSD terms in Eq.(6) cancel out, which stops the generator learning. InfoGAN Chen et al. (2016) developed InfoGAN which additionally recovers (part of) the latent code z given sample x. 
This can straightforwardly be formulated in our framework by introducing an extra conditional q η (z|x, y) parameterized by η. As discussed above, GANs assume a degenerated code space for real examples, thus q η (z|x, y = 1) is fixed without free parameters to learn, and η is only associated to y = 0. The InfoGAN is then recovered by combining q η (z|x, y) with q φ (y|x) in Eq.(3) to perform full reconstruction of both z and y: Again, note that z is encapsulated in the implicit distribution p θ (x|y). The model is expressed as the schematic graphical model in Figure 1(d). Let q r (x|z, y) ∝ q η0 (z|x, y)q r φ0 (y|x)p θ0 (x) be the augmented \"posterior\", the result in the form of Lemma.1 still holds by adding z-related conditionals: The new formulation is also generally applicable to other GAN-related variants, such as Adversar- ial Autoencoder (Makhzani et al., 2015), Predictability Minimization (Schmidhuber, 1992), and cycleGAN (Zhu et al., 2017). In the supplements we provide interpretations of the above models.\n\nSection Title: VARIATIONAL AUTOENCODERS (VAES)\n VARIATIONAL AUTOENCODERS (VAES) We next explore the second family of deep generative modeling. The resemblance of GAN generator learning to variational inference (Lemma.1) suggests strong relations between VAEs (Kingma & Welling, 2013) and GANs. We build correspondence between them, and show that VAEs involve minimizing a KLD in an opposite direction, with a degenerated adversarial discriminator. The conventional definition of VAEs is written as: max θ,η L vae θ,η = E p data (x) Eq η (z|x) [logp θ (x|z)] − KL(qη(z|x) p(z)) , (11) wherep θ (x|z) is the generator,q η (z|x) the inference model, andp(z) the prior. The parameters to learn are intentionally denoted with the notations of corresponding modules in GANs. VAEs appear to differ from GANs greatly as they use only real examples and lack adversarial mechanism. 
To connect to GANs, we assume a perfect discriminator q * (y|x) which always predicts y = 1 with probability 1 given real examples, and y = 0 given generated samples. Again, for notational simplicity, let q r * (y|x) = q * (1 − y|x) be the reversed distribution. Lemma 2. Let p θ (z, y|x) ∝ p θ (x|z, y)p(z|y)p(y). The VAE objective L vae θ,η in Eq.(11) is equivalent to (omitting the constant scale factor 2): Here most of the components have exact correspondences (and the same definitions) in GANs and InfoGAN (see Table 1 ), except that the generation distribution p θ (x|z, y) differs slightly from its Components ADA GANs / InfoGAN VAEs x features data/generations data/generations y domain indicator real/fake indicator real/fake indicator (degenerated) z data examples code vector code vector p θ (x|y) feature distr. [I] generator, Eq.4 [G] p θ (x|z, y), generator, Eq.13 q φ (y|x) discriminator [G] discriminator [I] q*(y|x), discriminator (degenerated) qη(z|x, y) - [G] infer net (InfoGAN) [I] infer net KLD to min same as GANs counterpart p θ (x|y) in Eq.(4) to additionally account for the uncertainty of generating x given z: We provide the proof of Lemma 2 in the supplementary materials. Figure 1(e) shows the schematic graphical model of the new interpretation of VAEs, where the only difference from InfoGAN (Figure 1(d)) is swapping the solid-line arrows (generative process) and dashed-line arrows (inference). As in GANs and InfoGAN, for the real example domain with y = 1, both q η (z|x, y = 1) and p θ (x|z, y = 1) are constant distributions. Since given a fake sample x from p θ0 (x), the reversed perfect discriminator q r * (y|x) always predicts y = 1 with probability 1, the loss on fake samples is therefore degenerated to a constant, which blocks out fake samples from contributing to learning.\n\nSection Title: CONNECTING GANS AND VAES\n CONNECTING GANS AND VAES Table 1 summarizes the correspondence between the approaches. 
Lemma.1 and Lemma.2 have revealed that both GANs and VAEs involve minimizing a KLD of respective inference and posterior distributions. In particular, GANs involve minimizing the KL p θ (x|y) q r (x|y) while VAEs the KL q η (z|x, y)q r * (y|x) p θ (z, y|x) . This exposes several new connections between the two model classes, each of which in turn leads to a set of existing research, or can inspire new research directions: 1) As discussed in Lemma.1, GANs now also relate to the variational inference algorithm as with VAEs, revealing a unified statistical view of the two classes. Moreover, the new perspective naturally enables many of the extensions of VAEs and vanilla variational inference algorithm to be transferred to GANs. We show an example in the next section. 2) The generator parameters θ are placed in the opposite directions in the two KLDs. The asymmetry of KLD leads to distinct model behaviors. For instance, as discussed in Lemma.1, GANs are able to generate sharp images but tend to collapse to one or few modes of the data (i.e., mode missing). In contrast, the KLD of VAEs tends to drive generator to cover all modes of the data distribution but also small-density regions (i.e., mode covering), which usually results in blurred, implausible samples. This naturally inspires combination of the two KLD objectives to remedy the asymmetry. Previous works have explored such combinations, though motivated in different perspectives (Larsen et al., 2015; Che et al., 2017a; Pu et al., 2017). We discuss more details in the supplements. 3) VAEs within our formulation also include an adversarial mechanism as in GANs. The discriminator is perfect and degenerated, disabling generated samples to help with learning. This inspires activating the adversary to allow learning from samples. We present a simple possible way in the next section. 
4) GANs and VAEs have inverted latent-visible treatments of (z, y) and x, since we interpret sample generation in GANs as posterior inference. Such inverted treatments strongly relates to the symmetry of the sleep and wake phases in the wake-sleep algorithm, as presented shortly. In sec.6, we provide a more general discussion on a symmetric view of generation and inference.\n\nSection Title: CONNECTING TO WAKE SLEEP ALGORITHM (WS)\n CONNECTING TO WAKE SLEEP ALGORITHM (WS) Wake-sleep algorithm (Hinton et al., 1995) was proposed for learning deep generative models such as Helmholtz machines (Dayan et al., 1995). WS consists of wake phase and sleep phase, which optimize the generative model and inference model, respectively. We follow the above notations, and introduce new notations h to denote general latent variables and λ to denote general parameters. The wake sleep algorithm is thus written as: Briefly, the wake phase updates the generator parameters θ by fitting p θ (x|h) to the real data and hidden code inferred by the inference model q λ (h|x). On the other hand, the sleep phase updates the parameters λ based on the generated samples from the generator. The relations between WS and VAEs are clear in previous discussions (Bornschein & Bengio, 2014; Kingma & Welling, 2013). Indeed, WS was originally proposed to minimize the variational lower bound as in VAEs (Eq.11) with the sleep phase approximation (Hinton et al., 1995). Alternatively, VAEs can be seen as extending the wake phase. Specifically, if we let h be z and λ be η, the wake phase objective recovers VAEs (Eq.11) in terms of generator optimization (i.e., optimizing θ). Therefore, we can see VAEs as generalizing the wake phase by also optimizing the inference model q η , with additional prior regularization on code z. On the other hand, GANs closely resemble the sleep phase. To make this clearer, let h be y and λ be φ. 
This results in a sleep phase objective identical to that of optimizing the discriminator q φ in Eq.(3), which is to reconstruct y given sample x. We thus can view GANs as generalizing the sleep phase by also optimizing the generative model p θ to reconstruct reversed y. InfoGAN (Eq.9) further extends the correspondence to reconstruction of latents z.\n\nSection Title: TRANSFERRING TECHNIQUES\n TRANSFERRING TECHNIQUES The new interpretation not only reveals the connections underlying the broad set of existing ap- proaches, but also facilitates to exchange ideas and transfer techniques across the two classes of algorithms. For instance, existing enhancements on VAEs can straightforwardly be applied to improve GANs, and vice versa. This section gives two examples. Here we only outline the main intuitions and resulting models, while providing the details in the supplement materials. 4.1 IMPORTANCE WEIGHTED GANS (IWGAN) Burda et al. (2015) proposed importance weighted autoencoder (IWAE) that maximizes a tighter lower bound on the marginal likelihood. Within our framework it is straightforward to develop importance weighted GANs by copying the derivations of IWAE side by side, with little adaptations. Specifically, the variational inference interpretation in Lemma.1 suggests GANs can be viewed as maximizing a lower bound of the marginal likelihood on y (putting aside the negative JSD term): Following (Burda et al., 2015), we can derive a tighter lower bound through a k-sample importance weighting estimate of the marginal likelihood. With necessary approximations for tractability, optimizing the tighter lower bound results in the following update rule for the generator learning: As in GANs, only y = 0 (i.e., generated samples) is effective for learning parameters θ. Compared to the vanilla GAN update (Eq.(6)), the only difference here is the additional importance weight w i which is the normalization of w i = q r φ 0 (y|xi) q φ 0 (y|xi) over k samples. 
Intuitively, the algorithm assigns higher weights to samples that are more realistic and fool the discriminator better, which is consistent to IWAE that emphasizes more on code states providing better reconstructions. Hjelm et al. (2017); Che et al. (2017b) developed a similar sample weighting scheme for generator training, while their generator of discrete data depends on explicit conditional likelihood. In practice, the k samples correspond to sample minibatch in standard GAN update. Thus the only computational cost added by the importance weighting method is by evaluating the weight for each sample, and is negligible. The discriminator is trained in the same way as in standard GANs. In the semi-supervised VAE (SVAE) setting, remaining training data are used for unsupervised training.\n\nSection Title: ADVERSARY ACTIVATED VAES (AAVAE)\n ADVERSARY ACTIVATED VAES (AAVAE) By Lemma.2, VAEs include a degenerated discriminator which blocks out generated samples from contributing to model learning. We enable adaptive incorporation of fake samples by activating the adversarial mechanism. Specifically, we replace the perfect discriminator q * (y|x) in VAEs with a discriminator network q φ (y|x) parameterized with φ, resulting in an adapted objective of Eq.(12): As detailed in the supplementary material, the discriminator is trained in the same way as in GANs. The activated discriminator enables an effective data selection mechanism. First, AAVAE uses not only real examples, but also generated samples for training. Each sample is weighted by the inverted discriminator q r φ (y|x), so that only those samples that resemble real data and successfully fool the discriminator will be incorporated for training. This is consistent with the importance weighting strategy in IWGAN. Second, real examples are also weighted by q r φ (y|x). 
An example receiving large weight indicates it is easily recognized by the discriminator, which means the example is hard for the generator to simulate. That is, AAVAE emphasizes more on harder examples.\n\nSection Title: EXPERIMENTS\n EXPERIMENTS We conduct preliminary experiments to demonstrate the generality and effectiveness of the importance weighting (IW) and adversarial activating (AA) techniques. In this paper we do not aim at achieving state-of-the-art performance, but leave it for future work. In particular, we show the IW and AA extensions improve the standard GANs and VAEs, as well as several of their variants, respectively. We present the results here, and provide details of experimental setups in the supplements.\n\nSection Title: IMPORTANCE WEIGHTED GANS\n IMPORTANCE WEIGHTED GANS We extend both vanilla GANs and class-conditional GANs (CGAN) with the IW method. The base GAN model is implemented with the DCGAN architecture and hyperparameter setting (Radford et al., 2015). Hyperparameters are not tuned for the IW extensions. We use MNIST, SVHN, and CIFAR10 for evaluation. For vanilla GANs and their IW extension, we measure inception scores (Salimans et al., 2016) on the generated samples. For CGANs we evaluate the accuracy of conditional generation (Hu et al., 2017) with a pre-trained classifier. Please see the supplements for more details. Table 2, left panel, shows the inception scores of GANs and IW-GAN, and the middle panel gives the classification accuracy of CGAN and its IW extension. We report the averaged results ± one standard deviation over 5 runs. The IW strategy gives consistent improvements over the base models.\n\nSection Title: ADVERSARY ACTIVATED VAES\n ADVERSARY ACTIVATED VAES We apply the AA method on vanilla VAEs, class-conditional VAEs (CVAE), and semi-supervised VAEs (SVAE) (Kingma et al., 2014), respectively. We evaluate on the MNIST data. 
We measure the variational lower bound on the test set, with varying number of real training examples. For each batch of real examples, AA extended models generate an equal number of fake samples for training. Table 3 shows the results of activating the adversarial mechanism in VAEs. Generally, a larger improvement is obtained with a smaller set of real training data. Table 2, right panel, shows the improved accuracy of AA-SVAE over the base semi-supervised VAE.\n\nSection Title: DISCUSSIONS: SYMMETRIC VIEW OF GENERATION AND INFERENCE\n DISCUSSIONS: SYMMETRIC VIEW OF GENERATION AND INFERENCE Our new interpretations of GANs and VAEs have revealed strong connections between them, and linked the emerging new approaches to the classic wake-sleep algorithm. The generality of the proposed formulation offers a unified statistical insight of the broad landscape of deep generative modeling, and encourages mutual exchange of techniques across research lines. One of the key ideas in our formulation is to interpret sample generation in GANs as performing posterior inference. This section provides a more general discussion of this point. Traditional modeling approaches usually distinguish between latent and visible variables clearly and treat them in very different ways. One of the key thoughts in our formulation is that it is not necessary to make a clear boundary between the two types of variables (and between generation and inference), but instead, treating them as a symmetric pair helps with modeling and understanding. For instance, we treat the generation space x in GANs as latent, which immediately reveals the connection between GANs and adversarial domain adaptation, and provides a variational inference interpretation of the generation. A second example is the classic wake-sleep algorithm, where the wake phase reconstructs visibles conditioned on latents, while the sleep phase reconstructs latents conditioned on visibles (i.e., generated samples). 
Hence, visible and latent variables are treated in a completely symmetric manner. • Empirical data distributions are usually implicit, i.e., easy to sample from but intractable for evaluating likelihood. In contrast, priors are usually defined as explicit distributions, amenable to likelihood evaluation. • The complexity of the two distributions is different. Visible space is usually complex while latent space tends (or is designed) to be simpler. However, the adversarial approach in GANs and other techniques such as density ratio estimation (Mohamed & Lakshminarayanan, 2016) and approximate Bayesian computation (Beaumont et al., 2002) have provided useful tools to bridge the gap in the first point. For instance, implicit generative models such as GANs require only simulation of the generative process without explicit likelihood evaluation, hence the prior distributions over latent variables are used in the same way as the empirical data distributions, namely, generating samples from the distributions. For explicit likelihood-based models, adversarial autoencoder (AAE) leverages the adversarial approach to allow implicit prior distributions over latent space. Besides, a few recent works (Mescheder et al., 2017; Tran et al., 2017; Huszár, 2017; Rosca et al., 2017) extend VAEs by using implicit variational distributions as the inference model. Indeed, the reparameterization trick in VAEs already resembles construction of implicit variational distributions (as also seen in the derivations of IWGANs in Eq.37). In these algorithms, the adversarial approach is used to replace intractable minimization of the KL divergence between implicit variational distributions and priors. The second difference in terms of space complexity guides us to choose appropriate tools (e.g., adversarial approach vs. reconstruction optimization, etc) to minimize the distance between distributions to learn and their targets. 
However, the tools chosen do not affect the underlying modeling mechanism.\n", "output": "[Significance and novelty]\n<Unifying deep generative models> The paper presents a novel framework that interprets GAN algorithms as performing a form of variational inference on a generative model, providing a new perspective on the relationship between GANs and VAEs.\n<Connections to other variational methods> The paper establishes connections between GANs and other variational methods such as f-GAN, providing insights into the relationship between different generative models.\n\n[Potential reasons for acceptance]\n<Thought-provoking perspective on GANs and VAEs> The paper's framework places GANs and VAEs within the same graphical model perspective, offering valuable insights and potentially shaping how these models are understood and utilized in the future.\n<Correct theory and supported claims> The paper's theoretical foundations are sound, and the experiments conducted support the claims made in the paper, contributing to its potential acceptance.\n\n[Potential reasons for rejection]\n<Dense and hard to follow at times> Some reviewers found the paper dense and difficult to follow, suggesting potential issues with accessibility and clarity that may impact its acceptance.\n<Lack of connection to alternate perspectives> The paper may be perceived as isolating itself by not explicitly discussing connections to alternate perspectives or prior related work, potentially affecting its acceptance.\n\n[Suggestions for improvement]\n<Clarity and accessibility> Consider restructuring the paper to improve clarity and accessibility, potentially by moving some content to the appendix and concentrating more on key results and key experiments.\n<Further discussion of related work> Provide more detailed discussion and connections to related work, such as f-GAN, to contextualize the paper within existing research and address potential isolation from prior observations.\n<Exploration of 
alternate datasets> Expand the experiments to include harder datasets such as CelebA, LSUN, and ImageNet to further validate the proposed framework and its applications.\n\n"}
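For the format-conversion step, a record shaped like the sample above could be mapped to the chat-style JSONL (one JSON object with a `messages` list per line) that OpenAI's fine-tuning interface expects for gpt-3.5-turbo. The sketch below is only a minimal illustration, not the script actually used: the `input` key, the system prompt text, and the helper names `to_chat_example` and `to_jsonl` are assumptions made for this example (only the `output` key is visible in the sample).

```python
import json

# Hypothetical system prompt; the real prompt is not shown in the article.
SYSTEM_PROMPT = "You are a reviewer of academic papers."


def to_chat_example(record, system_prompt=SYSTEM_PROMPT):
    """Map one paper-review record to the chat fine-tuning layout.

    Assumes the paper text is stored under "input" (an assumption) and the
    review under "output" (as seen in the sample record).
    """
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": record["input"]},
            {"role": "assistant", "content": record["output"]},
        ]
    }


def to_jsonl(records):
    """Serialize records as JSONL: one chat example per line."""
    # json.dumps escapes newlines inside strings, so each example stays on one line.
    return "\n".join(
        json.dumps(to_chat_example(r), ensure_ascii=False) for r in records
    )


# Abbreviated stand-in for a real record.
sample = {
    "input": "Section Title: EXPERIMENTS\n We conduct preliminary experiments ...",
    "output": "[Significance and novelty]\n<Unifying deep generative models> ...",
}
line = to_jsonl([sample])
```

Writing `line` (or the joined lines for the full dataset) to a `.jsonl` file gives a training file in that layout. Keeping the four bracketed review sections verbatim in the assistant message means the fine-tuned model is trained to emit the same review structure.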