Image Sentiment Recognition with CNNs (Keras vs. PyTorch)

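Until now I had used Keras for all of my deep learning work, and for research purposes it largely met my needs. Keras's strength is that it lets you implement a relatively simple deep learning model very quickly, for example a deep neural network, an LSTM, or a bidirectional LSTM. Keras is also very friendly to newcomers: every stage, from building the model to compiling and training it, comes wrapped in a corresponding module. All you need is a picture of the network in your head. You build the model from that picture, shape the input into what the model accepts, set the relevant parameters, hit run, and you are done.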

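Anything pushed too far turns into its opposite, and the downside of Keras's heavy encapsulation is just as obvious: layers that did not need to be wrapped so tightly leave little room for customization. As my research went deeper, Keras started to feel limiting, so I went looking for a new framework that would suit both research and learning. The three frameworks in common use today are Keras, TensorFlow, and PyTorch. TensorFlow is Google's open-source deep learning framework and has now reached version 2.0, but judging from other groups' experience with it, its learning curve and the way version changes break existing code made me reluctant to adopt it. PyTorch is an open-source deep learning framework from Facebook; version 1.0 was released not long ago, and at the time of writing it is at 1.0.1. Its strengths are very good GPU support, a concise API, plenty of room for customization, and friendliness to beginners. It also has an excellent reputation among users. Given my needs, I picked PyTorch as the second deep learning framework to learn.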

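The first step with PyTorch is installing it. There are plenty of tutorials online, but very few of them actually solve the problem! I strongly recommend downloading the .whl file from the official site and installing from that. Running `pip install pytorch` on the command line fails with an error (I no longer remember the exact message; it is probably because the package on PyPI is named `torch`, not `pytorch`).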

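Back to the main topic. The task is to classify the sentiment of images. There are 2001 images in three sentiment classes, positive, negative, and neutral, with 712, 768, and 512 images respectively. I read the images with cv2, resize each one to (224, 224, 3), normalize it, and save the result to a pickle file. For the labels, I use 0 for positive, 1 for neutral, and 2 for negative, convert them to one-hot form (for example, [0, 1, 0] means neutral), and save them to a pickle file as well. That completes the preprocessing of images and labels. Below, I build a CNN model in each framework and compare the two.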
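The normalization and label encoding described above can be sketched as follows. This is only an illustration under stated assumptions: the post does not say how the images were normalized, so dividing by 255 is a guess, and a random array stands in for images that would really come from cv2 reading and resizing. The helper names `normalize_image` and `one_hot` are hypothetical.

```python
import io
import pickle

import numpy as np

# Label convention from the text: 0 = positive, 1 = neutral, 2 = negative.
NUM_CLASSES = 3


def normalize_image(img_uint8):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0] (assumed scheme)."""
    return img_uint8.astype(np.float32) / 255.0


def one_hot(class_ids, num_classes=NUM_CLASSES):
    """Turn integer class ids into one-hot rows, e.g. 1 -> [0, 1, 0]."""
    return np.eye(num_classes, dtype=np.int64)[np.asarray(class_ids)]


# Stand-in for two images already read and resized to 224 x 224 x 3.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(2, 224, 224, 3), dtype=np.uint8)

img_arr = normalize_image(fake_images)
labels = one_hot([1, 2])  # one neutral image, one negative image

# Round-trip through pickle in memory; the post writes to files instead.
buffer = io.BytesIO()
pickle.dump((img_arr, labels), buffer)
buffer.seek(0)
restored_imgs, restored_labels = pickle.load(buffer)

print(restored_imgs.shape)       # (2, 224, 224, 3)
print(restored_labels.tolist())  # [[0, 1, 0], [0, 0, 1]]
```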

1. Building the CNN models

```python
import torch
import torch.utils.data as U
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F


# Network definition.
class ConvNet(nn.Module):
    def __init__(self):
        super().__init__()
        # Parameters accepted by Conv2d:
        # nn.Conv2d(in_channels, out_channels, kernel_size,
        #           stride=1, padding=0, dilation=1, groups=1, bias=True)
        # Input: 3 x 224 x 224
        self.conv1 = nn.Conv2d(3, out_channels=10, kernel_size=5)
        # After conv1: 10 x 220 x 220; after 2x2 max pooling: 10 x 110 x 110
        self.conv2 = nn.Conv2d(10, 20, 5)
        # After conv2: 20 x 106 x 106
        self.fc1 = nn.Linear(106 * 106 * 20, 1024)
        self.fc2 = nn.Linear(1024, 3)

    def forward(self, x):
        in_size = x.size(0)            # batch size
        out = self.conv1(x)            # -> 10 x 220 x 220
        out = F.relu(out)
        out = F.max_pool2d(out, 2, 2)  # -> 10 x 110 x 110
        out = self.conv2(out)          # -> 20 x 106 x 106
        out = F.relu(out)
        out = out.view(in_size, -1)    # flatten to (batch, 106*106*20)
        out = self.fc1(out)
        out = F.relu(out)
        out = self.fc2(out)
        # Return raw logits: F.cross_entropy (used in the training code later)
        # applies log-softmax itself, so an extra F.softmax here would mean
        # softmax is applied twice.
        return out


model = ConvNet()
optimizer = optim.Adam(model.parameters())
print(model)
```

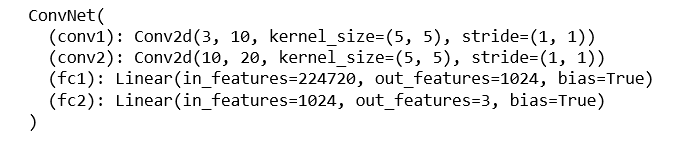

以上是笔者用Pytorch构建的一个CNNs模型,其中包含两层卷积神经网络、一个池化层和两层全连接层。模型的具体结构如下图。
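The layer sizes of the PyTorch model can be checked by hand with the standard output-size formula for convolution and pooling, `(size + 2*padding - kernel) // stride + 1`. The short sketch below (the helper name `conv_out` is my own) walks a 224 x 224 input through conv1, the max pooling step, and conv2, and shows where the `106*106*20` input size of the first fully connected layer comes from.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling window (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


side = 224                                        # input is 3 x 224 x 224
after_conv1 = conv_out(side, kernel=5)            # conv1: 5x5 kernel
after_pool = conv_out(after_conv1, kernel=2, stride=2)  # 2x2 max pooling
after_conv2 = conv_out(after_pool, kernel=5)      # conv2: 5x5 kernel

flat_features = after_conv2 * after_conv2 * 20    # conv2 has 20 output channels
fc1_weights = flat_features * 1024                # weight count of fc1 (no bias)

print(after_conv1, after_pool, after_conv2)  # 220 110 106
print(flat_features)                         # 224720
print(fc1_weights)                           # 230113280
```

The last number is worth noticing: with only one pooling layer, the first fully connected layer alone holds roughly 230 million weights, which dominates the size of the model.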

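Next, I build a CNN with nearly the same structure in Keras. Note that the Keras version below applies max pooling after the second convolution as well, so it has two pooling layers rather than one.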

```python
from keras.models import Model
from keras.layers import Dense, Conv2D, Flatten, Input, MaxPooling2D
from keras.callbacks import EarlyStopping
from sklearn.metrics import classification_report

# Functional API: the input shape is declared once, on the Input layer.
left_input = Input(shape=(224, 224, 3))
left_model = Conv2D(10, kernel_size=(5, 5), activation='relu')(left_input)
left_model = MaxPooling2D(pool_size=(2, 2))(left_model)

left_model = Conv2D(20, kernel_size=(5, 5), activation='relu')(left_model)
left_model = MaxPooling2D(pool_size=(2, 2))(left_model)
left_model = Flatten(name='flatten')(left_model)

left_model = Dense(1024, activation='relu')(left_model)
left_model = Dense(3, activation='softmax')(left_model)

# Stop training once val_loss has not improved for 20 consecutive epochs.
earlystopping = EarlyStopping(monitor='val_loss', patience=20)
model = Model(inputs=left_input, outputs=left_model)
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
```
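The `EarlyStopping(monitor='val_loss', patience=20)` callback is the one piece of training logic Keras hides here. The following is a simplified sketch of the idea in plain Python, not Keras's actual implementation: training stops once the monitored loss has failed to improve for `patience` epochs in a row. The function name and the loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience):
    """Return the 1-based epoch at which training would stop, or None.

    Training stops once `patience` consecutive epochs pass without the
    validation loss improving on the best value seen so far.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Hypothetical validation losses: the best value (0.8) is reached in epoch 2.
losses = [1.0, 0.8, 0.9, 0.85, 0.81]
stop_epoch = early_stopping_epoch(losses, patience=3)
print(stop_epoch)                                   # 5
print(early_stopping_epoch(losses, patience=20))    # None
```

With the patience of 20 used in the post, a run is only cut short after a long plateau, so short runs are unaffected.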

Printing the model structure gives the following.

2. Model inputs

PyTorch

```python
import pickle

import torch
import torch.utils.data as U
from sklearn.model_selection import train_test_split


def load_f(path):
    """Load a pickled object from disk."""
    with open(path, 'rb') as f:
        data = pickle.load(f)
    return data


# Prepare the inputs PyTorch expects.
img = load_f(r'G:/Multimodal/img_arr.pickle')
labels = load_f(r'G:/Multimodal/labels.pickle')
labels = labels.argmax(axis=1)  # one-hot -> class indices

train_X, test_X, train_Y, test_Y = train_test_split(img, labels, test_size=0.2, random_state=42)
# Conv2d weights are float32, so cast the images to float32 as well.
train_X = torch.from_numpy(train_X).float()
test_X = torch.from_numpy(test_X).float()
# F.cross_entropy expects class indices as int64 (long).
train_Y = torch.from_numpy(train_Y).long()
test_Y = torch.from_numpy(test_Y).long()

# Convert (N, 224, 224, 3) to the (N, 3, 224, 224) layout Conv2d expects.
# permute moves the channel axis; reshape would only reinterpret the memory
# and scramble the pixels.
train_X = train_X.permute(0, 3, 1, 2)
test_X = test_X.permute(0, 3, 1, 2)

train_data = U.TensorDataset(train_X, train_Y)
test_data = U.TensorDataset(test_X, test_Y)

# Build the data loaders.
train_loader = U.DataLoader(train_data, batch_size=1, shuffle=True)
test_loader = U.DataLoader(test_data, batch_size=1, shuffle=False)
```
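One detail in this step deserves care: the images are stored channels-last, as (N, 224, 224, 3), while PyTorch's `Conv2d` expects channels-first, (N, 3, 224, 224). A reshape produces the right shape but does not move the channel values, whereas a transpose (`permute` in PyTorch) does. The tiny NumPy sketch below, using a made-up 2 x 2 image, shows the difference.

```python
import numpy as np

# A tiny "image batch": 1 image, 2 x 2 pixels, 3 channels (channels-last).
# Every pixel is [R, G, B] = [1, 2, 3], so each channel is constant.
nhwc = np.tile(np.array([1, 2, 3]), (1, 2, 2, 1))

reshaped = nhwc.reshape(-1, 3, 2, 2)            # reinterprets memory only
transposed = np.transpose(nhwc, (0, 3, 1, 2))   # actually moves the channel axis

print(reshaped.shape == transposed.shape)  # True
print(transposed[0, 0].tolist())           # [[1, 1], [1, 1]]  (pure channel 0)
print(reshaped[0, 0].tolist())             # [[1, 2], [3, 1]]  (channels mixed)
```

Both results have the same shape, so the model runs without complaint either way; only the transposed version feeds it real images.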

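The data I saved to the pickle files are all NumPy arrays. PyTorch only accepts tensors, so the arrays have to be converted to tensors before training. The input Keras expects is shown below. One point deserves emphasis: the two frameworks expect labels in different shapes. For example, where Keras takes one-hot labels such as [[0, 1, 0], [1, 0, 0]], PyTorch takes the class indices [1, 0].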

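```python
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelBinarizer


def transformLabel(arr):
    """One-hot encode integer class labels, e.g. 1 -> [0, 1, 0]."""
    labels = LabelBinarizer().fit_transform(arr)
    return labels


# load_f is the pickle helper defined in the PyTorch input code.
img = load_f(r'G:/Multimodal/img_arr.pickle')
labels = load_f(r'G:/Multimodal/labels.pickle')
labels = labels.argmax(axis=1)
train_X, test_X, train_Y, test_Y = train_test_split(img, labels, test_size=0.2, random_state=42)

# Keras's categorical_crossentropy expects one-hot labels.
train_Y = transformLabel(train_Y)
test_Y = transformLabel(test_Y)
```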
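The two label formats carry the same information and convert into each other in one line each. A minimal NumPy sketch, using the example labels from the text:

```python
import numpy as np

# Keras-style one-hot labels: first sample is class 1, second is class 0.
one_hot = np.array([[0, 1, 0],
                    [1, 0, 0]])

# PyTorch-style class indices: position of the 1 in each row.
class_ids = one_hot.argmax(axis=1)

# And back again: pick rows of the 3 x 3 identity matrix.
back_to_one_hot = np.eye(3, dtype=int)[class_ids]

print(class_ids.tolist())        # [1, 0]
print(back_to_one_hot.tolist())  # [[0, 1, 0], [1, 0, 0]]
```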

3. Model training and testing

PyTorch

```python
import torch
import torch.nn.functional as F
import torch.optim as optim
import torch.utils.data as U
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import train_test_split
from tqdm import tqdm


def train(model, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        optimizer.zero_grad()
        output = model(data)
        labels = output.argmax(dim=1)
        acc = accuracy_score(target, labels)    # accuracy on this batch
        loss = F.cross_entropy(output, target)  # cross-entropy loss
        # Backpropagate.
        loss.backward()
        # Update the weights.
        optimizer.step()
        if (batch_idx + 1) % 30 == 0:
            seen = batch_idx * len(data)
            finish_rate = seen / len(train_loader.dataset) * 100
            print('Train Epoch: %s' % str(epoch),
                  '[%d/%d]--' % (seen, len(train_loader.dataset)),
                  '%.3f%%' % finish_rate,
                  '\t', 'acc: %.5f' % acc,
                  ' loss: %s' % loss.item())


def test(model, test_loader):
    model.eval()
    test_loss = 0
    y_true = []
    y_pred = []
    with torch.no_grad():
        for data, target in test_loader:
            output = model(data)
            # Sum the loss over the batch; it is averaged over the dataset below.
            test_loss += F.cross_entropy(output, target, reduction='sum').item()
            y_true.extend(target.tolist())
            y_pred.extend(output.argmax(dim=1).tolist())
    # Compute accuracy once over all predictions, so that a smaller final
    # batch does not skew the result.
    acc = accuracy_score(y_true, y_pred)
    print(classification_report(y_true, y_pred, digits=5))
    test_loss /= len(test_loader.dataset)
    print('Test set: Avg Loss:%s' % str(test_loss),
          '\t', 'Avg acc:%s' % str(acc))


if __name__ == '__main__':
    # ConvNet and load_f are defined in the earlier code.
    model = ConvNet()
    optimizer = optim.Adam(model.parameters())
    print('-----model compiled-----')

    EPOCHS = 1
    batch_size = 32

    print('-----loading data-----')
    img = load_f(r'G:/Multimodal/img_arr.pickle')
    labels = load_f(r'G:/Multimodal/labels.pickle')
    labels = labels.argmax(axis=1)
    train_X, test_X, train_Y, test_Y = train_test_split(img, labels, test_size=0.2, random_state=42)

    train_X = torch.from_numpy(train_X).float()
    test_X = torch.from_numpy(test_X).float()
    train_Y = torch.from_numpy(train_Y).long()
    test_Y = torch.from_numpy(test_Y).long()

    # (N, 224, 224, 3) -> (N, 3, 224, 224); permute, not reshape.
    train_X = train_X.permute(0, 3, 1, 2)
    test_X = test_X.permute(0, 3, 1, 2)

    train_data = U.TensorDataset(train_X, train_Y)
    test_data = U.TensorDataset(test_X, test_Y)

    train_loader = U.DataLoader(train_data, batch_size=batch_size, shuffle=True)
    test_loader = U.DataLoader(test_data, batch_size=batch_size, shuffle=False)
    print('-----data load completed-----')

    print('-----Training begins------')
    for epoch in tqdm(range(1, EPOCHS + 1)):
        train(model, train_loader, optimizer, epoch)
        test(model, test_loader)
        print('============================================================================')
```
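A note on the loss used in the PyTorch code: `F.cross_entropy` expects raw, unnormalized scores (logits) and applies log-softmax internally. If the network already ends in a softmax, the normalization is applied twice and the loss can no longer get close to zero. The plain-Python sketch below reimplements the two functions by hand for a single three-class sample (the logits are invented for illustration) to make the effect visible.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, target):
    """-log(softmax(logits)[target]): what a logits-based loss computes."""
    return -math.log(softmax(logits)[target])


logits = [5.0, 0.0, 0.0]  # a confident, correct prediction for class 0
target = 0

# Correct usage: pass the logits straight in.
loss_correct = cross_entropy_from_logits(logits, target)

# Double softmax: probabilities are passed in where logits are expected.
loss_double = cross_entropy_from_logits(softmax(logits), target)

# With three classes, the double-softmax loss can never fall below this
# value, reached when the probabilities are exactly [1, 0, 0].
floor = math.log(1 + 2 / math.e)

print(round(loss_correct, 4), round(loss_double, 4), round(floor, 4))
```

Predictions via `argmax` are the same in both cases, so accuracy is unaffected; what suffers is the gradient signal during training.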

Keras

```python
# Train with early stopping, using the test split as validation data.
model.fit(train_X, train_Y, batch_size=128, epochs=100,
          validation_data=(test_X, test_Y), verbose=1, callbacks=[earlystopping])

# predictions holds one row of class probabilities per sample.
predictions = model.predict(test_X, batch_size=32)
print(classification_report(test_Y.argmax(axis=1), predictions.argmax(axis=1),
                            target_names=['0', '1', '2'], digits=5))
```

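When it comes to training and testing, it is easy to see that PyTorch is far more involved while Keras is almost too simple. That really is the case: getting the PyTorch version working took me quite a while. Seen from the other side, though, it also shows that PyTorch leaves much more room to maneuver than Keras. In practice I also found that some of the metrics in sklearn work well together with PyTorch. The sklearn functions accept tensors as input (CPU tensors, as used here), which was a pleasant surprise.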
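One thing to watch when computing metrics batch by batch in a hand-written PyTorch loop: averaging per-batch accuracies is not the same as the accuracy over the whole test set whenever the last batch is smaller than the others. The sketch below uses made-up counts (70 samples, batch size 32) to show the gap; collecting all predictions first and calling the metric once avoids the problem.

```python
def batch_sizes(n_samples, batch_size):
    """Sizes of the batches a loader yields when it keeps the last partial batch."""
    full, rest = divmod(n_samples, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


# Hypothetical numbers: 70 test samples, batch size 32.
sizes = batch_sizes(70, 32)
correct = [24, 24, 6]  # correct predictions per batch (invented)

# Unweighted mean of per-batch accuracies: the 6-sample batch counts
# as much as each 32-sample batch.
mean_of_batch_acc = sum(c / s for c, s in zip(correct, sizes)) / len(sizes)

# Accuracy over all samples at once.
overall_acc = sum(correct) / sum(sizes)

print(sizes)                        # [32, 32, 6]
print(round(mean_of_batch_acc, 4))  # 0.8333
print(round(overall_acc, 4))        # 0.7714
```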

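Summary:

Stepping out of your comfort zone is always hard, but once you actually do it, everything suddenly opens up, and you realize how much there still is to learn.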