Kafka in Practice (5): Implementing the Kafka Streams API in Java

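Case 1: Streaming data from one topic to another

I. Kafka Java code

Create a Maven project and add the following dependencies (groupId : artifactId : version):

- `org.apache.kafka` : `kafka_2.11` : `2.0.0`

- `org.apache.kafka` : `kafka-streams` : `2.0.0`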

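Code:

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.streams.KafkaStreams;

import org.apache.kafka.streams.StreamsBuilder;

import org.apache.kafka.streams.StreamsConfig;

import org.apache.kafka.streams.Topology;

import java.util.Properties;

import java.util.concurrent.CountDownLatch;

public class MyStream {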

public static void main(String[] args) {

Properties prop = new Properties();

prop.put(StreamsConfig.APPLICATION_ID_CONFIG,"mystream");

prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.247.201:9092");

prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG,Serdes.String().getClass());

// Create the stream builder

StreamsBuilder builder = new StreamsBuilder();

// Copy every record from the mystreamin topic to the mystreamout topic

builder.stream("mystreamin").to("mystreamout");

final Topology topo = builder.build();

final KafkaStreams streams = new KafkaStreams(topo, prop);

final CountDownLatch latch = new CountDownLatch(1);

Runtime.getRuntime().addShutdownHook(new Thread("stream"){

@Override

public void run() {

streams.close();

latch.countDown();

}

});

try {

streams.start();

latch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

System.exit(0);

}

}

II. Kafka shell commands

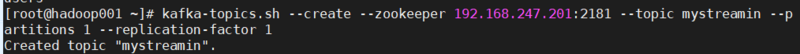

1. Create the topics:

`kafka-topics.sh --create --zookeeper 192.168.247.201:2181 --topic mystreamin --partitions 1 --replication-factor 1`

`kafka-topics.sh --create --zookeeper 192.168.247.201:2181 --topic mystreamout --partitions 1 --replication-factor 1`

List the topics to confirm they exist:

`kafka-topics.sh --zookeeper 192.168.247.201:2181 --list`

2. Run the Java code, then carry out the following steps:

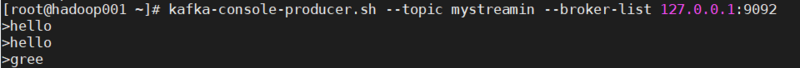

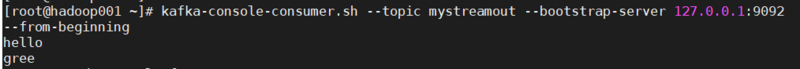

Produce messages: `kafka-console-producer.sh --topic mystreamin --broker-list 127.0.0.1:9092`

Consume messages: `kafka-console-consumer.sh --topic mystreamout --bootstrap-server 127.0.0.1:9092 --from-beginning`

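Case 2: WordCount with the Stream API

I. Kafka Java code

Code:

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.streams.KafkaStreams;

import org.apache.kafka.streams.KeyValue;

import org.apache.kafka.streams.StreamsBuilder;

import org.apache.kafka.streams.StreamsConfig;

import org.apache.kafka.streams.Topology;

import org.apache.kafka.streams.kstream.KTable;

import java.util.Arrays;

import java.util.List;

import java.util.Properties;

import java.util.concurrent.CountDownLatch;

public class WordCountStream {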

public static void main(String[] args) {

Properties prop = new Properties();

prop.put(StreamsConfig.APPLICATION_ID_CONFIG,"wordcount");

prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.247.201:9092");

prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG,3000);

prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest"); // "earliest" or "latest"

prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,"false"); // disable consumer auto-commit (Kafka Streams manages offset commits itself)

prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG,Serdes.String().getClass());

// Create the stream builder

// Sample input on the wordcount-input topic:

// hello world

// hello java

StreamsBuilder builder = new StreamsBuilder();

KTable<String, Long> count = builder.<String, String>stream("wordcount-input") // read records from Kafka one at a time

.flatMapValues(value -> Arrays.asList(value.split(" "))) // split each line on spaces and flatten the resulting list

// at this point: key:null value:hello, key:null value:world, key:null value:hello, key:null value:java

.map((k, v) -> new KeyValue<String, String>(v, "1")) // use the word itself as the key

.groupByKey().count(); // count the records per word

count.toStream().foreach((k,v) -> {

System.out.println("key:"+k+" value:"+v);

});

count.toStream().map((x,y) -> {

return new KeyValue(x,y.toString());

}).to("wordcount-out");

final Topology topo = builder.build();

final KafkaStreams streams = new KafkaStreams(topo, prop);

final CountDownLatch latch = new CountDownLatch(1);

Runtime.getRuntime().addShutdownHook(new Thread("stream"){

@Override

public void run() {

streams.close();

latch.countDown();

}

});

try {

streams.start();

latch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

System.exit(0);

}

}

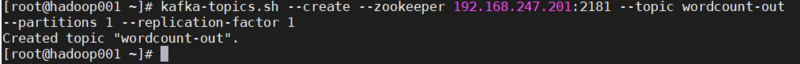
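To make the data flow of the WordCount topology easier to follow, here is a minimal plain-Java sketch of the same steps (split, re-key by word, count). It uses no Kafka classes at all; the class name `WordCountSketch` and the method `updates` are made up for illustration. It only simulates the logic on an in-memory list, under the assumption that every count update is emitted.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java simulation of the WordCount logic; hypothetical helper, not Kafka Streams API.
class WordCountSketch {

    // Returns every count update in the order it would be produced,
    // similar to the update stream of a count table.
    static List<String> updates(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {            // like flatMapValues: one record per word
                long c = counts.merge(word, 1L, Long::sum);   // like map + groupByKey + count
                emitted.add(word + "=" + c);
            }
        }
        return emitted;
    }

    public static void main(String[] args) {
        // Same sample input as in the comments of WordCountStream
        System.out.println(updates(Arrays.asList("hello world", "hello java")));
        // prints [hello=1, world=1, hello=2, java=1]
    }
}
```

In a real Kafka Streams run, record caching and the commit interval may collapse several updates for the same word into one, so the console can show fewer lines than this simulation.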

II. Kafka shell commands

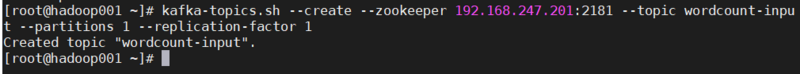

1. Create the topics:

`kafka-topics.sh --create --zookeeper 192.168.247.201:2181 --topic wordcount-input --partitions 1 --replication-factor 1`

`kafka-topics.sh --create --zookeeper 192.168.247.201:2181 --topic wordcount-out --partitions 1 --replication-factor 1`

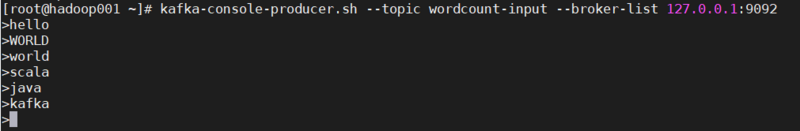

2. Run the Java code, then carry out the following steps:

Produce messages: `kafka-console-producer.sh --topic wordcount-input --broker-list 127.0.0.1:9092`

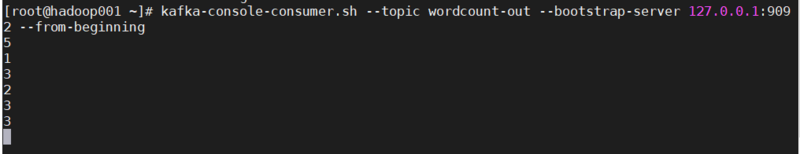

Consume messages: `kafka-console-consumer.sh --topic wordcount-out --bootstrap-server 127.0.0.1:9092 --from-beginning`

Consume messages and print the key as well: `kafka-console-consumer.sh --topic wordcount-out --bootstrap-server 127.0.0.1:9092 --property print.key=true --from-beginning`

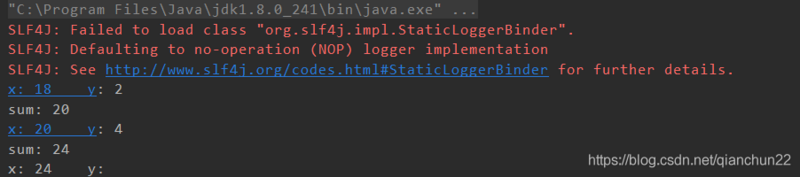

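Case 3: Summing the input numbers with Kafka Streams

I. Kafka Java code

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.streams.KafkaStreams;

import org.apache.kafka.streams.KeyValue;

import org.apache.kafka.streams.StreamsBuilder;

import org.apache.kafka.streams.StreamsConfig;

import org.apache.kafka.streams.Topology;

import org.apache.kafka.streams.kstream.KStream;

import java.util.Properties;

import java.util.concurrent.CountDownLatch;

public class SumStream {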

public static void main(String[] args) {

Properties prop = new Properties();

prop.put(StreamsConfig.APPLICATION_ID_CONFIG,"sumstream");

prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.247.201:9092");

prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG,3000);

prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest"); // "earliest" or "latest"

prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,"false"); // disable consumer auto-commit (Kafka Streams manages offset commits itself)

prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG,Serdes.String().getClass());

StreamsBuilder builder = new StreamsBuilder();

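KStream<String, String> source = builder.stream("suminput");

// Put every record under one constant key so that all values are reduced together

source.map((key, value) ->

new KeyValue<String, String>("sum: ", value.toString())

).groupByKey().reduce((x, y) -> {

// x is the running sum so far, y is the newly arrived value

System.out.println("x: " + x + " y: " + y);

Integer sum = Integer.valueOf(x) + Integer.valueOf(y);

System.out.println("sum: " + sum);

return sum.toString();

});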

final Topology topo = builder.build();

final KafkaStreams streams = new KafkaStreams(topo, prop);

final CountDownLatch latch = new CountDownLatch(1);

Runtime.getRuntime().addShutdownHook(new Thread("stream"){

@Override

public void run() {

streams.close();

latch.countDown();

}

});

try {

streams.start();

latch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

System.exit(0);

}

}
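The reducer in SumStream keeps both the running total and the incoming value as strings. The following plain-Java sketch mimics that behaviour on an in-memory list so the sequence of sums is easy to check by hand. `SumReduceSketch`, `add` and `runningSums` are hypothetical names, and no Kafka classes are involved. As with a Kafka Streams reduce, the first value for a key becomes the initial aggregate without the reducer being called.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java simulation of the string-based sum reducer; hypothetical helper, not Kafka Streams API.
class SumReduceSketch {

    // Same arithmetic as the lambda passed to reduce(): parse, add, convert back to String.
    static String add(String aggregate, String value) {
        return String.valueOf(Integer.parseInt(aggregate) + Integer.parseInt(value));
    }

    // Feeds the values one by one and records the aggregate after each step.
    static List<String> runningSums(List<String> inputs) {
        List<String> sums = new ArrayList<>();
        String aggregate = null;
        for (String value : inputs) {
            // The first value is taken as-is; later values go through the reducer.
            aggregate = (aggregate == null) ? value : add(aggregate, value);
            sums.add(aggregate);
        }
        return sums;
    }

    public static void main(String[] args) {
        System.out.println(runningSums(Arrays.asList("1", "2", "3")));
        // prints [1, 3, 6]
    }
}
```

Note that a non-numeric input makes `Integer.parseInt` throw a `NumberFormatException`, just as `Integer.valueOf` would inside the reducer, so when testing with the console producer it is safest to type plain integers only.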

II. Kafka shell commands

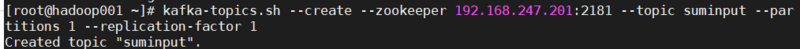

1. Create the topic: `kafka-topics.sh --create --zookeeper 192.168.247.201:2181 --topic suminput --partitions 1 --replication-factor 1`

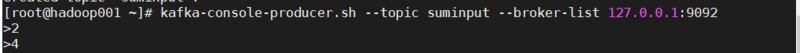

2. Run the Java code, then carry out the following steps:

Produce messages: `kafka-console-producer.sh --topic suminput --broker-list 127.0.0.1:9092`

Case 4: Stream processing with different window types in Kafka Streams

I. Kafka Java code

package cn.kgc.kb09;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.streams.*;

import org.apache.kafka.streams.kstream.KStream;

import org.apache.kafka.streams.kstream.SessionWindows;

import org.apache.kafka.streams.kstream.TimeWindows;

import java.time.Duration;

import java.util.Arrays;

import java.util.Properties;

import java.util.concurrent.CountDownLatch;

/**

* @Qianchun

* @Date 2020/12/16

* @Description

*/

public class WindowStream {

public static void main(String[] args) {

Properties prop = new Properties();

// Different window types must not share the same application ID

prop.put(StreamsConfig.APPLICATION_ID_CONFIG,"SessionWindow");

prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.247.201:9092");

prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG,3000);

prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest"); // "earliest" or "latest"

prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,"false"); // disable consumer auto-commit (Kafka Streams manages offset commits itself)

prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG,Serdes.String().getClass());

StreamsBuilder builder = new StreamsBuilder();

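KStream<String, String> source = builder.stream("windowdemo");

source.flatMapValues(value -> Arrays.asList(value.split("\\s+")))

.map((x, y) -> {

return new KeyValue<String, String>(y, "1");

}).groupByKey()

// Uncomment exactly one of the window definitions below; all durations can be adjusted through the arguments

// Tumbling time window (5-second, non-overlapping windows; each record is counted in exactly one window)

// .windowedBy(TimeWindows.of(Duration.ofSeconds(5).toMillis()))

// Hopping time window (5-second windows that advance every 2 seconds, so a single record can be counted in up to 5/2+1=3 overlapping windows)

// .windowedBy(TimeWindows.of(Duration.ofSeconds(5).toMillis())

// .advanceBy(Duration.ofSeconds(2).toMillis()))

// Session window (records stay in the same session as long as they arrive within 20 seconds of each other; after a gap of more than 20 seconds the session ends and counting starts again from 0)

// .windowedBy(SessionWindows.with(Duration.ofSeconds(20).toMillis()))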

.count().toStream().foreach((x,y) -> {

System.out.println("x: "+x+" y:"+y);

});

final Topology topo = builder.build();

final KafkaStreams streams = new KafkaStreams(topo, prop);

final CountDownLatch latch = new CountDownLatch(1);

Runtime.getRuntime().addShutdownHook(new Thread("stream"){

@Override

public void run() {

streams.close();

latch.countDown();

}

});

try {

streams.start();

latch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

System.exit(0);

}

}
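The "5/2+1=3" remark in the hopping window comment can be checked with a little arithmetic. The sketch below lists the start times of all windows that contain a given record timestamp, assuming that window starts are aligned to multiples of the advance interval counted from time 0. `HoppingWindowSketch` and `windowStarts` are hypothetical names and nothing here calls the Kafka Streams API; a tumbling window is simply the case where the advance equals the window size.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java arithmetic for tumbling/hopping windows; hypothetical helper, not Kafka Streams API.
class HoppingWindowSketch {

    // Start times (ms) of every window [start, start + sizeMs) that contains timestampMs.
    // Assumption: window starts are multiples of advanceMs, beginning at 0.
    static List<Long> windowStarts(long timestampMs, long sizeMs, long advanceMs) {
        List<Long> starts = new ArrayList<>();
        // Earliest aligned start whose window still covers the timestamp
        long first = Math.max(0, timestampMs - sizeMs + advanceMs) / advanceMs * advanceMs;
        for (long start = first; start <= timestampMs; start += advanceMs) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        // Tumbling window of 5 s: exactly one window per record
        System.out.println(windowStarts(10_000, 5_000, 5_000)); // prints [10000]
        // Hopping window of 5 s advancing by 2 s: three overlapping windows
        System.out.println(windowStarts(10_000, 5_000, 2_000)); // prints [6000, 8000, 10000]
        // The same window definition can also yield only two windows
        System.out.println(windowStarts(11_000, 5_000, 2_000)); // prints [8000, 10000]
    }
}
```

So with a 5-second size and a 2-second advance, a single input shows up in either two or three windows depending on where its timestamp falls, which is why three is best read as an upper bound.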

II. Kafka shell commands

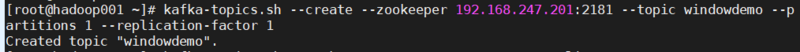

1. Create the topic: `kafka-topics.sh --create --zookeeper 192.168.247.201:2181 --topic windowdemo --partitions 1 --replication-factor 1`

2. Run the Java code, then carry out the following steps:

Produce messages: `kafka-console-producer.sh --topic windowdemo --broker-list 127.0.0.1:9092`

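Note: you may run into the following error: `Exception in thread "sum-a3bbe4d0-4cc9-4812-a7a0-e650a8a60c9f-StreamThread-1" java.lang.IllegalArgumentException: Window endMs time cannot be smaller than window startMs time.`

This is effectively an out-of-bounds error on the window boundaries.

Solution: most likely the application ID is the same as one already used by an earlier run with a different window type. Change the value passed in `prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "sessionwindow");` so that each window type gets its own ID. Kafka Streams uses the application ID to name its local state directory and internal topics, so reusing an ID can make the new topology read state that was written by the old one.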
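One way to avoid reusing an ID by accident is to derive the application ID from the window type being tested. The sketch below is only an illustration of that idea: the enum `WindowType`, the class `WindowAppIdSketch` and the `windowdemo-` prefix are all made up, and the configuration keys are written as plain strings (`application.id` and `bootstrap.servers` are the strings behind `StreamsConfig.APPLICATION_ID_CONFIG` and `StreamsConfig.BOOTSTRAP_SERVERS_CONFIG`) so that the example runs without the Kafka libraries.

```java
import java.util.Locale;
import java.util.Properties;

// Builds one configuration per window type; hypothetical helper, not part of Kafka Streams.
class WindowAppIdSketch {

    enum WindowType { TUMBLING, HOPPING, SESSION }

    static Properties configFor(WindowType type) {
        Properties prop = new Properties();
        // A distinct application ID per window type keeps their state separate.
        prop.put("application.id", "windowdemo-" + type.name().toLowerCase(Locale.ROOT));
        // Broker address taken from the examples in this post.
        prop.put("bootstrap.servers", "192.168.247.201:9092");
        return prop;
    }

    public static void main(String[] args) {
        for (WindowType type : WindowType.values()) {
            System.out.println(configFor(type).getProperty("application.id"));
        }
        // prints windowdemo-tumbling, windowdemo-hopping and windowdemo-session, one per line
    }
}
```

The returned `Properties` object could then be completed with the serde and commit settings from WindowStream before being handed to `KafkaStreams`.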