
PyTorch Basics: LeNet from Training to Testing

Author: 小蓝xlanll | 2024-02-17 22:25:08


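The previous post, "PyTorch Basics: Building a Basic CNN Structure", showed how to build a network model with PyTorch's `torch.nn` module. This post applies that to the MNIST dataset and walks through training and testing a LeNet-style network.

Implementation steps: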

- Import the required packages and set the hyperparameters:

      import torch
      import torchvision
      import torch.nn as nn
      from torch.autograd import Variable  # deprecated since PyTorch 0.4; returns a plain Tensor
      import torchvision.datasets as dsets
      import torchvision.transforms as transforms
      import matplotlib.pyplot as plt

      # define hyperparameters
      EPOCH = 1          # number of passes over the training set
      BATCH_SIZE = 64
      TIME_STEP = 28     # image height
      INPUT_SIZE = 28    # image width
      LR = 0.01          # learning rate
      DOWNLOAD = False

- Get and load the dataset: download MNIST into the current directory and convert the images to tensors, then wrap the training set in a `DataLoader` so that it is served in mini-batches.

      train_data = dsets.MNIST(root='./', train=True, transform=torchvision.transforms.ToTensor(), download=True)
      test_data = dsets.MNIST(root='./', train=False, transform=torchvision.transforms.ToTensor())
      # first 2000 test images: add a channel dimension, cast to float, scale to [0, 1]
      test_x = torch.unsqueeze(test_data.data, dim=1).type(torch.FloatTensor)[:2000] / 255
      test_y = test_data.targets[:2000]
      # use a DataLoader to batch the training set
      train_loader = torch.utils.data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
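To make the batching behaviour concrete, here is a minimal sketch of what a `DataLoader` yields on each iteration. It uses random tensors in place of MNIST so that it runs without a download; `fake_images`, `fake_labels`, and the size of 200 samples are assumptions made for illustration only.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in for MNIST (assumption): 200 random 1x28x28 "images" with labels 0-9.
fake_images = torch.rand(200, 1, 28, 28)
fake_labels = torch.randint(0, 10, (200,))
fake_dataset = TensorDataset(fake_images, fake_labels)

# Same arguments as the train_loader in this post.
loader = DataLoader(dataset=fake_dataset, batch_size=64, shuffle=True)

# Collect the shape of every (images, labels) batch the loader yields.
batch_shapes = [(tuple(x.shape), tuple(y.shape)) for x, y in loader]
for step, (x_shape, y_shape) in enumerate(batch_shapes):
    print(step, x_shape, y_shape)
```

With 200 samples and a batch size of 64, the loader yields three full batches and a final, smaller batch of 8. The same holds for the real training set whenever its size is not a multiple of `BATCH_SIZE`.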

- Define and instantiate the network model:

      # define the LeNet-style CNN class
      class LeNet(nn.Module):
          # override __init__()
          def __init__(self):
              super(LeNet, self).__init__()

              # 1x28x28 -> 25x26x26 -> 25x13x13
              self.layer1 = nn.Sequential(
                  nn.Conv2d(1, 25, kernel_size=3),
                  nn.BatchNorm2d(25),
                  nn.ReLU(True),
                  nn.MaxPool2d(kernel_size=2, stride=2),
              )

              # 25x13x13 -> 50x11x11 -> 50x5x5
              self.layer2 = nn.Sequential(
                  nn.Conv2d(25, 50, kernel_size=3),
                  nn.BatchNorm2d(50),
                  nn.ReLU(True),
                  nn.MaxPool2d(kernel_size=2, stride=2),
              )

              # flattened 50*5*5 features -> 10 class scores
              self.classifier = nn.Sequential(
                  nn.Linear(50*5*5, 1024),
                  nn.ReLU(True),
                  nn.Linear(1024, 128),
                  nn.ReLU(True),
                  nn.Linear(128, 10),
              )

          # override forward()
          def forward(self, x):
              out = self.layer1(x)
              out = self.layer2(out)
              out = out.view(out.size(0), -1)  # flatten to (batch, 50*5*5)
              out = self.classifier(out)
              return out

      cnn = LeNet()
      print(cnn)
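The `50*5*5` input size of the first linear layer follows from how each layer shrinks a 28x28 image. The sketch below traces those shapes with freshly created layers that use the same kernel and pooling settings as the model; `trace_shapes` is a hypothetical helper written for this illustration, and BatchNorm and ReLU are left out because they do not change the tensor shape.

```python
import torch
import torch.nn as nn

def trace_shapes():
    """Push a dummy 1x28x28 image through the conv/pool stages and record shapes."""
    x = torch.zeros(1, 1, 28, 28)
    stages = [
        ("conv 3x3, 1->25", nn.Conv2d(1, 25, kernel_size=3)),
        ("max pool 2x2", nn.MaxPool2d(kernel_size=2, stride=2)),
        ("conv 3x3, 25->50", nn.Conv2d(25, 50, kernel_size=3)),
        ("max pool 2x2", nn.MaxPool2d(kernel_size=2, stride=2)),
    ]
    shapes = []
    with torch.no_grad():
        for name, layer in stages:
            x = layer(x)
            shapes.append((name, tuple(x.shape)))
    return shapes

shapes = trace_shapes()
for name, shape in shapes:
    print(f"{name:18s} -> {shape}")
```

An unpadded 3x3 convolution removes one pixel on each side (28 to 26, then 13 to 11), and each 2x2 pooling halves the size, rounding down (26 to 13, then 11 to 5). The final feature map is therefore 50 channels of 5x5.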

- Define the optimizer and the loss function:

      # define the optimizer (Adam)
      optimizer = torch.optim.Adam(cnn.parameters(), lr=LR)
      # define the cross-entropy loss function
      loss_func = nn.CrossEntropyLoss()
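`nn.CrossEntropyLoss` expects raw, unnormalized scores (logits) and integer class labels, which is why the model's last layer has no softmax. As a small sketch of what the loss computes, the snippet below compares it with a manual log-softmax followed by the mean negative log-likelihood of the correct classes; the random `logits` and the `labels` values are made up for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)            # 4 samples, 10 class scores each
labels = torch.tensor([3, 0, 9, 1])    # integer class indices, not one-hot vectors

# Built-in loss, used the same way as loss_func(output, b_y).
loss_builtin = nn.CrossEntropyLoss()(logits, labels)

# Manual equivalent: log-softmax, then average -log p(correct class).
log_probs = F.log_softmax(logits, dim=1)
loss_manual = -log_probs[torch.arange(4), labels].mean()

print(loss_builtin.item(), loss_manual.item())
```

The two values agree up to floating-point error.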

- Train the model:

      # training, with a periodic accuracy check on the held-out test subset
      for epoch in range(EPOCH):
          for step, (b_x, b_y) in enumerate(train_loader):
              output = cnn(b_x)
              loss = loss_func(output, b_y)

              optimizer.zero_grad()   # clear gradients from the previous step
              loss.backward()         # backpropagate
              optimizer.step()        # update the weights

              if step % 50 == 0:
                  cnn.eval()          # use BatchNorm running statistics
                  with torch.no_grad():
                      test_output = cnn(test_x)
                  cnn.train()
                  pred_y = torch.max(test_output, 1)[1]
                  acc = float((pred_y == test_y).sum()) / float(test_y.size(0))
                  print('Epoch: ', epoch, '| train loss: %.3f' % loss.item(), '| test accuracy: %.3f' % acc)
      print('Training ending')
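Because `LeNet` contains `nn.BatchNorm2d` layers, it can matter whether the periodic test-accuracy check runs in training mode or in evaluation mode. The sketch below, on a standalone BatchNorm layer with made-up input, shows the difference: a forward pass in training mode updates the layer's running statistics, while a forward pass in evaluation mode leaves them untouched.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm2d(3)
x = torch.randn(8, 3, 5, 5) + 2.0      # made-up batch with mean near 2

before = bn.running_mean.clone()       # running mean starts at zero

bn.train()
bn(x)                                  # training mode: running stats are updated
after_train = bn.running_mean.clone()

bn.eval()
with torch.no_grad():                  # no gradient bookkeeping during evaluation
    bn(x)                              # eval mode: running stats are only read
after_eval = bn.running_mean.clone()

print(before, after_train, after_eval)
```

Calling `cnn.eval()` before scoring test data and `cnn.train()` afterwards keeps test batches from leaking into the running statistics.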

- Validate the model:

      # print 100 predictions from the test data
      numTest = 100
      cnn.eval()
      with torch.no_grad():
          test_output = cnn(test_x[:numTest])
      pred_y = torch.max(test_output, 1)[1].numpy().squeeze()
      print(pred_y, 'prediction number')
      print(test_y[:numTest], 'real number')
      ErrorCount = 0.0
      for i in range(numTest):   # iterate over positions, not over predicted digits
          if pred_y[i] != int(test_y[i]):
              ErrorCount += 1
      print('ErrorRate : %.3f' % (ErrorCount / numTest))
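The error rate can also be computed without an explicit Python loop by comparing predictions and labels element-wise. This is a minimal sketch; `error_rate` is a hypothetical helper and the five example digits are made up.

```python
import torch

def error_rate(predictions, targets):
    """Fraction of positions where the prediction differs from the label."""
    predictions = torch.as_tensor(predictions)
    targets = torch.as_tensor(targets)
    return (predictions != targets).float().mean().item()

pred = torch.tensor([7, 2, 1, 0, 4])
true = torch.tensor([7, 2, 1, 0, 9])
rate = error_rate(pred, true)   # one mismatch out of five
print('ErrorRate : %.3f' % rate)
```

Because the comparison is done per position, it cannot accidentally use a predicted digit as an index.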

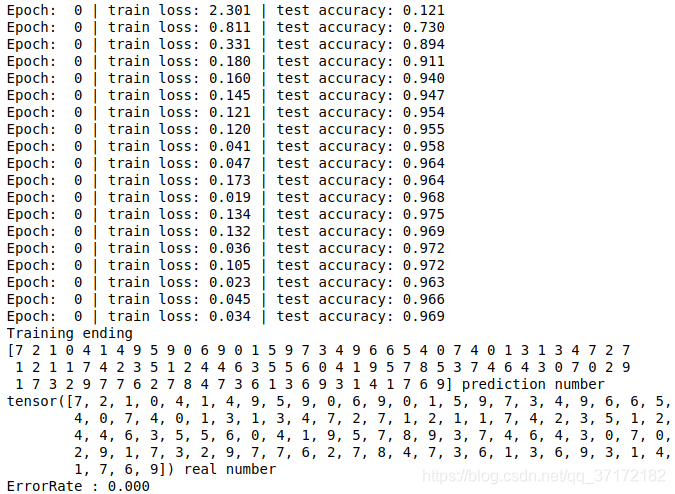

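Results:

As the output shows, on the simple MNIST handwritten-digit dataset this LeNet-style network recognizes digits accurately after only a short training run (a single epoch here). This illustrates how effective even a small convolutional network can be, and offers a reference point for classification on larger datasets.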
