
Compiling Spark 3.3.1 from source on a server to support CDH 6.3.2


1. Pay close attention to the build environment configuration

mvn:3.6.3

scala:2.12.17

JDK:1.8

spark:3.3.1

Important: the server needs at least 8 GB of memory.

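The memory requirement above can be checked before starting the build. A minimal sketch, assuming a Linux host that exposes /proc/meminfo; the helper names are hypothetical and not part of Spark's build:

```bash
# host_mem_kb -> prints MemTotal in kB as reported by /proc/meminfo (Linux only).
host_mem_kb() {
  awk '/^MemTotal:/ {print $2}' /proc/meminfo
}

# mem_ok TOTAL_KB MIN_GB -> prints "ok" if TOTAL_KB is at least MIN_GB GiB, else "too-small".
mem_ok() {
  local total_kb=$1 min_gb=$2
  if (( total_kb >= min_gb * 1024 * 1024 )); then
    echo ok
  else
    echo too-small
  fi
}

# Usage on the build server:
#   mem_ok "$(host_mem_kb)" 8
```

MemTotal excludes memory reserved by the kernel, so a machine with 8 GB installed may report slightly less than 8 GiB; treat a near miss with judgment.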

2. Download links

wget https://dlcdn.apache.org/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.zip

wget https://downloads.lightbend.com/scala/2.12.17/scala-2.12.17.tgz

wget https://dlcdn.apache.org/spark/spark-3.3.1/spark-3.3.1.tgz

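dlcdn.apache.org generally hosts only current releases; older versions such as Maven 3.6.3 and Spark 3.3.1 are moved to archive.apache.org/dist under the same relative path. A minimal sketch that tries the original link first and then the archive, assuming wget is installed (the helper names are hypothetical):

```bash
# apache_urls REL_PATH -> prints the dlcdn URL, then the archive.apache.org fallback.
apache_urls() {
  local rel=$1
  echo "https://dlcdn.apache.org/${rel}"
  echo "https://archive.apache.org/dist/${rel}"
}

# fetch_apache REL_PATH -> tries each URL in turn with wget; returns non-zero if all fail.
fetch_apache() {
  local url
  while read -r url; do
    wget -q "$url" && return 0
  done < <(apache_urls "$1")
  return 1
}

# Usage:
#   fetch_apache maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.zip
#   fetch_apache spark/spark-3.3.1/spark-3.3.1.tgz
```

The Scala archive comes from downloads.lightbend.com, not an Apache mirror, so it is fetched with the plain wget command above.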

3. Install by extracting each archive directly into the /opt/softwear/ directory.
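Step 3 amounts to one extraction per archive. A sketch that handles both the .zip (Maven) and .tgz (Scala, Spark) downloads; extract_to is a hypothetical helper and assumes unzip and tar are installed:

```bash
# extract_to ARCHIVE DEST -> unpacks a .zip, .tgz or .tar.gz archive into DEST.
extract_to() {
  local archive=$1 dest=$2
  mkdir -p "$dest"
  case "$archive" in
    *.zip)          unzip -q -o "$archive" -d "$dest" ;;
    *.tgz|*.tar.gz) tar -xzf "$archive" -C "$dest" ;;
    *) echo "unsupported archive: $archive" >&2; return 1 ;;
  esac
}

# Usage:
#   extract_to apache-maven-3.6.3-bin.zip /opt/softwear/
#   extract_to scala-2.12.17.tgz /opt/softwear/
#   extract_to spark-3.3.1.tgz /opt/softwear/
```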

4. Configure environment variables

vim /etc/profile

Add:

export JAVA_HOME=/usr/java/jdk1.8.0_191

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH

export JAVA_PATH=${JAVA_HOME}/bin:${JRE_HOME}/bin

export SCALA_HOME=/opt/softwear/scala-2.12.17

export MAVEN_HOME=/opt/softwear/apache-maven-3.6.3

export PATH=$PATH:${JAVA_PATH}:$SCALA_HOME/bin:$MAVEN_HOME/bin

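After editing /etc/profile, reload it (or log in again) and confirm that the tools resolve. A minimal sketch; missing_tools is a hypothetical helper:

```bash
# missing_tools NAME... -> prints every NAME that cannot be found on PATH, one per line.
missing_tools() {
  local t
  for t in "$@"; do
    command -v "$t" >/dev/null 2>&1 || echo "$t"
  done
}

# Usage after editing /etc/profile:
#   source /etc/profile
#   missing_tools java mvn scala            # no output means all three are on PATH
#   java -version; mvn -v; scala -version   # should report 1.8, 3.6.3 and 2.12.17
```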

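5. Modify the relevant configuration files

vim /opt/softwear/spark-3.3.1/pom.xml

Be sure to make the following changes: add the aliyun and cloudera repository entries to the repositories section of pom.xml (the GCS Maven Central mirror entry is already in Spark's pom), set hadoop.version and maven.version in the properties section (Spark 3.3.1 otherwise requires Maven 3.8.6, so lowering maven.version lets the build run with Maven 3.6.3), and point the silencer compiler plugin at Scala 2.12.17: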

<repository>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

<repository>

<id>gcs-maven-central-mirror</id>

<!--

Google Mirror of Maven Central, placed first so that it's used instead of flaky Maven Central.

See https://storage-download.googleapis.com/maven-central/index.html

-->

<name>GCS Maven Central mirror</name>

<url>https://maven-central.storage-download.googleapis.com/maven2/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<hadoop.version>3.0.0-cdh6.3.2</hadoop.version>

<maven.version>3.6.3</maven.version>

<compilerPlugins>

<compilerPlugin>

<groupId>com.github.ghik</groupId>

<artifactId>silencer-plugin_2.12.17</artifactId>

<version>1.7.12</version>

</compilerPlugin>

</compilerPlugins>

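To confirm the pom.xml edits took effect, the property values can be read back. A rough sketch using sed rather than a real XML parser; it assumes each element sits on a single line and that the first match is the top-level properties entry (pom_property is a hypothetical name):

```bash
# pom_property NAME POM -> prints the text of the first single-line <NAME>...</NAME> element in POM.
# This is a line-based sed match, not an XML parser.
pom_property() {
  sed -n "s:.*<$1>\(.*\)</$1>.*:\1:p" "$2" | head -n 1
}

# Usage:
#   pom_property hadoop.version /opt/softwear/spark-3.3.1/pom.xml   # expect 3.0.0-cdh6.3.2
#   pom_property maven.version  /opt/softwear/spark-3.3.1/pom.xml   # expect 3.6.3
```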

6. Point the build script at the local Maven installation

vi /opt/softwear/spark-3.3.1/dev/make-distribution.sh

export MAVEN_OPTS="${MAVEN_OPTS:--Xmx4g -XX:ReservedCodeCacheSize=2g -XX:MaxDirectMemorySize=256m}"

MVN="/opt/softwear/apache-maven-3.6.3/bin/mvn"

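The MVN line can also be changed non-interactively. A minimal sketch, assuming GNU sed (for -i) and that make-distribution.sh contains a line starting with MVN= (by default it points at the bundled build/mvn); set_mvn_path is a hypothetical helper:

```bash
# set_mvn_path SCRIPT MVN_BIN -> rewrites the MVN= assignment in SCRIPT to point at MVN_BIN.
# MVN_BIN must not contain '|' or '&', which are special in this sed expression.
set_mvn_path() {
  local script=$1 mvn_bin=$2
  sed -i "s|^MVN=.*|MVN=\"${mvn_bin}\"|" "$script"
}

# Usage:
#   set_mvn_path /opt/softwear/spark-3.3.1/dev/make-distribution.sh /opt/softwear/apache-maven-3.6.3/bin/mvn
```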

6.1 If the build fails with a stack overflow error, edit mvn as follows

vi /opt/softwear/apache-maven-3.6.3/bin/mvn

exec "$JAVACMD" -XX:MaxDirectMemorySize=128m \

$MAVEN_OPTS \

$MAVEN_DEBUG_OPTS \

-classpath "${CLASSWORLDS_JAR}" \

"-Dclassworlds.conf=${MAVEN_HOME}/bin/m2.conf" \

"-Dmaven.home=${MAVEN_HOME}" \

"-Dlibrary.jansi.path=${MAVEN_HOME}/lib/jansi-native" \

"-Dmaven.multiModuleProjectDirectory=${MAVEN_PROJECTBASEDIR}" \

${CLASSWORLDS_LAUNCHER} "$@"

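The same edit to mvn can be scripted. A minimal sketch, assuming GNU sed and that Maven 3.6.3's bin/mvn contains the line exec "$JAVACMD" \ on its own, as shown above; add_mvn_jvm_flag is a hypothetical helper:

```bash
# add_mvn_jvm_flag MVN_SCRIPT FLAG -> appends FLAG to the line 'exec "$JAVACMD" \' in MVN_SCRIPT.
# Does nothing if FLAG already appears anywhere in the script, so it is safe to rerun.
add_mvn_jvm_flag() {
  local script=$1 flag=$2
  if grep -qF -- "$flag" "$script"; then
    return 0
  fi
  # Single quotes keep "$JAVACMD" literal; \\ in the sed expression stands for one backslash.
  sed -i 's|^exec "$JAVACMD" \\$|exec "$JAVACMD" '"$flag"' \\|' "$script"
}

# Usage:
#   add_mvn_jvm_flag /opt/softwear/apache-maven-3.6.3/bin/mvn -XX:MaxDirectMemorySize=128m
```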

7. Change the Scala version

/opt/softwear/spark-3.3.1/dev/change-scala-version.sh 2.12

8. Run the build script

/opt/softwear/spark-3.3.1/dev/make-distribution.sh --name 3.0.0-cdh6.3.2 --tgz -Pyarn -Phadoop-3.0 -Phive -Phive-thriftserver -Dhadoop.version=3.0.0-cdh6.3.2 -Dscala.version=2.12.17

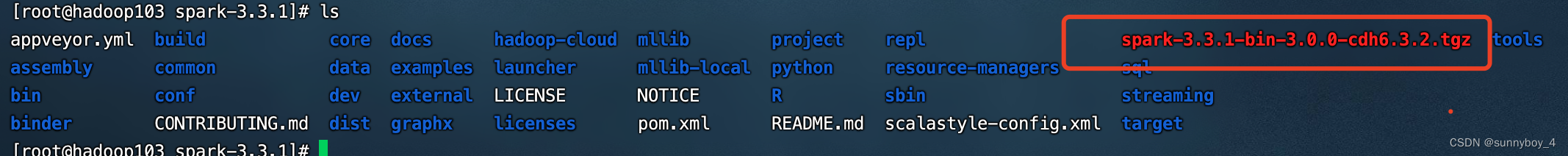
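With --name and --tgz, make-distribution.sh writes the archive to the source root as spark-VERSION-bin-NAME.tgz, which is where the file name used in the deployment step comes from. A small sketch that computes that name and checks that the file exists after the build; SPARK_SRC and the function names are assumptions for illustration:

```bash
# Assumed location of the Spark source tree from step 3.
SPARK_SRC=/opt/softwear/spark-3.3.1

# dist_tarball VERSION NAME -> archive name produced by make-distribution.sh --name NAME --tgz
dist_tarball() {
  echo "spark-$1-bin-$2.tgz"
}

# dist_ready VERSION NAME -> succeeds if that archive exists in the source root.
dist_ready() {
  [ -f "$SPARK_SRC/$(dist_tarball "$1" "$2")" ]
}

# Usage after the build:
#   dist_ready 3.3.1 3.0.0-cdh6.3.2 && echo "package built"
```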

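9. When packaging finishes, the complete distribution package spark-3.3.1-bin-3.0.0-cdh6.3.2.tgz is in /opt/softwear/spark-3.3.1

10. Deploy the Spark 3 client

tar -zxvf /opt/softwear/spark-3.3.1/spark-3.3.1-bin-3.0.0-cdh6.3.2.tgz -C /opt/cloudera/parcels/CDH/lib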

cd /opt/cloudera/parcels/CDH/lib

mv spark-3.3.1-bin-3.0.0-cdh6.3.2/ spark3

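The extract-and-rename above can also be done in one step with tar's --strip-components option. A sketch equivalent to those three commands, assuming the tarball has a single top-level directory (as make-distribution.sh produces); deploy_spark3 is a hypothetical helper:

```bash
# deploy_spark3 TARBALL LIB_DIR -> unpacks TARBALL into LIB_DIR/spark3, dropping the
# top-level spark-<version>-bin-<name>/ directory so no separate mv is needed.
deploy_spark3() {
  local tarball=$1 lib_dir=$2
  mkdir -p "$lib_dir/spark3"
  tar -xzf "$tarball" -C "$lib_dir/spark3" --strip-components=1
}

# Usage:
#   deploy_spark3 /opt/softwear/spark-3.3.1/spark-3.3.1-bin-3.0.0-cdh6.3.2.tgz /opt/cloudera/parcels/CDH/lib
```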

11. Copy the CDH cluster's spark-env.sh into /opt/cloudera/parcels/CDH/lib/spark3/conf

cp /etc/spark/conf/spark-env.sh /opt/cloudera/parcels/CDH/lib/spark3/conf

chmod +x /opt/cloudera/parcels/CDH/lib/spark3/conf/spark-env.sh

# Modify spark-env.sh

vim /opt/cloudera/parcels/CDH/lib/spark3/conf/spark-env.sh

export SPARK_HOME=/opt/cloudera/parcels/CDH/lib/spark3

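Depending on the CDH setup, the copied spark-env.sh may already export SPARK_HOME pointing at the Spark 2 directory, so the line should be replaced rather than duplicated. A minimal sketch, assuming GNU sed; set_env_export is a hypothetical helper:

```bash
# set_env_export FILE VAR VALUE -> replaces an existing "export VAR=..." line in FILE,
# or appends one if none exists. VALUE must not contain '|' or '&'.
set_env_export() {
  local file=$1 var=$2 value=$3
  if grep -q "^export ${var}=" "$file"; then
    sed -i "s|^export ${var}=.*|export ${var}=${value}|" "$file"
  else
    echo "export ${var}=${value}" >> "$file"
  fi
}

# Usage:
#   set_env_export /opt/cloudera/parcels/CDH/lib/spark3/conf/spark-env.sh SPARK_HOME /opt/cloudera/parcels/CDH/lib/spark3
```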

12. Copy hive-site.xml from a gateway node into the spark3/conf directory; no changes are needed:

cp /etc/hive/conf/hive-site.xml /opt/cloudera/parcels/CDH/lib/spark3/conf/

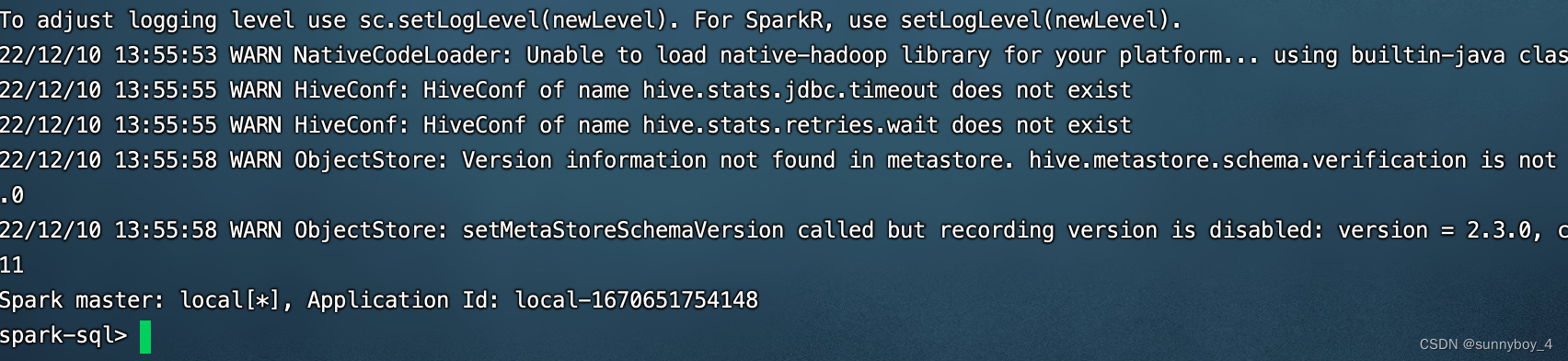

13. Create the spark-sql launcher script

vim /opt/cloudera/parcels/CDH/bin/spark-sql

#!/bin/bash

export HADOOP_CONF_DIR=/etc/hadoop/conf

export YARN_CONF_DIR=/etc/hadoop/conf

SOURCE="${BASH_SOURCE[0]}"

BIN_DIR="$( dirname "$SOURCE" )"

while [ -h "$SOURCE" ]

do

SOURCE="$(readlink "$SOURCE")"

[[ $SOURCE != /* ]] && SOURCE="$BIN_DIR/$SOURCE"

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

done

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

LIB_DIR=$BIN_DIR/../lib

export HADOOP_HOME=$LIB_DIR/hadoop

# Autodetect JAVA_HOME if not defined

. $LIB_DIR/bigtop-utils/bigtop-detect-javahome

exec $LIB_DIR/spark3/bin/spark-submit --class org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver "$@"


14. Register spark-sql as a command

chmod +x /opt/cloudera/parcels/CDH/bin/spark-sql

alternatives --install /usr/bin/spark-sql spark-sql /opt/cloudera/parcels/CDH/bin/spark-sql 1
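The launcher scripts loop over readlink because alternatives installs /usr/bin/spark-sql as a symlink to /etc/alternatives/spark-sql, which in turn links to the real script; LIB_DIR is then computed relative to the real location. The loop below is the same logic pulled into a hypothetical function so it can be tried in isolation:

```bash
# resolve_bin_dir PATH -> follows PATH through any chain of symlinks (the same loop the
# spark-sql wrapper uses) and prints the physical directory of the final target.
resolve_bin_dir() {
  local source=$1 bin_dir
  bin_dir="$(dirname "$source")"
  while [ -h "$source" ]; do
    source="$(readlink "$source")"
    # A relative link target is relative to the directory containing the link.
    [[ $source != /* ]] && source="$bin_dir/$source"
    bin_dir="$(cd -P "$(dirname "$source")" && pwd)"
  done
  # Subshell so the caller's working directory is not changed.
  (cd -P "$(dirname "$source")" && pwd)
}

# Usage:
#   resolve_bin_dir /usr/bin/spark-sql   # expect /opt/cloudera/parcels/CDH/bin (physical path)
```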

15. Configure conf

cd /opt/cloudera/parcels/CDH/lib/spark3/conf

## Enable logging

mv log4j2.properties.template log4j2.properties

## Configure spark-defaults.conf

cp /opt/cloudera/parcels/CDH/lib/spark/conf/spark-defaults.conf ./

# Modify spark-defaults.conf

vim /opt/cloudera/parcels/CDH/lib/spark3/conf/spark-defaults.conf

Delete spark.extraListeners, spark.sql.queryExecutionListeners and spark.yarn.jars.

Add spark.yarn.jars=hdfs:///spark/3versionJars/*

The Spark jars only need to be uploaded to HDFS from one server:

hadoop fs -mkdir -p /spark/3versionJars

cd /opt/cloudera/parcels/CDH/lib/spark3/jars

hadoop fs -put *.jar /spark/3versionJars


The htrace-core4-4.1.0-incubating.jar package may be missing; if so, download it and put it in /opt/cloudera/parcels/CDH/lib/spark3/jars.
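The spark-defaults.conf edits in this step can also be applied with sed. A minimal sketch, assuming GNU sed and entries written as key=value or key value; rewrite_spark_defaults is a hypothetical helper:

```bash
# rewrite_spark_defaults FILE JARS_URI -> deletes the spark.extraListeners,
# spark.sql.queryExecutionListeners and spark.yarn.jars entries copied from the CDH config,
# then appends spark.yarn.jars=JARS_URI.
rewrite_spark_defaults() {
  local file=$1 jars_uri=$2
  sed -i -E '/^(spark\.extraListeners|spark\.sql\.queryExecutionListeners|spark\.yarn\.jars)[[:space:]]*[=[:space:]]/d' "$file"
  echo "spark.yarn.jars=${jars_uri}" >> "$file"
}

# Usage:
#   rewrite_spark_defaults /opt/cloudera/parcels/CDH/lib/spark3/conf/spark-defaults.conf 'hdfs:///spark/3versionJars/*'
```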

16. Create the spark3-submit launcher script

vim /opt/cloudera/parcels/CDH/bin/spark3-submit

#!/usr/bin/env bash

export HADOOP_CONF_DIR=/etc/hadoop/conf

export YARN_CONF_DIR=/etc/hadoop/conf

SOURCE="${BASH_SOURCE[0]}"

BIN_DIR="$( dirname "$SOURCE" )"

while [ -h "$SOURCE" ]

do

SOURCE="$(readlink "$SOURCE")"

[[ $SOURCE != /* ]] && SOURCE="$BIN_DIR/$SOURCE"

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

done

BIN_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"

LIB_DIR=/opt/cloudera/parcels/CDH/lib

export HADOOP_HOME=$LIB_DIR/hadoop

# Autodetect JAVA_HOME if not defined

. $LIB_DIR/bigtop-utils/bigtop-detect-javahome

# disable randomized hash for string in Python 3.3+

export PYTHONHASHSEED=0

exec $LIB_DIR/spark3/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"


17. Register spark3-submit as a command

chmod +x /opt/cloudera/parcels/CDH/bin/spark3-submit

alternatives --install /usr/bin/spark3-submit spark3-submit /opt/cloudera/parcels/CDH/bin/spark3-submit 1

18. If you need a history server and want YARN to link through to the Spark UI, configure the following

Modify spark-env.sh, adjusting the values to your own setup:

export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18088 -Dspark.history.fs.logDirectory=hdfs://nameservice1/user/spark/applicationHistory -Dspark.history.retainedApplications=30"

Modify spark-defaults.conf:

spark.eventLog.dir=hdfs://nameservice1/user/spark/applicationHistory

spark.yarn.historyServer.address=http://hadoop102:18088

spark.eventLog.enabled=true

spark.history.ui.port=18088

Use the values in CDH's bundled configuration files as a reference for all of the settings above.
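The history server only lists applications whose event logs are written to the directory it reads, so spark.eventLog.dir in spark-defaults.conf and spark.history.fs.logDirectory in SPARK_HISTORY_OPTS should match. A rough consistency check, assuming GNU sed with -E; the helper names are hypothetical:

```bash
# conf_value FILE KEY -> prints the value of KEY from a spark-defaults.conf-style file
# (key=value or key value); if KEY appears more than once, the last entry wins.
conf_value() {
  sed -n -E "s/^$2[[:space:]]*[=[:space:]][[:space:]]*//p" "$1" | tail -n 1
}

# history_log_dir OPTS -> extracts the value of -Dspark.history.fs.logDirectory=... from OPTS.
history_log_dir() {
  local -a opts
  local opt
  read -ra opts <<< "$1"
  for opt in "${opts[@]}"; do
    case "$opt" in
      -Dspark.history.fs.logDirectory=*) echo "${opt#*=}" ;;
    esac
  done
}

# Usage, after sourcing spark-env.sh in the spark3/conf directory:
#   [ "$(history_log_dir "$SPARK_HISTORY_OPTS")" = "$(conf_value spark-defaults.conf spark.eventLog.dir)" ] && echo consistent
```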

Reference:

https://juejin.cn/post/7140053569431928845