PyTorch notes 04: The ResNet network and solving the input image size problem


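torchvision ships ready-made implementations of resnet18 through resnet152, so I wanted to trace through the source code.

Start with the core ResNet architecture table (Figure 1), which shows how resnet18 through resnet152 are assembled. resnet18 and resnet34 share one structure, and resnet50 through resnet152 share another. We look at the resnet18 source first.

Figure 1

resnet18

First comes the call to the models.resnet18 function: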

    def resnet18(pretrained=False, **kwargs):
        """Constructs a ResNet-18 model.

        Args:
            pretrained (bool): If True, returns a model pre-trained on ImageNet
        """
        # [2, 2, 2, 2] matches the "x2" block counts in the architecture table
        model = ResNet(BasicBlock, [2, 2, 2, 2], **kwargs)
        if pretrained:  # load the pretrained weights
            model.load_state_dict(model_zoo.load_url(model_urls['resnet18']))
        return model
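The link between the block list and the model name can be checked with a little arithmetic. The sketch below is a hypothetical helper, not part of torchvision. It assumes the usual naming convention: count the stem convolution, every convolution inside the residual blocks (2 per BasicBlock, 3 per Bottleneck), and the final fully connected layer, while ignoring the 1x1 convolutions on the shortcuts. The list [3, 8, 36, 3] is the one used by resnet152 later in this article.

```python
# Hypothetical helper (not a torchvision function): number of weighted layers
# implied by a block type and the per-stage block counts.
CONVS_PER_BLOCK = {"BasicBlock": 2, "Bottleneck": 3}


def resnet_depth(block_name, layers):
    # 1 stem conv + all convs inside the residual blocks + 1 fc layer
    return 1 + CONVS_PER_BLOCK[block_name] * sum(layers) + 1


print(resnet_depth("BasicBlock", [2, 2, 2, 2]))   # 18
print(resnet_depth("Bottleneck", [3, 8, 36, 3]))  # 152
```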

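This brings in the BasicBlock class (used by resnet18 and resnet34); we call a structure like this a block. Every convolution inside the block uses padding, so in_img_size and out_img_size are both 56x56. The shortcut on the right of Figure 2 therefore only has to change the number of channels of the input, after which the block's input tensor and output tensor can be added together.

Figure 2

Figure 2 actually shows the Bottleneck class (used by resnet50 through resnet152, analyzed later), which is much like BasicBlock. Figure 3 is a condensed version of Figure 2 (PS: you can treat the figure below as one box_block, i.e. several blocks stacked together; x3 means three copies of the structure above are chained in sequence):

Figure 3

The BasicBlock class can be compared against resnet18 and resnet34 in the architecture table. The class sets expansion = 1, which is the ratio of the output channels of the last convolution in the block to the output channels of the first convolution, i.e.: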

$$expansion = last\_block\_channel / first\_block\_channel$$

    import torch.nn as nn


    def conv3x3(in_planes, out_planes, stride=1):
        "3x3 convolution with padding"
        return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                         padding=1, bias=False)


    class BasicBlock(nn.Module):
        expansion = 1

        # inplanes is simply the input channel count under a different name
        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(BasicBlock, self).__init__()
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = nn.BatchNorm2d(planes)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = nn.BatchNorm2d(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            residual = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # bring the shortcut to the same channel count (and size) as out
            if self.downsample is not None:
                residual = self.downsample(x)

            out += residual
            out = self.relu(out)

            return out

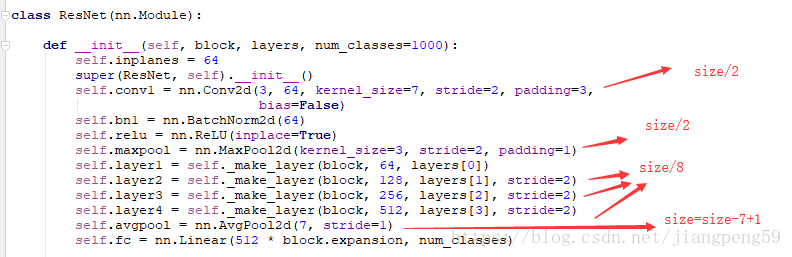

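Next is the ResNet class. Like most models we define, it is an __init__() plus a forward(). The code is fairly long, so we go through it step by step:

- As the architecture table shows, the first conv layer is identical for every ResNet, with 64 output channels.

- Next comes self.layer1 = self._make_layer(block, 64, layers[0]), where layers[0]=2. Inside _make_layer, stride=1 and the current input channel count equals the output channel count of the previous stage, so no downsample is needed and the tensors can be added directly.

- self.layer2 = self._make_layer(block, 128, layers[1], stride=2): here planes=128 while self.inplanes=64 (the output channels of the previous box_block). The channel counts differ, so the x carried by the shortcut must be expanded to more channels before it can be added, which is exactly what downsample does (PS: only the first block in a box_block needs downsample. Why? See Figure 4).

- self.layer3 = self._make_layer(block, 256, layers[2], stride=2): here planes=256 while self.inplanes=128, so the channels must again be expanded before the addition. layer4 works the same way.

Figure 4

The subscripts 2, 3, 4 in Figure 4 correspond to the steps above, and the numbers beside the arrows give the input or output channel count of each block.

Take Figure 4-2 specifically: the last block of the previous box_block outputs 64 channels (which is also the input channel count of the next box_block), while the first block of the current box_block outputs 128, so the shortcut must be expanded before the addition. The second block of the current box_block has matching input and output channel counts, so no expansion is needed (PS: the shortcut needs downsample whenever the channel count or the feature_size differs; only the channel aspect is discussed here).

In other words, within a box_block only the first block needs channel expansion, because from the first block's output onward every block in that box_block has the same channel count.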
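The rule described above can be traced without building any layers. The following is a minimal pure-Python sketch, assuming the stage settings from the ResNet source (planes of 64, 128, 256, 512 and strides of 1, 2, 2, 2). The function name trace_make_layer is hypothetical. It applies the same test that _make_layer uses and reports, for each block, whether its shortcut needs downsample.

```python
def trace_make_layer(expansion, layers):
    """Apply the downsample test from _make_layer to every block.

    Returns (stage, block_index, in_channels, out_channels, needs_downsample).
    """
    inplanes = 64  # channel count after the stem conv and maxpool
    records = []
    stage_cfg = zip((64, 128, 256, 512), layers, (1, 2, 2, 2))
    for stage, (planes, blocks, stride) in enumerate(stage_cfg, start=1):
        for i in range(blocks):
            # only the first block of a stage receives the stage stride
            block_stride = stride if i == 0 else 1
            out_channels = planes * expansion
            needs_downsample = block_stride != 1 or inplanes != out_channels
            records.append((stage, i, inplanes, out_channels, needs_downsample))
            inplanes = out_channels
    return records


# resnet18: BasicBlock (expansion = 1) with [2, 2, 2, 2]
for rec in trace_make_layer(expansion=1, layers=[2, 2, 2, 2]):
    print("layer%d block%d: in=%d out=%d downsample=%s" % rec)
```

With expansion=1, only the first block of layer2, layer3 and layer4 is flagged. With expansion=4 (Bottleneck), the first block of layer1 is flagged as well, because 64 input channels do not match 256 output channels.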

    import math

    import torch.nn as nn


    class ResNet(nn.Module):

        def __init__(self, block, layers, num_classes=1000):
            self.inplanes = 64
            super(ResNet, self).__init__()
            self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,
                                   bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            self.layer1 = self._make_layer(block, 64, layers[0])
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
            self.avgpool = nn.AvgPool2d(7, stride=1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                    m.weight.data.normal_(0, math.sqrt(2. / n))
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()

        def _make_layer(self, block, planes, blocks, stride=1):
            # downsample handles the mismatch between F(x) and x
            # in H(x) = F(x) + x
            downsample = None
            # self.inplanes is the output channel count of the previous
            # box_block; planes is the base channel count of the current one
            # (its output channel count is planes * block.expansion).
            # The shortcut needs downsample whenever the channel count or
            # the feature map size differs.
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    nn.Conv2d(self.inplanes, planes * block.expansion,
                              kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(planes * block.expansion),
                )

            layers = []
            # stride is passed only here, so the feature map size is halved
            # only once per box_block, in its first block
            layers.append(block(self.inplanes, planes, stride, downsample))
            self.inplanes = planes * block.expansion
            for i in range(1, blocks):
                layers.append(block(self.inplanes, planes))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            x = self.avgpool(x)
            x = x.view(x.size(0), -1)
            x = self.fc(x)

            return x

resnet152

resnet152 is much like resnet18: the Bottleneck class replaces BasicBlock, and [3, 8, 36, 3] again matches the architecture table above.

    def resnet152(pretrained=False, **kwargs):
        """Constructs a ResNet-152 model.

        Args:
            pretrained (bool): If True, returns a model pre-trained on ImageNet
        """
        model = ResNet(Bottleneck, [3, 8, 36, 3], **kwargs)
        if pretrained:
            model.load_state_dict(model_zoo.load_url(model_urls['resnet152']))
        return model

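In the Bottleneck class, note that expansion = 4: the first two convolutions keep the channel count at planes, and the last one outputs 4 times that many channels. See Figure 2 of this article for details.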

    class Bottleneck(nn.Module):
        expansion = 4

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(Bottleneck, self).__init__()
            self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
            self.bn1 = nn.BatchNorm2d(planes)
            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                                   padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(planes)
            self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
            self.bn3 = nn.BatchNorm2d(planes * 4)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            residual = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                residual = self.downsample(x)

            out += residual
            out = self.relu(out)

            return out
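To make the effect of expansion concrete, here is a small sketch that lists the channel counts of each convolution in one block, read directly off the two class definitions in this article. The helper block_conv_channels is hypothetical and only does bookkeeping; it creates no layers.

```python
def block_conv_channels(block_name, inplanes, planes):
    """Return (kernel, in_channels, out_channels) for each conv in one block."""
    if block_name == "BasicBlock":   # expansion = 1
        return [("3x3", inplanes, planes),
                ("3x3", planes, planes)]
    if block_name == "Bottleneck":   # expansion = 4
        return [("1x1", inplanes, planes),
                ("3x3", planes, planes),
                ("1x1", planes, planes * 4)]
    raise ValueError("unknown block: " + block_name)


# A non-first Bottleneck in layer1: 256 channels in, planes = 64
for kernel, c_in, c_out in block_conv_channels("Bottleneck", 256, 64):
    print(kernel, c_in, "->", c_out)
```

The last entry shows the final 1x1 convolution restoring 256 channels, which is why the input and output of such a block can be added without downsample.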

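The input image size problem:

In older versions of torchvision, the pretrained models assume a default image size of 224x224. If the feature map left after the image has passed through the network does not match the final fully connected layer, an exception is raised, for example with a 256x256 input:

RuntimeError: size mismatch, m1: [1 x 8192], m2: [2048 x 1000] at c:\miniconda2\conda-bld\pytorch-cpu_1519449358620\work\torch\lib\th\generic/THTensorMath.c:1434

Newer versions accept other input sizes; they do so by using AdaptiveAvgPool2d.

First, let us see where ResNet changes the size of the feature map.

The output size of every conv and pool layer can be computed with the formulas below: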

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times padding[0] - kernel\_size[0]}{stride[0]} \right\rfloor + 1$$

$$W_{out} = \left\lfloor \frac{W_{in} + 2 \times padding[1] - kernel\_size[1]}{stride[1]} \right\rfloor + 1$$
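As a quick check of the formula, the sketch below applies it to the first two size-changing layers of the ResNet class shown earlier: conv1 (kernel 7, stride 2, padding 3) and maxpool (kernel 3, stride 2, padding 1). The function name conv_out_size is hypothetical, and the sketch assumes a dilation of 1, as the formula above does.

```python
def conv_out_size(size_in, kernel_size, stride=1, padding=0):
    # Integer floor division implements the floor() in the formula
    return (size_in + 2 * padding - kernel_size) // stride + 1


after_conv1 = conv_out_size(224, kernel_size=7, stride=2, padding=3)
after_maxpool = conv_out_size(after_conv1, kernel_size=3, stride=2, padding=1)
print(after_conv1, after_maxpool)  # 112 56
```

The value 56 agrees with the 56x56 block input size mentioned in the BasicBlock discussion.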

Method 1:

Using the input image size and the formulas above, first compute the number of inputs to the last layer, calc_num, then replace the fc layer, for example:

    model = torchvision.models.resnet152()
    model.fc = nn.Linear(calc_num, nb_classes)
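One way to obtain calc_num is to push the image size through every size-changing layer of the ResNet class listed earlier. The sketch below does this for square inputs. The names out_size and calc_fc_inputs are hypothetical. It assumes the old fixed nn.AvgPool2d(7, stride=1) is still in place and that the image is large enough for the feature map to reach at least 7x7 before that pooling layer.

```python
def out_size(size_in, kernel_size, stride, padding):
    # Output size formula for conv and pool layers (dilation of 1 assumed)
    return (size_in + 2 * padding - kernel_size) // stride + 1


def calc_fc_inputs(img_size, expansion):
    """Number of inputs the fc layer receives for a square image."""
    s = out_size(img_size, 7, 2, 3)   # conv1
    s = out_size(s, 3, 2, 1)          # maxpool
    # layer1 keeps the size; layer2, layer3 and layer4 each halve it once
    # through a 3x3 conv with stride 2 and padding 1
    for _ in range(3):
        s = out_size(s, 3, 2, 1)
    s = out_size(s, 7, 1, 0)          # nn.AvgPool2d(7, stride=1)
    return 512 * expansion * s * s


print(calc_fc_inputs(224, expansion=4))  # 2048
print(calc_fc_inputs(256, expansion=4))  # 8192
```

The second result matches the 8192 in the error message above, where a 256x256 image was fed to a model whose fc layer expects 2048 inputs.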

This method requires you to compute calc_num yourself, however, so Method 2 is recommended.

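Method 2:

The brute-force approach: ignore the output size of the conv layers and take the average over each feature map as the output, for example:

    model = torchvision.models.resnet152()
    model.avgpool = nn.AdaptiveAvgPool2d((1, 1))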
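The difference between the two pooling layers can be seen on dummy feature maps, without loading the full model. This is a minimal sketch that assumes PyTorch is installed. The helper flattened_sizes is hypothetical, and the 2048-channel tensors of zeros stand in for the output of layer4 in a Bottleneck-based ResNet (7x7 for a 224x224 image, 8x8 for a 256x256 image).

```python
import torch
import torch.nn as nn

fixed_pool = nn.AvgPool2d(7, stride=1)        # the old fixed-size pooling
adaptive_pool = nn.AdaptiveAvgPool2d((1, 1))  # always outputs 1x1 per channel


def flattened_sizes(side):
    """Flattened feature length after each pooling layer for a side x side map."""
    features = torch.zeros(1, 2048, side, side)
    fixed = fixed_pool(features).view(1, -1)
    adaptive = adaptive_pool(features).view(1, -1)
    return fixed.shape[1], adaptive.shape[1]


print(flattened_sizes(7))  # (2048, 2048)
print(flattened_sizes(8))  # (8192, 2048)
```

With the adaptive layer the flattened length stays at 2048 for both sizes, so the existing fc layer keeps working.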

This is my first time digging into PyTorch, so please go easy on me.
