MobileNetV1 and V2 Explained, Plus Building MobileNetV2 in PyTorch and Training It with Transfer Learning (Very Detailed | Training Code Included)


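Preface

While working on a summer assignment for school, I came across a very good computer vision + PyTorch hands-on tutorial series among the recommended Bilibili videos. I wish I had found it sooner; it can save beginners a lot of detours.

So I decided to follow the uploader's learning path (image classification → object detection → …), learn step by step how to apply deep learning to CV with PyTorch, and organize my notes along the way.

The uploader provides both PyTorch and TensorFlow implementations; for now I only record notes on the PyTorch version.

References:

The uploader's Bilibili page: 霹雳吧啦Wz's personal space on Bilibili

The uploader's code and slides are on GitHub: WZMIAOMIAO/deep-learning-for-image-processing (deep learning for image processing including classification and object-detection etc.)

The uploader's CSDN blog: 深度学习在图像处理中的应用(tensorflow2.4以及pytorch1.10实现) (applications of deep learning in image processing, implemented with TensorFlow 2.4 and PyTorch 1.10), blog of 太阳花的小绿豆

Dataset: 补充LeNet,resnet,mobilenet的出处 (sources for LeNet, ResNet and MobileNet), blog of 后来后来啊 on CSDN

Study materials

7.1 MobileNet网络详解 (MobileNet explained), Bilibili

7.1.2 MobileNetv3网络详解 (MobileNetV3 explained), Bilibili

7.2 使用pytorch搭建MobileNetV2并基于迁移学习训练 (building MobileNetV2 in PyTorch and training it with transfer learning), Bilibili

The MobileNet family is the mainstream family of lightweight on-device models and is widely used; many algorithms use it as a backbone for feature extraction. This chapter summarizes the MobileNet models.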

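I. MobileNetV1

Highlights:

- Depthwise convolution (greatly reduces computation and parameter count)

- Two added hyperparameters: α (width multiplier) and β (resolution multiplier, written ρ in the paper)

Drawback:

- The depthwise kernels tend to "die" during training, i.e. most of their weights end up being 0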

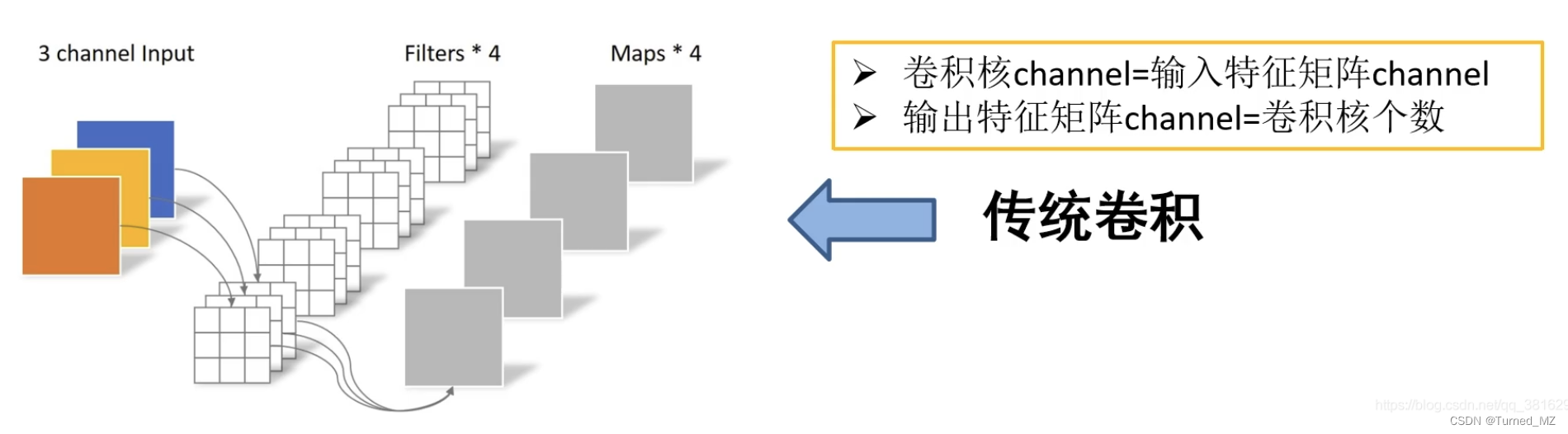

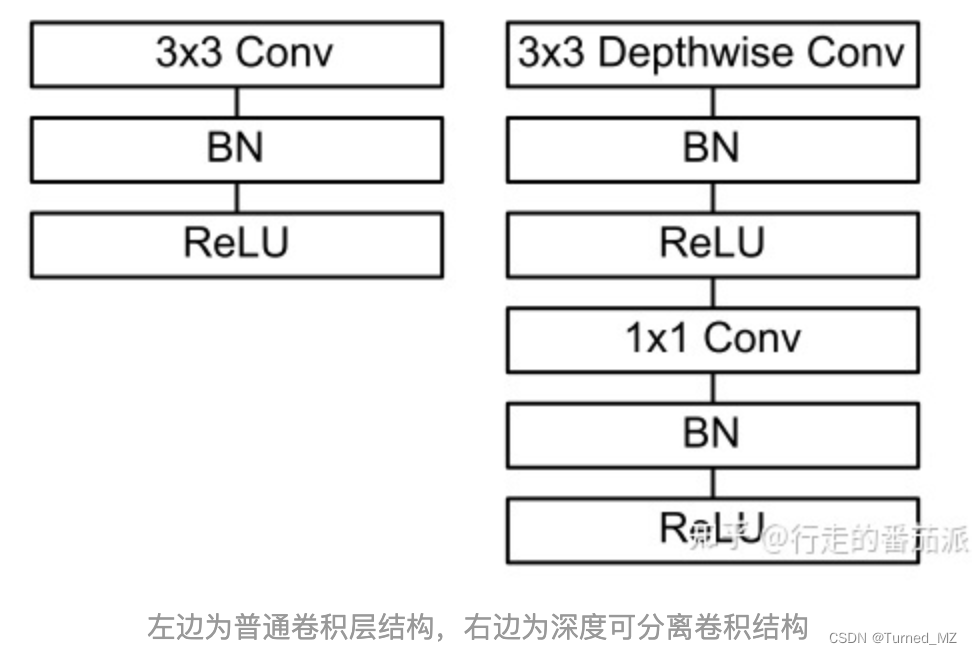

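MobileNetV1 is built on depthwise separable convolution, which factorizes a standard convolution into a depthwise (DW) convolution followed by a 1x1 pointwise (PW) convolution, greatly reducing computation and parameter count. The following compares standard convolution with depthwise separable convolution:

Standard convolution:

Depthwise separable convolution: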

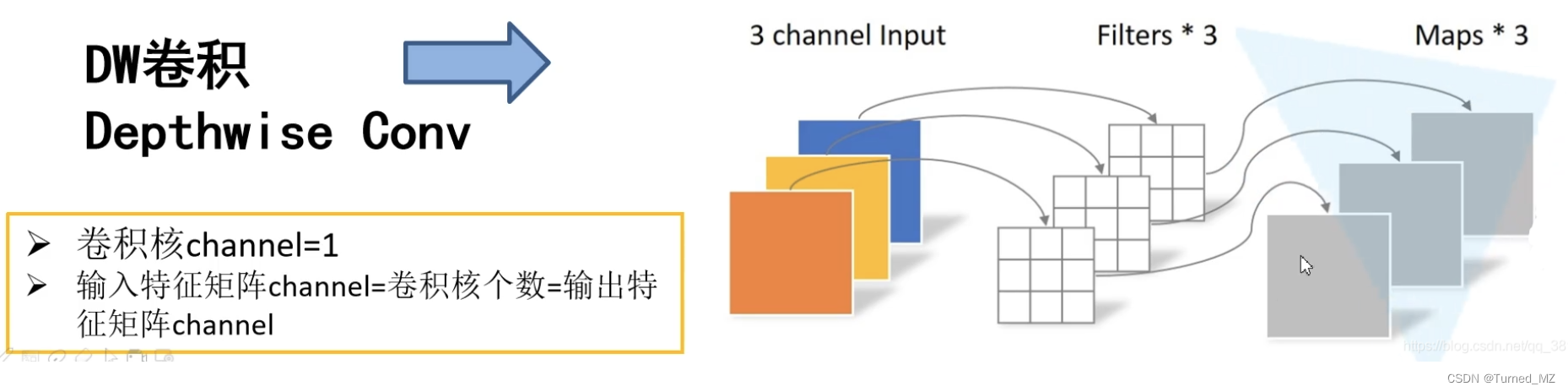

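DW convolution: applies a single filter to each input channel (input depth), so the number of output channels equals the number of input channels.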

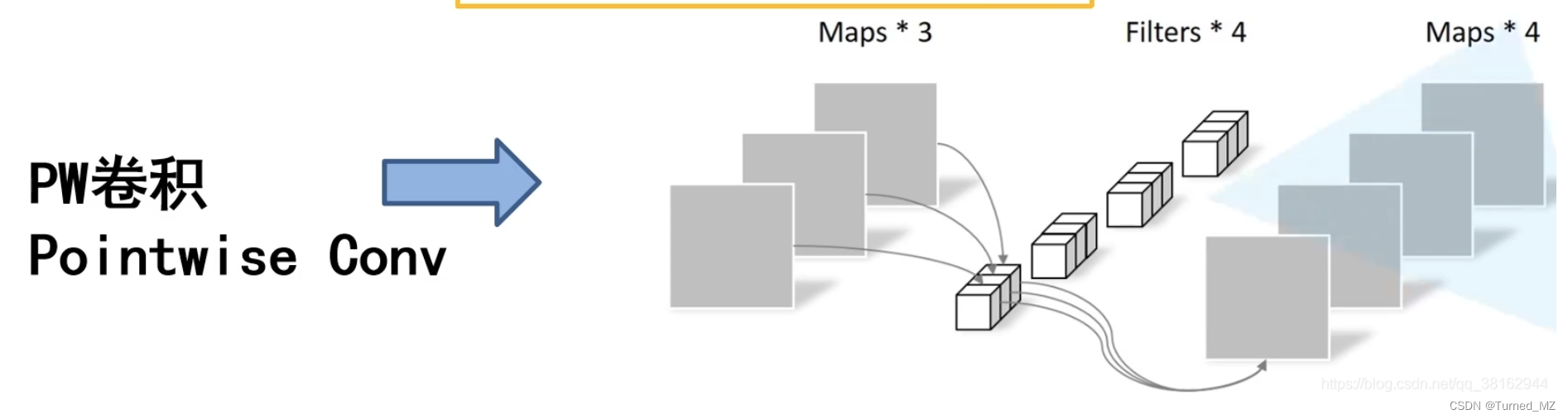

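PW convolution: an ordinary convolution whose kernel size is 1x1. This simple 1x1 convolution creates a linear combination of the outputs of the depthwise layer.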

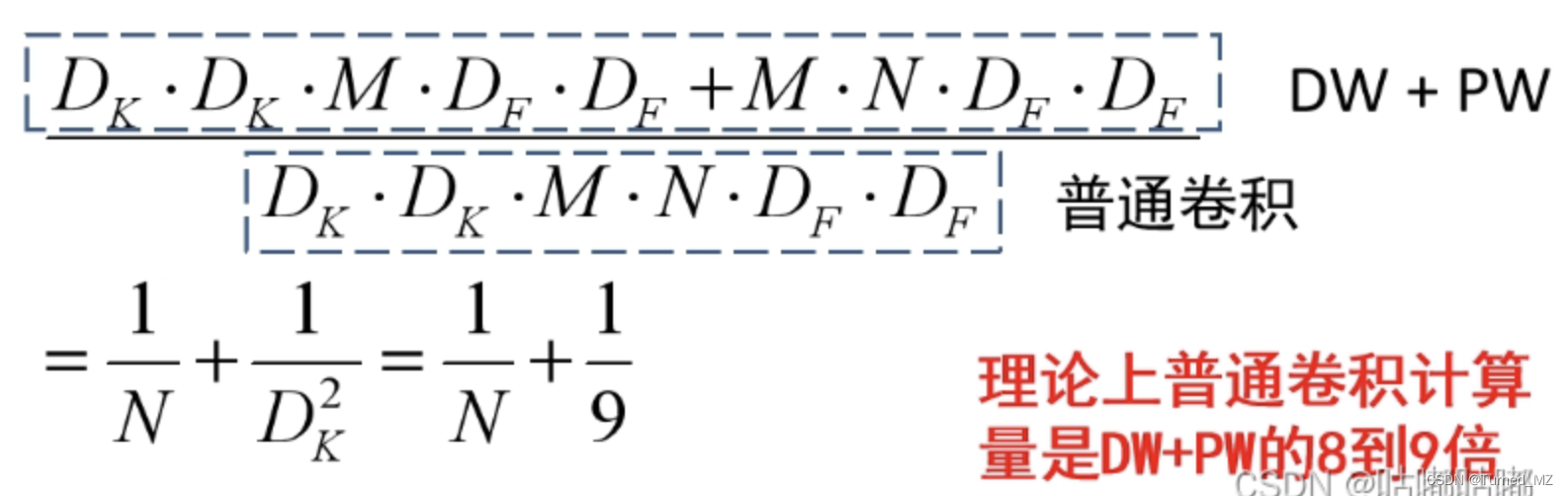

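Computation cost of the two convolutions:

A standard convolution layer extracts features and combines them across channels in a single step. A depthwise separable convolution first applies 3x3 kernels of depth 1 (the depthwise convolution, one kernel per channel) and then 1x1 kernels (the pointwise convolution) to adjust the number of channels, so feature extraction and feature combination are done separately.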
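To make the saving concrete, here is a minimal sketch that counts weights and multiply-adds for both layer types. It assumes stride 1 with "same" padding (so both layers produce an h x w output) and ignores biases; the function names and the example layer size are illustrative, not taken from the tutorial.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Return (weights, multiply-adds) of a k x k standard convolution."""
    params = k * k * c_in * c_out
    # every weight is used once per output position
    return params, params * h * w


def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Return (weights, multiply-adds) of a k x k depthwise + 1x1 pointwise pair."""
    dw_params = k * k * c_in   # one k x k filter per input channel
    pw_params = c_in * c_out   # 1x1 convolution that mixes the channels
    params = dw_params + pw_params
    return params, params * h * w


# Illustrative layer: 3x3 kernel, 32 -> 64 channels, 112 x 112 output map.
std_params, std_madds = standard_conv_cost(3, 32, 64, 112, 112)
sep_params, sep_madds = depthwise_separable_cost(3, 32, 64, 112, 112)
ratio = sep_madds / std_madds
print(std_params, sep_params, round(ratio, 4))
```

The ratio equals 1/c_out + 1/k², so with 3x3 kernels the depthwise separable layer needs roughly 8 to 9 times less computation than the standard one.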

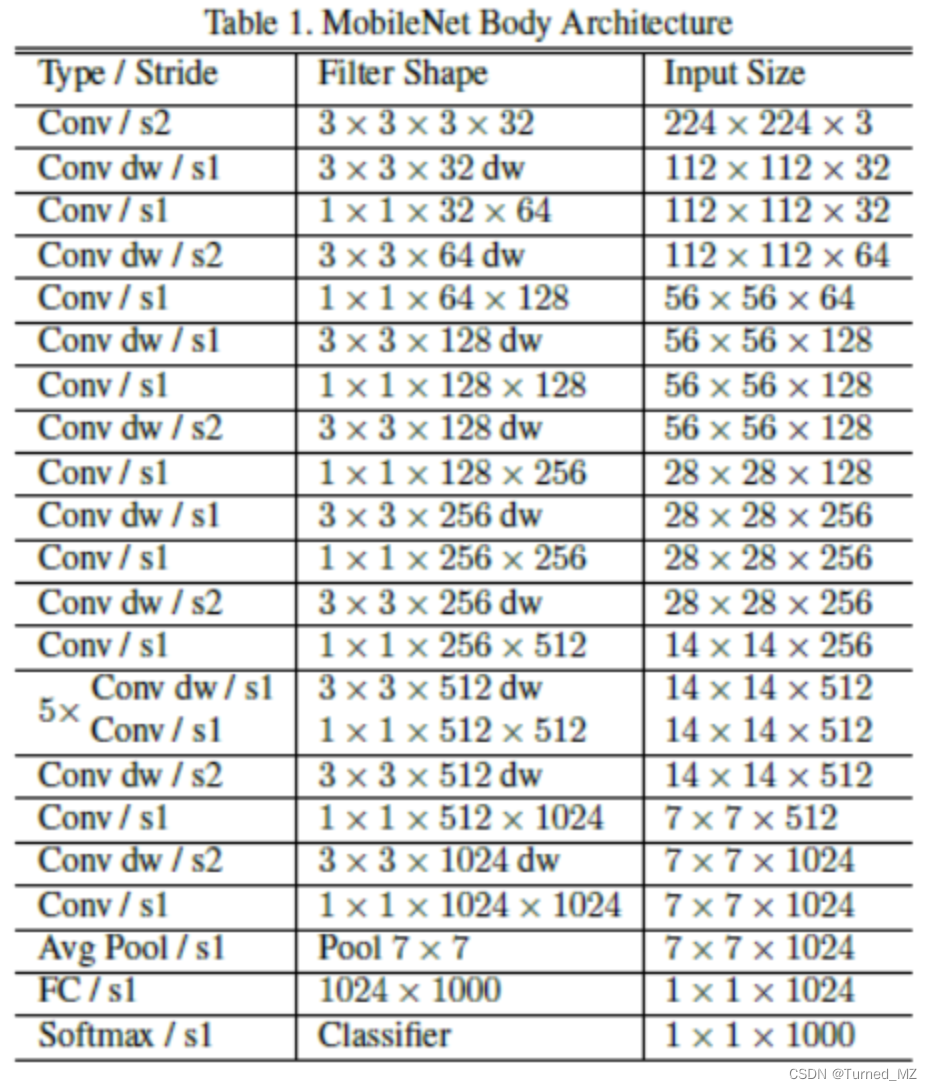

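The MobileNetV1 architecture is as follows. Among the convolution layers, only the first is a standard convolution; all the others are depthwise separable convolutions (Conv dw followed by a 1x1 Conv / s1). The convolutions are followed by a 7x7 average pooling layer and then a fully connected layer. Finally, softmax normalizes the fully connected output into probabilities between 0 and 1, and the class with the highest probability is the predicted class of the image.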

II. MobileNetV2

The main improvements of MobileNetV2 over V1 are:

- Inverted Residuals

- Linear Bottlenecks

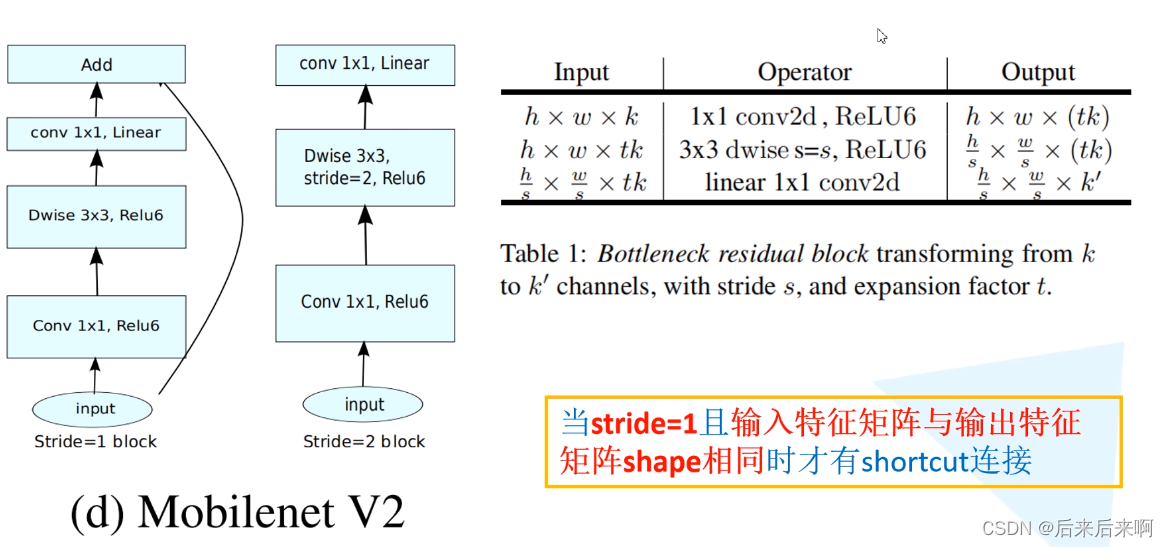

Inverted residual structure:

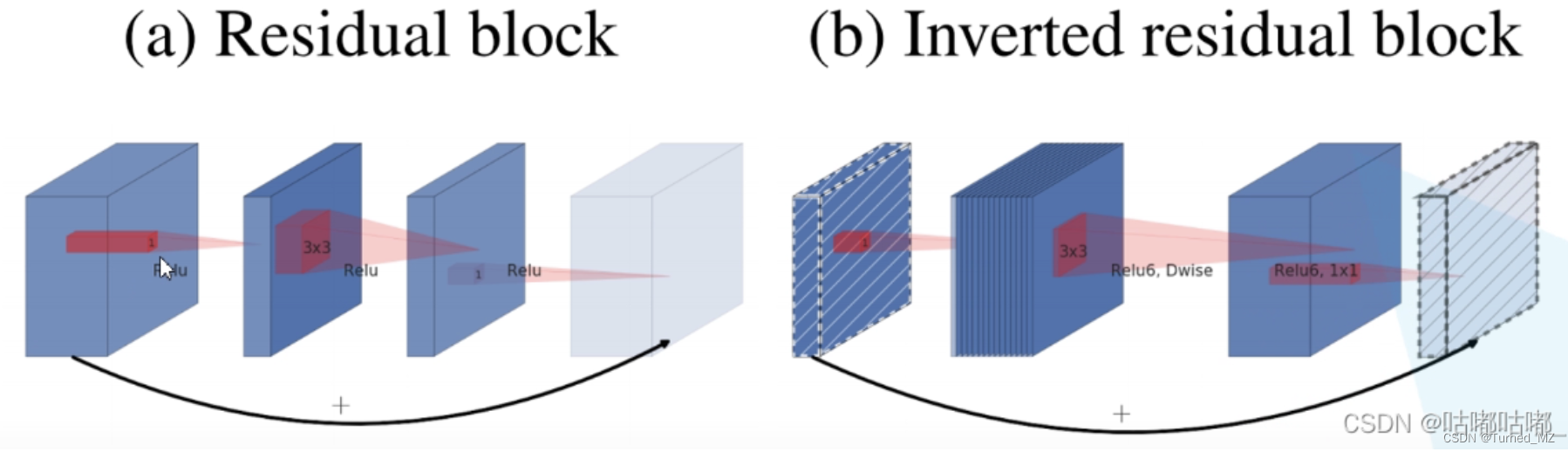

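The key to understanding the inverted residual structure is the change in channel count (dimensionality). In an ordinary residual block, a 1x1 convolution first reduces the dimensionality, a 3x3 convolution extracts features, and a final 1x1 convolution restores the dimensionality. This is an hourglass shape: wide at both ends and narrow in the middle. In an inverted residual block, a 1x1 convolution first expands the dimensionality, a 3x3 DW (channel-wise) convolution extracts features, and a final 1x1 convolution reduces the dimensionality. The order of reduction and expansion is swapped and the 3x3 standard convolution is replaced by a DW convolution, giving a spindle shape: narrow at both ends and wide in the middle. The figure below compares the two:

Where the ordinary residual block uses the ReLU activation, this network uses ReLU6.

So what is ReLU6?

ReLU6 is ReLU with its output capped at 6: ReLU6(x) = min(max(0, x), 6). The cap is mainly there so that low-precision float16 arithmetic on mobile devices still has good numerical resolution. If the ReLU output is left unbounded, it ranges from 0 to positive infinity, which low-precision float16 cannot represent accurately, and precision is lost.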
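As a quick illustration, the following sketch evaluates ReLU and ReLU6 on a few scalar inputs. The helper names and sample values are illustrative; in PyTorch the same activation is available as nn.ReLU6.

```python
def relu(x):
    """Standard ReLU: unbounded above."""
    return max(0.0, x)


def relu6(x):
    """ReLU6: same as ReLU below 6, clipped to 6 above."""
    return min(max(0.0, x), 6.0)


samples = [-2.0, 0.5, 6.0, 100.0]
relu_out = [relu(x) for x in samples]
relu6_out = [relu6(x) for x in samples]
print(relu_out)
print(relu6_out)
```

The two functions agree everywhere below 6; only large activations are affected by the cap.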

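- ReLU vs. ReLU6 (plot comparison):

- Residual block

(1) The overall process is "compress - convolve - expand", an hourglass shape;

(2) The convolutions are: 1x1 convolution to reduce dimensionality - 3x3 standard convolution to extract features - 1x1 convolution to restore dimensionality;

(3) ReLU is used throughout.

- Inverted residual block

(1) The overall process is "expand - convolve - compress", a spindle shape;

(2) The convolutions are: 1x1 convolution to expand dimensionality - 3x3 DW convolution to extract features - 1x1 convolution to reduce dimensionality;

(3) ReLU6 and a linear activation are used.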
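The two shapes can be traced numerically. The sketch below lists the channel count after each stage of both block types and counts the convolution weights of one inverted residual block. The reduction factor of 4 for the ordinary bottleneck and the example channel sizes are assumptions made for illustration; BatchNorm parameters are ignored.

```python
def residual_bottleneck_channels(c, reduction=4):
    """Channels through a ResNet-style bottleneck: 1x1 reduce, 3x3 conv, 1x1 expand."""
    mid = c // reduction
    return [c, mid, mid, c]


def inverted_residual_channels(c_in, c_out, t):
    """Channels through an inverted residual: 1x1 expand by t, 3x3 DW, 1x1 project."""
    hidden = c_in * t
    return [c_in, hidden, hidden, c_out]


def inverted_residual_weights(c_in, c_out, t, k=3):
    """Convolution weights of one inverted residual block (no bias)."""
    hidden = c_in * t
    expand = c_in * hidden       # 1x1 pointwise expansion
    depthwise = k * k * hidden   # one k x k filter per hidden channel
    project = hidden * c_out     # 1x1 linear projection
    return expand + depthwise + project


print(residual_bottleneck_channels(256))       # wide - narrow - wide
print(inverted_residual_channels(24, 24, 6))   # narrow - wide - narrow
print(inverted_residual_weights(24, 24, 6))
```

Although the inverted block is six times wider in the middle, the depthwise 3x3 contributes only a small share of its weights; most of them sit in the two 1x1 convolutions.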

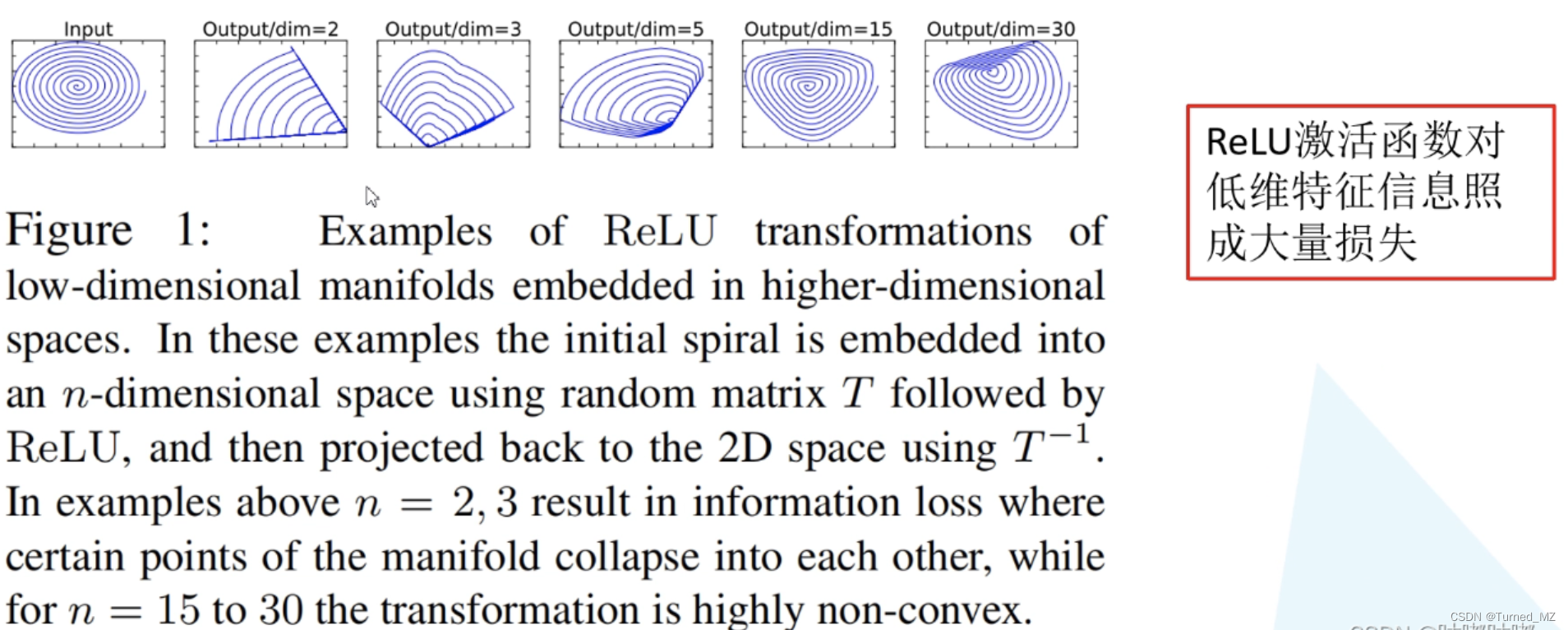

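Linear Bottlenecks

A linear bottleneck is a bottleneck whose last convolution uses a linear activation (the ReLU is replaced by the identity function). The paper's explanation is shown in the figure below.

Note that the shortcut connection exists only when stride=1 and the input and output feature maps have the same shape.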

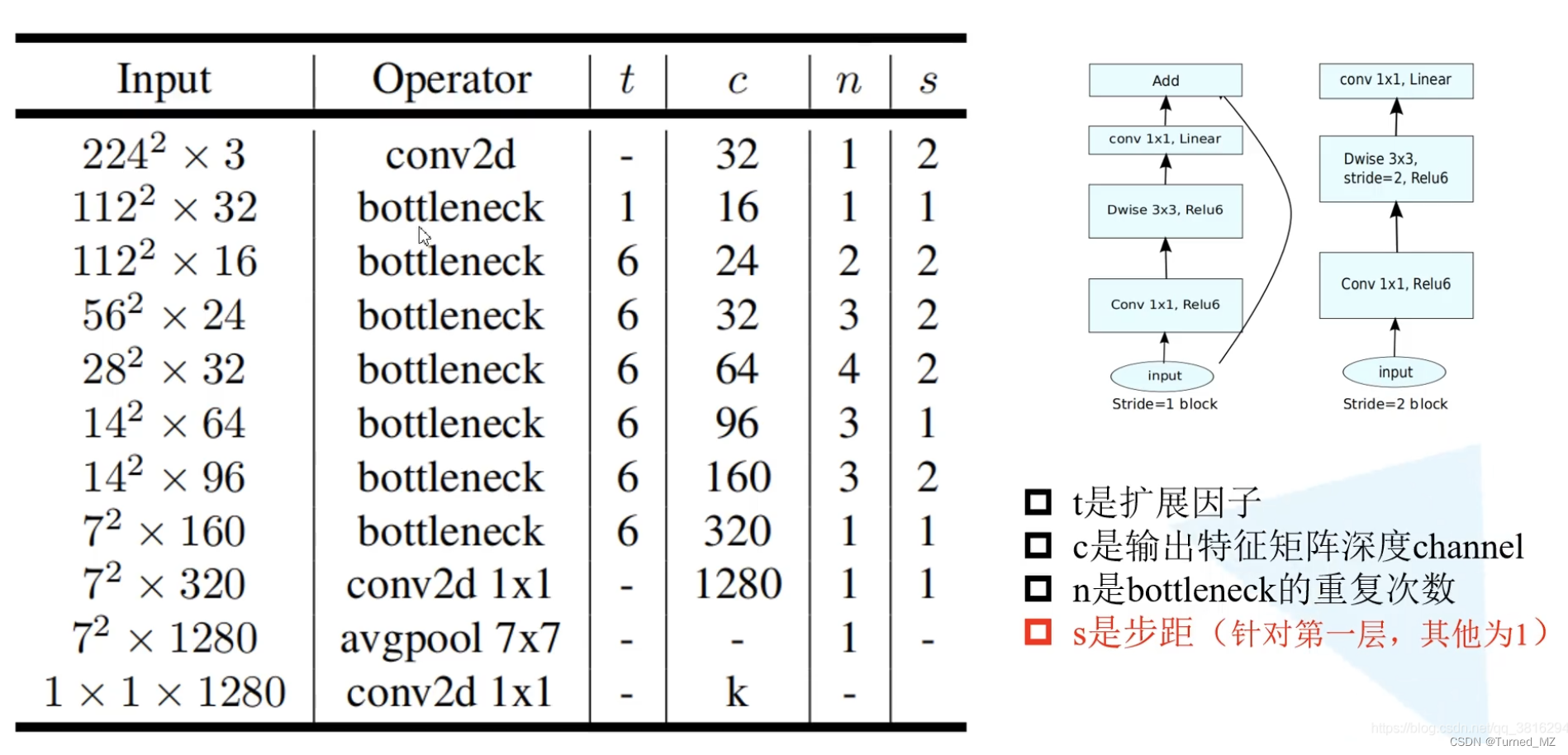

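Now let's look at the MobileNetV2 model structure:

In the table above, t is the expansion factor, c is the number of output channels, n is the number of times the bottleneck (inverted residual block) is repeated, and s is the stride (it applies only to the first bottleneck of each group; the rest use stride 1). Each block consists of a series of bottlenecks.

For a clearer explanation, I recommend watching the video: 7.1 MobileNet网络详解 (Bilibili)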
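To see how the (t, c, n, s) rows unfold into individual bottlenecks, here is a small sketch. It uses the standard MobileNetV2 table at width multiplier 1.0 and assumes the stem convolution outputs 32 channels with stride 2; the function and dictionary keys are illustrative names.

```python
# One row per group of bottlenecks: (t, c, n, s)
SETTINGS = [
    (1, 16, 1, 1),
    (6, 24, 2, 2),
    (6, 32, 3, 2),
    (6, 64, 4, 2),
    (6, 96, 3, 1),
    (6, 160, 3, 2),
    (6, 320, 1, 1),
]


def expand_settings(settings, in_channels=32):
    """Unroll the table into one dict per bottleneck."""
    blocks = []
    for t, c, n, s in settings:
        for i in range(n):
            stride = s if i == 0 else 1          # s applies to the first repeat only
            shortcut = stride == 1 and in_channels == c
            blocks.append({"in": in_channels, "out": c, "t": t,
                           "stride": stride, "shortcut": shortcut})
            in_channels = c
    return blocks


blocks = expand_settings(SETTINGS)
total_stride = 2  # stride of the first 3x3 convolution (assumed stem)
for b in blocks:
    total_stride *= b["stride"]

print(len(blocks), "bottlenecks,",
      sum(b["shortcut"] for b in blocks), "with a shortcut,",
      "overall stride", total_stride)
```

The first bottleneck of each group never gets a shortcut here, because either its stride is 2 or its input and output channel counts differ.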

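III. MobileNetV3

The main highlights of MobileNetV3 are:

- Updated block (bneck): adds the SE module and updates the activation function

- Uses NAS (Neural Architecture Search) to search for parameters

- Redesigned expensive layers: reduces the number of kernels in the first convolution layer (32 -> 16) and streamlines the last stage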

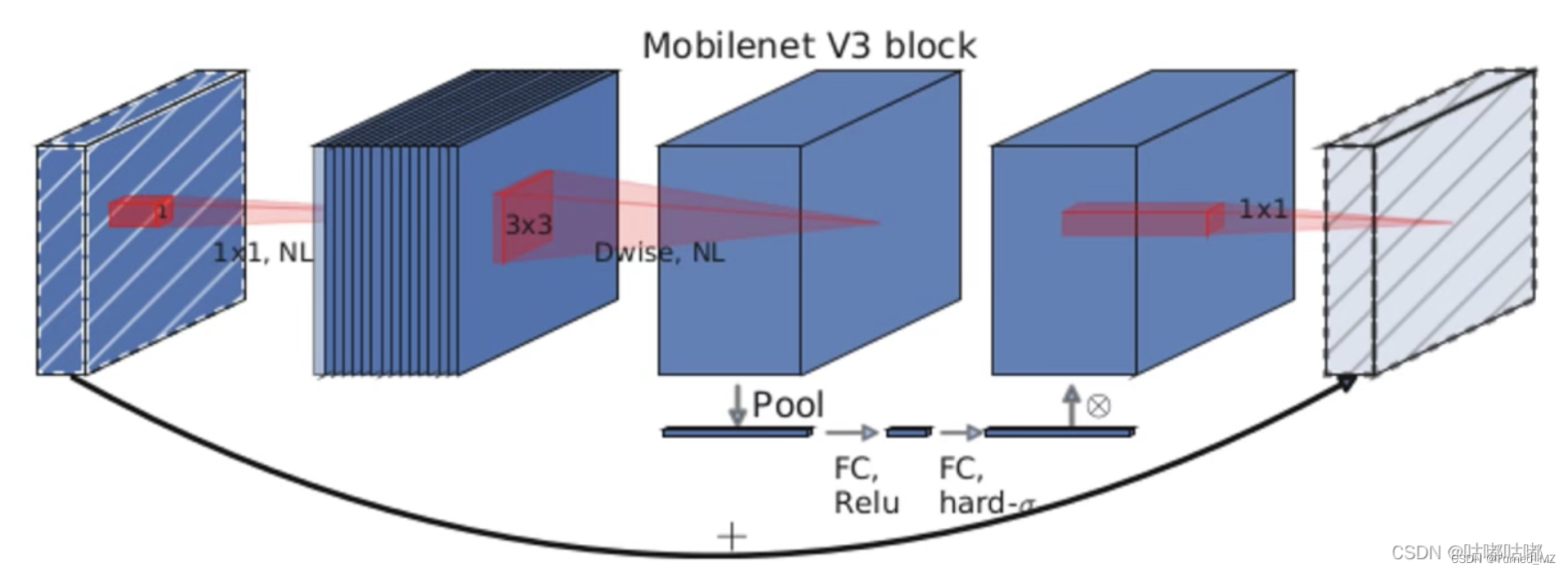

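The MobileNetV3 bneck is shown in the figure below:

(NL stands for a nonlinear activation function; it does not refer to one specific function.)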

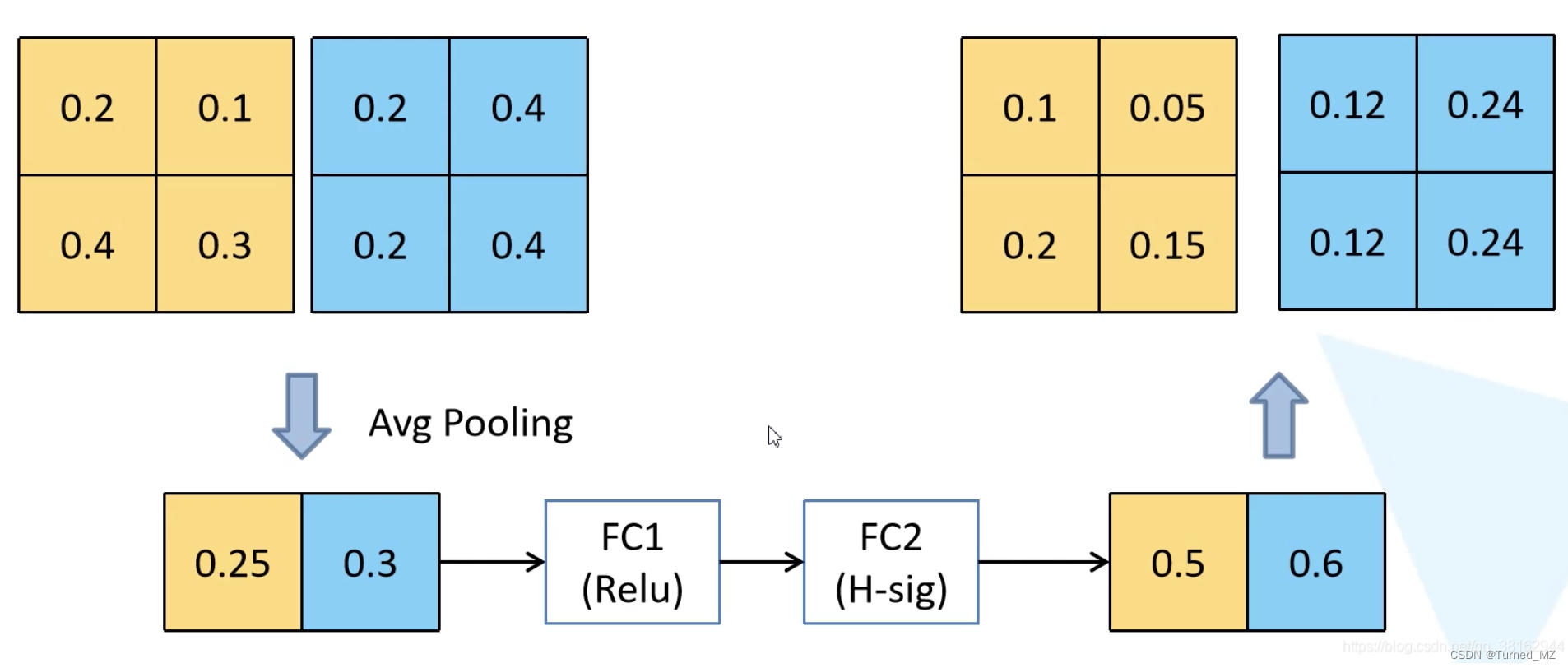

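SE module:

An SE (Squeeze-and-Excitation) module is added to the bottleneck structure and placed after the depthwise filter, as shown below. Because the SE module costs some time, the authors fix the number of channels in the SE bottleneck (its first fully connected layer) at 1/4 of the expansion layer's channels; they found that this improves accuracy without a noticeable increase in latency. In essence, SE introduces a channel-level attention mechanism. The details are as follows: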
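The data flow of an SE module can be sketched in plain Python on a tiny feature map. This is only an illustration of the squeeze, excite and rescale steps: the weights are made-up values, biases are omitted, and ReLU followed by hard-sigmoid is used for the two layers, matching the model_v3 code later in this post.

```python
def hard_sigmoid(x):
    """Piecewise-linear sigmoid approximation: ReLU6(x + 3) / 6."""
    return min(max(x + 3.0, 0.0), 6.0) / 6.0


def squeeze_excite(feature_map, w1, w2):
    """feature_map: C channels, each an H x W list of lists.
    w1: [C_squeeze][C] weights, w2: [C][C_squeeze] weights (hypothetical values)."""
    # Squeeze: global average pooling gives one number per channel
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
              for ch in feature_map]
    # First FC layer + ReLU reduces C to C_squeeze
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    # Second FC layer + hard-sigmoid produces one scale in [0, 1] per channel
    scale = [hard_sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Excite: rescale every channel by its attention weight
    out = [[[v * s for v in row] for row in ch]
           for ch, s in zip(feature_map, scale)]
    return out, scale


# 4 channels of size 2 x 2, squeezed to 4 / 4 = 1 channel
fmap = [[[1.0, 1.0], [1.0, 1.0]],
        [[2.0, 2.0], [2.0, 2.0]],
        [[0.0, 0.0], [0.0, 0.0]],
        [[4.0, 4.0], [4.0, 4.0]]]
w1 = [[0.5, 0.5, 0.5, 0.5]]
w2 = [[1.0], [0.0], [-1.0], [-2.0]]
out, scale = squeeze_excite(fmap, w1, w2)
print(scale)
```

Channels with a scale near 1 pass through unchanged, while channels with a scale near 0 are suppressed, which is the channel attention effect described above.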

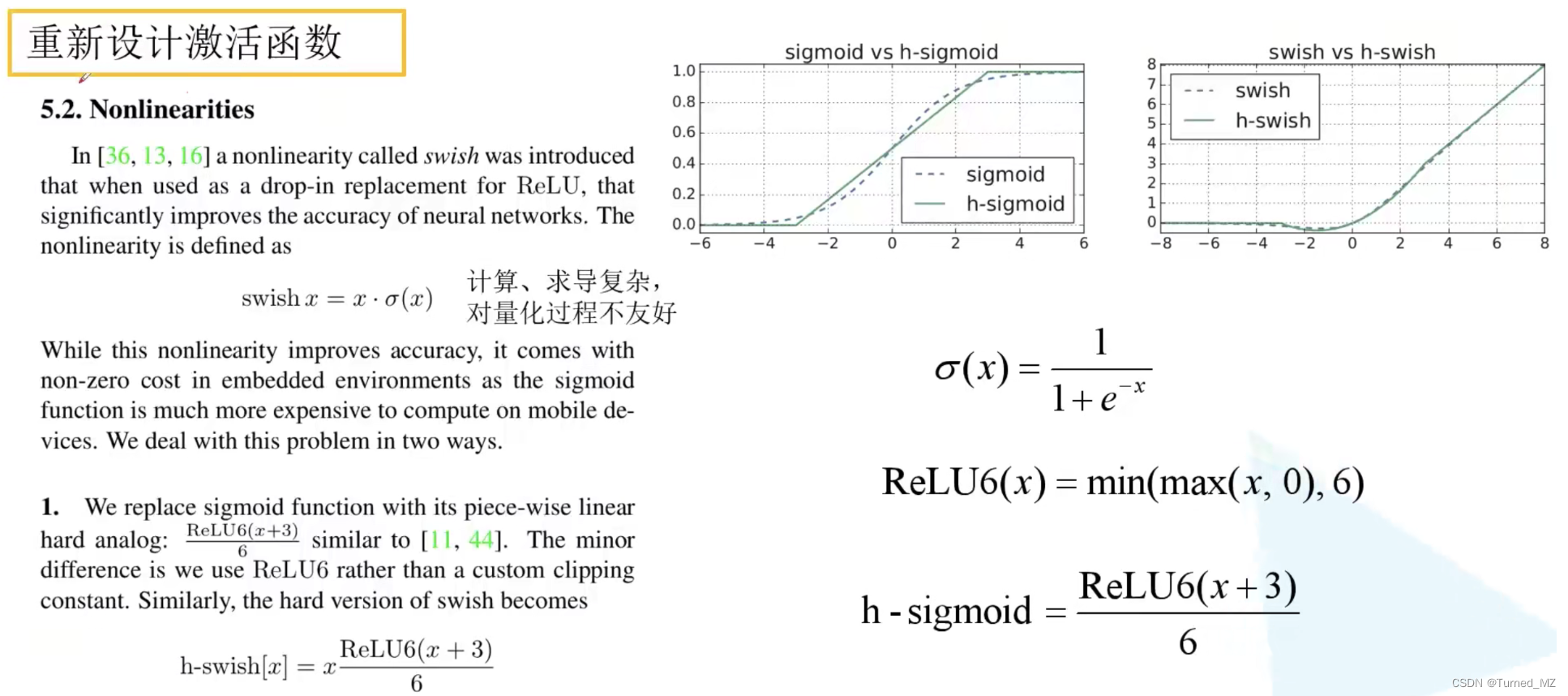

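Updated activation function:

h-swish replaces swish. Swish is Google's own earlier research result, so there is a hint of self-promotion here; this time it has been optimized for speed. The formulas are swish(x) = x · sigmoid(x) and h-swish(x) = x · ReLU6(x + 3) / 6. Because sigmoid is expensive to compute, and the cost is especially noticeable on mobile devices, the authors approximate sigmoid with ReLU6(x + 3) / 6; the figure below shows that the two curves differ very little. Using ReLU6 has several benefits: (1) it can be computed on virtually any software or hardware platform; (2) in quantized mode it eliminates potential loss of numerical precision. Replacing swish with h-swish improves efficiency by about 15% in quantized mode, and the benefit of h-swish is more pronounced in the deeper layers of the network.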
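The closeness of the two activations can be checked numerically. The sketch below implements both formulas for scalars with the standard library only; the sample points are arbitrary.

```python
import math


def relu6(x):
    return min(max(0.0, x), 6.0)


def swish(x):
    """x * sigmoid(x)"""
    return x / (1.0 + math.exp(-x))


def h_swish(x):
    """x * ReLU6(x + 3) / 6, the piecewise-linear approximation of swish."""
    return x * relu6(x + 3.0) / 6.0


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  swish={swish(x):+.4f}  h-swish={h_swish(x):+.4f}")
```

For x <= -3 h-swish is exactly 0 and for x >= 3 it is exactly x, so only the range in between needs any arithmetic beyond a comparison.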

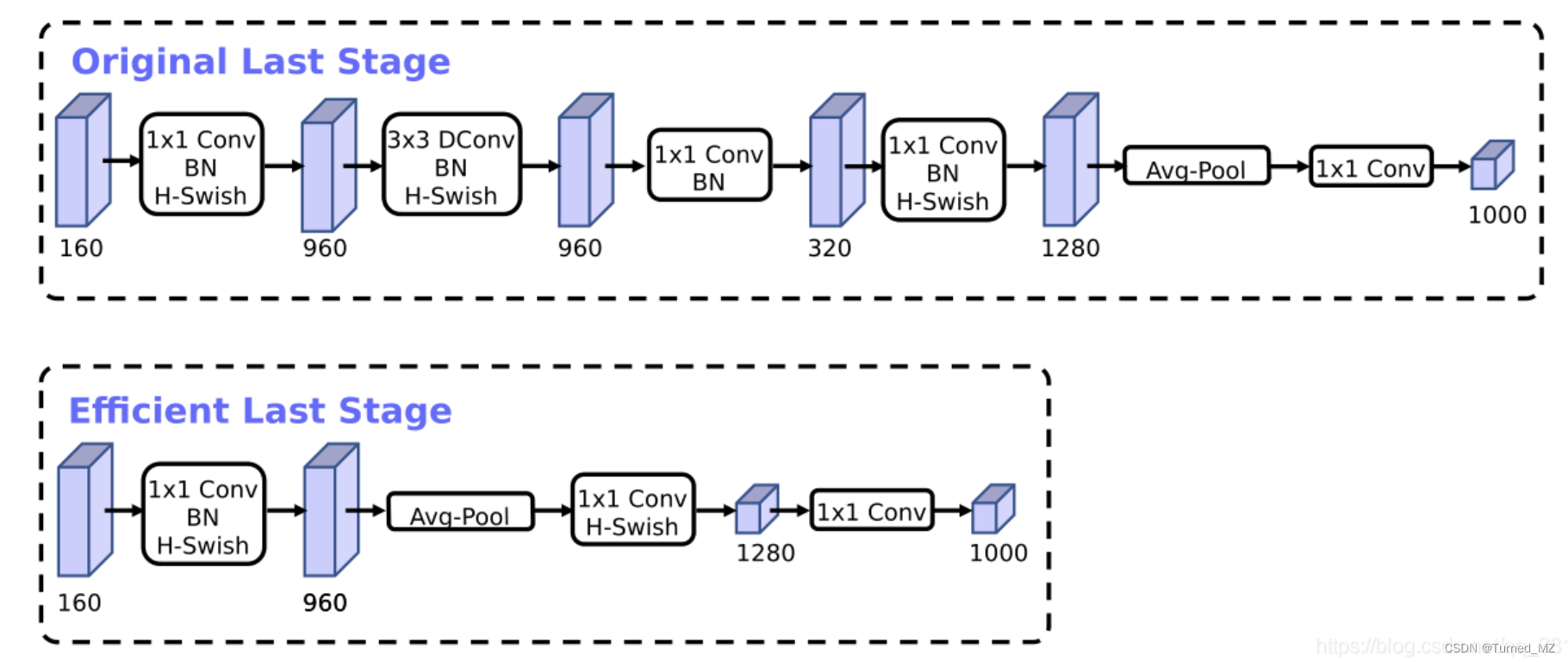

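Redesigned expensive layers:

(1) Reduce the number of kernels in the first convolution layer (32 -> 16). Accuracy stays the same while computation drops, so inference is faster.

(2) Streamline the last stage.

This reduces latency by 7 ms, which is 11% of the running time, and cuts about 30 million MAdds with almost no loss of accuracy.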

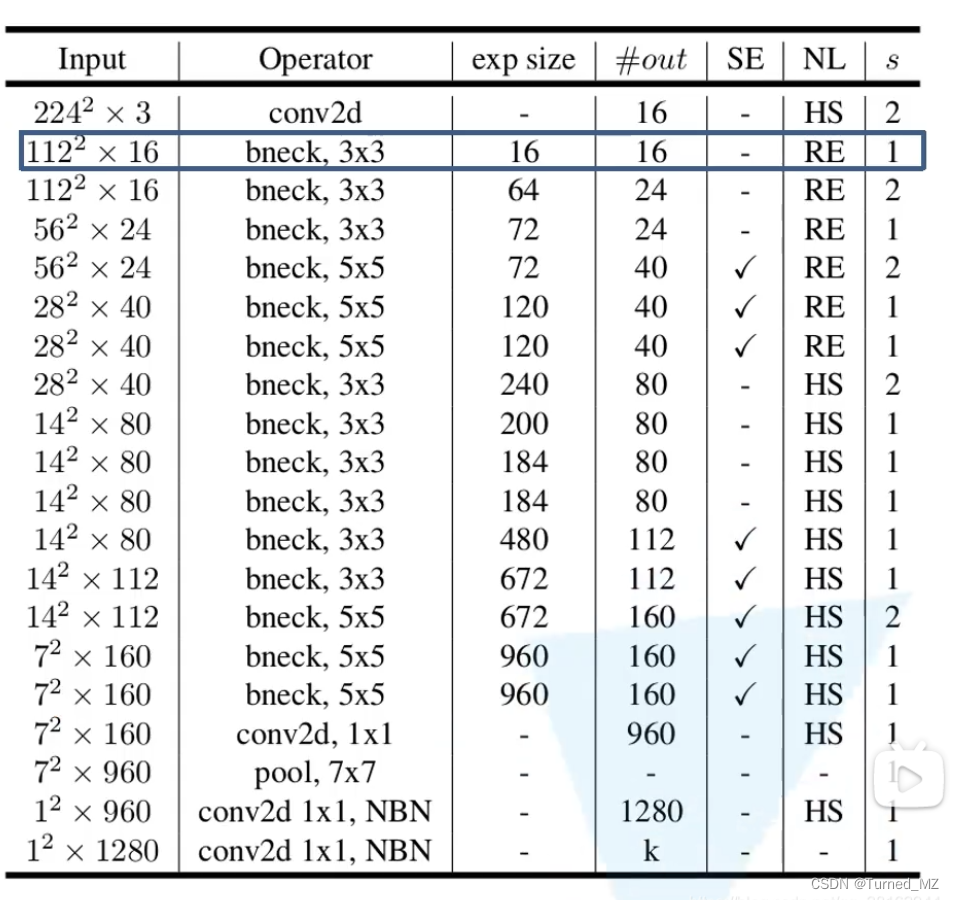

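MobileNetV3-Large model structure

NBN means that no BN layer is used. A check mark in the SE column means the attention module is used. exp size is the number of output channels of the first 1x1 convolution in the inverted residual block, and #out is the number of output channels of the block.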

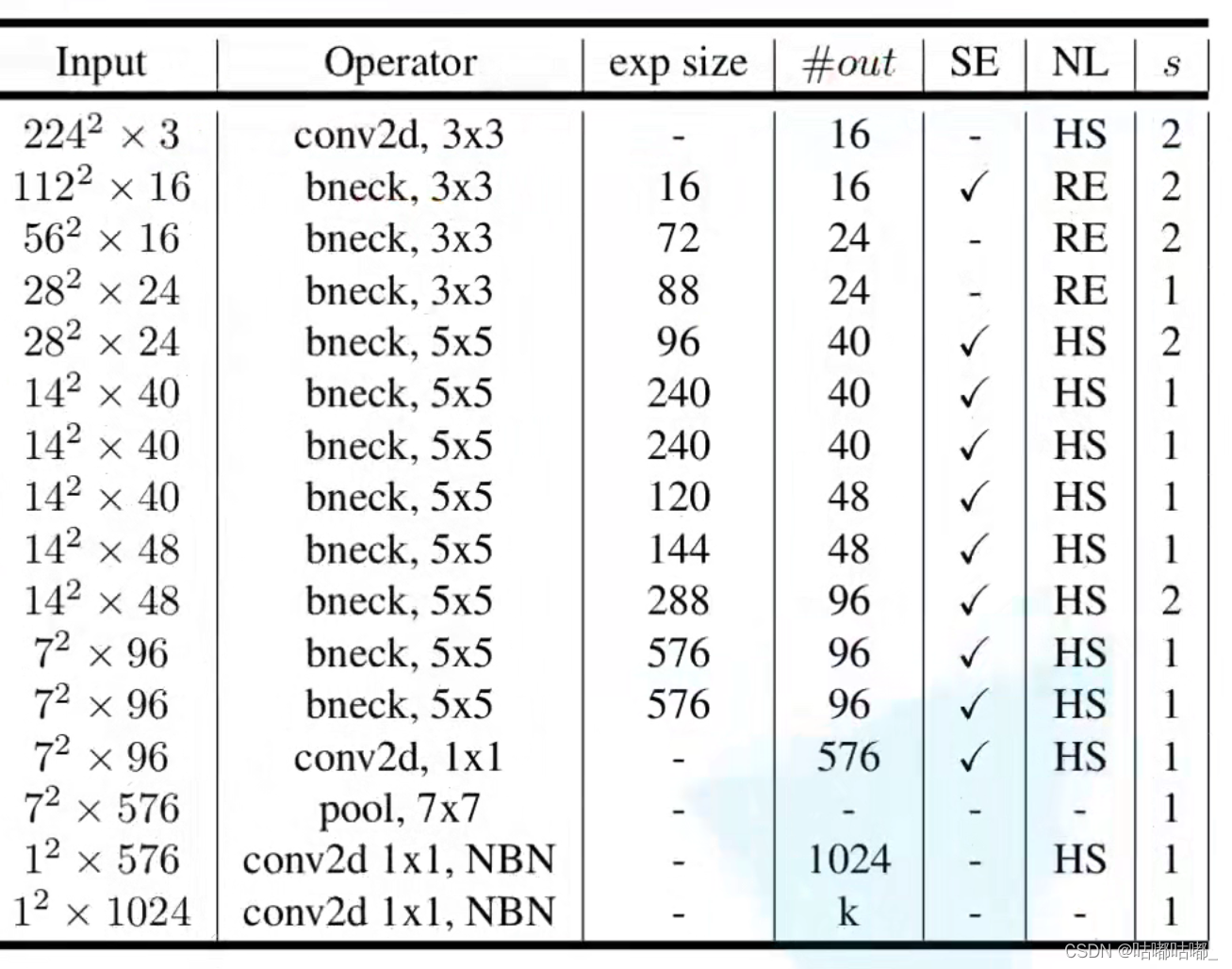

MobileNetV3-Small model structure:

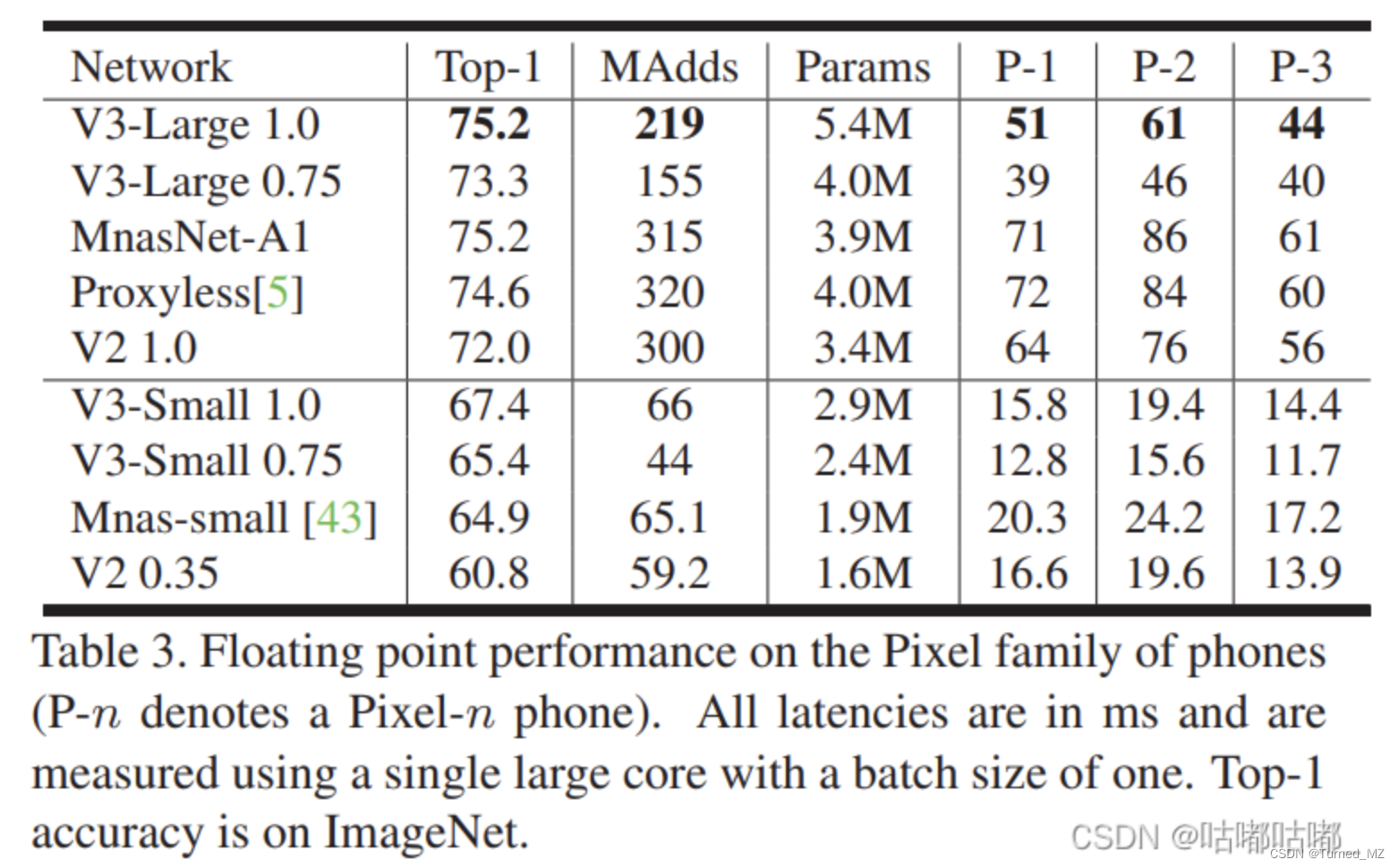

Finally, the experimental results from the original paper:

Building the MobileNetV2 network in PyTorch

References:

vision/torchvision/models/mobilenetv2.py at main · pytorch/vision (github.com)

MobileNetV2 解读 - 高峰OUC - 博客园 (cnblogs.com)

3.1 model.py

This file (saved as model_v2.py, the name that train.py and predict.py import) defines the inverted residual block, InvertedResidual, and the MobileNetV2 network:

    from torch import nn
    import torch


    def _make_divisible(ch, divisor=8, min_ch=None):
        """
        This function is taken from the original tf repo.
        It ensures that all layers have a channel number that is divisible by 8
        It can be seen here:
        https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
        """
        if min_ch is None:
            min_ch = divisor  # minimum channel count; defaults to the divisor, i.e. 8
        # round to the nearest multiple of divisor
        new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
        # Make sure that round down does not go down by more than 10%.
        if new_ch < 0.9 * ch:
            new_ch += divisor
        return new_ch


    class ConvBNReLU(nn.Sequential):
        # groups=1 gives an ordinary convolution;
        # groups=in_channel gives a DW convolution
        def __init__(self, in_channel, out_channel, kernel_size=3, stride=1, groups=1):
            padding = (kernel_size - 1) // 2  # keeps the spatial size unchanged when stride=1
            super(ConvBNReLU, self).__init__(
                nn.Conv2d(in_channel, out_channel, kernel_size, stride, padding, groups=groups, bias=False),
                nn.BatchNorm2d(out_channel),
                nn.ReLU6(inplace=True)
            )


    class InvertedResidual(nn.Module):  # inverted residual block
        def __init__(self, in_channel, out_channel, stride, expand_ratio):
            super(InvertedResidual, self).__init__()
            hidden_channel = in_channel * expand_ratio  # expand_ratio is the expansion factor t
            # the shortcut exists only when stride=1 and input/output shapes match
            self.use_shortcut = stride == 1 and in_channel == out_channel

            layers = []
            if expand_ratio != 1:
                # 1x1 pointwise conv
                layers.append(ConvBNReLU(in_channel, hidden_channel, kernel_size=1))
            layers.extend([
                # 3x3 depthwise conv
                ConvBNReLU(hidden_channel, hidden_channel, stride=stride, groups=hidden_channel),
                # 1x1 pointwise conv (linear), i.e. activation y = x
                nn.Conv2d(hidden_channel, out_channel, kernel_size=1, bias=False),
                nn.BatchNorm2d(out_channel),
            ])

            self.conv = nn.Sequential(*layers)

        def forward(self, x):
            if self.use_shortcut:
                return x + self.conv(x)
            else:
                return self.conv(x)


    class MobileNetV2(nn.Module):
        def __init__(self, num_classes=1000, alpha=1.0, round_nearest=8):
            super(MobileNetV2, self).__init__()
            block = InvertedResidual
            input_channel = _make_divisible(32 * alpha, round_nearest)  # rounded to a multiple of 8
            last_channel = _make_divisible(1280 * alpha, round_nearest)

            inverted_residual_setting = [
                # t, c, n, s
                # t: expansion factor, c: output channels,
                # n: number of bottleneck repeats,
                # s: stride of the first repeat (the others use 1)
                [1, 16, 1, 1],
                [6, 24, 2, 2],
                [6, 32, 3, 2],
                [6, 64, 4, 2],
                [6, 96, 3, 1],
                [6, 160, 3, 2],
                [6, 320, 1, 1],
            ]  # one row per row of the table in the paper

            features = []
            # conv1 layer
            features.append(ConvBNReLU(3, input_channel, stride=2))
            # building inverted residual blocks
            for t, c, n, s in inverted_residual_setting:
                output_channel = _make_divisible(c * alpha, round_nearest)
                for i in range(n):
                    stride = s if i == 0 else 1
                    features.append(block(input_channel, output_channel, stride, expand_ratio=t))
                    input_channel = output_channel
            # building last several layers
            features.append(ConvBNReLU(input_channel, last_channel, 1))
            # combine feature layers
            self.features = nn.Sequential(*features)  # feature extractor is complete

            # building classifier
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # adaptive average pooling to 1x1
            self.classifier = nn.Sequential(
                nn.Dropout(0.2),
                nn.Linear(last_channel, num_classes)
            )

            # weight initialization
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out')
                    if m.bias is not None:
                        nn.init.zeros_(m.bias)
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.ones_(m.weight)
                    nn.init.zeros_(m.bias)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.zeros_(m.bias)

        def forward(self, x):
            x = self.features(x)     # feature extraction
            x = self.avgpool(x)      # global average pooling
            x = torch.flatten(x, 1)  # flatten to [N, C]
            x = self.classifier(x)   # classifier output
            return x
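A few worked values make the rounding rule in _make_divisible easier to follow. The function below is copied from the model code so that the snippet runs on its own; the input values are arbitrary examples, two of them written as a base channel count times a width multiplier.

```python
def _make_divisible(ch, divisor=8, min_ch=None):
    if min_ch is None:
        min_ch = divisor
    # round to the nearest multiple of divisor, but never below min_ch
    new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
    # if rounding down lost more than 10%, go one multiple up instead
    if new_ch < 0.9 * ch:
        new_ch += divisor
    return new_ch


examples = [32 * 0.75, 16 * 0.35, 10]
results = [_make_divisible(ch) for ch in examples]
for ch, new_ch in zip(examples, results):
    print(ch, "->", new_ch)
```

The last example shows the 10% rule: 10 would round down to 8, which loses more than 10% of the channels, so the result is bumped up to 16.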

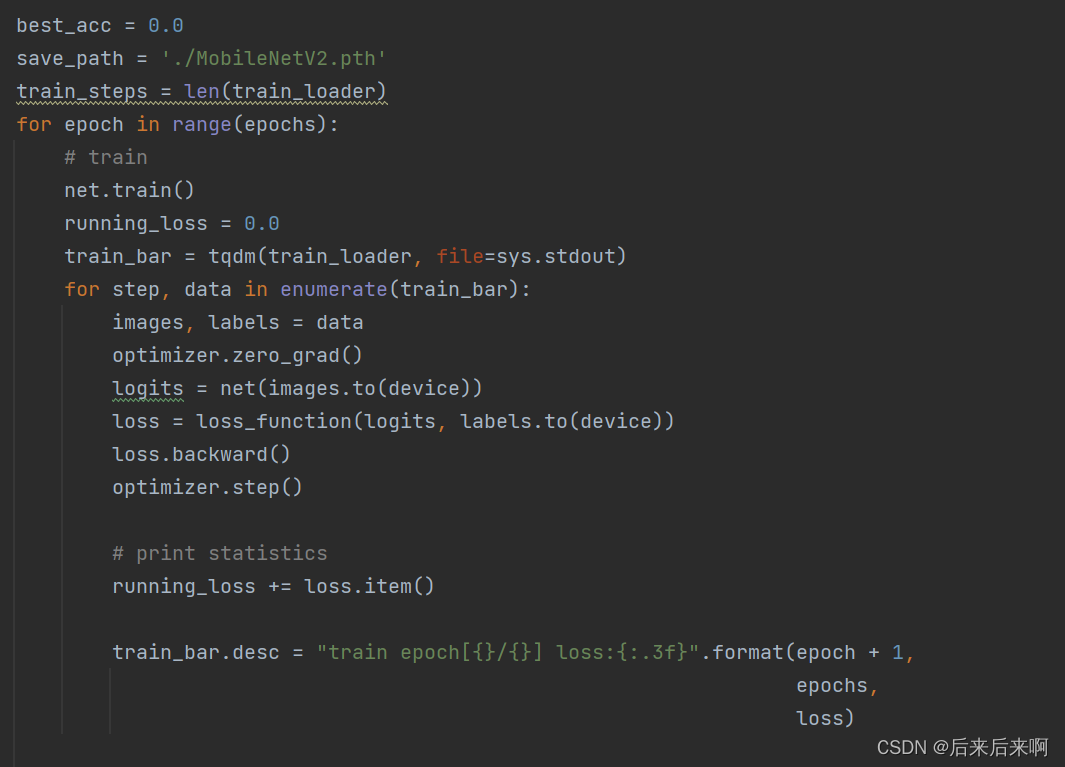

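3.2 train.py

Because MobileNetV2 is fairly deep, training it from scratch is very time-consuming, so we use transfer learning and load pretrained weights. In PyCharm, type import torchvision.models.mobilenetv2 and Ctrl+left-click mobilenetv2 to jump to the official PyTorch MobileNetV2 source, where the download URLs of the pretrained weights are listed:

    model_urls = {
        "mobilenet_v2": "https://download.pytorch.org/models/mobilenet_v2-b0353104.pth",
        "mobilenet_v3_large": "https://download.pytorch.org/models/mobilenet_v3_large-8738ca79.pth",
        "mobilenet_v3_small": "https://download.pytorch.org/models/mobilenet_v3_small-047dcff4.pth",
    }

Download the mobilenet_v2 weights and save them as ./mobilenet_v2.pth, the path that train.py expects, then load them when instantiating the network. The full code is below. (Readers who have seen the VGG post will know that "study" has to be removed from the data path; to save you the trouble I have already removed it. See the VGG post for the reason.)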

    import os
    import sys
    import json

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torchvision import transforms, datasets
    from tqdm import tqdm

    from model_v2 import MobileNetV2
    import torchvision.models.mobilenetv2  # only needed to Ctrl+click into the official source


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("using {} device.".format(device))

        batch_size = 16
        epochs = 5

        data_transform = {
            "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                         transforms.RandomHorizontalFlip(),
                                         transforms.ToTensor(),
                                         transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
            "val": transforms.Compose([transforms.Resize(256),
                                       transforms.CenterCrop(224),
                                       transforms.ToTensor(),
                                       transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

        data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
        image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
        assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
        train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                             transform=data_transform["train"])
        train_num = len(train_dataset)

        # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflowers':3, 'tulips':4}
        flower_list = train_dataset.class_to_idx
        cla_dict = dict((val, key) for key, val in flower_list.items())
        # write dict into json file
        json_str = json.dumps(cla_dict, indent=4)
        with open('class_indices.json', 'w') as json_file:
            json_file.write(json_str)

        nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
        print('Using {} dataloader workers every process'.format(nw))

        train_loader = torch.utils.data.DataLoader(train_dataset,
                                                   batch_size=batch_size, shuffle=True,
                                                   num_workers=nw)

        validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                                transform=data_transform["val"])
        val_num = len(validate_dataset)
        validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                      batch_size=batch_size, shuffle=False,
                                                      num_workers=nw)

        print("using {} images for training, {} images for validation.".format(train_num,
                                                                               val_num))

        # create model
        net = MobileNetV2(num_classes=5)

        # load pretrain weights
        # download url: https://download.pytorch.org/models/mobilenet_v2-b0353104.pth
        model_weight_path = "./mobilenet_v2.pth"
        assert os.path.exists(model_weight_path), "file {} does not exist.".format(model_weight_path)
        pre_weights = torch.load(model_weight_path, map_location='cpu')

        # delete classifier weights: keep only tensors whose size matches this
        # 5-class model, which drops the pretrained 1000-class classifier
        model_dict = net.state_dict()
        pre_dict = {k: v for k, v in pre_weights.items()
                    if k in model_dict and model_dict[k].numel() == v.numel()}
        missing_keys, unexpected_keys = net.load_state_dict(pre_dict, strict=False)

        # freeze features weights
        for param in net.features.parameters():
            param.requires_grad = False

        net.to(device)

        # define loss function
        loss_function = nn.CrossEntropyLoss()

        # construct an optimizer over the trainable (classifier) parameters only
        params = [p for p in net.parameters() if p.requires_grad]
        optimizer = optim.Adam(params, lr=0.0001)

        best_acc = 0.0
        save_path = './MobileNetV2.pth'
        train_steps = len(train_loader)
        for epoch in range(epochs):
            # train
            net.train()
            running_loss = 0.0
            train_bar = tqdm(train_loader, file=sys.stdout)
            for step, data in enumerate(train_bar):
                images, labels = data
                optimizer.zero_grad()
                logits = net(images.to(device))
                loss = loss_function(logits, labels.to(device))
                loss.backward()
                optimizer.step()

                # print statistics
                running_loss += loss.item()

                train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,
                                                                         epochs,
                                                                         loss)

            # validate
            net.eval()
            acc = 0.0  # accumulate accurate number / epoch
            with torch.no_grad():
                val_bar = tqdm(validate_loader, file=sys.stdout)
                for val_data in val_bar:
                    val_images, val_labels = val_data
                    outputs = net(val_images.to(device))
                    # loss = loss_function(outputs, test_labels)
                    predict_y = torch.max(outputs, dim=1)[1]
                    acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

                    val_bar.desc = "valid epoch[{}/{}]".format(epoch + 1,
                                                               epochs)
            val_accurate = acc / val_num
            print('[epoch %d] train_loss: %.3f  val_accuracy: %.3f' %
                  (epoch + 1, running_loss / train_steps, val_accurate))

            # keep the checkpoint with the best validation accuracy
            if val_accurate > best_acc:
                best_acc = val_accurate
                torch.save(net.state_dict(), save_path)

        print('Finished Training')


    if __name__ == '__main__':
        main()
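The weight-filtering step in train.py can be illustrated without PyTorch by comparing parameter shapes stored in plain dictionaries. This is only a sketch of the idea: the function name is hypothetical, the shapes stand in for real tensors, and it compares full shapes where the training script compares element counts.

```python
def filter_compatible(pretrained_shapes, model_shapes):
    """Keep only entries whose key exists in the model with an identical shape."""
    return {k: shape for k, shape in pretrained_shapes.items()
            if model_shapes.get(k) == shape}


# Shapes of a few entries from a 1000-class checkpoint (illustrative subset)
pretrained = {
    "features.0.0.weight": (32, 3, 3, 3),
    "classifier.1.weight": (1000, 1280),
    "classifier.1.bias": (1000,),
}
# The same entries in a model built with num_classes=5
model = {
    "features.0.0.weight": (32, 3, 3, 3),
    "classifier.1.weight": (5, 1280),
    "classifier.1.bias": (5,),
}

kept = filter_compatible(pretrained, model)
print(sorted(kept))
```

Only the feature-extractor entry survives; the classifier entries are dropped because their shapes differ, so the new 5-class classifier keeps its random initialization and is the part that gets trained.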

3.3 predict.py

The prediction script is much the same as in the previous chapters, so I will not go through it in detail.

    import os
    import json

    import torch
    from PIL import Image
    from torchvision import transforms
    import matplotlib.pyplot as plt

    from model_v2 import MobileNetV2


    def main():
        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

        data_transform = transforms.Compose(
            [transforms.Resize(256),
             transforms.CenterCrop(224),
             transforms.ToTensor(),
             transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

        # load image
        img_path = "../tulip.jpg"
        assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
        img = Image.open(img_path)
        plt.imshow(img)
        # [C, H, W] after the transform
        img = data_transform(img)
        # expand batch dimension -> [N, C, H, W]
        img = torch.unsqueeze(img, dim=0)

        # read class_indict
        json_path = './class_indices.json'
        assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)

        with open(json_path, "r") as f:
            class_indict = json.load(f)

        # create model
        model = MobileNetV2(num_classes=5).to(device)
        # load model weights
        model_weight_path = "./MobileNetV2.pth"
        model.load_state_dict(torch.load(model_weight_path, map_location=device))
        model.eval()
        with torch.no_grad():
            # predict class
            output = torch.squeeze(model(img.to(device))).cpu()
            predict = torch.softmax(output, dim=0)
            predict_cla = torch.argmax(predict).numpy()

        print_res = "class: {}   prob: {:.3}".format(class_indict[str(predict_cla)],
                                                     predict[predict_cla].numpy())
        plt.title(print_res)
        for i in range(len(predict)):
            print("class: {:10}   prob: {:.3}".format(class_indict[str(i)],
                                                      predict[i].numpy()))
        plt.show()


    if __name__ == '__main__':
        main()
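The last step of the prediction script, turning the network output into a class name, is just softmax followed by argmax. Here is a minimal standard-library sketch of that step; the logits are made-up numbers standing in for a real model output, and the class mapping is the one from class_indices.json.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


classes = {"0": "daisy", "1": "dandelion", "2": "roses",
           "3": "sunflowers", "4": "tulips"}
logits = [0.2, -1.0, 0.5, 0.1, 3.0]  # hypothetical output for one image

probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)  # argmax
print("class: {}   prob: {:.3f}".format(classes[str(best)], probs[best]))
```

The JSON keys are strings, which is why the index is converted with str() before the lookup, just as in predict.py.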

3.4 class_indices.json

    {
        "0": "daisy",
        "1": "dandelion",
        "2": "roses",
        "3": "sunflowers",
        "4": "tulips"
    }

Building the MobileNetV3 network in PyTorch

The idea is much the same as above. I copy the weight URLs here again; for everything else, see the previous sections.

    model_urls = {
        "mobilenet_v2": "https://download.pytorch.org/models/mobilenet_v2-b0353104.pth",
        "mobilenet_v3_large": "https://download.pytorch.org/models/mobilenet_v3_large-8738ca79.pth",
        "mobilenet_v3_small": "https://download.pytorch.org/models/mobilenet_v3_small-047dcff4.pth",
    }

4.1 model_v3.py

    from typing import Callable, List, Optional

    import torch
    from torch import nn, Tensor
    from torch.nn import functional as F
    from functools import partial


    def _make_divisible(ch, divisor=8, min_ch=None):
        """
        This function is taken from the original tf repo.
        It ensures that all layers have a channel number that is divisible by 8
        It can be seen here:
        https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
        """
        if min_ch is None:
            min_ch = divisor
        new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
        # Make sure that round down does not go down by more than 10%.
        if new_ch < 0.9 * ch:
            new_ch += divisor
        return new_ch


    class ConvBNActivation(nn.Sequential):
        def __init__(self,
                     in_planes: int,
                     out_planes: int,
                     kernel_size: int = 3,
                     stride: int = 1,
                     groups: int = 1,
                     norm_layer: Optional[Callable[..., nn.Module]] = None,
                     activation_layer: Optional[Callable[..., nn.Module]] = None):
            padding = (kernel_size - 1) // 2
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            if activation_layer is None:
                activation_layer = nn.ReLU6
            super(ConvBNActivation, self).__init__(nn.Conv2d(in_channels=in_planes,
                                                             out_channels=out_planes,
                                                             kernel_size=kernel_size,
                                                             stride=stride,
                                                             padding=padding,
                                                             groups=groups,
                                                             bias=False),
                                                   norm_layer(out_planes),
                                                   activation_layer(inplace=True))


    class SqueezeExcitation(nn.Module):
        def __init__(self, input_c: int, squeeze_factor: int = 4):
            super(SqueezeExcitation, self).__init__()
            # the squeezed width is 1/4 of the input (expanded) channels
            squeeze_c = _make_divisible(input_c // squeeze_factor, 8)
            # 1x1 convolutions act as the two fully connected layers
            self.fc1 = nn.Conv2d(input_c, squeeze_c, 1)
            self.fc2 = nn.Conv2d(squeeze_c, input_c, 1)

        def forward(self, x: Tensor) -> Tensor:
            scale = F.adaptive_avg_pool2d(x, output_size=(1, 1))
            scale = self.fc1(scale)
            scale = F.relu(scale, inplace=True)
            scale = self.fc2(scale)
            scale = F.hardsigmoid(scale, inplace=True)
            return scale * x


    class InvertedResidualConfig:
        def __init__(self,
                     input_c: int,
                     kernel: int,
                     expanded_c: int,
                     out_c: int,
                     use_se: bool,
                     activation: str,
                     stride: int,
                     width_multi: float):
            self.input_c = self.adjust_channels(input_c, width_multi)
            self.kernel = kernel
            self.expanded_c = self.adjust_channels(expanded_c, width_multi)
            self.out_c = self.adjust_channels(out_c, width_multi)
            self.use_se = use_se
            self.use_hs = activation == "HS"  # whether using h-swish activation
            self.stride = stride

        @staticmethod
        def adjust_channels(channels: int, width_multi: float):
            return _make_divisible(channels * width_multi, 8)


    class InvertedResidual(nn.Module):
        def __init__(self,
                     cnf: InvertedResidualConfig,
                     norm_layer: Callable[..., nn.Module]):
            super(InvertedResidual, self).__init__()

            if cnf.stride not in [1, 2]:
                raise ValueError("illegal stride value.")

            self.use_res_connect = (cnf.stride == 1 and cnf.input_c == cnf.out_c)

            layers: List[nn.Module] = []
            activation_layer = nn.Hardswish if cnf.use_hs else nn.ReLU

            # expand
            if cnf.expanded_c != cnf.input_c:
                layers.append(ConvBNActivation(cnf.input_c,
                                               cnf.expanded_c,
                                               kernel_size=1,
                                               norm_layer=norm_layer,
                                               activation_layer=activation_layer))

            # depthwise
            layers.append(ConvBNActivation(cnf.expanded_c,
                                           cnf.expanded_c,
                                           kernel_size=cnf.kernel,
                                           stride=cnf.stride,
                                           groups=cnf.expanded_c,
                                           norm_layer=norm_layer,
                                           activation_layer=activation_layer))

            if cnf.use_se:
                layers.append(SqueezeExcitation(cnf.expanded_c))

            # project (linear: nn.Identity means no activation)
            layers.append(ConvBNActivation(cnf.expanded_c,
                                           cnf.out_c,
                                           kernel_size=1,
                                           norm_layer=norm_layer,
                                           activation_layer=nn.Identity))

            self.block = nn.Sequential(*layers)
            self.out_channels = cnf.out_c
            self.is_strided = cnf.stride > 1

        def forward(self, x: Tensor) -> Tensor:
            result = self.block(x)
            if self.use_res_connect:
                result += x

            return result


    class MobileNetV3(nn.Module):
        def __init__(self,
                     inverted_residual_setting: List[InvertedResidualConfig],
                     last_channel: int,
                     num_classes: int = 1000,
                     block: Optional[Callable[..., nn.Module]] = None,
                     norm_layer: Optional[Callable[..., nn.Module]] = None):
            super(MobileNetV3, self).__init__()

            if not inverted_residual_setting:
                raise ValueError("The inverted_residual_setting should not be empty.")
            elif not (isinstance(inverted_residual_setting, List) and
                      all([isinstance(s, InvertedResidualConfig) for s in inverted_residual_setting])):
                raise TypeError("The inverted_residual_setting should be List[InvertedResidualConfig]")

            if block is None:
                block = InvertedResidual

            if norm_layer is None:
                norm_layer = partial(nn.BatchNorm2d, eps=0.001, momentum=0.01)

            layers: List[nn.Module] = []

            # building first layer
            firstconv_output_c = inverted_residual_setting[0].input_c
            layers.append(ConvBNActivation(3,
                                           firstconv_output_c,
                                           kernel_size=3,
                                           stride=2,
                                           norm_layer=norm_layer,
                                           activation_layer=nn.Hardswish))
            # building inverted residual blocks
            for cnf in inverted_residual_setting:
                layers.append(block(cnf, norm_layer))

            # building last several layers
            lastconv_input_c = inverted_residual_setting[-1].out_c
            lastconv_output_c = 6 * lastconv_input_c
            layers.append(ConvBNActivation(lastconv_input_c,
                                           lastconv_output_c,
                                           kernel_size=1,
                                           norm_layer=norm_layer,
                                           activation_layer=nn.Hardswish))
            self.features = nn.Sequential(*layers)
            self.avgpool = nn.AdaptiveAvgPool2d(1)
            self.classifier = nn.Sequential(nn.Linear(lastconv_output_c, last_channel),
                                            nn.Hardswish(inplace=True),
                                            nn.Dropout(p=0.2, inplace=True),
                                            nn.Linear(last_channel, num_classes))

            # initial weights
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode="fan_out")
                    if m.bias is not None:
                        nn.init.zeros_(m.bias)
                elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                    nn.init.ones_(m.weight)
                    nn.init.zeros_(m.bias)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.zeros_(m.bias)

        def _forward_impl(self, x: Tensor) -> Tensor:
            x = self.features(x)
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.classifier(x)

            return x

        def forward(self, x: Tensor) -> Tensor:
            return self._forward_impl(x)


    def mobilenet_v3_large(num_classes: int = 1000,
                           reduced_tail: bool = False) -> MobileNetV3:
        """
        Constructs a large MobileNetV3 architecture from
        "Searching for MobileNetV3" <https://arxiv.org/abs/1905.02244>.
        weights_link:
        https://download.pytorch.org/models/mobilenet_v3_large-8738ca79.pth
        Args:
            num_classes (int): number of classes
            reduced_tail (bool): If True, reduces the channel counts of all feature layers
                between C4 and C5 by 2. It is used to reduce the channel redundancy in the
                backbone for Detection and Segmentation.
        """
        width_multi = 1.0
        bneck_conf = partial(InvertedResidualConfig, width_multi=width_multi)
        adjust_channels = partial(InvertedResidualConfig.adjust_channels, width_multi=width_multi)

        reduce_divider = 2 if reduced_tail else 1

        inverted_residual_setting = [
            # input_c, kernel, expanded_c, out_c, use_se, activation, stride
            bneck_conf(16, 3, 16, 16, False, "RE", 1),
            bneck_conf(16, 3, 64, 24, False, "RE", 2),  # C1
            bneck_conf(24, 3, 72, 24, False, "RE", 1),
            bneck_conf(24, 5, 72, 40, True, "RE", 2),  # C2
            bneck_conf(40, 5, 120, 40, True, "RE", 1),
            bneck_conf(40, 5, 120, 40, True, "RE", 1),
            bneck_conf(40, 3, 240, 80, False, "HS", 2),  # C3
            bneck_conf(80, 3, 200, 80, False, "HS", 1),
            bneck_conf(80, 3, 184, 80, False, "HS", 1),
            bneck_conf(80, 3, 184, 80, False, "HS", 1),
            bneck_conf(80, 3, 480, 112, True, "HS", 1),
            bneck_conf(112, 3, 672, 112, True, "HS", 1),
            bneck_conf(112, 5, 672, 160 // reduce_divider, True, "HS", 2),  # C4
            bneck_conf(160 // reduce_divider, 5, 960 // reduce_divider, 160 // reduce_divider, True, "HS", 1),
            bneck_conf(160 // reduce_divider, 5, 960 // reduce_divider, 160 // reduce_divider, True, "HS", 1),
        ]
        last_channel = adjust_channels(1280 // reduce_divider)  # C5

        return MobileNetV3(inverted_residual_setting=inverted_residual_setting,
                           last_channel=last_channel,
                           num_classes=num_classes)


    def mobilenet_v3_small(num_classes: int = 1000,
                           reduced_tail: bool = False) -> MobileNetV3:
        """
        Constructs a small MobileNetV3 architecture from
        "Searching for MobileNetV3" <https://arxiv.org/abs/1905.02244>.
        weights_link:
        https://download.pytorch.org/models/mobilenet_v3_small-047dcff4.pth
        Args:
            num_classes (int): number of classes
            reduced_tail (bool): If True, reduces the channel counts of all feature layers
                between C4 and C5 by 2. It is used to reduce the channel redundancy in the
                backbone for Detection and Segmentation.
        """
        width_multi = 1.0
        bneck_conf = partial(InvertedResidualConfig, width_multi=width_multi)
        adjust_channels = partial(InvertedResidualConfig.adjust_channels, width_multi=width_multi)

        reduce_divider = 2 if reduced_tail else 1

        inverted_residual_setting = [
            # input_c, kernel, expanded_c, out_c, use_se, activation, stride
            bneck_conf(16, 3, 16, 16, True, "RE", 2),  # C1
            bneck_conf(16, 3, 72, 24, False, "RE", 2),  # C2
            bneck_conf(24, 3, 88, 24, False, "RE", 1),
            bneck_conf(24, 5, 96, 40, True, "HS", 2),  # C3
            bneck_conf(40, 5, 240, 40, True, "HS", 1),
            bneck_conf(40, 5, 240, 40, True, "HS", 1),
            bneck_conf(40, 5, 120, 48, True, "HS", 1),
            bneck_conf(48, 5, 144, 48, True, "HS", 1),
            bneck_conf(48, 5, 288, 96 // reduce_divider, True, "HS", 2),  # C4
            bneck_conf(96 // reduce_divider, 5, 576 // reduce_divider, 96 // reduce_divider, True, "HS", 1),
            bneck_conf(96 // reduce_divider, 5, 576 // reduce_divider, 96 // reduce_divider, True, "HS", 1)
        ]
        last_channel = adjust_channels(1024 // reduce_divider)  # C5

        return MobileNetV3(inverted_residual_setting=inverted_residual_setting,
                           last_channel=last_channel,
                           num_classes=num_classes)

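train.py and predict.py are the same as above. The only thing to watch is that every v2 in them should become v3: build the network with a constructor from model_v3 (for example mobilenet_v3_large) instead of MobileNetV2 from model_v2, and load the matching MobileNetV3 pretrained weights. For example, in train.py, change the weight file name to './MobileNetV3.pth'.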

4.2 class_indices.json

    {
        "0": "daisy",
        "1": "dandelion",
        "2": "roses",
        "3": "sunflowers",
        "4": "tulips"
    }

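That is everything. If anything is unclear, please consult the official documentation and the videos recommended above. If you have read this far, you are clearly a diligent learner; a like and a bookmark would be much appreciated.

Reference link: MobilenetV1、V2、V3系列详解 (MobileNetV1, V2 and V3 explained), blog of Turned_MZ on CSDN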