- 1鸿蒙ArkUI开发-实现增删Tab页签

- 2appium server日志分析_no app sent in, not parsing package/activity

- 323年互联网Java后端面试最全攻略,只花一周时间逼自己快速通关面试_java后端开发应届生如何顺利通过面试和笔试

- 4细说初阶到高阶嵌入式工程师的资质需求

- 5路由的 base 和webpack的publicPath(vite的base)区别_vite publicpath

- 6回溯法的应用- 0-1 背包问题_利用回溯法编程求解0-1背包问题

- 7ASM磁盘管理:从初始化参数到自动化管理的全面解析_oracle asm文件自动管理参数

- 8ArkTs 语法学习

- 9Java——程序的数据类型及基本结构_内容:编程实现以下四个内容要求:定义一个变量num1,值为12;num2,值为12.1

- 10【使用pycocoevalcap中meteor指标时遇到的报错[Errno 32] Broken pipe】_meteor' from 'pycocoevalcap.meteor

hbase日志显示zookeeper连接不稳定KeeperErrorCode = ConnectionLoss_org.apache.hadoop.hbase.shaded.org.apache.zookeepe

赞

踩

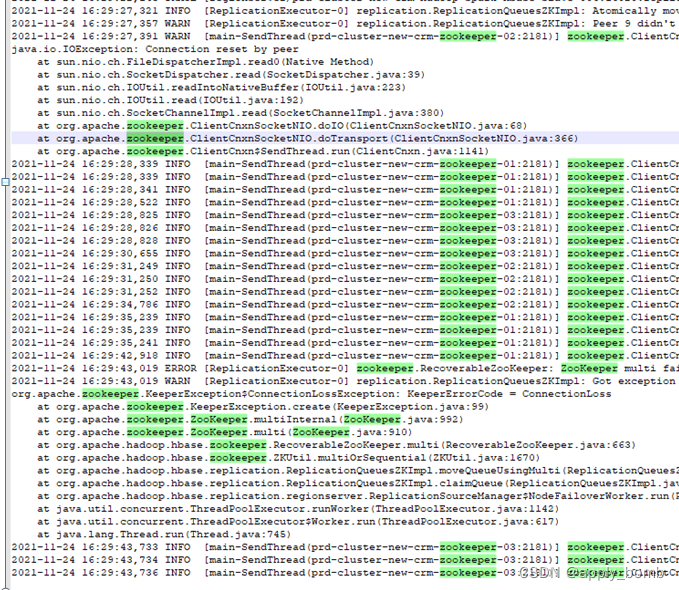

- 2021-11-29 21:49:10,303 WARN [main-SendThread(zookeeper-02:2181)] zookeeper.ClientCnxn: Session 0x7cc057820ec8b2 for server crm-zookeeper-02, unexpected error, closing socket connection and attempting reconnect

- java.io.IOException: 断开的管道

- at sun.nio.ch.FileDispatcherImpl.write0(Native Method)

- at sun.nio.ch.SocketDispatcher.write(SocketDispatcher.java:47)

- at sun.nio.ch.IOUtil.writeFromNativeBuffer(IOUtil.java:93)

- at sun.nio.ch.IOUtil.write(IOUtil.java:65)

- at sun.nio.ch.SocketChannelImpl.write(SocketChannelImpl.java:471)

- at org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:117)

- at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:366)

- at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1141)

- 2021-11-29 21:49:10,404 ERROR [ReplicationExecutor-0] zookeeper.RecoverableZooKeeper: ZooKeeper multi failed after 4 attempts

- 2021-11-29 21:49:10,404 WARN [ReplicationExecutor-0] replication.ReplicationQueuesZKImpl: Got exception in copyQueuesFromRSUsingMulti:

- org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss

- at org.apache.zookeeper.KeeperException.create(KeeperException.java:99)

- at org.apache.zookeeper.ZooKeeper.multiInternal(ZooKeeper.java:992)

- at org.apache.zookeeper.ZooKeeper.multi(ZooKeeper.java:910)

- at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.multi(RecoverableZooKeeper.java:663)

- at org.apache.hadoop.hbase.zookeeper.ZKUtil.multiOrSequential(ZKUtil.java:1670)

- at org.apache.hadoop.hbase.replication.ReplicationQueuesZKImpl.moveQueueUsingMulti(ReplicationQueuesZKImpl.java:291)

- at org.apache.hadoop.hbase.replication.ReplicationQueuesZKImpl.claimQueue(ReplicationQueuesZKImpl.java:210)

- at org.apache.hadoop.hbase.replication.regionserver.ReplicationSourceManager$NodeFailoverWorker.run(ReplicationSourceManager.java:686)

- at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

- at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

- at java.lang.Thread.run(Thread.java:745)

如上,这种日志每过几秒就出现一次,整个hbase日志都在刷ZooKeeper的东西

ZooKeeper连接不稳定,还导致我们生产环境的regionserver时不时就挂掉。

问题分析:

为什么regionserver会挂呢?

原因分析:该reginserver进行主从同步时需要将一个log日志添加到replication queue中,为此需要在zookeeper中添加一个节点,结果操作zookeeper失败

问题:为什么操作zookeeper失败了?

分析:因为zookeeper连接并不稳定,连接经常丢失或者关闭,以下日志平均每分钟出现一次,zookeeper连接断掉后又会自动重试新建连接

问题:为什么zookeeper连接不稳定?

分析:因为reginserver经常向zookeeper发送大小为1.7mb左右的请求,zookeeper认为请求高于阈值(1mb),于是zookeeper把连接关闭了

源码如下

问题:为什么reginserver经常向zookeeper发送大小为1.7mb左右的请求?

分析:因为reginserver正在尝试在zookeeper删除 hbase//replication/rs/new-crm-08,16020,1627407995288这个文件夹下的所有节点,但是由于这个文件夹下节点实在太多,于是批量删除的时候单次请求大小超过了1MB

问题:zookeeper文件夹 hbase//replication/rs/new-crm-08,16020,1627407995288存放的是什么?

分析:是准备主从复制的wal的文件名称。里面的所有wal文件都是7月到9月的。这些wal因为太旧了,在hadoop中早就被运维同事清除了

问题:zookeeper文件夹 hbase//replication/rs/new-crm-08,16020,1627407995288为什么有那么多节点?

分析:疑似主从复制阻塞,导致节点无法被及时删除导致

(后续发现这个znode节点增多疑似是2.02版本hbase的bug)

执行操作:手动命令删除多余的zk节点

结果:hbase恢复正常

相关参考文章:

Hbase2.2.1出现惊人大坑,为什么会出现oldWALs?导致磁盘空间莫名不断增加。 - 程序员大本营

HBase2.0 replication wal znode大量积压问题定位解决-阿里云开发者社区