
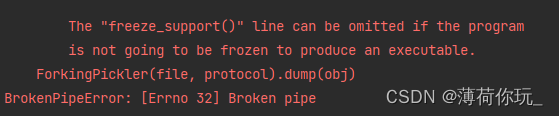

Fixing the PyTorch error BrokenPipeError: [Errno 32] Broken pipe


1. Cause of the error

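This is a multiprocessing problem on Windows involving the `torch.utils.data.DataLoader` class: the `num_workers` parameter is set in a way that does not work there. Windows starts DataLoader worker processes with the spawn method. Spawn launches a fresh Python interpreter that re-imports the main script, so loader code at the top level of the script runs again inside every worker. The workers then fail during start-up, and the main process reports `BrokenPipeError`. For example, the following loaders trigger the error when the script has no `if __name__ == '__main__':` guard: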

```python
from torch.utils.data import DataLoader

...

dataset_train = DataLoader(train_data, batch_size=batch_size, shuffle=True, num_workers=16)
dataset_test = DataLoader(test_data, batch_size=batch_size, shuffle=False, num_workers=16)
```

Official API description of `num_workers`: num_workers (int, optional) – how many subprocesses to use for data loading. 0 means that the data will be loaded in the main process. (default: 0)

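In other words, `num_workers` is the number of worker subprocesses (not threads) used to load the dataset. It must be greater than or equal to 0. With 0, the data is loaded in the main process. With a value greater than 0, that many worker processes load data in parallel to speed things up. The default is 0.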
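The sketch below shows where data is actually loaded in each case. It uses `torch.utils.data.get_worker_info()`, which returns `None` in the main process and a worker-info object with an `id` field inside a DataLoader worker. The dataset class `WhoLoadsMe` and the helper `loader_ids` are hypothetical names for illustration, and the code assumes PyTorch is installed. The main guard matters here: without it, the `num_workers=2` call would hit the very error this article is about on Windows.

```python
from torch.utils.data import DataLoader, Dataset, get_worker_info


class WhoLoadsMe(Dataset):
    """Hypothetical toy dataset: each item records which process loaded it."""

    def __len__(self):
        return 4

    def __getitem__(self, idx):
        info = get_worker_info()
        # None means we are in the main process; otherwise info.id is the worker index
        return -1 if info is None else info.id


def loader_ids(num_workers):
    """Return the sorted set of loader ids (-1 = main process) that produced batches."""
    loader = DataLoader(WhoLoadsMe(), batch_size=1, num_workers=num_workers)
    # With batch_size=1 each batch is a one-element tensor, so .item() gives the id
    return sorted({int(batch.item()) for batch in loader})


if __name__ == '__main__':
    print(loader_ids(0))  # [-1]: every item was loaded in the main process
    print(loader_ids(2))  # worker ids, typically [0, 1]
```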

2. Solutions

- Set `num_workers` to 0:

```python
from torch.utils.data import DataLoader

...

dataset_train = DataLoader(train_data, batch_size=batch_size, shuffle=True, num_workers=0)
dataset_test = DataLoader(test_data, batch_size=batch_size, shuffle=False, num_workers=0)
```
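The error is specific to Windows, so one option is to apply this fix only there and keep worker processes on other platforms. The following is a minimal sketch. `safe_num_workers` is a hypothetical helper, not a PyTorch API. It relies on `sys.platform`, which is `'win32'` on Windows.

```python
import sys


def safe_num_workers(requested, platform=sys.platform):
    """Hypothetical helper: use num_workers=0 on Windows, keep `requested` elsewhere."""
    if platform == "win32":
        return 0  # load data in the main process, so no worker processes are spawned
    return requested


# Possible use with the loaders above (train_data and batch_size as in the original):
# dataset_train = DataLoader(train_data, batch_size=batch_size, shuffle=True,
#                            num_workers=safe_num_workers(16))
print(safe_num_workers(16, "win32"))  # 0
print(safe_num_workers(16, "linux"))  # 16
```

The trade-off is that Windows users lose parallel loading, so the main-guard solution is usually preferable when loading speed matters.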

- If `num_workers` is greater than 0, put the code that runs the loaders under `if __name__ == '__main__':` and the error no longer occurs:

```python
from torch.utils.data import DataLoader

...

if __name__ == '__main__':
    dataset_train = DataLoader(train_data, batch_size=batch_size, shuffle=True, num_workers=16)
    dataset_test = DataLoader(test_data, batch_size=batch_size, shuffle=False, num_workers=16)
    # Worker processes start when the loaders are iterated, so the training
    # loop that consumes them must also run inside this guard.
```
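Here is why the guard helps. When a worker is started with the spawn method (the only start method available on Windows), the child process re-runs the main script under the module name `__mp_main__` instead of `__main__`. As a result, only code outside the guard runs again. The sketch below imitates this with the standard library's `runpy.run_path`. The script text `SCRIPT` and the helper `executed_parts` are hypothetical and merely stand in for a real training script.

```python
import os
import runpy
import tempfile

# Hypothetical training script: one statement at module level, one under the main guard
SCRIPT = '''
events = ["module level"]  # runs on every import, including inside spawned workers
if __name__ == "__main__":
    events.append("main guard")  # where the DataLoaders would be created and iterated
'''


def executed_parts(run_name):
    """Run SCRIPT with the given __name__ and report which parts executed."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "train.py")
        with open(path, "w", encoding="utf-8") as f:
            f.write(SCRIPT)
        # run_path returns the executed module's globals
        return runpy.run_path(path, run_name=run_name)["events"]


print(executed_parts("__main__"))     # ['module level', 'main guard']  (you ran the script)
print(executed_parts("__mp_main__"))  # ['module level']  (a spawned worker re-importing it)
```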

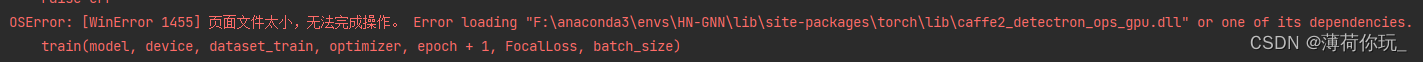

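- If the error persists after moving the code into the main guard, `num_workers` is usually too large, so lower it. Each worker process loads its own copy of the torch libraries, and too many workers can exhaust the Windows paging file, producing an error like this: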

```text
OSError: [WinError 1455] The paging file is too small for this operation to complete. Error loading "F:\anaconda3\envs\xxx\lib\site-packages\torch\lib\caffe2_detectron_ops_gpu.dll" or one of its dependencies.
train(model, device, dataset_train, optimizer, epoch + 1, FocalLoss, batch_size)
```

Tips for setting `num_workers`:

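For small datasets (fewer than about 20,000 samples), leave `num_workers` at its default. Starting worker processes has overhead, so using them can actually be slower than loading in the main process.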

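For large datasets, workers are worth using. Setting `num_workers` to roughly the number of CPU threads (±1) usually works best. The following code times one pass over MNIST for each value, so you can find the best `num_workers` on your machine: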

```python
import time

import torch.utils.data as d
import torchvision
import torchvision.transforms as transforms

if __name__ == '__main__':
    BATCH_SIZE = 100
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])
    # Download MNIST into the ./mnist directory
    train_set = torchvision.datasets.MNIST('./mnist', download=True, train=True, transform=transform)

    # Time one full pass over the training set for each num_workers value
    for num_workers in range(20):
        train_loader = d.DataLoader(train_set, batch_size=BATCH_SIZE, shuffle=True, num_workers=num_workers)
        start = time.time()
        for epoch in range(1):
            for step, (batch_x, batch_y) in enumerate(train_loader):
                pass  # training would happen here; only data loading is timed
        end = time.time()
        print('num_workers is {} and it took {} seconds'.format(num_workers, end - start))
```
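The two rules of thumb above can be expressed as a small helper. This is a sketch of the author's heuristic, not a PyTorch API. `suggest_num_workers` is a hypothetical name, the 20,000-sample threshold comes from the tip above, and `os.cpu_count()` stands in for the number of CPU threads. The ±1 fine-tuning is left to the benchmark.

```python
import os


def suggest_num_workers(dataset_size, cpu_threads=None, small_threshold=20_000):
    """Hypothetical helper encoding the rule of thumb above.

    - small datasets (< small_threshold samples): 0, i.e. load in the main process
    - larger datasets: roughly the number of CPU threads
    """
    if dataset_size < small_threshold:
        return 0
    if cpu_threads is None:
        cpu_threads = os.cpu_count() or 1  # os.cpu_count() may return None
    return cpu_threads


print(suggest_num_workers(10_000, cpu_threads=8))  # 0
print(suggest_num_workers(60_000, cpu_threads=8))  # 8
```

Treat the result as a starting point and confirm it with the benchmark. The best value also depends on disk speed and on how expensive each sample's preprocessing is.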

References:

https://blog.csdn.net/Ginomica_xyx/article/details/113745596

https://blog.csdn.net/qq_41196472/article/details/106393994