
Spring AI Project: A Chatbot with the Ollama LLM Tool

Author: 木道寻08 | 2024-07-12 18:24:54


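Notes:

(1) Use the Ollama tool to install the Qwen (千问) model or the llama3 model.

(2) Spring AI is a new Spring dependency. It requires JDK 17 or later and Spring Boot 3.2 or later.

Developing the Spring AI project [chatbot]

1. Add the dependencies to pom.xml:

(1) First, create a Spring Boot web project: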

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>

(2) Add the Spring AI LLM dependency, spring-ai-ollama:

    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
        <version>1.0.0-SNAPSHOT</version>
    </dependency>

    <!-- Maven could not download the Spring AI Ollama dependency at the time of writing,
         so the Spring snapshot repository is added -->
    <repositories>
        <repository>
            <id>spring-snapshots</id>
            <name>Spring Snapshots</name>
            <url>https://repo.spring.io/snapshot</url>
            <releases>
                <enabled>false</enabled>
            </releases>
        </repository>
    </repositories>

Summary: the complete pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>3.2.4</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <groupId>com.new3s</groupId>
        <artifactId>springAiTest428</artifactId>
        <version>1.0-SNAPSHOT</version>
        <name>springAiTest428</name>
        <description>springAiTest428</description>

        <properties>
            <java.version>22</java.version>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>

            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
                <version>1.0.0-SNAPSHOT</version>
            </dependency>

        </dependencies>

        <repositories>
            <repository>
                <id>spring-snapshots</id>
                <name>Spring Snapshots</name>
                <url>https://repo.spring.io/snapshot</url>
                <releases>
                    <enabled>false</enabled>
                </releases>
            </repository>
        </repositories>
    </project>

2. Create the application (startup) class

    package com.company;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class OllamaApplication {

        public static void main(String[] args) {
            // Start the Spring Boot application, then print a startup message
            SpringApplication.run(OllamaApplication.class, args);
            System.out.println("Hi, Spring AI Ollama!");
        }

    }

3. Create the controllers

1> Controller for Qwen

    package com.company.controller;

    import jakarta.annotation.Resource;
    import org.springframework.ai.chat.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.ollama.OllamaChatClient;
    import org.springframework.ai.ollama.api.OllamaOptions;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class QianWenController {

        @Resource
        private OllamaChatClient ollamaChatClient;

        // Simple call: uses the model from the configuration file
        @RequestMapping(value = "/qianwen-ai")
        public Object ollama(@RequestParam(value = "msg") String msg) {
            String called = ollamaChatClient.call(msg);
            System.out.println(called);
            return called;
        }

        // Call with per-request options
        @RequestMapping(value = "/qianwen-ai2")
        public Object ollama2(@RequestParam(value = "msg") String msg) {
            ChatResponse chatResponse = ollamaChatClient.call(new Prompt(msg, OllamaOptions.create()
                    // Model to use: qwen:0.5b-chat
                    .withModel("qwen:0.5b-chat")
                    // Temperature: higher values give more varied, less predictable output;
                    // lower values give more focused, more deterministic output
                    .withTemperature(0.4F)
            ));

            // To get only the text: chatResponse.getResult().getOutput().getContent()
            System.out.println(chatResponse.getResult());
            return chatResponse.getResult();
        }

    }
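
The temperature comment in the controller is easier to follow with numbers. The sketch below applies the standard temperature-scaled softmax to a made-up set of scores. The scores, the class name `TemperatureDemo`, and the method name are illustrative assumptions, and the source does not describe how Ollama samples internally; the point is only that a low temperature such as 0.4 concentrates probability on the top candidate, while a higher one spreads it out.

```java
import java.util.Arrays;

public class TemperatureDemo {

    /** Softmax over logits divided by the temperature (hypothetical helper). */
    public static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            throw new IllegalArgumentException("temperature must be positive");
        }
        // Subtract the maximum for numerical stability
        double max = Arrays.stream(logits).max().orElseThrow();
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = Math.exp((logits[i] - max) / temperature);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    public static void main(String[] args) {
        // Made-up scores for three candidate tokens
        double[] logits = {2.0, 1.0, 0.5};
        System.out.println("T=0.4: " + Arrays.toString(softmax(logits, 0.4)));
        System.out.println("T=1.5: " + Arrays.toString(softmax(logits, 1.5)));
    }
}
```

With these scores the top candidate receives a clearly larger share at T=0.4 than at T=1.5, which is why lower temperatures tend to produce more repeatable answers.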

2> Controller for llama3

    package com.company.controller;

    import jakarta.annotation.Resource;
    import org.springframework.ai.chat.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.ollama.OllamaChatClient;
    import org.springframework.ai.ollama.api.OllamaOptions;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class LlamaController {

        @Resource
        private OllamaChatClient ollamaChatClient;

        // Simple call: uses the model from the configuration file
        @RequestMapping(value = "/llama-ai")
        public Object ollama(@RequestParam(value = "msg") String msg) {
            String called = ollamaChatClient.call(msg);
            System.out.println(called);
            return called;
        }

        // Call with per-request options
        @RequestMapping(value = "/llama-ai2")
        public Object ollama2(@RequestParam(value = "msg") String msg) {
            ChatResponse chatResponse = ollamaChatClient.call(new Prompt(msg, OllamaOptions.create()
                    // Model to use: llama3
                    .withModel("llama3:8b")
                    // Temperature: higher values give more varied, less predictable output;
                    // lower values give more focused, more deterministic output
                    .withTemperature(0.4F)
            ));

            System.out.println(chatResponse.getResult().getOutput());
            return chatResponse.getResult().getOutput();
        }

    }

4. Resource configuration [Note: Ollama's default port is 11434]

    spring:
      ai:
        ollama:
          base-url: http://localhost:11434
          chat:
            options:
              # A model specified in code takes precedence; if the code does not
              # specify one, the model configured here is used
              # model: qwen:0.5b-chat
              model: llama3:8b
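
The precedence rule in the configuration comment can be written as a small fallback function. This is a minimal sketch of that rule only, not Spring AI's actual option-merging code; the class `ModelResolution` and the method `resolveModel` are hypothetical names introduced for illustration.

```java
import java.util.Optional;

public class ModelResolution {

    /**
     * Hypothetical helper: returns the model named in the request options when
     * present, otherwise falls back to the model from the configuration file.
     */
    public static String resolveModel(String requestModel, String configuredModel) {
        return Optional.ofNullable(requestModel)
                .filter(m -> !m.isBlank())
                .orElse(configuredModel);
    }

    public static void main(String[] args) {
        String configured = "llama3:8b"; // value of spring.ai.ollama.chat.options.model
        // Code specifies a model: that model is used
        System.out.println(resolveModel("qwen:0.5b-chat", configured));
        // Code specifies nothing: the configured model is used
        System.out.println(resolveModel(null, configured));
    }
}
```

This matches the controllers above: the `/qianwen-ai2` and `/llama-ai2` endpoints name a model explicitly, while `/qianwen-ai` and `/llama-ai` rely on the configured one.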

5. Results of running the models

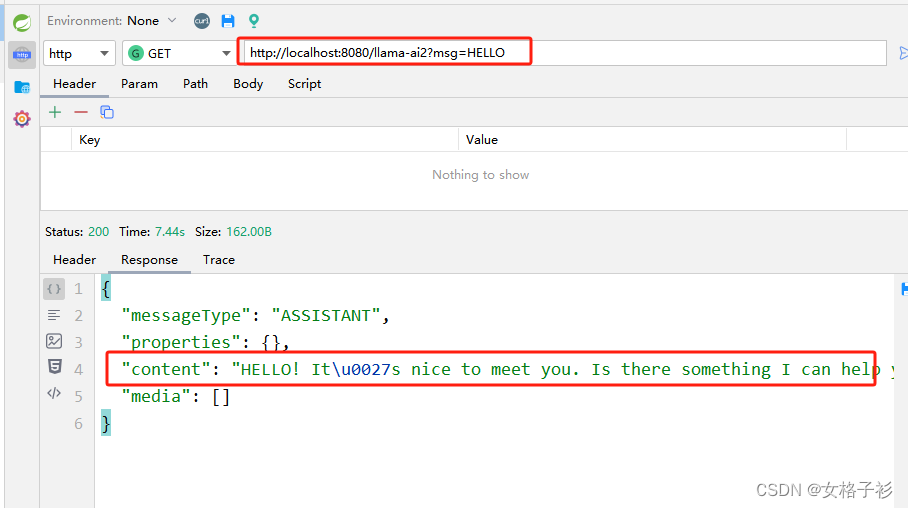

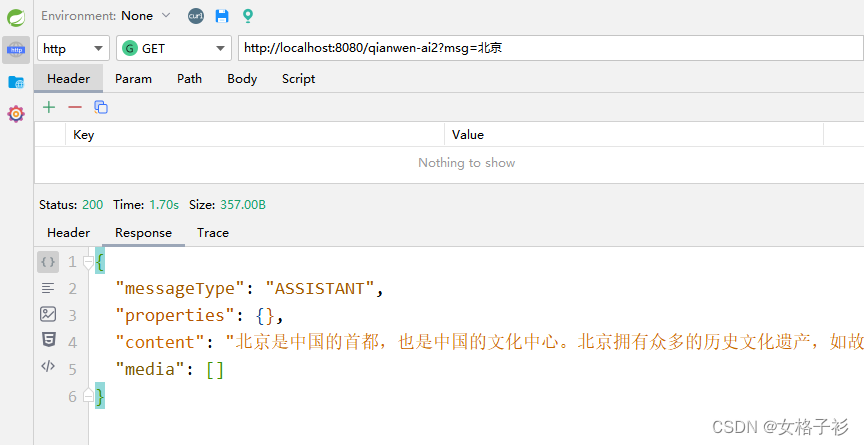

1> Calling the chat endpoint of the llama3 model

2> Calling the chat endpoint of the Qwen model
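
For calling these endpoints from code rather than a browser, the following is a minimal sketch using the JDK's built-in HTTP client. It assumes the application listens on Spring Boot's default port 8080, since the article sets no `server.port`; the class `ChatEndpointClient` and its methods are hypothetical names. The `msg` value has to be URL-encoded, which matters for Chinese prompts.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class ChatEndpointClient {

    /** Builds the request URI, URL-encoding the msg query parameter as UTF-8. */
    public static URI buildUri(String baseUrl, String path, String msg) {
        String encoded = URLEncoder.encode(msg, StandardCharsets.UTF_8);
        return URI.create(baseUrl + path + "?msg=" + encoded);
    }

    /** Sends a GET request and returns the response body (needs the app to be running). */
    public static String ask(URI uri) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) {
        String base = "http://localhost:8080"; // assumed default Spring Boot port
        // Only prints the URIs; pass one to ask(...) once the application is running
        System.out.println(buildUri(base, "/llama-ai", "hello world"));
        System.out.println(buildUri(base, "/qianwen-ai", "\u4F60\u597D")); // "hello" in Chinese
    }
}
```

`main` deliberately performs no network call, so it runs without the application or Ollama being up.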
