Practice: Text Classification with a Bidirectional LSTM Model


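Movie reviews can carry rich sentiment, such as liking or disliking a film. Sentiment analysis is a text classification problem: given a piece of text, decide whether the sentiment it expresses is positive or negative.

This practice uses the IMDB movie review dataset and a bidirectional LSTM to perform sentiment analysis on movie reviews.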

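1 Data Processing

The IMDB movie review dataset is a classic binary classification dataset of movie reviews. IMDB selects positive and negative reviews by rating: a review with a rating of 7 or higher is treated as positive, and a review with a rating of 4 or lower is treated as negative. The dataset contains a training set and a test set of 25,000 reviews each. Every record is a real user review of a movie together with the reviewer's sentiment toward that movie. Its directory structure is as follows:

An LSTM cannot process raw text directly. Each word first has to be turned into a vector representation, called a word embedding. To make this conversion efficient, each word is usually mapped to a numeric ID first, and the IDs are then converted to vectors with the embedding method introduced earlier. This requires a vocabulary that maps every word in the text to its index in the vocabulary. A special token [UNK] is also defined to represent unknown words: any word that is not in the vocabulary is treated as [UNK].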
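As a minimal sketch of the word-to-ID step described above, the snippet below uses a tiny hand-written vocabulary. The vocabulary contents and the helper name `encode` are assumptions made for illustration; the real vocabulary is loaded from a file later in this post.

```python
# Toy vocabulary for illustration only; the real one is loaded from a vocab file.
word2id = {"[PAD]": 0, "[UNK]": 1, "this": 2, "movie": 3, "was": 4, "great": 5}


def encode(sentence, word2id):
    """Map each whitespace-separated word to its ID, falling back to [UNK]."""
    unk_id = word2id["[UNK]"]
    return [word2id.get(word, unk_id) for word in sentence.lower().split()]


ids = encode("This movie was craptacular", word2id)
print(ids)  # [2, 3, 4, 1]
```

The word "craptacular" is not in the toy vocabulary, so it is mapped to the ID of [UNK].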

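First, here is my project directory:

The imports go in lstm.py (lstm.py is the main file of this experiment; extend_1.py and extend2.py are the extension exercises from the lab book). Having suffered through a half-hour LSTM run last time, I changed the code to run on the GPU this time.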

- import os

- import torch

- import torch.nn as nn

- from torch.utils.data import Dataset

- from utils.data import load_vocab

- from functools import partial

- import time

- import random

- import numpy as np

- from nndl import Accuracy, RunnerV3

-

- device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

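1.1 Loading the Data

The original training and test sets contain 25,000 records each. In this section the original test set is split evenly into two halves that serve as the validation set and the test set, stored under ./dataset. The following code loads the data into memory: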

- def load_imdb_data(path):

- assert os.path.exists(path)

- trainset, devset, testset = [], [], []

- with open(os.path.join(path, "train.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- trainset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "dev.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- devset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "test.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- testset.append((sentence, sentence_label))

-

- return trainset, devset, testset

-

-

- # 加载IMDB数据集

- train_data, dev_data, test_data = load_imdb_data("./dataset/")

- # # 打印一下加载后的数据样式

- print(train_data[4])

Output:

From the output, each loaded sample consists of two parts: the text string and the label.

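1.2 Building the Dataset Class

First, we build an IMDBDataset class to manage the data. It inherits from torch.utils.data.Dataset.

Because the input is a text sequence, each word must first be converted to its ID in the vocabulary. The IDs are then used to look up the corresponding word vectors, which are fed into the model. The words_to_id method of IMDBDataset implements the first step: it uses the vocabulary word2id_dict to map every word in a sequence to its numeric ID, ready for conversion to word vectors. A word that is not in the vocabulary is replaced with [UNK] by default. words_to_id performs the conversion with a hash table, as illustrated in Figure 6.14.

The implementation is as follows: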

- class IMDBDataset(Dataset):

- def __init__(self, examples, word2id_dict):

- super(IMDBDataset, self).__init__()

- # 词典,用于将单词转为字典索引的数字

- self.word2id_dict = word2id_dict

- # 加载后的数据集

- self.examples = self.words_to_id(examples)

-

- def words_to_id(self, examples):

- tmp_examples = []

- for idx, example in enumerate(examples):

- seq, label = example

- # 将单词映射为字典索引的ID, 对于词典中没有的单词用[UNK]对应的ID进行替代

- seq = [self.word2id_dict.get(word, self.word2id_dict['[UNK]']) for word in seq.split(" ")]

- label = int(label)

- tmp_examples.append([seq, label])

- return tmp_examples

-

- def __getitem__(self, idx):

- seq, label = self.examples[idx]

- return seq, label

-

- def __len__(self):

- return len(self.examples)

-

-

- # 加载词表

- word2id_dict = load_vocab("./dataset/vocab.txt")

-

- # 实例化Dataset

- train_set = IMDBDataset(train_data, word2id_dict)

- dev_set = IMDBDataset(dev_data, word2id_dict)

- test_set = IMDBDataset(test_data, word2id_dict)

-

- print('训练集样本数:', len(train_set))

- print('样本示例:', train_set[4])

The load_vocab function lives in data.py under the utils folder:

- import os

-

-

- def load_vocab(path):

- assert os.path.exists(path)

- words = []

- with open(path, "r", encoding="utf-8") as f:

- words = f.readlines()

- words = [word.strip() for word in words if word.strip()]

- word2id = dict(zip(words, range(len(words))))

- return word2id

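Output:

1.3 Wrapping the DataLoader

After building the Dataset class, we construct the corresponding DataLoader to iterate over mini-batches. Unlike the DataLoader in earlier chapters, this one needs two extra features:

- Length limiting: sequence length must be kept within a certain range so that overly long samples do not hurt overall training.

- Length padding: neural network models usually require all sequences in a batch to have the same length, but batching typically puts sequences of different lengths together, so the sequences need to be padded.

For length limiting, we use the max_seq_len parameter to truncate texts that are too long.

For length padding, we first find the maximum sequence length in the batch, then fill shorter sequences with a placeholder [PAD] that carries no meaning until they reach that length, so that the batch becomes regular. For example, given two sentences:

- Sentence 1: This movie was craptacular.

- Sentence 2: I got stuck in traffic on the way to the theater.

After padding, the two sentences become:

- Sentence 1: This movie was craptacular [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD]

- Sentence 2: I got stuck in traffic on the way to the theater

Concretely, this section defines a collate_fn function that truncates and pads the data. It can be passed to the DataLoader as a callback: before returning a batch, the DataLoader calls it to process the data and returns the processed sequences and their labels.

In addition, after short sequences are padded with [PAD], the [PAD] positions should not be used in the classification task, so a variable seq_lens records the true, non-[PAD] length of each sequence. seq_lens is computed and returned by collate_fn while it processes the batch. Note that RunnerV3 by default unpacks each batch into input data and labels, so the sequences and their lengths are bundled into a tuple and returned as the input data, which RunnerV3 can then parse.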

- def collate_fn(batch_data, pad_val=0, max_seq_len=256):

- seqs, seq_lens, labels = [], [], []

- max_len = 0

- for example in batch_data:

- seq, label = example

- # 对数据序列进行截断

- seq = seq[:max_seq_len]

- # 对数据截断并保存于seqs中

- seqs.append(seq)

- seq_lens.append(len(seq))

- labels.append(label)

- # 保存序列最大长度

- max_len = max(max_len, len(seq))

- # 对数据序列进行填充至最大长度

- for i in range(len(seqs)):

- seqs[i] = seqs[i] + [pad_val] * (max_len - len(seqs[i]))

-

- # return (torch.tensor(seqs), torch.tensor(seq_lens)), torch.tensor(labels)

- return (torch.tensor(seqs).to(device), torch.tensor(seq_lens)), torch.tensor(labels).to(device)

这里需要将返回的处理后的seqs和labels在后面要作为参数训练,本次训练在gpu上进行,所以要将二者返回时加上.to(device)

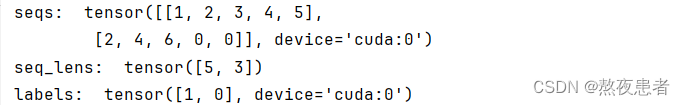

下面我们自定义一批数据来测试一下collate_fn函数的功能,这里假定一下max_seq_len为5,然后定义序列长度分别为6和3的两条数据,传入collate_fn函数中。

- max_seq_len = 5

- batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]

- (seqs, seq_lens), labels = collate_fn(batch_data, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- print("seqs: ", seqs)

- print("seq_lens: ", seq_lens)

- print("labels: ", labels)

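Output:

As shown, the sequence of length 6 is truncated to 5, the sequence of length 3 is padded to 5, and the non-`[PAD]` lengths are returned as well.

Next we pass collate_fn to the DataLoader as a callback so that each batch is processed by collate_fn before being returned. Note that functools.partial is used to fix the keyword arguments of collate_fn and return a new function object, which is then passed as collate_fn.

When iterating over data in batches, the last batch may contain fewer samples than batch_size. The drop_last parameter controls whether that last batch is discarded.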
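To make the effect of drop_last concrete, here is a small arithmetic sketch for the sizes used in this post (25,000 training samples, batch size 128). The helper names `num_batches` and `last_batch_size` are made up for this illustration and are not part of PyTorch.

```python
import math


def num_batches(num_samples, batch_size, drop_last):
    """Number of batches a loader yields for a dataset of num_samples items."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


def last_batch_size(num_samples, batch_size):
    """Size of the final batch when drop_last is False."""
    remainder = num_samples % batch_size
    return remainder if remainder else batch_size


print(num_batches(25000, 128, drop_last=False))  # 196
print(num_batches(25000, 128, drop_last=True))   # 195
print(last_batch_size(25000, 128))               # 40
```

With drop_last=False, as in the loaders below, the final batch of 40 samples is kept, so no training data is lost.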

- max_seq_len = 256

- batch_size = 128

- collate_fn = partial(collate_fn, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size,

- shuffle=True, drop_last=False, collate_fn=collate_fn)

- dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

- test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

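2 Model Construction

The overall structure of the model in this practice is shown in the figure.

It consists of the following parts:

(1) Embedding layer: vectorizes the input ID sequence, i.e. maps each ID to a vector. This is done directly with the PyTorch API torch.nn.Embedding.

> class torch.nn.Embedding(num_embeddings, embedding_dim, padding_idx=None, max_norm=None, norm_type=2.0, scale_grad_by_freq=False, sparse=False, ...)

Two parameters of this API matter most: num_embeddings is the number of embeddings (the size of the embedding dictionary), and embedding_dim is the dimension of each embedding vector.

torch.nn.Embedding builds a two-dimensional embedding matrix of shape [num_embeddings, embedding_dim]. The padding_idx parameter is the vocabulary ID of the placeholder [PAD] used for padding: the embedding vector at that ID is initialized to zeros and receives no gradient updates during training. For simplicity, [PAD] is usually placed first in the vocabulary, so its ID is 0.

(2) Bidirectional LSTM layer: takes the vector sequence and updates the recurrent units in both the forward and the backward direction. We use the PyTorch API torch.nn.LSTM directly. Setting bidirectional=True when defining the LSTM gives a bidirectional LSTM.

> Question: a bidirectional LSTM needs padded sequences. When the backward LSTM is computed, does the placeholder [PAD] affect the gradient updates of the LSTM parameters? If so, how can the effect be removed?

Placeholders carry no useful information, yet they take part in both the forward and the backward pass, which can distort the gradient estimates and updates. The effect is strongest for the backward direction, which reads the [PAD] positions before it reaches any real token, and for long padded sequences that consist mostly of placeholders.

To remove the influence of placeholders on the LSTM gradients, a padding mask can mark the placeholder positions so that they are excluded from the computation. A padding mask is a binary tensor with the same shape as the input sequence, with 0 at placeholder positions and 1 elsewhere.

When computing the loss, the mask is multiplied element-wise with the per-position terms, which zeroes the gradient at placeholder positions. Only the real positions then take part in gradient computation and parameter updates.

Note: torch.nn.LSTM does not take the true sequence lengths as an argument. Instead, the padded batch is packed with torch.nn.utils.rnn.pack_padded_sequence using the true lengths. The LSTM then processes only the real positions, and after unpacking with pad_packed_sequence the [PAD] positions hold zero vectors.

(3) Pooling layer: averages the hidden states of the bidirectional LSTM over all positions to obtain a representation of the whole sentence.

(4) Output layer: outputs the classification scores (logits). This is done with torch.nn.Linear.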
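The packing behaviour mentioned in part (2) can be checked with a small experiment. This is only a sketch with made-up tensor sizes, not the model used in this post: it feeds the same batch twice, once with different values written into the padded positions, and compares the outputs.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

torch.manual_seed(0)

# Two sequences padded to length 4; the second has only 2 real steps.
batch = torch.randn(2, 4, 3)      # (batch, seq_len, input_size)
lengths = torch.tensor([4, 2])    # true lengths, kept on the CPU

lstm = nn.LSTM(input_size=3, hidden_size=5, batch_first=True, bidirectional=True)


def run(inputs):
    """Pack with the true lengths, run the LSTM, and unpack to a padded tensor."""
    packed = pack_padded_sequence(inputs, lengths, batch_first=True, enforce_sorted=False)
    packed_out, _ = lstm(packed)
    out, out_lengths = pad_packed_sequence(packed_out, batch_first=True)
    return out, out_lengths


out, out_lengths = run(batch)

# Overwrite the padded steps of the second sequence with large values.
noisy = batch.clone()
noisy[1, 2:] = 100.0
out_noisy, _ = run(noisy)

print(out.shape)                        # torch.Size([2, 4, 10])
print(bool((out[1, 2:] == 0).all()))    # padded steps come back as zero vectors
print(torch.allclose(out, out_noisy))   # values at padded steps do not matter
```

Because the padded steps never enter the packed sequence, they influence neither the forward nor the backward direction, and therefore contribute nothing to the gradients.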

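Pooling Layer Operator

The pooling operator averages the hidden states of the bidirectional LSTM over all positions as the representation of the whole sentence. We implement an AveragePooling operator for this: it first builds a mask matrix from the sequence-length vector to mask out the vectors at [PAD] positions, then sums the vectors of each sequence and divides by the true length. The implementation is as follows: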

- class AveragePooling(nn.Module):

- def __init__(self):

- super(AveragePooling, self).__init__()

-

- def forward(self, sequence_output, sequence_length):

- # 假设 sequence_length 是一个 PyTorch 张量

- sequence_length = sequence_length.unsqueeze(-1).to(torch.float32)

- # 根据sequence_length生成mask矩阵,用于对Padding位置的信息进行mask

- max_len = sequence_output.shape[1]

-

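- # Build the mask on the same device as sequence_output instead of hard-coding 'cuda'

- device = sequence_output.device

- mask = torch.arange(max_len, device=device) < sequence_length.to(device)

- mask = mask.to(torch.float32).unsqueeze(-1)

- # Mask out the padding positions in the sequence

- sequence_output = torch.multiply(sequence_output, mask)

- # Average the vectors over the true sequence length

- batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length.to(device))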

- return batch_mean_hidden
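To see what the masked average computes, here is a plain-Python sketch on nested lists. The function name `masked_mean` and the numbers are made up for illustration. The logic is the same as in AveragePooling above: zero out the [PAD] positions, sum, and divide by the true length.

```python
def masked_mean(sequence_output, lengths):
    """Average each sequence's vectors over its first `length` positions only."""
    pooled = []
    for vectors, length in zip(sequence_output, lengths):
        dim = len(vectors[0])
        total = [0.0] * dim
        for position, vector in enumerate(vectors):
            mask = 1.0 if position < length else 0.0  # 0 at [PAD] positions
            for d in range(dim):
                total[d] += mask * vector[d]
        pooled.append([value / length for value in total])
    return pooled


batch = [
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],   # true length 3
    [[2.0, 2.0], [9.0, 9.0], [9.0, 9.0]],   # true length 1, the rest is padding
]
print(masked_mean(batch, [3, 1]))  # [[3.0, 4.0], [2.0, 2.0]]
```

Dividing by the true length rather than the padded length keeps short sentences from being scaled down by their padding.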

Putting the Model Together

The operators above are combined into the final classification model. The implementation is as follows:

- class Model_BiLSTM_FC(nn.Module):

- def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):

- super(Model_BiLSTM_FC, self).__init__()

- # 词典大小

- self.num_embeddings = num_embeddings

- # 单词向量的维度

- self.input_size = input_size

- # LSTM隐藏单元数量

- self.hidden_size = hidden_size

- # 情感分类类别数量

- self.num_classes = num_classes

- # 实例化嵌入层

- self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)

- # 实例化LSTM层

- self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True, bidirectional=True)

- # 实例化聚合层

- self.average_layer = AveragePooling()

- # 实例化输出层

- self.output_layer = nn.Linear(hidden_size * 2, num_classes)

-

- def forward(self, inputs):

- # 对模型输入拆分为序列数据和mask

- input_ids, sequence_length = inputs

- # 获取词向量

- inputs_emb = self.embedding_layer(input_ids)

-

- packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,

- enforce_sorted=False)

- # 使用lstm处理数据

- packed_output, _ = self.lstm_layer(packed_input)

- # 解包输出

- sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

- # 使用聚合层聚合sequence_output

- batch_mean_hidden = self.average_layer(sequence_output, sequence_length)

- # 输出文本分类logits

- logits = self.output_layer(batch_mean_hidden)

- return logits

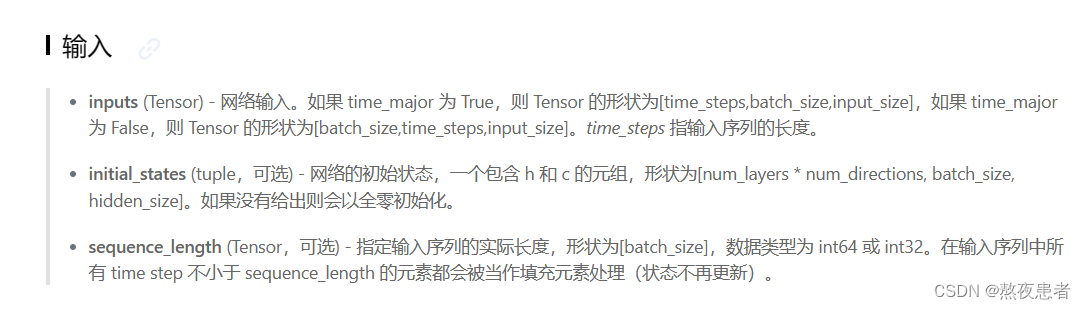

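Unlike the code in the lab book, I found that PyTorch's nn.LSTM, unlike paddle.nn.LSTM, has no parameter for variable-length sequences, so the batch has to be packed into a variable-length sequence by hand (the screenshot shows the input parameters of paddle.nn.LSTM). To port this to PyTorch we rely on torch.nn.utils.rnn.pack_padded_sequence and pad_packed_sequence to pack and unpack the data and reach the same result. There are other ways, but I find this one the simplest.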

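3 Model Training

This section trains the model with RunnerV3. We first set the training hyperparameters, then define the model, optimizer, loss function, and evaluation metric. The loss function is torch.nn.CrossEntropyLoss, which applies softmax to the predictions internally, so the logits from the model's output layer do not need to be normalized with softmax. Once these Runner components are defined, training can start. The implementation is as follows.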
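The point about softmax can be illustrated for a single sample with plain Python. This is a sketch of the underlying formula (negative log of the softmax probability of the true class), not the library implementation. The function names are chosen for this example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    shifted = [x - max(logits) for x in logits]
    exps = [math.exp(x) for x in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, label):
    """Negative log-probability of the true class, with softmax applied internally."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[label]


logits = [2.0, 0.0]
loss = cross_entropy_from_logits(logits, label=0)
same = -math.log(softmax(logits)[0])
print(abs(loss - same) < 1e-12)  # True
```

Both routes give the same value. Applying softmax to the logits before passing them to a loss that already normalizes them would therefore normalize twice.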

nndl.py needs to implement the following for this section:

- import torch

- import matplotlib.pyplot as plt

-

-

- class RunnerV3(object):

- def __init__(self, model, optimizer, loss_fn, metric, **kwargs):

- self.model = model

- self.optimizer = optimizer

- self.loss_fn = loss_fn

- self.metric = metric # 只用于计算评价指标

-

- # 记录训练过程中的评价指标变化情况

- self.dev_scores = []

-

- # 记录训练过程中的损失函数变化情况

- self.train_epoch_losses = [] # 一个epoch记录一次loss

- self.train_step_losses = [] # 一个step记录一次loss

- self.dev_losses = []

-

- # 记录全局最优指标

- self.best_score = 0

-

- def train(self, train_loader, dev_loader=None, **kwargs):

- # 将模型切换为训练模式

- self.model.train()

-

- # 传入训练轮数,如果没有传入值则默认为0

- num_epochs = kwargs.get("num_epochs", 0)

- # 传入log打印频率,如果没有传入值则默认为100

- log_steps = kwargs.get("log_steps", 100)

- # 评价频率

- eval_steps = kwargs.get("eval_steps", 0)

-

- # 传入模型保存路径,如果没有传入值则默认为"best_model.pdparams"

- save_path = kwargs.get("save_path", "best_model.pdparams")

-

- custom_print_log = kwargs.get("custom_print_log", None)

-

- # 训练总的步数

- num_training_steps = num_epochs * len(train_loader)

-

- if eval_steps:

- if self.metric is None:

- raise RuntimeError('Error: Metric can not be None!')

- if dev_loader is None:

- raise RuntimeError('Error: dev_loader can not be None!')

-

- # 运行的step数目

- global_step = 0

- total_acces = []

- total_losses = []

- Iters = []

-

- # 进行num_epochs轮训练

- for epoch in range(num_epochs):

- # 用于统计训练集的损失

- total_loss = 0

-

- for step, data in enumerate(train_loader):

- X, y = data

- # 获取模型预测

- # 计算logits

- logits = self.model(X)

-

- # Flatten y to shape (batch_size,) so that it lines up with pred

- acc_y = y.view(-1)

-

- # Compute the accuracy on this batch (argmax of the logits equals argmax of the softmax)

- pred = torch.argmax(logits, dim=1)

- correct = (pred == acc_y).sum().item()

- total = acc_y.size(0)

- acc = correct / total

- total_acces.append(acc)

-

- loss = self.loss_fn(logits, y) # mean over the batch by default

- # Detach so the running total does not keep every step's graph alive

- total_loss += loss.detach()

- total_losses.append(loss.item())

- Iters.append(global_step)

-

- # 训练过程中,每个step的loss进行保存

- self.train_step_losses.append((global_step, loss.item()))

-

- if log_steps and global_step % log_steps == 0:

- print(

- f"[Train] epoch: {epoch}/{num_epochs}, step: {global_step}/{num_training_steps}, loss: {loss.item():.5f}")

- # 梯度反向传播,计算每个参数的梯度值

- loss.backward()

-

- if custom_print_log:

- custom_print_log(self)

-

- # 小批量梯度下降进行参数更新

- self.optimizer.step()

- # 梯度归零

- self.optimizer.zero_grad()

-

- # 判断是否需要评价

- if eval_steps > 0 and global_step != 0 and \

- (global_step % eval_steps == 0 or global_step == (num_training_steps - 1)):

-

- dev_score, dev_loss = self.evaluate(dev_loader, global_step=global_step)

- print(f"[Evaluate] dev score: {dev_score:.5f}, dev loss: {dev_loss:.5f}")

-

- # 将模型切换为训练模式

- self.model.train()

-

- # 如果当前指标为最优指标,保存该模型

- if dev_score > self.best_score:

- self.save_model(save_path)

- print(

- f"[Evaluate] best accuracy performence has been updated: {self.best_score:.5f} --> {dev_score:.5f}")

- self.best_score = dev_score

-

- global_step += 1

-

- # 当前epoch 训练loss累计值

- trn_loss = (total_loss / len(train_loader)).item()

- # epoch粒度的训练loss保存

- self.train_epoch_losses.append(trn_loss)

-

- draw_process("trainning acc", "green", Iters, total_acces, "trainning acc")

- print("total_acc:")

- print(total_acces)

- print("total_loss:")

-

- print(total_losses)

-

- print("[Train] Training done!")

-

- # Evaluation: '@torch.no_grad()' disables gradient computation and storage

- @torch.no_grad()

- def evaluate(self, dev_loader, **kwargs):

- assert self.metric is not None

-

- # 将模型设置为评估模式

- self.model.eval()

-

- global_step = kwargs.get("global_step", -1)

-

- # 用于统计训练集的损失

- total_loss = 0

-

- # 重置评价

- self.metric.reset()

-

- # 遍历验证集每个批次

- for batch_id, data in enumerate(dev_loader):

- X, y = data

-

- # 计算模型输出

- logits = self.model(X)

-

- # 计算损失函数

- loss = self.loss_fn(logits, y).item()

- # 累积损失

- total_loss += loss

-

- # 累积评价

- self.metric.update(logits, y)

-

- dev_loss = (total_loss / len(dev_loader))

- self.dev_losses.append((global_step, dev_loss))

-

- dev_score = self.metric.accumulate()

- self.dev_scores.append(dev_score)

-

- return dev_score, dev_loss

-

- # Prediction: '@torch.no_grad()' disables gradient computation and storage

- @torch.no_grad()

- def predict(self, x, **kwargs):

- # 将模型设置为评估模式

- self.model.eval()

- # 运行模型前向计算,得到预测值

- logits = self.model(x)

- return logits

-

- def save_model(self, save_path):

- torch.save(self.model.state_dict(), save_path)

-

- def load_model(self, model_path):

- model_state_dict = torch.load(model_path)

- self.model.load_state_dict(model_state_dict)

-

-

- class Accuracy():

- def __init__(self, is_logist=True):

- # 用于统计正确的样本个数

- self.num_correct = 0

- # 用于统计样本的总数

- self.num_count = 0

-

- self.is_logist = is_logist

-

- def update(self, outputs, labels):

-

- # 判断是二分类任务还是多分类任务,shape[1]=1时为二分类任务,shape[1]>1时为多分类任务

- if outputs.shape[1] == 1: # 二分类

- outputs = torch.squeeze(outputs, dim=-1)

- if self.is_logist:

- # logist判断是否大于0

- preds = torch.tensor((outputs >= 0), dtype=torch.float32)

- else:

- # 如果不是logist,判断每个概率值是否大于0.5,当大于0.5时,类别为1,否则类别为0

- preds = torch.tensor((outputs >= 0.5), dtype=torch.float32)

- else:

- # 多分类时,使用'torch.argmax'计算最大元素索引作为类别

- preds = torch.argmax(outputs, dim=1)

-

- # 获取本批数据中预测正确的样本个数

- labels = torch.squeeze(labels, dim=-1)

- batch_correct = (preds == labels).sum().item()

- batch_count = len(labels)

-

- # 更新num_correct 和 num_count

- self.num_correct += batch_correct

- self.num_count += batch_count

-

- def accumulate(self):

- # 使用累计的数据,计算总的指标

- if self.num_count == 0:

- return 0

- return self.num_correct / self.num_count

-

- def reset(self):

- # 重置正确的数目和总数

- self.num_correct = 0

- self.num_count = 0

-

- def name(self):

- return "Accuracy"

-

- def draw_process(title,color,iters,data,label):

- plt.title(title, fontsize=24)

- plt.xlabel("iter", fontsize=20)

- plt.ylabel(label, fontsize=20)

- plt.plot(iters, data,color=color,label=label)

- plt.legend()

- plt.grid()

- plt.show()

lstm.py: start training

- np.random.seed(0)

- random.seed(0)

- torch.manual_seed(0)

-

- # 指定训练轮次

- num_epochs = 3

- # 指定学习率

- learning_rate = 0.001

- # 指定embedding的数量为词表长度

- num_embeddings = len(word2id_dict)

- # embedding向量的维度

- input_size = 256

- # LSTM网络隐状态向量的维度

- hidden_size = 256

-

- # 实例化模型

- model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(device)

- # 指定优化器

- optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))

- # 指定损失函数

- loss_fn = nn.CrossEntropyLoss()

- # 指定评估指标

- metric = Accuracy()

- # 实例化Runner

- runner = RunnerV3(model, optimizer, loss_fn, metric)

- # 模型训练

- start_time = time.time()

- runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10, save_path="./checkpoints/best.pdparams")

- end_time = time.time()

- print("time: ", (end_time-start_time))

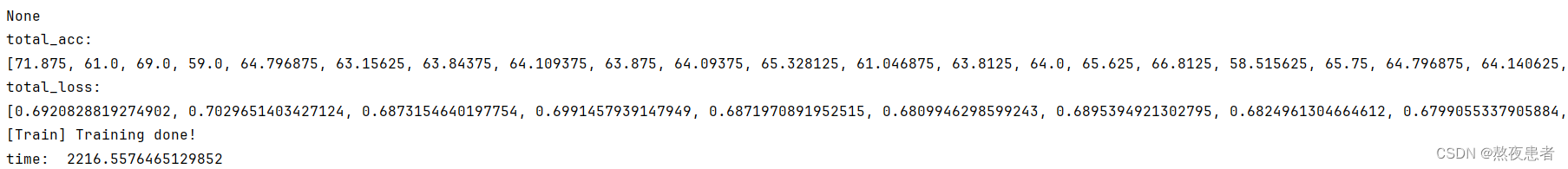

Output:

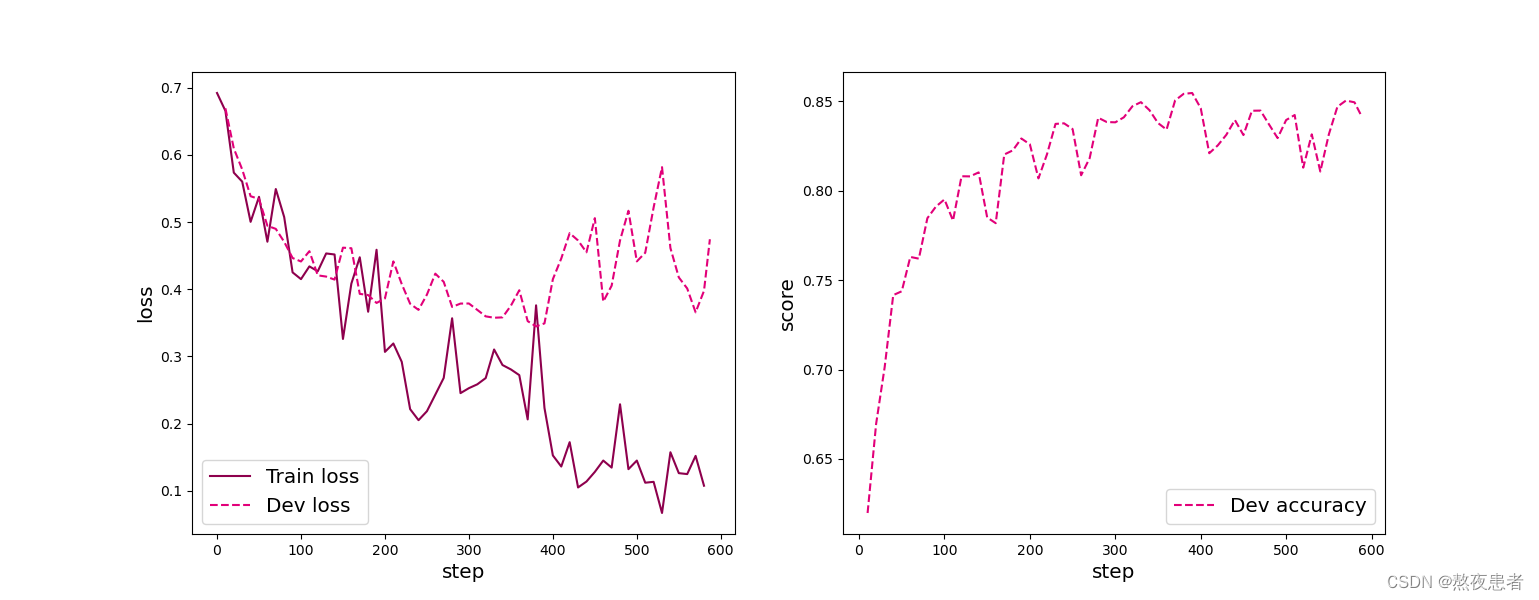

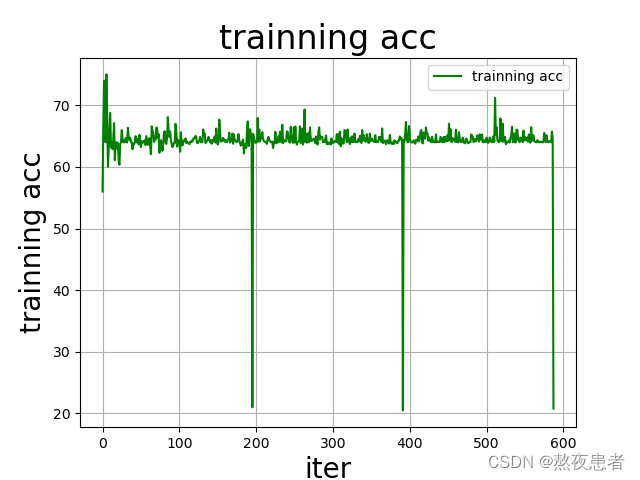

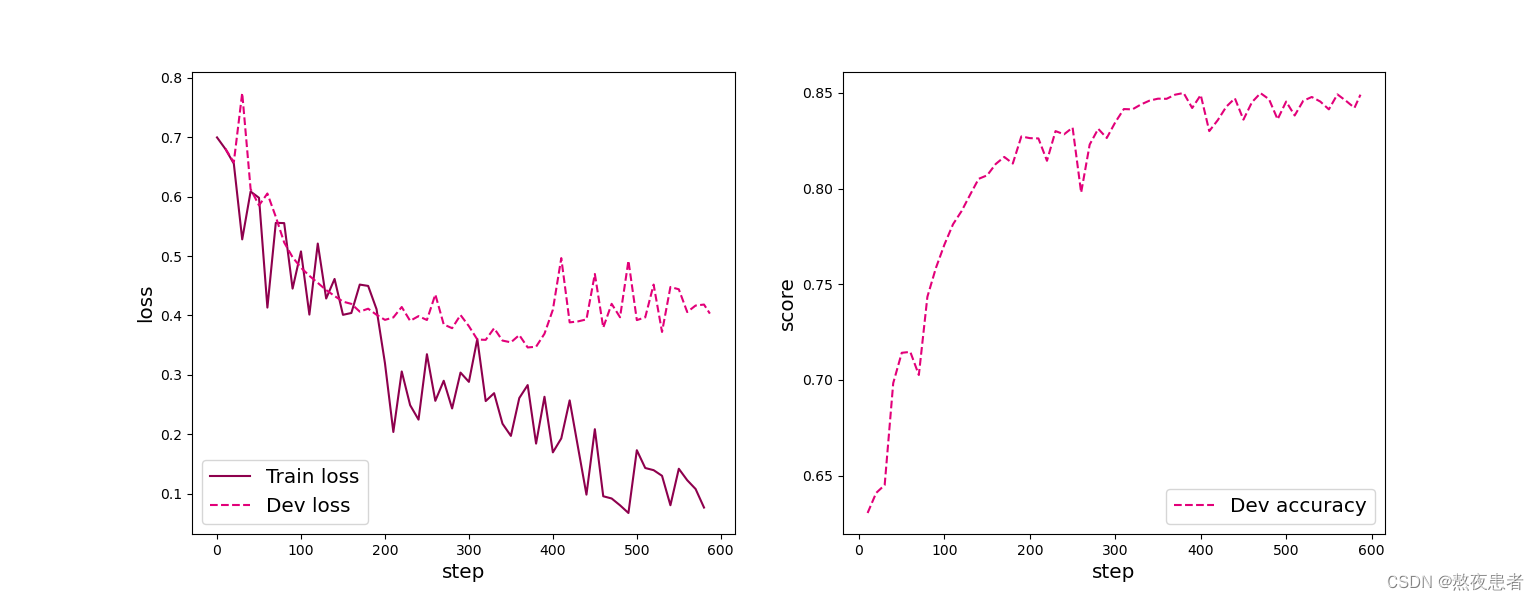

Plot the training and validation loss curves and the validation accuracy curve recorded during training:

- from nndl import plot_training_loss_acc

-

- # 图像名字

- fig_name = "./images/6.16.pdf"

- # sample_step: 训练损失的采样step,即每隔多少个点选择1个点绘制

- # loss_legend_loc: loss 图像的图例放置位置

- # acc_legend_loc: acc 图像的图例放置位置

- plot_training_loss_acc(runner, fig_name, fig_size=(16,6), sample_step=10, loss_legend_loc="lower left", acc_legend_loc="lower right")

plot_training_loss_acc needs to be implemented in nndl.py as follows:

- def plot_training_loss_acc(runner, fig_name, fig_size=(16, 6), sample_step=10, loss_legend_loc="lower left",

- acc_legend_loc="lower left"):

- plt.figure(figsize=fig_size)

-

- plt.subplot(1, 2, 1)

- train_items = runner.train_step_losses[::sample_step]

- train_steps = [x[0] for x in train_items]

- train_losses = [x[1] for x in train_items]

-

- plt.plot(train_steps, train_losses, color='#8E004D', label="Train loss")

-

- while runner.dev_losses[-1][0] == -1:

- runner.dev_losses.pop()

- runner.dev_scores.pop()

- dev_steps = [x[0] for x in runner.dev_losses]

- dev_losses = [x[1] for x in runner.dev_losses]

- plt.plot(dev_steps, dev_losses, color='#E20079', linestyle='--', label="Dev loss")

- # 绘制坐标轴和图例

- plt.ylabel("loss", fontsize='x-large')

- plt.xlabel("step", fontsize='x-large')

- plt.legend(loc=loss_legend_loc, fontsize='x-large')

-

- plt.subplot(1, 2, 2)

- # 绘制评价准确率变化曲线

- plt.plot(dev_steps, runner.dev_scores, color='#E20079', linestyle="--", label="Dev accuracy")

-

- # 绘制坐标轴和图例

- plt.ylabel("score", fontsize='x-large')

- plt.xlabel("step", fontsize='x-large')

- plt.legend(loc=acc_legend_loc, fontsize='x-large')

-

- plt.savefig(fig_name)

- plt.show()

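Output:

The figure shows the loss curves of the text classification model during training and the accuracy curve on the validation set. In the loss plot, the solid line is the training loss and the dashed line is the validation loss. As training proceeds, the training loss keeps decreasing, while the validation loss starts to rise after about 200 steps. This is a sign of overfitting, which can be handled by keeping the model that performs best on the validation set during training. In the accuracy plot, validation accuracy first rises sharply, stops improving after about 200 steps, and then drops slightly because of overfitting.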
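The "keep the best model on the validation set" rule can be sketched as follows. The evaluation history is made up purely for illustration and is not a result from this experiment. `best_checkpoint` is a hypothetical helper that mirrors the strict `dev_score > self.best_score` comparison in RunnerV3.train.

```python
def best_checkpoint(dev_scores):
    """Return (step, score) of the first evaluation with the highest dev score."""
    best_step, best_score = None, 0.0
    for step, score in dev_scores:
        if score > best_score:  # same strict comparison as in RunnerV3.train
            best_step, best_score = step, score
    return best_step, best_score


# Made-up numbers for illustration only, not results from this experiment.
history = [(100, 0.78), (200, 0.86), (300, 0.85), (400, 0.84)]
print(best_checkpoint(history))  # (200, 0.86)
```

Later evaluations with lower scores never overwrite the saved checkpoint, so the model loaded for testing is the one from before overfitting set in.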

4 Model Evaluation

Load the model that performed best during training and evaluate it on the test set.

- model_path = "./checkpoints/best.pdparams"

- runner.load_model(model_path)

- accuracy, _ = runner.evaluate(test_loader)

- print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")

Output:

5 Model Prediction

Given an arbitrary sentence, use the trained model to predict the sentiment polarity it carries.

- id2label={0:"消极情绪", 1:"积极情绪"}

- text = "this movie is so great. I watched it three times already"

- # 处理单条文本

- sentence = text.lower().split(" ")

- words = [word2id_dict[word] if word in word2id_dict else word2id_dict['[UNK]'] for word in sentence]

- words = words[:max_seq_len]

- sequence_length = torch.tensor([len(words)], dtype=torch.int64)

- words = torch.tensor(words, dtype=torch.int64).unsqueeze(0)

- # 使用模型进行预测

- logits = runner.predict((words.to(device), sequence_length.to(device)))

- max_label_id = torch.argmax(logits, dim=-1).cpu().numpy()[0]

- pred_label = id2label[max_label_id]

- print("Label: ", pred_label)

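Output:

5 Extension Experiments

5.1 Text classification with PyTorch's built-in unidirectional LSTM

First, change the model definition: set bidirectional to False in nn.LSTM to use a unidirectional LSTM (the argument can also be dropped, since False is the default), and set the shape of the linear layer to [hidden_size, num_classes].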
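As a rough size comparison between the two variants, the sketch below counts parameters by hand for the sizes used in this post (input_size = hidden_size = 256, two classes). The helper names are made up. The formula assumes a single-layer torch.nn.LSTM with its default two bias vectors per direction.

```python
def lstm_param_count(input_size, hidden_size, bidirectional=False):
    """Parameters of a single-layer torch.nn.LSTM (weights plus both bias vectors)."""
    per_direction = 4 * (input_size * hidden_size      # weight_ih
                         + hidden_size * hidden_size   # weight_hh
                         + 2 * hidden_size)            # bias_ih and bias_hh
    return per_direction * (2 if bidirectional else 1)


def linear_param_count(in_features, out_features):
    """Parameters of a fully connected layer: weight matrix plus bias."""
    return in_features * out_features + out_features


hidden = 256
print(lstm_param_count(256, hidden, bidirectional=False))  # 526336
print(lstm_param_count(256, hidden, bidirectional=True))   # 1052672
print(linear_param_count(hidden, 2))                       # 514
print(linear_param_count(hidden * 2, 2))                   # 1026
```

The bidirectional layer has twice the LSTM parameters, and its output layer takes hidden_size * 2 inputs because the forward and backward hidden states are concatenated.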

- class AveragePooling(nn.Module):

- def __init__(self):

- super(AveragePooling, self).__init__()

-

- def forward(self, sequence_output, sequence_length):

- # 假设 sequence_length 是一个 PyTorch 张量

- sequence_length = sequence_length.unsqueeze(-1).to(torch.float32)

- # 根据sequence_length生成mask矩阵,用于对Padding位置的信息进行mask

- max_len = sequence_output.shape[1]

-

- mask = torch.arange(max_len) < sequence_length

- mask = mask.to(torch.float32).unsqueeze(-1)

- # 对序列中paddling部分进行mask

-

- sequence_output = torch.multiply(sequence_output, mask.to('cuda'))

- # 对序列中的向量取均值

- batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length.to('cuda'))

- return batch_mean_hidden

-

-

- class Model_BiLSTM_FC(nn.Module):

- def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):

- super(Model_BiLSTM_FC, self).__init__()

- # 词典大小

- self.num_embeddings = num_embeddings

- # 单词向量的维度

- self.input_size = input_size

- # LSTM隐藏单元数量

- self.hidden_size = hidden_size

- # 情感分类类别数量

- self.num_classes = num_classes

- # 实例化嵌入层

- self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)

- # 实例化LSTM层

- self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True)

- # 实例化聚合层

- self.average_layer = AveragePooling()

- # 实例化输出层

- self.output_layer = nn.Linear(hidden_size, num_classes)

-

- def forward(self, inputs):

- # 对模型输入拆分为序列数据和mask

- input_ids, sequence_length = inputs

- # 获取词向量

- inputs_emb = self.embedding_layer(input_ids)

-

- packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,

- enforce_sorted=False)

- # 使用lstm处理数据

- packed_output, _ = self.lstm_layer(packed_input)

- # 解包输出

- sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

- # 使用聚合层聚合sequence_output

- batch_mean_hidden = self.average_layer(sequence_output, sequence_length)

- # 输出文本分类logits

- logits = self.output_layer(batch_mean_hidden)

- return logits

Next, train the PyTorch unidirectional model. The implementation is as follows:

- np.random.seed(0)

- random.seed(0)

- torch.manual_seed(0)

-

- # 指定训练轮次

- num_epochs = 3

- # 指定学习率

- learning_rate = 0.001

- # 指定embedding的数量为词表长度

- num_embeddings = len(word2id_dict)

- # embedding向量的维度

- input_size = 256

- # LSTM网络隐状态向量的维度

- hidden_size = 256

-

- # 实例化模型

- model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(device)

- # 指定优化器

- optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))

- # 指定损失函数

- loss_fn = nn.CrossEntropyLoss()

- # 指定评估指标

- metric = Accuracy()

- # 实例化Runner

- runner = RunnerV3(model, optimizer, loss_fn, metric)

- # 模型训练

- start_time = time.time()

- runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10, save_path="./checkpoints/best_forward.pdparams")

- end_time = time.time()

- print("time: ", (end_time-start_time))

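The rest of the code is the same as for the bidirectional LSTM (I wrote this a while ago and no longer remember the details, but I re-ran the full code at the end and it runs).

5.2 Text classification with a self-implemented unidirectional LSTM

The LSTM implemented earlier returns only the hidden state of the last time step, but this experiment needs the hidden-state vectors of all time steps. The self-implemented LSTM therefore has to be modified to return the whole sequence of vectors. The implementation is as follows: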

- class LSTM(nn.Module):

- def __init__(self, input_size, hidden_size, Wi_attr=None, Wf_attr=None, Wo_attr=None, Wc_attr=None,

- Ui_attr=None, Uf_attr=None, Uo_attr=None, Uc_attr=None, bi_attr=None, bf_attr=None,

- bo_attr=None, bc_attr=None):

- super(LSTM, self).__init__()

- self.input_size = input_size

- self.hidden_size = hidden_size

-

- # 初始化模型参数

- if Wi_attr is None:

- Wi = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wi = torch.tensor(Wi_attr, dtype=torch.float32)

- self.W_i = torch.nn.Parameter(Wi.to('cuda'))

-

- if Wf_attr is None:

- Wf = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wf = torch.tensor(Wf_attr, dtype=torch.float32)

- self.W_f = torch.nn.Parameter(Wf.to('cuda'))

-

- if Wo_attr is None:

- Wo = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wo = torch.tensor(Wo_attr, dtype=torch.float32)

- self.W_o = torch.nn.Parameter(Wo.to('cuda'))

-

- if Wc_attr is None:

- Wc = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wc = torch.tensor(Wc_attr, dtype=torch.float32)

- self.W_c = torch.nn.Parameter(Wc.to('cuda'))

-

- if Ui_attr is None:

- Ui = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Ui = torch.tensor(Ui_attr, dtype=torch.float32)

- self.U_i = torch.nn.Parameter(Ui.to('cuda'))

-

- if Uf_attr is None:

- Uf = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Uf = torch.tensor(Uf_attr, dtype=torch.float32)

- self.U_f = torch.nn.Parameter(Uf.to('cuda'))

-

- if Uo_attr is None:

- Uo = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Uo = torch.tensor(Uo_attr, dtype=torch.float32)

- self.U_o = torch.nn.Parameter(Uo.to('cuda'))

-

- if Uc_attr is None:

- Uc = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Uc = torch.tensor(Uc_attr, dtype=torch.float32)

- self.U_c = torch.nn.Parameter(Uc.to('cuda'))

-

- if bi_attr is None:

- bi = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bi = torch.tensor(bi_attr, dtype=torch.float32)

- self.b_i = torch.nn.Parameter(bi.to('cuda'))

-

- if bf_attr is None:

- bf = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bf = torch.tensor(bf_attr, dtype=torch.float32)

- self.b_f = torch.nn.Parameter(bf.to('cuda'))

-

- if bo_attr is None:

- bo = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bo = torch.tensor(bo_attr, dtype=torch.float32)

- self.b_o = torch.nn.Parameter(bo.to('cuda'))

-

- if bc_attr is None:

- bc = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bc = torch.tensor(bc_attr, dtype=torch.float32)

- self.b_c = torch.nn.Parameter(bc.to('cuda'))

-

- # 初始化状态向量和隐状态向量

- def init_state(self, batch_size):

- hidden_state = torch.zeros(size=[batch_size, self.hidden_size], dtype=torch.float32).to('cuda')

- cell_state = torch.zeros(size=[batch_size, self.hidden_size], dtype=torch.float32).to('cuda')

- return hidden_state, cell_state

-

- # 定义前向计算

- def forward(self, inputs, states=None):

- # inputs: 输入数据,其shape为batch_size x seq_len x input_size

- batch_size, seq_len, input_size = inputs.shape

-

- # 初始化起始的单元状态和隐状态向量,其shape为batch_size x hidden_size

- if states is None:

- states = self.init_state(batch_size)

- hidden_state, cell_state = states

-

- # Run the LSTM step by step: input, forget and output gates, candidate state, cell state, hidden state

- # Collect the hidden state of every time step so that the whole sequence can be returned

- outputs = []

- for step in range(seq_len):

- # Input at the current time step, shape: batch_size x input_size

- step_input = inputs[:, step, :]

- # Input, forget and output gates, shape: batch_size x hidden_size

- I_gate = torch.sigmoid(torch.matmul(step_input, self.W_i) + torch.matmul(hidden_state, self.U_i) + self.b_i)

- F_gate = torch.sigmoid(torch.matmul(step_input, self.W_f) + torch.matmul(hidden_state, self.U_f) + self.b_f)

- O_gate = torch.sigmoid(torch.matmul(step_input, self.W_o) + torch.matmul(hidden_state, self.U_o) + self.b_o)

- # Candidate state vector, shape: batch_size x hidden_size

- C_tilde = torch.tanh(torch.matmul(step_input, self.W_c) + torch.matmul(hidden_state, self.U_c) + self.b_c)

- # Cell state vector, shape: batch_size x hidden_size

- cell_state = F_gate * cell_state + I_gate * C_tilde

- # Hidden state vector, shape: batch_size x hidden_size

- hidden_state = O_gate * torch.tanh(cell_state)

- outputs.append(hidden_state)

-

- # After the loop: stack along the time dimension, shape: batch_size x seq_len x hidden_size

- return torch.stack(outputs, dim=1)

Next, modify the Model_BiLSTM_FC model to replace `nn.LSTM` with the self-implemented LSTM. The implementation is as follows:

- class AveragePooling(nn.Module):

- def __init__(self):

- super(AveragePooling, self).__init__()

-

- def forward(self, sequence_output, sequence_length):

- # 假设 sequence_length 是一个 PyTorch 张量

- sequence_length = sequence_length.unsqueeze(-1).to(torch.float32)

- # 根据sequence_length生成mask矩阵,用于对Padding位置的信息进行mask

- max_len = sequence_output.shape[1]

-

- mask = torch.arange(max_len, device='cuda') < sequence_length.to('cuda')

- mask = mask.to(torch.float32).unsqueeze(-1)

- # 对序列中paddling部分进行mask

-

- sequence_output = torch.multiply(sequence_output, mask.to('cuda'))

- # 对序列中的向量取均值

- batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length.to('cuda'))

- return batch_mean_hidden

-

-

- class Model_BiLSTM_FC(nn.Module):

- def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):

- super(Model_BiLSTM_FC, self).__init__()

- # 词典大小

- self.num_embeddings = num_embeddings

- # 单词向量的维度

- self.input_size = input_size

- # LSTM隐藏单元数量

- self.hidden_size = hidden_size

- # 情感分类类别数量

- self.num_classes = num_classes

- # 实例化嵌入层

- self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)

- # 实例化LSTM层

- self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True, bidirectional=True)

- # 实例化聚合层

- self.average_layer = AveragePooling()

- # 实例化输出层

- self.output_layer = nn.Linear(hidden_size * 2, num_classes)

-

- def forward(self, inputs):

- # 对模型输入拆分为序列数据和mask

- input_ids, sequence_length = inputs

- # 获取词向量

- inputs_emb = self.embedding_layer(input_ids)

-

- packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,

- enforce_sorted=False)

- # 使用lstm处理数据

- packed_output, _ = self.lstm_layer(packed_input)

- # 解包输出

- sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

- # 使用聚合层聚合sequence_output

- batch_mean_hidden = self.average_layer(sequence_output, sequence_length)

- # 输出文本分类logits

- logits = self.output_layer(batch_mean_hidden)

- return logits

Run and test with the following code:

- np.random.seed(0)

- random.seed(0)

- torch.manual_seed(0)

-

- # 指定训练轮次

- num_epochs = 3

- # 指定学习率

- learning_rate = 0.001

- # 指定embedding的数量为词表长度

- num_embeddings = len(word2id_dict)

- # embedding向量的维度

- input_size = 256

- # LSTM网络隐状态向量的维度

- hidden_size = 256

-

- # 实例化模型

- model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to('cuda')

- # 指定优化器

- optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))

- # 指定损失函数

- loss_fn = nn.CrossEntropyLoss()

- # 指定评估指标

- metric = Accuracy()

- # 实例化Runner

- runner = RunnerV3(model, optimizer, loss_fn, metric)

- # 模型训练

- start_time = time.time()

- runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10, save_path="./checkpoints/best_self_forward.pdparams")

- end_time = time.time()

- print("time: ", (end_time-start_time))

-

-

- model_path = "./checkpoints/best_self_forward.pdparams"

- runner.load_model(model_path)

- accuracy, _ = runner.evaluate(test_loader)

- print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")

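Summary

Comparing the bidirectional and unidirectional LSTMs, we found little difference in accuracy but a large difference in speed. In theory a bidirectional LSTM has more parameters and more computation than a unidirectional one and should be slower, yet in this experiment it ran faster than the unidirectional model. So a bidirectional LSTM can sometimes be the better choice, and this experiment gave me a clearer sense of the appeal of NLP.

This experiment felt like the hardest of all the ones I have done so far. Two functions have no code in Professor Qiu's book "Neural Networks and Deep Learning" and had to be written by hand (only after finishing did I realize I could have looked up the original Paddle code on PaddlePaddle, silly me). Without the GPU changes the code is really slow. I got a real taste of what our teacher described: start a run, go for a walk, come back to find an error, fix it, and run again. It was not half a day, but one run took 40 minutes, so in the end I gave in and spent a long time porting the code to the GPU. That in turn meant moving variables to the GPU in many places, which took more time. Looking back, though, modifying and writing the code myself made this experiment truly worthwhile, and the sense of achievement was far greater than in any earlier one. (PS: this is the fastest I have ever published a blog post, and only because my whole dorm was waiting for my code.)

Here is all the code: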

utils-data.py

- import os

-

-

- def load_vocab(path):

- assert os.path.exists(path)

- words = []

- with open(path, "r", encoding="utf-8") as f:

- words = f.readlines()

- words = [word.strip() for word in words if word.strip()]

- word2id = dict(zip(words, range(len(words))))

- return word2id

nndl.py

- import torch

- import matplotlib.pyplot as plt

-

- def draw_process(title,color,iters,data,label):

- plt.title(title, fontsize=24)

- plt.xlabel("iter", fontsize=20)

- plt.ylabel(label, fontsize=20)

- plt.plot(iters, data,color=color,label=label)

- plt.legend()

- plt.grid()

- plt.show()

-

-

- def plot_training_loss_acc(runner, fig_name, fig_size=(16, 6), sample_step=10, loss_legend_loc="lower left",

- acc_legend_loc="lower left"):

- plt.figure(figsize=fig_size)

-

- plt.subplot(1, 2, 1)

- train_items = runner.train_step_losses[::sample_step]

- train_steps = [x[0] for x in train_items]

- train_losses = [x[1] for x in train_items]

-

- plt.plot(train_steps, train_losses, color='#8E004D', label="Train loss")

-

- while runner.dev_losses[-1][0] == -1:

- runner.dev_losses.pop()

- runner.dev_scores.pop()

- dev_steps = [x[0] for x in runner.dev_losses]

- dev_losses = [x[1] for x in runner.dev_losses]

- plt.plot(dev_steps, dev_losses, color='#E20079', linestyle='--', label="Dev loss")

- # 绘制坐标轴和图例

- plt.ylabel("loss", fontsize='x-large')

- plt.xlabel("step", fontsize='x-large')

- plt.legend(loc=loss_legend_loc, fontsize='x-large')

-

- plt.subplot(1, 2, 2)

- # 绘制评价准确率变化曲线

- plt.plot(dev_steps, runner.dev_scores, color='#E20079', linestyle="--", label="Dev accuracy")

-

- # 绘制坐标轴和图例

- plt.ylabel("score", fontsize='x-large')

- plt.xlabel("step", fontsize='x-large')

- plt.legend(loc=acc_legend_loc, fontsize='x-large')

-

- plt.savefig(fig_name)

- plt.show()

-

-

- class RunnerV3(object):

- def __init__(self, model, optimizer, loss_fn, metric, **kwargs):

- self.model = model

- self.optimizer = optimizer

- self.loss_fn = loss_fn

- self.metric = metric # 只用于计算评价指标

-

- # 记录训练过程中的评价指标变化情况

- self.dev_scores = []

-

- # 记录训练过程中的损失函数变化情况

- self.train_epoch_losses = [] # 一个epoch记录一次loss

- self.train_step_losses = [] # 一个step记录一次loss

- self.dev_losses = []

-

- # 记录全局最优指标

- self.best_score = 0

-

- def train(self, train_loader, dev_loader=None, **kwargs):

- # 将模型切换为训练模式

- self.model.train()

-

- # 传入训练轮数,如果没有传入值则默认为0

- num_epochs = kwargs.get("num_epochs", 0)

- # 传入log打印频率,如果没有传入值则默认为100

- log_steps = kwargs.get("log_steps", 100)

- # 评价频率

- eval_steps = kwargs.get("eval_steps", 0)

-

- # 传入模型保存路径,如果没有传入值则默认为"best_model.pdparams"

- save_path = kwargs.get("save_path", "best_model.pdparams")

-

- custom_print_log = kwargs.get("custom_print_log", None)

-

- # 训练总的步数

- num_training_steps = num_epochs * len(train_loader)

-

- if eval_steps:

- if self.metric is None:

- raise RuntimeError('Error: Metric can not be None!')

- if dev_loader is None:

- raise RuntimeError('Error: dev_loader can not be None!')

-

- # 运行的step数目

- global_step = 0

- total_acces = []

- total_losses = []

- Iters = []

-

- # 进行num_epochs轮训练

- for epoch in range(num_epochs):

- # 用于统计训练集的损失

- total_loss = 0

-

- for step, data in enumerate(train_loader):

- X, y = data

- # 获取模型预测

- # 计算logits

- logits = self.model(X)

-

- # Flatten y to shape (batch_size,) so it lines up element-wise with pred

- acc_y = y.view(-1)

-

- # 计算准确率

- probs = torch.softmax(logits, dim=1)

- pred = torch.argmax(probs, dim=1)

- correct = (pred == acc_y).sum().item()

- total = acc_y.size(0)

- acc = correct / total

- total_acces.append(acc)

- # print(acc.numpy()[0])

-

- loss = self.loss_fn(logits, y) # 默认求mean

- total_loss += loss.detach()

- total_losses.append(loss.item())

- Iters.append(global_step)

-

- # 训练过程中,每个step的loss进行保存

- self.train_step_losses.append((global_step, loss.item()))

-

- if log_steps and global_step % log_steps == 0:

- print(

- f"[Train] epoch: {epoch}/{num_epochs}, step: {global_step}/{num_training_steps}, loss: {loss.item():.5f}")

- # 梯度反向传播,计算每个参数的梯度值

- loss.backward()

-

- if custom_print_log:

- custom_print_log(self)

-

- # 小批量梯度下降进行参数更新

- self.optimizer.step()

- # 梯度归零

- self.optimizer.zero_grad()

-

- # 判断是否需要评价

- if eval_steps > 0 and global_step != 0 and \

- (global_step % eval_steps == 0 or global_step == (num_training_steps - 1)):

-

- dev_score, dev_loss = self.evaluate(dev_loader, global_step=global_step)

- print(f"[Evaluate] dev score: {dev_score:.5f}, dev loss: {dev_loss:.5f}")

-

- # 将模型切换为训练模式

- self.model.train()

-

- # 如果当前指标为最优指标,保存该模型

- if dev_score > self.best_score:

- self.save_model(save_path)

- print(

- f"[Evaluate] best accuracy performance has been updated: {self.best_score:.5f} --> {dev_score:.5f}")

- self.best_score = dev_score

-

- global_step += 1

-

- # 当前epoch 训练loss累计值

- trn_loss = (total_loss / len(train_loader)).item()

- # epoch粒度的训练loss保存

- self.train_epoch_losses.append(trn_loss)

-

- draw_process("training acc", "green", Iters, total_acces, "training acc")

- print("total_acc:")

- print(total_acces)

- print("total_loss:")

-

- print(total_losses)

-

- print("[Train] Training done!")

-

- # Evaluation stage: 'torch.no_grad()' disables gradient computation and storage

- @torch.no_grad()

- def evaluate(self, dev_loader, **kwargs):

- assert self.metric is not None

-

- # 将模型设置为评估模式

- self.model.eval()

-

- global_step = kwargs.get("global_step", -1)

-

- # Accumulates the loss over the evaluation set

- total_loss = 0

-

- # 重置评价

- self.metric.reset()

-

- # 遍历验证集每个批次

- for batch_id, data in enumerate(dev_loader):

- X, y = data

-

- # 计算模型输出

- logits = self.model(X)

-

- # 计算损失函数

- loss = self.loss_fn(logits, y).item()

- # 累积损失

- total_loss += loss

-

- # 累积评价

- self.metric.update(logits, y)

-

- dev_loss = (total_loss / len(dev_loader))

- self.dev_losses.append((global_step, dev_loss))

-

- dev_score = self.metric.accumulate()

- self.dev_scores.append(dev_score)

-

- return dev_score, dev_loss

-

- # Prediction stage: 'torch.no_grad()' disables gradient computation and storage

- @torch.no_grad()

- def predict(self, x, **kwargs):

- # 将模型设置为评估模式

- self.model.eval()

- # 运行模型前向计算,得到预测值

- logits = self.model(x)

- return logits

-

- def save_model(self, save_path):

- torch.save(self.model.state_dict(), save_path)

-

- def load_model(self, model_path):

- model_state_dict = torch.load(model_path)

- self.model.load_state_dict(model_state_dict)

-

-

- class Accuracy():

- def __init__(self, is_logist=True):

- # 用于统计正确的样本个数

- self.num_correct = 0

- # 用于统计样本的总数

- self.num_count = 0

-

- self.is_logist = is_logist

-

- def update(self, outputs, labels):

-

- # 判断是二分类任务还是多分类任务,shape[1]=1时为二分类任务,shape[1]>1时为多分类任务

- if outputs.shape[1] == 1: # 二分类

- outputs = torch.squeeze(outputs, dim=-1)

- if self.is_logist:

- # For logits, predict class 1 when the value is >= 0

- preds = (outputs >= 0).to(torch.float32)

- else:

- # For probabilities, predict class 1 when the value is >= 0.5, otherwise class 0

- preds = (outputs >= 0.5).to(torch.float32)

- else:

- # 多分类时,使用'torch.argmax'计算最大元素索引作为类别

- preds = torch.argmax(outputs, dim=1)

-

- # Count the correctly predicted samples in this batch

- labels = torch.squeeze(labels, dim=-1)

- batch_correct = (preds == labels).sum().item()

- batch_count = len(labels)

-

- # 更新num_correct 和 num_count

- self.num_correct += batch_correct

- self.num_count += batch_count

-

- def accumulate(self):

- # 使用累计的数据,计算总的指标

- if self.num_count == 0:

- return 0

- return self.num_correct / self.num_count

-

- def reset(self):

- # 重置正确的数目和总数

- self.num_correct = 0

- self.num_count = 0

-

- def name(self):

- return "Accuracy"

-

-
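The `Accuracy` metric above accumulates counts batch by batch and only divides at the end. The following framework-free sketch shows the same idea on plain Python lists; the class name `RunningAccuracy` and the toy logits are assumptions for illustration, not part of `nndl.py`.

```python
class RunningAccuracy:
    """Accumulates correct/total counts across batches (multi-class case)."""

    def __init__(self):
        self.num_correct = 0
        self.num_count = 0

    def update(self, logits, labels):
        for row, label in zip(logits, labels):
            # argmax over the class dimension gives the predicted class
            pred = max(range(len(row)), key=row.__getitem__)
            self.num_correct += int(pred == label)
            self.num_count += 1

    def accumulate(self):
        # Guard against division by zero before any batch has been seen
        return self.num_correct / self.num_count if self.num_count else 0.0


metric = RunningAccuracy()
metric.update([[0.2, 1.3], [2.0, -1.0]], [1, 1])  # predictions: 1, 0
metric.update([[0.1, 0.0]], [0])                  # prediction: 0
score = metric.accumulate()
print(score)
```

Note that predictions and labels are compared one-to-one. Comparing a `(batch,)` prediction tensor against a `(batch, 1)` label tensor would broadcast to a `(batch, batch)` matrix and inflate the count.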

lstm.py

- import os

- import torch

- import torch.nn as nn

- from torch.utils.data import Dataset

- from utils.data import load_vocab

- from functools import partial

- import time

- import random

- import numpy as np

- from nndl import Accuracy, RunnerV3

-

- device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

-

-

- # 加载数据集

- def load_imdb_data(path):

- assert os.path.exists(path)

- trainset, devset, testset = [], [], []

- with open(os.path.join(path, "train.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- trainset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "dev.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- devset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "test.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- testset.append((sentence, sentence_label))

-

- return trainset, devset, testset

-

-

- # 加载IMDB数据集

- train_data, dev_data, test_data = load_imdb_data("./dataset/")

- # # 打印一下加载后的数据样式

- print(train_data[4])

-

-

- class IMDBDataset(Dataset):

- def __init__(self, examples, word2id_dict):

- super(IMDBDataset, self).__init__()

- # 词典,用于将单词转为字典索引的数字

- self.word2id_dict = word2id_dict

- # 加载后的数据集

- self.examples = self.words_to_id(examples)

-

- def words_to_id(self, examples):

- tmp_examples = []

- for idx, example in enumerate(examples):

- seq, label = example

- # 将单词映射为字典索引的ID, 对于词典中没有的单词用[UNK]对应的ID进行替代

- seq = [self.word2id_dict.get(word, self.word2id_dict['[UNK]']) for word in seq.split(" ")]

- label = int(label)

- tmp_examples.append([seq, label])

- return tmp_examples

-

- def __getitem__(self, idx):

- seq, label = self.examples[idx]

- return seq, label

-

- def __len__(self):

- return len(self.examples)

-

-

- # 加载词表

- word2id_dict = load_vocab("./dataset/vocab.txt")

-

- # 实例化Dataset

- train_set = IMDBDataset(train_data, word2id_dict)

- dev_set = IMDBDataset(dev_data, word2id_dict)

- test_set = IMDBDataset(test_data, word2id_dict)

-

- print('训练集样本数:', len(train_set))

- print('样本示例:', train_set[4])

-

-

- def collate_fn(batch_data, pad_val=0, max_seq_len=256):

- seqs, seq_lens, labels = [], [], []

- max_len = 0

- for example in batch_data:

- seq, label = example

- # 对数据序列进行截断

- seq = seq[:max_seq_len]

- # 对数据截断并保存于seqs中

- seqs.append(seq)

- seq_lens.append(len(seq))

- labels.append(label)

- # 保存序列最大长度

- max_len = max(max_len, len(seq))

- # 对数据序列进行填充至最大长度

- for i in range(len(seqs)):

- seqs[i] = seqs[i] + [pad_val] * (max_len - len(seqs[i]))

-

- # return (torch.tensor(seqs), torch.tensor(seq_lens)), torch.tensor(labels)

- return (torch.tensor(seqs).to(device), torch.tensor(seq_lens)), torch.tensor(labels).to(device)

-

- max_seq_len = 5

- batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]

- (seqs, seq_lens), labels = collate_fn(batch_data, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- print("seqs: ", seqs)

- print("seq_lens: ", seq_lens)

- print("labels: ", labels)

-

- max_seq_len = 256

- batch_size = 128

- collate_fn = partial(collate_fn, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size,

- shuffle=True, drop_last=False, collate_fn=collate_fn)

- dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

- test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

-

-

- class AveragePooling(nn.Module):

- def __init__(self):

- super(AveragePooling, self).__init__()

-

- def forward(self, sequence_output, sequence_length):

- # Follow the device of the LSTM output instead of hard-coding 'cuda'

- out_device = sequence_output.device

- # sequence_length is a 1-D tensor of true lengths; reshape it to (batch_size, 1)

- sequence_length = sequence_length.unsqueeze(-1).to(device=out_device, dtype=torch.float32)

- # Build a mask from sequence_length that hides the padding positions

- max_len = sequence_output.shape[1]

-

- mask = torch.arange(max_len, device=out_device) < sequence_length

- mask = mask.to(torch.float32).unsqueeze(-1)

- # Zero out the padded part of the sequence

-

- sequence_output = torch.multiply(sequence_output, mask)

- # Average the vectors over the valid time steps

- batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length)

- return batch_mean_hidden

-

-

- class Model_BiLSTM_FC(nn.Module):

- def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):

- super(Model_BiLSTM_FC, self).__init__()

- # 词典大小

- self.num_embeddings = num_embeddings

- # 单词向量的维度

- self.input_size = input_size

- # LSTM隐藏单元数量

- self.hidden_size = hidden_size

- # 情感分类类别数量

- self.num_classes = num_classes

- # 实例化嵌入层

- self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)

- # 实例化LSTM层

- self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True, bidirectional=True)

- # 实例化聚合层

- self.average_layer = AveragePooling()

- # 实例化输出层

- self.output_layer = nn.Linear(hidden_size * 2, num_classes)

-

- def forward(self, inputs):

- # 对模型输入拆分为序列数据和mask

- input_ids, sequence_length = inputs

- # 获取词向量

- inputs_emb = self.embedding_layer(input_ids)

-

- packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,

- enforce_sorted=False)

- # 使用lstm处理数据

- packed_output, _ = self.lstm_layer(packed_input)

- # 解包输出

- sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

- # 使用聚合层聚合sequence_output

- batch_mean_hidden = self.average_layer(sequence_output, sequence_length)

- # 输出文本分类logits

- logits = self.output_layer(batch_mean_hidden)

- return logits

-

-

-

- np.random.seed(0)

- random.seed(0)

- torch.manual_seed(0)

-

- # 指定训练轮次

- num_epochs = 3

- # 指定学习率

- learning_rate = 0.001

- # 指定embedding的数量为词表长度

- num_embeddings = len(word2id_dict)

- # embedding向量的维度

- input_size = 256

- # LSTM网络隐状态向量的维度

- hidden_size = 256

-

- # 实例化模型

- model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(device)

- # 指定优化器

- optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))

- # 指定损失函数

- loss_fn = nn.CrossEntropyLoss()

- # 指定评估指标

- metric = Accuracy()

- # 实例化Runner

- runner = RunnerV3(model, optimizer, loss_fn, metric)

- # 模型训练

- start_time = time.time()

- runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10, save_path="./checkpoints/best.pdparams")

- end_time = time.time()

- print("time: ", (end_time-start_time))

-

- from nndl import plot_training_loss_acc

-

- # 图像名字

- fig_name = "./images/6.16.pdf"

- # sample_step: 训练损失的采样step,即每隔多少个点选择1个点绘制

- # loss_legend_loc: loss 图像的图例放置位置

- # acc_legend_loc: acc 图像的图例放置位置

- plot_training_loss_acc(runner, fig_name, fig_size=(16,6), sample_step=10, loss_legend_loc="lower left", acc_legend_loc="lower right")

-

-

- model_path = "./checkpoints/best.pdparams"

- runner.load_model(model_path)

- accuracy, _ = runner.evaluate(test_loader)

- print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")

-

-

- id2label={0:"消极情绪", 1:"积极情绪"}

- text = "this movie is so great. I watched it three times already"

- # Process a single text; lowercase it because the training data was lowercased

- sentence = text.lower().split(" ")

- words = [word2id_dict[word] if word in word2id_dict else word2id_dict['[UNK]'] for word in sentence]

- words = words[:max_seq_len]

- sequence_length = torch.tensor([len(words)], dtype=torch.int64)

- words = torch.tensor(words, dtype=torch.int64).unsqueeze(0)

- # 使用模型进行预测

- logits = runner.predict((words.to(device), sequence_length.to(device)))

- max_label_id = torch.argmax(logits, dim=-1).cpu().numpy()[0]

- pred_label = id2label[max_label_id]

- print("Label: ", pred_label)

-
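The `AveragePooling` layer in lstm.py averages the LSTM outputs only over the real (non-padding) time steps of each sequence. As a minimal sketch of that computation on plain nested lists (the function name `masked_mean` and the toy batch are assumptions for illustration):

```python
def masked_mean(sequence_output, sequence_length):
    """Mean over the first `length` time steps of each sequence.

    sequence_output: list of sequences, each a list of feature vectors
    sequence_length: list with the true (unpadded) length of each sequence
    """
    pooled = []
    for steps, length in zip(sequence_output, sequence_length):
        dim = len(steps[0])
        total = [0.0] * dim
        # Only the first `length` steps are real data; the rest is padding.
        for t in range(length):
            for d in range(dim):
                total[d] += steps[t][d]
        pooled.append([value / length for value in total])
    return pooled


batch = [
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    [[2.0, 2.0], [9.0, 9.0], [9.0, 9.0]],  # the last two steps are padding
]
pooled = masked_mean(batch, [3, 1])
print(pooled)
```

The second sequence has length 1, so its padded steps do not affect the result. Dividing by the true length rather than the padded length is what keeps short reviews from being diluted.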

extend_1.py (you may not need this much output; feel free to disable the figure display yourself)

- import os

- import torch

- import torch.nn as nn

- from torch.utils.data import Dataset

- from utils.data import load_vocab

- from functools import partial

- import time

- import random

- import numpy as np

- from nndl import Accuracy, RunnerV3

-

- device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

-

-

- # 加载数据集

- def load_imdb_data(path):

- assert os.path.exists(path)

- trainset, devset, testset = [], [], []

- with open(os.path.join(path, "train.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- trainset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "dev.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- devset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "test.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- testset.append((sentence, sentence_label))

-

- return trainset, devset, testset

-

-

- # 加载IMDB数据集

- train_data, dev_data, test_data = load_imdb_data("./dataset/")

- # # 打印一下加载后的数据样式

- # print(train_data[4])

-

-

- class IMDBDataset(Dataset):

- def __init__(self, examples, word2id_dict):

- super(IMDBDataset, self).__init__()

- # 词典,用于将单词转为字典索引的数字

- self.word2id_dict = word2id_dict

- # 加载后的数据集

- self.examples = self.words_to_id(examples)

-

- def words_to_id(self, examples):

- tmp_examples = []

- for idx, example in enumerate(examples):

- seq, label = example

- # 将单词映射为字典索引的ID, 对于词典中没有的单词用[UNK]对应的ID进行替代

- seq = [self.word2id_dict.get(word, self.word2id_dict['[UNK]']) for word in seq.split(" ")]

- label = int(label)

- tmp_examples.append([seq, label])

- return tmp_examples

-

- def __getitem__(self, idx):

- seq, label = self.examples[idx]

- return seq, label

-

- def __len__(self):

- return len(self.examples)

-

-

- # 加载词表

- word2id_dict = load_vocab("./dataset/vocab.txt")

-

- # 实例化Dataset

- train_set = IMDBDataset(train_data, word2id_dict)

- dev_set = IMDBDataset(dev_data, word2id_dict)

- test_set = IMDBDataset(test_data, word2id_dict)

-

- print('训练集样本数:', len(train_set))

- print('样本示例:', train_set[4])

-

-

- def collate_fn(batch_data, pad_val=0, max_seq_len=256):

- seqs, seq_lens, labels = [], [], []

- max_len = 0

- for example in batch_data:

- seq, label = example

- # 对数据序列进行截断

- seq = seq[:max_seq_len]

- # 对数据截断并保存于seqs中

- seqs.append(seq)

- seq_lens.append(len(seq))

- labels.append(label)

- # 保存序列最大长度

- max_len = max(max_len, len(seq))

- # 对数据序列进行填充至最大长度

- for i in range(len(seqs)):

- seqs[i] = seqs[i] + [pad_val] * (max_len - len(seqs[i]))

-

- # return (torch.tensor(seqs), torch.tensor(seq_lens)), torch.tensor(labels)

- return (torch.tensor(seqs).to(device), torch.tensor(seq_lens)), torch.tensor(labels).to(device)

-

- max_seq_len = 5

- batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]

- (seqs, seq_lens), labels = collate_fn(batch_data, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- print("seqs: ", seqs)

- print("seq_lens: ", seq_lens)

- print("labels: ", labels)

-

- max_seq_len = 256

- batch_size = 128

- collate_fn = partial(collate_fn, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size,

- shuffle=True, drop_last=False, collate_fn=collate_fn)

- dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

- test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

-

-

- class AveragePooling(nn.Module):

- def __init__(self):

- super(AveragePooling, self).__init__()

-

- def forward(self, sequence_output, sequence_length):

- # Follow the device of the LSTM output instead of hard-coding 'cuda'

- out_device = sequence_output.device

- # sequence_length is a 1-D tensor of true lengths; reshape it to (batch_size, 1)

- sequence_length = sequence_length.unsqueeze(-1).to(device=out_device, dtype=torch.float32)

- # Build a mask from sequence_length that hides the padding positions

- max_len = sequence_output.shape[1]

-

- mask = torch.arange(max_len, device=out_device) < sequence_length

- mask = mask.to(torch.float32).unsqueeze(-1)

- # Zero out the padded part of the sequence

-

- sequence_output = torch.multiply(sequence_output, mask)

- # Average the vectors over the valid time steps

- batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length)

- return batch_mean_hidden

-

-

- class Model_BiLSTM_FC(nn.Module):

- def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):

- super(Model_BiLSTM_FC, self).__init__()

- # 词典大小

- self.num_embeddings = num_embeddings

- # 单词向量的维度

- self.input_size = input_size

- # LSTM隐藏单元数量

- self.hidden_size = hidden_size

- # 情感分类类别数量

- self.num_classes = num_classes

- # 实例化嵌入层

- self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)

- # 实例化LSTM层

- self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True)

- # 实例化聚合层

- self.average_layer = AveragePooling()

- # 实例化输出层

- self.output_layer = nn.Linear(hidden_size, num_classes)

-

- def forward(self, inputs):

- # 对模型输入拆分为序列数据和mask

- input_ids, sequence_length = inputs

- # 获取词向量

- inputs_emb = self.embedding_layer(input_ids)

-

- packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,

- enforce_sorted=False)

- # 使用lstm处理数据

- packed_output, _ = self.lstm_layer(packed_input)

- # 解包输出

- sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

- # 使用聚合层聚合sequence_output

- batch_mean_hidden = self.average_layer(sequence_output, sequence_length)

- # 输出文本分类logits

- logits = self.output_layer(batch_mean_hidden)

- return logits

-

-

-

- np.random.seed(0)

- random.seed(0)

- torch.manual_seed(0)

-

- # 指定训练轮次

- num_epochs = 3

- # 指定学习率

- learning_rate = 0.001

- # 指定embedding的数量为词表长度

- num_embeddings = len(word2id_dict)

- # embedding向量的维度

- input_size = 256

- # LSTM网络隐状态向量的维度

- hidden_size = 256

-

- # 实例化模型

- model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(device)

- # 指定优化器

- optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))

- # 指定损失函数

- loss_fn = nn.CrossEntropyLoss()

- # 指定评估指标

- metric = Accuracy()

- # 实例化Runner

- runner = RunnerV3(model, optimizer, loss_fn, metric)

- # 模型训练

- start_time = time.time()

- runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10, save_path="./checkpoints/best_forward.pdparams")

- end_time = time.time()

- print("time: ", (end_time-start_time))

-

- from nndl import plot_training_loss_acc

-

- # 图像名字

- fig_name = "./images/6.16.pdf"

- # sample_step: 训练损失的采样step,即每隔多少个点选择1个点绘制

- # loss_legend_loc: loss 图像的图例放置位置

- # acc_legend_loc: acc 图像的图例放置位置

- plot_training_loss_acc(runner, fig_name, fig_size=(16,6), sample_step=10, loss_legend_loc="lower left", acc_legend_loc="lower right")

-

-

- model_path = "./checkpoints/best_forward.pdparams"

- runner.load_model(model_path)

- accuracy, _ = runner.evaluate(test_loader)

- print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")

-

-

- id2label={0:"消极情绪", 1:"积极情绪"}

- text = "this movie is so great. I watched it three times already"

- # 处理单条文本

- sentence = text.split(" ")

- words = [word2id_dict[word] if word in word2id_dict else word2id_dict['[UNK]'] for word in sentence]

- words = words[:max_seq_len]

- sequence_length = torch.tensor([len(words)], dtype=torch.int64)

- words = torch.tensor(words, dtype=torch.int64).unsqueeze(0)

- # 使用模型进行预测

- logits = runner.predict((words.to(device), sequence_length.to(device)))

- max_label_id = torch.argmax(logits, dim=-1).cpu().numpy()[0]

- pred_label = id2label[max_label_id]

- print("Label: ", pred_label)

-
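The `collate_fn` used in these scripts truncates every sequence to `max_seq_len` and then pads the batch to the length of its longest sequence. Below is a minimal sketch of just that logic on plain lists, without tensors or device transfers; the name `pad_batch` is made up for this example, and the input is the same toy batch the scripts print.

```python
def pad_batch(batch_data, pad_val=0, max_seq_len=256):
    # Truncate each sequence to at most max_seq_len tokens
    seqs = [seq[:max_seq_len] for seq, _ in batch_data]
    labels = [label for _, label in batch_data]
    # Record the true lengths before padding
    seq_lens = [len(seq) for seq in seqs]
    # Pad every sequence to the longest length in this batch
    max_len = max(seq_lens)
    padded = [seq + [pad_val] * (max_len - len(seq)) for seq in seqs]
    return padded, seq_lens, labels


batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]
padded, seq_lens, labels = pad_batch(batch_data, pad_val=0, max_seq_len=5)
print("seqs:", padded)
print("seq_lens:", seq_lens)
print("labels:", labels)
```

Padding to the batch maximum rather than to a global 256 keeps batches of short reviews small, and the recorded lengths are what later lets the model ignore the padded positions.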

extend2.py

- import torch

- import torch.nn as nn

- import torch.nn.functional as F

- import time

- import random

- import numpy as np

- from nndl import Accuracy, RunnerV3

- from functools import partial

- import os

- from torch.utils.data import Dataset

- from utils.data import load_vocab

-

-

- def load_imdb_data(path):

- assert os.path.exists(path)

- trainset, devset, testset = [], [], []

- with open(os.path.join(path, "train.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- trainset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "dev.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- devset.append((sentence, sentence_label))

-

- with open(os.path.join(path, "test.txt"), "r", encoding='utf-8') as fr:

- for line in fr:

- sentence_label, sentence = line.strip().lower().split("\t", maxsplit=1)

- testset.append((sentence, sentence_label))

-

- return trainset, devset, testset

-

-

- # 加载IMDB数据集

- train_data, dev_data, test_data = load_imdb_data("./dataset/")

- # # 打印一下加载后的数据样式

- # print(train_data[4])

-

-

- class IMDBDataset(Dataset):

- def __init__(self, examples, word2id_dict):

- super(IMDBDataset, self).__init__()

- # 词典,用于将单词转为字典索引的数字

- self.word2id_dict = word2id_dict

- # 加载后的数据集

- self.examples = self.words_to_id(examples)

-

- def words_to_id(self, examples):

- tmp_examples = []

- for idx, example in enumerate(examples):

- seq, label = example

- # 将单词映射为字典索引的ID, 对于词典中没有的单词用[UNK]对应的ID进行替代

- seq = [self.word2id_dict.get(word, self.word2id_dict['[UNK]']) for word in seq.split(" ")]

- label = int(label)

- tmp_examples.append([seq, label])

- return tmp_examples

-

- def __getitem__(self, idx):

- seq, label = self.examples[idx]

- return seq, label

-

- def __len__(self):

- return len(self.examples)

-

-

- # 加载词表

- word2id_dict = load_vocab("./dataset/vocab.txt")

-

- # 实例化Dataset

- train_set = IMDBDataset(train_data, word2id_dict)

- dev_set = IMDBDataset(dev_data, word2id_dict)

- test_set = IMDBDataset(test_data, word2id_dict)

-

- print('训练集样本数:', len(train_set))

- print('样本示例:', train_set[4])

-

-

- def collate_fn(batch_data, pad_val=0, max_seq_len=256):

- seqs, seq_lens, labels = [], [], []

- max_len = 0

- for example in batch_data:

- seq, label = example

- # 对数据序列进行截断

- seq = seq[:max_seq_len]

- # 对数据截断并保存于seqs中

- seqs.append(seq)

- seq_lens.append(len(seq))

- labels.append(label)

- # 保存序列最大长度

- max_len = max(max_len, len(seq))

- # 对数据序列进行填充至最大长度

- for i in range(len(seqs)):

- seqs[i] = seqs[i] + [pad_val] * (max_len - len(seqs[i]))

-

- # return (torch.tensor(seqs), torch.tensor(seq_lens)), torch.tensor(labels)

- return (torch.tensor(seqs).to('cuda'), torch.tensor(seq_lens)), torch.tensor(labels).to('cuda')

-

- max_seq_len = 5

- batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]

- (seqs, seq_lens), labels = collate_fn(batch_data, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- print("seqs: ", seqs)

- print("seq_lens: ", seq_lens)

- print("labels: ", labels)

-

- max_seq_len = 256

- batch_size = 128

- collate_fn = partial(collate_fn, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)

- train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size,

- shuffle=True, drop_last=False, collate_fn=collate_fn)

- dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

- test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size,

- shuffle=False, drop_last=False, collate_fn=collate_fn)

-

- class LSTM(nn.Module):

- def __init__(self, input_size, hidden_size, Wi_attr=None, Wf_attr=None, Wo_attr=None, Wc_attr=None,

- Ui_attr=None, Uf_attr=None, Uo_attr=None, Uc_attr=None, bi_attr=None, bf_attr=None,

- bo_attr=None, bc_attr=None):

- super(LSTM, self).__init__()

- self.input_size = input_size

- self.hidden_size = hidden_size

-

- # 初始化模型参数

- if Wi_attr is None:

- Wi = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wi = torch.tensor(Wi_attr, dtype=torch.float32)

- self.W_i = torch.nn.Parameter(Wi.to('cuda'))

-

- if Wf_attr is None:

- Wf = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wf = torch.tensor(Wf_attr, dtype=torch.float32)

- self.W_f = torch.nn.Parameter(Wf.to('cuda'))

-

- if Wo_attr is None:

- Wo = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wo = torch.tensor(Wo_attr, dtype=torch.float32)

- self.W_o = torch.nn.Parameter(Wo.to('cuda'))

-

- if Wc_attr is None:

- Wc = torch.zeros(size=[input_size, hidden_size], dtype=torch.float32)

- else:

- Wc = torch.tensor(Wc_attr, dtype=torch.float32)

- self.W_c = torch.nn.Parameter(Wc.to('cuda'))

-

- if Ui_attr is None:

- Ui = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Ui = torch.tensor(Ui_attr, dtype=torch.float32)

- self.U_i = torch.nn.Parameter(Ui.to('cuda'))

-

- if Uf_attr is None:

- Uf = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Uf = torch.tensor(Uf_attr, dtype=torch.float32)

- self.U_f = torch.nn.Parameter(Uf.to('cuda'))

-

- if Uo_attr is None:

- Uo = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Uo = torch.tensor(Uo_attr, dtype=torch.float32)

- self.U_o = torch.nn.Parameter(Uo.to('cuda'))

-

- if Uc_attr is None:

- Uc = torch.zeros(size=[hidden_size, hidden_size], dtype=torch.float32)

- else:

- Uc = torch.tensor(Uc_attr, dtype=torch.float32)

- self.U_c = torch.nn.Parameter(Uc.to('cuda'))

-

- if bi_attr is None:

- bi = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bi = torch.tensor(bi_attr, dtype=torch.float32)

- self.b_i = torch.nn.Parameter(bi.to('cuda'))

-

- if bf_attr is None:

- bf = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bf = torch.tensor(bf_attr, dtype=torch.float32)

- self.b_f = torch.nn.Parameter(bf.to('cuda'))

-

- if bo_attr is None:

- bo = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bo = torch.tensor(bo_attr, dtype=torch.float32)

- self.b_o = torch.nn.Parameter(bo.to('cuda'))

-

- if bc_attr is None:

- bc = torch.zeros(size=[1, hidden_size], dtype=torch.float32)

- else:

- bc = torch.tensor(bc_attr, dtype=torch.float32)

- self.b_c = torch.nn.Parameter(bc.to('cuda'))

-

- # 初始化状态向量和隐状态向量

- def init_state(self, batch_size):

- hidden_state = torch.zeros(size=[batch_size, self.hidden_size], dtype=torch.float32).to('cuda')

- cell_state = torch.zeros(size=[batch_size, self.hidden_size], dtype=torch.float32).to('cuda')

- return hidden_state, cell_state

-

- # 定义前向计算

- def forward(self, inputs, states=None):

- # inputs: input tensor of shape batch_size x seq_len x input_size

- batch_size, seq_len, input_size = inputs.shape

-

- # 初始化起始的单元状态和隐状态向量,其shape为batch_size x hidden_size

- if states is None:

- states = self.init_state(batch_size)

- hidden_state, cell_state = states

-

- # 执行LSTM计算,包括:输入门、遗忘门和输出门、候选内部状态、内部状态和隐状态向量

- for step in range(seq_len):

- # 获取当前时刻的输入数据step_input: 其shape为batch_size x input_size

- step_input = inputs[:, step, :]

- # Input, forget and output gates, each of shape batch_size x hidden_size

- I_gate = torch.sigmoid(torch.matmul(step_input, self.W_i) + torch.matmul(hidden_state, self.U_i) + self.b_i)

- F_gate = torch.sigmoid(torch.matmul(step_input, self.W_f) + torch.matmul(hidden_state, self.U_f) + self.b_f)

- O_gate = torch.sigmoid(torch.matmul(step_input, self.W_o) + torch.matmul(hidden_state, self.U_o) + self.b_o)

- # Candidate state vector, of shape batch_size x hidden_size

- C_tilde = torch.tanh(torch.matmul(step_input, self.W_c) + torch.matmul(hidden_state, self.U_c) + self.b_c)

- # Cell state vector, of shape batch_size x hidden_size

- cell_state = F_gate * cell_state + I_gate * C_tilde

- # Hidden state vector, of shape batch_size x hidden_size

- hidden_state = O_gate * torch.tanh(cell_state)

-

- return hidden_state

-

-

- class AveragePooling(nn.Module):

- def __init__(self):

- super(AveragePooling, self).__init__()

-

- def forward(self, sequence_output, sequence_length):

- # Follow the device of the LSTM output instead of hard-coding 'cuda'

- out_device = sequence_output.device

- # sequence_length is a 1-D tensor of true lengths; reshape it to (batch_size, 1)

- sequence_length = sequence_length.unsqueeze(-1).to(device=out_device, dtype=torch.float32)

- # Build a mask from sequence_length that hides the padding positions

- max_len = sequence_output.shape[1]

-

- mask = torch.arange(max_len, device=out_device) < sequence_length

- mask = mask.to(torch.float32).unsqueeze(-1)

- # Zero out the padded part of the sequence

-

- sequence_output = torch.multiply(sequence_output, mask)

- # Average the vectors over the valid time steps

- batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length)

- return batch_mean_hidden

-

-

- class Model_BiLSTM_FC(nn.Module):

- def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):

- super(Model_BiLSTM_FC, self).__init__()

- # Vocabulary size

- self.num_embeddings = num_embeddings

- # Dimension of the word vectors

- self.input_size = input_size

- # Number of LSTM hidden units

- self.hidden_size = hidden_size

- # Number of sentiment classes

- self.num_classes = num_classes

- # Instantiate the embedding layer

- self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)

- # Instantiate the LSTM layer

- self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True, bidirectional=True)

- # Instantiate the pooling layer

- self.average_layer = AveragePooling()

- # Instantiate the output layer

- self.output_layer = nn.Linear(hidden_size * 2, num_classes)

-

- def forward(self, inputs):

- # Split the model inputs into the token ID sequences and their lengths

- input_ids, sequence_length = inputs

- # Look up the word vectors

- inputs_emb = self.embedding_layer(input_ids)

-

- packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,

- enforce_sorted=False)

- # Run the LSTM over the packed sequence

- packed_output, _ = self.lstm_layer(packed_input)

- # Unpack the output

- sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

- # Pool sequence_output with the averaging layer

- batch_mean_hidden = self.average_layer(sequence_output, sequence_length)

- # Output the text classification logits

- logits = self.output_layer(batch_mean_hidden)

- return logits

-

-

- np.random.seed(0)

- random.seed(0)

- torch.manual_seed(0)

-

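- # Number of training epochs

- num_epochs = 3

- # Learning rate

- learning_rate = 0.001

- # Number of embeddings, set to the vocabulary size

- num_embeddings = len(word2id_dict)

- # Dimension of the embedding vectors

- input_size = 256

- # Dimension of the LSTM hidden state vector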

- hidden_size = 256

-

- # Instantiate the model

- model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(torch.device("cuda" if torch.cuda.is_available() else "cpu"))

- # Optimizer

- optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))

- # Loss function

- loss_fn = nn.CrossEntropyLoss()

- # Evaluation metric

- metric = Accuracy()

- # Instantiate the Runner

- runner = RunnerV3(model, optimizer, loss_fn, metric)

- # Train the model

- start_time = time.time()

- runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10, save_path="./checkpoints/best_self_forward.pdparams")

- end_time = time.time()

- print("time: ", (end_time-start_time))

-

-

- model_path = "./checkpoints/best_self_forward.pdparams"

- runner.load_model(model_path)

- accuracy, _ = runner.evaluate(test_loader)

- print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")
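The masked averaging performed by `AveragePooling` can be checked in isolation on a toy batch. The following is a minimal sketch, not the code used in the experiment: `masked_mean` is a hypothetical standalone helper, and it assumes `sequence_length` holds the true (unpadded) length of each sequence. It takes the device from the input tensor, so it runs on CPU or GPU.

```python
import torch


def masked_mean(sequence_output: torch.Tensor, sequence_length: torch.Tensor) -> torch.Tensor:
    """Average the hidden vectors of each sequence, ignoring padded positions.

    sequence_output: [batch_size, max_len, hidden_size]
    sequence_length: [batch_size], true length of each sequence (assumed >= 1)
    """
    device = sequence_output.device
    max_len = sequence_output.shape[1]
    lengths = sequence_length.to(device).unsqueeze(-1)   # [batch_size, 1]
    positions = torch.arange(max_len, device=device)     # [max_len]
    # True for real tokens, False for padding: [batch_size, max_len, 1]
    mask = (positions < lengths).unsqueeze(-1)
    masked = sequence_output * mask.to(sequence_output.dtype)
    # Sum over time, then divide by the true length rather than max_len
    return masked.sum(dim=1) / lengths.to(sequence_output.dtype)


# Toy batch: 2 sequences, max_len 3, hidden_size 2.
# The second sequence has true length 1; its last two steps are padding.
x = torch.tensor([[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
                  [[2.0, 4.0], [9.0, 9.0], [9.0, 9.0]]])
lengths = torch.tensor([3, 1])
pooled = masked_mean(x, lengths)
print(pooled)  # rows: [3., 4.] and [2., 4.]
```

The padded values (the 9s) do not affect the second row, because they are zeroed by the mask and the sum is divided by the true length 1 instead of 3.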