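Zero-cost futures in Rust

The phrase “zero-cost abstraction” may not mean much on its own. Basically, it means that Rust can do a lot of work at compile time. If you want to use high-level abstractions, or generics in your code, we want that to be a compile-time cost. We don’t want it to slow the program down at runtime. That is what zero-cost abstraction means.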

One of the key gaps in Rust’s ecosystem has been a strong story for fast and productive asynchronous I/O. We have solid foundations, like the mio library, but they’re very low level: you have to wire up state machines and juggle callbacks directly.

We’ve wanted something higher level, with better ergonomics, but also better composability, supporting an ecosystem of asynchronous abstractions that all work together. This story might sound familiar: it’s the same goal that’s led to the introduction of futures (aka promises) in many languages, with some supporting async/await sugar on top.

A major tenet of Rust is the ability to build zero-cost abstractions, and that leads to one additional goal for our async I/O story: ideally, an abstraction like futures should compile down to something equivalent to the state-machine-and-callback-juggling code we’re writing today (with no additional runtime overhead).

Over the past couple of months, Alex Crichton and I have developed a zero-cost futures library for Rust, one that we believe achieves these goals. (Thanks to Carl Lerche, Yehuda Katz, and Nicholas Matsakis for insights along the way.)

Today, we’re excited to kick off a blog series about the new library. This post gives the highlights, a few key ideas, and some preliminary benchmarks. Follow-up posts will showcase how Rust’s features come together in the design of this zero-cost abstraction. And there’s already a tutorial to get you going.

Why async I/O?

Before delving into futures, it’ll be helpful to talk a bit about the past.

Let’s start with a simple piece of I/O you might want to perform: reading a certain number of bytes from a socket. Rust provides a function, read_exact, to do this:

```rust
// reads 256 bytes into `my_vec`
socket.read_exact(&mut my_vec[..256]);
```

Quick quiz: what happens if we haven’t received enough bytes from the socket yet?

In today’s Rust, the answer is that the current thread blocks, sleeping until more bytes are available. But that wasn’t always the case.
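
To make the blocking behaviour concrete, here is a minimal sketch using only the standard library. The helper name `read_n` is an assumption for illustration; it accepts any `Read` source, so an in-memory byte slice can stand in for the socket. One difference is worth noting: a socket with too few bytes makes the thread wait, whereas a finite in-memory source reports an error instead.

```rust
use std::io::{self, Read};

/// Reads exactly `n` bytes from any `Read` source into a fresh buffer.
/// (`read_n` is a hypothetical helper, not part of the standard library.)
fn read_n<R: Read>(source: &mut R, n: usize) -> io::Result<Vec<u8>> {
    let mut buf = vec![0u8; n];
    // On a blocking socket this call sleeps until `n` bytes have arrived.
    // On a finite in-memory source it fails with `UnexpectedEof` instead.
    source.read_exact(&mut buf)?;
    Ok(buf)
}
```

Calling `read_n` on a slice holding `hello world` with `n = 5` returns the bytes of `hello`; asking for more bytes than the slice holds returns an `UnexpectedEof` error rather than waiting.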

Early on, Rust had a “green threading” model, not unlike Go’s. You could spin up a large number of lightweight tasks, which were then scheduled onto real OS threads (sometimes called “M:N threading”). In the green threading model, a function like read_exact blocks the current task, but not the underlying OS thread; instead, the task scheduler switches to another task. That’s great, because you can scale up to a very large number of tasks, most of which are blocked, while using only a small number of OS threads.

The problem is that green threads were at odds with Rust’s ambitions to be a true C replacement, with no imposed runtime system or FFI costs: we were unable to find an implementation strategy that didn’t impose serious global costs. You can read more in the RFC that removed green threading.

So if we want to handle a large number of simultaneous connections, many of which are waiting for I/O, but we want to keep the number of OS threads to a minimum, what else can we do?

Asynchronous I/O is the answer – and in fact, it’s used to implement green threading as well.

In a nutshell, with async I/O you can attempt an I/O operation without blocking. If it can’t complete immediately, you can retry at some later point. To make this work, the OS provides tools like epoll, allowing you to query which of a large set of I/O objects are ready for reading or writing – which is essentially the API that mio provides.
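
The attempt-and-retry pattern can be sketched without a real socket. Everything named here (`SimulatedSocket`, `try_read`, `read_when_ready`) is hypothetical; the only standard-library pieces are the `Read` trait and `ErrorKind::WouldBlock`, the error a non-blocking source returns when it has nothing to offer yet.

```rust
use std::io::{self, ErrorKind, Read};

/// Hypothetical stand-in for a non-blocking socket: it reports `WouldBlock`
/// for the first `not_ready` attempts, then serves bytes from `data`.
struct SimulatedSocket {
    not_ready: u32,
    data: Vec<u8>,
}

impl Read for SimulatedSocket {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        if self.not_ready > 0 {
            self.not_ready -= 1;
            return Err(ErrorKind::WouldBlock.into());
        }
        let n = buf.len().min(self.data.len());
        buf[..n].copy_from_slice(&self.data[..n]);
        self.data.drain(..n);
        Ok(n)
    }
}

/// Attempts a read without blocking. `Ok(None)` means "not ready, retry later".
fn try_read<R: Read>(source: &mut R, buf: &mut [u8]) -> io::Result<Option<usize>> {
    match source.read(buf) {
        Ok(n) => Ok(Some(n)),
        Err(e) if e.kind() == ErrorKind::WouldBlock => Ok(None),
        Err(e) => Err(e),
    }
}

/// Retries until data arrives, returning the number of attempts that were
/// not ready and the number of bytes read. A real event loop would wait on
/// epoll (or mio) between attempts instead of spinning like this.
fn read_when_ready<R: Read>(source: &mut R, buf: &mut [u8]) -> io::Result<(u32, usize)> {
    let mut misses = 0;
    loop {
        match try_read(source, buf)? {
            Some(n) => return Ok((misses, n)),
            None => misses += 1,
        }
    }
}
```

The busy loop in `read_when_ready` is only there to keep the sketch self-contained. The point of a readiness API is precisely to avoid spinning and to be told when a retry is worthwhile.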

The problem is that there’s a lot of painful work tracking all of the I/O events you’re interested in, and dispatching those to the right callbacks (not to mention programming in a purely callback-driven way). That’s one of the key problems that futures solve.

Futures

So what is a future?

In essence, a future represents a value that might not be ready yet. Usually, the future becomes complete (the value is ready) due to an event happening somewhere else. While we’ve been looking at this from the perspective of basic I/O, you can use a future to represent a wide range of events, e.g.:

- A database query that’s executing in a thread pool. When the query finishes, the future is completed, and its value is the result of the query.

- An RPC invocation to a server. When the server replies, the future is completed, and its value is the server’s response.

- A timeout. When time is up, the future is completed, and its value is just () (the “unit” value in Rust).

- A long-running CPU-intensive task, running on a thread pool. When the task finishes, the future is completed, and its value is the return value of the task.

- Reading bytes from a socket. When the bytes are ready, the future is completed – and depending on the buffering strategy, the bytes might be returned directly, or written as a side-effect into some existing buffer.

And so on. The point is that futures are applicable to asynchronous events of all shapes and sizes. The asynchrony is reflected in the fact that you get a future right away, without blocking, even though the value the future represents will become ready only at some unknown time in the… future.

In Rust, we represent futures as a trait (i.e., an interface), roughly:

```rust
trait Future {
    type Item;
    // ... lots more elided ...
}
```

The Item type says what kind of value the future will yield once it’s complete.
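
Since most of the trait is elided above, it may help to see one possible shape for a future that can be asked whether it is done. This is a simplified sketch and not the library’s actual definition: `SimpleFuture`, `Countdown`, and `run` are hypothetical names, and the only borrowed piece is the standard library’s `Poll` enum.

```rust
use std::task::Poll;

/// A deliberately simplified future: hypothetical, not the library's trait.
trait SimpleFuture {
    type Item;
    /// Makes progress if possible; `Poll::Pending` means "ask again later".
    fn poll(&mut self) -> Poll<Self::Item>;
}

/// A future that becomes ready after `remaining` more polls, standing in
/// for an external event such as a timeout firing.
struct Countdown {
    remaining: u32,
    value: &'static str,
}

impl SimpleFuture for Countdown {
    type Item = &'static str;

    fn poll(&mut self) -> Poll<Self::Item> {
        if self.remaining == 0 {
            Poll::Ready(self.value)
        } else {
            self.remaining -= 1;
            Poll::Pending
        }
    }
}

/// Drives a future to completion by polling in a loop, returning the value
/// and the number of polls that reported `Pending`.
fn run<F: SimpleFuture>(mut future: F) -> (F::Item, u32) {
    let mut pending = 0;
    loop {
        match future.poll() {
            Poll::Ready(item) => return (item, pending),
            Poll::Pending => pending += 1,
        }
    }
}
```

Creating a `Countdown` returns immediately, which mirrors the non-blocking property described in the post; the value only appears once something polls it often enough.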

Going back to our earlier list of examples, we can write several functions producing different futures (using impl syntax):

```rust
// Lookup a row in a table by the given id, yielding the row when finished
fn get_row(id: i32) -> impl Future<Item = Row>;

// Makes an RPC call that will yield an i32
fn id_rpc(server: &RpcServer) -> impl Future<Item = i32>;

// Writes an entire string to a TcpStream, yielding back the stream when finished
fn write_string(socket: TcpStream, data: String) -> impl Future<Item = TcpStream>;
```

All of these functions will return their future immediately, whether or not the event the future represents is complete; the functions are non-blocking.

Things really start getting interesting with futures when you combine them. There are endless ways of doing so, e.g.:

- Sequential composition: f.and_then(|val| some_new_future(val)). Gives you a future that executes the future f, takes the val it produces to build another future some_new_future(val), and then executes that future.

- Mapping: f.map(|val| some_new_value(val)). Gives you a future that executes the future f and yields the result of some_new_value(val).

- Joining: f.join(g). Gives you a future that executes the futures f and g in parallel, and completes when both of them are complete, returning both of their values.

- Selecting: f.select(g). Gives you a future that executes the futures f and g in parallel, and completes when one of them is complete, returning its value and the other future. (Want to add a timeout to any future? Just do a select of that future and a timeout future!)
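
To give a feel for how such combinators can be built, here is a sketch of mapping and sequential composition over a simplified, poll-based trait. All names (`SimpleFuture`, `Map`, `AndThen`, `Delayed`, `run`) are hypothetical and the code is not taken from the library; it only illustrates that each combinator can be an ordinary struct or enum wrapping the previous future.

```rust
use std::task::Poll;

/// Hypothetical, simplified future trait (not the library's definition).
trait SimpleFuture: Sized {
    type Item;
    fn poll(&mut self) -> Poll<Self::Item>;

    /// Mapping: transform the eventual value with a plain function.
    fn map<U, G: FnOnce(Self::Item) -> U>(self, f: G) -> Map<Self, G> {
        Map { future: self, f: Some(f) }
    }

    /// Sequential composition: run `self`, then the future built from its value.
    fn and_then<B: SimpleFuture, G: FnOnce(Self::Item) -> B>(self, f: G) -> AndThen<Self, B, G> {
        AndThen::First(self, Some(f))
    }
}

struct Map<A, G> {
    future: A,
    f: Option<G>,
}

impl<A: SimpleFuture, U, G: FnOnce(A::Item) -> U> SimpleFuture for Map<A, G> {
    type Item = U;

    fn poll(&mut self) -> Poll<U> {
        match self.future.poll() {
            Poll::Ready(value) => {
                let f = self.f.take().expect("polled after completion");
                Poll::Ready(f(value))
            }
            Poll::Pending => Poll::Pending,
        }
    }
}

/// One variant per stage: the chain is a plain enum, with no boxing.
enum AndThen<A, B, G> {
    First(A, Option<G>),
    Second(B),
}

impl<A: SimpleFuture, B: SimpleFuture, G: FnOnce(A::Item) -> B> SimpleFuture for AndThen<A, B, G> {
    type Item = B::Item;

    fn poll(&mut self) -> Poll<B::Item> {
        loop {
            let next = match self {
                AndThen::First(first, f) => match first.poll() {
                    Poll::Ready(value) => (f.take().expect("polled after completion"))(value),
                    Poll::Pending => return Poll::Pending,
                },
                AndThen::Second(second) => return second.poll(),
            };
            // The first stage finished: switch to the second and poll again.
            *self = AndThen::Second(next);
        }
    }
}

/// Leaf future: reports `Pending` `delay` times, then yields `value`.
struct Delayed<T> {
    delay: u32,
    value: Option<T>,
}

impl<T> SimpleFuture for Delayed<T> {
    type Item = T;

    fn poll(&mut self) -> Poll<T> {
        if self.delay > 0 {
            self.delay -= 1;
            return Poll::Pending;
        }
        Poll::Ready(self.value.take().expect("polled after completion"))
    }
}

/// Polls in a loop until the future completes.
fn run<F: SimpleFuture>(mut future: F) -> F::Item {
    loop {
        if let Poll::Ready(value) = future.poll() {
            return value;
        }
    }
}
```

Chaining `and_then` and `map` on a `Delayed` produces a single nested value whose type records every stage, which is the property the zero-cost discussion later in the post relies on.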

As a simple example using the futures above, we might write something like:

```rust
id_rpc(&my_server).and_then(|id| {
    get_row(id)
}).map(|row| {
    json::encode(row)
}).and_then(|encoded| {
    write_string(my_socket, encoded)
})
```

See this code for a more fleshed out example.

This is non-blocking code that moves through several states: first we do an RPC call to acquire an ID; then we look up the corresponding row; then we encode it to json; then we write it to a socket. Under the hood, this code will compile down to an actual state machine which progresses via callbacks (with no overhead), but we get to write it in a style that’s not far from simple blocking code. (Rustaceans will note that this story is very similar to Iterator in the standard library.) Ergonomic, high-level code that compiles to state-machine-and-callbacks: that’s what we were after!
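
For comparison, this is roughly what a hand-written state machine for the same four steps could look like. It is a sketch under stated assumptions: `Op` is a hypothetical stand-in for an asynchronous operation, the free functions only mimic the names used in the post, and the machine is driven by polling rather than by real I/O callbacks. The code the library actually generates is not shown in the post.

```rust
use std::task::Poll;

/// Hypothetical stand-in for an asynchronous operation: it reports
/// `Pending` for `polls_left` polls, then yields `result`.
struct Op<T> {
    polls_left: u32,
    result: Option<T>,
}

impl<T> Op<T> {
    fn new(polls_left: u32, result: T) -> Self {
        Op { polls_left, result: Some(result) }
    }

    fn poll(&mut self) -> Poll<T> {
        if self.polls_left > 0 {
            self.polls_left -= 1;
            return Poll::Pending;
        }
        Poll::Ready(self.result.take().expect("polled after completion"))
    }
}

struct Row {
    id: i32,
}

// Hypothetical stand-ins for the operations named in the post.
fn id_rpc() -> Op<i32> { Op::new(1, 7) }
fn get_row(id: i32) -> Op<Row> { Op::new(2, Row { id }) }
fn encode(row: Row) -> String { format!("{{\"id\":{}}}", row.id) }
fn write_string(data: String) -> Op<Vec<u8>> { Op::new(1, data.into_bytes()) }

/// One variant per point at which the pipeline can be waiting.
enum Pipeline {
    AwaitingId(Op<i32>),
    AwaitingRow(Op<Row>),
    Writing(Op<Vec<u8>>),
    Done(Option<Vec<u8>>),
}

impl Pipeline {
    fn start() -> Self {
        Pipeline::AwaitingId(id_rpc())
    }

    /// Called whenever an event arrives; advances as far as it can.
    fn poll(&mut self) -> Poll<Vec<u8>> {
        loop {
            let next = match self {
                Pipeline::AwaitingId(rpc) => match rpc.poll() {
                    Poll::Ready(id) => Pipeline::AwaitingRow(get_row(id)),
                    Poll::Pending => return Poll::Pending,
                },
                Pipeline::AwaitingRow(query) => match query.poll() {
                    Poll::Ready(row) => Pipeline::Writing(write_string(encode(row))),
                    Poll::Pending => return Poll::Pending,
                },
                Pipeline::Writing(write) => match write.poll() {
                    Poll::Ready(bytes) => Pipeline::Done(Some(bytes)),
                    Poll::Pending => return Poll::Pending,
                },
                Pipeline::Done(bytes) => {
                    return Poll::Ready(bytes.take().expect("polled after completion"))
                }
            };
            *self = next;
        }
    }
}

/// Drives the pipeline, returning the written bytes and the number of
/// polls that found it still waiting.
fn run_pipeline() -> (Vec<u8>, u32) {
    let mut pipeline = Pipeline::start();
    let mut waits = 0;
    loop {
        match pipeline.poll() {
            Poll::Ready(bytes) => return (bytes, waits),
            Poll::Pending => waits += 1,
        }
    }
}
```

Writing this by hand means naming every intermediate state and every transition. The combinator chain expresses the same sequence in a handful of lines, which is the ergonomic gain the paragraph above describes.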

It’s also worth considering that each of the futures being used here might come from a different library. The futures abstraction allows them to all be combined seamlessly together.

Streams

But wait – there’s more! As you keep pushing on the future “combinators”, you’re able to not just reach parity with simple blocking code, but to do things that can be tricky or painful to write otherwise. To see an example, we’ll need one more concept: streams.

Futures are all about a single value that will eventually be produced, but many event sources naturally produce a stream of values over time. For example, incoming TCP connections or incoming requests on a socket are both naturally streams.

The futures library includes a Stream trait as well, which is very similar to futures, but set up to produce a sequence of values over time. It has a set of combinators, some of which work with futures. For example, if s is a stream, you can write:

```rust
s.and_then(|val| some_future(val))
```

This code will give you a new stream that works by first pulling a value val from s, then computing some_future(val) from it, then executing that future and yielding its value – then doing it all over again to produce the next value in the stream.
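
The pull, compute, execute, yield cycle can be sketched with two simplified traits. This is an illustration only: `SimpleFuture`, `SimpleStream`, the free function `and_then`, and the leaf types are all hypothetical, and the library’s own Stream trait may differ in its details.

```rust
use std::task::Poll;

// Hypothetical, simplified traits (not the library's definitions).
trait SimpleFuture {
    type Item;
    fn poll(&mut self) -> Poll<Self::Item>;
}

trait SimpleStream {
    type Item;
    /// `Ready(None)` signals that the stream has ended.
    fn poll_next(&mut self) -> Poll<Option<Self::Item>>;
}

/// Stream adapter: turns each item into a future and yields its result.
struct AndThen<S, F, G> {
    stream: S,
    make_future: G,
    in_flight: Option<F>,
}

fn and_then<S, F, G>(stream: S, make_future: G) -> AndThen<S, F, G>
where
    S: SimpleStream,
    F: SimpleFuture,
    G: FnMut(S::Item) -> F,
{
    AndThen { stream, make_future, in_flight: None }
}

impl<S, F, G> SimpleStream for AndThen<S, F, G>
where
    S: SimpleStream,
    F: SimpleFuture,
    G: FnMut(S::Item) -> F,
{
    type Item = F::Item;

    fn poll_next(&mut self) -> Poll<Option<F::Item>> {
        loop {
            // Finish the future for the current item before pulling another.
            if let Some(mut future) = self.in_flight.take() {
                match future.poll() {
                    Poll::Ready(value) => return Poll::Ready(Some(value)),
                    Poll::Pending => {
                        self.in_flight = Some(future);
                        return Poll::Pending;
                    }
                }
            }
            match self.stream.poll_next() {
                Poll::Ready(Some(item)) => self.in_flight = Some((self.make_future)(item)),
                Poll::Ready(None) => return Poll::Ready(None),
                Poll::Pending => return Poll::Pending,
            }
        }
    }
}

/// Leaf stream over an iterator; every item is immediately available.
struct IterStream<I>(I);

impl<I: Iterator> SimpleStream for IterStream<I> {
    type Item = I::Item;
    fn poll_next(&mut self) -> Poll<Option<I::Item>> {
        Poll::Ready(self.0.next())
    }
}

/// Leaf future: reports `Pending` `delay` times, then yields `value`.
struct Delayed<T> {
    delay: u32,
    value: Option<T>,
}

impl<T> SimpleFuture for Delayed<T> {
    type Item = T;
    fn poll(&mut self) -> Poll<T> {
        if self.delay > 0 {
            self.delay -= 1;
            return Poll::Pending;
        }
        Poll::Ready(self.value.take().expect("polled after completion"))
    }
}

/// Polls a stream until it ends, collecting every item it yields.
fn collect<S: SimpleStream>(mut stream: S) -> Vec<S::Item> {
    let mut items = Vec::new();
    loop {
        match stream.poll_next() {
            Poll::Ready(Some(item)) => items.push(item),
            Poll::Ready(None) => return items,
            Poll::Pending => {}
        }
    }
}
```

Note that this adapter keeps at most one future in flight, so items are processed strictly one after another.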

Let’s see a real example:

```rust
// Given an `input` I/O object create a stream of requests
let requests = ParseStream::new(input);

// For each request, run our service's `process` function to handle the request
// and generate a response
let responses = requests.and_then(|req| service.process(req));

// Create a new future that'll write out each response to an `output` I/O object
StreamWriter::new(responses, output)
```

Here, we’ve written the core of a simple server by operating on streams. It’s not rocket science, but it is a bit exciting to be manipulating values like responses that represent the entirety of what the server is producing.

Let’s make things more interesting. Assume the protocol is pipelined, i.e., that the client can send additional requests on the socket before hearing back from the ones being processed. We want to actually process the requests sequentially, but there’s an opportunity for some parallelism here: we could read and parse a few requests ahead, while the current request is being processed. Doing so is as easy as inserting one more combinator in the right place:

```rust
let requests = ParseStream::new(input);
let responses = requests.map(|req| service.process(req)).buffered(32); // <--
StreamWriter::new(responses, output)
```

The buffered combinator takes a stream of futures and buffers it by some fixed amount. Buffering the stream means that it will eagerly pull out more than the requested number of items, and stash the resulting futures in a buffer for later processing. In this case, that means that we will read and parse up to 32 extra requests in parallel, while running process on the current one.
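
One way to picture buffering is a queue of in-flight futures that are all polled, with results handed out strictly in arrival order. The sketch below follows that idea under simplified, hypothetical traits; `Buffered`, `Slot`, `IterStream`, `Delayed`, and `drain` are illustrative names, and this is not the library’s implementation.

```rust
use std::collections::VecDeque;
use std::task::Poll;

// Hypothetical, simplified traits (not the library's definitions).
trait SimpleFuture {
    type Item;
    fn poll(&mut self) -> Poll<Self::Item>;
}
trait SimpleStream {
    type Item;
    fn poll_next(&mut self) -> Poll<Option<Self::Item>>;
}

enum Slot<F: SimpleFuture> {
    Running(F),
    Finished(F::Item),
}

/// Keeps up to `limit` futures from `source` in flight at once.
struct Buffered<S: SimpleStream> where S::Item: SimpleFuture {
    source: S,
    slots: VecDeque<Slot<S::Item>>,
    limit: usize,
    source_done: bool,
}

impl<S: SimpleStream> SimpleStream for Buffered<S> where S::Item: SimpleFuture {
    type Item = <S::Item as SimpleFuture>::Item;

    fn poll_next(&mut self) -> Poll<Option<Self::Item>> {
        // 1. Eagerly pull futures from the source until the buffer is full.
        while !self.source_done && self.slots.len() < self.limit {
            match self.source.poll_next() {
                Poll::Ready(Some(future)) => self.slots.push_back(Slot::Running(future)),
                Poll::Ready(None) => self.source_done = true,
                Poll::Pending => break,
            }
        }
        // 2. Let every buffered future make progress, not just the first.
        for slot in self.slots.iter_mut() {
            let polled = match slot {
                Slot::Running(future) => future.poll(),
                Slot::Finished(_) => Poll::Pending,
            };
            if let Poll::Ready(value) = polled {
                *slot = Slot::Finished(value);
            }
        }
        // 3. Yield results in arrival order, from the front of the buffer.
        if matches!(self.slots.front(), Some(Slot::Finished(_))) {
            if let Some(Slot::Finished(value)) = self.slots.pop_front() {
                return Poll::Ready(Some(value));
            }
        }
        if self.slots.is_empty() && self.source_done {
            Poll::Ready(None)
        } else {
            Poll::Pending
        }
    }
}

/// Leaf stream over an iterator; every item is immediately available.
struct IterStream<I>(I);
impl<I: Iterator> SimpleStream for IterStream<I> {
    type Item = I::Item;
    fn poll_next(&mut self) -> Poll<Option<I::Item>> {
        Poll::Ready(self.0.next())
    }
}

/// Leaf future: reports `Pending` `delay` times, then yields `value`.
struct Delayed {
    delay: u32,
    value: char,
}
impl SimpleFuture for Delayed {
    type Item = char;
    fn poll(&mut self) -> Poll<char> {
        if self.delay > 0 {
            self.delay -= 1;
            return Poll::Pending;
        }
        Poll::Ready(self.value)
    }
}

/// Drains a stream, counting the polls that found nothing ready.
fn drain<S: SimpleStream>(mut stream: S) -> (Vec<S::Item>, u32) {
    let (mut items, mut waits) = (Vec::new(), 0);
    loop {
        match stream.poll_next() {
            Poll::Ready(Some(item)) => items.push(item),
            Poll::Ready(None) => return (items, waits),
            Poll::Pending => waits += 1,
        }
    }
}
```

With three futures needing 3, 0, and 1 polls respectively, a `limit` of 3 lets the later futures finish while the first is still waiting, so the stream drains after fewer unproductive polls than with a `limit` of 1, and the output order is the same in both cases.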

These are relatively simple examples of using futures and streams, but hopefully they convey some sense of how the combinators can empower you to do very high-level async programming.

Zero cost?

I’ve claimed a few times that our futures library provides a zero-cost abstraction, in that it compiles to something very close to the state machine code you’d write by hand. To make that a bit more concrete:

- None of the future combinators impose any allocation. When we do things like chain uses of and_then, not only are we not allocating, we are in fact building up a big enum that represents the state machine. (There is one allocation needed per “task”, which usually works out to one per connection.)

- When an event arrives, only one dynamic dispatch is required.

- There are essentially no imposed synchronization costs; if you want to associate data that lives on your event loop and access it in a single-threaded way from futures, we give you the tools to do so.
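
The post compares futures to Iterator, and the no-allocation property is easy to observe there with the standard library alone. The sketch below is an analogy rather than a measurement of the futures library: `adapter_sizes` is a hypothetical helper that compares the size of a bare range with the size of the same range wrapped in two adapters.

```rust
use std::mem::size_of_val;
use std::ops::Range;

/// Returns the in-memory size of a bare range and of the same range
/// wrapped in two iterator adapters.
fn adapter_sizes() -> (usize, usize) {
    let source: Range<u32> = 0..10;
    let bare = size_of_val(&source);
    // Each adapter wraps the previous value in a new struct; nothing is
    // boxed, and non-capturing closures occupy no space at all.
    let chained = source.map(|n| n * 2).filter(|n| n % 3 == 0);
    (bare, size_of_val(&chained))
}
```

Both sizes come out equal, because the adapters add only zero-sized closures around the original range. Combinators that capture data would grow the value, but they would still live inline rather than behind a heap pointer.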

And so on. Later blog posts will get into the details of these claims and show how we leverage Rust to get to zero cost.

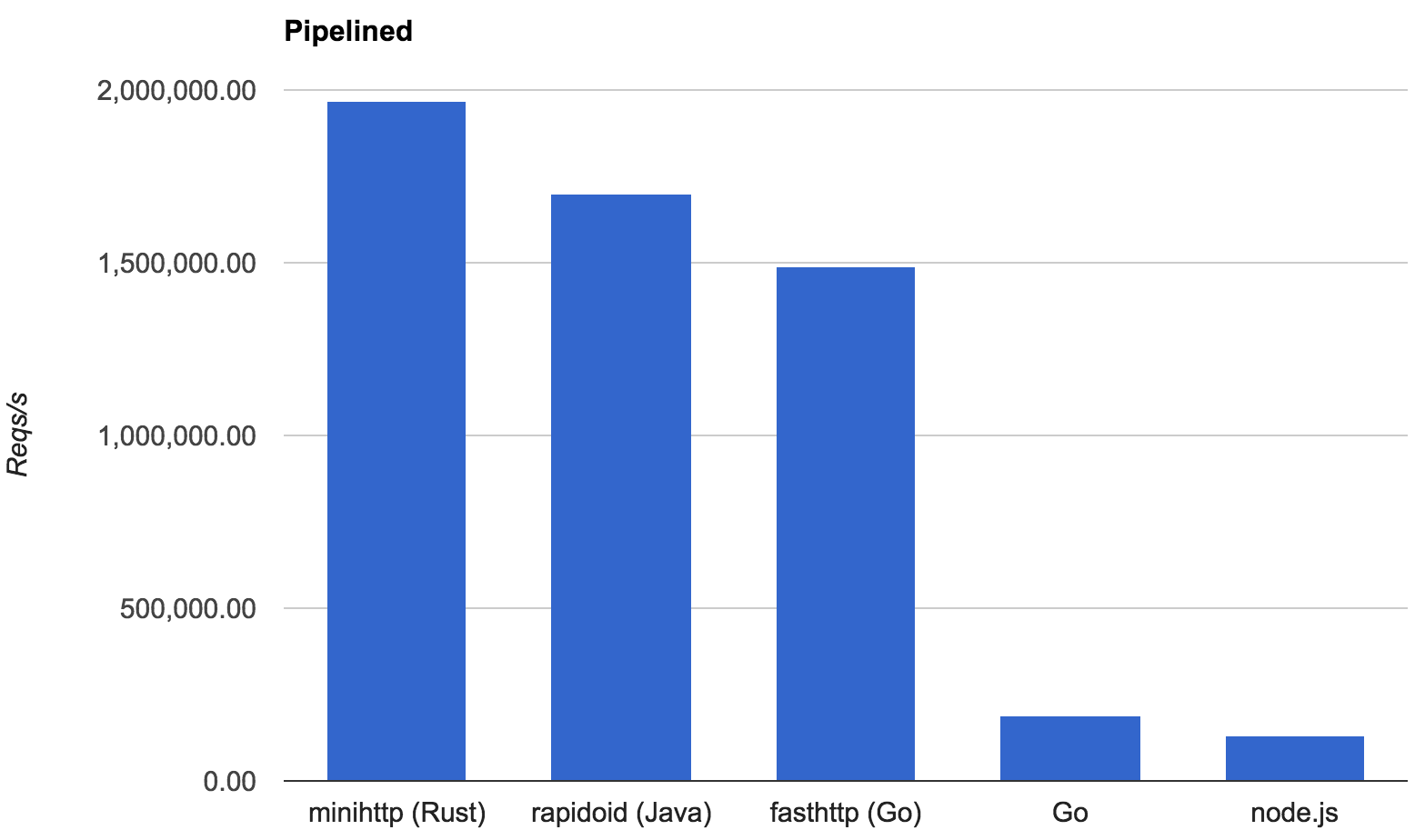

But the proof is in the pudding. We wrote a simple HTTP server framework, minihttp, which supports pipelining and TLS. This server uses futures at every level of its implementation, from reading bytes off a socket to processing streams of requests. Besides being a pleasant way to write the server, this provides a pretty strong stress test for the overhead of the futures abstraction.

To get a basic assessment of that overhead, we then implemented the TechEmpower “plaintext” benchmark. This microbenchmark tests a “hello world” HTTP server by throwing a huge number of concurrent and pipelined requests at it. Since the “work” that the server is doing to process the requests is trivial, the performance is largely a reflection of the basic overhead of the server framework (and in our case, the futures framework).

TechEmpower is used to compare a very large number of web frameworks across many different languages. We compared minihttp to a few of the top contenders:

- rapidoid, a Java framework, which was the top performer in the last round of official benchmarks.

- Go, an implementation that uses Go’s standard library’s HTTP support.

- fasthttp, a competitor to Go’s standard library.

- node.js.

Here are the results, in number of “Hello world!”s served per second on an 8-core Linux machine:

It seems safe to say that futures are not imposing significant overhead.

Update: to provide some extra evidence, we’ve added a comparison of minihttp against a directly-coded state machine version in Rust (see “raw mio” in the link). The two are within 0.3% of each other.

The future

Thus concludes our whirlwind introduction to zero-cost futures in Rust. We’ll see more details about the design in the posts to come.

At this point, the library is quite usable, and pretty thoroughly documented; it comes with a tutorial and plenty of examples, including:

- a simple TCP echo server;

- an efficient SOCKSv5 proxy server;

- minihttp, a highly-efficient HTTP server that supports TLS and uses Hyper’s parser;

- an example use of minihttp for TLS connections,

as well as a variety of integrations, e.g. a futures-based interface to curl. We’re actively working with several people in the Rust community to integrate with their work; if you’re interested, please reach out to Alex or myself!

If you want to do low-level I/O programming with futures, you can use futures-mio to do so on top of mio. We think this is an exciting direction to take async I/O programming in general in Rust, and follow-up posts will go into more detail on the mechanics.

Alternatively, if you just want to speak HTTP, you can work on top of minihttp by providing a service: a function that takes an HTTP request, and returns a future of an HTTP response. This kind of RPC/service abstraction opens the door to writing a lot of reusable “middleware” for servers, and has gotten a lot of traction in Twitter’s Finagle library for Scala; it’s also being used in Facebook’s Wangle library. In the Rust world, there’s already a library called Tokio in the works that builds a general service abstraction on our futures library, and could serve a role similar to Finagle.

There’s an enormous amount of work ahead:

- First off, we’re eager to hear feedback on the core future and stream abstractions, and there are some specific design details for some combinators we’re unsure about.

- Second, while we’ve built a number of future abstractions around basic I/O concepts, there’s definitely more room to explore, and we’d appreciate help exploring it.

- More broadly, there are endless futures “bindings” for various libraries (both in C and in Rust) to write; if you’ve got a library you’d like futures bindings for, we’re excited to help!

- Thinking more long term, an obvious eventual step would be to explore async/await notation on top of futures, perhaps in the same way as proposed in JavaScript. But we want to gain more experience using futures directly as a library, first, before considering such a step.

Whatever your interests might be, we’d love to hear from you – we’re acrichto and aturon on Rust’s IRC channels. Come say hi!

Original post: Zero-cost futures in Rust
