Integrating nginx with Kafka

Author: 盐析白兔 | 2024-03-05 17:38:45


Requirement: write the user logs generated by the website into Kafka directly through nginx, without going through log files and Flume collection.

- Front-end test code: send the user data with an ajax request

    /**
     * Lifecycle function: fires when the page has finished its initial render
     */
    onReady: function () {
      // Record the user's behavior in this event and send it to the back-end server
      // Get the current location
      wx.getLocation({
        success: function (res) {
          // Latitude
          var lat = res.latitude;
          // Longitude (the field is named `log` in the data that is sent)
          var log = res.longitude;
          // Read the unique identity token from local storage
          var openid = wx.getStorageSync('openid')
          console.info(openid)
          // Send the message to the nginx server, which writes it into Kafka
          wx.request({
            url: "http://node01/kafka/user",
            data: {
              time: new Date(),
              openid: openid,
              lat: lat,
              log: log
            },
            method: "POST"
          })
        },
      })
    },
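The same request can be reproduced from a terminal, which is handy for testing the nginx endpoint without the front end. The following is only a sketch: the helper names `build_payload` and `send_user_event` and the `NGINX_URL` variable are assumptions made for illustration, and the JSON shape is an approximation of what the front end would serialize from its `data` object.

```shell
#!/bin/sh
# Sketch: simulate the front end's POST to nginx from the command line.
# NGINX_URL and both helper names are illustrative assumptions.

NGINX_URL="${NGINX_URL:-http://node01/kafka/user}"

# Print a JSON body with the same four fields the front end sends.
# Arguments: openid, latitude, longitude, ISO-8601 timestamp.
# Values are inserted as-is, so the openid must not contain quotes.
build_payload() {
    printf '{"time":"%s","openid":"%s","lat":%s,"log":%s}\n' "$4" "$1" "$2" "$3"
}

# POST one event to the nginx location that maps to the Kafka topic.
# The request body is read from stdin via --data-binary @-.
send_user_event() {
    build_payload "$1" "$2" "$3" "$(date -u +%Y-%m-%dT%H:%M:%SZ)" |
        curl -sS -X POST -H 'Content-Type: application/json' \
             --data-binary @- "$NGINX_URL"
}

# Example (needs a reachable nginx):
#   send_user_event test-openid 39.9 116.4
```

Keeping the payload builder separate from the network call makes it possible to inspect the exact body that would end up as the Kafka message before anything is sent.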

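- Download and install nginx. The build needs the Kafka client library (librdkafka) and the Kafka module for nginx; for details, see:

- https://github.com/brg-liuwei/ngx_kafka_module

- nginx is written in C, so the Kafka module also has to be compiled and installed from source.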
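Because everything here is built from source, it can save time to confirm that the build tools are present before starting. A minimal sketch, assuming a POSIX shell; the function name `missing_tools` and the tool list in the example are illustrative and not part of the module's documentation:

```shell
#!/bin/sh
# Sketch: list which build prerequisites are not yet on PATH.
# The function name and the example tool list are illustrative assumptions.

missing_tools() {
    for tool in "$@"; do
        # command -v is the POSIX way to test whether a command exists
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Example: prints nothing when every listed tool is installed.
#   missing_tools git gcc g++ make
```

Each name that is printed corresponds to a tool that still has to be installed before running `./configure` and `make`.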

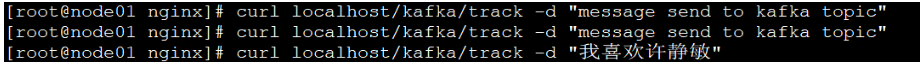

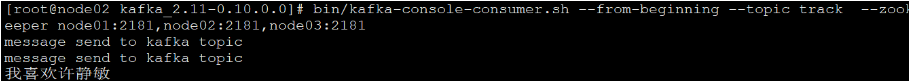

Install the dependencies, then build nginx with the Kafka module:

    # Install git first
    yum install -y git

    # Kafka dependency: librdkafka
    git clone https://github.com/edenhill/librdkafka
    cd librdkafka
    # Install the build dependencies
    yum install -y gcc gcc-c++ pcre-devel zlib-devel
    ./configure
    make
    sudo make install

    # Fetch the module that connects nginx to Kafka
    git clone https://github.com/brg-liuwei/ngx_kafka_module

    # Build nginx from its source directory with the module added
    cd /path/to/nginx
    ./configure --add-module=/path/to/ngx_kafka_module
    make
    sudo make install
    # or, use `sudo make upgrade` instead of `sudo make install`

Starting nginx at this point fails with the following error:

    [root@node01 nginx]# sbin/nginx -t
    sbin/nginx: error while loading shared libraries: librdkafka.so.1: cannot open shared object file: No such file or directory

The shared library directory has to be registered with the dynamic loader:

    echo "/usr/local/lib" >> /etc/ld.so.conf
    ldconfig

Configure nginx.conf:

    #user  nobody;
    worker_processes  1;

    #error_log  logs/error.log;
    #error_log  logs/error.log  notice;
    #error_log  logs/error.log  info;

    #pid        logs/nginx.pid;

    events {
        worker_connections  1024;
    }

    http {
        include       mime.types;
        default_type  application/octet-stream;

        #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
        #                  '$status $body_bytes_sent "$http_referer" '
        #                  '"$http_user_agent" "$http_x_forwarded_for"';

        #access_log  logs/access.log  main;

        sendfile        on;
        #tcp_nopush     on;

        #keepalive_timeout  0;
        keepalive_timeout  65;

        #gzip  on;

        kafka;
        kafka_broker_list node01:9092 node02:9092 node03:9092;

        server {
            listen       80;
            server_name  node01;

            #charset koi8-r;

            #access_log  logs/host.access.log  main;

            # Data posted to this path is written to the Kafka topic "track"
            location = /kafka/track {
                kafka_topic track;
            }

            # Data posted to this path is written to the Kafka topic "user"
            location = /kafka/user {
                kafka_topic user;
            }

            #error_page  404              /404.html;

            # redirect server error pages to the static page /50x.html
            #
            error_page   500 502 503 504  /50x.html;
            location = /50x.html {
                root   html;
            }
        }
    }

Start Kafka, create the topic, and start a consumer. These commands use the `--zookeeper` flag accepted by the Kafka 0.10.0.0 release that appears in the shell prompt further down; later Kafka releases replaced it with `--bootstrap-server`.

    # Start Kafka in the background
    nohup bin/kafka-server-start.sh config/server.properties 2>&1 &

    # Stop Kafka
    bin/kafka-server-stop.sh

    # Create the topic
    bin/kafka-topics.sh --create --zookeeper node01:2181 --replication-factor 2 --partitions 3 --topic track

    # Consume data
    bin/kafka-console-consumer.sh --from-beginning --topic track --zookeeper node01:2181,node02:2181,node03:2181

Send a test request:

    [root@node01 nginx]# curl localhost/kafka/track -d "message send to kafka topic"

The consumer receives the message:

    [root@node02 kafka_2.11-0.10.0.0]# bin/kafka-console-consumer.sh --from-beginning --topic track --zookeeper node01:2181,node02:2181,node03:2181
    message send to kafka topic
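Two quick checks can confirm that the build steps above took effect before debugging anything on the Kafka side. This is a sketch under assumptions: the helper names are made up for illustration, and the first check only works if the module directory kept the name `ngx_kafka_module`, since it looks for that string in the configure arguments that `nginx -V` prints.

```shell
#!/bin/sh
# Sketch: two post-install checks. Both helpers read command output on stdin
# so they can also be tried on captured text. Helper names are assumptions.

# Succeeds if `nginx -V` output mentions the Kafka module in its configure arguments.
nginx_has_kafka_module() {
    grep -q 'ngx_kafka_module'
}

# Succeeds if `ldconfig -p` output lists librdkafka, i.e. the loader can find it.
loader_sees_librdkafka() {
    grep -q 'librdkafka\.so'
}

# Example usage on a real host (nginx -V prints to stderr, hence 2>&1):
#   sbin/nginx -V 2>&1 | nginx_has_kafka_module && echo "module compiled in"
#   ldconfig -p | loader_sees_librdkafka && echo "librdkafka visible to the loader"
```

If the second check fails, the `librdkafka.so.1: cannot open shared object file` error is the likely result, and the `/etc/ld.so.conf` plus `ldconfig` step still needs to be run.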

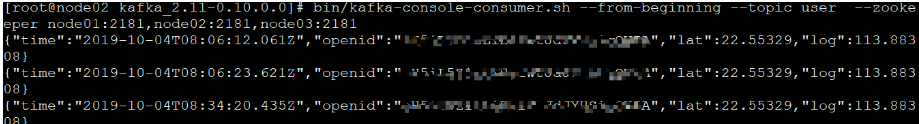

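- Start the web project and send a log request. The message can be consumed from Kafka; it arrives through the url "http://node01/kafka/user" used in the ajax call, which nginx maps to the `user` topic.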

