Flink: an approach to secondary development by inheriting connector source code

Author: 码创造者 | 2024-07-07 23:48:17


Note: the same approach applies to secondary development of other connectors, such as JDBC and Kafka.

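Recommendation: companies that build internal platforms on Flink often have to modify the source code to cover internal scenarios and requirements. Patching the source directly, however, makes every version upgrade expensive and slow. The approach described here avoids that: extend the upstream classes through inheritance, add the enhancements in the subclass, and package the result so that it overrides the corresponding classes from the upstream source.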
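The inheritance idea can be illustrated with a minimal sketch. It deliberately does not use any real Flink class: `UpstreamSink` is a hypothetical stand-in for a class shipped in the connector jar, and `EnhancedSink` and its blank-record rule are made up for illustration. The point is only that the enhancement lives in a subclass in your own module, while the upstream source stays untouched.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a class shipped in the upstream connector jar (not a Flink API).
class UpstreamSink {
    protected final List<String> written = new ArrayList<>();

    // Upstream behaviour: write the record unchanged.
    public void invoke(String record) {
        written.add(record);
    }

    public List<String> getWritten() {
        return written;
    }
}

// The enhancement lives in our own module; the upstream source is not modified.
class EnhancedSink extends UpstreamSink {
    private int dropped = 0;

    @Override
    public void invoke(String record) {
        // Hypothetical in-house rule: skip null or blank records, trim the rest.
        if (record == null || record.trim().isEmpty()) {
            dropped++;
            return;
        }
        // Delegate to the upstream logic for everything else.
        super.invoke(record.trim());
    }

    public int getDropped() {
        return dropped;
    }
}

class InheritanceSketch {
    public static void main(String[] args) {
        EnhancedSink sink = new EnhancedSink();
        sink.invoke("  order-1 ");
        sink.invoke("   ");
        sink.invoke("order-2");
        System.out.println(sink.getWritten()); // prints [order-1, order-2]
        System.out.println(sink.getDropped()); // prints 1
    }
}
```

Because only the subclass carries custom logic, a Flink version upgrade should mostly mean bumping the dependency version and re-checking the overridden methods, rather than re-applying a patch to the upstream source.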

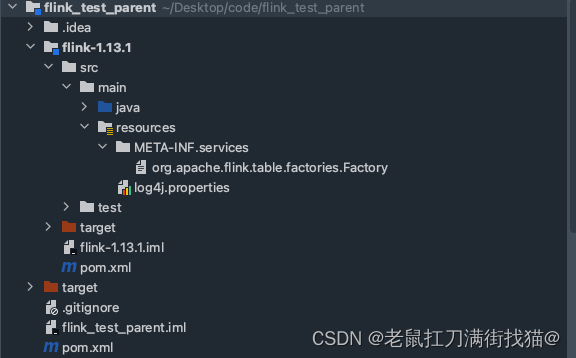

1. Create a module project in IDEA

2. The pom file, using elasticsearch6 as the example

2.1 Follow the settings of the official connector package

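Taking elasticsearch6 as the example, part of the pom follows the connector package provided by the Flink project, with matching version numbers: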

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-sql-connector-elasticsearch6_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>


2.2 maven-shade-plugin

- Purpose: build the package with maven-shade-plugin

- Copy the entire configuration from flink-sql-connector-elasticsearch6_${scala.binary.version}, then adjust the remaining settings to your own situation

2.3 pom configuration for the elasticsearch6 secondary development

    <properties>
        <maven.compiler.source>${java.version}</maven.compiler.source>
        <maven.compiler.target>${java.version}</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
        <flink.version>1.13.1</flink.version>
        <scala.binary.version>2.11</scala.binary.version>
        <slf4j.version>1.7.15</slf4j.version>
        <sql.driver.version>8.0.21</sql.driver.version>
        <fastjson.version>1.2.75</fastjson.version>
        <google.guava.version>20.0</google.guava.version>
        <apache.commons.version>3.11</apache.commons.version>
        <cn.hutool.all.version>5.5.2</cn.hutool.all.version>
        <macasaet.version>1.5.0</macasaet.version>
        <!-- compile,provided -->
        <scope>provided</scope>
    </properties>

    <dependencies>
        <!-- flink -->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-elasticsearch6_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <!-- kafka -->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
            <version>${flink.version}</version>
            <scope>${scope}</scope>
            <exclusions>
                <exclusion>
                    <artifactId>slf4j-api</artifactId>
                    <groupId>org.slf4j</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-csv</artifactId>
            <version>${flink.version}</version>
            <scope>${scope}</scope>
        </dependency>
        <dependency


