
k8s cluster monitoring with node-exporter + prometheus + grafana


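Prerequisites

A working k8s cluster is required (any number of nodes will do; for convenience, the cluster used here has a single master node). Note that Prometheus is deployed inside the k8s cluster, unlike traditional monitoring setups that are split into a monitoring server and monitored hosts.

For deploying k8s, see the tutorial "Deploying single-node k8s on Linux" on luo_guibin's CSDN blog.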

| IP address | Hostname / components |
| --- | --- |
| 11.0.1.12 | k8s-master / node-exporter+prometheus+grafana |

1. Download the YAML files

Link: https://pan.baidu.com/s/1vmT0Xu7SBB36-odiCMy9zA (the link does not expire)

Extraction code: 9999

Extract the archive

- [root@prometheus opt]# yum install -y zip unzip tree

- [root@prometheus opt]# unzip k8s-prometheus-grafana-master.zip

- [root@k8s-master k8s-prometheus-grafana-master]# pwd

- /opt/k8s-prometheus-grafana-master

- [root@k8s-master k8s-prometheus-grafana-master]# tree

- .

- ├── grafana

- │   ├── grafana-deploy.yaml

- │   ├── grafana-ing.yaml

- │   └── grafana-svc.yaml

- ├── node-exporter.yaml

- ├── prometheus

- │   ├── configmap.yaml

- │   ├── prometheus.deploy.yml

- │   ├── prometheus.svc.yml

- │   └── rbac-setup.yaml

- └── README.md

2. Deploy the components from the YAML files

Enable tab completion for kubectl

- [root@k8s-master ~]# yum install -y bash-completion

- [root@k8s-master ~]# source <(kubectl completion bash)
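The `source` command above only affects the current shell session. A minimal sketch of making it persistent, assuming a bash login that reads `~/.bashrc`; the helper name `persist_kubectl_completion` is hypothetical and not part of kubectl.

```shell
# Sketch: append the completion line to an rc file, but only once.
# persist_kubectl_completion is a hypothetical helper name.
persist_kubectl_completion() {
  local rcfile="${1:-$HOME/.bashrc}"
  local line='source <(kubectl completion bash)'
  # grep -qxF: quiet, whole-line, fixed-string match, so repeated
  # calls do not add duplicate lines.
  grep -qxF "$line" "$rcfile" 2>/dev/null || printf '%s\n' "$line" >> "$rcfile"
}
```

Calling `persist_kubectl_completion` with no argument targets `~/.bashrc`; new shells then load the completion automatically.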

2.1 node-exporter.yaml

- [root@k8s-master k8s-prometheus-grafana-master]# kubectl apply -f node-exporter.yaml

- daemonset.apps/node-exporter created

- service/node-exporter created

-

- # This cluster has a single node; with multiple nodes, the number of node-exporter Pods equals the number of nodes

- [root@k8s-master k8s-prometheus-grafana-master]# kubectl get pod -A | grep node-exporter

- kube-system node-exporter-kpdxh 0/1 ContainerCreating 0 28s

- [root@k8s-master k8s-prometheus-grafana-master]# kubectl get daemonset -A | grep exporter

- kube-system node-exporter 1 1 1 1 1 <none> 2m43s

- [root@k8s-master k8s-prometheus-grafana-master]# kubectl get service -A | grep exporter

- kube-system node-exporter NodePort 10.96.73.86 <none> 9100:31672/TCP 2m59s
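In the output above the pod is still in `ContainerCreating`. Instead of re-running `kubectl get pod`, one option is to wait for the rollout to finish. A minimal sketch, assuming the DaemonSet is named `node-exporter` in the `kube-system` namespace (as in the output above) and uses the default RollingUpdate strategy; `wait_for_daemonset` is a hypothetical helper name.

```shell
# Sketch: block until a DaemonSet has rolled out on every scheduled node.
# wait_for_daemonset is a hypothetical helper name, not part of kubectl.
wait_for_daemonset() {
  local namespace="$1" name="$2" timeout="${3:-120s}"
  # `kubectl rollout status` returns non-zero if the rollout does not
  # complete within the timeout.
  kubectl -n "$namespace" rollout status "daemonset/$name" --timeout="$timeout"
}
```

For this setup the call would be `wait_for_daemonset kube-system node-exporter`.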

2.2 Prometheus

- [root@k8s-master prometheus]# pwd

- /opt/k8s-prometheus-grafana-master/prometheus

- [root@k8s-master prometheus]# ls

- configmap.yaml prometheus.deploy.yml prometheus.svc.yml rbac-setup.yaml

Apply the files in this order: rbac-setup.yaml, configmap.yaml, prometheus.deploy.yml, prometheus.svc.yml. The .yaml and .yml extensions are interchangeable, so the difference can be ignored.

- [root@k8s-master prometheus]# kubectl apply -f rbac-setup.yaml

- clusterrole.rbac.authorization.k8s.io/prometheus created

- serviceaccount/prometheus created

- clusterrolebinding.rbac.authorization.k8s.io/prometheus created

-

- [root@k8s-master prometheus]# kubectl apply -f configmap.yaml

- configmap/prometheus-config created

-

- [root@k8s-master prometheus]# kubectl apply -f prometheus.deploy.yml

- deployment.apps/prometheus created

-

- [root@k8s-master prometheus]# kubectl apply -f prometheus.svc.yml

- service/prometheus created
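The four `kubectl apply` calls above can also be run as one ordered loop that stops at the first failure. A minimal sketch; `apply_in_order` is a hypothetical helper name, and the file names are the ones listed in this section.

```shell
# Sketch: apply manifests one at a time, in the order given,
# and stop as soon as one of them fails.
# apply_in_order is a hypothetical helper name.
apply_in_order() {
  local file
  for file in "$@"; do
    kubectl apply -f "$file" || return 1
  done
}
```

From the prometheus directory this would be `apply_in_order rbac-setup.yaml configmap.yaml prometheus.deploy.yml prometheus.svc.yml`. The same helper works for the grafana manifests in the next step.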

2.3 grafana

- [root@k8s-master grafana]# pwd

- /opt/k8s-prometheus-grafana-master/grafana

- [root@k8s-master grafana]# ls

- grafana-deploy.yaml grafana-ing.yaml grafana-svc.yaml

Apply the files in this order: grafana-deploy.yaml, grafana-svc.yaml, grafana-ing.yaml

- [root@k8s-master grafana]# kubectl apply -f grafana-deploy.yaml

- deployment.apps/grafana-core created

-

- [root@k8s-master grafana]# kubectl apply -f grafana-svc.yaml

- service/grafana created

-

- [root@k8s-master grafana]# kubectl apply -f grafana-ing.yaml

- ingress.extensions/grafana created

Check the three pods (there may be more than one node-exporter pod)

- [root@k8s-master grafana]# kubectl get pod -A | grep node-exporter

- kube-system node-exporter-kpdxh 1/1 Running 0 13m

-

- [root@k8s-master grafana]# kubectl get pod -A | grep prometheus

- kube-system prometheus-7486bf7f4b-xb4t8 1/1 Running 0 6m21s

-

- [root@k8s-master grafana]# kubectl get pod -A | grep grafana

- kube-system grafana-core-664b68875b-fhjvt 1/1 Running 0 2m18s

Check the Services; all three are of type NodePort

- [root@k8s-master grafana]# kubectl get svc -A

- NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

- default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7h17m

- kube-system grafana NodePort 10.107.115.11 <none> 3000:31748/TCP 3m41s

- kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 7h17m

- kube-system node-exporter NodePort 10.96.73.86 <none> 9100:31672/TCP 15m

- kube-system prometheus NodePort 10.111.178.83 <none> 9090:30003/TCP 7m57s

2.4 Access test

Test access with curl

- [root@k8s-master grafana]# curl 127.0.0.1:31672

- <html lang="en">

- ......

-

- # Metrics collected by node-exporter

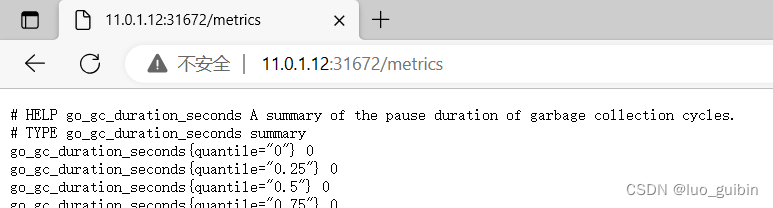

- [root@k8s-master grafana]# curl 127.0.0.1:31672/metrics

- # HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.

-

- [root@k8s-master grafana]# curl 127.0.0.1:30003

- <a href="/graph">Found</a>.

-

- [root@k8s-master grafana]# curl 127.0.0.1:31748

- <a href="/login">Found</a>.
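The curl checks above can be condensed into a status-code check per endpoint. A minimal sketch, assuming the NodePorts shown in the `kubectl get svc` output (31672, 30003, 31748); `http_status` and `check_endpoints` are hypothetical helper names.

```shell
# Sketch: print the HTTP status code for each URL passed in.
# http_status and check_endpoints are hypothetical helper names.
http_status() {
  # -s silences progress output, -o /dev/null discards the body,
  # -w '%{http_code}' prints only the status code.
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

check_endpoints() {
  local url
  for url in "$@"; do
    printf '%s %s\n' "$(http_status "$url")" "$url"
  done
}
```

A call such as `check_endpoints 127.0.0.1:31672/metrics 127.0.0.1:30003 127.0.0.1:31748` prints one line per endpoint. The `Found` bodies returned by Prometheus and Grafana above are redirects, so a redirect status on those two root URLs is not an error.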

访问11.0.1.12:31672/metrics,node-exporter收集到的数据

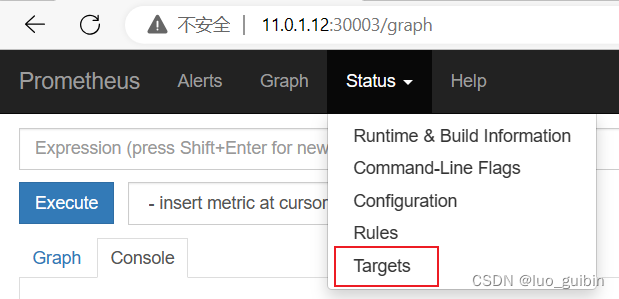

访问11.0.1.12:30003/graph

选择 "status"--"targets"
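The same target information shown on the Targets page is available from the Prometheus HTTP API at `/api/v1/targets`. A rough sketch that counts targets by health without requiring `jq`, assuming the API returns compact JSON in which each target carries a `"health":"up"` or `"health":"down"` field; `count_targets` is a hypothetical helper name.

```shell
# Sketch: count targets with a given health value in the JSON
# read from stdin (output of /api/v1/targets).
# count_targets is a hypothetical helper name.
count_targets() {
  local health="$1"
  # grep -o prints each match on its own line; wc -l counts them;
  # tr strips the padding some wc implementations add.
  grep -o "\"health\":\"$health\"" | wc -l | tr -d ' '
}
```

With the NodePort from this article, `curl -s 11.0.1.12:30003/api/v1/targets | count_targets up` prints the number of healthy targets. Plain-text matching is fragile; a JSON parser is the safer choice where one is installed.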

3. Grafana initial setup

Visit 11.0.1.12:31748/login

The username and password are both admin

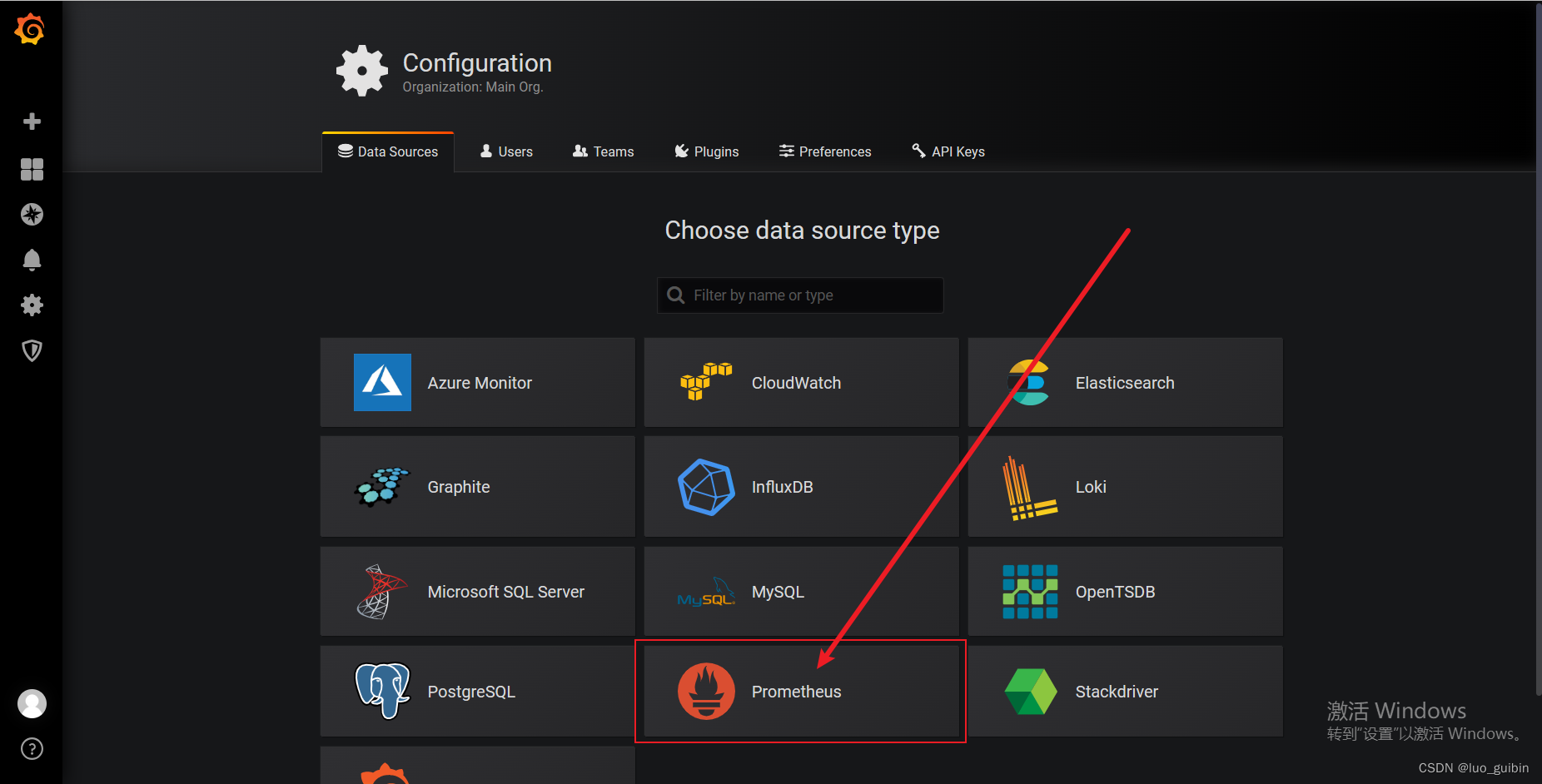

3.1 Add the data source

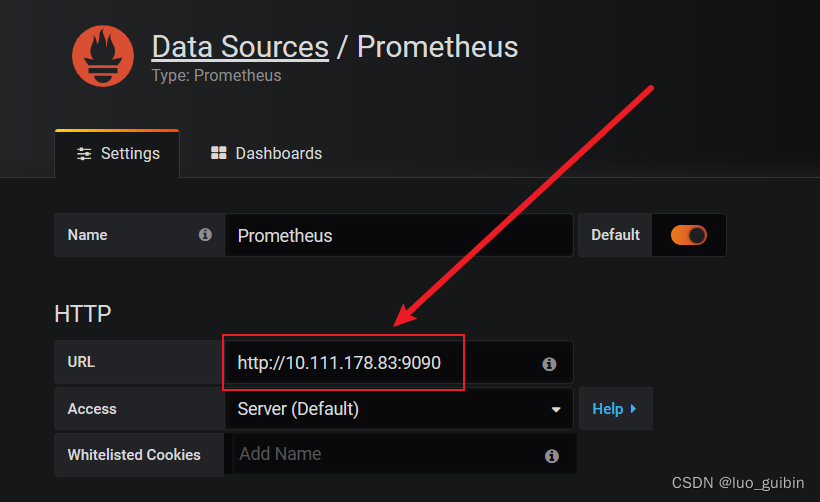

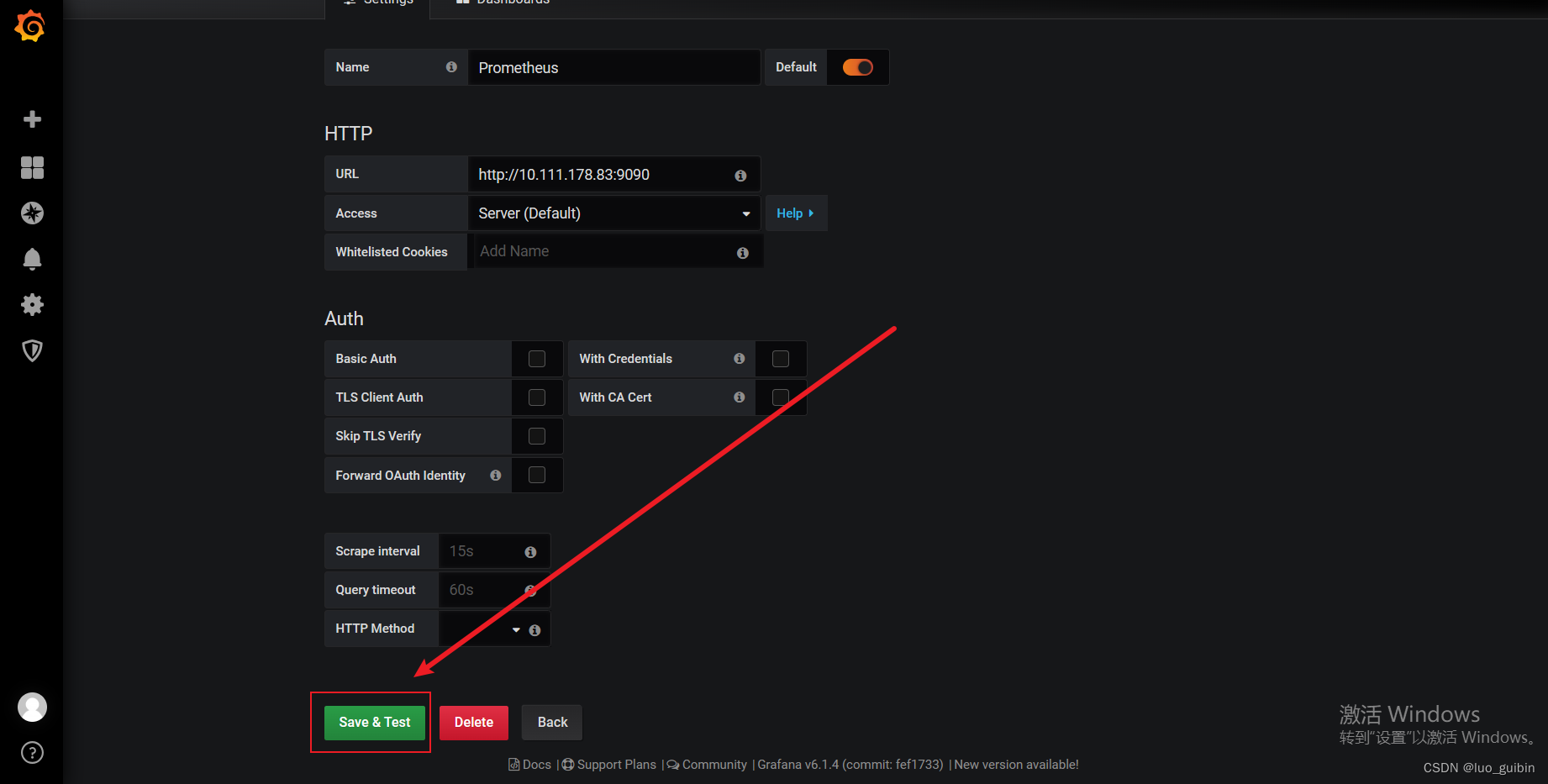

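Set the data source URL to 10.111.178.83:9090, which is the address of the prometheus Service. This address may change after a restart, in which case Grafana can no longer connect to Prometheus, so check the data source address when that happens. The data source can be edited under Configuration > Data Sources in the left sidebar.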

- [root@k8s-master grafana]# kubectl get svc -A | grep prometheus

- kube-system prometheus NodePort 10.111.178.83 <none> 9090:30003/TCP 40m
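Since the data source address has to be re-checked whenever it changes, it can help to read it straight from the Service object. A minimal sketch, assuming the Service is named `prometheus` in `kube-system` as shown above; `service_address` is a hypothetical helper name.

```shell
# Sketch: print "<clusterIP>:<port>" for a Service, using the first
# port listed in its spec.
# service_address is a hypothetical helper name.
service_address() {
  local namespace="$1" name="$2"
  kubectl -n "$namespace" get svc "$name" \
    -o jsonpath='{.spec.clusterIP}:{.spec.ports[0].port}'
}
```

`service_address kube-system prometheus` prints the value to paste into the Grafana data source. Because Grafana runs inside the same cluster, another option, assuming cluster DNS (the kube-dns Service listed earlier) is working, is to use the Service DNS name `prometheus.kube-system.svc:9090`, which does not depend on the ClusterIP.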

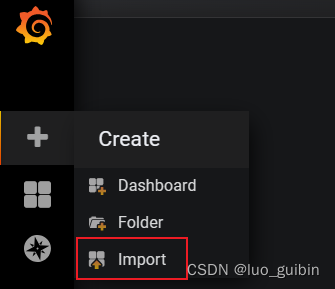

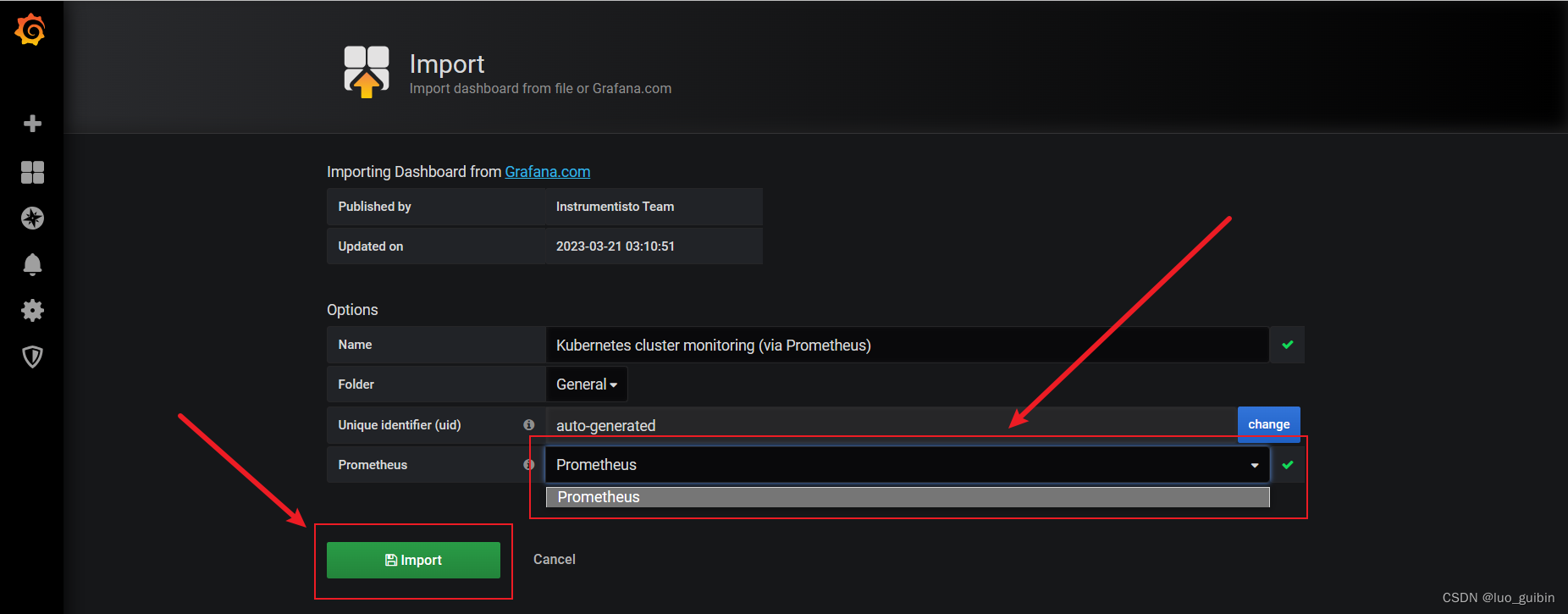

3.2 Import a dashboard template

Template ID: 315

Select prometheus as the data source

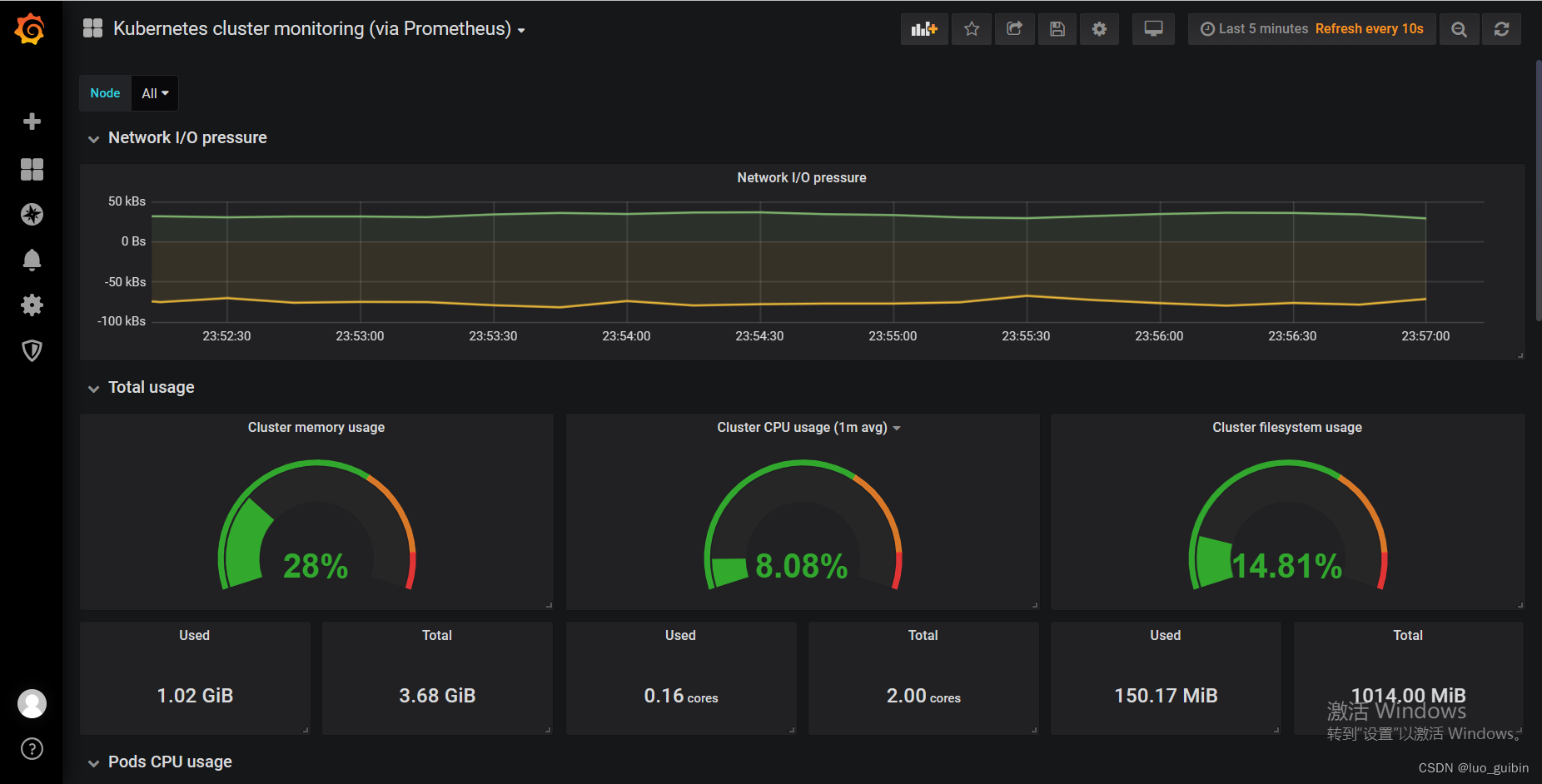

Monitoring is now set up

4. Deploying with Helm

...... (to be updated)

References: