Linux Kernel Filesystem Write I/O Path Code Analysis (Part 2): bdi_writeback


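The previous article, Linux Kernel Filesystem Write I/O Path Code Analysis (Part 1), showed that with buffered I/O a write returns as soon as the data has been copied into the page cache. This article analyzes how dirty pages are then flushed to disk.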

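Overview

Because of the kernel page cache, writes are effectively deferred. A page whose data has been written by the user but not yet flushed to disk is a dirty page. (Mechanical disks historically read and wrote in units of sectors, so the block-device page cache introduced buffer_head: each 4K page is further divided into buffers, for example 8 buffers of 512 bytes, each managed by a buffer_head. As a result, it is possible that only some of a page's buffer heads are marked dirty.)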
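To make the "partially dirty page" idea concrete, here is a minimal user-space sketch, assuming a 4K page split into eight 512-byte buffers. The `toy_page` type and the `toy_*` functions are hypothetical names invented for illustration; they are not the kernel's `struct page` or `struct buffer_head`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE_BYTES   4096u
#define BUFFER_SIZE_BYTES 512u
#define BUFFERS_PER_PAGE  (PAGE_SIZE_BYTES / BUFFER_SIZE_BYTES)  /* 8 */

/* Hypothetical stand-in for a page plus its buffer_heads: bit i set
 * means the i-th 512-byte buffer holds data not yet on disk. */
struct toy_page {
	uint8_t dirty_mask;
};

/* Mark every buffer overlapped by the byte range [offset, offset + len). */
static void toy_mark_dirty(struct toy_page *page, size_t offset, size_t len)
{
	assert(len > 0 && offset + len <= PAGE_SIZE_BYTES);
	size_t first = offset / BUFFER_SIZE_BYTES;
	size_t last = (offset + len - 1) / BUFFER_SIZE_BYTES;
	for (size_t i = first; i <= last; i++)
		page->dirty_mask |= (uint8_t)(1u << i);
}

/* The page counts as dirty as soon as any of its buffers is dirty. */
static int toy_page_is_dirty(const struct toy_page *page)
{
	return page->dirty_mask != 0;
}

/* Number of buffers that write-back would actually have to flush. */
static unsigned toy_dirty_buffers(const struct toy_page *page)
{
	unsigned n = 0;
	for (unsigned i = 0; i < BUFFERS_PER_PAGE; i++)
		n += (page->dirty_mask >> i) & 1u;
	return n;
}
```

Writing bytes 600 to 1599 of such a page touches buffers 1, 2 and 3 only, so the page is dirty while five of its eight buffers are still clean.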

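Dirty pages are written back to disk in the following situations:

A dirty page has stayed in memory longer than a threshold.

System memory is tight: when free memory drops below a threshold, dirty pages must be written back so that the memory can be reclaimed.

The user explicitly requests a flush, for example through the sync() or fsync() system calls. (close() alone does not in general guarantee that the data has reached the disk.)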
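The third case can be triggered from user space. The following is a minimal sketch using only POSIX calls; the helper name `write_and_flush` is made up for this example, and the error handling is deliberately simple (it does not retry on EINTR).

```c
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Write len bytes to path and force them to stable storage.
 * Returns 0 on success, -1 on failure (errno is set by the failing call). */
static int write_and_flush(const char *path, const void *buf, size_t len)
{
	assert(path != NULL && buf != NULL);

	int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
	if (fd < 0)
		return -1;

	const char *p = buf;
	while (len > 0) {
		/* write() only copies into the page cache; the pages become dirty. */
		ssize_t n = write(fd, p, len);
		if (n <= 0) {
			close(fd);
			return -1;
		}
		p += n;
		len -= (size_t)n;
	}

	/* fsync() blocks until this file's dirty pages and metadata have been
	 * written back, instead of waiting for periodic write-back. */
	if (fsync(fd) < 0) {
		close(fd);
		return -1;
	}
	return close(fd);
}
```

Without the fsync() call the function would usually still return successfully, but the data could remain in the page cache as dirty pages until one of the other two conditions triggers write-back.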

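Older Linux kernels managed dirty-page write-back with the pdflush mechanism. Because pdflush handled the pages and buffer_heads of all disks, it was a serious performance bottleneck, so starting with Linux 2.6.32 write-back is handled by the bdi_writeback mechanism. bdi_writeback creates a dedicated thread for each disk that flushes only that disk's page cache or buffer cache, which improves I/O performance.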

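The BDI subsystem

BDI stands for backing device info; it describes information about a backing storage device such as a disk. Backing storage I/O is slow compared with memory, so writes are cached in the page cache and written out later.

In the original BDI subsystem, initialization created the bdi-default thread, and a flush-x:y thread (x and y being the major and minor device numbers) was then created for each registered device to write back its dirty data. Since Linux 3.10, the BDI subsystem uses the workqueue mechanism instead of creating its own threads: when write-back is needed, a flush task is submitted to the workqueue and is ultimately handled by a generic [kworker] thread. The BDI subsystem's initialization code is as follows: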

static int __init default_bdi_init(void)

{

int err;

bdi_wq = alloc_workqueue("writeback", WQ_MEM_RECLAIM | WQ_FREEZABLE |

WQ_UNBOUND | WQ_SYSFS, 0);

if (!bdi_wq)

return -ENOMEM;

err = bdi_init(&default_backing_dev_info);

if (!err)

bdi_register(&default_backing_dev_info, NULL, "default");

err = bdi_init(&noop_backing_dev_info);

return err;

}

subsys_initcall(default_bdi_init);

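Device registration

During mount, the underlying filesystem defines its own struct backing_dev_info and registers it with the BDI subsystem. The following FUSE code is an example: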

static int fuse_bdi_init(struct fuse_conn *fc, struct super_block *sb)

{

int err;

fc->bdi.name = "fuse";

fc->bdi.ra_pages = (VM_MAX_READAHEAD * 1024) / PAGE_CACHE_SIZE;

/* fuse does it's own writeback accounting */

fc->bdi.capabilities = BDI_CAP_NO_ACCT_WB | BDI_CAP_STRICTLIMIT;

err = bdi_init(&fc->bdi);

if (err)

return err;

fc->bdi_initialized = 1;

if (sb->s_bdev) {

err = bdi_register(&fc->bdi, NULL, "%u:%u-fuseblk",

MAJOR(fc->dev), MINOR(fc->dev));

} else {

err = bdi_register_dev(&fc->bdi, fc->dev);

}

if (err)

return err;

/*

* /sys/class/bdi/<bdi>/max_ratio

*/

bdi_set_max_ratio(&fc->bdi, 1);

return 0;

}

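The function first initializes struct backing_dev_info with bdi_init() and then registers it with the BDI subsystem through bdi_register() (or bdi_register_dev()).

bdi_init() in turn calls bdi_wb_init() to initialize struct bdi_writeback: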

static void bdi_wb_init(struct bdi_writeback *wb, struct backing_dev_info *bdi)

{

memset(wb, 0, sizeof(*wb));

wb->bdi = bdi;

wb->last_old_flush = jiffies;

INIT_LIST_HEAD(&wb->b_dirty);

INIT_LIST_HEAD(&wb->b_io);

INIT_LIST_HEAD(&wb->b_more_io);

spin_lock_init(&wb->list_lock);

INIT_DELAYED_WORK(&wb->dwork, bdi_writeback_workfn);

}

This sets up a delayed work item whose handler is bdi_writeback_workfn; that handler performs the write-back.

数据回写

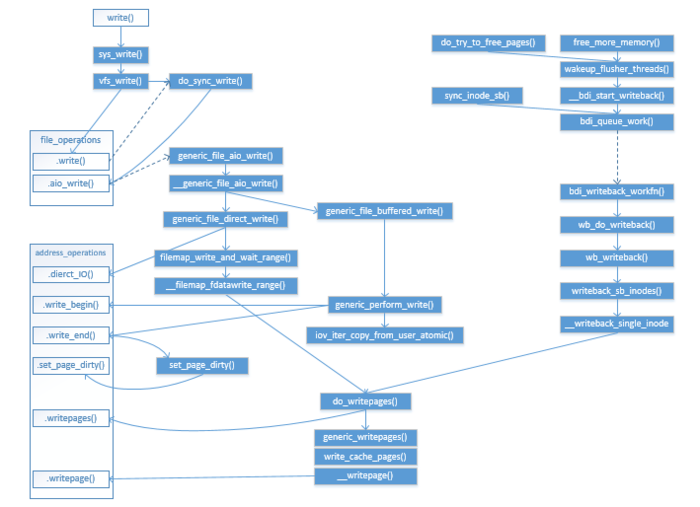

在上一篇的基础上,将图补充了bdi回写的部分,如下所示:

bdi_queue_work

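The BDI subsystem performs write-back through the workqueue mechanism. The entry point is bdi_queue_work(), which appends a write-back request (wb_writeback_work) for a specific bdi to that bdi's work_list and schedules the bdi's delayed work on bdi_wq. The code is as follows: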

static void bdi_queue_work(struct backing_dev_info *bdi,

struct wb_writeback_work *work)

{

trace_writeback_queue(bdi, work);

spin_lock_bh(&bdi->wb_lock);

if (!test_bit(BDI_registered, &bdi->state)) {

if (work->done)

complete(work->done);

goto out_unlock;

}

list_add_tail(&work->list, &bdi->work_list);

mod_delayed_work(bdi_wq, &bdi->wb.dwork, 0);

out_unlock:

spin_unlock_bh(&bdi->wb_lock);

}

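bdi_queue_work() is called from:

sync_inodes_sb(): writes back all dirty inodes on the super block.

writeback_inodes_sb_nr(): writes back dirty inodes on the super block, limited to a specified amount.

**__bdi_start_writeback()**: called periodically, or when pages need to be freed or more memory is needed.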
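The queueing logic of bdi_queue_work() can be mimicked in user space. The sketch below is a simplified, single-threaded analogue without locking; `toy_work`, `toy_bdi` and `toy_queue_work` are hypothetical names, the `kicked` flag merely stands in for the mod_delayed_work() call, and a plain bool replaces the kernel's completion.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified counterparts of wb_writeback_work and
 * backing_dev_info; no locking, single-threaded. */
struct toy_work {
	long nr_pages;
	bool *done;              /* non-NULL for a synchronous request */
	struct toy_work *next;
};

struct toy_bdi {
	bool registered;
	bool kicked;             /* stands in for mod_delayed_work() */
	struct toy_work *head, *tail;
};

static void toy_queue_work(struct toy_bdi *bdi, struct toy_work *work)
{
	assert(bdi != NULL && work != NULL);

	if (!bdi->registered) {
		/* Device is gone: do not queue, just release any waiter. */
		if (work->done)
			*work->done = true;
		return;
	}

	/* Append at the tail so requests are served in FIFO order. */
	work->next = NULL;
	if (bdi->tail)
		bdi->tail->next = work;
	else
		bdi->head = work;
	bdi->tail = work;

	bdi->kicked = true;      /* ask the worker to run right away */
}
```

The important detail is the unregistered case: a synchronous caller is completed immediately instead of waiting forever on a request that nobody will process.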

bdi_writeback_workfn

Once bdi_queue_work() has queued the work and scheduled it on bdi_wq, the bdi's work function processes it. The default function is bdi_writeback_workfn, whose code is as follows:

void bdi_writeback_workfn(struct work_struct *work)

{

struct bdi_writeback *wb = container_of(to_delayed_work(work),

struct bdi_writeback, dwork);

struct backing_dev_info *bdi = wb->bdi;

long pages_written;

set_worker_desc("flush-%s", dev_name(bdi->dev));

current->flags |= PF_SWAPWRITE;

if (likely(!current_is_workqueue_rescuer() ||

!test_bit(BDI_registered, &bdi->state))) {

/*

* The normal path. Keep writing back @bdi until its

* work_list is empty. Note that this path is also taken

* if @bdi is shutting down even when we're running off the

* rescuer as work_list needs to be drained.

*/

do {

pages_written = wb_do_writeback(wb);

trace_writeback_pages_written(pages_written);

} while (!list_empty(&bdi->work_list));

} else {

/*

* bdi_wq can't get enough workers and we're running off

* the emergency worker. Don't hog it. Hopefully, 1024 is

* enough for efficient IO.

*/

pages_written = writeback_inodes_wb(&bdi->wb, 1024,

WB_REASON_FORKER_THREAD);

trace_writeback_pages_written(pages_written);

}

if (!list_empty(&bdi->work_list))

mod_delayed_work(bdi_wq, &wb->dwork, 0);

else if (wb_has_dirty_io(wb) && dirty_writeback_interval)

bdi_wakeup_thread_delayed(bdi);

current->flags &= ~PF_SWAPWRITE;

}

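The function first checks whether it is running on the workqueue's rescuer (emergency) worker, which happens when bdi_wq cannot get enough workers. In the normal case, or when the bdi is being unregistered and its work_list has to be drained, it keeps calling wb_do_writeback() until work_list is empty. On the rescuer it writes back at most 1024 pages so that it does not hog the worker, and it requeues itself afterwards if work remains.

So under normal conditions write-back is handled by wb_do_writeback().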
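The first statement of bdi_writeback_workfn() recovers the enclosing struct bdi_writeback from the work pointer via container_of(). The following user-space sketch shows that idiom in isolation; `toy_container_of`, `toy_work_item` and `toy_writeback` are illustrative names, and the macro is a simplified version without the kernel's type checking.

```c
#include <assert.h>
#include <stddef.h>

/* User-space version of the container_of() idea: given a pointer to a
 * member, step back by the member's offset to reach the enclosing
 * structure. */
#define toy_container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

struct toy_work_item {
	int pending;
};

/* Hypothetical analogue of struct bdi_writeback with an embedded work item. */
struct toy_writeback {
	long pages_written;
	struct toy_work_item dwork;
};

/* The workqueue only hands the callback a pointer to the work item. */
static struct toy_writeback *toy_wb_from_work(struct toy_work_item *work)
{
	assert(work != NULL);
	return toy_container_of(work, struct toy_writeback, dwork);
}
```

Embedding the work item in the owning structure is what lets a generic worker serve many devices: the callback needs no lookup table to find the state it belongs to.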

wb_do_writeback

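The code is shown below. It iterates over all work items queued on the bdi and calls wb_writeback() for each one to write the data out; afterwards it also checks whether periodic (old data) or background write-back is needed.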

static long wb_do_writeback(struct bdi_writeback *wb)

{

struct backing_dev_info *bdi = wb->bdi;

struct wb_writeback_work *work;

long wrote = 0;

set_bit(BDI_writeback_running, &wb->bdi->state);

while ((work = get_next_work_item(bdi)) != NULL) {

trace_writeback_exec(bdi, work);

wrote += wb_writeback(wb, work);

/*

* Notify the caller of completion if this is a synchronous

* work item, otherwise just free it.

*/

if (work->done)

complete(work->done);

else

kfree(work);

}

/*

* Check for periodic writeback, kupdated() style

*/

wrote += wb_check_old_data_flush(wb);

wrote += wb_check_background_flush(wb);

clear_bit(BDI_writeback_running, &wb->bdi->state);

return wrote;

}
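The drain loop and its two ownership cases (synchronous versus asynchronous work) can be sketched in user space as follows. All `toy_*` names are hypothetical, `toy_writeback()` is a placeholder that pretends every requested page was written, and a plain bool replaces the kernel's completion.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

struct toy_item {
	long nr_pages;           /* pages this request asks to write */
	bool *done;              /* non-NULL: caller waits and owns the item */
	struct toy_item *next;
};

struct toy_queue {
	struct toy_item *head;
};

/* Pop the oldest item, or return NULL if the queue is empty. */
static struct toy_item *toy_next_item(struct toy_queue *q)
{
	struct toy_item *item = q->head;
	if (item)
		q->head = item->next;
	return item;
}

/* Placeholder for wb_writeback(): pretend every requested page is written. */
static long toy_writeback(const struct toy_item *item)
{
	return item->nr_pages;
}

static long toy_do_writeback(struct toy_queue *q)
{
	assert(q != NULL);
	long wrote = 0;
	struct toy_item *item;

	while ((item = toy_next_item(q)) != NULL) {
		wrote += toy_writeback(item);
		if (item->done)
			*item->done = true;   /* synchronous: notify the waiter */
		else
			free(item);           /* asynchronous: nobody else owns it */
	}
	return wrote;
}
```

A synchronous item typically lives on the waiting caller's stack, which is why the loop only signals it, while an asynchronous item was heap-allocated by the submitter and must be freed by whoever finishes it.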

wb_writeback() eventually calls __writeback_single_inode() to flush the dirty pages of a single inode.

**__writeback_single_inode**

The code of __writeback_single_inode() is shown below; it writes the pages out by calling do_writepages():

static int

__writeback_single_inode(struct inode *inode, struct writeback_control *wbc)

{

struct address_space *mapping = inode->i_mapping;

long nr_to_write = wbc->nr_to_write;

unsigned dirty;

int ret;

WARN_ON(!(inode->i_state & I_SYNC));

trace_writeback_single_inode_start(inode, wbc, nr_to_write);

ret = do_writepages(mapping, wbc);

/*

* Make sure to wait on the data before writing out the metadata.

* This is important for filesystems that modify metadata on data

* I/O completion. We don't do it for sync(2) writeback because it has a

* separate, external IO completion path and ->sync_fs for guaranteeing

* inode metadata is written back correctly.

*/

if (wbc->sync_mode == WB_SYNC_ALL && !wbc->for_sync) {

int err = filemap_fdatawait(mapping);

if (ret == 0)

ret = err;

}

/*

* Some filesystems may redirty the inode during the writeback

* due to delalloc, clear dirty metadata flags right before

* write_inode()

*/

spin_lock(&inode->i_lock);

/* Clear I_DIRTY_PAGES if we've written out all dirty pages */

if (!mapping_tagged(mapping, PAGECACHE_TAG_DIRTY))

inode->i_state &= ~I_DIRTY_PAGES;

dirty = inode->i_state & I_DIRTY;

inode->i_state &= ~(I_DIRTY_SYNC | I_DIRTY_DATASYNC);

spin_unlock(&inode->i_lock);

/* Don't write the inode if only I_DIRTY_PAGES was set */

if (dirty & (I_DIRTY_SYNC | I_DIRTY_DATASYNC)) {

int err = write_inode(inode, wbc);

if (ret == 0)

ret = err;

}

trace_writeback_single_inode(inode, wbc, nr_to_write);

return ret;

}

do_writepages

do_writepages() was covered in the previous article: it calls the underlying filesystem's a_ops->writepages to write the pages to backing storage.