热门标签

热门文章

- 1鸿蒙HarmonyOS开发框架—ArkTS语言(基本语法 二)_鸿蒙 struct

- 2是时候给你的产品配一个AI问答助手了!_ai知识库模型训练faq相似问课程

- 3GPT 作词 + Suno 作曲|2 分钟成就一个音乐梦想!_gpt写出来的歌

- 4【项目实战】十分钟学习完 Spring Boot 拦截器_springboot拦截器

- 5**探索数字世界的边缘:DikuMUD3,一款革新性的多用户地下城**

- 6Flink CDC2,20天内看完这套GitHub标星18k+的大数据开发资料_flink cdc github

- 702_openstack私有云部署_opentack私有云平台软件包

- 8【使用C++开发MCU】01-IIC实例之接口概述(基于NXP S32K1 MCU)_nxp s32k iic编程

- 9WiFi安全技术及wpa连接四次握手_eapol四次握手

- 10SparkSQL的3种Join实现_sparksql的三种join实现

当前位置: article > 正文

基于PSO优化LSSVM的时序预测MATLAB实战_pso-lssvm

作者:笔触狂放9 | 2024-06-17 12:03:51

赞

踩

pso-lssvm

今天给大家分享PSO优化LSSVM的时序预测代码实战,主要从算法原理和代码实战展开。需要了解更多算法代码的,可以点击文章左下角的阅读全文,进行获取哦~需要了解智能算法、机器学习、深度学习和信号处理相关理论的可以后台私信哦,下一期分享的内容就是你想了解的内容~

一、算法原理

支持向量机是针对小样本问题,基于结构风险最小化,较好地解决了以往机器学习模型中的过学习、非线性、维数灾难以及局部极小值等问题,具有较好的泛化能力;然而,该方法在大规模训练样本时,存在训练速度慢、稳定性差等缺陷,从而制约了其使用范围(学习过程中需要求解二次规划问题)。为加快支持向量机的训练速度和简化计算复杂度,最小二乘支持向量机(Least Square Support Vector Machine, LSSVM)被提出。最小二乘支持向量机(LSSVM)是标准支持向量机的一种扩展,该算法将支持 向量机的求解从二次规划问题转化为线性方程组。它与支持向量机的不同之处在于它把不等式约束改成等式约束,并把经验风险由偏差的一次方改为二次方。

本文分享的实战为时序预测问题是一类回归问题,因此接下来介绍的算法原理为最小二乘支持向量回归算法(LSSVR)。

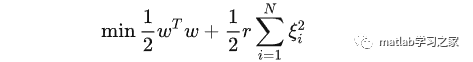

(1)构建LSSVR的优化问题的目标函数:

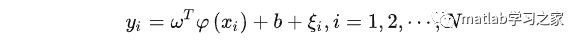

(2) 将传统SVM的不等式约束转换为等式约束:

(3)构造拉格朗日函数:

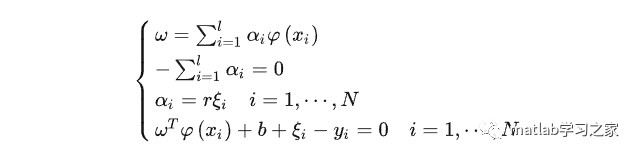

(4)进行KKT转换,得到最优对应的约束条件:

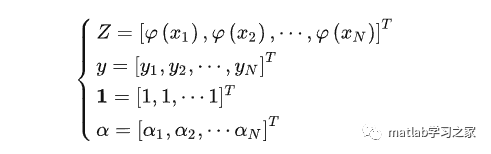

(5)得到对应的线性方程系统为:

(6)最终求解得到:

关于粒子群算法的原理可以参考我以前的文章:

二、代码实战

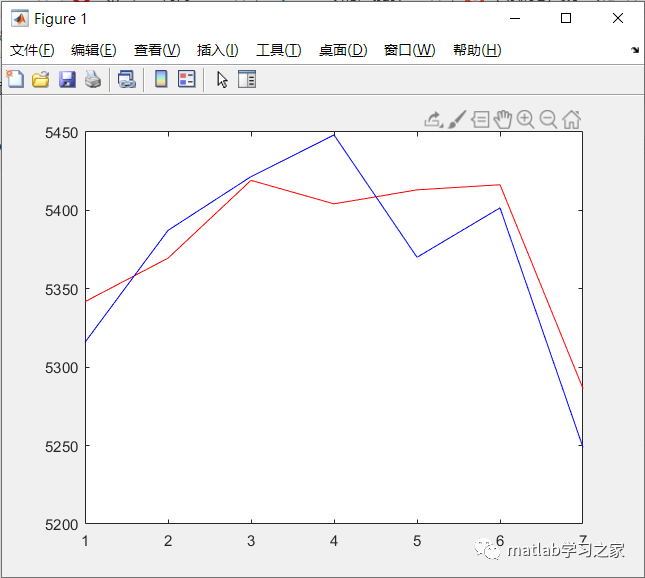

%% 文件说明% main.m : 主文件,进行预测,将生成的网络保存到 'nets.mat'中% main_back.m : 使用'nets.mat'重现结果% nets.mat : 保存 3 * 48 个grnn网络,用于结果重现% QLD1.csv : 原始数据(用的什么时候的数据你点开看一下就明白了)% QLD1.mat : 导成matlab格式的数据% adaboost : 组合算法, 输出结果的第一个数是组合后的mape%% 原理% 输入前一个周7个相同时间点的数据 得到本周7个相同时间点的数据% 不用执行本文件,运行`main_back.m`即可看到结果% net1MeanMape =% 0.0165 <- grnn1% net2MeanMape =% 0.0168 <- grnn2% net3MeanMape =% 0.0168 <- grnn3% combineMeanMapes =% 0.0157 <- adaboostclc;close;clear;testWeekNum = 1;%% 训练数据周数trainWeekNum = 228;load QLD1;QLD1 = reshape(QLD1, 48, length(QLD1)/48);testWeekInd = 1;trainWeekInd = 1 + (1:trainWeekNum);testWeekX = [];testWeekY = [];for yWeekInd = testWeekInd yWeekDayInd = (yWeekInd*7-6):(yWeekInd*7); xWeekDayStart = yWeekInd * 7 + 1; xWeekDayEnd = (yWeekInd + 1) * 7; xWeekDayInd = xWeekDayStart:xWeekDayEnd; yDayData = QLD1(:, yWeekDayInd); xDayData = QLD1(:, xWeekDayInd); testWeekY = [testWeekY yDayData']; testWeekX = [testWeekX xDayData'];endtrainWeekX = [];trainWeekY = [];for yWeekInd = trainWeekInd yWeekDayInd = (yWeekInd*7-6):(yWeekInd*7); xWeekDayStart = yWeekInd * 7 + 1; xWeekDayEnd = (yWeekInd + 1) * 7; xWeekDayInd = xWeekDayStart:xWeekDayEnd; yDayData = QLD1(:, yWeekDayInd); xDayData = QLD1(:, xWeekDayInd); trainWeekY = [trainWeekY yDayData']; trainWeekX = [trainWeekX xDayData'];endnets = {};netMapes = [];for time = 1:48 disp([' time:', num2str(time)]); testInd = (0:(testWeekNum-1)) * 48 + time; trainInd = (0:(trainWeekNum-1)) * 48 + time; global trainX trainY xmap ymap testX testY; testX = testWeekX(:, testInd); testY = testWeekY(:, testInd); trainX = trainWeekX(:,trainInd); trainY = trainWeekY(:,trainInd); [trainX, xmap] = mapminmax(trainX, 0.0000001, 1); [trainY, ymap] = mapminmax(trainY, 0.0000001, 1); testX = mapminmax('apply', testX, xmap); [bestGam, bestSig2] = optimizeLSSVM(); rand('seed', 2); net = initlssvm(trainX', trainY', 'function estimation', bestGam, bestSig2, 'RBF_kernel'); net = trainlssvm(net); ySim = simlssvm(net, testX')'; ySim = mapminmax('reverse', ySim, ymap); mape_ = mape(testY, ySim); nets{time, 1} = net; netMapes = [netMapes mape_];endmeanMape = mean(netMapes)save nets nets;%% 48 7 -> 7clc;close;clear;testWeekNum = 1; trainWeekNum = 228;load QLD1;load nets;QLD1 = reshape(QLD1, 48, length(QLD1)/48);netMapes = [];testWeekInd = 1;trainWeekInd = 1 + (1:trainWeekNum);testWeekX = [];testWeekY = [];for yWeekInd = testWeekInd yWeekDayInd = (yWeekInd*7-6):(yWeekInd*7); xWeekDayStart = yWeekInd * 7 + 1; xWeekDayEnd = (yWeekInd + 1) * 7; xWeekDayInd = xWeekDayStart:xWeekDayEnd; yDayData = QLD1(:, yWeekDayInd); xDayData = QLD1(:, xWeekDayInd); testWeekY = [testWeekY yDayData']; testWeekX = [testWeekX xDayData'];endtrainWeekX = [];trainWeekY = [];for yWeekInd = trainWeekInd yWeekDayInd = (yWeekInd*7-6):(yWeekInd*7); xWeekDayStart = yWeekInd * 7 + 1; xWeekDayEnd = (yWeekInd + 1) * 7; xWeekDayInd = xWeekDayStart:xWeekDayEnd; yDayData = QLD1(:, yWeekDayInd); xDayData = QLD1(:, xWeekDayInd); trainWeekY = [trainWeekY yDayData']; trainWeekX = [trainWeekX xDayData'];endfor time = 1:48 disp([' time:', num2str(time)]); testInd = (0:(testWeekNum-1)) * 48 + time; trainInd = (0:(trainWeekNum-1)) * 48 + time; global trainX trainY xmap ymap testX testY; testX = testWeekX(:, testInd); testY = testWeekY(:, testInd); trainX = trainWeekX(:,trainInd); trainY = trainWeekY(:,trainInd); [trainX, xmap] = mapminmax(trainX, 0.0000001, 1); [trainY, ymap] = mapminmax(trainY, 0.0000001, 1); testX = mapminmax('apply', testX, xmap); net = nets{time, 1}; ySim = simlssvm(net, testX')'; ySim = mapminmax('reverse', ySim, ymap); mape_ = mape(testY, ySim); netMapes = [netMapes mape_];end % end timemeanMape = mean(netMapes)figure(1)plot(ySim,'r');hold on plot(testY,'b');仿真结果:

完整代码

部分知识来源于网络,如有侵权请联系作者删除~

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/笔触狂放9/article/detail/731122

推荐阅读

相关标签