
[Flask + Deep Learning] Displaying deep-learning visual detection results on Ubuntu, viewable on the web and in a phone browser


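Introduction

An earlier post of mine covers how Flask interacts with MongoDB:

https://blog.csdn.net/qq_41358574/article/details/117845077

Tools to install first: PyCharm, PyTorch and its related packages, and the Flask packages.

This machine has no CUDA, so the model is loaded onto the CPU: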

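```python
import torch
from models import Darknet  # model definition from the project's models.py

# No CUDA on this machine, so run everything on the CPU
device = torch.device('cpu')

# Set up model
model = Darknet(opt.model_def, img_size=opt.img_size).to(device)

if opt.weights_path.endswith(".weights"):
    # Load darknet weights
    model.load_darknet_weights(opt.weights_path)
else:
    # Load checkpoint weights; map_location remaps tensors saved on a GPU onto the CPU
    model.load_state_dict(torch.load(opt.weights_path, map_location=device))
```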


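The project loads the model with PyTorch and runs detection on a folder of images, saving the results to another folder. Flask then renders a front-end page that shows the results under an animated heading, and the page can be opened from a phone browser by entering the machine's IP address and port.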

Result:

(The heading text is animated, which a still screenshot cannot show.)

Flask project files

Directory layout:

`utils/` and `models.py` hold the deep-learning code.

detect.py plays the role of main.py. The object-detection code also lives in it, so the file is fairly long.

```python
from __future__ import division

from models import *
from utils.utils import *
from utils.datasets import *
from utils.augmentations import *
from utils.transforms import *

import os
import sys
import time
import datetime
import argparse
import random

import numpy as np
from PIL import Image

import torch
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
from torchvision import datasets
from torch.autograd import Variable

import matplotlib.pyplot as plt
import matplotlib.patches as patches
from matplotlib.ticker import NullLocator

from flask import Flask, request, make_response, render_template
import socket
from time import sleep

myhost = socket.gethostbyname(socket.gethostname())  # this machine's address (not used below)
app = Flask(__name__)
igpath = '/home/heziyi/pic/'


@app.route('/', methods=['GET', 'POST'])  # the methods argument selects which HTTP methods this route handles
def home():
    return render_template('index.html')


# @app.route('/img/<string:filename>', methods=['GET'])
@app.route('/img/', methods=['GET'])
def display():
    if request.method == 'GET':
        # if filename is None:
        #     pass
        # else:
        # Path inside the app's static folder; "/static/..." would point at the filesystem root
        with open(os.path.join(app.static_folder, "he_21.png"), "rb") as f:
            image = f.read()
        # image = open(igpath + filename, "rb").read()
        response = make_response(image)
        response.headers['Content-Type'] = 'image/png'  # the file is a PNG, not a JPEG
        return response


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--image_folder", type=str, default="data/custom/dd", help="path to dataset")
    parser.add_argument("--model_def", type=str, default="config/yolov3-custom.cfg", help="path to model definition file")
    parser.add_argument("--weights_path", type=str, default="checkpoints/ckpt_88.pth", help="path to weights file")
    parser.add_argument("--class_path", type=str, default="data/custom/classes.names", help="path to class label file")
    parser.add_argument("--conf_thres", type=float, default=0.8, help="object confidence threshold")
    parser.add_argument("--nms_thres", type=float, default=0.4, help="iou threshold for non-maximum suppression")
    parser.add_argument("--batch_size", type=int, default=1, help="size of the batches")
    parser.add_argument("--n_cpu", type=int, default=0, help="number of cpu threads to use during batch generation")
    parser.add_argument("--img_size", type=int, default=416, help="size of each image dimension")
    parser.add_argument("--checkpoint_model", type=str, default="checkpoints/ckpt_88.pth", help="path to checkpoint model")
    opt = parser.parse_args()
    print(opt)

    # device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    device = torch.device('cpu')  # no CUDA on this machine

    # Results go to ../output; the web page loads /static/..., so the PNGs must be copied into static/
    os.makedirs("../output", exist_ok=True)

    # Set up model
    model = Darknet(opt.model_def, img_size=opt.img_size).to(device)

    if opt.weights_path.endswith(".weights"):
        # Load darknet weights
        model.load_darknet_weights(opt.weights_path)
    else:
        # Load checkpoint weights (map_location is required on a CPU-only machine)
        model.load_state_dict(torch.load(opt.weights_path, map_location=device))

    model.eval()  # Set in evaluation mode

    dataloader = DataLoader(
        ImageFolder(opt.image_folder, transform=transforms.Compose([DEFAULT_TRANSFORMS, Resize(opt.img_size)])),
        batch_size=opt.batch_size,
        shuffle=False,
        num_workers=opt.n_cpu,
    )

    classes = load_classes(opt.class_path)  # Extracts class labels from file

    # Input tensors must live on the same device as the model
    Tensor = torch.cuda.FloatTensor if device.type == "cuda" else torch.FloatTensor

    imgs = []  # Stores image paths
    img_detections = []  # Stores detections for each image index

    print("\nPerforming object detection:")
    prev_time = time.time()
    for batch_i, (img_paths, input_imgs) in enumerate(dataloader):
        # Configure input
        input_imgs = Variable(input_imgs.type(Tensor))

        # Get detections
        with torch.no_grad():
            detections = model(input_imgs)
            detections = non_max_suppression(detections, opt.conf_thres, opt.nms_thres)

        # Log progress
        current_time = time.time()
        inference_time = datetime.timedelta(seconds=current_time - prev_time)
        prev_time = current_time
        print("\t+ Batch %d, Inference Time: %s" % (batch_i, inference_time))

        # Save image and detections
        imgs.extend(img_paths)
        img_detections.extend(detections)

    # Bounding-box colors
    cmap = plt.get_cmap("tab20b")
    colors = [cmap(i) for i in np.linspace(0, 1, 20)]

    print("\nSaving images:")
    # Iterate through images and save plot of detections
    for img_i, (path, detections) in enumerate(zip(imgs, img_detections)):
        print("(%d) Image: '%s'" % (img_i, path))

        # Create plot (plt.subplots creates the figure; an extra plt.figure() would never be closed)
        img = np.array(Image.open(path))
        fig, ax = plt.subplots(1)
        ax.imshow(img)

        # Draw bounding boxes and labels of detections
        if detections is not None:
            # Rescale boxes to original image
            detections = rescale_boxes(detections, opt.img_size, img.shape[:2])
            unique_labels = detections[:, -1].cpu().unique()
            n_cls_preds = len(unique_labels)
            bbox_colors = random.sample(colors, n_cls_preds)
            for x1, y1, x2, y2, conf, cls_conf, cls_pred in detections:
                print("\t+ Label: %s, Conf: %.5f" % (classes[int(cls_pred)], cls_conf.item()))

                box_w = x2 - x1
                box_h = y2 - y1

                color = bbox_colors[int(np.where(unique_labels == int(cls_pred))[0])]
                # Create a Rectangle patch
                bbox = patches.Rectangle((x1, y1), box_w, box_h, linewidth=2, edgecolor=color, facecolor="none")
                print(int(box_w) * int(box_h))  # box area in pixels
                # if(box_w*box_h>10000):
                #     se.write("1".encode())
                #     time.sleep(3)
                #     se.write("0".encode())
                # Add the bbox to the plot
                ax.add_patch(bbox)
                # Add label
                plt.text(
                    x1,
                    y1,
                    s=classes[int(cls_pred)],
                    color="white",
                    verticalalignment="top",
                    bbox={"color": color, "pad": 0},
                )

        # Save generated image with detections
        plt.axis("off")
        plt.gca().xaxis.set_major_locator(NullLocator())
        plt.gca().yaxis.set_major_locator(NullLocator())
        filename = os.path.basename(path).split(".")[0]
        output_path = os.path.join("../output", f"{filename}.png")
        plt.savefig(output_path, bbox_inches="tight", pad_inches=0.0)
        plt.close()

    # Start the Flask server on all interfaces so other devices (e.g. a phone) can connect
    app.run(host="0.0.0.0", port=8000)
```
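To make the two thresholds passed to `non_max_suppression` (`--conf_thres 0.8`, `--nms_thres 0.4`) more concrete, here is a simplified pure-Python sketch of confidence filtering followed by greedy non-maximum suppression. It is not the project's `utils` implementation, which works on batched tensors and may differ in details; the helper names `iou` and `simple_nms` and the `(x1, y1, x2, y2, score)` box format are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def simple_nms(boxes, conf_thres=0.8, nms_thres=0.4):
    """Hypothetical helper; boxes are (x1, y1, x2, y2, score) tuples.

    1. Drop boxes whose score is below conf_thres.
    2. Visit the remaining boxes from highest to lowest score and keep one
       only if its IoU with every box already kept is at most nms_thres.
    """
    candidates = sorted((b for b in boxes if b[4] >= conf_thres),
                        key=lambda b: b[4], reverse=True)
    kept = []
    for box in candidates:
        if all(iou(box[:4], k[:4]) <= nms_thres for k in kept):
            kept.append(box)
    return kept


detections = [
    (10, 10, 110, 110, 0.95),    # strong detection
    (12, 12, 112, 112, 0.90),    # near-duplicate of the first box (IoU ~0.92)
    (200, 200, 260, 260, 0.85),  # a separate object
    (300, 300, 340, 340, 0.50),  # below conf_thres, discarded
]
print(simple_nms(detections))
# [(10, 10, 110, 110, 0.95), (200, 200, 260, 260, 0.85)]
```

Raising `conf_thres` removes weak detections outright, while lowering `nms_thres` suppresses overlapping boxes more aggressively.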


The display() function returns the image file directly when /img/ is opened in a browser:

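```python
@app.route('/img/', methods=['GET'])
def display():
    if request.method == 'GET':
        # if filename is None:
        #     pass
        # else:
        # Path inside the app's static folder; "/static/..." would point at the filesystem root
        with open(os.path.join(app.static_folder, "he_21.png"), "rb") as f:
            image = f.read()
        # image = open(igpath + filename, "rb").read()
        response = make_response(image)
        response.headers['Content-Type'] = 'image/png'  # the file is a PNG, not a JPEG
        return response
```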
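display() sets the Content-Type header by hand, so it has to be kept in sync with the type of file being served. A small sketch, assuming you would rather derive the header from the file name, uses the standard-library `mimetypes` module; the helper name `content_type_for` is hypothetical. (Flask's own `send_file` / `send_from_directory` also guess the type from the file name.)

```python
import mimetypes


def content_type_for(filename, default="application/octet-stream"):
    """Guess the Content-Type header value from a file name's extension."""
    mime, _encoding = mimetypes.guess_type(filename)
    return mime or default  # fall back when the extension is unknown


print(content_type_for("he_21.png"))  # image/png
print(content_type_for("photo.jpg"))  # image/jpeg
```

Inside display() this would become `response.headers['Content-Type'] = content_type_for(path)`, with `path` being whatever file is read.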


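Apart from the final app.run(), the code in the `__main__` block is ordinary deep-learning detection code, which should be easy to follow if you have worked with it before.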

Front-end code (templates/index.html, the folder render_template looks in by default):

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>Title</title>
    <link rel="stylesheet" type="text/css" href="static/Login.css"/>
</head>
<body>
    <h1>Detection results</h1>
    <h1>hello!!!!!!!!!!!!</h1>
    <h2>this is the detection result</h2>
    <!-- Result images served from Flask's static folder -->
    <img src="/static/he_21.png">
    <img src="/static/he_14.png">
    <img src="/static/he_4.png">
</body>
</html>
```
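The page lists he_21.png, he_14.png and he_4.png by hand, while detect.py writes its PNGs to ../output. As a sketch, assuming you want the page to follow whatever the detector produced, a helper could copy the result PNGs into the static folder and build one `<img>` tag per file. The function name `publish_results` and the folder layout are assumptions; in a real Flask app you would more likely pass just the file names to `render_template` and loop over them in the template.

```python
import html
import shutil
import tempfile
from pathlib import Path


def publish_results(output_dir, static_dir, url_prefix="/static/"):
    """Copy every PNG from output_dir into static_dir and return <img> tags for them.

    Hypothetical helper; folder names and URL prefix are assumptions.
    Names are sorted as strings, so he_21.png comes before he_4.png.
    """
    static = Path(static_dir)
    static.mkdir(parents=True, exist_ok=True)
    names = []
    for src in sorted(Path(output_dir).glob("*.png")):
        shutil.copy2(src, static / src.name)  # keep the original file name
        names.append(src.name)
    return ['<img src="%s" alt="%s">' % (html.escape(url_prefix + n), html.escape(n))
            for n in names]


# Usage with throwaway folders standing in for ../output and static/
with tempfile.TemporaryDirectory() as out, tempfile.TemporaryDirectory() as st:
    for n in ("he_4.png", "he_21.png", "notes.txt"):
        (Path(out) / n).write_bytes(b"")
    print(publish_results(out, st))
```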


CSS (static/Login.css, linked from the page):

```css
html {
    width: 100%;
    height: 100%;
    overflow: hidden; /* no scrollbars: content taller than the window is cut off */
    font-family: sans-serif; /* was "font-style: sans-serif", which is not a valid font-style value */
}
body {
    width: 100%;
    height: 100%;
    font-family: 'Open Sans', sans-serif;
    margin: 0;
    background-color: #4A374A;
}
img {
    width: 300px; /* was misspelled "widht", so the width was ignored */
    height: 300px;
    border: 1px solid red;
}
h1 {
    text-align: center;
    color: #fff;
    font-size: 48px;
    text-shadow: 1px 1px 1px #ccc, 0 0 10px #fff, 0 0 20px #fff, 0 0 30px #fff,
        0 0 40px #ff00de, 0 0 70px #ff00de, 0 0 80px #ff00de, 0 0 100px #ff00de, 0 0 150px #ff00de;
    transform-style: preserve-3d;
    -moz-transform-style: preserve-3d;
    -webkit-transform-style: preserve-3d;
    -ms-transform-style: preserve-3d;
    -o-transform-style: preserve-3d;
    /* 9-second rotation loop defined by the "run" keyframes below */
    animation: run ease-in-out 9s infinite;
    -moz-animation: run ease-in-out 9s infinite;
    -webkit-animation: run ease-in-out 9s infinite;
    -ms-animation: run ease-in-out 9s infinite;
    -o-animation: run ease-in-out 9s infinite;
}
@keyframes run {
    0% { transform: rotateX(-5deg) rotateY(0); }
    50% {
        transform: rotateX(0) rotateY(180deg);
        text-shadow: 1px 1px 1px #ccc, 0 0 10px #fff, 0 0 20px #fff, 0 0 30px #fff,
            0 0 40px #3EFF3E, 0 0 70px #3EFFff, 0 0 80px #3EFFff, 0 0 100px #3EFFee, 0 0 150px #3EFFee;
    }
    100% { transform: rotateX(5deg) rotateY(360deg); }
}
@-webkit-keyframes run {
    0% { transform: rotateX(-5deg) rotateY(0); }
    50% {
        transform: rotateX(0) rotateY(180deg);
        text-shadow: 1px 1px 1px #ccc, 0 0 10px #fff, 0 0 20px #fff, 0 0 30px #fff,
            0 0 40px #3EFF3E, 0 0 70px #3EFFff, 0 0 80px #3EFFff, 0 0 100px #3EFFee, 0 0 150px #3EFFee;
    }
    100% { transform: rotateX(5deg) rotateY(360deg); }
}
@-moz-keyframes run {
    0% { transform: rotateX(-5deg) rotateY(0); }
    50% {
        transform: rotateX(0) rotateY(180deg);
        text-shadow: 1px 1px 1px #ccc, 0 0 10px #fff, 0 0 20px #fff, 0 0 30px #fff,
            0 0 40px #3EFF3E, 0 0 70px #3EFFff, 0 0 80px #3EFFff, 0 0 100px #3EFFee, 0 0 150px #3EFFee;
    }
    100% { transform: rotateX(5deg) rotateY(360deg); }
}
@-ms-keyframes run {
    0% { transform: rotateX(-5deg) rotateY(0); }
    50% {
        transform: rotateX(0) rotateY(180deg);
        text-shadow: 1px 1px 1px #ccc, 0 0 10px #fff, 0 0 20px #fff, 0 0 30px #fff,
            0 0 40px #3EFF3E, 0 0 70px #3EFFff, 0 0 80px #3EFFff, 0 0 100px #3EFFee, 0 0 150px #3EFFee;
    }
    100% { transform: rotateX(5deg) rotateY(360deg); }
}
```


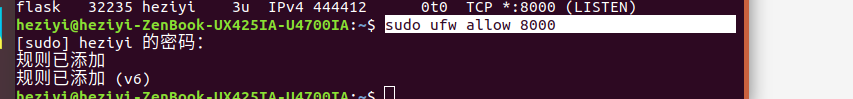

Start the server with:

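```shell
export FLASK_APP="detect.py"   # bash syntax on Linux; in Windows cmd use: set FLASK_APP=detect.py
flask run --host=0.0.0.0 --port=8000
```

`--host=0.0.0.0` makes Flask listen on all network interfaces instead of only 127.0.0.1, which is what lets a phone on the same network open `http://<Ubuntu machine's IP>:8000`. Note that `flask run` only imports detect.py, so nothing under `if __name__ == "__main__":` runs, including the detection step; the result images must already exist, for example from an earlier `python detect.py`.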
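On the phone, the address to type is the Ubuntu machine's LAN IP plus the port. detect.py computes `myhost` with `socket.gethostbyname(socket.gethostname())`, but on Ubuntu that often returns 127.0.1.1 from /etc/hosts, which a phone cannot use. A common standard-library workaround, sketched below under the assumption that the machine has a default route, is to "connect" a UDP socket to an outside address (no packet is actually sent) and read back the local address the OS chose. The helper name `lan_ip` and the probe address are illustrative choices.

```python
import socket


def lan_ip(probe=("8.8.8.8", 80)):
    """Best-effort guess of this machine's LAN IPv4 address.

    connect() on a UDP socket sends nothing; it only makes the OS pick the
    outgoing interface, whose address getsockname() then reports.
    Falls back to 127.0.0.1 when there is no route (e.g. offline).
    """
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.connect(probe)
        return s.getsockname()[0]
    except OSError:
        return "127.0.0.1"
    finally:
        s.close()


if __name__ == "__main__":
    print("Open http://%s:8000 in the phone's browser" % lan_ip())
```

`hostname -I` on the Ubuntu machine gives the same information from the shell.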

Remember to open the port in Ubuntu's firewall (ufw):

```shell
sudo ufw allow 8000
```
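After opening the port, you can check reachability from another machine on the same network (or from the Ubuntu box itself) with a plain TCP connection attempt. This is a minimal standard-library sketch; the function name `port_open` is hypothetical. A `False` result can mean the firewall is blocking the port, the server is not running, or it is bound to 127.0.0.1 only.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    # Replace 127.0.0.1 with the Ubuntu machine's LAN IP when testing from another device
    print(port_open("127.0.0.1", 8000))
```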

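Appendix: common ufw commands

Allow / deny:

```shell
# General form: sudo ufw allow|deny [service]
sudo ufw allow smtp                 # let any external IP reach local port 25/tcp (smtp)
sudo ufw allow 22/tcp               # let any external IP reach local port 22/tcp (ssh)
sudo ufw allow 53                   # allow external access to port 53 (tcp and udp)
sudo ufw allow from 192.168.1.100   # let this IP reach every port on this machine
sudo ufw allow proto udp from 192.168.0.1 port 53 to 192.168.0.2 port 53   # UDP from 192.168.0.1:53 to 192.168.0.2:53
```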

Check the firewall status:

```shell
sudo ufw status
```