热门标签

热门文章

- 1NLP-信息抽取-NER-2015-BiLSTM+CRF(一):命名实体识别【预测每个词的标签】【评价指标:精确率=识别出正确的实体数/识别出的实体数、召回率=识别出正确的实体数/样本真实实体数】_信息抽取评价指标

- 2数据结构----线性结构----多维数组和广义表_多维数组属于什么数据结构

- 3java 公司面试题_java企业的面试笔试题

- 4动态规划基础_算法动态规划的基本公式

- 5【git】本地仓库与远程仓库如何建立连接_git与远程仓库建立连接

- 6LeetCode--1346. 检查整数及其两倍数是否存在(C++描述)_.请编写一个程序,读入两个整数,确认第一个是否为第二个数的倍数并打印结果。

- 7工业级Netty网关,京东是如何架构的?_京东数据架构

- 8Springboot集成Mybatis的报错_mybatis the last packet sent successfully to the s

- 9人工智能时代,软件工程师们将会被取代?

- 10"字节跳动杯"2018中国大学生程序设计竞赛-女生专场

当前位置: article > 正文

使用多项式朴素贝叶斯对中文垃圾短信进行分类_中文垃圾短信数据集

作者:小蓝xlanll | 2024-04-12 13:09:23

赞

踩

中文垃圾短信数据集

The finals

使用多项式朴素贝叶斯对中文垃圾短信进行分类

- # 1. 导入所需函数库

- import pandas as pd

- import jieba

- from sklearn.feature_extraction.text import CountVectorizer

- from sklearn.model_selection import train_test_split

- from sklearn.metrics import classification_report

- from sklearn.naive_bayes import MultinomialNB

- from wordcloud import WordCloud

- from scipy.sparse import csr_matrix

- import matplotlib.pyplot as plt

- plt.rcParams['font.sans-serif'] = ['SimHei'] #黑体

-

-

- # 2. 读取数据

- data = pd.read_csv('../data/message80W/message80W.csv', header=None,names=['number', 'label','news'])

-

- # 3. 去重

- data = data.drop_duplicates()

-

- # 4. 加载自定义词典

- # jieba.load_userdict('your_custom_dict.txt')

-

- # 5. 分词

- data['分词内容'] = data['news'].apply(lambda x: ' '.join(jieba.cut(x)))

-

- # 6. 去停

- with open('../data/stopwords/stopwords-master/hit_stopwords.txt','r', encoding='utf-8') as f:

- stopwords = [line.strip() for line in f.readlines()]

-

- data['分词内容'] = data['分词内容'].apply(lambda x: ' '.join([word for word in x.split() if word not in stopwords]))

- data['分词内容'] = data['分词内容'].apply(lambda x: x.replace('x', ''))

-

- # 7. 词频统计

- vectorizer = CountVectorizer()

- X = vectorizer.fit_transform(data['分词内容'])

- X_1 = vectorizer.transform(data[data['label'] == 1]['分词内容'])

- X_0 = vectorizer.transform(data[data['label'] == 0]['分词内容'])

-

- # 将词频矩阵转换为稀疏矩阵

- X_sparse = csr_matrix(X)

- # 统计词频

- word_freq = pd.DataFrame({'词语': vectorizer.get_feature_names(), '词频': X_sparse.sum(axis=0).tolist()[0]})

- # 按词频降序排序

- word_freq = word_freq.sort_values(by='词频', ascending=False)

- # 展示词频统计结果

- print("全部的词频:")

- print(word_freq.head(10))

-

- X_sparse_1 = csr_matrix(X_1)

- # 统计词频

- word_freq_1 = pd.DataFrame({'词语': vectorizer.get_feature_names(), '词频': X_sparse_1.sum(axis=0).tolist()[0]})

- # 按词频降序排序

- word_freq_1 = word_freq_1.sort_values(by='词频', ascending=False)

- # 展示词频统计结果

- print("垃圾短信词频:")

- print(word_freq_1.head(10))

-

- X_sparse_0 = csr_matrix(X_0)

- # 统计词频

- word_freq_0 = pd.DataFrame({'词语': vectorizer.get_feature_names(), '词频': X_sparse_0.sum(axis=0).tolist()[0]})

- # 按词频降序排序

- word_freq_0 = word_freq_0.sort_values(by='词频', ascending=False)

- # 展示词频统计结果

- print("非垃圾短信词频:")

- print(word_freq_0.head(10))

-

-

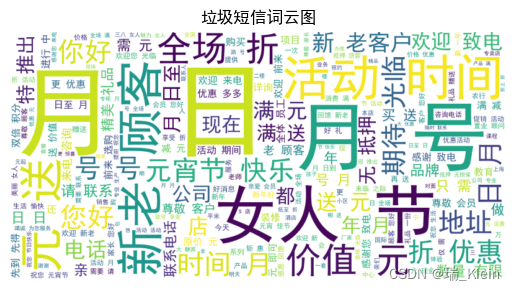

- # 8. 绘制垃圾短信词云图

- spam_words = ' '.join(data[data['label'] == 1]['分词内容'])

- # spam_words

-

- spam_wordcloud = WordCloud(font_path='simhei.ttf',width=800, height=400, background_color='white').generate(spam_words)

- plt.imshow(spam_wordcloud, interpolation='bilinear')

- plt.title('垃圾短信词云图')

- plt.axis('off')

- plt.show()

-

- ham_words = ' '.join(data[data['label'] == 0]['分词内容'])

- # ham_words

-

- # 9. 绘制非垃圾短信词云图

- ham_words = ' '.join(data[data['label'] == 0]['分词内容'])

- ham_wordcloud = WordCloud(font_path='simhei.ttf',width=800, height=400, background_color='white').generate(ham_words)

- plt.imshow(ham_wordcloud, interpolation='bilinear')

- plt.title('非垃圾短信词云图')

- plt.axis('off')

- plt.show()

-

- # 10. 加载、划分训练集和测试集

- X_train, X_test, y_train, y_test = train_test_split(X, data['label'], test_size=0.2, random_state=42)

-

- # 11. 计算条件概率

- classifier = MultinomialNB()

- classifier.fit(X_train, y_train)

-

- # 12. 判断所属分类

- y_pred = classifier.predict(X_test)

-

- print("处理的数据条数:",len(data))

- print("准确率:",classifier.score(X_test,y_test))

- print(classification_report(y_test, y_pred, target_names=['垃圾短信', '非垃圾短信']))

全部的词频: 词语 词频 192917 手机 33399 242851 活动 24488 118954 南京 17539 263987 电脑 17491 352274 飞机 16675 74142 中国 16628 100411 公司 16357 239646 没有 13232 85539 今天 12818 259982 现在 12684

垃圾短信词频: 词语 词频 242851 活动 18887 187875 您好 11338 88880 优惠 10761 231309 欢迎 8936 100411 公司 7113 141591 地址 6955 264103 电话 6904 99271 全场 6693 152777 女人 5584 201334 推出 5385

非垃圾短信词频: 词语 词频 192917 手机 31705 118954 南京 17391 263987 电脑 17129 352274 飞机 16667 74142 中国 15510 239646 没有 12774 85539 今天 11818 267084 百度 11470 5225 2015 11333 243687 浙江 11305

处理的数据条数: 800000 准确率: 0.97909375 precision recall f1-score support 垃圾短信 1.00 0.98 0.99 143939 非垃圾短信 0.85 0.97 0.90 16061 accuracy 0.98 160000 macro avg 0.92 0.97 0.95 160000 weighted avg 0.98 0.98 0.98 160000

声明:本文内容由网友自发贡献,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:【wpsshop博客】

推荐阅读

相关标签