
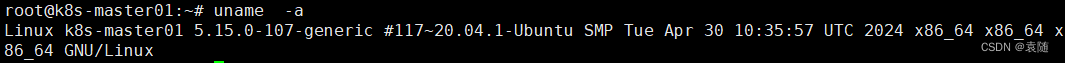

Building a k8s cluster on Ubuntu with kubeadm


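Server environment and requirements:

Three servers:

k8s-master01 192.168.26.130 OS: Ubuntu 20.04

k8s-worker01 192.168.26.140 OS: Ubuntu 20.04

k8s-worker02 192.168.26.150 OS: Ubuntu 20.04

Minimum configuration per server: 2 CPU cores, 2 GB RAM, 20 GB disk

1. Environment preparation (run on all servers)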

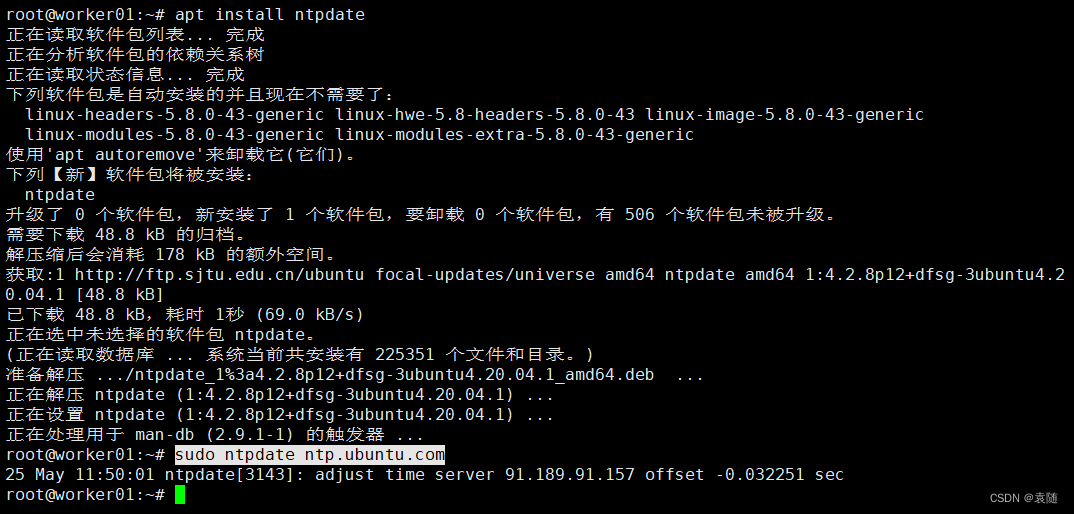

1. Time synchronization

timedatectl set-timezone Asia/Shanghai

sudo apt install ntpdate

sudo ntpdate ntp.ubuntu.com

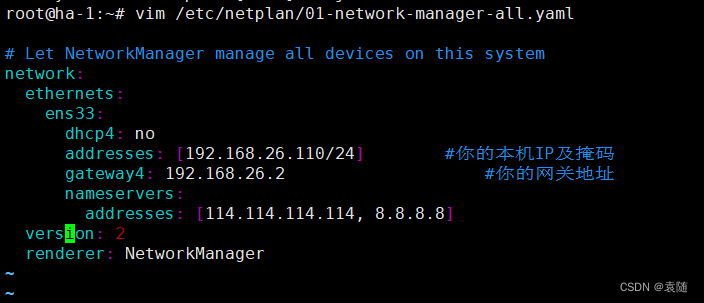

2. Static IP

Follow my separate CSDN article on configuring a static IP on Ubuntu ("Ubuntu系统设置静态固定IP保姆级教程").

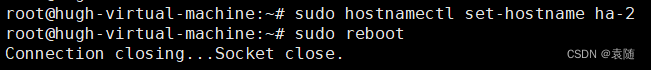

3. Set the hostname (use the name that matches each server: k8s-master01, k8s-worker01, or k8s-worker02)

sudo hostnamectl set-hostname k8s-master01

sudo reboot

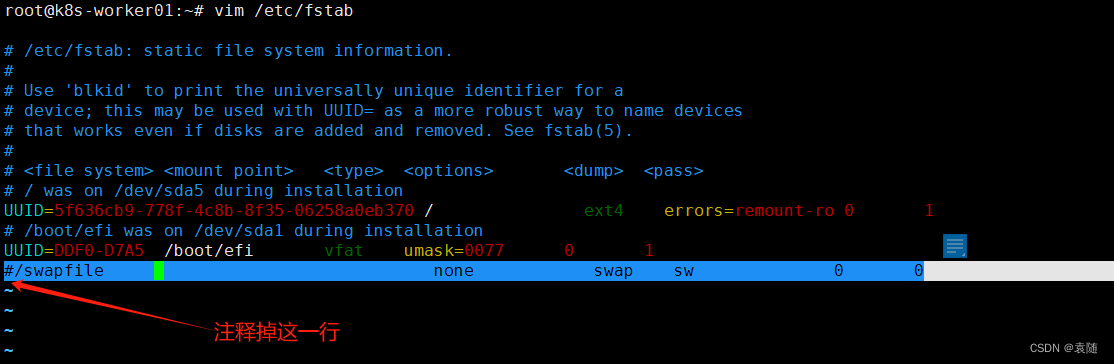

4. Disable swap


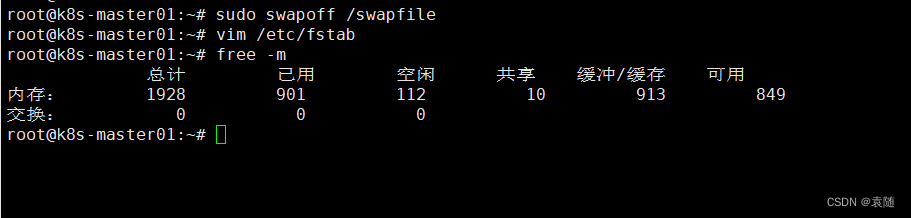

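Disable temporarily (this turns off every active swap device, including /swapfile):

sudo swapoff -a

Disable permanently:

Open /etc/fstab with vim and comment out the swap line, as shown in the figure below.

Then run free -m to confirm that swap is disabled.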
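The manual fstab edit above can also be scripted. Here is a minimal sketch, assuming the swap entry is an ordinary uncommented line whose type field is swap; the function name comment_out_swap and its optional path argument are made up for illustration.

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# comment_out_swap is a hypothetical helper, not part of any tool.
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  # Prefix '#' to uncommented lines that contain a whitespace-delimited
  # "swap" field. sed keeps a backup copy as <file>.bak.
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Run it as root with comment_out_swap /etc/fstab, then check the result with free -m after running swapoff or rebooting.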

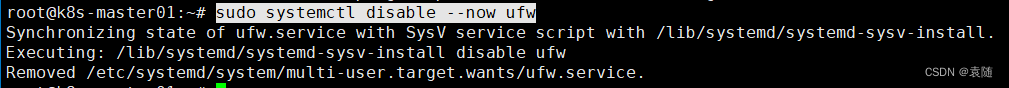

5. Disable the firewall

sudo systemctl disable --now ufw

6. Switch apt to a mirror inside China

Back up the configuration file:

sudo cp -a /etc/apt/sources.list /etc/apt/sources.list.bak

Switch to the Huawei Cloud mirror:

sudo sed -i "s@http://.*archive.ubuntu.com@http://repo.huaweicloud.com@g" /etc/apt/sources.list

sudo sed -i "s@http://.*security.ubuntu.com@http://repo.huaweicloud.com@g" /etc/apt/sources.list

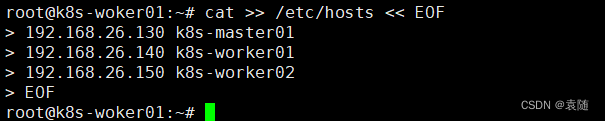

7. Hostname and IP address resolution

cat >> /etc/hosts << EOF

192.168.26.130 k8s-master01

192.168.26.140 k8s-worker01

192.168.26.150 k8s-worker02

EOF
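Because cat >> appends every time it runs, repeating the step above duplicates the entries. A minimal sketch of an idempotent alternative follows; add_host_entry is a hypothetical helper name, and the third argument is only there so the function can be tried on a scratch file.

```shell
# Sketch: append "IP name" to a hosts file only if the name is not there yet.
# add_host_entry is a hypothetical helper, not a standard command.
add_host_entry() {
  ip="$1"; name="$2"; hosts="${3:-/etc/hosts}"
  # -w matches the name as a whole word, so k8s-worker01 is not
  # mistaken for k8s-worker011.
  if ! grep -qw -- "$name" "$hosts"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
  fi
}
```

For example, as root: add_host_entry 192.168.26.130 k8s-master01, and likewise for the two workers.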

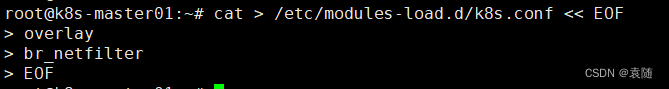

Configure kernel forwarding and bridge filtering:

cat > /etc/modules-load.d/k8s.conf << EOF

overlay

br_netfilter

EOF

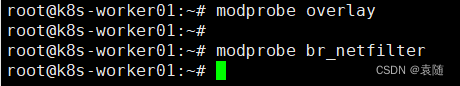

Load the modules:

modprobe overlay

modprobe br_netfilter

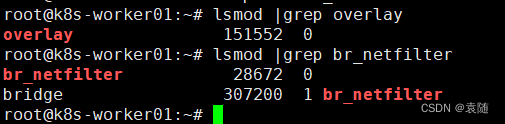

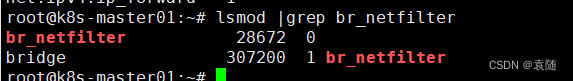

Check that they are loaded:

lsmod |grep overlay

lsmod |grep br_netfilter

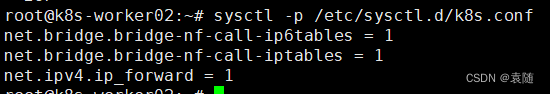

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

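Apply the settings:

sysctl -p /etc/sysctl.d/k8s.conf

Check that br_netfilter is loaded and that the settings took effect:

lsmod |grep br_netfilter

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward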
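Before applying the file it can help to confirm that all three keys are actually in it. The following is a rough sketch under that assumption; check_k8s_sysctl_conf is a made-up name, and it only inspects the file, not the live kernel values.

```shell
# Sketch: verify that a sysctl config file sets the three keys to 1.
# check_k8s_sysctl_conf is a hypothetical helper name.
check_k8s_sysctl_conf() {
  conf="${1:-/etc/sysctl.d/k8s.conf}"
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Allow optional spaces around '='; the value must be exactly 1.
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf"; then
      echo "missing or not set to 1: $key" >&2
      return 1
    fi
  done
}
```

Calling check_k8s_sysctl_conf with no argument checks /etc/sysctl.d/k8s.conf and returns non-zero if a key is absent.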

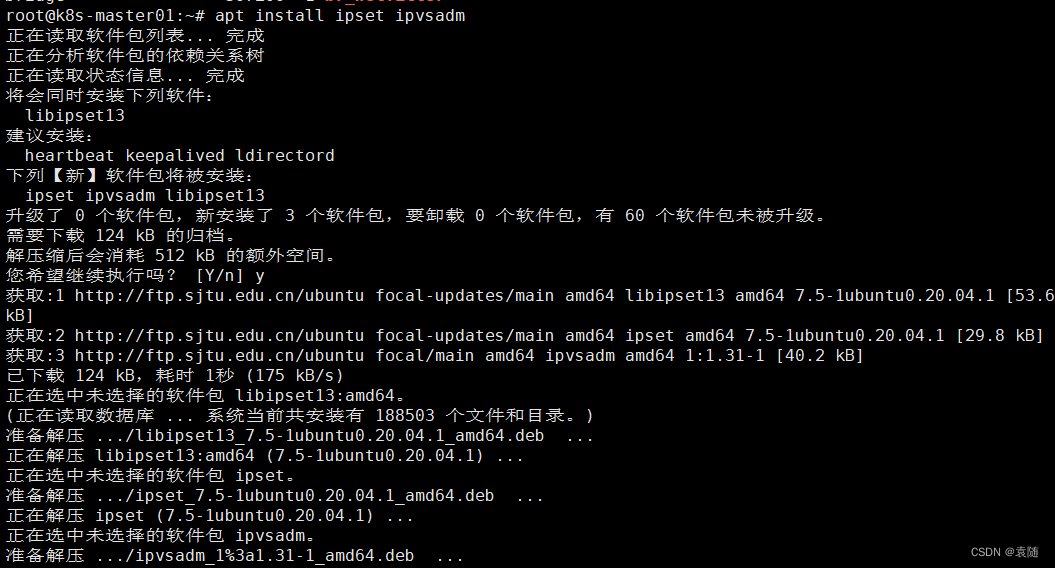

8. Install ipset and ipvsadm

apt install ipset ipvsadm

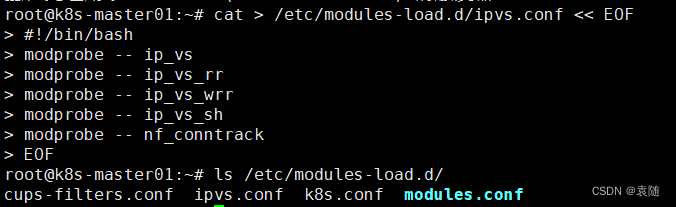

配置 ipvsadm 模块加载方式

cat > /etc/modules-load.d/ipvs.conf << EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

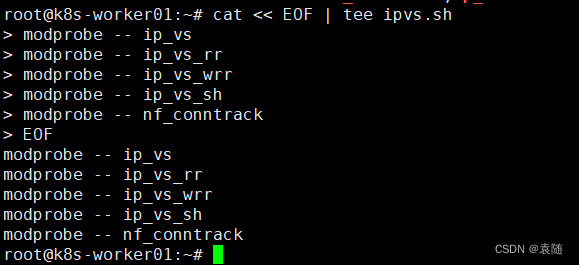

写成一个脚本文件

cat << EOF | tee ipvs.sh

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

授权运行检查:

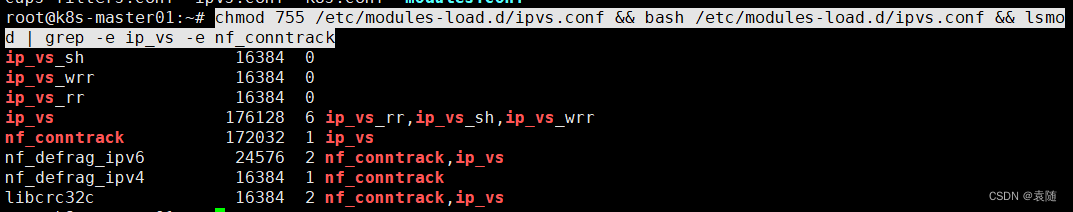

chmod 755 /etc/modules-load.d/ipvs.conf && bash /etc/modules-load.d/ipvs.conf && lsmod | grep -e ip_vs -e nf_conntrack
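Reading the lsmod output by eye is easy to get wrong, so here is a small sketch that lists which of the expected modules are absent. The helper name missing_modules is an assumption for illustration; it reads lsmod output on stdin and takes the module names as arguments.

```shell
# Sketch: print every requested module that does not appear in lsmod output.
# missing_modules is a hypothetical helper; stdin is the output of lsmod.
missing_modules() {
  # Skip the header line and keep only the module-name column.
  loaded=$(awk 'NR>1 {print $1}')
  for m in "$@"; do
    printf '%s\n' "$loaded" | grep -qx -- "$m" || echo "$m"
  done
}
```

Usage: lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack. Empty output means all of them are loaded. A module compiled into the kernel does not show up in lsmod, so a name printed here is a hint to investigate, not proof of a problem.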

9. Container runtime: containerd

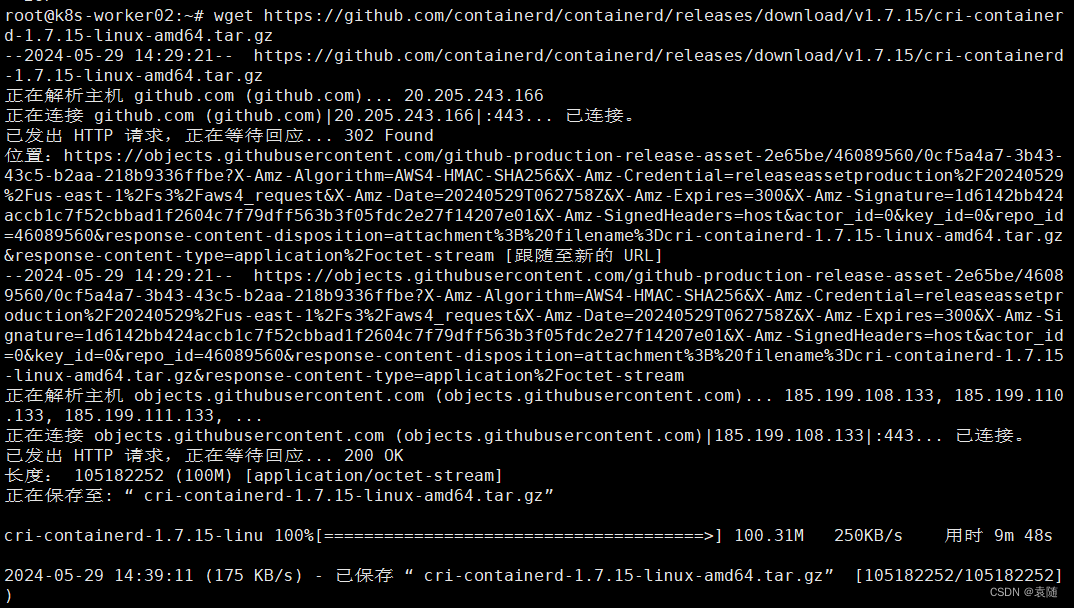

wget https://github.com/containerd/containerd/releases/download/v1.7.15/cri-containerd-1.7.15-linux-amd64.tar.gz

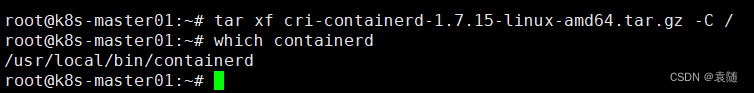

Extract the archive and verify:

tar xf cri-containerd-1.7.15-linux-amd64.tar.gz -C /

which containerd

10. Generate and modify the containerd configuration file

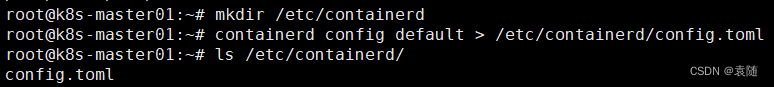

Create the configuration directory:

mkdir -p /etc/containerd

Generate the default configuration file:

containerd config default > /etc/containerd/config.toml

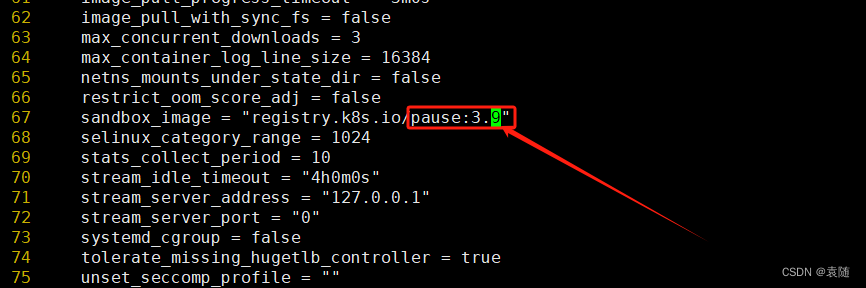

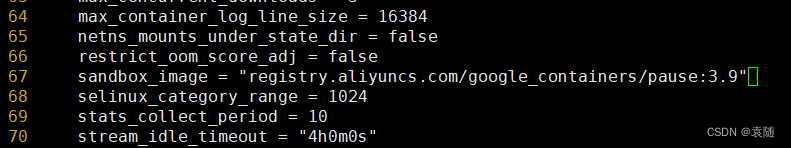

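Edit the configuration file and change the pause image version in sandbox_image from 3.8 to 3.9, the version kubeadm v1.30 uses. Alternatively, point it at the Aliyun copy; either choice works:

registry.aliyuncs.com/google_containers/pause:3.9

vim /etc/containerd/config.toml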
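The same edit can be made non-interactively. This is a minimal sketch, assuming the file was produced by containerd config default and so contains one sandbox_image line and one SystemdCgroup line. Setting SystemdCgroup to true is not mentioned in the text above; it is my assumption, based on the kubelet being configured with the systemd cgroup driver later on. The function name patch_containerd_config is hypothetical.

```shell
# Sketch: set the pause image and the systemd cgroup option in config.toml.
# patch_containerd_config is a hypothetical helper name.
patch_containerd_config() {
  cfg="${1:-/etc/containerd/config.toml}"
  pause_image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  # Keep the original indentation and key, replace only the value.
  # SystemdCgroup = true is an assumption, not a step from the article.
  sed -i.bak -E \
    -e "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1\"${pause_image}\"|" \
    -e 's|^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*).*|\1true|' \
    "$cfg"
}
```

After running it, grep -E 'sandbox_image|SystemdCgroup' /etc/containerd/config.toml shows the two changed lines, and systemctl restart containerd picks them up if the service is already running.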

Configure a registry mirror

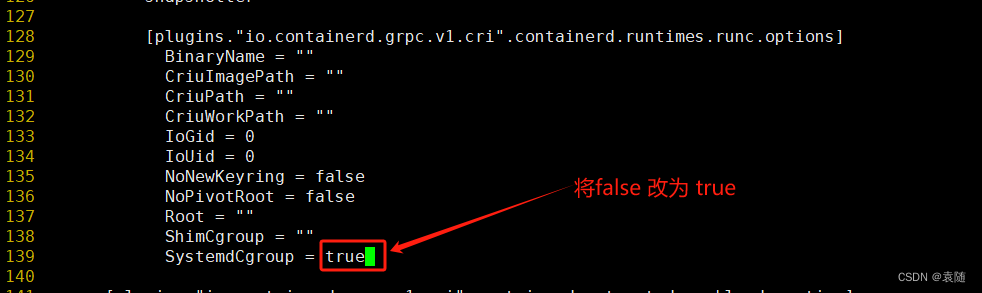

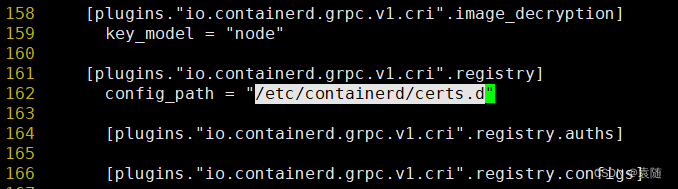

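Edit config.toml (vim /etc/containerd/config.toml) as shown in the figure above, so that the registry config_path points at /etc/containerd/certs.d.

Create the directory that matches the figure above:

mkdir -p /etc/containerd/certs.d/docker.io

Configure the mirror: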

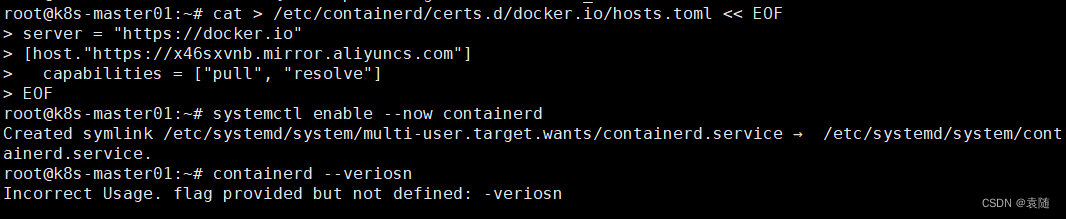

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://x46sxvnb.mirror.aliyuncs.com"]

capabilities = ["pull", "resolve"]

EOF

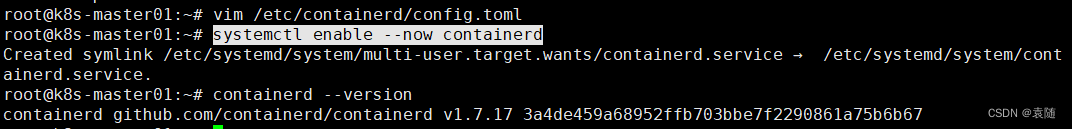

Start containerd and enable it at boot:

systemctl enable --now containerd

Check the version:

containerd --version

2、集群部署(所有服务器都需要操作)

1、下载用于kubernetes软件包仓库的公告签名密钥

k8s社区源(和下面的阿里云源二选一即可)

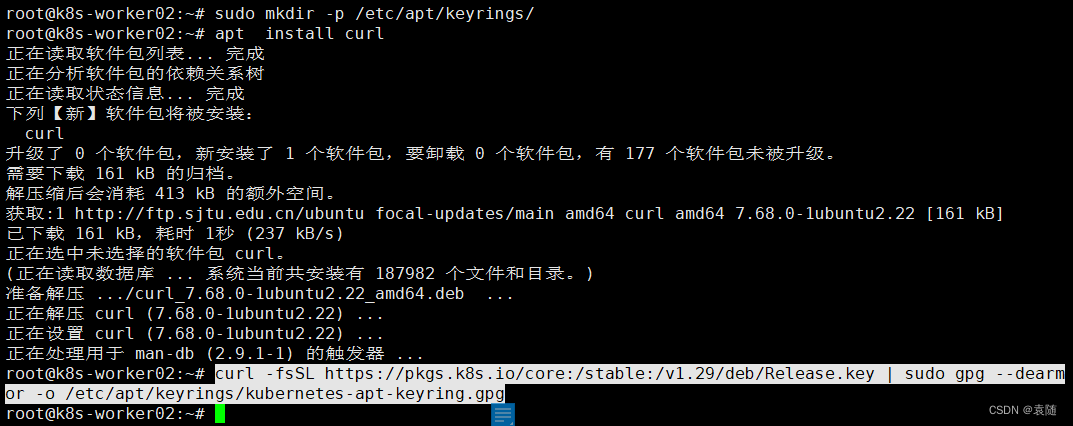

创建目录:

sudo mkdir -p /etc/apt/keyrings/

下载密钥

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

Aliyun repository:

Create the directory:

sudo mkdir -p /etc/apt/keyrings/

Download the key:

curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

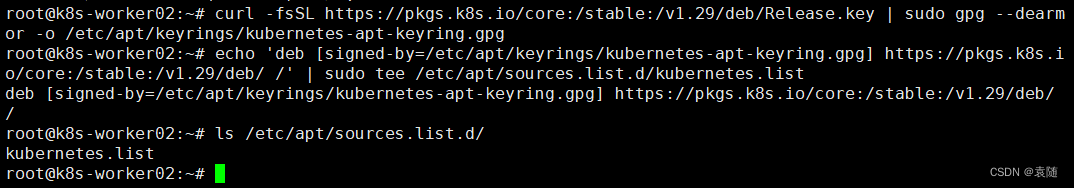

Kubernetes community repository (choose either this or the Aliyun repository below):

Add the Kubernetes apt repository:

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

Aliyun repository:

Add the Kubernetes apt repository:

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

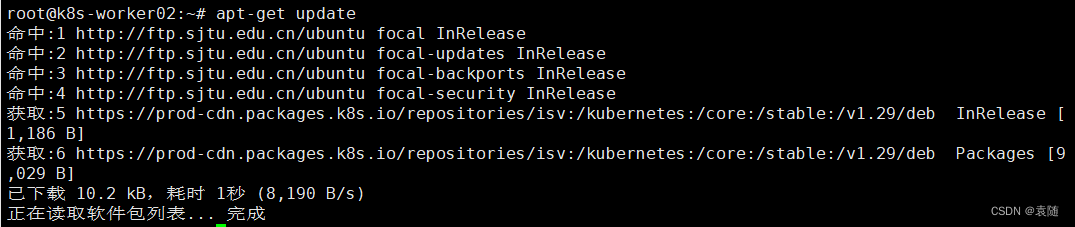

Update the package index:

apt-get update

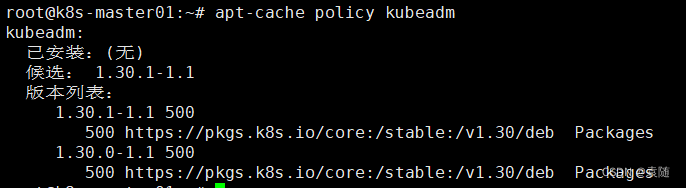

Check the available versions:

apt-cache policy kubeadm

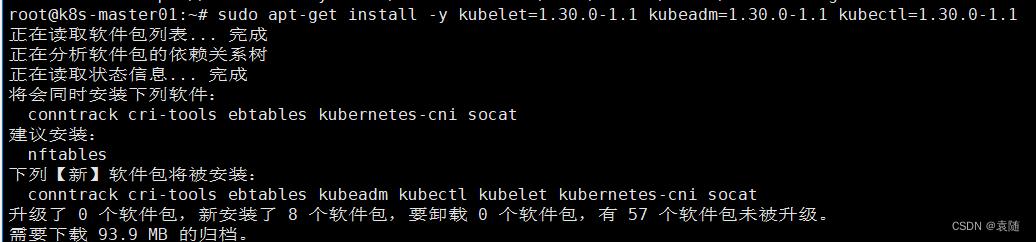

Install a specific version:

sudo apt-get install -y kubelet=1.30.0-1.1 kubeadm=1.30.0-1.1 kubectl=1.30.0-1.1

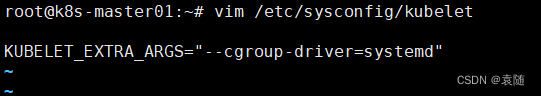

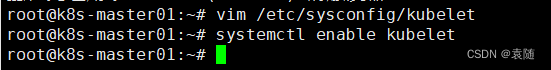

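Set the kubelet cgroup driver. On Ubuntu the kubelet package reads extra arguments from /etc/default/kubelet (/etc/sysconfig/kubelet is the path on RPM-based systems):

vim /etc/default/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

Enable kubelet at boot: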

systemctl enable kubelet

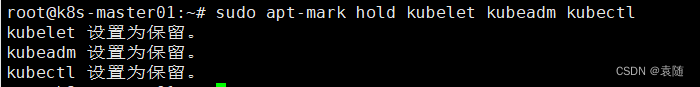

Hold the packages at this version so they are not upgraded automatically later:

sudo apt-mark hold kubelet kubeadm kubectl

Release the hold when you do want to upgrade:

sudo apt-mark unhold kubelet kubeadm kubectl

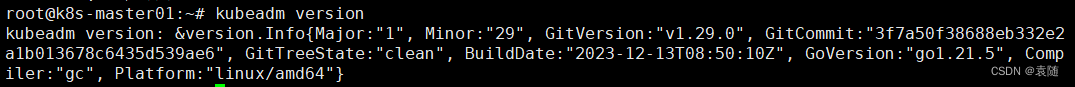

3. Cluster initialization (run on k8s-master01)

Check the version:

kubeadm version

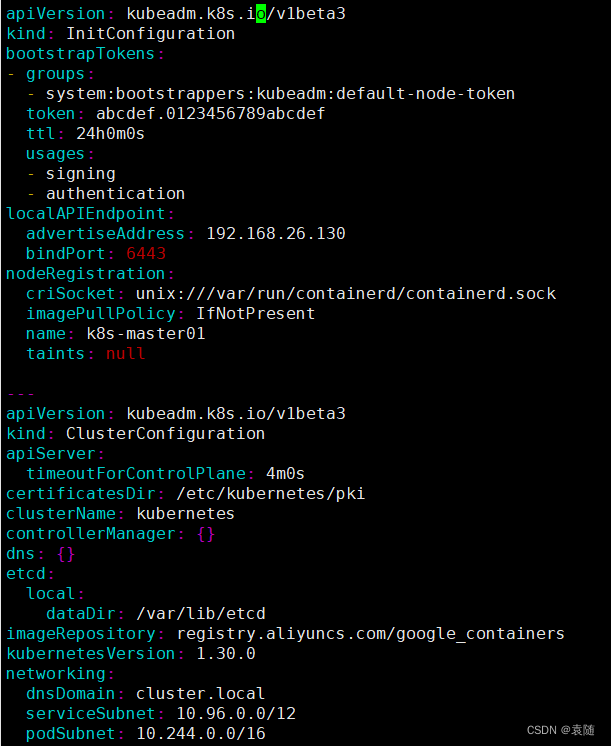

Generate the configuration file:

kubeadm config print init-defaults > kubeadm-config.yaml

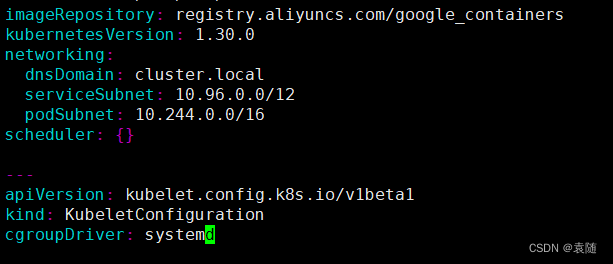

Edit the configuration file as shown in the figure below:

vim kubeadm-config.yaml

    apiVersion: kubeadm.k8s.io/v1beta3
    kind: InitConfiguration
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    localAPIEndpoint:
      advertiseAddress: 192.168.26.130
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///var/run/containerd/containerd.sock
      imagePullPolicy: IfNotPresent
      name: k8s-master01
      taints: null
    ---
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: ClusterConfiguration
    apiServer:
      timeoutForControlPlane: 4m0s
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kubernetesVersion: 1.30.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16
    scheduler: {}
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: systemd

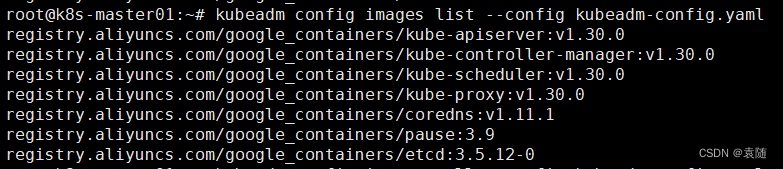

List the images that are required:

kubeadm config images list --config kubeadm-config.yaml

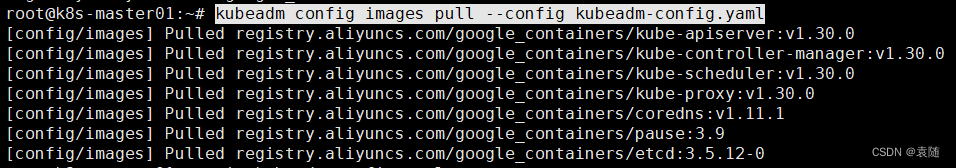

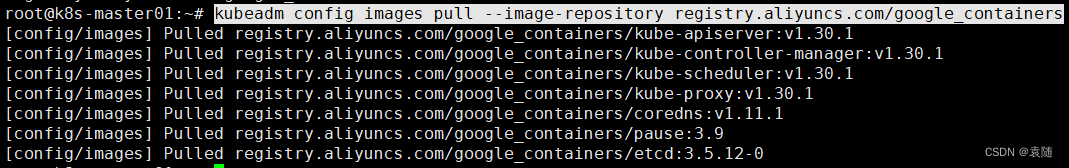

Pull the images:

kubeadm config images pull --config kubeadm-config.yaml

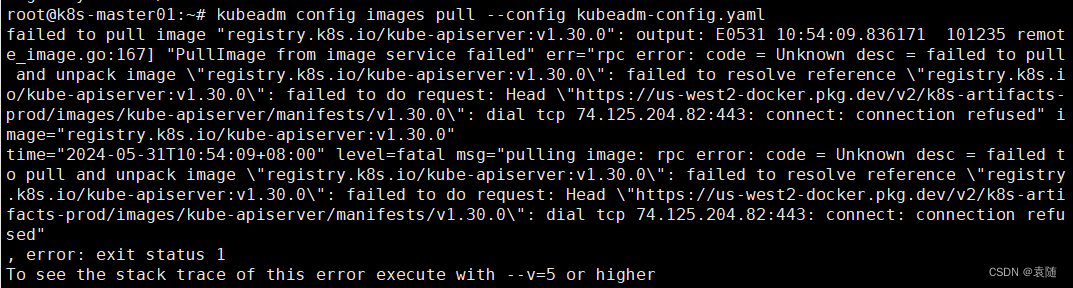

If the pull fails with an error like the one shown in the figure,

pull the images from the Aliyun registry instead:

kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

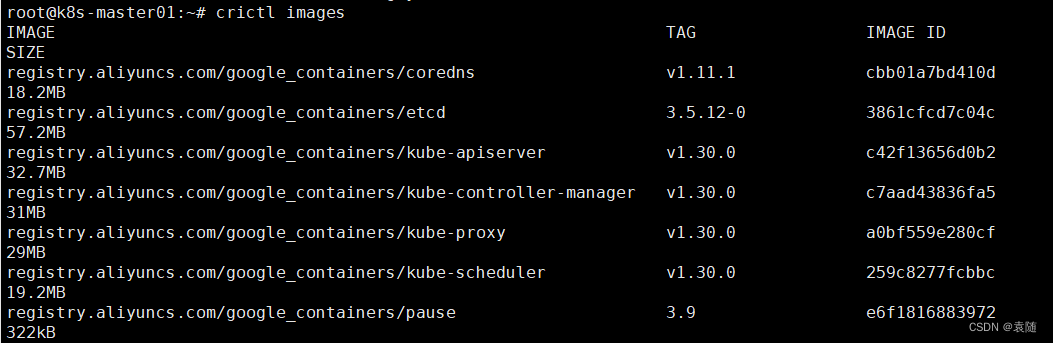

List the pulled images:

crictl images

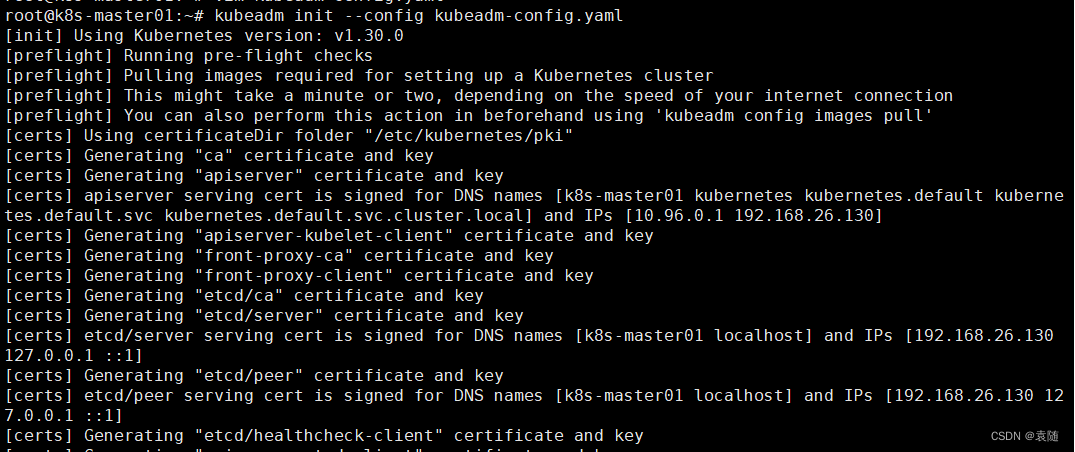

Initialize the cluster:

kubeadm init --config kubeadm-config.yaml

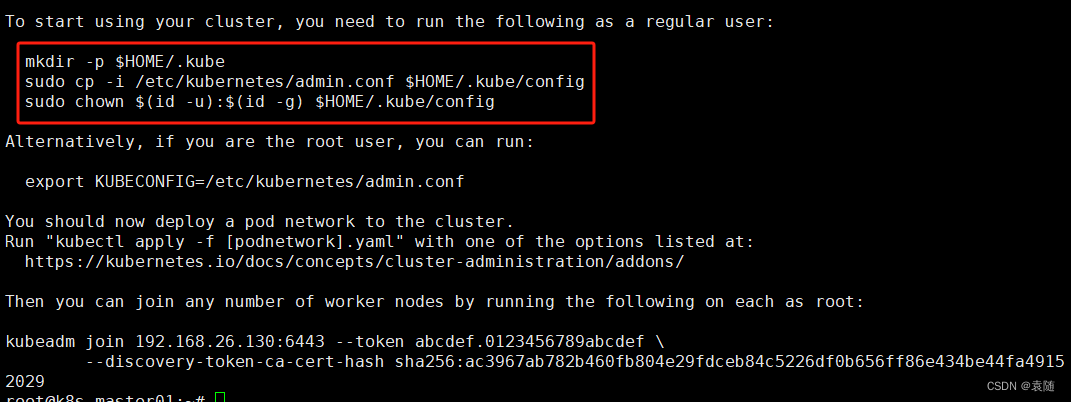

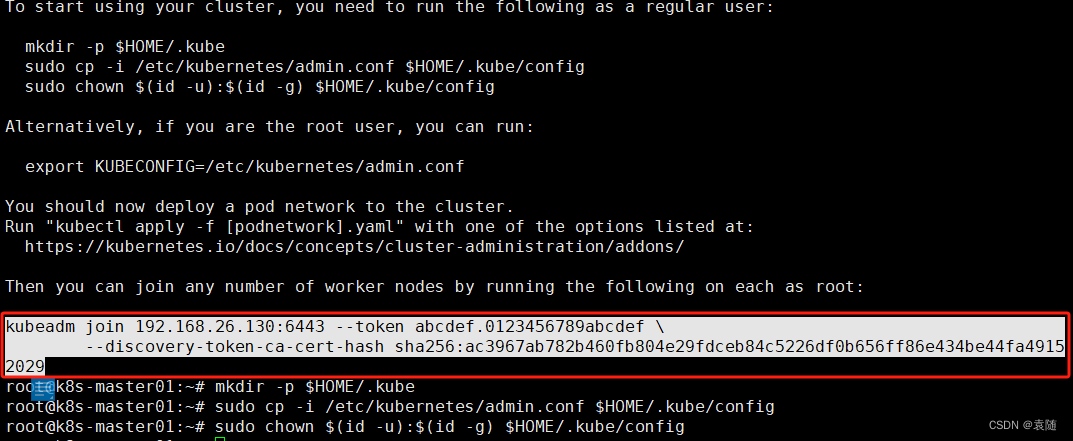

When initialization finishes, run the commands printed in its output:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

将下图命令完整复制到我们的k8s-worker节点执行此命令,将节点加入集群

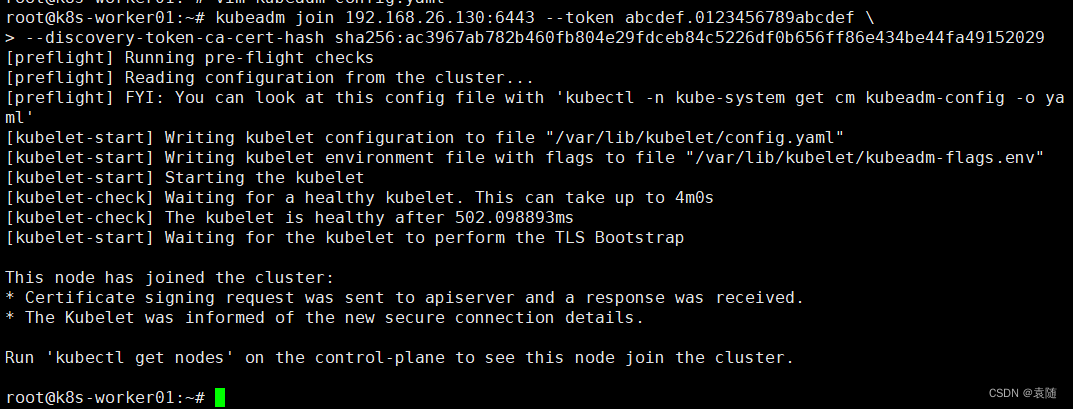

k8s-worker01和k8s-worker02节点执行上图复制的命令

kubeadm join 192.168.26.130:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:ac3967ab782b460fb804e29fdceb84c5226df0b656ff86e434be44fa49152029
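The bootstrap token in kubeadm-config.yaml has a ttl of 24h0m0s, so the join command above stops working after a day. Running kubeadm token create --print-join-command on k8s-master01 prints a fresh one. If only the sha256 value is needed, it can be recomputed from the CA certificate. The sketch below follows the openssl pipeline from the kubeadm documentation and assumes an RSA CA key, which is the kubeadm default; ca_cert_hash is a name I made up.

```shell
# Sketch: compute the value for --discovery-token-ca-cert-hash from a CA cert.
# ca_cert_hash is a hypothetical helper name; assumes an RSA CA key.
ca_cert_hash() {
  ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the public key, convert it to DER, hash it with SHA-256,
  # then strip the "(stdin)= " style prefix that openssl prints.
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the control-plane node, echo "sha256:$(ca_cert_hash)" prints the value in the form the join command expects.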

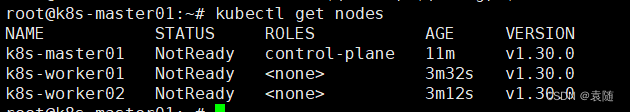

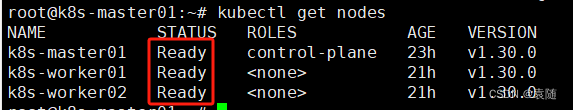

On k8s-master01, check whether the worker nodes have joined:

kubectl get nodes

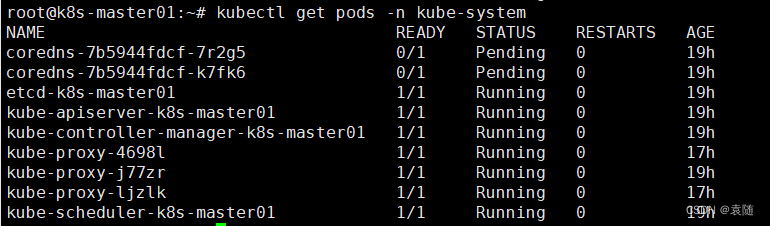

kubectl get pods -n kube-system

The figure above shows the nodes in the NotReady state. That is because no network plugin is installed yet, so the next step is to install one.

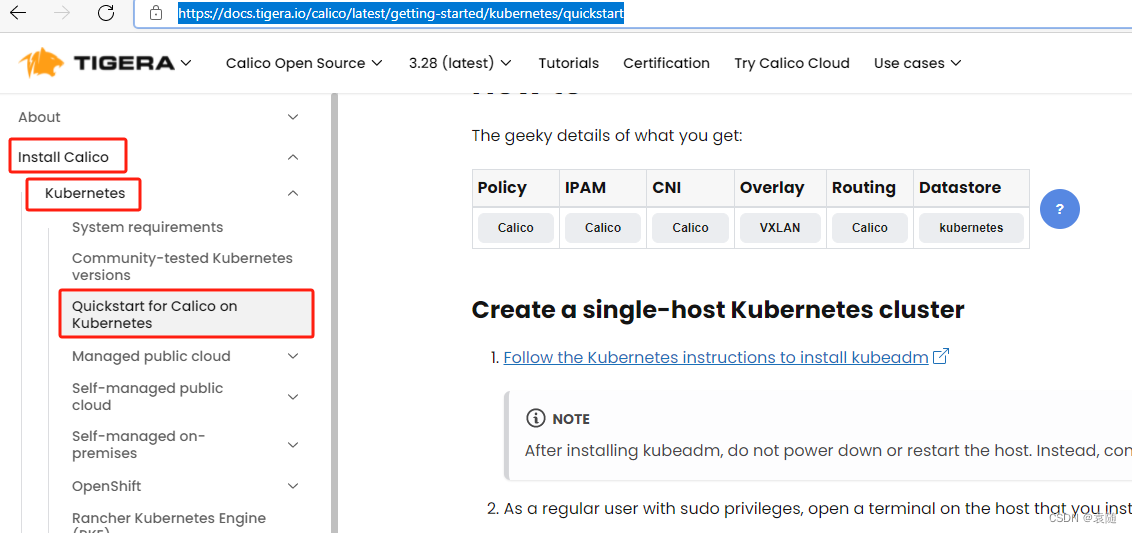

4. Install the network plugin (run on k8s-master01)

See the Calico website:

Quickstart for Calico on Kubernetes | Calico Documentation (tigera.io)

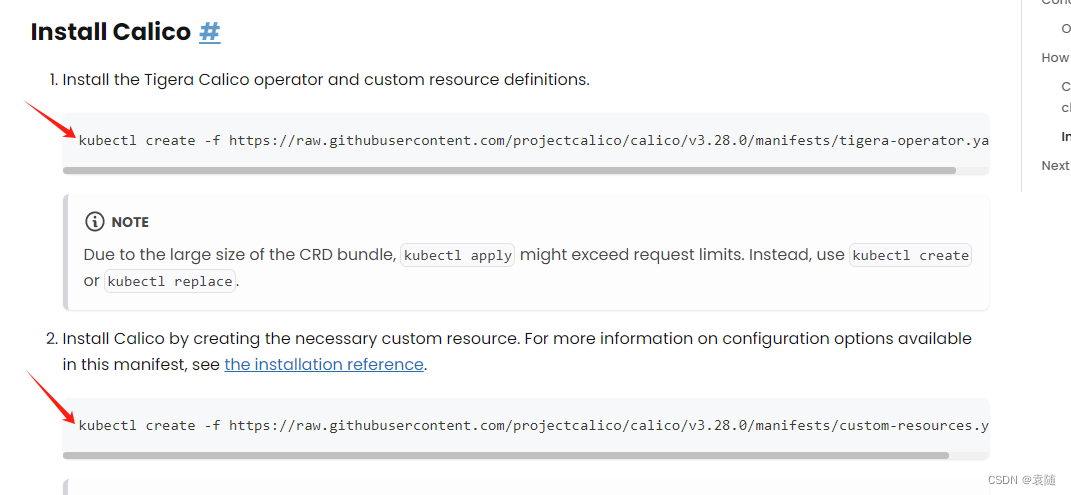

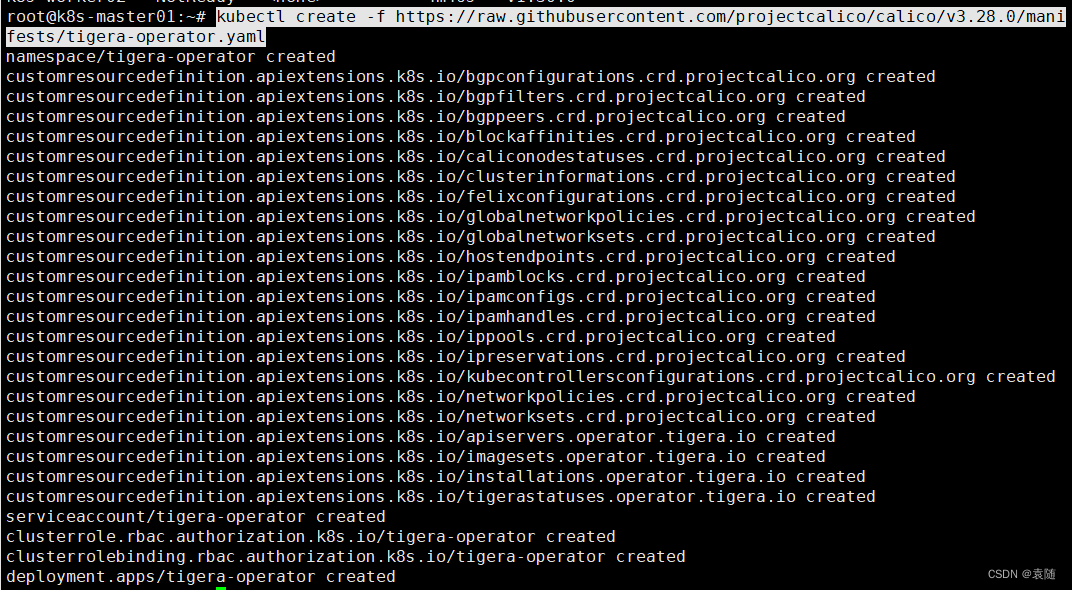

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/tigera-operator.yaml

Check that the operator is running:

kubectl get pods -n tigera-operator

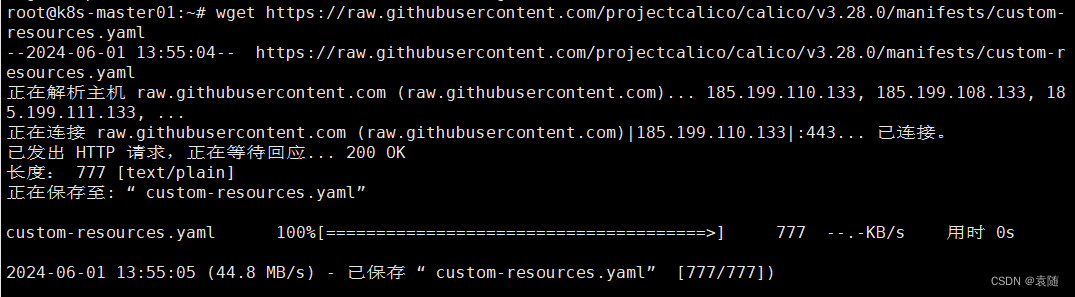

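Download the Calico custom resources file. Use wget with the link copied from the official site: the command on the site downloads and applies the file in one step, but here the file has to be edited before it is applied.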

wget https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/custom-resources.yaml

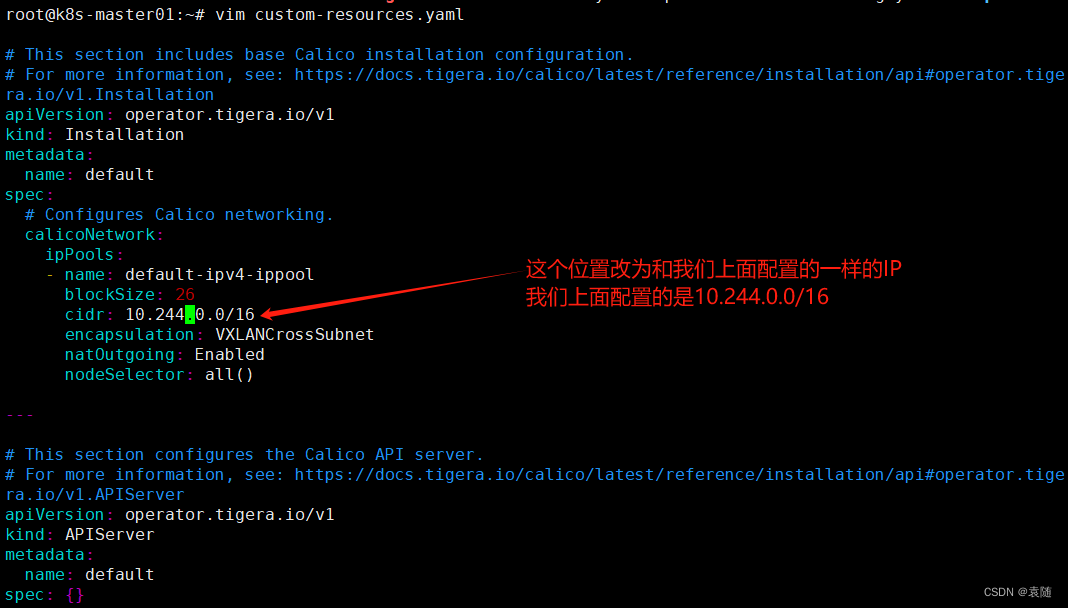

Edit the file

Note: the pod subnet set in kubeadm-config.yaml is 10.244.0.0/16, so the cidr value in this file must be changed to match.

vim custom-resources.yaml
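The cidr change can also be done with sed instead of vim. This is a minimal sketch, assuming the downloaded file has a single cidr: line in its IP pool section; set_calico_pod_cidr is a hypothetical helper name.

```shell
# Sketch: rewrite the cidr value in custom-resources.yaml.
# set_calico_pod_cidr is a hypothetical helper name.
set_calico_pod_cidr() {
  file="$1"; cidr="$2"
  # Keep the indentation and the "cidr:" key, replace only the value.
  # A backup is kept as <file>.bak.
  sed -i.bak -E "s|^([[:space:]]*cidr:[[:space:]]*).*|\1${cidr}|" "$file"
}
```

For this cluster: set_calico_pod_cidr custom-resources.yaml 10.244.0.0/16, then grep cidr custom-resources.yaml to confirm.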

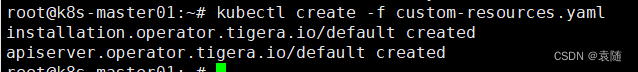

Apply the file:

kubectl create -f custom-resources.yaml

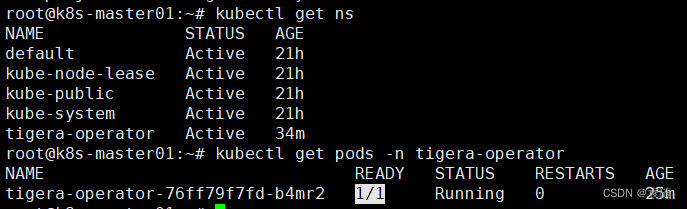

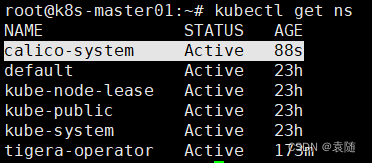

List the namespaces:

kubectl get ns

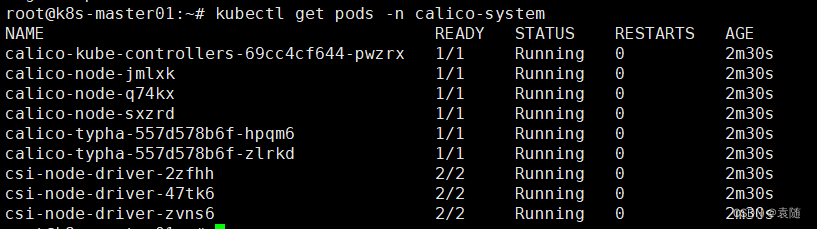

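List the pods in the calico-system namespace. If they are not all running yet, wait a while: they are probably still being created, and on a slow network this can take up to half an hour.

kubectl get pods -n calico-system

List the pods in the kube-system namespace:

kubectl get pods -n kube-system

Then check the cluster status again: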

kubectl get nodes
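To avoid rereading the table while waiting, the node list can be piped through a small counter. This is a sketch that assumes the default column layout of kubectl get nodes, where the second column is STATUS; count_not_ready is a made-up name.

```shell
# Sketch: count nodes whose STATUS column is anything other than "Ready".
# count_not_ready is a hypothetical helper; stdin is
# the output of: kubectl get nodes --no-headers
count_not_ready() {
  awk '$2 != "Ready" {n++} END {print n+0}'
}
```

Usage: kubectl get nodes --no-headers | count_not_ready. A result of 0 means every node reports Ready. A cordoned node shows a status such as Ready,SchedulingDisabled and is counted as not ready by this strict comparison.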

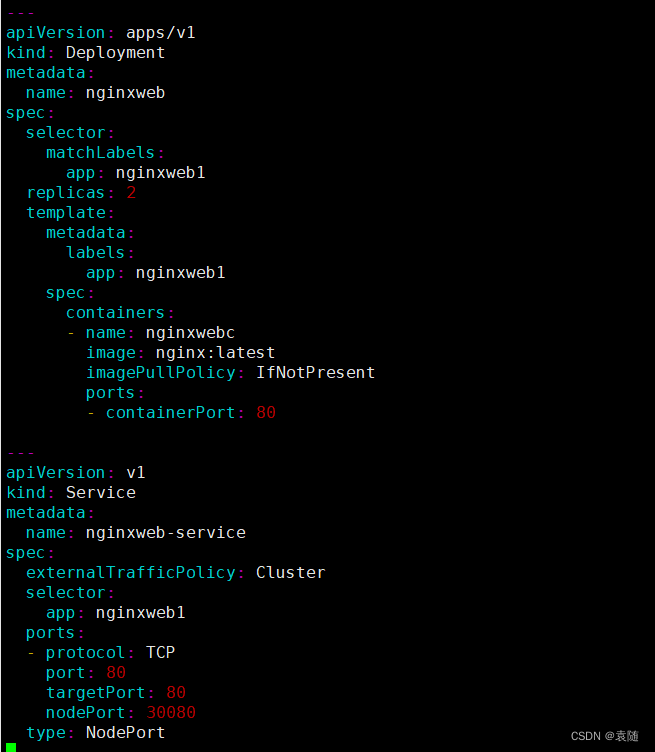

5. Create an nginx deployment to test the cluster (run on k8s-master01)

vim nginx.yaml

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginxweb
    spec:
      selector:
        matchLabels:
          app: nginxweb1
      replicas: 2
      template:
        metadata:
          labels:
            app: nginxweb1
        spec:
          containers:
          - name: nginxwebc
            image: nginx:latest
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginxweb-service
    spec:
      externalTrafficPolicy: Cluster
      selector:
        app: nginxweb1
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
        nodePort: 30080
      type: NodePort

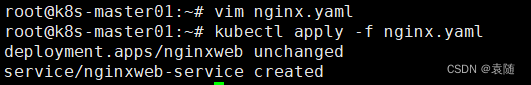

Create the resources:

kubectl apply -f nginx.yaml

Check that they were created:

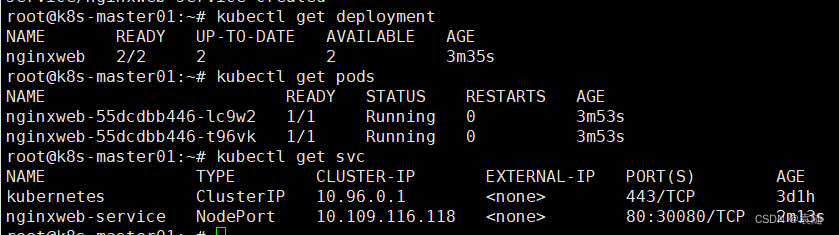

kubectl get deployment

kubectl get pods

kubectl get svc

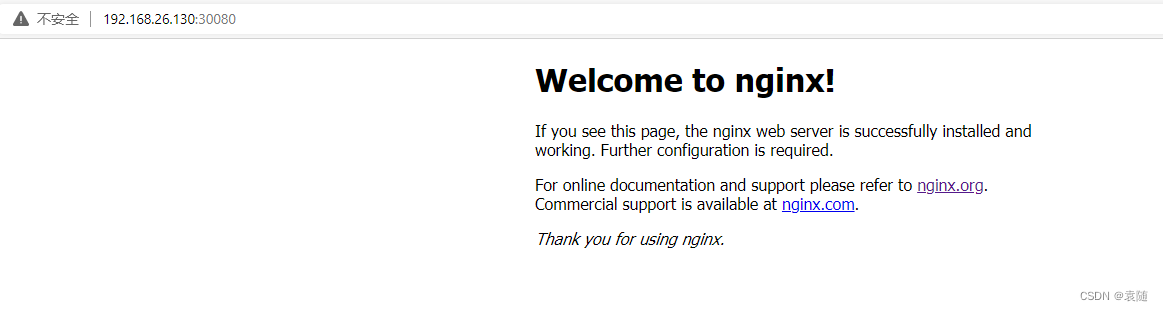

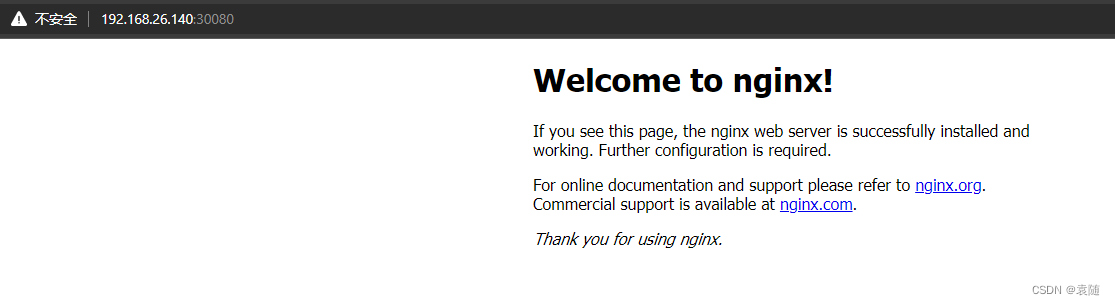

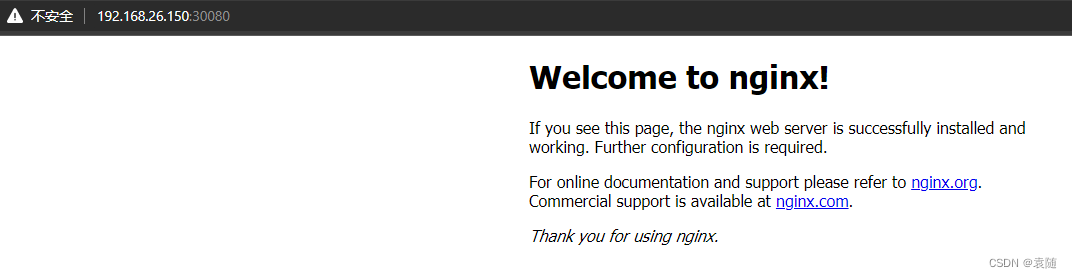

In a browser, open the IP of any server in the cluster on port 30080, and the nginx page should load.
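The same check can be run from a terminal against all three nodes. This is a small sketch using curl; check_nodeport is a hypothetical helper, and the 5-second timeout is an arbitrary choice.

```shell
# Sketch: request http://<ip>:<port>/ on each node and report the result.
# check_nodeport is a hypothetical helper name.
check_nodeport() {
  port="$1"; shift
  for ip in "$@"; do
    # -f makes curl fail on HTTP errors; --max-time bounds each attempt.
    if curl -fsS -o /dev/null --max-time 5 "http://${ip}:${port}/" 2>/dev/null; then
      echo "$ip:$port ok"
    else
      echo "$ip:$port FAILED"
    fi
  done
}
```

For this cluster: check_nodeport 30080 192.168.26.130 192.168.26.140 192.168.26.150.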

With that, the k8s cluster deployment is complete.