An Overview of Basic Computer Vision Tasks

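Preface: I had no exposure to deep learning as an undergraduate and only know some traditional image processing methods. I have just started learning computer vision, so I want to write something to summarize what I have learned. If you spot any problems, please let me know. To save time, parts of this article are adapted from related blog posts online; if anything infringes your rights, please contact me and I will remove it.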

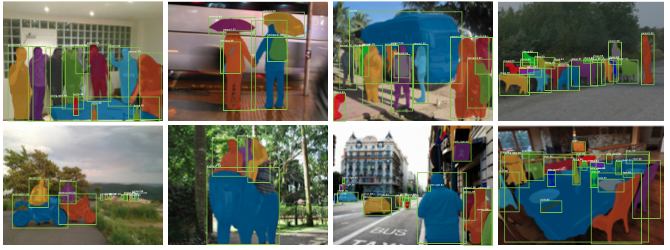

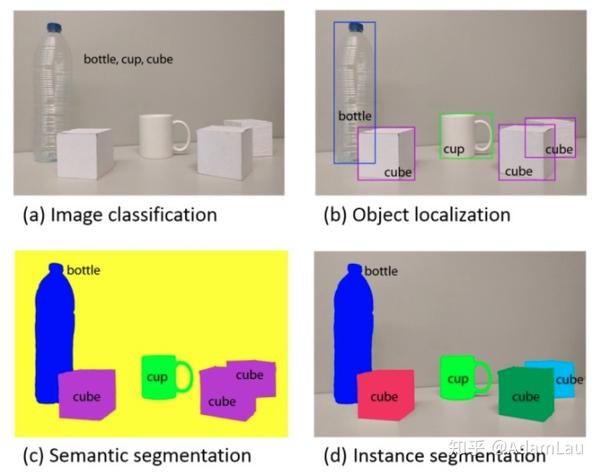

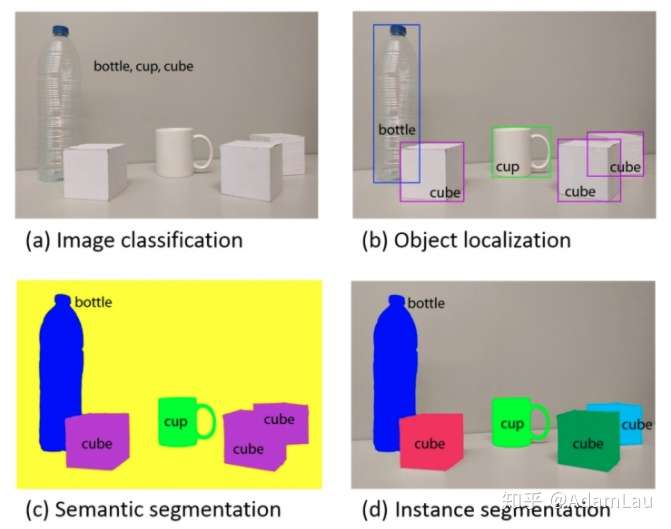

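There are four basic categories of computer vision tasks: classification, object detection, semantic segmentation, and instance segmentation.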

a) Classification

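Image classification takes an image as input and outputs the categories it contains. For example, in Figure 1(a) the classifier determines that the image contains a bottle, a cup, and a cube.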
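Because the example reports several categories for a single image, it is a multi-label setting. A minimal sketch of how such an output could be decoded, assuming the common approach of scoring each class independently with a sigmoid and keeping those above 0.5; the class list, the logit values, and the helper `present_classes` are hypothetical:

```python
import math

# Hypothetical class names, for illustration only.
CLASS_NAMES = ["bottle", "cup", "cube", "person"]


def sigmoid(z: float) -> float:
    """Map a raw score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def present_classes(logits, names, threshold=0.5):
    """Return the names whose independent sigmoid probability exceeds threshold.

    Each class is scored on its own, so several classes can be reported for
    one image, unlike a single softmax followed by argmax.
    """
    return [name for name, z in zip(names, logits) if sigmoid(z) > threshold]


# Made-up logits a classifier might produce for the image in Figure 1(a).
logits = [2.3, 1.1, 0.4, -3.0]
print(present_classes(logits, CLASS_NAMES))  # ['bottle', 'cup', 'cube']
```

For single-label classification (one category per image, as in CIFAR-10), a softmax over all classes followed by argmax is used instead.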

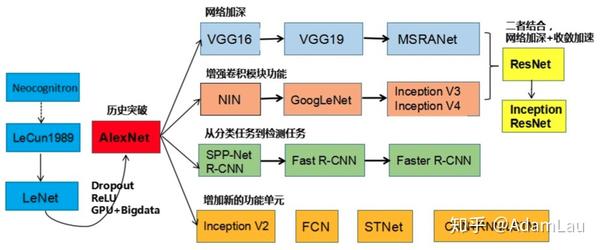

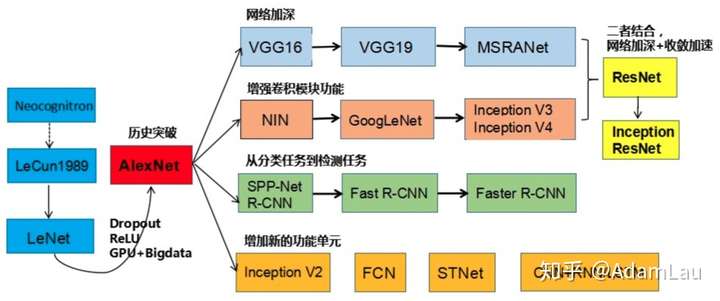

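The classification networks covered below, most of them ILSVRC winners or runners-up: LeNet, AlexNet (ILSVRC 2012 winner), VGG (ILSVRC 2014 runner-up), GoogLeNet (ILSVRC 2014 winner), ResNet (ILSVRC 2015 winner), and DenseNet.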

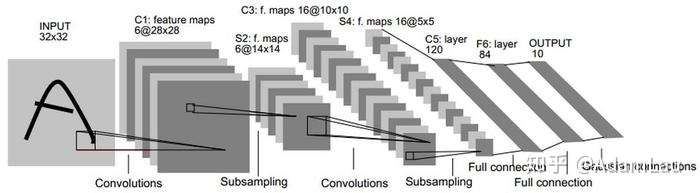

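1. LeNet-5: Proposed in 1998 by Yann LeCun, the founding father of convolutional neural networks, to solve handwritten digit recognition. It fixed the basic CNN architecture that has been used ever since: convolutional layers, pooling layers, and fully connected layers. Its structure is conv1 (6) -> pool1 -> conv2 (16) -> pool2 -> fc3 (120) -> fc4 (84) -> fc5 (10) -> softmax, where the number in parentheses is the number of channels for a convolutional layer or output units for a fully connected layer. The "5" in the name refers to its 5 conv/fc layers.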
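Assuming a 32x32 input, unpadded 5x5 convolutions with stride 1, and 2x2 pooling with stride 2, the feature-map sizes can be traced with the standard output-size formula floor((W - K + 2P) / S) + 1. This is where the `16*5*5` input size of `fc1` in the implementation that follows comes from. The helpers `conv_out` and `lenet5_shapes` are hypothetical names for this sketch:

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a conv or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


def lenet5_shapes(input_size: int = 32):
    """Trace (layer, channels, side length) through LeNet-5's conv/pool stages."""
    shapes = []
    s = conv_out(input_size, kernel=5)       # conv1: 6 maps, 5x5 kernel
    shapes.append(("conv1", 6, s))
    s = conv_out(s, kernel=2, stride=2)      # pool1: 2x2 window, stride 2
    shapes.append(("pool1", 6, s))
    s = conv_out(s, kernel=5)                # conv2: 16 maps, 5x5 kernel
    shapes.append(("conv2", 16, s))
    s = conv_out(s, kernel=2, stride=2)      # pool2: 2x2 window, stride 2
    shapes.append(("pool2", 16, s))
    return shapes


for name, channels, side in lenet5_shapes():
    print(f"{name}: {channels} x {side} x {side}")
# conv1: 6 x 28 x 28
# pool1: 6 x 14 x 14
# conv2: 16 x 10 x 10
# pool2: 16 x 5 x 5   -> flattened to 16 * 5 * 5 = 400 inputs for the first fc layer
```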

Innovation: it defined the basic building blocks of CNNs and is the ancestor of all later CNNs.
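To make these building blocks concrete, here is a minimal pure-Python sketch of one convolution, a ReLU, 2x2 max pooling, and a fully connected layer applied to a single-channel 5x5 input. The image, kernel, weights, and bias values are made up for illustration, and ReLU plus max pooling follow the modern convention rather than LeNet-5's original tanh-style activation and average-pooling subsampling:

```python
def conv2d(image, kernel):
    """Single-channel 'valid' convolution with stride 1.

    Like CNN libraries, this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise max(0, v)."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def fully_connected(vector, weights, bias):
    """Each output is a weighted sum of every input plus a bias."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]


image = [
    [1, 2, 3, 0, 1],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 2],
    [2, 1, 0, 1, 0],
    [0, 2, 1, 0, 1],
]
kernel = [[1, -1], [1, -1]]  # positive where intensity drops from left to right

features = max_pool2x2(relu(conv2d(image, kernel)))  # conv -> ReLU -> pool
flat = [v for row in features for v in row]          # flatten before the fc layer
weights = [[1, 0, 0, 1], [0, 1, -1, 0]]              # 2 outputs, 4 inputs
bias = [0.5, 0.0]
scores = fully_connected(flat, weights, bias)
print(features, scores)  # [[0, 2], [2, 1]] [1.5, 0.0]
```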

LeNet implemented in PyTorch, applied to CIFAR-10:

```python
import torch.nn as nn
import torch.nn.functional as func


class LeNet(nn.Module):
    """LeNet-5-style network for 3x32x32 CIFAR-10 images.

    Uses ReLU and max pooling (a modern variant); the original LeNet-5 used
    tanh-style activations and average-pooling subsampling.
    """

    def __init__(self):
        super(LeNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, kernel_size=5)    # 3x32x32 -> 6x28x28
        self.conv2 = nn.Conv2d(6, 16, kernel_size=5)   # 6x14x14 -> 16x10x10
        self.fc1 = nn.Linear(16 * 5 * 5, 120)          # flattened 16x5x5 feature maps
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)                   # one output per CIFAR-10 class

    def forward(self, x):
        x = func.relu(self.conv1(x))
        x = func.max_pool2d(x, 2)                      # -> 6x14x14
        x = func.relu(self.conv2(x))
        x = func.max_pool2d(x, 2)                      # -> 16x5x5
        x = x.view(x.size(0), -1)                      # flatten to (batch, 400)
        x = func.relu(self.fc1(x))
        x = func.relu(self.fc2(x))
        x = self.fc3(x)                                # raw logits; softmax is applied by the loss (e.g. nn.CrossEntropyLoss)
        return x
```

2. AlexNet: Won ILSVRC 2012, beating the runner-up by 10.9 percentage points in top-5 error. Network structure: conv1 (96) -> pool1 -> conv2 (256) -> pool2 -> conv3 (384) -> conv4 (384) -> conv5 (256) -> pool5 -> fc6 (4096) -> fc7 (4096) -> fc8 (1000) -> softmax

![image](https://pic1.zhimg.com/80/v2-9d6827da91e857836f6ffdef99cb9a54_720w.jpg)

Innovations: (1) A **deeper** network. (2) The **ReLU** activation function, which does not saturate for positive inputs, so it has better gradient behavior and trains faster. (3) Extensive **data augmentation** to prevent overfitting. (4) It made the community aware of **GPU acceleration** for training. (5) **Dropout**: neurons in the fully connected layers (this model applies dropout to the first two fully connected layers) are deactivated with some probability (e.g. 0.5). Deactivated neurons take part in neither the forward nor the backward pass, so roughly half of the neurons are inactive on each training step. At test time all neurons are used and their outputs are multiplied by 0.5 (the keep probability), so that the expected activations match those seen during training. Modern frameworks such as PyTorch's `nn.Dropout` instead scale the kept activations by 1/(1 - p) during training, so no rescaling is needed at test time. Dropout effectively alleviates overfitting.

AlexNet implemented in PyTorch, applied to CIFAR-10:

```python
NUM_CLASSES = 10
```
