- 1Java获取cpu和内存相关参数_java获取内存信息

- 2前端技能树,面试复习第 27 天—— React Diff 算法的原理,和 Vue 有什么区别 | 虚拟 DOM | key 的原理,为什么要用_react和vuediff算法区别

- 3vue2引入Element UI组件去创建新页面的详细步骤--项目阶段2

- 4批处理命令实现批量创建快捷方式_批处理创建快递方式

- 52021全球程序员收入报告,字节跳动年薪274万元排第5!

- 6好用的URL

- 7力扣206.反转链表(C++)_力扣206题c++测试

- 8Android网络编程(六)OkHttp3用法全解析_android studio 网络编程 okhttp3 用法

- 924届双非日常实习测开面经-腾讯、字节、英伟达、OPPO、深信服、Red Hat面经大合集_英伟达 面经

- 10pythonw. exe_pythonw.exe或python.exe?

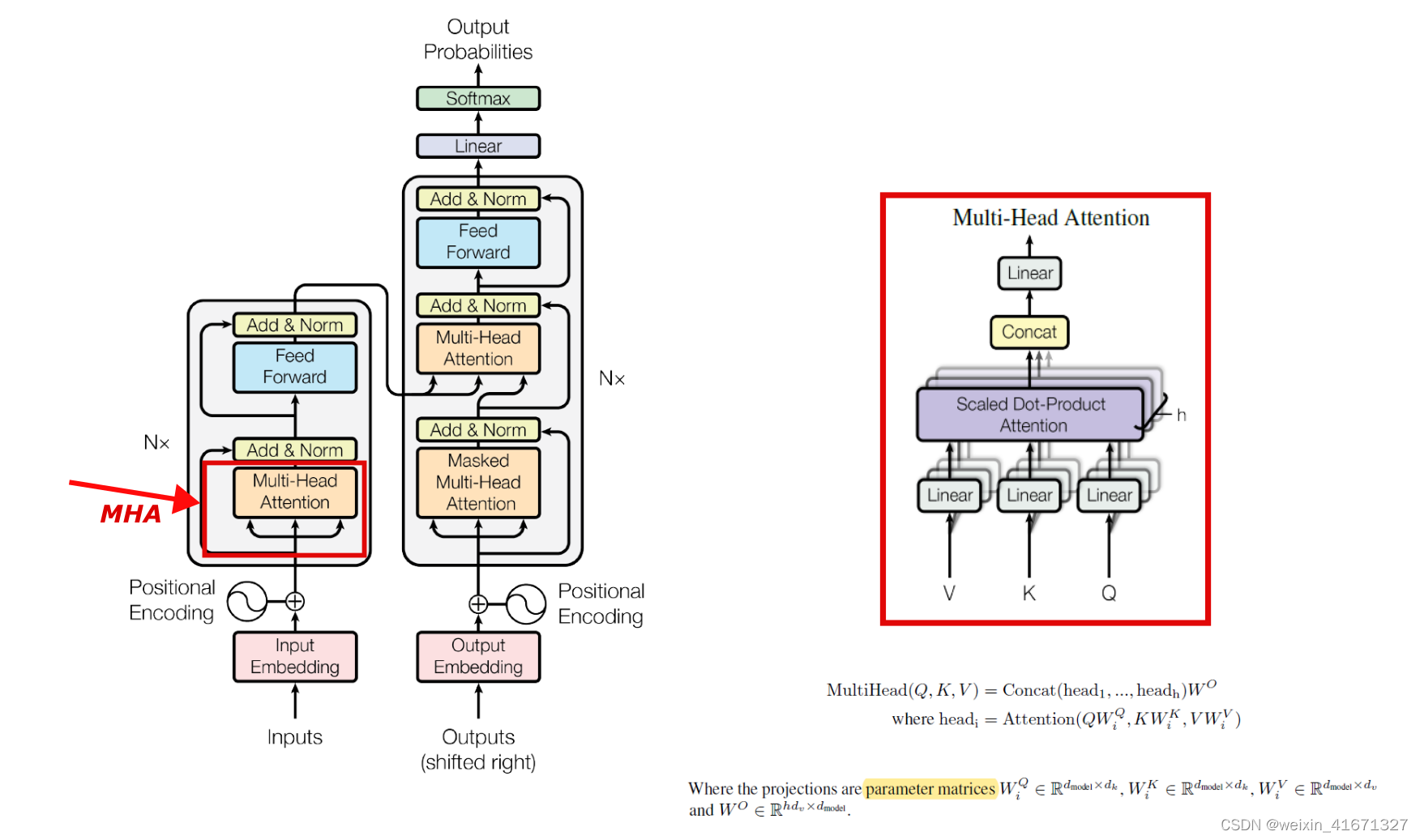

深度学习注意力机制(MHA)的训练(Eigen)_深度学习mha

赞

踩

简介

本文使用Eigen3在Transformer模型中实现多头注意力的前向传播和反向传播。具体来说,这个eigenMHA (eigenDNN)【源码:https://github.com/jundaf2/eigenMHA】所对应了大致如下的cuDNN的api的功能:

- cudnnCreateAttnDescriptor()

- cudnnSetAttnDescriptor()

- cudnnGetAttnDescriptor()

- cudnnDestroyAttnDescriptor()

- cudnnGetMultiHeadAttnBuffers()

- cudnnGetMultiHeadAttnWeights()

- cudnnMultiHeadAttnForward()

- cudnnMultiHeadAttnBackwardData()

- cudnnMultiHeadAttnBackwardWeights()

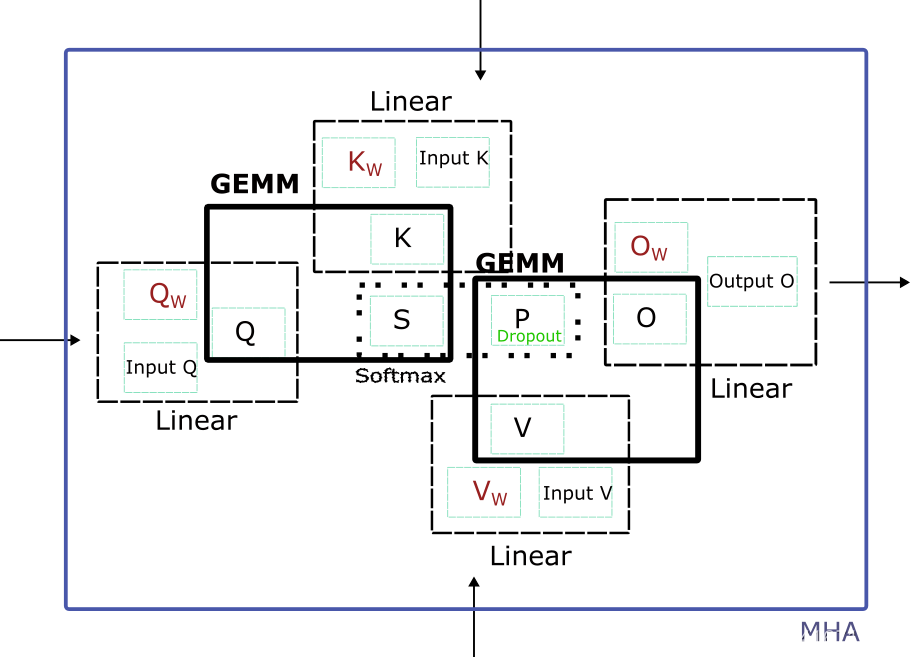

简单来说,MHA作为Tranformer模型中的一个模块,在训练中既要在需要将embedding,通过Q K V的线性层、S=Q*K^T(GEMM)、P=Softmax(Mask(S))、P=Dropout(P)、O=P*V(GEMM)、O的线性层 前向传播到下一层(可能是Layernorm),然后再在反向传播中,将输出O的梯度,通过O的线性层、O=P*V(GEMM)、P=Dropout(P)、P=Softmax(S)、S=Q*K^T(GEMM)、Q K V的线性层反向传播回输入端(embedding的梯度)。

MHA训练过程涉及到的变量

MHA训练前向

- 输入QKV线性层的embeddings (前向起始点)

Q i n K i n V i n \mathbf{Q}_{in} \quad \mathbf{K}_{in} \quad \mathbf{V}_{in} QinKinVin

- 线性层权重和偏置

W Q b Q \mathbf{W}_{Q} \quad \mathbf{b}_{Q} WQbQ

W K b K \mathbf{W}_{K} \quad \mathbf{b}_{K} WKbK

W V b V \mathbf{W}_{V} \quad \mathbf{b}_{V} WVbV

W O b O \mathbf{W}_{O} \quad \mathbf{b}_{O} WObO

- 计算中间变量

- O的线性层输出值 和 目标值

O o u t O t a r g e t \mathbf{O}_{out}\quad\mathbf{O}_{target} OoutOtarget

MHA前向传播公式如下:

Q = Q i n ∗ W Q + b Q \mathbf{Q} = \mathbf{Q}_{in}*\mathbf{W}_{Q}+\mathbf{b}_{Q} Q=Qin∗WQ+bQ

K = K i n ∗ W K + b K \mathbf{K} = \mathbf{K}_{in}*\mathbf{W}_{K}+\mathbf{b}_{K} K=Kin∗WK+bK

V = V i n ∗ W V + b V \mathbf{V} = \mathbf{V}_{in}*\mathbf{W}_{V}+\mathbf{b}_{V} V=Vin∗WV+bV

S = Q ∗ K T \mathbf{S} = \mathbf{Q}*\mathbf{K}^T S=Q∗KT

KaTeX parse error: Undefined control sequence: \bfrac at position 42: …ask(\mathbf{S}*\̲b̲f̲r̲a̲c̲{1}{\sqrt{d}}))…

P = D r o p o u t F W D ( P ) \mathbf{P} = DropoutFWD(\mathbf{P}) P=DropoutFWD(P)

O = P ∗ V \mathbf{O}=\mathbf{P}*\mathbf{V} O=P∗V

O o u t = O ∗ W O + b O \mathbf{O}_{out} = \mathbf{O}*\mathbf{W}_{O}+\mathbf{b}_{O} Oout=O∗WO+bO

MSE Loss

在这个训练的计算结构中,反向传播的起始点是损失函数,因为我们仅仅关注于MHA本身,因此将MHA的输出

O

o

u

t

\mathbf{O}_{out}

Oout和预设的目标

O

t

a

r

g

e

t

\mathbf{O}_{target}

Otarget输入MSE函数取得误差

l

o

s

s

loss

loss和反向传播的梯度

g

r

a

d

_

O

o

u

t

\mathbf{grad\_O}_{out}

grad_Oout 。

l

o

s

s

=

M

S

E

L

o

s

s

(

O

o

u

t

,

O

t

a

r

g

e

t

)

loss = MSELoss(\mathbf{O}_{out},\mathbf{O}_{target})

loss=MSELoss(Oout,Otarget)

MHA训练反向

- MHA输出(O的线性层输出)的梯度 (来自于 LayerNorm,反向起始点)

g r a d _ O o u t \mathbf{grad\_O}_{out} grad_Oout

- 中间变量的梯度

- 输入的梯度

g r a d _ Q i n g r a d _ K i n g r a d _ V i n \mathbf{grad\_Q}_{in} \quad \mathbf{grad\_K}_{in} \quad \mathbf{grad\_V}_{in} grad_Qingrad_Kingrad_Vin

- 权重和偏置的梯度

g r a d _ W Q g r a d _ b Q \mathbf{grad\_W}_{Q} \quad \mathbf{grad\_b}_{Q} grad_WQgrad_bQ

g r a d _ W K g r a d _ b K \mathbf{grad\_W}_{K} \quad \mathbf{grad\_b}_{K} grad_WKgrad_bK

g r a d _ W V g r a d _ b V \mathbf{grad\_W}_{V} \quad \mathbf{grad\_b}_{V} grad_WVgrad_bV

g r a d _ W O g r a d _ b O \mathbf{grad\_W}_{O} \quad \mathbf{grad\_b}_{O} grad_WOgrad_bO

MHA反向传播公式如下:

g r a d _ O = g r a d _ O o u t ∗ W O \mathbf{grad\_O} = \mathbf{grad\_O}_{out}*\mathbf{W}_{O} grad_O=grad_Oout∗WO

g r a d _ W O = g r a d _ O o u t T ∗ O \mathbf{grad\_W}_{O} = \mathbf{grad\_O}_{out}^T*\mathbf{O} grad_WO=grad_OoutT∗O

g r a d _ b O = c o l s u m ( g r a d _ O o u t ) \mathbf{grad\_b}_{O} = colsum(\mathbf{grad\_O}_{out}) grad_bO=colsum(grad_Oout)

g r a d _ P = g r a d _ O ∗ V T \mathbf{grad\_P} = \mathbf{grad\_O}*\mathbf{V}^T grad_P=grad_O∗VT

g r a d _ V = P T ∗ g r a d _ O \mathbf{grad\_V} = \mathbf{P}^T*\mathbf{grad\_O} grad_V=PT∗grad_O

g r a d _ P = D r o p o u t B W D ( g r a d _ P ) \mathbf{grad\_P} = DropoutBWD(\mathbf{grad\_P}) grad_P=DropoutBWD(grad_P)

g r a d _ S = S o f t m a x B W D ( P , g r a d _ P ) ∗ 1 d \mathbf{grad\_S} = SoftmaxBWD(\mathbf{P},\mathbf{grad\_P})*\frac{1}{\sqrt{d}} grad_S=SoftmaxBWD(P,grad_P)∗d 1

g r a d _ Q = g r a d _ S ∗ K \mathbf{grad\_Q} = \mathbf{grad\_S}*\mathbf{K} grad_Q=grad_S∗K

g r a d _ K = g r a d _ S T ∗ Q \mathbf{grad\_K} = \mathbf{grad\_S}^T*\mathbf{Q} grad_K=grad_ST∗Q

g r a d _ Q i n = g r a d _ Q ∗ W Q \mathbf{grad\_Q}_{in} = \mathbf{grad\_Q}*\mathbf{W}_{Q} grad_Qin=grad_Q∗WQ

g r a d _ W Q = g r a d _ Q T ∗ Q i n \mathbf{grad\_W}_{Q} = \mathbf{grad\_Q}^T*\mathbf{Q}_{in} grad_WQ=grad_QT∗Qin

g r a d _ b Q = c o l s u m ( g r a d _ Q ) \mathbf{grad\_b}_{Q} = colsum(\mathbf{grad\_Q}) grad_bQ=colsum(grad_Q)

g r a d _ K i n = g r a d _ K ∗ W K \mathbf{grad\_K}_{in} = \mathbf{grad\_K}*\mathbf{W}_{K} grad_Kin=grad_K∗WK

g r a d _ W K = g r a d _ K T ∗ K i n \mathbf{grad\_W}_{K} = \mathbf{grad\_K}^T*\mathbf{K}_{in} grad_WK=grad_KT∗Kin

g r a d _ b K = c o l s u m ( g r a d _ K ) \mathbf{grad\_b}_{K} = colsum(\mathbf{grad\_K}) grad_bK=colsum(grad_K)

g r a d _ V i n = g r a d _ V ∗ W V \mathbf{grad\_V}_{in} = \mathbf{grad\_V}*\mathbf{W}_{V} grad_Vin=grad_V∗WV

g r a d _ W V = g r a d _ V T ∗ V i n \mathbf{grad\_W}_{V} = \mathbf{grad\_V}^T*\mathbf{V}_{in} grad_WV=grad_VT∗Vin

g r a d _ b V = c o l s u m ( g r a d _ V ) \mathbf{grad\_b}_{V} = colsum(\mathbf{grad\_V}) grad_bV=colsum(grad_V)

MHA训练库的组成部分

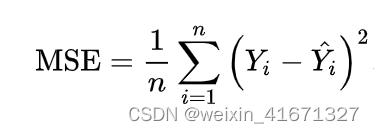

MSE损失函数

损失函数作为深度学习系统的起源,产生了损失量和回传梯度,是深度学习系统的基本组成部分。

eidnnStatus_t eidnnMSELoss(

eidnnHandle_t handle,

const Tensor<float, 3> &output,

const Tensor<float, 3> &target,

Tensor<float, 0> &loss,

Tensor<float, 3> &d_loss);

- 1

- 2

- 3

- 4

- 5

- 6

线性层

cuDNN 没有给线性层操作提供了专门的API

在eigenDNN, 我们有

eidnnStatus_t eidnnLinearForward(eidnnHandle_t handle,

const Tensor<float, 3>& x, // data

const Tensor<float, 2>& w, // weight

const Tensor<float, 1>& bias, // bias

Tensor<float, 3>& y);

eidnnStatus_t eidnnLinearBackward(eidnnHandle_t handle,

const Tensor<float, 3>& dy,

const Tensor<float, 3>& x,

const Tensor<float, 2>& w,

Tensor<float, 3>& dx, // gradient of input data

Tensor<float, 2>& dw, // accumulated gradient of weight

Tensor<float, 1>& dbias // accumulated gradient of bias

);

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

批量矩阵乘法

C = β ∗ C + α ∗ O p c ( M a t M u l ( O p a ( A ) , O p b ( B ) ) ) C = \beta * C + \alpha*Op_c(MatMul(Op_a(A),Op_b(B))) C=β∗C+α∗Opc(MatMul(Opa(A),Opb(B)))

, 其中 O p m ( M ) Op_m(M) Opm(M) 是对 M M M 是否采取转置操作.

cuDNN 没有给批量矩阵乘法操作提供了专门的API

在eigenDNN, 我们有

eidnnStatus_t eidnnStridedBatchedGemmForward(

eidnnHandle_t handle,

float alpha,

float beta,

bool trans_A, // Op_a

bool trans_B, // Op_b

bool trans_C, // Op_c

const Tensor<float, 4> &A,

const Tensor<float, 4> &B,

Tensor<float, 4> &C);

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

eidnnStatus_t eidnnStridedBatchedGemmBackward(

eidnnHandle_t handle,

float alpha,

float beta,

bool trans_A, // Op_a

bool trans_B, // Op_b

bool trans_C, // Op_c

const Tensor<float, 4> &A, // A

const Tensor<float, 4> &B, // B

const Tensor<float, 4> &d_C, // gradient of C

Tensor<float, 4> &d_A, // gradient of A

Tensor<float, 4> &d_B // gradient of B

);

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

Softmax

cuDNN 给softmax 操作提供了如下 API.

在eigenDNN, 我们有

eidnnStatus_t eidnnSoftmaxForward(eidnnHandle_t handle,

eidnnSoftmaxAlgorithm_t algo,

eidnnSoftmaxMode_t mode,

const Tensor<float, 4>& x,

Tensor<float, 4>& y);

- 1

- 2

- 3

- 4

- 5

eidnnStatus_t eidnnSoftmaxBackward(eidnnHandle_t handle,

eidnnSoftmaxAlgorithm_t algo,

eidnnSoftmaxMode_t mode,

const Tensor<float, 4>& y,

const Tensor<float, 4>& dy,

Tensor<float, 4>& dx);

- 1

- 2

- 3

- 4

- 5

- 6

Dropout

cuDNN 给dropout 操作提供了如下 API.

- cudnnCreateDropoutDescriptor()

- cudnnDestroyDropoutDescriptor()

- cudnnDropoutGetStatesSize()

- cudnnDropoutGetReserveSpaceSize()

- cudnnDropoutForward()

- cudnnGetDropoutDescriptor()

- cudnnRestoreDropoutDescriptor()

- cudnnSetDropoutDescriptor()

- cudnnDropoutBackward()

在eigenDNN, 我们有

// dropout rate,

// pointer to memory space of states (allocated by forward pass),

// size of memory space in bytes (calculated by forward pass),

// random seed

using eidnnDropoutDescriptor_t = std::tuple<float, void*, size_t, unsigned long long>;

- 1

- 2

- 3

- 4

- 5

eidnnStatus_t eidnnDropoutForward(

eidnnHandle_t handle,

eidnnDropoutDescriptor_t &dropoutDesc,

const Tensor<float, 4> &x, // input data

Tensor<float, 4> &y // input data after dropout

);

- 1

- 2

- 3

- 4

- 5

- 6

eidnnStatus_t eidnnDropoutBackward(

eidnnHandle_t handle,

const eidnnDropoutDescriptor_t dropoutDesc,

const Tensor<float, 4> &dy, // gradient of dropout output data

Tensor<float, 4> &dx // gradient of dropout input data

);

- 1

- 2

- 3

- 4

- 5

- 6

Please star this project [https://github.com/jundaf2/eigenMHA] if you find it useful~