
Using Elasticsearch for LLMs with a local knowledge base: BM25, embedding models, and the new Learned Sparse Encoder feature


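This article argues that combining BM25 with approximate kNN search over vectors produced by an embedding model lets a search engine understand both the literal meaning of a user's query and its deeper semantics, and therefore return more complete and more precise results. This hybrid approach is increasingly common in modern search engines because it combines the precision of traditional search with the semantic understanding of AI-based search. Elastic 8.8 then introduced the Learned Sparse Encoder, because dense vector search usually requires in-domain retraining; without it, dense models may even underperform traditional lexical scoring such as Elastic's BM25.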

How to get the best of lexical and AI-powered search with Elastic’s vector database

Author

July 3, 2023

Maybe you came across the term “vector database” and are wondering whether it’s the new kid on the block of data retrieval systems. Maybe you are confused by conflicting claims about vector databases. The truth is, the approach used by vector databases has been around for a few years. If you’re looking for the best retrieval performance, hybrid approaches that combine keyword-based search (sometimes referred to as lexical search) with vector-based approaches represent the state of the art.

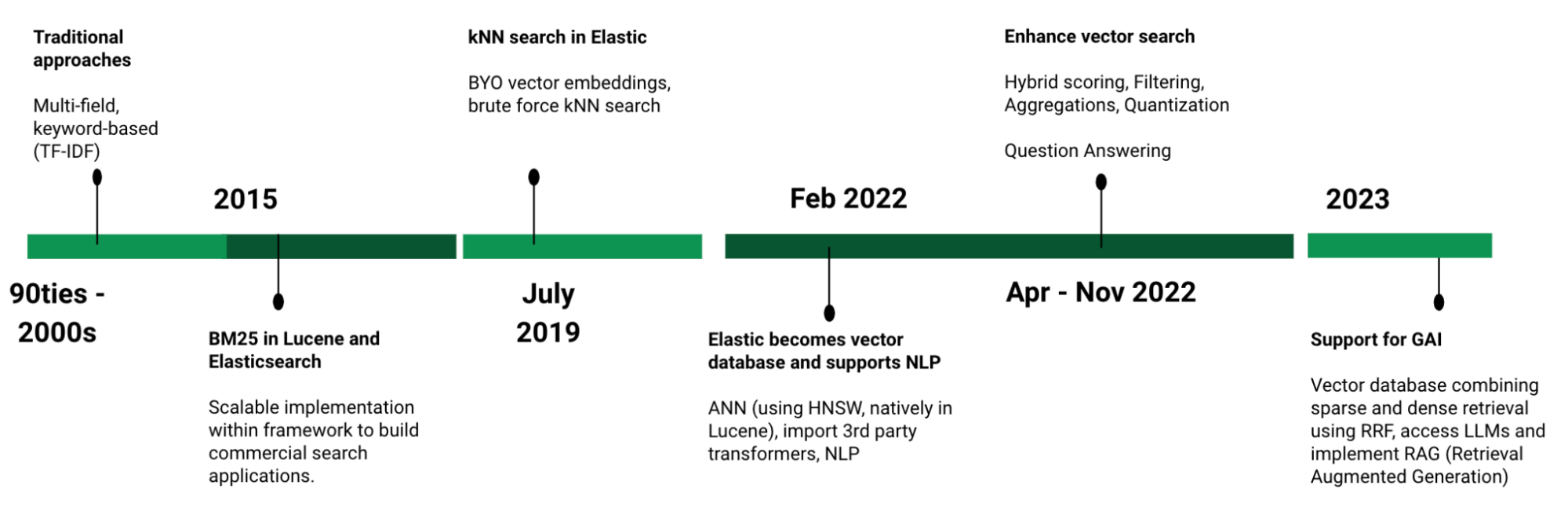

In Elasticsearch®, you can get the best of both worlds: lexical and vector search. Elastic® made lexical columnar retrieval popular, implemented in Lucene, and has been perfecting that approach for more than 10 years. In addition, for several years Elastic has been investing in vector database capabilities, such as a native implementation of approximate nearest neighbor search using hierarchical navigable small world (HNSW) graphs (available in 8.0 and later releases).

Figure 1: Timeline of search innovations delivered by Elastic, including building up vector search capabilities since 2019

Elastic is positioned to be a leader in the rapidly evolving vector database market:

- Fully performant and scalable vector database functionality, including storing embeddings and efficiently searching for nearest neighbors
- A proprietary sparse retrieval model that implements semantic search out of the box
- Industry-leading relevance of all types: keyword, semantic, and vector
- The ability to apply generative AI and enrich large language models (LLMs) with proprietary, business-specific data as context
- All capabilities in a single platform: execute vector search, embed unstructured data into vector representations using off-the-shelf and custom models, and implement search applications in production, complete with solutions for observability and security

In this blog, you’ll learn about the concepts behind vector databases, how they work, which use cases they apply to, and how you can achieve superior search relevance with vector search.

The basics of vector databases

Why is there so much attention on vector databases?

“Vector database” is a term for a system capable of executing vector search. So to understand vector databases, let’s start with vector search and why it has garnered so much attention lately.

Vector search plays an important role in recent discussions about how AI is transforming literally everything, from business workflows to education. Why does vector search matter so much here? First, vector search enables fast and accurate semantic search of unstructured data without extensive curation of metadata, keywords, and synonyms. Second, vector search is part of the recent excitement around generative AI because it can provide accurate context from proprietary sources outside what LLMs “know” (i.e., have seen during their training).

What are vector databases used for?

Most standard databases let you retrieve related information by matching on structured fields, including keywords in descriptions and values in numeric fields. By contrast, a vector database captures the meaning of unstructured text and finds “what you mean” instead of matching text, an approach also known as semantic search.

Additionally, vector databases allow you to:

-

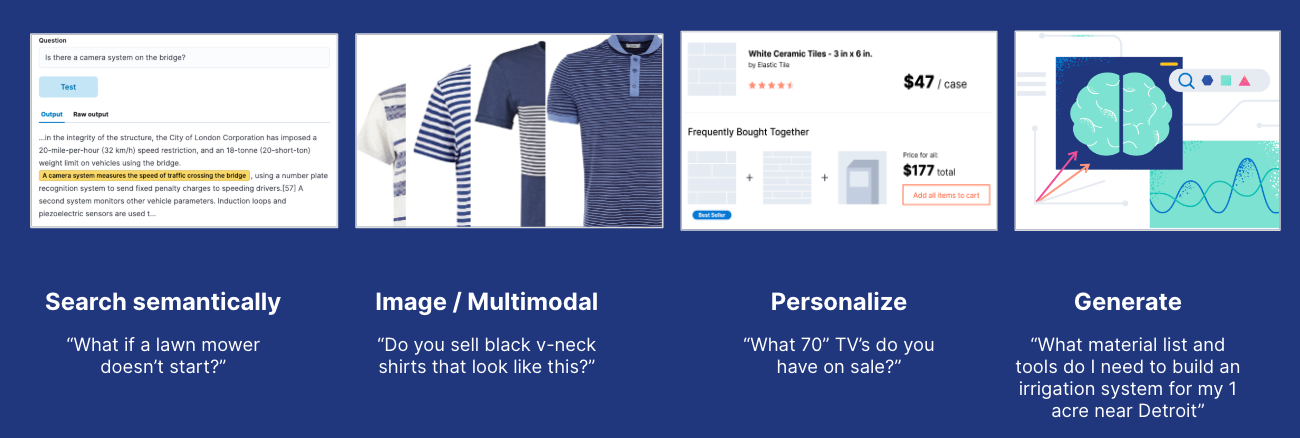

Search unstructured data other than text, including images or audio. Searches that involve more than one type of data have been referred to as “multimodal search” — like searching for images using a textual description. 搜索非结构化数据,包括图像或音频。涉及多种类型数据的搜索被称为“多模式搜索”——比如使用文本描述搜索图像。

-

Personalize user experiences by modeling user characteristics or behaviors in a statistical (vector) model and matching others against that. 通过在统计(向量)模型中对用户特征或行为进行建模,并将其他用户与之匹配,个性化用户体验。

-

Create “generative” experiences, where the system doesn’t just return a list of documents related to a query the user issued, but engages the user in conversations, explains multi-step processes, and generates an interaction that goes well beyond perusing related information. 创建“生成式”体验,系统不仅仅返回与用户查询相关的文档列表,而是与用户进行对话,解释多步骤过程,并生成远远超出浏览相关信息的互动。

What is a vector database, and how does it work?

A vector database consists of two primary components:

- Indexing and storing embeddings, the term generally used for the multi-dimensional numeric representations of unstructured data. Embeddings are generated by deep neural networks trained to classify that type of unstructured data; they capture its meaning, context, and associations in a “dense” vector, usually hundreds to thousands of dimensions deep. This is the secret sauce of vector search.
- A search algorithm that efficiently finds nearest neighbors in the high-dimensional “embedding space,” where vector proximity means similarity in meaning. There are different ways to search these indices, collectively known as approximate nearest neighbor (ANN) search; HNSW is one of the algorithms most commonly used by vector database providers.
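To make “nearest neighbors in embedding space” concrete, here is a minimal exact (brute-force) kNN sketch using cosine similarity. The toy three-dimensional vectors and document IDs are made up for illustration; an ANN algorithm such as HNSW approximates this same ranking without comparing the query against every stored vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exact_knn(query: list[float], vectors: dict[str, list[float]], k: int) -> list[tuple[str, float]]:
    """Brute-force kNN: score every stored vector and keep the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings"; real models output hundreds to thousands of dimensions.
embeddings = {
    "doc-cat": [0.9, 0.1, 0.0],
    "doc-kitten": [0.8, 0.2, 0.1],
    "doc-car": [0.0, 0.1, 0.9],
}
print(exact_knn([1.0, 0.1, 0.0], embeddings, k=2))  # doc-cat first, then doc-kitten
```

The cost of this exact search grows linearly with the number of stored vectors, which is why production systems trade a little accuracy for speed with ANN indices.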

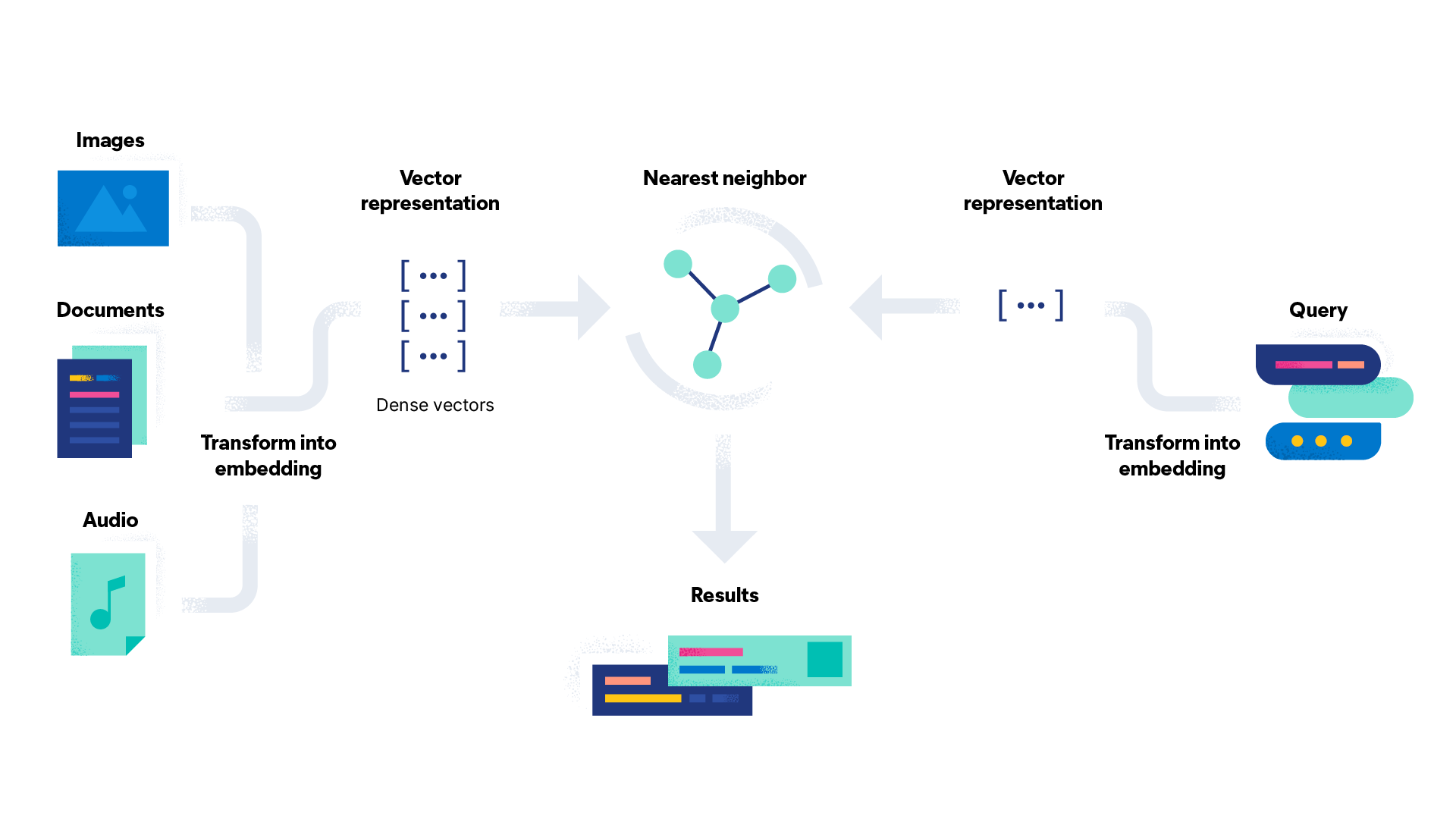

Figure 2: Key components for executing vector search

Some vector databases only provide the capability to store and search embeddings, depicted as A in Figure 2 above. However, this approach leaves developers with the challenge of how to generate those embeddings. Typically, that requires access to an embedding model (shown as C) and an API to apply it to your data and queries (B). You may also be able to store only very limited metadata along with the embeddings, which makes it harder to provide comprehensive information in your user application.

Further, dedicated vector databases leave you to figure out how to integrate the search capability into your application, as alluded to on the right side of Figure 2. Managing the components of your software architecture to solve those challenges involves evaluating many solutions from different vendors, with varying levels of quality and support.

Elastic as a vector database

Elastic provides all the capabilities you should expect from a vector database, and more!

In contrast to dedicated vector databases, Elastic supports, in a single platform, three capabilities that are critical for implementing applications powered by vector search: storing embeddings (A), efficiently searching for nearest neighbors (B), and embedding text into vector representations (C).

This approach eliminates the inefficiencies and complexities of accessing these capabilities through separate APIs, as other vector databases require. Elastic implements approximate nearest neighbor search using HNSW natively in Lucene and lets you apply filtering (as pre-filtering, for accurate results) using an algorithm that switches between brute force and approximate nearest neighbor search as appropriate (i.e., it falls back to brute force when the pre-filter removes a large portion of the candidate list).
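The switching behavior described above can be sketched as follows. This is a minimal illustration of the idea, not Lucene’s code: the function names, the `approximate_search` callable standing in for an HNSW index, and the fixed `exact_threshold` cutoff are all hypothetical, and Lucene’s actual rule is more adaptive than a fixed threshold.

```python
from typing import Callable

Vector = list[float]
Hits = list[tuple[str, float]]


def dot(a: Vector, b: Vector) -> float:
    """Similarity used for ranking (dot product, as for normalized embeddings)."""
    return sum(x * y for x, y in zip(a, b))


def filtered_knn(
    query: Vector,
    vectors: dict[str, Vector],
    allowed: set[str],
    k: int,
    approximate_search: Callable[[Vector, set[str], int], Hits],
    exact_threshold: int = 1000,  # hypothetical cutoff, for illustration only
) -> Hits:
    """Pre-filtered kNN that switches strategy based on filter selectivity.

    `allowed` holds the IDs that pass the filter, computed *before* the vector
    search, so every returned hit satisfies the filter.
    """
    if len(allowed) <= exact_threshold:
        # The filter removed most candidates: scoring the survivors exactly is
        # cheap and exact, whereas a graph walk would waste time visiting
        # filtered-out nodes.
        hits = [(doc_id, dot(query, vectors[doc_id])) for doc_id in allowed]
        hits.sort(key=lambda hit: hit[1], reverse=True)
        return hits[:k]
    # Many candidates remain: delegate to an ANN index (e.g. HNSW) that only
    # accepts documents in `allowed` as results.
    return approximate_search(query, allowed, k)
```

The trade-off is the one the paragraph names: brute force is exact but scales with the number of surviving documents, so it only pays off when the filter is selective.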

Use our market-leading Learned Sparse Encoder model or bring your own embedding model. Learn more about loading transformers created in PyTorch into Elastic in this blog.

Figure 3: Elastic combines all the components of a vector database in a single platform

How to get optimal retrieval performance with vector search

Challenges with implementing vector search

To get to the heart of implementing superior semantic search, let’s first understand the challenges with (dense) vector search:

- Picking the right embedding model: Standard embedding models, like the ones available off the shelf on public repositories, deteriorate out of domain, as shown, for example, in Table 2 of our earlier blog on benchmarking vector search. If you’re lucky, a pre-trained model works well enough for your use case, but generally you have to adapt it with domain data, which requires annotated data and expertise in training deep neural networks. You can find a blueprint for the model adaptation process in this blog.

Figure 4: Pre-trained embedding models deteriorate out-of-domain, compared to BM25

- Implementing efficient filtering: In search and recommender systems, returning a list of relevant documents is typically not the end of the job; users want to apply filters. Filtering on metadata with vector search is challenging. If you filter after running the vector search (known as “post-filtering”), you risk being left with too few (or no) results that match the filter conditions. If instead you filter first, the nearest neighbor search is less efficient, because it runs on a small subset of the data while the data structure used during vector search (like the HNSW graph) was built for the whole data set. For many use cases, restricting the search scope to relevant vectors is an absolute necessity for providing a better customer experience.
- Performing hybrid search: For best performance, you typically have to combine vector search with traditional lexical approaches.
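The post-filtering pitfall from the filtering challenge can be reproduced with a tiny example. Everything here is hypothetical toy data, and an exact top-k search stands in for any kNN search: searching first and filtering afterwards returns nothing, while restricting the candidates first returns every matching document.

```python
def top_k(query: list[float], vectors: dict[str, list[float]], k: int) -> list[str]:
    """Exact top-k by dot product (a stand-in for any kNN search)."""
    def score(doc_id: str) -> float:
        return sum(q * v for q, v in zip(query, vectors[doc_id]))
    return sorted(vectors, key=score, reverse=True)[:k]


# Hypothetical 2-D vectors; the filter attribute is encoded in the ID prefix.
vectors = {
    "red-1": [0.9, 0.1], "red-2": [0.85, 0.15], "red-3": [0.8, 0.2],
    "blue-1": [0.3, 0.7], "blue-2": [0.2, 0.8],
}
color = {doc_id: doc_id.split("-")[0] for doc_id in vectors}
query = [1.0, 0.0]

# Post-filtering: search first, then drop hits that fail the filter.
post = [doc_id for doc_id in top_k(query, vectors, k=3) if color[doc_id] == "blue"]

# Pre-filtering: restrict the candidate set first, then search within it.
blue_only = {doc_id: v for doc_id, v in vectors.items() if color[doc_id] == "blue"}
pre = top_k(query, blue_only, k=3)

print(post)  # []
print(pre)   # ['blue-1', 'blue-2']
```

The top 3 neighbors of the query are all red, so post-filtering on “blue” leaves an empty result even though two blue documents exist.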

Dense versus sparse vector retrieval

There are two big families of retrieval approaches, often referred to as “dense” and “sparse.” Both use a vector representation of text that encodes meaning and associations, and both search for close matches as a second step, as indicated in Figure 5 below. All vector-based retrieval approaches have that in common.

Above we described what is more specifically known as “dense” vector search, where unstructured data is transformed into a numeric representation using an embedding model, and matches are found as the nearest neighbors of a query in embedding space. To deliver highly relevant results, dense vector search generally requires in-domain retraining. Without it, dense models may underperform even traditional lexical scoring, such as Elastic’s BM25. The upside, and the reason vector search has garnered so much attention, is that when fine-tuned it can outperform all other approaches, and it allows you to search unstructured data other than text, like images or audio, which has become known as “multimodal search.” The vector is considered “dense” because most of its values are non-zero.

By contrast to the “dense” vectors described above, “sparse” representations contain very few non-zero values. For example, the lexical search (BM25) that made Elasticsearch popular is a sparse retrieval method. It uses a bag-of-words representation of text and achieves high relevance by adjusting the basic relevance scoring method known as TF-IDF (term frequency, inverse document frequency) for factors like document length.

Figure 5: Understanding the commonalities and differences between dense and sparse “vector” search
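To show how BM25 refines TF-IDF, here is a sketch of the textbook Okapi BM25 formula. It uses k1 = 1.2 and b = 0.75, the Elasticsearch defaults, but whitespace tokenization is a simplification and Lucene’s implementation differs in minor details, so these numbers will not match Elasticsearch scores exactly.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Score each document against the query with textbook Okapi BM25."""
    tokenized = [doc.lower().split() for doc in docs]  # naive whitespace tokenizer
    n_docs = len(tokenized)
    avgdl = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # IDF: rare terms weigh more than common ones.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturates (k1) and is normalized by document length (b).
            length_norm = k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores


docs = ["the quick brown fox", "the lazy dog", "quick quick fox jumps over the lazy dog"]
print(bm25_scores("quick fox", docs))
```

The short first document outranks the third even though the third repeats “quick”: term-frequency saturation and length normalization are exactly the adjustments BM25 adds on top of raw TF-IDF.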

Learned sparse retrievers: Highly performant semantic search out of the box

The latest sparse retrieval approaches use learned sparse representations that offer multiple advantages over other approaches:

- High relevance without any in-domain retraining: They can be used out of the box, without adapting the model to the specific domain of the documents.
- Interpretability: You can follow which terms are matched, and the score a sparse encoder attaches to each term indicates how relevant that term is to a query, which makes results very interpretable. Dense vector search, by contrast, relies on numeric representations of meaning derived by applying an embedding model, which is a “black box” like many machine learning approaches.
- Fast: Sparse vectors fit right into the inverted indices that have made established sparse retrievers like Lucene and Elasticsearch so fast. However, sparse retrievers only apply to text data, not to images or other types of unstructured data.
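The “fast” and “interpretable” points can be illustrated together: sparse vectors are stored as postings in an inverted index, a query only touches the postings of its own tokens, and every score decomposes into per-token contributions. The documents, tokens, and weights below are hypothetical, not real encoder output.

```python
from collections import defaultdict

# Hypothetical learned-sparse output: each document keeps a few (token, weight)
# pairs, including expansion terms that never appear in its text.
doc_vectors = {
    "doc1": {"weather": 1.4, "jamaica": 1.9, "climate": 0.8, "caribbean": 0.6},
    "doc2": {"weather": 1.1, "london": 1.7, "rain": 0.9},
}

# Inverted index: token -> list of (doc_id, weight) postings.
index: dict[str, list[tuple[str, float]]] = defaultdict(list)
for doc_id, vec in doc_vectors.items():
    for token, weight in vec.items():
        index[token].append((doc_id, weight))


def search(query_vec: dict[str, float]):
    """Sparse dot product computed only over postings of the query's tokens.

    Also returns each document's per-token contributions, so every score can
    be explained term by term.
    """
    scores: dict[str, float] = defaultdict(float)
    explanations: dict[str, dict[str, float]] = defaultdict(dict)
    for token, q_weight in query_vec.items():
        for doc_id, d_weight in index.get(token, []):
            contribution = q_weight * d_weight
            scores[doc_id] += contribution
            explanations[doc_id][token] = contribution
    ranked = sorted(scores.items(), key=lambda pair: pair[1], reverse=True)
    return ranked, dict(explanations)


ranked, why = search({"weather": 1.0, "caribbean": 0.5, "climate": 0.5})
print(ranked)       # doc1 first
print(why["doc1"])  # which tokens produced doc1's score
```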

Key tradeoffs between sparse and dense vector-based retrieval

| Sparse retrieval | Dense vector-based retrieval |
|---|---|
| Good relevance without tuning (learned sparse) | Adapted to domain; can beat other approaches |
| Interpretable | Not interpretable |
| Fast | Multimodal |

Elastic 8.8 introduced our own learned sparse retriever, included with the Elasticsearch Relevance Engine™ (ESRE™), which expands any text with related, relevant words. Here’s how it works: a structure is created to represent the terms found in the document, as well as their synonyms. In a process called term expansion, the model adds terms based on their relevance to the document, drawn from a static vocabulary of 30K fixed tokens, words, and sub-word units.

This is similar to vector embedding in that an auxiliary data structure is created and stored in each document, which can then be used for just-in-time semantic matching within a query. Each term also has an associated score, which captures its contextual importance within the document and therefore, unlike embeddings, is interpretable.
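At query time, the stored term/score structure is matched against the expanded query. As far as we understand the 8.8 API, the expanded terms live in a `rank_features` field and are queried with the `text_expansion` query, shown below as a request body built in Python. The field name `ml.tokens` and the sample question are hypothetical; `.elser_model_1` is the ELSER model ID used in the 8.8 documentation. Later releases changed both the field type and the query, so check the documentation for your version.

```python
import json

# Assumptions: documents were ingested through an inference pipeline that ran
# ELSER and wrote its token/weight pairs into a rank_features field named
# "ml.tokens" (hypothetical name); ".elser_model_1" is the 8.8-era model ID.
query_body = {
    "query": {
        "text_expansion": {
            "ml.tokens": {
                "model_id": ".elser_model_1",
                "model_text": "How is the weather in Jamaica?",
            }
        }
    }
}

# Body to send with a search request, e.g. GET <your-index>/_search
print(json.dumps(query_body, indent=2))
```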

Our pre-trained sparse encoder lets you implement semantic search out of the box and also addresses the other challenges with vector-based retrieval described above:

- You don’t need to worry about picking an embedding model: Elastic’s Learned Sparse Encoder model comes pre-loaded into Elastic, and you can activate it with a single click.
- There are multiple ways to implement hybrid search, including reciprocal rank fusion (RRF) and linear combination.
- Keep memory and storage in check by using quantized (byte-size) vectors and leveraging all the recent innovations in Elasticsearch that reduce data storage requirements.
- Get all that in a hardened platform that can handle petabyte scale.
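The two hybrid-search options named above can be sketched in a few lines. Reciprocal rank fusion scores each document as the sum of 1 / (rank_constant + rank) over the result lists it appears in; 60 is the default `rank_constant` in Elasticsearch’s RRF. The linear combination shown is a plain weighted sum and assumes both score sets are already normalized to comparable ranges. The result lists and scores are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists: list[list[str]], rank_constant: int = 60) -> list[str]:
    """Fuse ranked lists of doc IDs by summing 1 / (rank_constant + rank)."""
    fused: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            fused[doc_id] += 1.0 / (rank_constant + rank)
    return sorted(fused, key=fused.get, reverse=True)


def linear_combination(scores_a: dict[str, float], scores_b: dict[str, float],
                       weight_a: float = 0.5) -> list[str]:
    """Weighted sum of two score maps (assumes both are already normalized)."""
    docs = set(scores_a) | set(scores_b)
    combined = {d: weight_a * scores_a.get(d, 0.0) + (1 - weight_a) * scores_b.get(d, 0.0)
                for d in docs}
    return sorted(combined, key=combined.get, reverse=True)


bm25_hits = ["d1", "d2", "d3"]    # hypothetical lexical ranking
sparse_hits = ["d3", "d1", "d4"]  # hypothetical learned-sparse ranking
print(reciprocal_rank_fusion([bm25_hits, sparse_hits]))  # ['d1', 'd3', 'd2', 'd4']
```

RRF only needs ranks, so it sidesteps the problem that BM25 scores and vector similarities live on different scales; a linear combination can be tuned more finely but needs that normalization first.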

You can learn about the model’s architecture, how we trained it, and how it outperforms alternative approaches in this blog describing the Elastic Learned Sparse Encoder.

Why choose Elastic as your vector database?

Elastic’s vector database is a strong offering in the fast-developing vector search market. It provides:

- Semantic search out of the box
- Best-in-class retrieval performance: Hybrid search with Elastic’s Learned Sparse Encoder combined with BM25 outperforms SPLADE, ColBERT, and high-end embedding models offered by OpenAI.

Figure 6: Elastic’s sparse retrieval model outperforms other popular vector databases, evaluated using NDCG@10 over a subset of the public BEIR benchmark

- The flexibility of using our market-leading learned sparse encoder model, picking any off-the-shelf model, or bringing your own optimized model, so you can keep up with innovations in this rapidly evolving space
- Efficient pre-filtering on HNSW using a practical algorithm that appropriately trades off speed against loss in relevance
- Capabilities needed in most search applications that dedicated vector databases do not provide, like aggregation, filtering, faceted search, and auto-complete
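Figure 6 reports NDCG@10. As a reference for reading that metric, here is a sketch of the linear-gain variant: the DCG of the system’s top 10 results, each relevance grade discounted by log2(rank + 1), divided by the DCG of the ideal ordering. Some definitions use a gain of 2^rel - 1 instead, and the relevance judgments below are hypothetical.

```python
import math


def dcg(relevances: list[float]) -> float:
    """Discounted cumulative gain: grade at rank r is divided by log2(r + 1)."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances, start=1))


def ndcg_at_k(ranked_relevances: list[float], all_relevances: list[float], k: int = 10) -> float:
    """NDCG@k = DCG of the system's top k / DCG of the best possible top k.

    ranked_relevances: grades of the returned documents, in ranked order.
    all_relevances: grades of every judged document for the query.
    """
    ideal_dcg = dcg(sorted(all_relevances, reverse=True)[:k])
    if ideal_dcg == 0:
        return 0.0
    return dcg(ranked_relevances[:k]) / ideal_dcg


# Hypothetical query with two relevant documents, ranked 2nd and 3rd by the system.
print(ndcg_at_k([0, 1, 1], [1, 1]))
```

A score of 1.0 means the top 10 is ordered as well as the judgments allow; a benchmark figure like the one above averages this value over all queries in each dataset.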