The ultimate fix for torchtext installation errors, and N4: calling and building a word2vec network for training

The ultimate torchtext installation guide

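Method 1: Create a fresh environment and install the specified versions directly. A fairly recent set of versions is shown below; it is best to match that environment exactly. (Do not try this in your everyday environment. If the install goes wrong, pip will uninstall your GPU build of PyTorch, and the environment becomes unusable for most of your work!)

Method 2: You are stubborn and insist on installing into your own environment. In that case, follow the steps below with extreme care.

(If you are on a CPU-only environment, none of this matters: just install the latest release with pip install torchtext.)

(Ideally, try the steps in a new environment first and repeat them in your own environment once they work. Skipping the trial environment is fine too, but follow the steps exactly.)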

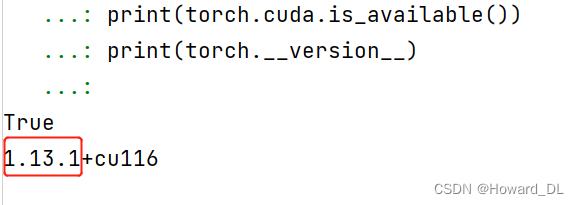

Step 1: Check your PyTorch version

- import torch

- print(torch.cuda.is_available())

- print(torch.__version__)

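Step 2: Work out the torchtext version number

My PyTorch version is 1.13.1. Remember the rule: write the PyTorch version as 1.a.b (for me a=13, b=1), and the torchtext version to install is 0.(a+1).b, so I installed 0.14.1. The rule is stated for PyTorch 1.x; for any other version, check the compatibility table in the torchtext README.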
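The rule above can be written as a small helper. This is a minimal sketch: the function name `torchtext_version_for` is made up for illustration, and it only encodes the 0.(a+1).b rule described here for PyTorch 1.x, so it is not an official compatibility lookup.

```python
def torchtext_version_for(torch_version: str) -> str:
    """Apply the 1.a.b -> 0.(a+1).b rule from this article (PyTorch 1.x only)."""
    # Drop a local build tag such as "+cu117" before parsing.
    base = torch_version.split("+")[0]
    major, minor, patch = (int(part) for part in base.split(".")[:3])
    if major != 1:
        raise ValueError("the 0.(a+1).b rule is only described for PyTorch 1.x")
    return f"0.{minor + 1}.{patch}"


print(torchtext_version_for("1.13.1"))        # 0.14.1
print(torchtext_version_for("1.13.1+cu117"))  # 0.14.1
```

In practice you would pass in `torch.__version__` from Step 1 and use the returned string in the pip command.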

Step 3: Install

pip install torchtext==0.14.1 (that is, 0.(a+1).b). It should now work. If something still goes wrong, look up the matching torchdata version and install that as well.

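N4: Calling and building a word2vec network for training

Approach:

1. Imports

2. Dataset processing

3. Building the dataset and dataloader

4. Building the model

5. Training

6. Evaluation

1. Imports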

- import torch

- import torch.nn as nn

- import warnings

- import pandas as pd

- import torchvision

- from torchvision import transforms,datasets

- warnings.filterwarnings('ignore')

-

- device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')

- print(device)

2. Dataset processing

- train_df=pd.read_csv('train.csv',sep='\t',header=None)

- print(train_df.head())

-

- x=train_df[0].values[:]

- y=train_df[1].values[:]

- #%%

- from gensim.models.word2vec import Word2Vec

- import numpy as np

-

- # Train the shallow word2vec model

- w2v=Word2Vec(vector_size=100,min_count=3)

- # vector_size is the dimension of the feature vectors (default 100)

- # tokens that occur fewer than min_count times are dropped

- w2v.build_vocab(x)

- w2v.train(x,total_examples=w2v.corpus_count,

- epochs=20)

-

-

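- # Function that turns a text into a vector by summing its character vectors

- def total_vec(text):

-     vec=np.zeros(100).reshape((1,100))

-     for word in text:

-         try:

-             vec+=w2v.wv[word].reshape((1,100))

-         except KeyError:  # character is not in the vocabulary

-             continue

-     return vec

-

- # Save the sentence vectors as an ndarray

- #x_vec=np.concatenate([total_vec(z) for z in x])

- # The line above is only for saving and inspection; it is not used in training

-

- # Save the word2vec model and its word vectors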

- w2v.save('w2v_model.pkl')

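For the dataset, see the earlier article N2.

w2v=Word2Vec(vector_size=100,min_count=3) instantiates the network.

w2v.build_vocab(x) builds the vocabulary from the dataset.

Note that the vocabulary word2vec builds here consists of single characters, because the text is passed in as raw strings without word segmentation. For the word '双鸭山', for example, there are embeddings only for 双, 鸭 and 山; there are none for 双鸭, 鸭山 or 双鸭山.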
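Why the vocabulary ends up character-level can be seen without gensim at all. Word2Vec expects each sentence to be an iterable of tokens, and iterating over a Python string yields one character at a time. The sketch below uses `collections.Counter` as a stand-in for the vocabulary; the two sample sentences are made up for illustration.

```python
from collections import Counter

sentences = ["还有双鸭山到淮阴的汽车票吗", "双鸭山到淮阴"]  # hypothetical sample rows

# Iterating over a str yields single characters, so every character becomes a "word".
char_counts = Counter(ch for sentence in sentences for ch in sentence)

print("双" in char_counts)      # True
print("双鸭山" in char_counts)  # False
```

To get word-level embeddings, each sentence would first have to be segmented into a list of words before being passed to Word2Vec.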

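w2v.train() runs the training.

Be sure to use w2v.wv[word].reshape((1,100)). With 2-D rows of shape (1,100), np.concatenate stacks the sentence vectors along the default axis 0 into an (N,100) array; with 1-D vectors of shape (100,) it would instead join them end to end into one long vector. reshape turns the 1-D vector of shape (100,) into a 2-D array of shape (1,100): the former looks like [1,2,3,..,100], the latter like [[1,2,3,..,100]].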
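The effect of the reshape on np.concatenate can be checked with dummy data. This is a small sketch with vectors of ones standing in for real word2vec output:

```python
import numpy as np

flat = [np.ones(100), np.ones(100)]          # two 1-D vectors, shape (100,)
rows = [v.reshape((1, 100)) for v in flat]   # two 2-D rows, shape (1, 100)

stacked = np.concatenate(rows)  # default axis=0 stacks the rows -> (2, 100)
joined = np.concatenate(flat)   # 1-D inputs are joined end to end -> (200,)

print(stacked.shape, joined.shape)
```

The (N, 100) layout is what the classifier needs: one row per sentence, one column per feature.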

3. Building the dataset and dataloader

- label_name=sorted(set(train_df[1].values[:]))  # sorted so that label indices are the same on every run

- print(label_name)

-

- text_pipeline=lambda x:total_vec(x)

- label_pipeline=lambda x:label_name.index(x)

-

- from torch.utils.data import DataLoader

-

- def collate_batch(batch):

-     label_list,text_list=[],[]

-

-     for (textt,labell) in batch:

-         label_list.append(label_pipeline(labell))

-         processed_text=torch.tensor(text_pipeline(textt),dtype=torch.float32)

-         text_list.append(processed_text)

-     label_list=torch.tensor(label_list,dtype=torch.int64)

-     text_list=torch.cat(text_list)

-

-     return text_list.to(device),label_list.to(device)

-

- def custom_data_iter(texts,labels):

-     for x,y in zip(texts,labels):

-         yield x,y

-

-

- from torch.utils.data.dataset import random_split

- from torchtext.data.functional import to_map_style_dataset

-

- train_iter = custom_data_iter(x,y)

- train_dataset = to_map_style_dataset(train_iter)

-

- n_train = int(len(train_dataset)*0.8)

- split_train_, split_valid_ = random_split(train_dataset,

-                                           [n_train, len(train_dataset)-n_train])  # the two lengths must sum to len(train_dataset)

-

- batch_size = 64  # defined here because the DataLoaders need it before section 5

- train_dataloader = DataLoader(split_train_, batch_size=batch_size,

-                               shuffle=True, collate_fn=collate_batch)

-

- valid_dataloader = DataLoader(split_valid_, batch_size=batch_size,

- shuffle=True, collate_fn=collate_batch)
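One detail of the 80/20 split is worth checking by hand. When random_split is given integer lengths, they must add up to the dataset size, and truncating both parts with int() can come up one sample short. The helper name `split_lengths` below is made up for illustration:

```python
def split_lengths(n_samples: int, train_fraction: float = 0.8):
    """Return (n_train, n_valid) that always sum to n_samples."""
    n_train = int(n_samples * train_fraction)
    return n_train, n_samples - n_train


n = 11
naive = (int(n * 0.8), int(n * 0.2))  # both parts truncated
safe = split_lengths(n)               # remainder goes to the validation set

print(naive, sum(naive))  # (8, 2) 10
print(safe, sum(safe))    # (8, 3) 11
```

Giving the remainder to the validation set keeps the training share at 80% rounded down and guarantees that no sample is lost.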

4. Building the model

- class TextClassificationModel(nn.Module):

-

-     def __init__(self, num_class):

-         super().__init__()

-         self.fc = nn.Linear(100, num_class)

-

-     def forward(self, text):

-         return self.fc(text)

It is just a single fully connected (linear) layer, the simplest possible MLP.
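What this layer computes can be sketched in NumPy: nn.Linear(100, num_class) maps each 100-dimensional sentence vector to num_class scores via x @ W.T + b, and the predicted class is the index of the largest score. The class count and the random weights below are placeholders, not values from the trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
num_class = 12                      # hypothetical number of labels
batch = rng.normal(size=(64, 100))  # 64 sentence vectors of size 100

weight = rng.normal(size=(num_class, 100))  # same layout as nn.Linear.weight
bias = np.zeros(num_class)

logits = batch @ weight.T + bias   # (64, 100) @ (100, 12) -> (64, 12)
predicted = logits.argmax(axis=1)  # one class index per sentence

print(logits.shape, predicted.shape)
```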

5. Training

- #%% Training and evaluation functions

- import time

-

- Epochs = 20

- Learning_rate = 5

- batch_size = 64

- model = TextClassificationModel(len(label_name)).to(device)

- criterion = torch.nn.CrossEntropyLoss()

- optimizer = torch.optim.SGD(model.parameters(), lr=Learning_rate)

- scheduler = torch.optim.lr_scheduler.StepLR(optimizer, 1.0, gamma=0.1)

- total_accu = None

- num_class = len(label_name)

- vocab_size = 100000

- em_size = 12

-

- def train(dataloader):

-     model.train() # switch to training mode

-     total_acc, train_loss, total_count = 0, 0, 0

-     log_interval = 50

-     start_time = time.time()

-

-     for idx, (text,label) in enumerate(dataloader):

-         predicted_label = model(text)

-

-         optimizer.zero_grad() # reset the gradients to zero

-         loss = criterion(predicted_label, label) # loss between the network output and the true labels

-         loss.backward() # backpropagation

-         torch.nn.utils.clip_grad_norm_(model.parameters(), 0.1) # gradient clipping

-         optimizer.step() # update the parameters

-

-         # accumulate accuracy and loss

-         total_acc += (predicted_label.argmax(1) == label).sum().item()

-         train_loss += loss.item()

-         total_count += label.size(0)

-

-

- def evaluate(dataloader):

-     model.eval() # switch to evaluation mode

-     total_acc, train_loss, total_count = 0, 0, 0

-

-     with torch.no_grad():

-         for idx, (text,label) in enumerate(dataloader):

-             predicted_label = model(text)

-

-             loss = criterion(predicted_label, label) # compute the loss

-             # accumulate the evaluation statistics

-             total_acc += (predicted_label.argmax(1) == label).sum().item()

-             train_loss += loss.item()

-             total_count += label.size(0)

-

-     return total_acc/total_count, train_loss/total_count

-

-

-

- #%% Train the model

- for epoch in range(1, Epochs + 1):

-     epoch_start_time = time.time()

-     train(train_dataloader)

-     val_acc, val_loss = evaluate(valid_dataloader)

-

-     # get the current learning rate

-     lr = optimizer.state_dict()['param_groups'][0]['lr']

-

-     if total_accu is not None and total_accu > val_acc:

-         scheduler.step()

-     else:

-         total_accu = val_acc

-     print('-' * 69)

-     print('| epoch {:1d} | time: {:4.2f}s | '

-           'valid_acc {:4.3f} valid_loss {:4.3f} | lr {:4.6f}'.format(epoch,

-                                                                   time.time() - epoch_start_time,

-                                                                   val_acc,val_loss,lr))

-

-     print('-' * 69)
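The learning-rate logic in this loop is easy to miss: scheduler.step() is called only when the validation accuracy fails to beat the best value seen so far, and with step_size=1 and gamma=0.1 each call multiplies the rate by 0.1. The pure-Python sketch below replays that rule; the function name `simulate_lr` and the accuracy values are invented for illustration.

```python
def simulate_lr(val_accs, lr=5.0, gamma=0.1):
    """Return the learning rate in effect during each epoch."""
    best_acc = None
    history = []
    for acc in val_accs:
        history.append(lr)  # rate used while training this epoch
        if best_acc is not None and best_acc > acc:
            lr *= gamma     # mirrors scheduler.step() with step_size=1
        else:
            best_acc = acc  # new best (or first) validation accuracy
    return history


# Accuracy dips in the third epoch, so the fourth epoch trains with a smaller rate.
print(simulate_lr([0.70, 0.75, 0.74, 0.78]))
```

Starting from a rate as large as 5, a few bad epochs shrink the step size quickly, which is why the printed lr column is worth watching during training.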

6. Evaluation

- #%% Test on a given piece of text

- def predict(text, text_pipeline):

-     with torch.no_grad():

-         text = torch.tensor(text_pipeline(text), dtype=torch.float32)

-         print(text.shape)

-         output = model(text)

-         return output.argmax(1).item()

-

- # ex_text_str = "随便播放一首专辑阁楼里的佛里的歌"

- ex_text_str = "还有双鸭山到淮阴的汽车票吗13号的"

- model = model.to("cpu")

- print("The category of this text is: %s" %label_name[predict(ex_text_str, text_pipeline)])

Result: