
[Hive] Installing Standalone Hive on CentOS 7

Author: Gausst松鼠会 | 2024-05-25 04:38:04


In this setup Hive stores its metadata (the metastore) in MySQL, so install MySQL before installing Hive.

I. Install MySQL

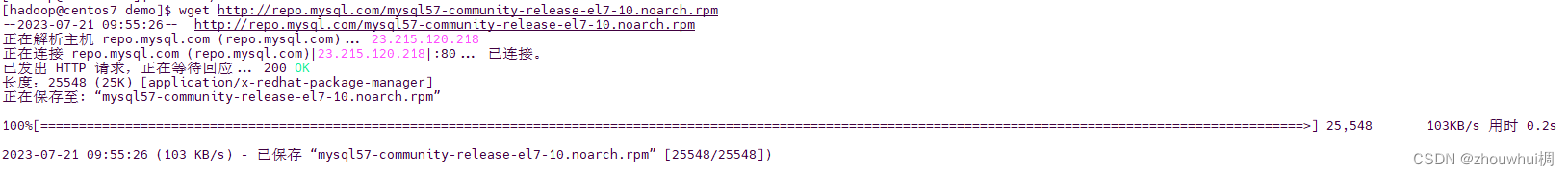

1. Download the MySQL 5.7 Yum repository package

wget http://repo.mysql.com/mysql57-community-release-el7-10.noarch.rpm

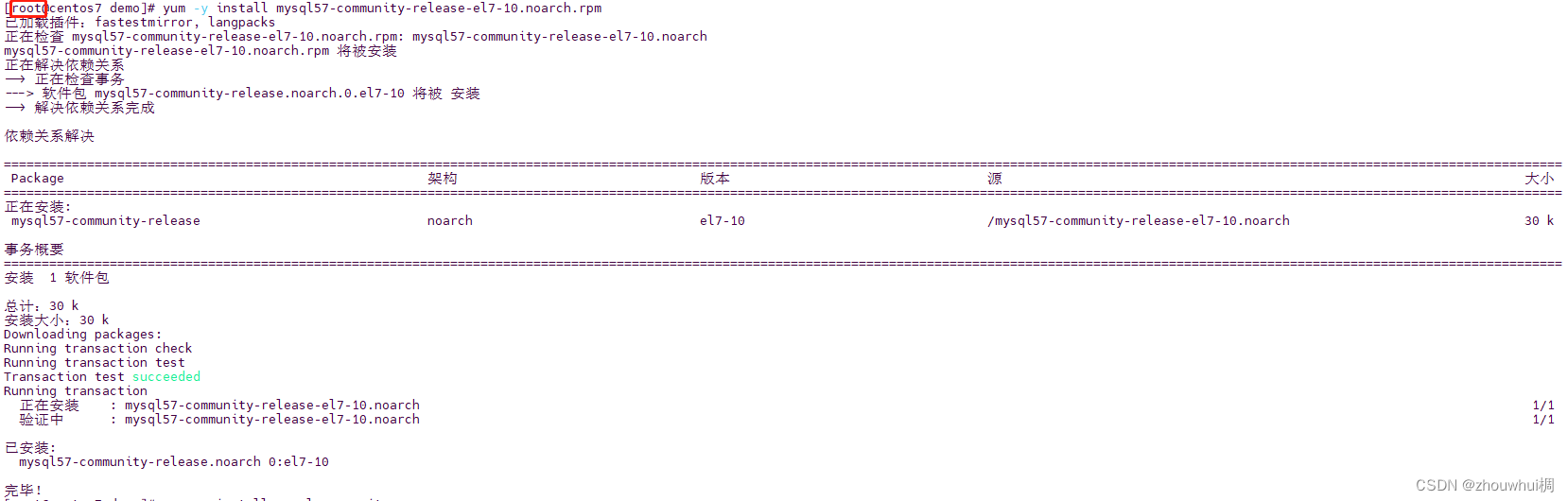

2. If you are not logged in as root, switch to root first, then install the rpm package downloaded in step 1

yum -y install mysql57-community-release-el7-10.noarch.rpm

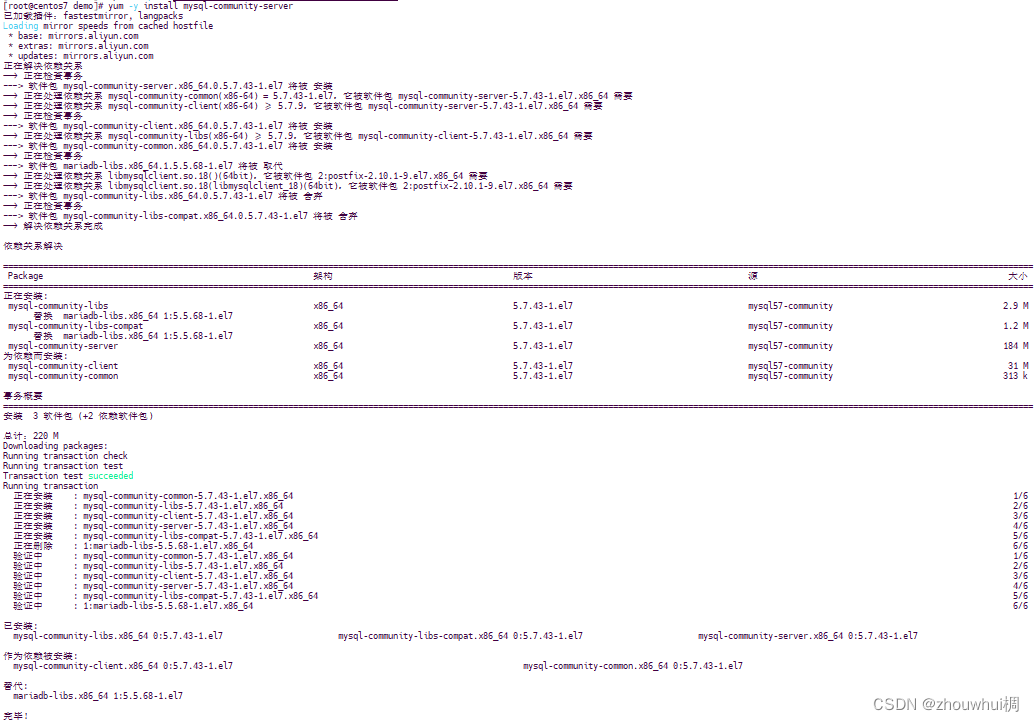

3. Install the MySQL server

yum -y install mysql-community-server

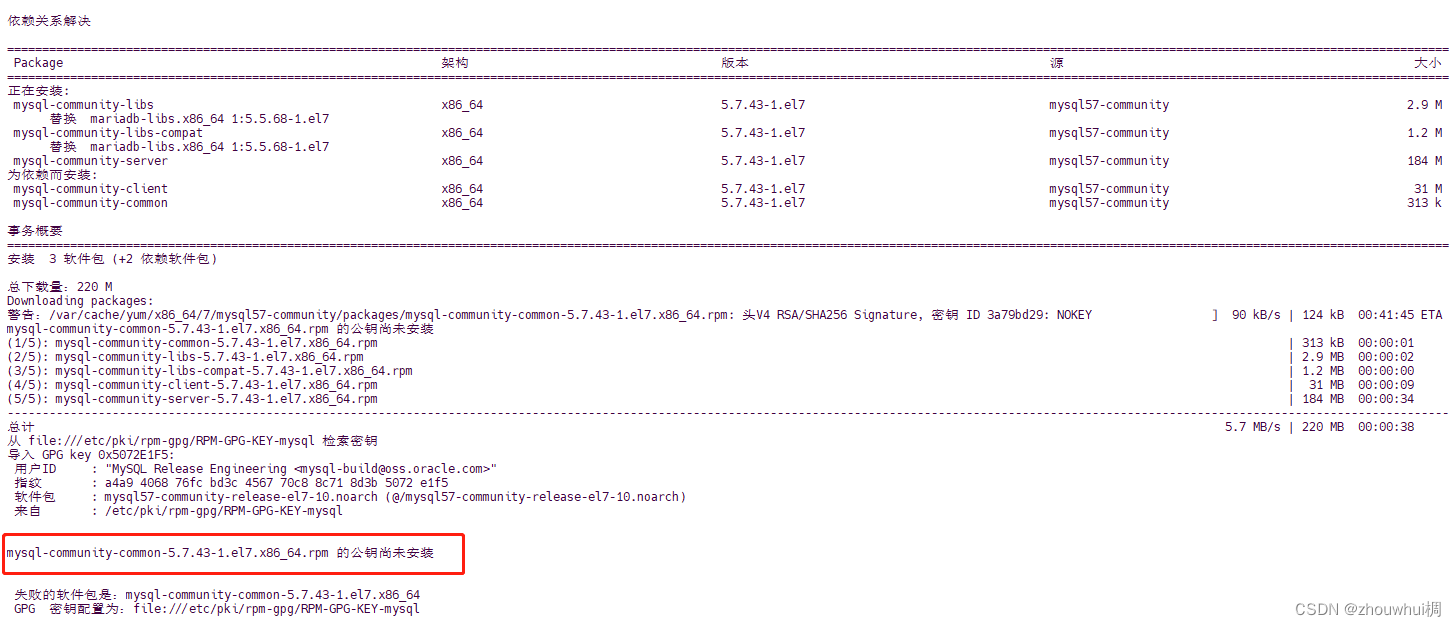

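4. If the installation fails because the public (GPG) key is not installed

Import the public key with the following command:

rpm --import https://repo.mysql.com/RPM-GPG-KEY-mysql-2022

Then run step 3 again; the installation should now succeed.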

5. Start the MySQL service

systemctl start mysqld.service

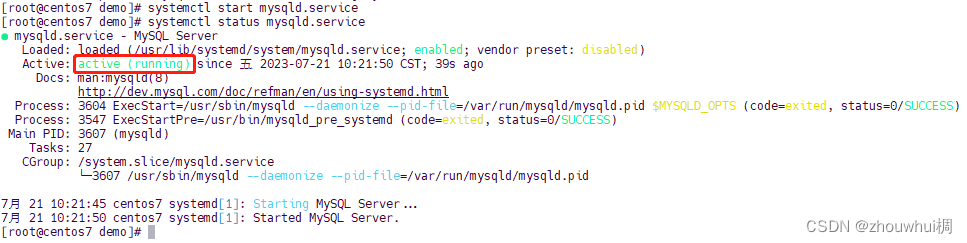

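The screenshot shows the service status after startup; you can check it yourself with `systemctl status mysqld`.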
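`systemctl start` normally returns once the unit has started. If you script these steps, a minimal sketch like the one below can poll `systemctl is-active --quiet mysqld` until the service reports active. The helper name `wait_for` and the 30-attempt limit in the usage line are illustrative assumptions, not part of MySQL or systemd.

```bash
#!/usr/bin/env bash
# Retry a command once per second until it succeeds or the attempt limit is reached.
# Usage: wait_for <max_attempts> <command> [args...]
wait_for() {
  local max_attempts=$1
  shift
  local attempt
  for ((attempt = 1; attempt <= max_attempts; attempt++)); do
    if "$@"; then
      return 0            # command succeeded
    fi
    if ((attempt < max_attempts)); then
      sleep 1             # pause only if another attempt follows
    fi
  done
  return 1                # gave up after max_attempts tries
}

# Example (requires systemd and the mysqld unit installed above):
# wait_for 30 systemctl is-active --quiet mysqld && echo "mysqld is running"
```

The helper takes any command, so the same function can be reused later for other services.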

6. Change the initial password

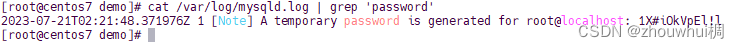

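On its first startup, MySQL initializes the data directory and creates the superuser account 'root'@'localhost' with a random temporary password, which it writes to the error log /var/log/mysqld.log. Change this password so the account is convenient to use later.

Find the temporary password with the following command:

grep 'temporary password' /var/log/mysqld.log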

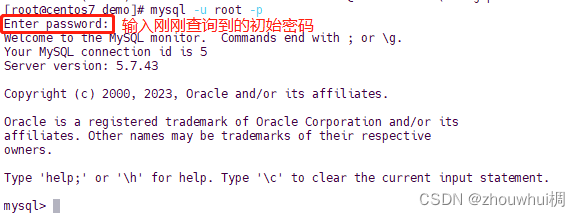
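The temporary password is the last field of the matching log line. A minimal sketch for printing only the password, assuming the MySQL 5.7 log format `... A temporary password is generated for root@localhost: <password>` and a password without spaces; `extract_temp_password` is a hypothetical helper name. Run it as root, since the log may not be readable by regular users.

```bash
#!/usr/bin/env bash
# Print the most recent temporary root password recorded in a MySQL 5.7 error log.
# Assumes the matching line ends with the password, e.g.
#   ... [Note] A temporary password is generated for root@localhost: Abc,123xyz
# Usage: extract_temp_password [log_file]   (default: /var/log/mysqld.log)
extract_temp_password() {
  local log_file=${1:-/var/log/mysqld.log}
  # Keep the matching lines, take the newest one, print its last whitespace-separated field.
  grep 'temporary password' "$log_file" | tail -n 1 | awk '{ print $NF }'
}
```

Taking the last matching line matters if the data directory was ever re-initialized, because each initialization logs a new password.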

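7. Log in to the MySQL shell and change the initial password

Log in with the following command and enter the temporary password when prompted:

mysql -u root -p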

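Run the following statement to change the password. Note that the new password must meet complexity requirements.

In MySQL 5.7 a password policy is enabled by default at installation, and it requires that the password:

- is at least 8 characters long

- contains at least one lowercase letter, one uppercase letter, one digit, and one special character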

alter user 'root'@'localhost' identified by 'your_new_password';

Run exit; to leave the MySQL shell.
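If `alter user` is rejected because the password does not satisfy the policy, it can help to pre-check candidates against the rules listed above. The sketch below mirrors only those four rules locally; the server's validate_password settings remain authoritative and can be inspected with `SHOW VARIABLES LIKE 'validate_password%';`. `check_password_policy` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Pre-check a candidate password against the rules listed above:
# length >= 8 and at least one lowercase letter, uppercase letter, digit, and special character.
# This only mirrors those rules; MySQL's validate_password plugin decides in the end.
# Usage: check_password_policy <password>; prints each failed rule, returns 0 if all pass.
check_password_policy() {
  local pw=$1
  local status=0
  # POSIX character classes avoid locale surprises with ranges like [a-z].
  local re_lower='[[:lower:]]' re_upper='[[:upper:]]'
  local re_digit='[[:digit:]]' re_special='[^[:alnum:]]'
  if ((${#pw} < 8)); then
    echo "too short: need at least 8 characters"
    status=1
  fi
  [[ $pw =~ $re_lower ]] || { echo "missing a lowercase letter"; status=1; }
  [[ $pw =~ $re_upper ]] || { echo "missing an uppercase letter"; status=1; }
  [[ $pw =~ $re_digit ]] || { echo "missing a digit"; status=1; }
  [[ $pw =~ $re_special ]] || { echo "missing a special character"; status=1; }
  return "$status"
}

# Example:
# check_password_policy 'Abcdef1!' && echo "meets the listed rules"
```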

II. Install Hive (switch back to a regular user; I use the hadoop user here)

1. Download and extract the installation package

wget http://archive.apache.org/dist/hive/hive-3.1.0/apache-hive-3.1.0-bin.tar.gz

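Extract the package (tar -C requires the target directory to exist; create it first with mkdir -p /home/hadoop/software if needed):

tar xvf apache-hive-3.1.0-bin.tar.gz -C /home/hadoop/software/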

Download the MySQL JDBC driver (Connector/J 5.1.47):

wget https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-5.1.47.tar.gz

Extract the driver:

tar xvf mysql-connector-java-5.1.47.tar.gz -C /home/hadoop/software

2. Configure environment variables

Rename the extracted folder to hive to make it easier to work with:

mv /home/hadoop/software/apache-hive-3.1.0-bin /home/hadoop/software/hive
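The extract and rename steps above can be combined into one guarded shell function. A minimal sketch, assuming a gzip-compressed tarball whose top-level directory name you pass in; `install_release` is a hypothetical name, and it refuses to overwrite an existing target directory.

```bash
#!/usr/bin/env bash
# Extract a .tar.gz release into a parent directory and rename its top-level
# folder, mirroring the manual tar + mv steps above.
# Usage: install_release <tarball> <parent_dir> <extracted_dir_name> <new_name>
install_release() {
  local tarball=$1 parent=$2 extracted=$3 new_name=$4
  # Refuse to overwrite an existing installation.
  if [ -e "$parent/$new_name" ]; then
    echo "$parent/$new_name already exists" >&2
    return 1
  fi
  mkdir -p "$parent" || return 1                 # tar -C fails if the directory is missing
  tar -xzf "$tarball" -C "$parent" || return 1   # explicit gzip decompression
  mv "$parent/$extracted" "$parent/$new_name"
}

# Example with the paths used in this article:
# install_release apache-hive-3.1.0-bin.tar.gz /home/hadoop/software apache-hive-3.1.0-bin hive
```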

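Only root can modify the system-wide environment file /etc/profile, so elevated privileges are required. Note that sudo echo '...' >> /etc/profile does not work: the >> redirection is performed by your own unprivileged shell, not by sudo. Pipe the line into sudo tee -a instead:

echo 'export PATH=$PATH:/home/hadoop/software/hive/bin' | sudo tee -a /etc/profile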

Apply the new environment variables in the current shell:

source /etc/profile
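Running the `tee -a` step twice appends a duplicate PATH entry. A minimal sketch that appends the line only if it is not already present; `append_once` and the `SUDO` variable are hypothetical conventions for this example. Keep the line in single quotes so `$PATH` is written literally and expanded only when /etc/profile is sourced.

```bash
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present,
# so re-running the setup does not duplicate PATH entries.
# Set SUDO=sudo to write through "sudo tee -a" (needed for /etc/profile).
# Usage: [SUDO=sudo] append_once <line> <file>
append_once() {
  local line=$1 file=$2
  # -q quiet, -x match the whole line, -F treat the line as a fixed string
  if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
    return 0                      # already there, nothing to do
  fi
  # ${SUDO:-} is deliberately unquoted so it disappears when SUDO is empty.
  printf '%s\n' "$line" | ${SUDO:-} tee -a "$file" >/dev/null
}

# Example for this article:
# SUDO=sudo append_once 'export PATH=$PATH:/home/hadoop/software/hive/bin' /etc/profile
# source /etc/profile
```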

3. Add the MySQL driver

Copy the driver jar into Hive's lib directory (/home/hadoop/software/hive/lib/):

cp /home/hadoop/software/mysql-connector-java-5.1.47/mysql-connector-java-5.1.47-bin.jar /home/hadoop/software/hive/lib
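The hive launcher puts the jars in its lib directory on the classpath, so the driver must end up there rather than in bin. A quick sketch to confirm the copy worked; `find_mysql_driver` is a hypothetical helper, and its default path simply matches this article's layout.

```bash
#!/usr/bin/env bash
# List the MySQL Connector/J jars present in a Hive lib directory.
# Returns 0 if at least one mysql-connector-java-*.jar is found, 1 otherwise.
# Usage: find_mysql_driver [hive_lib_dir]   (default: /home/hadoop/software/hive/lib)
find_mysql_driver() {
  local lib_dir=${1:-/home/hadoop/software/hive/lib}
  local found=1 jar
  for jar in "$lib_dir"/mysql-connector-java-*.jar; do
    [ -e "$jar" ] || continue     # the glob matched nothing
    echo "$jar"
    found=0
  done
  return "$found"
}

# Example:
# find_mysql_driver || echo "driver jar missing from hive/lib" >&2
```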

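4. Add the configuration file hive-site.xml

Change to Hive's configuration directory /home/hadoop/software/hive/conf/ and edit hive-site.xml (if the file does not exist, vi creates it when you save):

vi hive-site.xml

Add the following content to the file, replacing the value of javax.jdo.option.ConnectionPassword with your MySQL root password: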

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- MySQL settings -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;useUnicode=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>YOUR_MYSQL_ROOT_PASSWORD</value>
  </property>
  <property>
    <name>datanucleus.readOnlyDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.fixedDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.autoCreateSchema</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.schema.autoCreateAll</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateTables</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateColumns</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>true</value>
  </property>
  <!-- Print column names in query results -->
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <!-- Show the current database name in the CLI prompt -->
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.exec.local.scratchdir</name>
    <value>/home/hadoop/software/hive</value>
    <description>Local scratch space for Hive jobs</description>
  </property>
  <!-- Custom directories: start -->
  <property>
    <name>hive.downloaded.resources.dir</name>
    <value>/home/hadoop/software/hive/hive-downloaded-addDir/</value>
    <description>Temporary local directory for added resources in the remote file system.</description>
  </property>
  <property>
    <name>hive.querylog.location</name>
    <value>/home/hadoop/software/hive/querylog-location-addDir/</value>
    <description>Location of Hive run time structured log file</description>
  </property>
  <property>
    <name>hive.server2.logging.operation.log.location</name>
    <value>/home/hadoop/software/hive/hive-logging-operation-log-addDir/</value>
    <description>Top level directory where operation logs are stored if logging functionality is enabled</description>
  </property>
</configuration>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

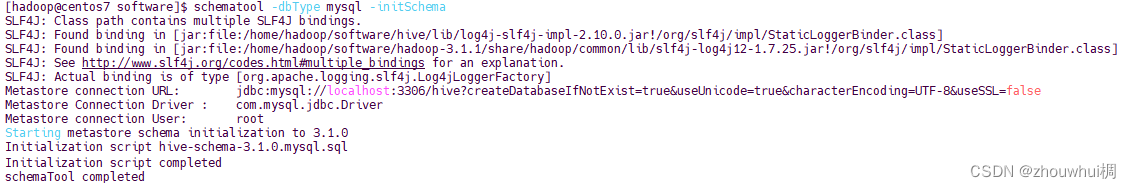
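The JDBC URL contains several `&` separators; in XML each must be written as `&amp;`, otherwise hive-site.xml is not well-formed and Hadoop's configuration loader cannot parse it. A quick line-based sketch to catch bare ampersands; it is not a full XML validator (`xmllint --noout hive-site.xml` from libxml2, if installed, performs a complete well-formedness check), and `find_bare_ampersands` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Print every line of an XML file that contains a bare '&', i.e. one that does not
# start a predefined entity (&amp; &lt; &gt; &quot; &apos;) or a character reference.
# Quick line-based check only, not a full XML parser.
# Usage: find_bare_ampersands <file>; returns 1 if any bare '&' is found.
find_bare_ampersands() {
  awk '
    BEGIN { bad = 0 }
    {
      s = $0
      gsub(/&(amp|lt|gt|quot|apos);/, "", s)   # drop predefined entities
      gsub(/&[#][0-9]+;/, "", s)               # drop decimal character references
      gsub(/&[#]x[0-9A-Fa-f]+;/, "", s)        # drop hexadecimal character references
      if (index(s, "&") > 0) {                 # anything left is a bare ampersand
        print FILENAME ":" NR ": " $0
        bad = 1
      }
    }
    END { exit bad }
  ' "$1"
}

# Example:
# find_bare_ampersands /home/hadoop/software/hive/conf/hive-site.xml
```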

5. Initialize the metastore database

schematool -dbType mysql -initSchema

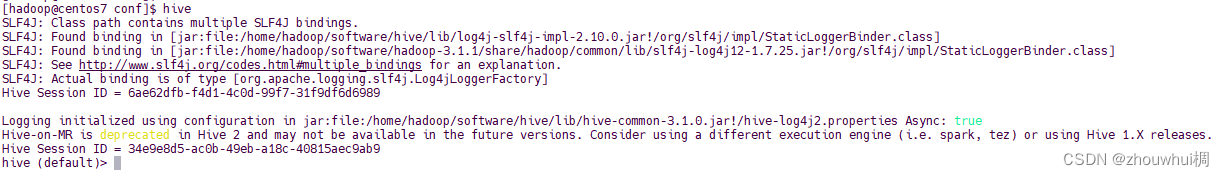

6. Start Hive (make sure MySQL is running)

Hive stores its table data in HDFS, so start HDFS (or the whole Hadoop cluster) before starting Hive.
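Before running `hive`, a small preflight can confirm the prerequisites named above. A minimal sketch, assuming systemd manages mysqld and the Hadoop `hdfs` client is on PATH; `check` and `hive_preflight` are hypothetical helper names, and `hdfs dfs -test -d /` serves only as a simple reachability probe for HDFS.

```bash
#!/usr/bin/env bash
# Run one named check quietly and report ok/FAIL; returns the check's success.
# Usage: check <description> <command> [args...]
check() {
  local desc=$1
  shift
  if "$@" >/dev/null 2>&1; then
    echo "ok:   $desc"
  else
    echo "FAIL: $desc"
    return 1
  fi
}

# Preflight for starting the Hive CLI; runs every check and fails if any failed.
hive_preflight() {
  local rc=0
  check "mysqld is active" systemctl is-active --quiet mysqld || rc=1
  check "HDFS is reachable" hdfs dfs -test -d / || rc=1
  check "hive is on PATH" command -v hive || rc=1
  return "$rc"
}

# Example:
# hive_preflight && hive
```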

hive


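Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog; copyright belongs to the original author, and the site assumes no legal liability. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/Gausst松鼠会/article/detail/620594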
